1. Introduction

In psychometric research, the objects of study, such as personality traits, motivation, or attitudes, are often not directly observable or measurable. Consequently, the structure and effects of these unobservable variables, referred to as “latent variables”, are typically investigated through specific techniques like factor analysis. These methods aim to statistically relate the covariation between observed variables to latent variables [1].

Factor analysis, originally developed by Spearman [2], is a popular and widely used multivariate technique. Its goal is to approximate the original observed variables of a data set by linear combinations of a smaller number of latent variables called factors. In psychological research, factors are fundamental as they reduce dimensionality and represent psychological constructs strongly tied to psychological theory [3]. Indeed, factor analysis is mostly used to assess the degree to which the items on a scale conform to a theoretically indicated higher-order structure, investigating the construct validity and internal validity of psychometric tests [4].

However, factor analysis relies on a set of assumptions that may not always hold in psychological data [5]. Notably, it assumes that the relationships between indicators and factors are linear. The use of factor analysis for theory validation implies that the theory behind the factor-indicator relationship must assume linearity. Hence, it constrains the kinds of theories that can be validated through factor analysis.

In cases where nonlinear relationships exist, this assumption can lead to biased results and misleading interpretations [6]. Bauer [6] and Belzak and Bauer [7] highlighted two significant consequences of ignoring nonlinear relationships. First, it can lead to the rejection of measurement invariance tests; second, it can lead to nonlinear effects being misidentified as interaction effects. Bauer [6] demonstrated that even a slight curvature in the relationship between a single indicator and a factor could cause metric and scalar invariance tests to be rejected. The rejection occurs because the data from different groups cover different regions of the nonlinear function; fitting a linear factor model therefore yields different slopes and intercepts across groups, and the invariance tests are rejected. Additionally, failing to account for nonlinear effects can confound the identification of interaction effects, as shown by Busemeyer and Jones [8] and later by Belzak and Bauer [7].
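Bauer's mechanism can be illustrated with a small simulation. The sketch below is a hypothetical example, not a reproduction of [6]: it assumes a single item that depends on the factor through a logistic function with slope 2, two groups whose factor means are 0 and 2 (both with unit variance), and no measurement error. Fitting an ordinary least-squares line separately in each group shows that one and the same nonlinear function yields different linear slopes and intercepts.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def ols_slope_intercept(xs, ys):
    """Ordinary least-squares slope and intercept of ys regressed on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


rng = random.Random(0)
# Hypothetical groups whose factor distributions differ only in their mean.
group_means = {"centred": 0.0, "shifted": 2.0}
fits = {}
for name, mean in group_means.items():
    factor = [rng.gauss(mean, 1.0) for _ in range(5000)]
    # Identical item-factor function in both groups: a logistic curve, no noise.
    item = [sigmoid(2.0 * f) for f in factor]
    fits[name] = ols_slope_intercept(factor, item)

for name, (slope, intercept) in fits.items():
    print(f"{name}: slope = {slope:.3f}, intercept = {intercept:.3f}")
```

For a Gaussian factor, the population slope of such a linear fit equals the average derivative of the item function over the group's distribution, so the group located on the flatter part of the sigmoid necessarily receives a smaller slope, even though no parameter of the item function differs between groups.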

It is important to consider that items with nonlinear relationships may deviate from the assumptions underlying traditional psychometric methods. As a result, these items are often excluded during the initial stage of test construction or validation, even though they may contain valuable information about the latent variable being measured. Therefore, including them in the analysis could enhance the overall performance of the hypothesized model and provide a deeper understanding of the construct.

In this context, the availability of analysis techniques that do not rely on the assumption of linearity and allow for the exploration of the relationship between items and factors while accommodating nonlinearities could significantly improve psychometric research. Such techniques would enable researchers to fully explore the nature of the relationships within the data and capture the complex dynamics that may exist.

Various nonlinear factor analysis techniques have been proposed over the years. When considering nonlinearity in latent variable models, it is crucial to examine how and where the nonlinearity is modeled. Specifically, nonlinearity can exist in the relationship between items and factors or in the relationship between factors, referring to the measurement model or the latent model, respectively. The focus of this study is on the former kind of relationship.

To the best of our knowledge, although some studies have proposed nonlinear factor analysis models, the focus has primarily been on nonlinear relationships among factors, with a few exceptions. Since McDonald’s [9] pioneering work, new estimation methods for nonlinear factor analysis models have been developed successively. Some of these include the maximum-likelihood-based methods proposed by Klein and Moosbrugger [10], Klein and Muthén [11], Lee and Zhu [12], and Yalcin and Amemiya [13]. Other relevant methods include the method of moments introduced by Wall and Amemiya [14] and the Bayesian approaches developed by Arminger and Muthén [15] and Zhu and Lee [16].

However, the proposed methods to estimate nonlinear factor models are computationally demanding and require specialized techniques. Unlike linear factor models, which often have closed-form solutions, nonlinear models typically involve iterative optimization algorithms. These algorithms aim to estimate the model parameters by minimizing the discrepancy between the observed data and the model’s predicted values. The complexity of nonlinear optimization routines, combined with the potential presence of local optima, can pose challenges in achieving convergence and obtaining reliable estimates.

Furthermore, these techniques are rarely used because they are complex to implement and interpret. Nonlinear relationships are often more nuanced and complex than their linear counterparts, making the identification and meaningful interpretation of latent factors more intricate. Researchers must carefully interpret the nature and direction of nonlinear associations, considering the specific functional form employed in the analysis. This interpretation process requires expertise and a deep understanding of the underlying constructs.

Despite these complexities, nonlinear methods hold great potential for advancing psychometric research, as they provide a means to capture the intricacies and nonlinear dynamics present in psychological constructs.

One interesting solution to address the issue of nonlinearity lies in the use of artificial neural networks (ANNs). ANNs are computational models inspired by the structure and function of biological neurons. They possess the ability to learn relationships between input and output variables automatically through training data without the need for manually programmed decision rules. This characteristic enables ANNs to extract relevant features from raw input data, eliminating the requirement for manual feature engineering [17]. Consequently, ANNs can handle complex and nonlinear patterns within the input-output relationship.

Unlike traditional psychometric methods that rely on predefined notions about the data’s nature and variables’ relationships to create data models, ANNs do not assume a specific function or relationship between variables. Instead, they operate as algorithmic models aiming to find the function that maximizes predictive power for a given dataset.

Autoencoders, a type of ANN, have been extensively studied for their capacity to reduce the dimensionality of input data [18,19]. Their aim is to reconstruct the input data by encoding the information most relevant for reconstruction in a smaller central layer, known as the “bottleneck layer” [20]. While perfect reconstruction of the input vectors is not possible due to the smaller size of the bottleneck layer, the central neurons of the autoencoder are associated with the intrinsic dimensionality of the data [21]. Under certain conditions, autoencoders can converge to the solution of principal component analysis (PCA) [22].
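The link between autoencoders and PCA can be seen in a small example. The sketch below trains a linear autoencoder with a one-unit bottleneck and tied encoder/decoder weights by gradient descent on the expected squared reconstruction error, written in terms of the data covariance matrix, and compares the learned weight vector with the first principal component obtained by power iteration. The 2-D data, the learning rate, and the tied-weight setup are illustrative assumptions, not necessarily the conditions analyzed in [22].

```python
import math
import random

rng = random.Random(1)
# Hypothetical 2-D data that vary mostly along the direction at 30 degrees.
angle = math.radians(30)
direction = (math.cos(angle), math.sin(angle))
data = []
for _ in range(2000):
    t = rng.gauss(0, 2.0)  # large variance along the main axis
    e = rng.gauss(0, 0.3)  # small variance orthogonal to it
    data.append((t * direction[0] - e * direction[1],
                 t * direction[1] + e * direction[0]))

# Sample covariance matrix of the centred data.
n = len(data)
mx = sum(p[0] for p in data) / n
my = sum(p[1] for p in data) / n
xs = [(p[0] - mx, p[1] - my) for p in data]
c11 = sum(a * a for a, _ in xs) / n
c12 = sum(a * b for a, b in xs) / n
c22 = sum(b * b for _, b in xs) / n


def cov_times(v):
    return (c11 * v[0] + c12 * v[1], c12 * v[0] + c22 * v[1])


# Tied linear autoencoder: code z = w.x, reconstruction x_hat = w * z.
# Expected loss: L(w) = tr(C) - 2 w'Cw + (w'Cw)(w'w); we descend its gradient.
w = (1.0, 0.0)
lr = 0.01
for _ in range(2000):
    cw = cov_times(w)
    wcw = w[0] * cw[0] + w[1] * cw[1]
    ww = w[0] ** 2 + w[1] ** 2
    grad = tuple(-4 * cw[i] + 2 * ww * cw[i] + 2 * wcw * w[i] for i in range(2))
    w = (w[0] - lr * grad[0], w[1] - lr * grad[1])

# First principal component by power iteration on the same covariance matrix.
v = (1.0, 0.0)
for _ in range(200):
    cv = cov_times(v)
    norm = math.hypot(*cv)
    v = (cv[0] / norm, cv[1] / norm)

cosine = abs(w[0] * v[0] + w[1] * v[1]) / math.hypot(*w)
print(f"|cos| between autoencoder weights and PC1: {cosine:.4f}")
```

With linear activations and squared error, the weight vector converges (up to sign) to the unit-norm leading eigenvector of the covariance matrix; with nonlinear activations this equivalence does not hold in general, which is what lets autoencoders go beyond PCA.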

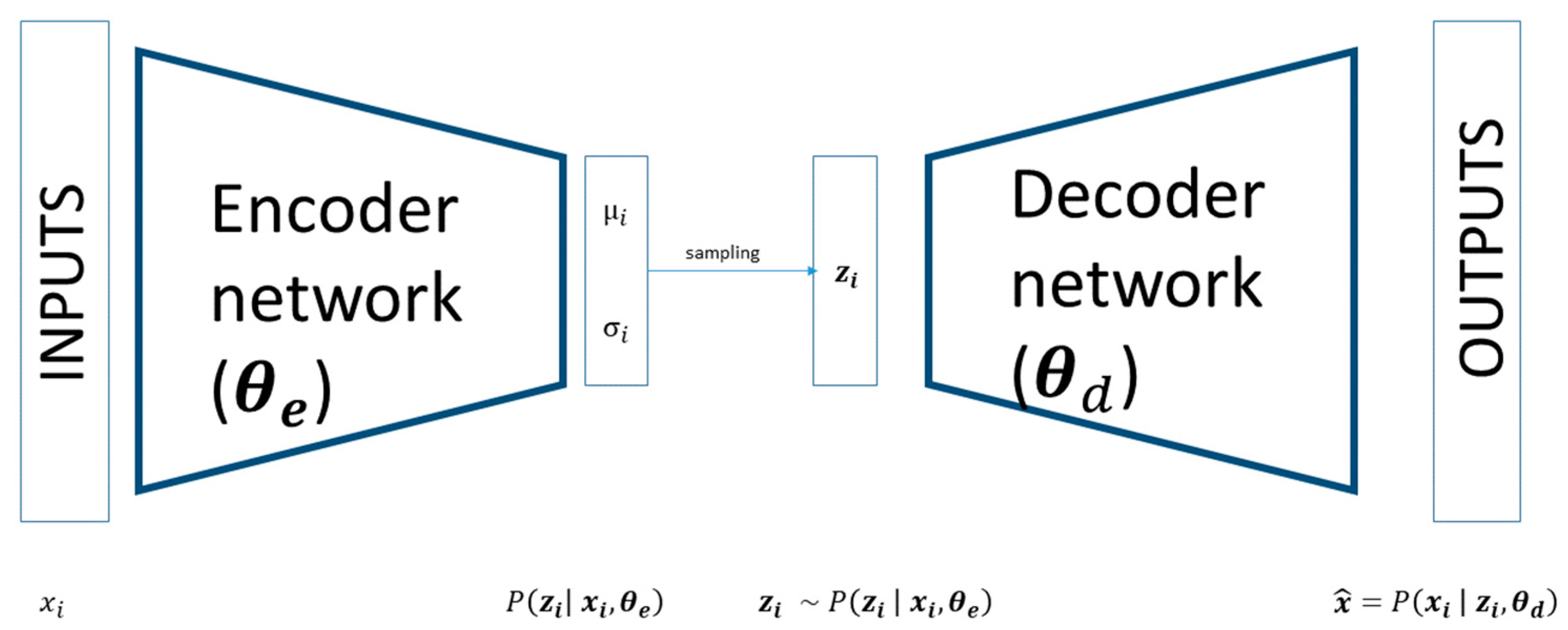

Among the variants of autoencoders, the variational autoencoder (VAE) is of particular interest and has garnered attention in psychometric research [23,24]. The VAE is a generative model that aims to describe how a dataset is generated in terms of a probabilistic model. It differs from traditional autoencoders in that it imposes restrictions on the distribution of the central nodes, encouraging the latent variables of the VAE to be independent and to follow a predefined distribution, often a normal distribution [25]. This characteristic makes the latent space of VAEs more interpretable than that of simple autoencoders. The structured latent space of VAEs can also be used for data generation.
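In a standard VAE, this restriction is imposed by adding to the reconstruction loss the Kullback-Leibler (KL) divergence between the encoder's distribution and a standard normal prior. Assuming, as is common, a diagonal Gaussian encoder that outputs a mean and a log-variance per latent dimension, this term has a closed form. The minimal sketch below implements it and checks it against a Monte Carlo estimate; the function names are illustrative and not taken from a particular library.

```python
import math
import random


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return sum(0.5 * (m * m + math.exp(lv) - 1.0 - lv) for m, lv in zip(mu, log_var))


def monte_carlo_kl(mu, log_var, n_samples=50_000, seed=0):
    """Estimate the same KL by averaging log q(z) - log p(z) over draws z ~ q."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        for m, lv in zip(mu, log_var):
            sd = math.exp(0.5 * lv)
            z = m + sd * rng.gauss(0.0, 1.0)
            log_q = -0.5 * (math.log(2 * math.pi) + lv + ((z - m) / sd) ** 2)
            log_p = -0.5 * (math.log(2 * math.pi) + z * z)
            total += log_q - log_p
    return total / n_samples


mu, log_var = [0.5, -1.0], [0.0, -0.5]  # hypothetical encoder output, 2 latent dims
print(kl_to_standard_normal(mu, log_var), monte_carlo_kl(mu, log_var))
```

The KL term is zero only when every latent dimension has zero mean and unit variance, which is how the regularization pulls the latent space toward the predefined normal distribution.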

In this study, our primary objective is to employ variational autoencoders as a tool for identifying nonlinear relationships between items and the underlying factors, without specifying the form of this relationship a priori. We remain within the framework of classical test theory and compare factor analysis and variational autoencoders on different datasets, both real and simulated.

In the initial phase of our work, we focus on analyzing the characteristics of autoencoder solutions using two synthetic datasets. The first synthetic dataset demonstrates a linear relationship between items and a single factor. Here, our aim is to evaluate whether the variational autoencoder’s solution converges toward a similar outcome as that of linear factor analysis. The second synthetic dataset illustrates a nonlinear relationship between a varying number of items and the factor. Specifically, the relationship between items and the factor follows a sigmoidal function. In this scenario, our hypothesis is that the autoencoder will yield more accurate estimates of factor scores and effectively capture the shape of the relationships between the input variables and the factor. At this point, we also investigate the generalizability of our results using a simulated dataset based on a two-factor model.

Furthermore, we provide an illustrative example of applying VAEs to a real-world dataset, demonstrating their applicability and relevance in practical scenarios.

Thus, we propose autoencoders as a valuable tool for exploring the relationship between observed and latent variables, enabling dimensionality reduction while accounting for nonlinear relationships.

This work is structured as follows. First, we discuss the use of artificial neural networks and autoencoders in psychometric research, offering an overview of the existing literature. Next, we present the technical details of variational autoencoders and describe how the data used in this study were simulated. We then present our analyses and discuss the results derived from the application of the VAE model to the synthetic datasets. At this point, we show an illustrative example of an application on a real dataset. Finally, we offer concluding remarks that summarize the key findings and discuss the implications of employing VAEs in psychometric research.

2. Artificial Neural Networks in Psychometrics

Artificial neural networks have proven to be an advantageous predictive methodology in psychometrics, both in applied contexts and in methodological research. ANNs can handle very large and heterogeneous data, removing some constraints that characterize more traditional data analysis techniques, especially when applied to recorded behavioral data, which are often noisy, large in quantity, and structured in a complex temporal fashion.

In the realm of psychodiagnosis, for example, Linstead et al. [26,27] used neural networks to predict the extent to which children with autism spectrum disorder (ASD) would benefit from early behavioral interventions. A recent study by Perochon et al. [28] automated the detection of ASD by analyzing motion features collected during a task performed on a tablet, on the assumption that motor abnormalities may be a potential hallmark of ASD [29]. Milano et al. [30], using a variational autoencoder, showed that the motion features of children with autism differ consistently from those of children with typical development. ANNs have also been used to enhance the diagnosis of psychological disorders, as shown in a review by Kaur and Sharma [31]. Growing evidence suggests that artificial intelligence approaches to classifying psychiatric patients offer better predictions of treatment outcomes than traditional DSM/ICD-based diagnoses [32].

From a methodological perspective, as noted by Lanovaz and Bailey [33], artificial neural networks have been used for the development and evaluation of psychological theories [34,35], for behavior measurement [36,37,38], and within the Item Response Theory framework [39,40]. Furthermore, many tutorials on machine learning and neural networks specifically targeted at psychologists have been proposed recently [17,41].

In particular, artificial neural networks have been used to select variables for inclusion in a psychopathological model [42] and to develop short forms of tests [43]. Staying within the methodological realm, the demonstrated ability of autoencoders to extract essential information from data has paved the way for new applications of autoencoders. Urban and Bauer [23] introduced a novel deep learning-based variational inference (VI) algorithm using an importance-weighted autoencoder (IWAE) for exploratory item factor analysis (IFA). The IWAE can predict the log-likelihood of all possible responses on a Likert scale, enabling the retrieval of the five-factor structure of the Big Five model from a large Big5 dataset. In addition, Huang and Zhang [44] proposed a variational autoencoder (VAE) model to study the structure of personality tests, comparing it with linear factor analysis. In their work, they used autoencoders to derive the values of factor loadings. Finally, Esposito et al. [45] explored the use of autoencoders as a method to extract causal structure from psychometric data, showing that a nonlinear autoencoder has a greater ability than PCA to capture item–factor relationships.

4. Results

The Results section is structured as follows. First, we test the VAE and factor analysis on the linear dataset, in which the items have a linear relationship with their underlying factor. Next, we evaluate both methods on datasets with nonlinear relationships. The simulated-data results conclude with a subsection that summarizes all the previous results and extends them to a two-factor dataset. Finally, we test FA and the VAE in a real-world scenario, as discussed in the final part of the Results section.

4.1. Linear Case

For the first series of experiments, we use the dataset described by Equation (11). We then perform a factor analysis to estimate the loadings and the factor scores ($\hat{F}$) and to reconstruct the item values ($\hat{x}_i$). Formally, we can connect these two quantities:

$$\hat{x}_i = f(\hat{F})$$

Ideally, the function $f$ should be equivalent to Equation (11), where the slope of $f$ is the loading $\lambda_i$ of each item. To verify this hypothesis, we fit $f$ with the linear Equation (11) using the least-squares method, leaving the loadings $\lambda_i$ as free parameters.
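If Equation (11) is assumed to have the form $x_i = \lambda_i F + \varepsilon_i$ with no intercept (the equation itself is given in the Methods and not reproduced here), the least-squares fit of $f$ reduces to a regression through the origin with the closed-form estimate $\hat{\lambda}_i = \sum \hat{F} x_i / \sum \hat{F}^2$. The minimal sketch below applies it to a hypothetical noisy item; the standard error is the usual one for a one-parameter least-squares fit, which may not be exactly how the standard deviations in Table 1 were obtained.

```python
import math
import random


def fit_loading(scores, items):
    """Least-squares slope of items on scores through the origin, plus its standard error.

    Assumes the linear model x = lambda * F (no intercept)."""
    sff = sum(f * f for f in scores)
    lam = sum(f * x for f, x in zip(scores, items)) / sff
    rss = sum((x - lam * f) ** 2 for f, x in zip(scores, items))
    sigma2 = rss / (len(scores) - 1)  # one free parameter
    return lam, math.sqrt(sigma2 / sff)


rng = random.Random(42)
true_loading = 0.8  # hypothetical loading
scores = [rng.gauss(0, 1) for _ in range(1000)]
# Hypothetical item: loading * score + Gaussian noise with unit total variance.
items = [true_loading * f + rng.gauss(0, math.sqrt(1 - true_loading ** 2)) for f in scores]
lam_hat, se = fit_loading(scores, items)
print(f"estimated loading = {lam_hat:.3f} (SE {se:.3f})")
```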

Table 1 reports the loading values and their standard deviations obtained from the fit, together with the loadings and communalities derived directly from the FA.

As shown in Table 1, the slope of the curve obtained from the fitting process matches the loadings used to generate the dataset. In this case, we achieve a total explained variance of 0.92. To visualize the relationship between the reconstructed item values and the estimated factor scores, Figure 2 reports the observed item responses with respect to the true factor scores (blue dots), the reconstructed items with respect to the estimated scores (red dots), and the result of the curve fitting (black solid line).
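How the explained variance is computed is not spelled out in this section. One common choice, assumed in the sketch below, is the coefficient of determination of the reconstruction pooled over items, 1 − SSE/SST; the definition used in the Methods may differ (for example, by averaging per-item values). The toy matrices are made up for illustration.

```python
def explained_variance(observed, reconstructed):
    """Share of total item variance reproduced by the reconstruction, pooled over items.

    observed, reconstructed: lists of respondent rows, each a list of item values."""
    n_items = len(observed[0])
    means = [sum(row[j] for row in observed) / len(observed) for j in range(n_items)]
    sse = sum((o - r) ** 2
              for orow, rrow in zip(observed, reconstructed)
              for o, r in zip(orow, rrow))
    sst = sum((o - means[j]) ** 2 for orow in observed for j, o in enumerate(orow))
    return 1.0 - sse / sst


observed = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]        # 3 respondents, 2 items
reconstructed = [[1.5, 2.0], [3.0, 4.0], [4.5, 6.0]]   # hypothetical reconstruction
ev = explained_variance(observed, reconstructed)
print(f"explained variance: {ev:.4f}")
```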

In

Figure 2, it is clear how FA captures the linear relationship between the factor scores and each item. In

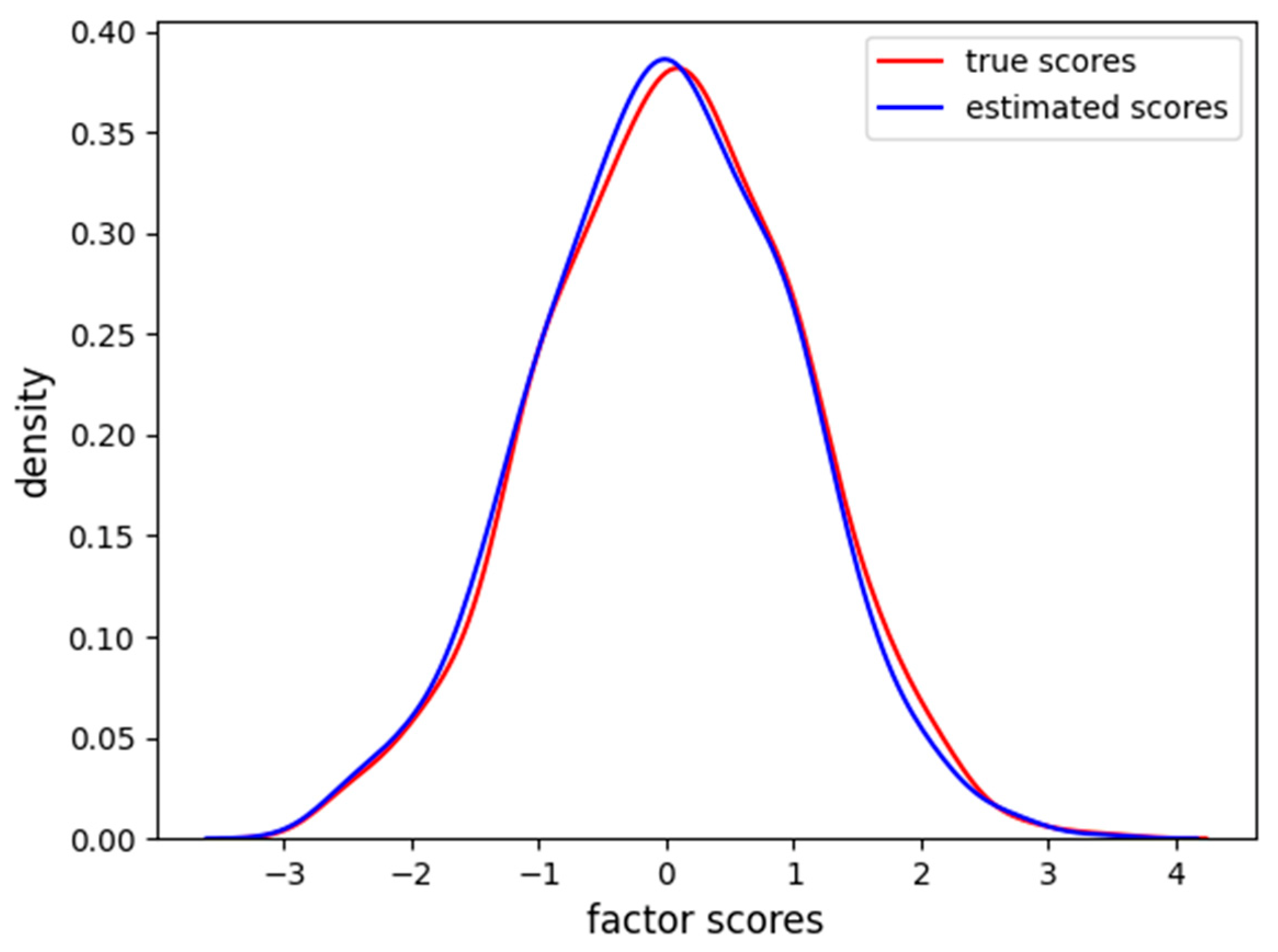

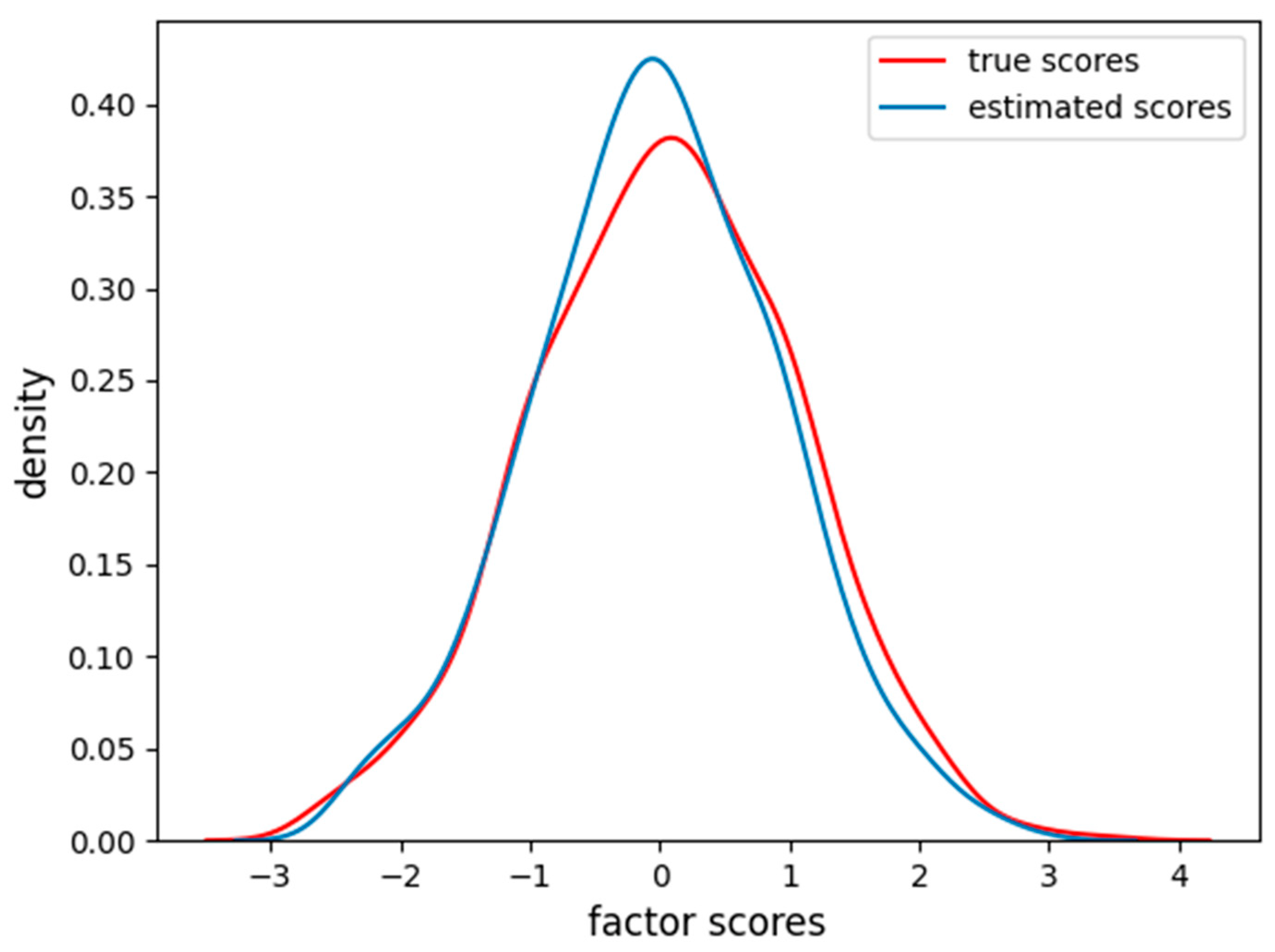

Figure 3, we also report the distribution of the factor scores for the true and estimated scores.

Figure 3 shows how the density of estimated scores from FA correctly resembles the true Gaussian distribution of the factor scores.

We measured an MAE of 0.11 and an RMSE of 0.15 between the true and estimated factor scores.

For the variational autoencoder, we repeated the same analysis. We connected the reconstruction $\hat{x}_i$ and the estimated scores $\hat{F}$ through the linear function $f$ and performed a least-squares fit to find the slope of the curve, which represents the factor loadings. The results are reported in Table 2.
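For reference, the MAE and RMSE between true and estimated factor scores can be computed as follows. This minimal sketch assumes the estimated scores are already on the same scale and sign as the true scores; factor scores from FA or a VAE are identified only up to such transformations, so an alignment step may be needed in practice. The score values are made up for illustration.

```python
import math


def mae(true, est):
    """Mean absolute error between paired true and estimated scores."""
    return sum(abs(t - e) for t, e in zip(true, est)) / len(true)


def rmse(true, est):
    """Root mean squared error between paired true and estimated scores."""
    return math.sqrt(sum((t - e) ** 2 for t, e in zip(true, est)) / len(true))


true_scores = [0.0, 1.0, -1.0, 2.0]   # hypothetical true factor scores
est_scores = [0.1, 0.8, -1.3, 2.0]    # hypothetical estimates
print(mae(true_scores, est_scores), rmse(true_scores, est_scores))
```

RMSE weights large errors more heavily than MAE, so it is always at least as large, consistent with the pairs of values reported in this section.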

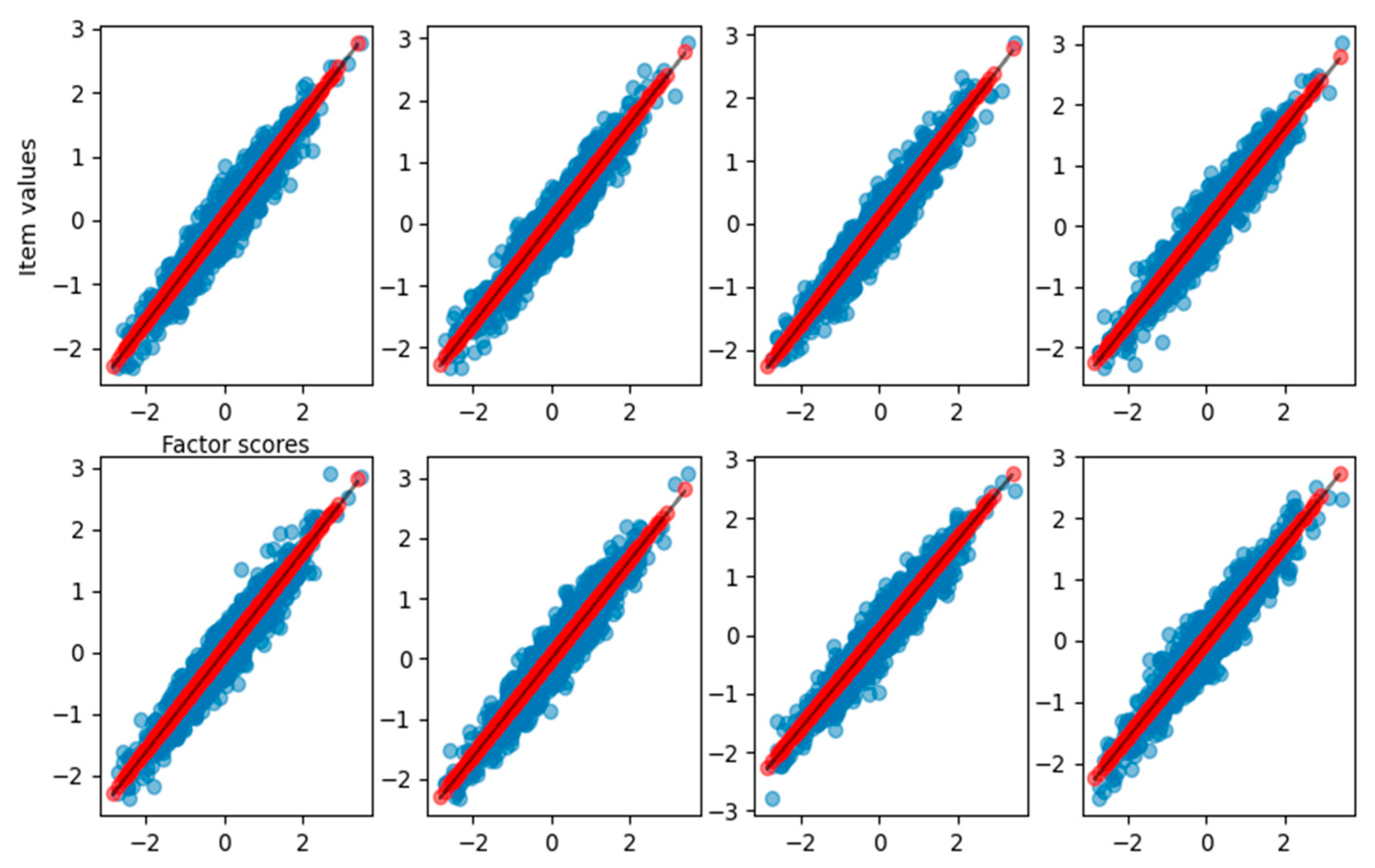

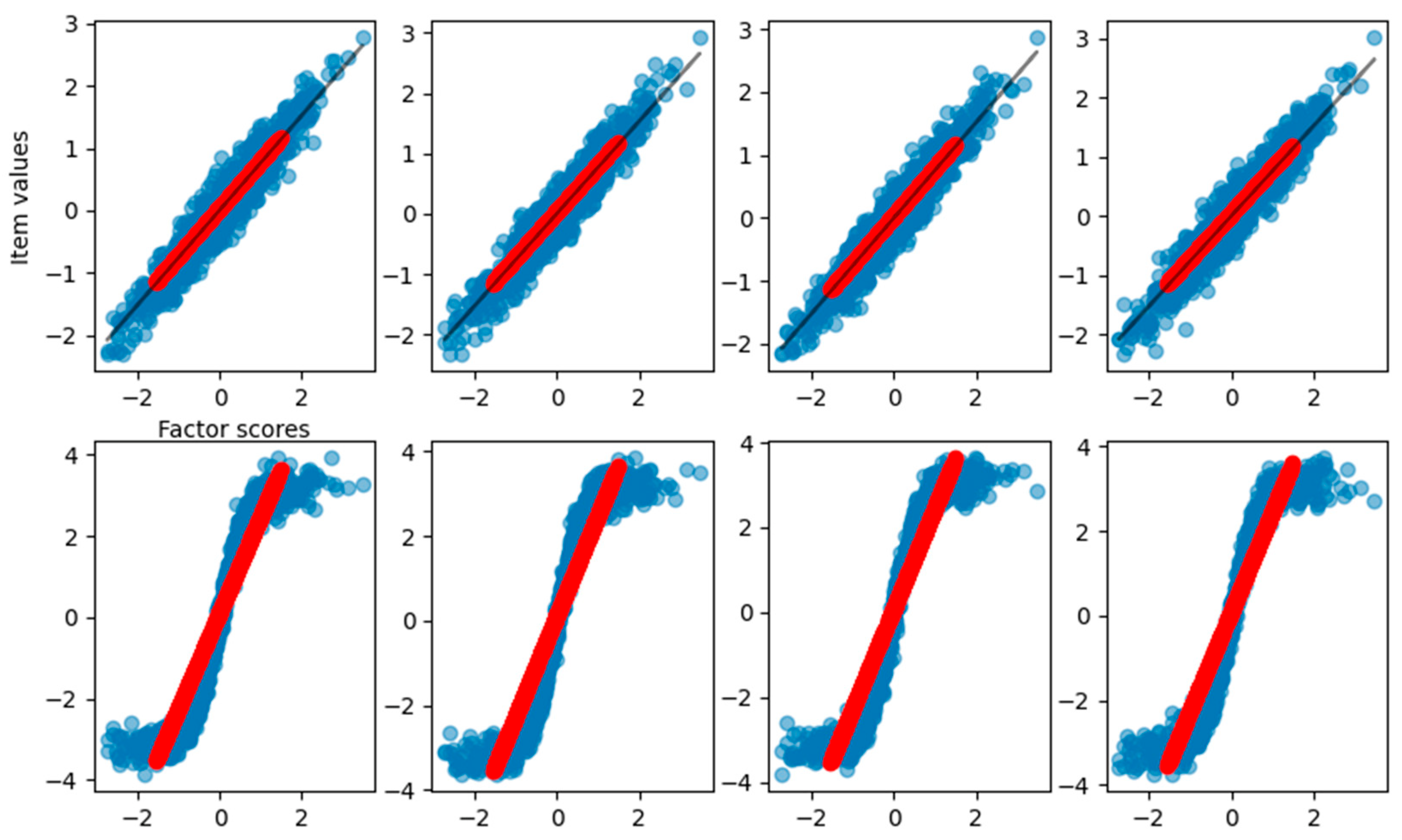

The VAE, like FA, correctly reconstructs the relationship between factor scores and item values, with an explained variance of 0.91. Figure 4 reports the graphical reconstruction.

Figure 4 shows that, unlike FA, the VAE also reconstructs the noise in the relationship between factor scores and item values. This is because sampling from the latent space with a single Monte Carlo draw introduces Gaussian noise, so the VAE reconstructions are more scattered than those of FA. Nevertheless, this noise does not prevent the correct reconstruction of the function that specifies the relationship between the factor and the items. The density of the factor scores estimated by the VAE, along with the density of the true scores, is reported in Figure 5.
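The scatter follows from how a VAE decodes: the latent code is drawn with the reparameterization trick, $z = \mu + \sigma\varepsilon$ with $\varepsilon \sim N(0, 1)$, so a single draw adds Gaussian noise to the reconstruction. The sketch below uses a hypothetical linear decoder weight and a hypothetical encoder output for one respondent, not the trained network; the standard deviation of the single-draw reconstructions is approximately the decoder weight times $\sigma$.

```python
import math
import random


def reparameterized_sample(mu, log_var, rng):
    """One Monte Carlo draw z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return mu + math.exp(0.5 * log_var) * rng.gauss(0.0, 1.0)


rng = random.Random(7)
loading = 0.8                       # hypothetical decoder weight for one item
mu, log_var = 0.5, math.log(0.04)   # hypothetical encoder output (sigma = 0.2)

# Decode many independent single-draw reconstructions for the same respondent.
draws = [loading * reparameterized_sample(mu, log_var, rng) for _ in range(10_000)]
mean_recon = loading * mu           # reconstruction decoded from the posterior mean
spread = math.sqrt(sum((d - mean_recon) ** 2 for d in draws) / len(draws))
print(f"decoded from mean: {mean_recon:.3f}; SD of single-draw reconstructions: {spread:.3f}")
```

Decoding from the posterior mean $\mu$ instead of a single draw removes this sampling noise, which is one option when smoother reconstructions are preferred.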

The VAE correctly recovers the Gaussian distribution of the factor scores, as imposed by the regularization of the VAE latent space. The MAE between the true and estimated scores is 0.13, and the RMSE is 0.17, slightly higher than for FA in the linear setting.

These findings show that a VAE with a single hidden layer correctly finds the underlying relationship between factor scores and observed items in a linear scenario, retrieving the same loadings as FA.

4.2. Nonlinear Case

In this section, we modify the dataset by injecting one or more items with a nonlinear relationship to their underlying factor, as described by Equation (13). The fitting process is the same as described above, except that the function $f$, which represents the connection between items and scores, is now the nonlinear equation.
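Equation (13) is not reproduced in this section, so the sketch below assumes, for illustration only, a logistic item function $x = 1/(1 + e^{-\lambda F})$ with the loading $\lambda$ as the single free parameter. Because there is only one parameter, the least-squares fit can be done with a golden-section search over a bracketing interval (here the hypothetical range 0.01 to 10); a general nonlinear least-squares routine would serve the same purpose.

```python
import math
import random


def sigmoid_item(score, loading):
    """Hypothetical sigmoidal item-factor function; Equation (13) may differ."""
    return 1.0 / (1.0 + math.exp(-loading * score))


def sse(loading, scores, items):
    """Sum of squared errors of the sigmoid model for a given loading."""
    return sum((x - sigmoid_item(f, loading)) ** 2 for f, x in zip(scores, items))


def fit_sigmoid_loading(scores, items, lo=0.01, hi=10.0, tol=1e-5):
    """Golden-section search for the loading that minimizes the squared error."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if sse(c, scores, items) < sse(d, scores, items):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
    return (a + b) / 2


rng = random.Random(3)
scores = [rng.gauss(0, 1) for _ in range(2000)]
# Hypothetical nonlinear item with true loading 2.5 and small Gaussian noise.
items = [sigmoid_item(f, 2.5) + rng.gauss(0, 0.05) for f in scores]
loading_hat = fit_sigmoid_loading(scores, items)
print(f"estimated loading: {loading_hat:.3f}")
```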

In the first experiment that we conducted, item number eight of the dataset was modified to have a nonlinear relationship with the factor. The loadings and communalities estimated from the FA are reported in Table 3, along with the parameters estimated from the fitting.

As we can see, the loadings of item number eight returned from FA are completely different from the right loading. In

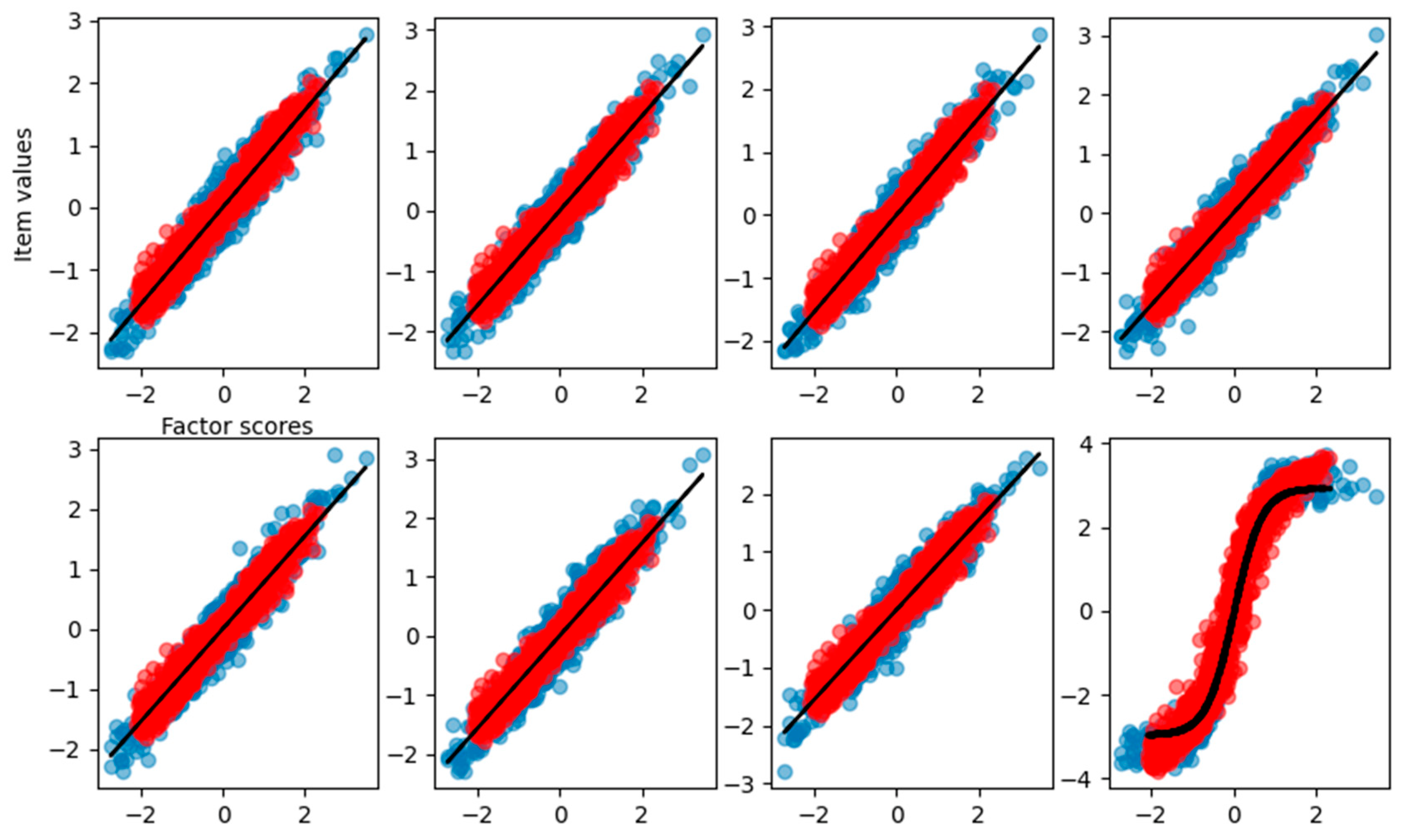

Figure 6, the graphical representation is reported.

The last item–factor relationship, which FA represents with a linear function, is not captured correctly. The fitting curve is not reported because a nonlinear sigmoidal curve cannot be meaningfully fitted to a linear reconstruction of the data. The explained variance is still fairly high (0.88) but lower than before. Figure 7 reports the density of the estimated factor scores together with the density of the true scores. The estimated density still resembles the true Gaussian distribution (MAE = 0.16, RMSE = 0.20), even though one item is incorrectly reconstructed.

On the other hand, the VAE correctly captures the relationship between all the items and the factor, fitting every item well and retrieving the loadings used to generate the data (see Table 4 and Figure 8).

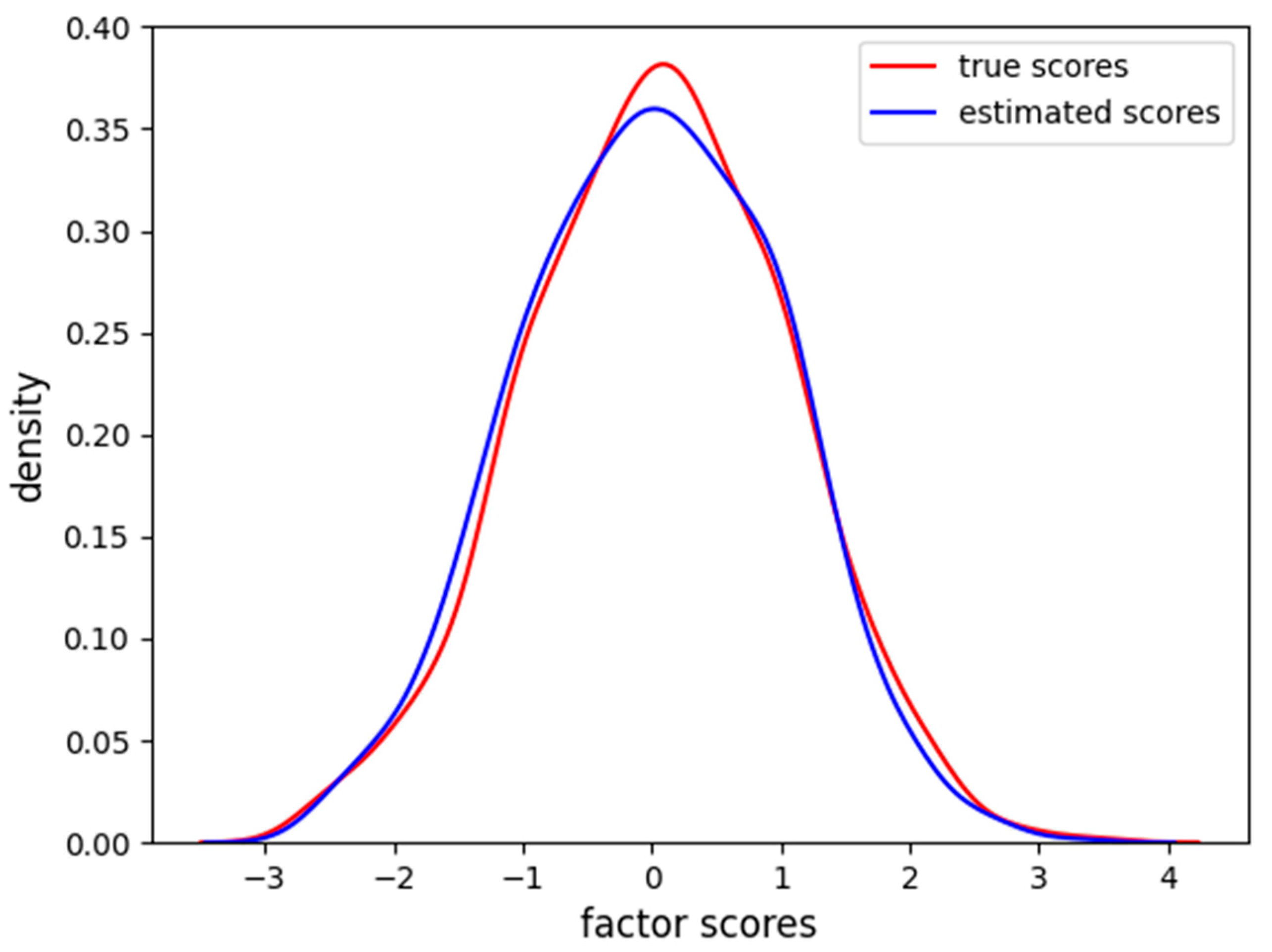

The last item is correctly approximated, and the fit recovers the loading that connects the scores and the item values through the sigmoid function. The explained variance of the VAE is 0.92. Figure 9 reports the density of the estimated factor scores.

The Gaussian distribution is correctly identified, with an MAE of 0.12 and an RMSE of 0.16. The VAE is thus able to reconstruct the relationship and estimate the factor scores even when the dataset contains one nonlinear item.

When the dataset is a mixture of 50% linear items and 50% nonlinear items, FA correctly finds the loadings for the linear items but fails completely to reconstruct the remaining nonlinear items (Table 5).

The graphical reconstruction in Figure 10 shows that FA minimizes the error by focusing mostly on the linear part of the sigmoid, leaving out the item values associated with high and low factor scores. Even the linear items are reconstructed only in the central range of the factor scores, and the more extreme values are essentially not predicted.

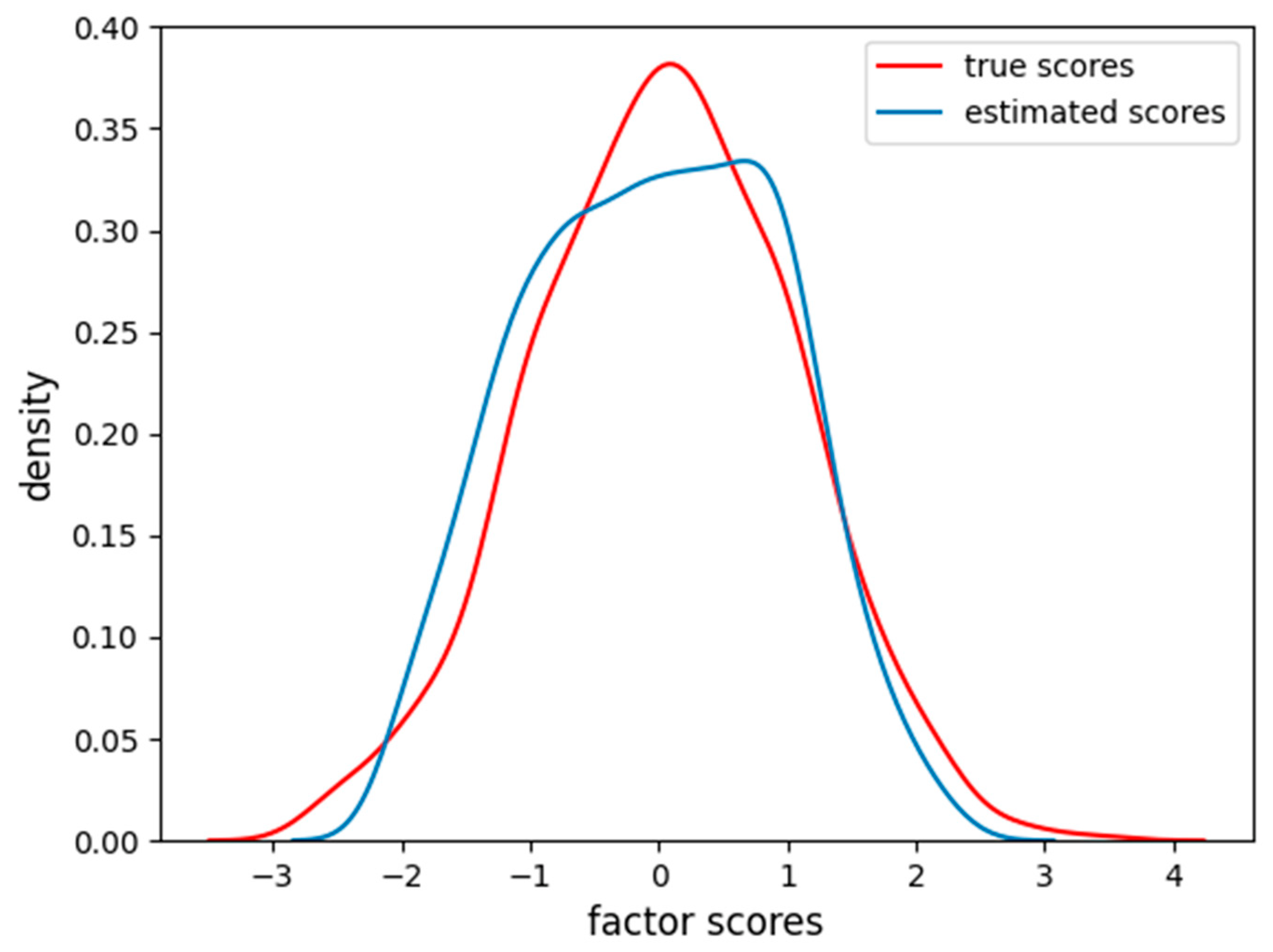

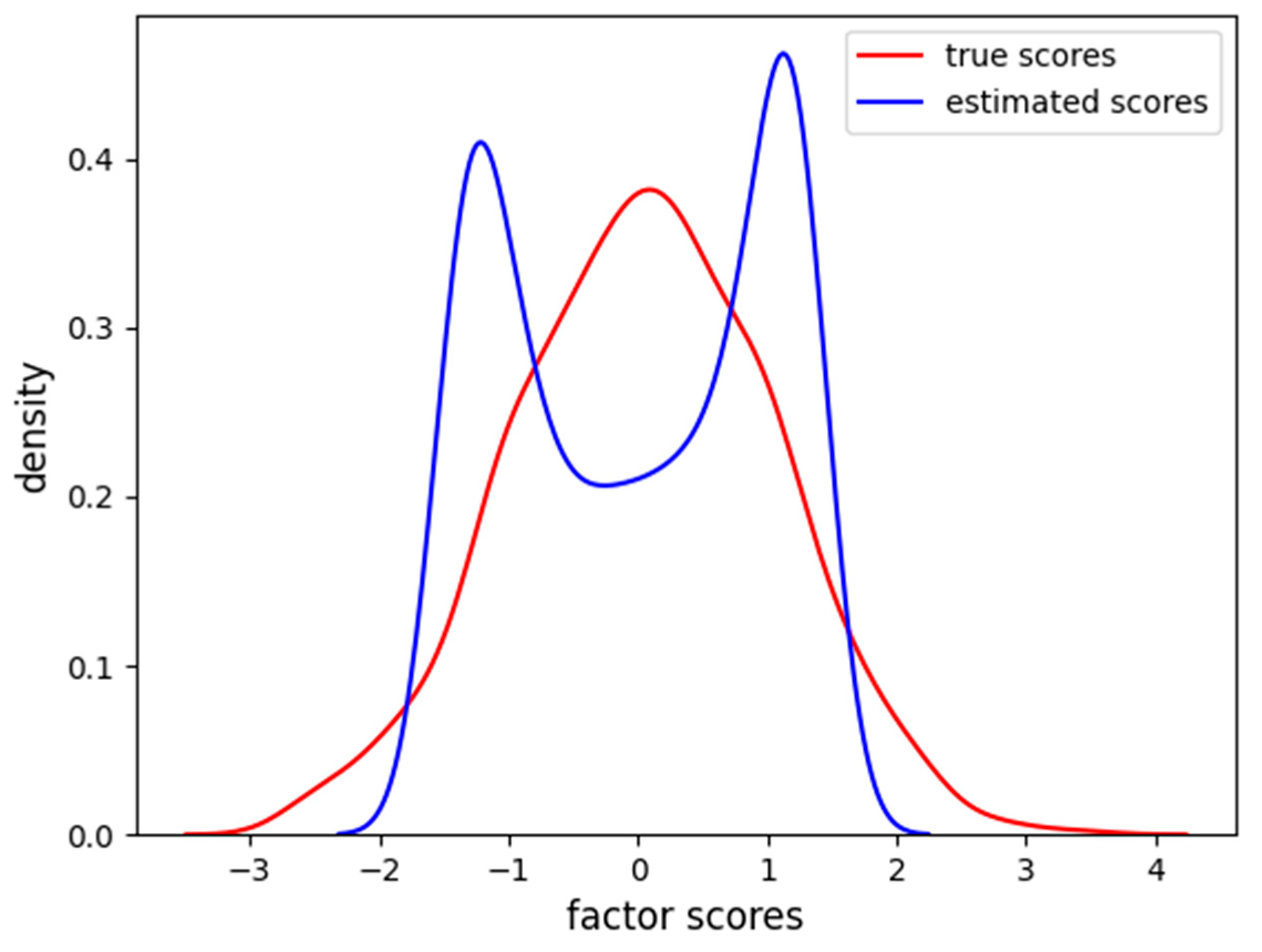

The factor score estimates are less accurate than before (MAE = 0.35, RMSE = 0.46). Figure 11 shows two peaks in the estimated density: the two classes of items (linear and sigmoidal) are probably separated into distinct latent factor scores, and FA fails to recover the correct Gaussian distribution of the factor scores.

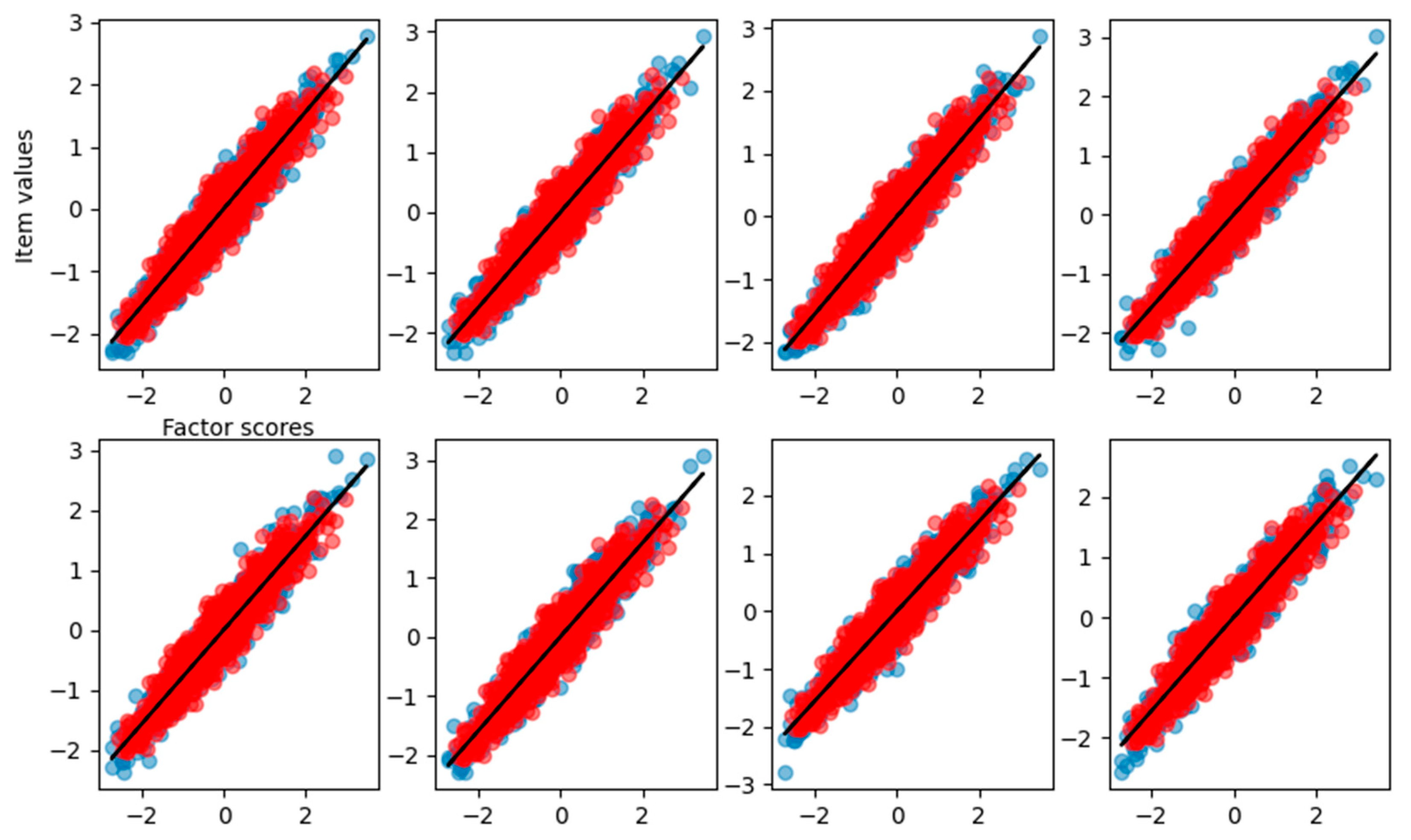

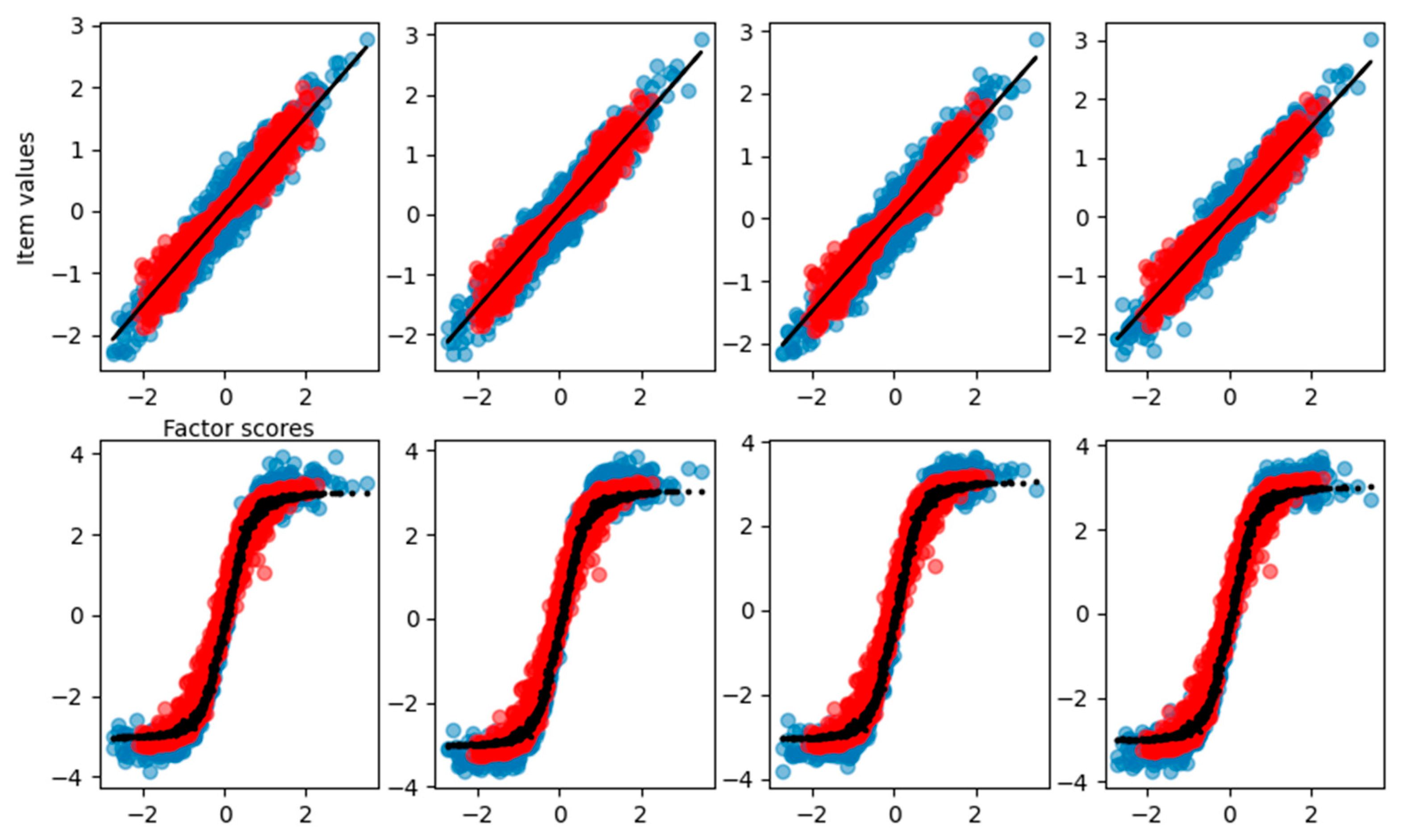

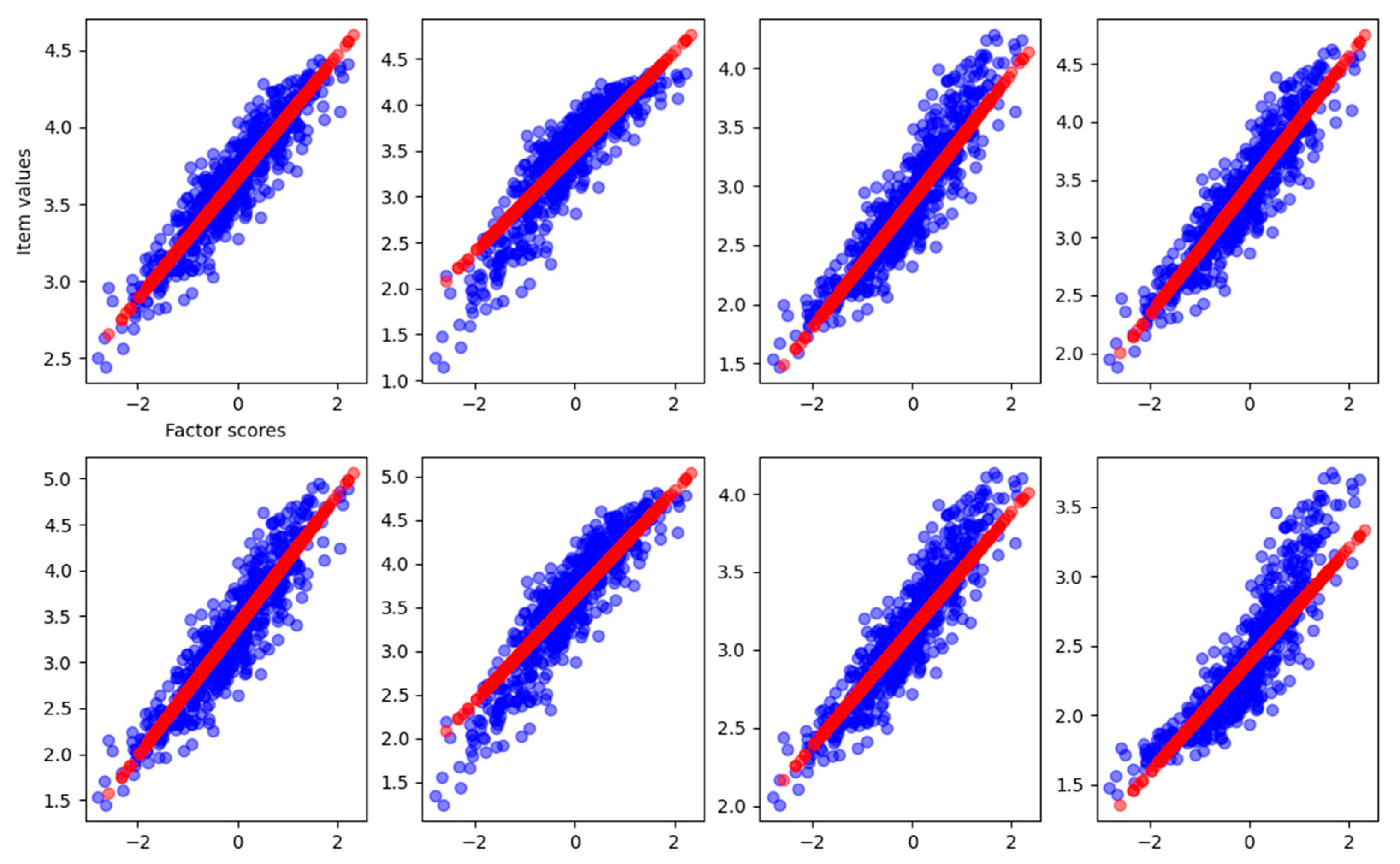

The VAE, on the other hand, correctly reconstructs all the item–factor relationships (Figure 12) and the correct loadings (Table 6), with an explained variance of 0.92.

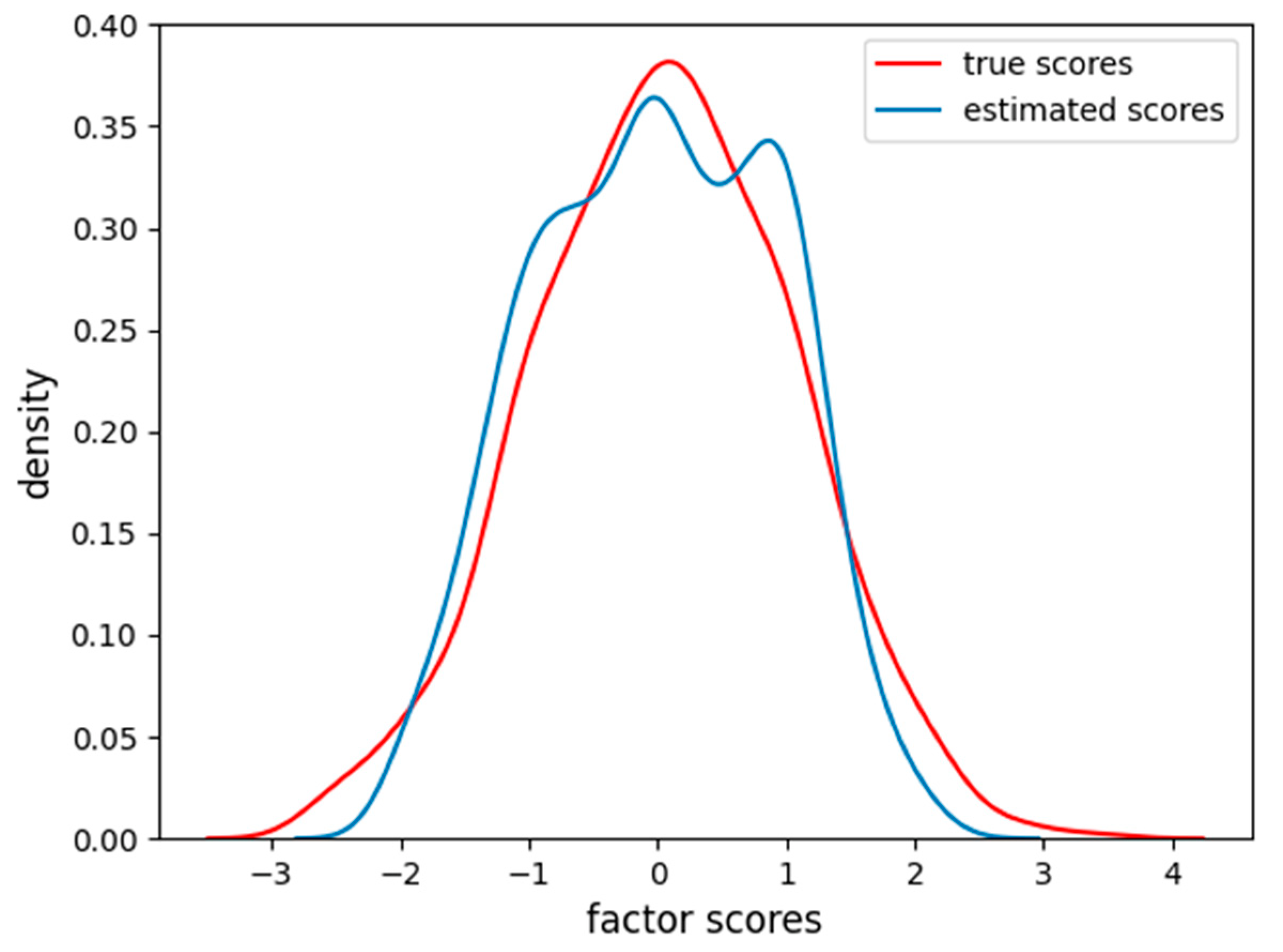

Furthermore, the VAE correctly infers the Gaussian distribution of the factor scores, with an error only slightly higher than in the previous, simpler experiments (MAE = 0.15, RMSE = 0.18) (Figure 13). The VAE reconstructs the relationship over a wider range of factor scores, providing a more accurate reconstruction of both linear and nonlinear items.

4.3. Results Summary and Generalization to a Two-Factor Dataset

In this section, for clarity, we summarize all previous results in Table 7, including the explained variance of the item reconstruction and the MAEs and RMSEs of factor score retrieval. Additionally, we report the results of experiments with a two-factor dataset in Table 8. The data generation procedure follows the same method as before: for each factor, eight related items are generated according to Equations (11) and (14) for the linear and nonlinear relationships, respectively.
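A minimal generator for a two-factor design of this kind is sketched below. Since Equations (11) and (14) are not shown here, it assumes a linear item function λF plus noise and a logistic item function 1/(1 + e^(−λF)) plus noise, independent standard normal factors, and a noise standard deviation of 0.3; the loadings and the name `simulate_two_factor` are hypothetical.

```python
import math
import random


def simulate_two_factor(n_respondents, loadings, n_nonlinear, noise_sd=0.3, seed=0):
    """Hypothetical generator: two independent N(0, 1) factors, len(loadings) items each.

    For each factor, the first `n_nonlinear` items use a logistic link and the rest
    a linear one; Gaussian noise is added to every item."""
    rng = random.Random(seed)
    data, factors = [], []
    for _ in range(n_respondents):
        f = (rng.gauss(0, 1), rng.gauss(0, 1))
        row = []
        for k in range(2):
            for j, lam in enumerate(loadings):
                if j < n_nonlinear:
                    signal = 1.0 / (1.0 + math.exp(-lam * f[k]))
                else:
                    signal = lam * f[k]
                row.append(signal + rng.gauss(0, noise_sd))
        data.append(row)
        factors.append(f)
    return factors, data


factors, data = simulate_two_factor(
    500, [0.9, 0.8, 0.8, 0.7, 0.7, 0.6, 0.6, 0.5], n_nonlinear=2)
print(len(data), len(data[0]))  # 500 respondents, 16 items
```

Varying `n_nonlinear` from one to four per factor reproduces the kind of design described in the text, with twice as many nonlinear items in total as in the corresponding one-factor setting.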

As shown in Table 8, FA performs worse on the two-factor data in terms of explained variance, while the VAE remains stable. This is due to the larger amount of nonlinearity in the dataset: each factor has one to four nonlinear items, doubling the total number of nonlinear items compared with the one-factor experiments summarized in Table 7. The performance of factor analysis in terms of factor score reconstruction is similar to that obtained in the one-factor case, likely because the number of nonlinear items related to each factor remains the same. We report the MAE and RMSE averaged over the two factors.

4.4. Discussion

In the study described in the previous subsections, we tested a variational autoencoder on two datasets simulated from a factor-based population. The first dataset contained linear relationships between items and the factor, while the second contained nonlinear relationships.

Our results show that when the relationships between items and factors are linear, the VAE produces results comparable to linear factor analysis. Indeed, the VAE converged towards the factor analysis solution, accurately estimating the latent scores and allowing the factor loadings to be retrieved.

However, when the relationships between items and factors are nonlinear, the VAE outperforms factor analysis in terms of estimating latent scores and reconstructing the original data. In particular, the results show that the relationship between the internal nodes of the VAE and the reconstructed output approximates the function that defined the relationship between the items and the factors. By fitting this function, we obtained the values of the loadings, which are the parameters of the function.

One important limitation of this study is that psychometric data are often ordinal, whereas the items we simulated were continuous. For ordinal data in the linear case, several methods have been proposed, such as polychoric correlations and weighted least-squares estimation.

Moreover, while we simulated a sigmoid function in this study and successfully retrieved the factor loadings, the relationships between items and factors in real cases may take more complex and idiosyncratic forms. Future research should therefore explore how VAEs learn these intricate relationships.

Testing the VAE on real datasets is therefore necessary to evaluate its performance under more realistic conditions and, consequently, its applicability in practical situations.

In the next study, we explore the application of VAEs to real, ordinal data, considering both linear and nonlinear relationships between items and factors.