Abstract

Subjective brightness perception reportedly differs among the peripheral visual fields owing to lower- and higher-order cognition. However, little is known about subjective brightness perception in world-centered coordinates, as opposed to the visual fields. In this study, we investigated the anisotropy of subjective brightness perception in world-centered coordinates. We based this on pupillary responses to stimuli at five locations and manipulated world-centered coordinates through active (requiring head movement) and passive (without head movement) scenes in a virtual reality environment. Specifically, we aimed to elucidate whether any of the five locations in world-centered coordinates confers an ecological advantage. The pupillary responses to glare and halo stimuli indicated that brightness perception differed among the five locations in world-centered coordinates. Furthermore, we found that the pupillary response to stimuli at the top location might be influenced by ecological factors (such as the bright sky and the presence of the sun). Thus, we have contributed to the understanding of how extraretinal information influences subjective brightness perception in world-centered coordinates, demonstrating that the pupillary response is independent of head movement.

1. Introduction

Different perceptions of an identical object located in different visual fields (VFs) of the eye are known as VF anisotropy. VF anisotropy may arise from the opponent processes of many neural functions in the visual system. For example, visual input signals projected onto the retina from the left VF are carried to the right primary visual cortex (visual area 1; V1), and vice versa. Furthermore, in human visual processing, the signals from V1 are projected to the prestriate cortex (visual area 2; V2) via the ventral stream, representing visual input derived from the natural world.

Regarding the representation of visual input, Andersen et al. (1993) proposed that spatial information is represented by combining stimulus information formed through various coordinate transformations during visual processing [1]. Visual processing starts when light rays hit the retina, and the visual input signals are encoded in retinal coordinates. These visual signals (retinal coordinates) are then combined with non-visual signals (extraretinal coordinates) in the brain to encode the visual stimuli. Extraretinal coordinates are derived from non-retinal information. First, head-centered coordinates use the head as the reference frame and are defined by integrating the retinal coordinates with eye position. Second, body-centered coordinates are obtained by combining information about retinal, eye, and head positions. Third, world-centered coordinates are formed by combining head-centered coordinates with vestibular input (a source of information that senses rotational movement for spatial updating).
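The chain of reference frames described above can be illustrated with a toy geometric model. The sketch below is our own simplification, not a model from Andersen et al. [1]. It treats the eye and head as pure rotations about a common center and ignores translation between the eye and neck, ocular torsion, and vestibular dynamics. It also assumes a yaw-then-pitch rotation convention, and all function names are hypothetical. The point it shows is the logic of the present design: a stimulus held on the fovea has the same retinal coordinates whatever the head orientation, while its world-centered direction changes with the head-in-world rotation.

```python
import math

# Toy frame convention (assumption): x = right, y = up, z = forward; angles in degrees.


def rotate_pitch(v, deg):
    """Rotate v about the x-axis; positive deg tilts the forward axis upward."""
    x, y, z = v
    t = math.radians(deg)
    return (x, y * math.cos(t) + z * math.sin(t), -y * math.sin(t) + z * math.cos(t))


def rotate_yaw(v, deg):
    """Rotate v about the vertical y-axis; positive deg turns the forward axis rightward."""
    x, y, z = v
    t = math.radians(deg)
    return (x * math.cos(t) + z * math.sin(t), y, -x * math.sin(t) + z * math.cos(t))


def to_azimuth_elevation(v):
    """Convert a direction vector to (azimuth, elevation) in degrees."""
    x, y, z = v
    return (math.degrees(math.atan2(x, z)),
            math.degrees(math.atan2(y, math.hypot(x, z))))


def world_direction_of_fovea(eye_yaw, eye_pitch, head_yaw, head_pitch):
    """World-centered direction of the foveated point (hypothetical helper).

    Retinal stage: the fixated stimulus lies on the fovea, i.e. along the gaze axis.
    Head-centered stage: rotate the gaze axis by the eye-in-head orientation.
    World-centered stage: rotate again by the head-in-world orientation, the
    quantity that vestibular input helps to update.
    """
    gaze_in_head = rotate_yaw(rotate_pitch((0.0, 0.0, 1.0), eye_pitch), eye_yaw)
    gaze_in_world = rotate_yaw(rotate_pitch(gaze_in_head, head_pitch), head_yaw)
    return to_azimuth_elevation(gaze_in_world)


if __name__ == "__main__":
    # Identical retinal input (stimulus on the fovea, eyes centered in the head)
    # under different head orientations: only the world-centered location changes.
    head_poses = {"center": (0, 0), "top": (0, 65), "bottom": (0, -65),
                  "left": (-65, 0), "right": (65, 0)}
    for name, (yaw, pitch) in head_poses.items():
        az, el = world_direction_of_fovea(0, 0, yaw, pitch)
        print(f"{name:>6}: azimuth {az:6.1f} deg, elevation {el:6.1f} deg")
```

The 65° head rotations mirror the eccentricity of the peripheral stimulus locations used later in this study. Because eye and head rotations compose, the same world-centered location could also be reached with a different split between eye and head movement.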

In most recent studies of perceptual differences among the VFs, the observer's head was fixed and the gaze was fixated on a reference object placed in the central VF. VF anisotropy (manipulated through retinal coordinates) has been reported for many aspects of visual perception [2,3,4,5]. Specifically, the vertical hemifield has a more dominant effect among the VFs than the horizontal hemifield [6]. Moreover, during psychophysical experiments that require attentional resources in response to a change in a light source, pupil sensitivity to light is higher in the upper visual field (UVF) than in the lower visual field (LVF) [7,8,9]. Additionally, in 3D spatial interactions, objects located in the UVF are biased toward the extrapersonal region (for scene memory), whereas objects in the LVF are biased toward the peripersonal (PrP) region (for visual grasping). Other advantages of the LVF include better contrast sensitivity [10], visual accuracy [11], motion processing [12,13], and spatial resolution of attention and spatial frequency sensitivity [14]. This LVF bias in processing object information is attributed to the substantially higher number (60% more) of ganglion cells in the superior hemiretina, onto which the LVF is projected, than in the inferior hemiretina [15]. This difference results in improved visual performance in the LVF.

VFs are also known to evoke different brightness perceptions. Perceptual brightness modulation is associated with cognitive factors such as memory and visual experience. This effect has been studied using pupillometry, with photographs and paintings of the sun as stimuli. Binda et al. (2013) confirmed that sun photographs yielded greater pupil constriction than other stimuli that were physically equiluminant (i.e., squares with the same mean luminance as each sun photograph, phase-scrambled images of each sun photograph, and photographs of the moon) [16]. Subsequently, Castellotti et al. (2020) discovered that paintings depicting the sun produce greater pupil constriction than paintings depicting the moon or no light source, despite having the same overall mean luminance [17]. Recently, Istiqomah et al. (2022) reported that image stimuli perceived as the sun elicited greater pupil constriction than those perceived as the moon under mean-luminance-controlled conditions [18]. Their results indicated that perception, rather than the mere physical luminance of the image stimuli, plays a dominant role, owing to the influence of ecological factors such as the presence of the sun. Together, these studies demonstrate that pupillometry reflects not only physical luminance (low-order cognition) but also subjective brightness perception (higher-order cognition) in response to stimuli. In addition, Tortelli et al. (2022) confirmed that the pupillary response was influenced by contextual information (such as images of the sun) when inter-individual differences in observers' perception were taken into account [19].

Pupillometry measures pupil size in response to stimuli and may reflect various cognitive states. The initial change in pupil diameter is caused by the pupillary light reflex (PLR). However, the degree of change in pupil diameter is influenced by visual attention, visual processing, and the subjective interpretation of brightness. For example, Laeng and Endestad (2012) reported that a glare illusion, which is perceived as brighter than its physical luminance, induced greater pupil constriction [20]. This glare illusion has a luminance gradient converging toward the pattern's center, which strongly enhances perceived brightness [21,22]. Furthermore, Laeng and Sulutvedt (2014) revealed that the pupils constricted considerably when participants imagined a sunny sky or their mother's face under sunlight [23]. This constriction reflects the eyes' response to hazardous light (such as sunshine). Another study, by Mathôt et al. (2017), revealed that words conveying a sense of brightness yielded greater pupil constriction than those conveying a sense of darkness [24]. These differences indicate that the pupils respond to a potentially damaging light source even when it exists only in the observer's imagination. In addition, Suzuki et al. (2019) found that the blue glare illusion generated the largest pupil constriction. They noted that blue is a dominant color for the human visual system in natural scenes (e.g., the blue sky). They also indicated that, although the average physical luminance of the glare and control stimuli was identical, pupillary responses to the glare illusion reflect subjective brightness perception [22].

Recently, by manipulating retinal coordinates, we demonstrated that the pupillary responses to glare and halo stimuli differed depending on whether the stimuli were presented in the upper, lower, left, or right VF [25]. Stimuli (glare and halo) in the UVF elicited the largest pupil dilation. This dilation was significantly reduced specifically for the glare illusion, which we attributed to higher-order cognition. These results suggest that the glare illusion was interpreted as a dazzling light source (the sun), which influenced the pupillary responses. However, our previous study and other studies of subjective brightness perception in the VFs (also mentioned in the third paragraph of this section) raise a possibility: differences in retinal coordinates and the many opponent processes of the human visual system may affect subjective brightness perception in the VFs. Therefore, we propose to keep retinal coordinates identical while manipulating world-centered coordinates and to examine pupillary responses to the glare illusion and halo stimuli under these conditions. This approach could provide valuable insights into whether subjective brightness perception is anisotropic in world-centered coordinates. In particular, this study aimed to elucidate whether any of five locations in world-centered coordinates confers an ecological advantage, based on pupillary responses to the glare illusion, which overtly conveys a dazzling effect.

The difference between our previous and present studies lies in the visual input: our previous study manipulated retinal coordinates, whereas the present study manipulated world-centered coordinates (formed by combining head-centered coordinates with vestibular input). To investigate the anisotropy of subjective brightness perception in world-centered coordinates, we presented glare and halo stimuli at five locations (top, bottom, left, right, and center) in world-centered coordinates. We measured the pupillary responses to these stimuli while observers fixated on a fixation cross at the center of the stimulus. We used a virtual environment because it allowed us to easily control both the physical luminance of the stimuli and the designed environment. In addition, the contextual cues of a 3D virtual environment provide more features associated with the given task and reduce the visual perception area, so observers can perceive the stimuli easily [26]. Furthermore, forming world-centered coordinates requires vestibular input to be combined with head-centered coordinates (the integration of retinal coordinates and eye position). Therefore, we adopted an active scene, in which observers moved their heads toward the stimulus location in world-centered coordinates, providing the vestibular input. Our results could, however, be pupil-size artifacts induced by head movement in the active scene. To rule this out, we also created a passive scene, in which the virtual environment moved automatically as a substitute for the head movement and head movement was not allowed during stimulus presentation.
In addition, we used both glare and halo stimuli to determine whether the pupillary responses to them differ across the five locations in world-centered coordinates. We were particularly interested in ecological factors, with the glare illusion serving as a representation of the sun [22,25]. Using the active and passive scenes, we hypothesized that pupillary responses are anisotropic in world-centered coordinates. In particular, we expected the largest difference between the pupillary responses to the glare (more constricted) and halo stimuli at the top. We also expected the greatest pupil constriction for stimuli at the top, as a consequence of ecological factors such as avoiding the dazzling effect of sunshine entering the retina.

2. Materials and Methods

2.1. Participants

A total of 20 participants (15 men and 5 women; aged 23–35 years; mean age = 27.1 years, SD = 4.04 years) took part in this study. Pupil size data from two observers were excluded from the analyses because their trial rejection ratio exceeded 30% after interpolation and filtering in the preprocessing stage. All participants had normal or corrected-to-normal vision. All experimental procedures were conducted according to the ethical principles outlined in the Declaration of Helsinki and approved by the Committee for Human Research at our university. The experiment was conducted with complete adherence to the approved guidelines of the committee. Written informed consent was obtained from the participants after procedural details had been explained to them.

2.2. Stimuli and Apparatus

We conducted two experiments with each observer, namely the active and passive scenes in the VR environment. The stimuli were presented using the Tobii Pro VR Integration, an HTC Vive HMD with an integrated eye tracker. We measured pupil diameter and eye gaze movements using its infrared camera at a sampling rate of 90 Hz; the device reported pupil diameter in meters. We developed the VR environment using the Unity game engine (version 2018.4.8f1). The HTC Vive HMD has a total resolution of 2160 × 1200 pixels across two active-matrix organic light-emitting diode screens and a 110° field of view.

The pupil size data measured by the Tobii Pro were transferred to Unity to be saved and processed together with the stimulus presentation data. The observer was located at the center of the gray-grid-sphered background, created in Blender 2.82 (open-source 3D computer graphics software). The gray-grid-sphered background provided a visual cue that the VR environment moved when the observer moved their head.

In the active scene, the observer had to move their head according to the location of the stimulus in the VR environment; in the passive scene, the observer had to keep their head stable throughout the experiment. For the passive scene, we recorded the head movement coordinates of four people in a preliminary study using the HTC Vive Pro Eye HMD with an identical VR environment and a refresh rate of 90 Hz. These recordings were played back to the participants as a replacement for their own head movements, so that the VR environment moved automatically according to the location of the stimuli during the experiment. Detailed information on the flow of the experiments is presented in the Procedure subsection.

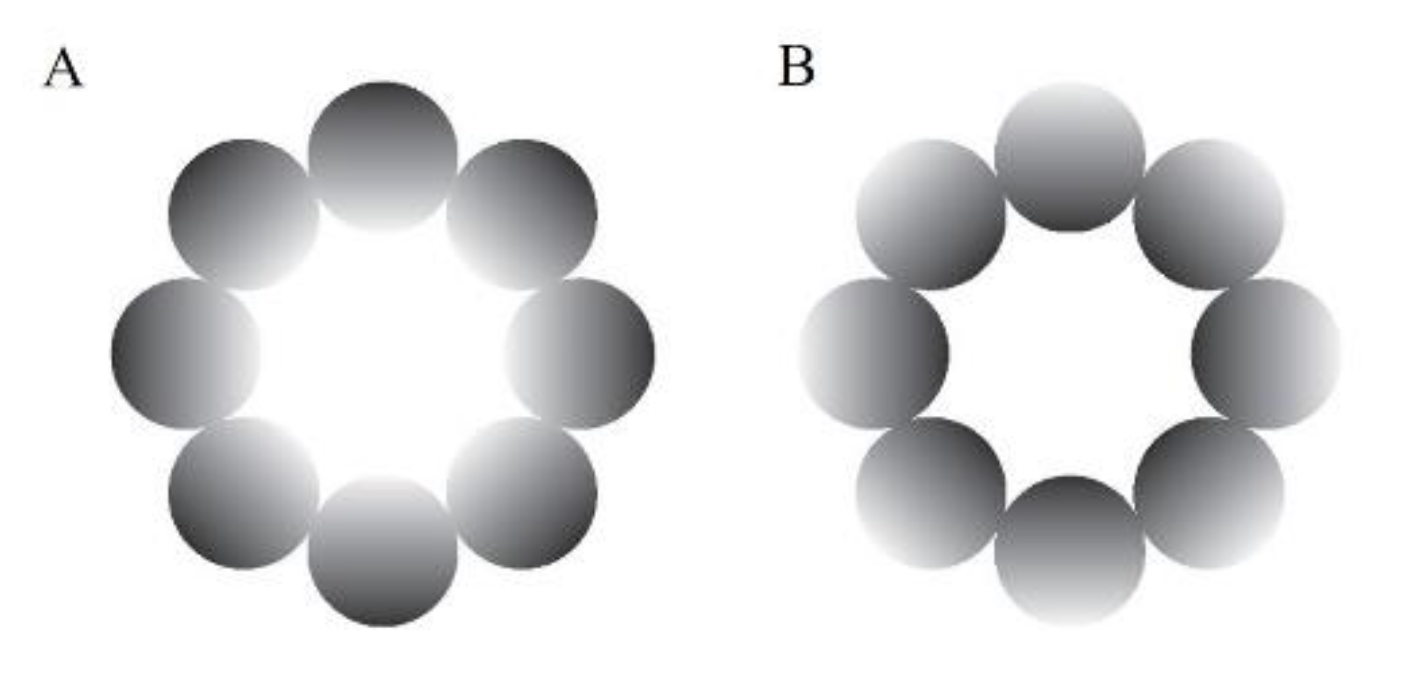

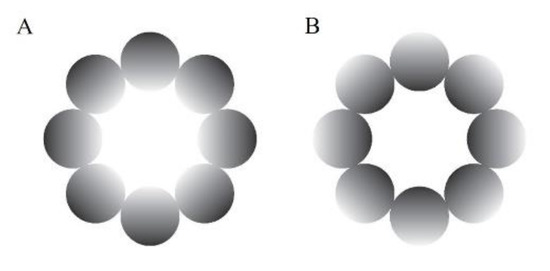

An achromatic glare illusion (Figure 1A), in which luminance increases gradually from the periphery to the central white region, and a halo stimulus (Figure 1B), in which luminance decreases gradually from the periphery to the center, were used as stimuli. We chose these patterns because they have several advantages over the Asahi and ring-shaped glare illusions (Istiqomah et al., 2022). Their physical luminance is distributed more evenly on the retina, an inverse form can easily be created, and the average physical luminance of the glare and its inverse form (the halo) is identical. In the gray-grid-sphered background, we used RGB colors [130, 130, 130] and [100, 100, 100] for the gray circle and the fixation cross, respectively. All stimulus and VR environment dimensions are given in Unity units (one Unity unit corresponds to one meter). The distance between the participant and the stimulus in the VR environment was 100 m. The stimulus comprised eight luminance gradation circles, each centered 14.41 m from the center of the stimulus (approximately 8.24° of visual angle), and each gradation circle had a diameter of 11.19 m (approximately 6.40°). The central white area of the stimulus was 17.62 m in diameter (approximately 10.07°). The overall stimulus diameter was therefore 40 m (approximately 22.62°). The fixation cross was 2.93 m in diameter (approximately 1.68°). The stimuli presented at the top, bottom, left, and right of the VR environment were positioned 76.64 m from the central position (approximately 65°). The pupil size data were analyzed using MATLAB R2021a.

Figure 1.

Experimental stimuli. (A) The glare illusion, with an increasing luminance gradation from the periphery to the central white region; (B) the halo stimulus, with a decreasing luminance gradation from the periphery to the center.
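The visual angles quoted in the Stimuli and Apparatus subsection are consistent with the standard full-angle formula V = 2·atan(S / 2D) for an extent S viewed at distance D, here D = 100 m. The short Python sketch below is our illustration, not the authors' MATLAB code, and the dictionary labels are hypothetical.

```python
import math

VIEWING_DISTANCE_M = 100.0  # participant-to-stimulus distance (1 Unity unit = 1 m)


def visual_angle_deg(size_m, distance_m=VIEWING_DISTANCE_M):
    """Full visual angle (deg) subtended by an extent size_m centered on the line of sight."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


# Extents quoted in the Stimuli and Apparatus subsection (meters).
extents_m = {
    "gradation-circle center offset": 14.41,
    "gradation-circle diameter": 11.19,
    "central white area": 17.62,
    "whole stimulus": 40.0,
    "fixation cross": 2.93,
}

for name, size in extents_m.items():
    print(f"{name}: {visual_angle_deg(size):.2f} deg")

# Half the angle of the central white area gives the gaze-rejection radius.
print(f"gaze radius: {visual_angle_deg(17.62) / 2:.3f} deg")
```

With these inputs the formula gives 8.24°, 6.40°, 10.07°, 22.62°, and 1.68°, and half of the central-area angle gives the 5.035° gaze-rejection radius used in preprocessing. The 8.24° quoted for the 14.41 m offset corresponds to the full-angle formula. Treating that offset as an eccentricity, atan(14.41/100), would give about 8.20° instead.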

2.3. Procedure

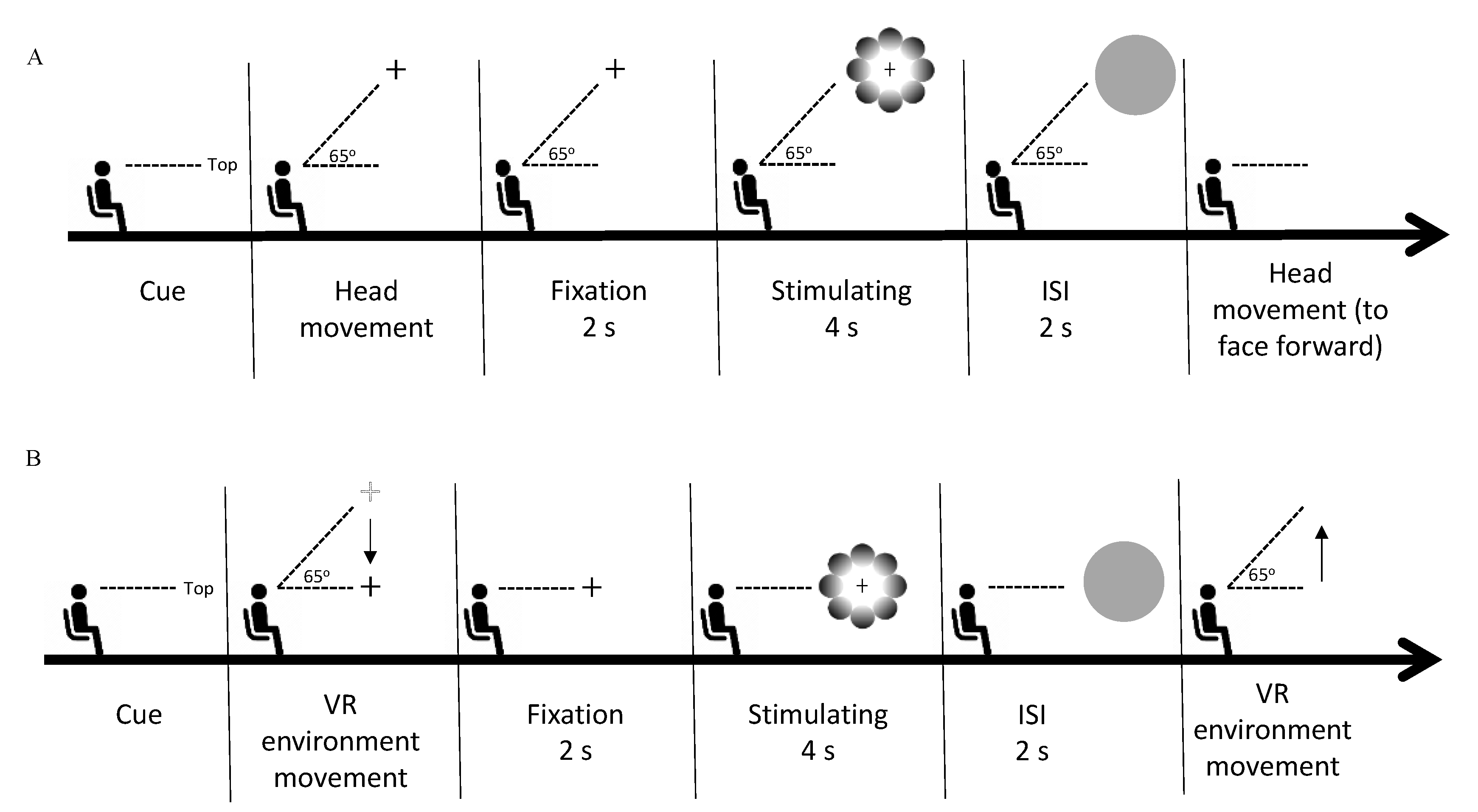

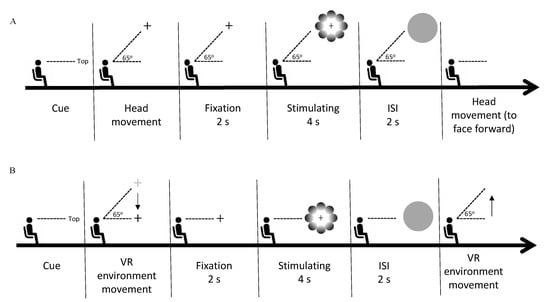

We placed the stimulus at five locations in the VR environment, corresponding to world-centered coordinates, and instructed observers to fixate on the fixation cross at the stimulus center. This produced the same retinal coordinates in the active and passive scenes. In the active scene, participants were required to move their heads, whereas in the passive scene, the recorded head movement coordinates replaced the head movement toward the stimulus location. We measured pupil diameter during stimulus presentation at each stimulus location. In both experiments (active and passive), observers were seated and faced forward. The two experiments were conducted on different days, in random order, so that eye fatigue from the first experiment would not influence the pupillary response in the second. We calibrated the HMD-integrated eye tracker using a standard five-point calibration before each session. In the active scene, each trial started with a text cue indicating the stimulus location, presented in the center of the observer's VF. The observer was instructed to move their head in the direction indicated by the text prompt (top, bottom, left, right, or center, in random order), where they would find the fixation cross. After the observer had fixated on the cross for 2 s, a randomly selected stimulus (glare or halo) was presented for 4 s, with the fixation cross remaining at its center. Next, a gray circle appeared for 2 s to neutralize the observer's pupil size. The observer had to keep their head stable until the gray circle disappeared, after which they reoriented their head to face forward.
The procedure for the passive scene was the same as that for the active scene, except that the observer was instructed not to move their head. Instead, the VR environment moved automatically in the direction indicated by the text prompt by replaying a recording from the preliminary study (see the Stimuli and Apparatus subsection). Details of the procedure are provided in Figure 2. In each experiment, each stimulus (glare and halo) was presented 15 times per location (top, bottom, left, right, and center). Thus, each experiment consisted of 150 trials (5 locations × 2 gradient patterns × 15 trials), with two breaks of approximately 15 min each; the session after each break started with eye-tracker calibration.

Figure 2.

Experimental procedure. (A) The phase sequence of one trial in the active scene. Each trial started with a text cue indicating the direction of the stimulus, after which the observer moved their head in the indicated direction. Next, the observer fixated on the fixation cross for 2 s (fixation phase). A random stimulus (glare/halo) was then presented for 4 s (stimulus presentation phase), during which the observer had to keep fixating on the fixation cross without blinking. Subsequently, the observer was asked to keep their head stable during the 2-s presentation of a gray circle (interval phase). In the last phase, the observer moved their head back to face forward. (B) In the passive scene, the procedure of one trial was the same as in the active scene, except that the observer was not allowed to move their head. After the directional cue was presented, the VR environment automatically moved in the indicated direction. In the last phase, the VR environment moved back to its original location, substituting for the head movement back to the forward position. The automated movement of the VR environment was produced by playing back the coordinates of prerecorded head movements.
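The trial structure described in the Procedure can be summarized as a randomized schedule. This Python sketch is hypothetical. It assumes full randomization of the 150 location × pattern trials within an experiment and three equal-sized blocks separated by the two breaks; the text does not specify either choice. The phase durations (2 s fixation, 4 s stimulus, 2 s gray interval) and the 90 Hz sampling rate are taken from the procedure. The untimed head-movement phases are not modeled.

```python
import random
from itertools import product

LOCATIONS = ("top", "bottom", "left", "right", "center")
PATTERNS = ("glare", "halo")
REPETITIONS = 15            # presentations per location x pattern
N_BLOCKS = 3                # two breaks -> three blocks (ASSUMED equal-sized)
SAMPLING_RATE_HZ = 90
PHASES_S = (("fixation", 2.0), ("stimulus", 4.0), ("interval", 2.0))


def make_schedule(seed=None, n_blocks=N_BLOCKS):
    """Return a shuffled list of (location, pattern) trials split into blocks."""
    trials = [cond for cond in product(LOCATIONS, PATTERNS) for _ in range(REPETITIONS)]
    rng = random.Random(seed)
    rng.shuffle(trials)
    bounds = [round(i * len(trials) / n_blocks) for i in range(n_blocks + 1)]
    return [trials[bounds[i]:bounds[i + 1]] for i in range(n_blocks)]


def samples_per_phase(rate_hz=SAMPLING_RATE_HZ):
    """Number of eye-tracker samples expected in each timed phase of a trial."""
    return {name: round(dur * rate_hz) for name, dur in PHASES_S}


if __name__ == "__main__":
    blocks = make_schedule(seed=1)
    print([len(b) for b in blocks])   # [50, 50, 50]
    print(blocks[0][:3])              # first three trials of the first block
    print(samples_per_phase())        # samples per timed phase at 90 Hz
```

Seeding the generator makes a schedule reproducible for a given session, while different seeds give each observer a different order.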

2.4. Pupil and Eye Gaze Analyses

Eye blinks, recorded as NaN values in the pupil data and as zero values in the gaze data, were filled using cubic Hermite interpolation in both the pupil and eye gaze data. We then applied subtractive baseline correction. The baseline was defined as the mean pupil size in the 0.2 s before stimulus onset, and it was subtracted from the pupil size in each trial (the dotted line in Figure 3 represents the baseline period). Next, a low-pass filter with a 4-Hz cut-off frequency was applied for smoothing, as in a previous study [27]. Trials with additional artifacts, identified by thresholding the peak velocity of pupil size change (more than 0.001 mm/ms), were excluded from the analysis. In addition, trials were rejected if their Euclidean distance (calculated using the first and second principal components) exceeded 3σ across all trials. We also rejected trials in which the average gaze position during stimulus presentation fell outside a radius of 5.035° (i.e., the central white area of the stimulus). In the last preprocessing stage, we excluded two participants whose ratio of rejected trials exceeded 30%. The average rejection ratios per observer were 14.20% and 1.7% of all trials in the active and passive scenes, respectively. This preprocessing procedure was applied to both the pupil and eye gaze data.
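The preprocessing steps above can be sketched in Python with NumPy and SciPy. This is not the authors' MATLAB R2021a pipeline, and several choices are assumptions, labeled as such in the code. SciPy's `PchipInterpolator` stands in for cubic Hermite interpolation. A fourth-order zero-phase Butterworth filter stands in for the unspecified 4-Hz low-pass filter. "3σ" is read as the mean plus three standard deviations of the PC1–PC2 distances. Gaze is assumed to be expressed as an angular offset from the fixation cross. All function names are hypothetical.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator
from scipy.signal import butter, filtfilt

FS_HZ = 90.0              # eye-tracker sampling rate
BASELINE_S = 0.2          # baseline window before stimulus onset
CUTOFF_HZ = 4.0           # low-pass cut-off frequency
VELOCITY_LIMIT = 0.001    # peak velocity threshold, mm/ms
FILTER_ORDER = 4          # ASSUMPTION: filter type and order are not reported
GAZE_RADIUS_DEG = 5.035   # radius of the central white area


def fill_blinks(signal, missing_value=None):
    """Fill blink samples with shape-preserving cubic Hermite (PCHIP) interpolation.

    Pupil blinks are NaN; pass missing_value=0.0 for raw gaze channels, where the
    tracker reports blinks as zeros. Leading/trailing gaps are extrapolated.
    """
    x = np.asarray(signal, dtype=float).copy()
    if missing_value is not None:
        x[x == missing_value] = np.nan
    idx = np.arange(x.size)
    valid = ~np.isnan(x)
    return PchipInterpolator(idx[valid], x[valid])(idx)


def baseline_correct(pupil_mm, onset_idx, fs=FS_HZ):
    """Subtract the mean pupil size of the 0.2 s preceding stimulus onset."""
    n_base = int(round(BASELINE_S * fs))
    return pupil_mm - pupil_mm[onset_idx - n_base:onset_idx].mean()


def low_pass(pupil_mm, fs=FS_HZ):
    """Zero-phase Butterworth low-pass filter with a 4 Hz cut-off (assumed design)."""
    b, a = butter(FILTER_ORDER, CUTOFF_HZ, btype="low", fs=fs)
    return filtfilt(b, a, pupil_mm)


def preprocess_trial(raw_pupil_mm, onset_idx):
    """Interpolation, then baseline correction, then smoothing, as described."""
    return low_pass(baseline_correct(fill_blinks(raw_pupil_mm), onset_idx))


def velocity_artifact(pupil_mm, fs=FS_HZ):
    """True if the peak sample-to-sample velocity exceeds 0.001 mm/ms."""
    dt_ms = 1000.0 / fs
    return bool(np.max(np.abs(np.diff(pupil_mm))) / dt_ms > VELOCITY_LIMIT)


def pca_outliers(trials, n_sd=3.0):
    """Flag trials far from the centroid in PC1-PC2 space.

    ASSUMPTION: '3 sigma' is read as mean + 3 SD of the distances.
    """
    X = np.asarray(trials, dtype=float)
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)   # rows of vt: principal axes
    scores = Xc @ vt[:2].T                              # PC1 and PC2 scores
    dist = np.linalg.norm(scores, axis=1)
    return dist > dist.mean() + n_sd * dist.std()


def gaze_outside(gaze_xy_deg, radius_deg=GAZE_RADIUS_DEG):
    """True if the mean gaze offset from the fixation cross exceeds the radius."""
    mean_x, mean_y = np.nanmean(np.asarray(gaze_xy_deg, dtype=float), axis=0)
    return bool(np.hypot(mean_x, mean_y) > radius_deg)
```

Filling blinks before baseline correction keeps the baseline mean defined even when a blink falls in the 0.2-s window. A zero-phase filter avoids shifting the latency of the constriction that later analyses depend on.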

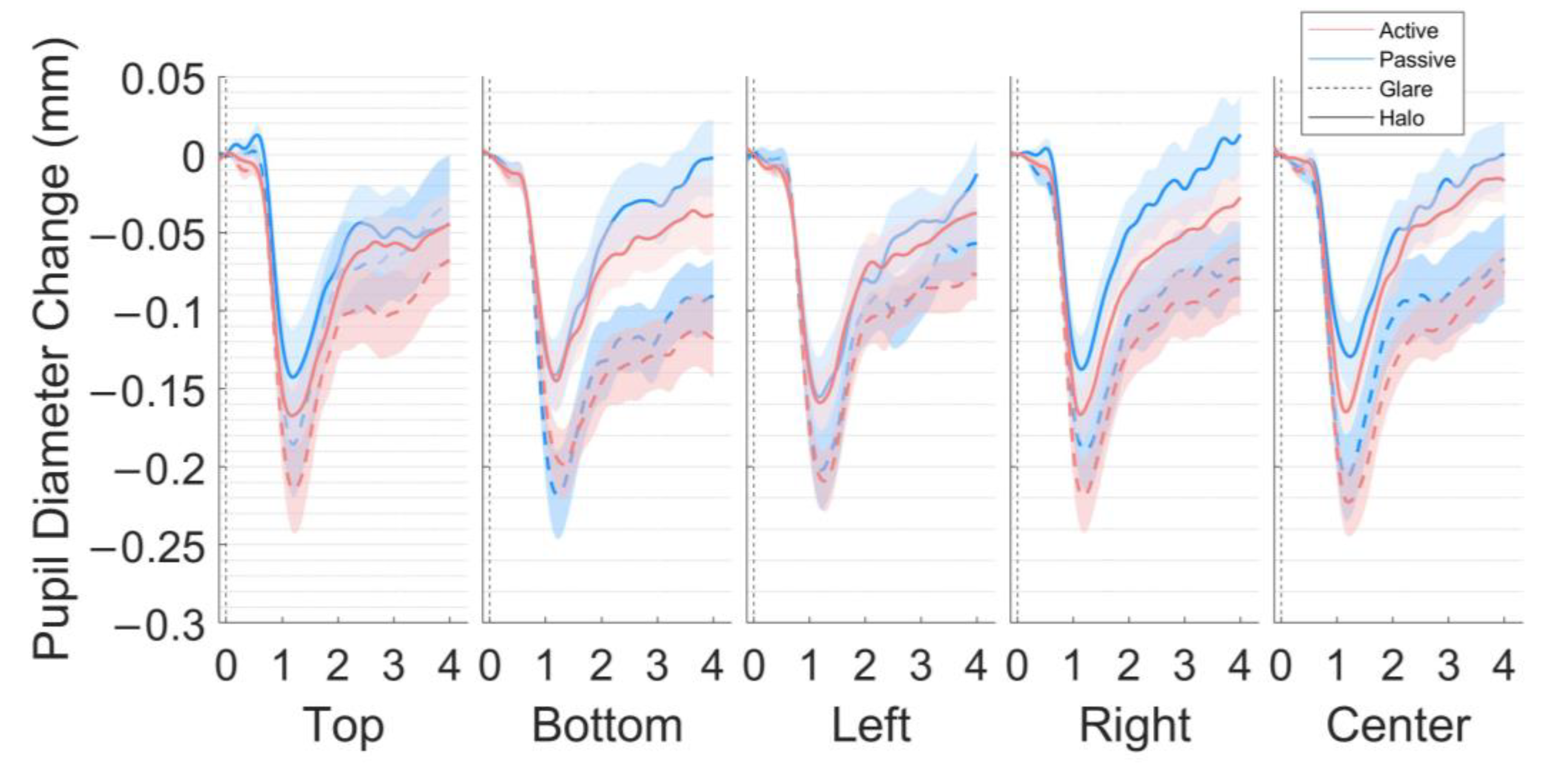

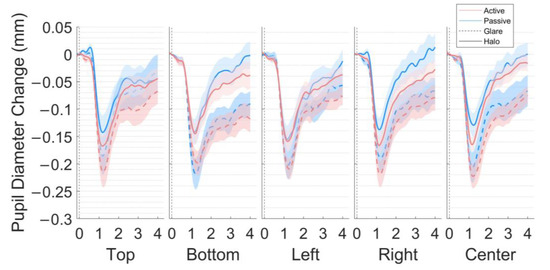

Figure 3.

Pupil size changes in the five locations (top, bottom, left, right, and center) in the world-centered coordinates. The grand average of pupillary response to the glare illusion and halo stimulus (millimeters) during the 4-s stimulus presentation for 18 participants after subtractive baseline correction in the active and passive scenes. The dotted line represents the baseline period (−0.2 s).

In addition, we analyzed the pupil diameter data as two components, early and late [25,28,29].

(1) The early component reflects pupillary responses modulated by the physical luminance of the stimuli via low-order cognition. First, in each trial and participant, we calculated the pupil slope using second-order accurate central differences to obtain the maximum pupil constriction latency (MPCL). We searched for the MPCL from stimulus onset to 1 s, a window that accommodates the large change in pupil diameter triggered by the PLR, following the same procedure used to obtain MPCL values in our previous work [25]. We then grand-averaged the pupil data using $\bar{P}_{\mathrm{early}} = \frac{1}{N}\sum_{t = t_{\mathrm{MPCL}} - 0.1}^{t_{\mathrm{MPCL}} + 0.1} P(t)$. Here, $P(t)$ is the pupil size at time $t$ (in mm), taken from approximately 0.1 s before to 0.1 s after the MPCL ($t_{\mathrm{MPCL}}$), and $N$ is the number of samples in this window. This quantity defines the early component (in millimeters, mm).

(2) The late component, quantified as the area under the curve (AUC), is substantially influenced by emotional arousal as well as by subjective brightness perception via higher-order cognition [25,28,29]. It captures the period in which the pupil diameter returns toward its initial state and was calculated as follows:

$$\mathrm{AUC} = \int_{t_{\mathrm{MPCL}}}^{4} P(t)\,dt \qquad (1)$$

where $P(t)$ represents the pupil diameter at $t$ seconds, integrated from the time at which the MPCL occurred until stimulus offset at 4 s. We applied this function to the pupil size time series in each trial and observer. In the last step, we grand-averaged the data across trials and observers for each stimulus pattern and location (in mm).
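The two components can be computed per trial as follows. In this sketch, the MPCL is taken as the deepest local minimum of the pupil trace in the first second after onset. It is located where the central-difference slope turns from negative to non-negative; `np.gradient` is second-order accurate at interior points. This is our reading of the procedure, not code from [25]. Traces are assumed to be baseline-corrected and to start at stimulus onset. The AUC uses the trapezoidal rule, and the hypothetical `grand_average` helper assumes averaging over trials within each observer before averaging over observers.

```python
import numpy as np

FS_HZ = 90.0            # eye-tracker sampling rate
SEARCH_S = 1.0          # MPCL searched from stimulus onset to 1 s
HALF_WINDOW_S = 0.1     # early component: +/- 0.1 s around the MPCL
STIM_OFFSET_S = 4.0     # late component ends at stimulus offset


def mpcl_index(pupil_mm, fs=FS_HZ):
    """Sample index of maximum pupil constriction within the first second.

    pupil_mm: baseline-corrected trace whose first sample is stimulus onset.
    """
    seg = np.asarray(pupil_mm, dtype=float)[: int(round(SEARCH_S * fs)) + 1]
    # np.gradient: second-order accurate central differences at interior points.
    slope = np.gradient(seg, 1.0 / fs)
    # Local minima: where the slope turns from negative to non-negative.
    turns = np.flatnonzero((slope[:-1] < 0) & (slope[1:] >= 0)) + 1
    if turns.size == 0:
        return int(np.argmin(seg))
    # Snap each turn to its lowest neighboring sample, then keep the deepest one.
    refined = [k - 1 + int(np.argmin(seg[k - 1:k + 2])) for k in turns]
    return min(refined, key=lambda k: seg[k])


def early_component(pupil_mm, fs=FS_HZ):
    """Mean pupil change (mm) within +/- 0.1 s of the MPCL, and the MPCL in seconds."""
    p = np.asarray(pupil_mm, dtype=float)
    i = mpcl_index(p, fs)
    h = int(round(HALF_WINDOW_S * fs))          # 9 samples at 90 Hz
    window = p[max(i - h, 0):min(i + h + 1, p.size)]
    return float(window.mean()), i / fs


def late_component_auc(pupil_mm, mpcl_idx, fs=FS_HZ, offset_s=STIM_OFFSET_S):
    """Trapezoidal area under the trace from the MPCL sample to stimulus offset."""
    p = np.asarray(pupil_mm, dtype=float)
    end = int(round(offset_s * fs))              # sample index of stimulus offset
    seg = p[mpcl_idx:end + 1]
    return float(np.sum(seg[1:] + seg[:-1]) * (0.5 / fs))


def grand_average(values_by_observer):
    """Average over trials within each observer, then over observers (assumed order)."""
    return float(np.mean([np.mean(v) for v in values_by_observer]))
```

Passing the index returned by `mpcl_index` to `late_component_auc` ties both components to the same per-trial latency, so the late window always starts where the early window is centered.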

2.5. Statistical Analyses

We used a three-way repeated-measures (rm) analysis of variance (ANOVA) to compare the pupillary responses and the y-axis eye gaze data between the active and passive scenes. The rmANOVA factors were scene (active and passive), stimulus location (top, bottom, left, right, and center), and stimulus pattern (glare illusion and halo stimulus). We applied the Greenhouse–Geisser correction when Mauchly's test indicated a violation of sphericity. For main effects and post hoc pairwise comparisons, p-values were corrected using the Holm–Bonferroni method, and the significance level (α) was set at 0.05 for all analyses. Cohen's d and partial eta squared (η²p) were used as effect sizes [30]. All statistical analyses were performed using JASP version 0.16.4.0 [31]. Additionally, we performed a Bayesian rmANOVA in JASP with default priors. BFM and BF10 represent the evidence in the model comparison and in the post hoc comparisons, respectively. Effects were assessed across 'matched models' only, as this is more conservative than 'across all models', and the model comparison was ordered by 'compared to best model' [32]. Bayes factors were interpreted following the guidelines of Jeffreys (1961) [32].
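Several of the statistics reported in the Results can be cross-checked with standard formulas. These are partial eta squared from an F ratio, η²p = F·df₁ / (F·df₁ + df₂); Greenhouse–Geisser-corrected degrees of freedom, ε(k − 1) and ε(k − 1)(n − 1); Holm's step-down adjustment; and verbal Bayes-factor categories. The helpers below are a hypothetical sketch, not JASP internals. The cut-offs 1, 3, 10, 30, and 100 follow a commonly used adaptation of Jeffreys' (1961) scheme, which we assume matches the labels in the Results. As in the Results, the labels are also applied to BFM values, although BFM is a change in model odds rather than a BF10.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic: F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def gg_dfs(epsilon, n_levels, n_subjects):
    """Greenhouse-Geisser-corrected dfs for a within-subject main effect."""
    df_effect = n_levels - 1
    df_error = (n_levels - 1) * (n_subjects - 1)
    return epsilon * df_effect, epsilon * df_error


def holm_bonferroni(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the rank-th smallest p by (m - rank), cap at 1, keep monotone.
        running_max = max(running_max, min(1.0, (m - rank) * p_values[i]))
        adjusted[i] = running_max
    return adjusted


def evidence_label(bf):
    """Verbal label for a Bayes factor using 1/3/10/30/100 cut-offs (assumed scheme)."""
    if bf < 1:
        return "favours the null"
    for bound, label in ((3, "anecdotal"), (10, "moderate"), (30, "strong"), (100, "very strong")):
        if bf < bound:
            return label
    return "extreme"


if __name__ == "__main__":
    # Values reported in the Results section.
    print(round(partial_eta_squared(58.899, 1, 17), 3))         # early component, pattern
    print(round(partial_eta_squared(3.469, 2.944, 50.044), 3))  # late component, location
    print(gg_dfs(2.944 / 4, 5, 18))                             # location dfs, n = 18
    print(evidence_label(90.205), evidence_label(26.005), evidence_label(6.660))
```

With the reported values, η²p = 58.899/(58.899 + 17) ≈ 0.776 for the early-component stimulus pattern effect. An ε of about 0.736 with k = 5 locations and n = 18 participants yields the reported F[2.944, 50.044] up to rounding of ε.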

3. Results

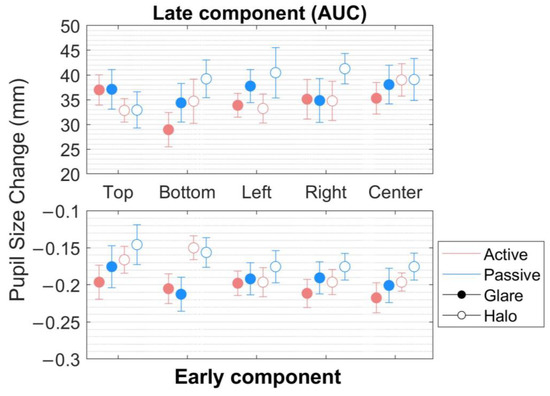

The main results of the present study are presented as the pupil size and the y-axis eye gaze in response to the glare and halo stimuli over 4 s at the five locations in each scene. The time courses of the pupillary responses for each stimulus pattern (glare and halo), stimulus location (top, bottom, left, right, and center), and scene (active and passive) are illustrated in Figure 3 (4-s exposure). Based on the MPCL value, we separated the pupil size data into early and late components (Figure 4).

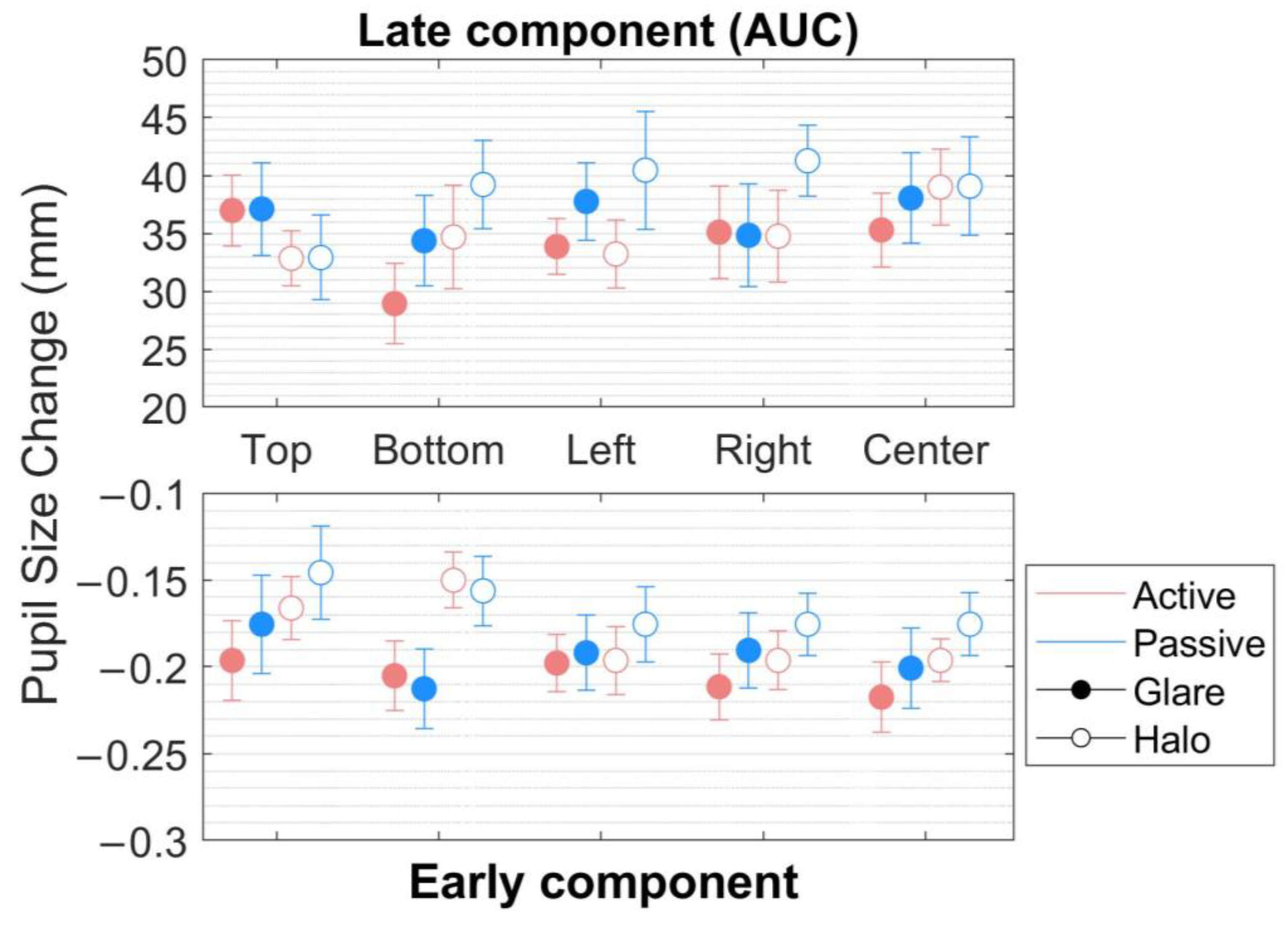

Figure 4.

Pupillary response to the glare and halo stimuli in the early component. Pupil diameter changes (mm) in five locations (top, bottom, left, right, and center) in the world-centered coordinates for 18 participants in the active and the passive scenes. Error bars indicate standard errors of the mean.

(1) In the early component (Figure 4, bottom), defined as the window of approximately 0.1 s before and after the MPCL, an rmANOVA of the pupillary response to the stimuli revealed very strong evidence for an effect of stimulus pattern (F[1,17] = 58.899, p < 0.001, η²p = 0.776, BFM = 90.205). There was no such evidence for scene or location, and no interaction between the factors (scene, stimulus pattern, and location) (Table 1 and Table 2).

Table 1.

The main effect of three-way rmANOVA in the early component.

Table 2.

Model comparison using Bayesian rmANOVA in the early component.

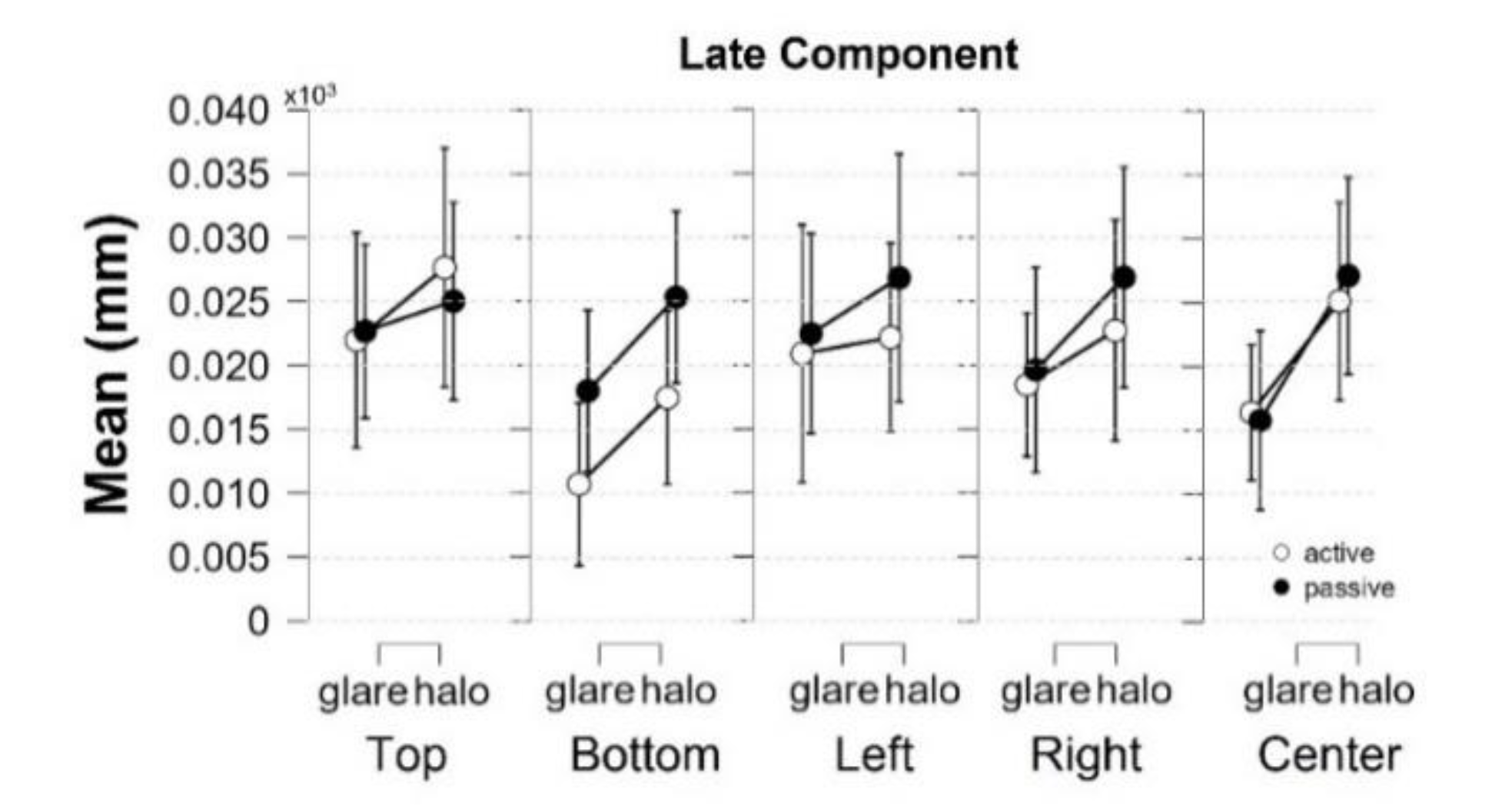

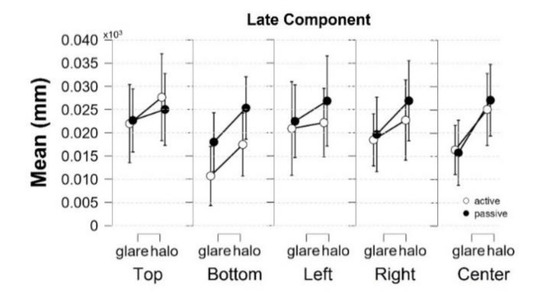

(2) In the late component (AUC; Figure 4, top), defined as the integral of the pupillary response from the MPCL to the end of stimulus presentation, the three-way rmANOVA revealed strong evidence for an effect of stimulus pattern (F[1,17] = 12.437, p = 0.003, η²p = 0.423, BFM = 26.005). It also revealed a significant main effect of location (F[2.944,50.044] = 3.469, p = 0.023, η²p = 0.169, BFM = 0.019) (Table 3 and Table 4). However, the frequentist post hoc comparisons of location showed no significant differences, and the Bayesian rmANOVA provided no evidence for location or the other conditions. Further examination of the Bayesian post hoc comparisons of location yielded moderate evidence only for the top–bottom (t[18] = 2.586, p = 0.192, Cohen's d = 0.312, BF10,U = 6.660) and bottom–left pairs (t[18] = −2.927, p = 0.094, Cohen's d = −0.251, BF10,U = 3.469). Additionally, we plotted the descriptive statistics from the Bayesian rmANOVA (Figure 5). Stimuli at the bottom location produced the smallest mean pupil size change in AUC among the locations.

Table 3.

The main effect of a three-way rmANOVA in the late component.

Table 4.

Model comparison using Bayesian rmANOVA in the late component.

Figure 5.

Descriptive plots of Bayesian rmANOVA in the late component. The mean of pupil diameter change (mm) in response to the stimuli at the bottom was the smallest value compared with the other locations in the world-centered coordinates. Error bars indicate lower and upper values with a 95% credible interval.

Finally, we conducted a three-way rmANOVA (5 locations × 2 stimulus patterns × 2 scenes) on the y-axis eye gaze data to verify that the retinal coordinates were identical across stimulus locations and patterns in both scenes. We found moderate evidence for an effect of stimulus pattern (F[1,17] = 4.195, p = 0.056, η²p = 0.198, BFM = 6.845) (Table 5 and Table 6). However, the Bayesian post hoc comparison of stimulus patterns provided no evidence for a difference.

Table 5.

The main effect of three-way repeated measures ANOVA in y-axis gaze data.

Table 6.

Model comparison using Bayesian rmANOVA in y-axis gaze data.

4. Discussion

Our previous study reported that the peripheral VF (upper, lower, left, or right) in which the glare and halo stimuli were located influenced participants' subjective brightness perception, as reflected in their pupillary responses [25]. Stimuli in the UVF elicited greater pupil dilation than those in the other VFs, and the glare illusion elicited less pupil dilation than the halo stimulus. These results were attributed to a higher-order cognitive bias formed by statistical regularities in the processing of natural scenes. However, differences in retinal coordinates may also have affected pupil size in our previous study. Pupillary responses can be influenced by pupil sensitivity, spatial resolution, and brightness perception (lower-order cognition) [7,14,33]. Therefore, to investigate subjective brightness perception beyond the peripheral VFs examined in our previous study, we conducted experiments with active and passive scenes that kept retinal coordinates identical while manipulating world-centered coordinates. That is, we presented glare and halo stimuli at five locations (top, bottom, left, right, and center) in the VR environment to investigate the anisotropy of subjective brightness perception in world-centered coordinates. By manipulating world-centered coordinates, we confirmed that the pupillary responses differed among locations despite identical retinal coordinates.

Furthermore, we divided the pupil size data into two components based on the MPCL values. The early component evaluates the pupillary response induced by the PLR within approximately 0.1 s before and after the MPCL. The late component (AUC), computed using Function 1, assesses higher-order cognition (e.g., emotional arousal and subjective brightness perception) [25,28,29].

(1) The early component. Our data provide very strong evidence for an effect of stimulus pattern (F[1,17] = 58.899, p < 0.001, η²p = 0.776, BFM = 90.205). The greater pupil constriction in response to the glare than to the halo stimulus reflects the enhancement of perceived brightness [20]. In previous studies, pupillary responses, especially during the PLR period, reflected changes in physical light intensity through lower-level visual processing [21,34]. The PLR is modulated by visual attention, visual processing, and the interpretation of the visual input [34] and, possibly, by higher-order cognition [35]. Hence, low-order processing (enhanced brightness perception) may have affected the pupillary response in the early component. However, the early component alone could not address the aim of the present study. That aim was to elucidate whether any of the five locations in world-centered coordinates confers an ecological advantage, which concerns high-level visual processing.

Therefore, we further investigated the pupillary response in the late component.

(2) Late component (AUC). The stimulus pattern also yielded strong evidence in the late component (F[1,17] = 12.437, p = 0.003, ηp² = 0.423, BFM = 26.005), reflecting the effect of the physical light intensity of the stimuli entering the retina (lower-order cognition) after the minimum peak of the pupil response (MPCL). This effect may be induced not merely by the physical luminance of the glare and halo stimuli but also by more complex visual processing.

Furthermore, our data show a significant main effect of location (F[2.944,50.044] = 3.469, p = 0.023, ηp² = 0.169, BFM = 0.019). However, post hoc comparisons of location in the classical frequentist rmANOVA revealed no significant difference between any pair of locations. Following Keysers et al. (2020), we used Bayes factor hypothesis testing to go beyond this absence of evidence in the frequentist post hoc comparisons [36]. The Bayesian post hoc comparisons of location yielded moderate evidence for differences in the top-bottom pair (t[18] = 2.586, p = 0.192, Cohen’s d = 0.312, BF10,U = 6.660) and the bottom-left pair (t[18] = −2.927, p = 0.094, Cohen’s d = −0.251, BF10,U = 3.469). Moreover, the descriptive plots generated by JASP (Figure 5) show the smallest mean pupil size change in response to stimuli at the bottom. Contrary to our hypothesis that the pupil would be most constricted in response to stimuli at the top, stimuli at the bottom elicited greater pupil constriction than stimuli at the top.
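The evidence labels used in this section (moderate, strong, very strong) follow the commonly used adaptation of Jeffreys' scale [32]. As a sketch, the helper below maps a Bayes factor to that label and to the hypothesis it favours, applied here to the reported BFM and BF10,U values in the same way the text does. The cut-offs (3, 10, 30, 100) are the conventional ones, and the function name `bf_evidence` is ours.

```python
def bf_evidence(bf: float) -> tuple[str, str]:
    """Return (evidence label, favoured hypothesis) for a Bayes factor BF10."""
    if bf <= 0:
        raise ValueError("a Bayes factor must be positive")
    favoured = "H1" if bf >= 1 else "H0"
    strength = bf if bf >= 1 else 1.0 / bf  # express evidence for H0 as BF01
    for upper, label in ((3, "anecdotal"), (10, "moderate"), (30, "strong"), (100, "very strong")):
        if strength < upper:
            return label, favoured
    return "extreme", favoured


for value in (90.205, 26.005, 6.660, 3.469):
    print(value, bf_evidence(value))
```

Applied to the values reported here, it returns "very strong" for 90.205, "strong" for 26.005, and "moderate" for 6.660 and 3.469, in line with the wording used in the text.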

The greater pupil constriction in response to stimuli at the bottom may be linked to one of the four regions in the 3D spatial interaction model proposed by Previc (1998) [37]. One of these regions, the peripersonal (PrP) region, is where a person can easily grasp items (such as edible objects for consumption). The PrP region is biased toward the lower field and lies within a 2-m radius of the observer, and objects that have already been observed are processed there. Furthermore, in a virtual environment viewed from the first-person (FP) perspective without an extended part of the FP (as in the present work), the PrP region is defined by the peripheral space of the FP; it offers a larger field of visual perception than the extended PrP region and has no visual obstacles [38]. Therefore, visual processing (recognition and memorization) of objects in the PrP region requires minimal effort from the observer. This low processing demand for stimuli presented at the bottom in the world-centered coordinates resulted in greater pupil constriction than that for stimuli presented at the top. In addition, statistical analysis of the pupil data revealed no significant main effect of scene in either the early or the late component, confirming that head movement did not affect the pupillary response following stimulus onset.

Taken together, the complex visual processing induced by the glare and halo stimuli and the moderate Bayesian evidence in the late component, particularly for the top-bottom pair, imply that the subjective brightness perception reflected in the pupillary responses to stimuli at the top of the world-centered coordinates might be influenced by ecological factors. First, through the glare illusion, the glare and halo stimuli in the present study evoke an ecological factor, namely a representation of the sun [22,25]. Second, the stimuli at the top were perceived as darker than those at the bottom owing to a cognitive bias related to natural scenes in which a bright blue sky is present [22]. Overall, our results demonstrate an anisotropy of subjective brightness perception among the five locations in the world-centered coordinates. These differences arose despite identical stimulus luminance and identical retinal coordinates across the five locations, suggesting that they stem from extraretinal information tied to ecological factors. Moreover, the vertical (y-axis) gaze angle did not appear to affect pupil diameter, consistent with identical retinal coordinates. Future studies should present different stimuli (e.g., ambiguous sun and moon images) and ask observers whether they perceive each stimulus as the sun or the moon, in order to fully separate the involvement of lower-order cognition in the pupillary response.
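One simple way to check whether vertical gaze angle is related to pupil diameter is to compute the Pearson correlation and the least-squares slope of pupil size on gaze angle across samples or trials. The sketch below is hypothetical: it is not necessarily the analysis used in the study, the name `gaze_pupil_relation` is ours, and the toy arrays only illustrate the call pattern.

```python
import numpy as np


def gaze_pupil_relation(gaze_y_deg, pupil_mm):
    """Pearson r and least-squares slope (mm per degree) of pupil size on vertical gaze."""
    x = np.asarray(gaze_y_deg, dtype=float)
    y = np.asarray(pupil_mm, dtype=float)
    r = float(np.corrcoef(x, y)[0, 1])
    slope, _intercept = np.polyfit(x, y, 1)  # degree-1 fit returns [slope, intercept]
    return r, float(slope)


# Toy data (not from the study): pupil size varies, but not with gaze angle.
gaze_y = [-10.0, -5.0, 0.0, 5.0, 10.0]
pupil = [4.0, 5.0, 3.0, 5.0, 4.0]
r, slope = gaze_pupil_relation(gaze_y, pupil)
print(f"r = {r:.3f}, slope = {slope:.3f} mm/deg")
```

A correlation and slope near zero are consistent with gaze angle having little linear influence on the measured diameter; a nonlinear dependence would need a different check.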

The present study has two limitations. First, because the cameras that record eye movements are integrated into the HMD, eye rotation during the experiment (foreshortening with gaze angle) may have influenced the pupil size measurements. We attempted to minimize this problem by instructing participants to fixate on the fixation cross and by rejecting trials based on gaze fixation. Second, we considered only the vertical field of the world-centered coordinates, because our aim was to elucidate whether ecological factors (such as the sun's presence) affect subjective brightness perception in the world-centered coordinates. Nevertheless, we believe that the present study offers valuable insights into the anisotropy of subjective brightness perception among the five locations (top, bottom, left, right, and center) in the world-centered coordinates, and in particular into the influence of extraretinal information on subjective brightness perception, as revealed by combining the glare illusion, manipulation of the world-centered coordinates in a VR environment, and pupillometry. In addition, the present study offers a finding relevant to ophthalmology: the pupillary response is not affected by head movement.
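Fixation-based trial rejection of the kind described above could be implemented along the following lines. This is a sketch under stated assumptions: gaze is expressed as horizontal and vertical angles in degrees relative to the fixation cross, the 2° tolerance radius and 80% minimum on-target fraction are hypothetical values rather than the study's criteria, `keep_trial` is our own name, and lost samples (NaN, e.g., blinks) count as off-target.

```python
import numpy as np

FIX_RADIUS_DEG = 2.0  # hypothetical tolerance around the fixation cross (deg)
MIN_ON_TARGET = 0.8   # hypothetical minimum fraction of on-target samples


def keep_trial(gaze_x_deg, gaze_y_deg, radius=FIX_RADIUS_DEG, min_fraction=MIN_ON_TARGET):
    """Return True if enough gaze samples lie within `radius` of the fixation cross.

    Gaze angles are in degrees relative to the fixation cross; NaN marks lost
    samples and is treated as off-target.
    """
    gx = np.asarray(gaze_x_deg, dtype=float)
    gy = np.asarray(gaze_y_deg, dtype=float)
    # Euclidean distance in angle space: a small-angle approximation.
    dist = np.hypot(gx, gy)
    # Replace NaN with infinity so lost samples always fail the radius test.
    dist = np.where(np.isnan(dist), np.inf, dist)
    on_target = dist <= radius
    return bool(on_target.mean() >= min_fraction)


# Toy trial (not study data): all samples stay close to the fixation cross.
print(keep_trial([0.1, -0.3, 0.5, 0.2, 0.0], [0.0, 0.2, -0.1, 0.4, 0.1]))
```

Counting lost samples as off-target is a conservative choice: trials with many blinks are rejected along with trials in which gaze drifted away from the cross.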

5. Conclusions

In the present study, we presented glare and halo stimuli at five locations in the world-centered coordinates (top, bottom, left, right, and center) in a VR environment through active and passive scenes and measured the pupillary responses to them. We found an anisotropy of subjective brightness perception among the five locations, attributable to extraretinal information related to ecological factors. In addition, we confirmed that pupil diameter is independent of head movement. Future studies should present different stimuli (e.g., ambiguous sun and moon images) and ask observers whether they perceive each stimulus as the sun or the moon, to fully separate the contribution of lower-order cognition to the pupillary responses observed here.

Author Contributions

N.I., Y.K., T.M., and S.N. designed the experiment. N.I. conducted the experiment and collected the data. N.I. and Y.K. analyzed and validated the data. N.I. drafted the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Grants-in-Aid for Scientific Research from the Japan Society for the Promotion of Science (Grant Nos. 20H04273, 120219917, and 20H05956).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Toyohashi University of Technology, Japan (protocol code 2021-02, 1 April 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are openly available at https://zenodo.org/deposit/6815352.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Andersen, R.A.; Snyder, L.H.; Li, C.-S.; Stricanne, B. Coordinate transformations in the representation of spatial information. Curr. Opin. Neurobiol. 1993, 3, 171–176.

- Qian, K.; Mitsudo, H. Spatial dynamics of the eggs illusion: Visual field anisotropy and peripheral vision. Vision Res. 2020, 177, 12–19.

- Sakaguchi, Y. Visual field anisotropy revealed by perceptual filling-in. Vision Res. 2003, 43, 2029–2038.

- Bertulis, A.; Bulatov, A. Distortions in length perception: Visual field anisotropy and geometrical illusions. Neurosci. Behav. Physiol. 2005, 35, 423–434.

- Wada, Y.; Saijo, M.; Kato, T. Visual field anisotropy for perceiving shape from shading and shape from edges. Interdiscip. Inf. Sci. 1998, 4, 157–164.

- Schwartz, S.; Kirsner, K. Laterality effects in visual information processing: Hemispheric specialisation or the orienting of attention? Q. J. Exp. Psychol. Sect. A 1982, 34, 61–77.

- Portengen, B.L.; Roelofzen, C.; Porro, G.L.; Imhof, S.M.; Fracasso, A.; Naber, M. Blind spot and visual field anisotropy detection with flicker pupil perimetry across brightness and task variations. Vision Res. 2021, 178, 79–85.

- Hong, S.; Narkiewicz, J.; Kardon, R.H. Comparison of Pupil Perimetry and Visual Perimetry in Normal Eyes: Decibel Sensitivity and Variability. Invest. Ophthalmol. Vis. Sci. 2001, 42, 957–965.

- Sabeti, F.; James, A.C.; Maddess, T. Spatial and temporal stimulus variants for multifocal pupillography of the central visual field. Vision Res. 2011, 51, 303–310.

- Carrasco, M.; Talgar, C.P.; Cameron, E.L. Characterizing visual performance fields: Effects of transient covert attention, spatial frequency, eccentricity, task and set size. Spat. Vis. 2001, 15, 61–75.

- Talgar, C.P.; Carrasco, M. Vertical meridian asymmetry in spatial resolution: Visual and attentional factors. Psychon. Bull. Rev. 2002, 9, 714–722.

- Levine, M.W.; McAnany, J.J. The relative capabilities of the upper and lower visual hemifields. Vision Res. 2005, 45, 2820–2830.

- Amenedo, E.; Pazo-Alvarez, P.; Cadaveira, F. Vertical asymmetries in pre-attentive detection of changes in motion direction. Int. J. Psychophysiol. 2007, 64, 184–189.

- Intriligator, J.; Cavanagh, P. The Spatial Resolution of Visual Attention. Cogn. Psychol. 2001, 43, 171–216.

- Curcio, C.A.; Allen, K.A. Topography of ganglion cells in human retina. J. Comp. Neurol. 1990, 300, 5–25.

- Binda, P.; Pereverzeva, M.; Murray, S.O. Pupil constrictions to photographs of the sun. J. Vis. 2013, 13, 8.

- Castellotti, S.; Conti, M.; Feitosa-Santana, C.; del Viva, M.M. Pupillary response to representations of light in paintings. J. Vis. 2020, 20, 14.

- Istiqomah, N.; Takeshita, T.; Kinzuka, Y.; Minami, T.; Nakauchi, S. The Effect of Ambiguous Image on Pupil Response of Sun and Moon Perception. In Proceedings of the 8th International Symposium on Affective Science and Engineering, Online Academic Symposium, 27 March 2022; pp. 1–4.

- Tortelli, C.; Pomè, A.; Turi, M.; Igliozzi, R.; Burr, D.C.; Binda, P. Contextual Information Modulates Pupil Size in Autistic Children. Front. Neurosci. 2022, 16, 1–10.

- Laeng, B.; Endestad, T. Bright illusions reduce the eye’s pupil. Proc. Natl. Acad. Sci. USA 2012, 109, 2162–2167.

- Mamassian, P.; de Montalembert, M. A simple model of the vertical-horizontal illusion. Vision Res. 2010, 50, 956–962.

- Suzuki, Y.; Minami, T.; Laeng, B.; Nakauchi, S. Colorful glares: Effects of colors on brightness illusions measured with pupillometry. Acta Psychol. (Amst) 2019, 198, 102882.

- Laeng, B.; Sulutvedt, U. The Eye Pupil Adjusts to Imaginary Light. Psychol. Sci. 2014, 25, 188–197.

- Mathôt, S.; Grainger, J.; Strijkers, K. Pupillary Responses to Words That Convey a Sense of Brightness or Darkness. Psychol. Sci. 2017, 28, 1116–1124.

- Istiqomah, N.; Suzuki, Y.; Kinzuka, Y.; Minami, T.; Nakauchi, S. Anisotropy in the peripheral visual field based on pupil response to the glare illusion. Heliyon 2022, 8, e09772.

- Chun, M.M. Contextual cueing of visual attention. Trends Cogn. Sci. 2000, 4, 170–178.

- Jackson, I.; Sirois, S. Infant cognition: Going full factorial with pupil dilation. Dev. Sci. 2009, 12, 670–679.

- Kinner, V.L.; Kuchinke, L.; Dierolf, A.M.; Merz, C.J.; Otto, T.; Wolf, O.T. What our eyes tell us about feelings: Tracking pupillary responses during emotion regulation processes. Psychophysiology 2017, 54, 508–518.

- Bradley, M.M.; Miccoli, L.; Escrig, M.A.; Lang, P.J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 2008, 45, 602–607.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 1988.

- van den Bergh, D.; Wagenmakers, E.-J.; Aust, F. Bayesian Repeated-Measures ANOVA: An Updated Methodology Implemented in JASP. PsyArXiv 2022.

- Jeffreys, H. The Theory of Probability, 3rd ed.; OUP: Oxford, UK, 1961; ISBN 9780198503682.

- Wilhelm, H.; Neitzel, J.; Wilhelm, B.; Beuel, S.; Lüdtke, H.; Kretschmann, U.; Zrenner, E. Pupil perimetry using M-sequence stimulation technique. Invest. Ophthalmol. Vis. Sci. 2000, 41, 1229–1238.

- Mathôt, S. Pupillometry: Psychology, physiology, and function. J. Cogn. 2018, 1, 1–23.

- Mathôt, S.; van der Linden, L.; Grainger, J.; Vitu, F. The pupillary light response reveals the focus of covert visual attention. PLoS One 2013, 8, e78168.

- Keysers, C.; Gazzola, V.; Wagenmakers, E.J. Using Bayes factor hypothesis testing in neuroscience to establish evidence of absence. Nat. Neurosci. 2020, 23, 788–799.

- Previc, F.H. The neuropsychology of 3-D space. Psychol. Bull. 1998, 124, 123–164.

- Lee, J.; Cheon, M.; Moon, S.E.; Lee, J.S. Peripersonal space in virtual reality: Navigating 3D space with different perspectives. In Proceedings of the UIST 2016 Adjunct—29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 207–208.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).