Measuring Learner Satisfaction of an Adaptive Learning System

Abstract

1. Introduction

1.1. Learner Satisfaction

1.2. Antecedents of Learner Satisfaction

1.3. Measures of Learner Satisfaction

2. Methodology

2.1. Participants

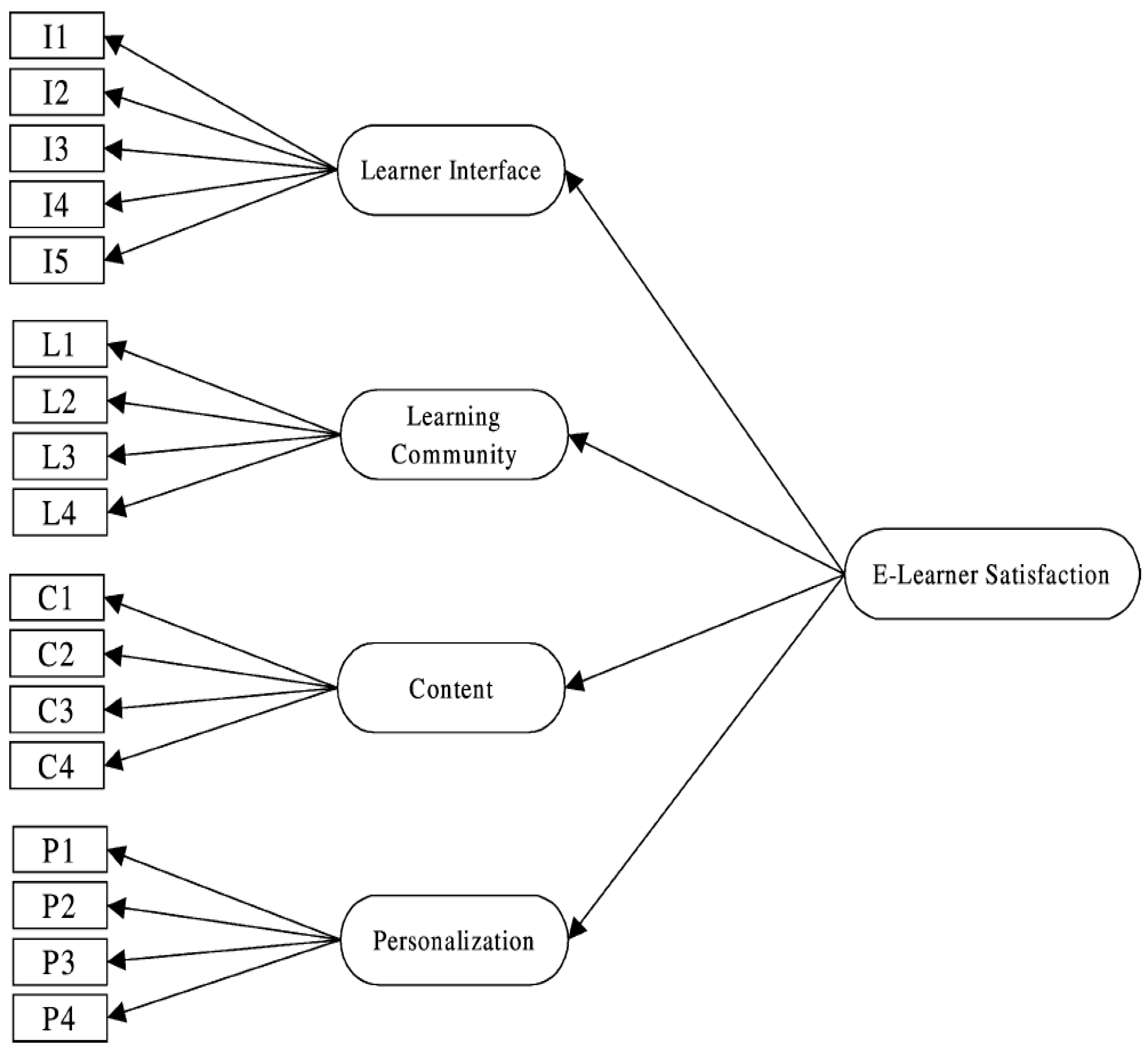

2.2. Stage 1

2.3. AdLeS

2.4. Stage 2

3. Results

4. Discussion and Directions for Future Research

5. Conclusions and Practical Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

- The system is easy to use (LI1).

- The system is user-friendly (LI2).

- The operation of the system is stable (LI3).

- The system makes it easy for me to find the content I need (LI4).

- The system provides up-to-date content (CONT1).

- The system provides content that exactly fits my needs (CONT2).

- The system provides sufficient content (CONT3).

- The system provides useful content (CONT4).

- The system enables me to learn the content I need (CONT5).

- The system enables me to choose what I want to learn (PERS1).

- The system enables me to control my learning progress (PERS2).

- The system records my learning progress and performance (PERS3).

- The system supports my learning * (CONT6).

- The system recommends topics that reflect my learning progress * (PERS4).

Appendix C

| Variable | Kurtosis | Skewness |
|---|---|---|
| Q_1 | 0.7414892 | −0.8999211 |
| Q_2 | 1.8786178 | −1.0495770 |
| Q_3 | 1.0670180 | −0.8986991 |
| Q_4 | −0.4323551 | −0.4749700 |
| Q_5 | 0.4405646 | −0.5534692 |
| Q_6 | −0.6011248 | −0.2631777 |
| Q_7 | −0.8418520 | −0.2865288 |
| Q_8 | 0.2607167 | −0.6221956 |
| Q_9 | −0.4444183 | −0.5042582 |
| Q_10 | −0.3667205 | −0.3052960 |
| Q_11 | 0.3347129 | −0.6470117 |
| Q_12 | 0.1587396 | −0.5971343 |
| Q_13 | 0.6893963 | −0.6702294 |
| Q_14 | 0.1228709 | −0.7080154 |
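Appendix C lists a univariate kurtosis and skewness value for each of the 14 items. The sketch below shows one common way to compute such values from raw item responses, using moment-based skewness (g1) and excess kurtosis (g2), and then screens the reported values against the cut-offs often attributed to West et al. (|skewness| < 2, |kurtosis| < 7). The article does not state which estimator or thresholds it used, so the hypothetical helper names, the estimator, and the cut-offs are all assumptions; packages that apply small-sample corrections will give slightly different numbers.

```python
from statistics import fmean


def moment_skew_kurt(xs):
    """Return (skewness g1, excess kurtosis g2) from population moments.

    Hypothetical helper: no small-sample bias correction is applied, so
    results can differ slightly from packages that use adjusted estimators.
    """
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = fmean(xs)
    m2 = sum((x - mean) ** 2 for x in xs) / n
    if m2 == 0:
        raise ValueError("responses have zero variance")
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    g1 = m3 / m2 ** 1.5
    g2 = m4 / m2 ** 2 - 3.0  # "excess" kurtosis: 0 for a normal distribution
    return g1, g2


def within_cutoffs(kurtosis, skewness, max_abs_skew=2.0, max_abs_kurt=7.0):
    """Assumed rule-of-thumb screen for univariate non-normality."""
    return abs(skewness) < max_abs_skew and abs(kurtosis) < max_abs_kurt


# (kurtosis, skewness) pairs as reported in Appendix C.
REPORTED = {
    "Q_1": (0.7414892, -0.8999211),
    "Q_2": (1.8786178, -1.0495770),
    "Q_3": (1.0670180, -0.8986991),
    "Q_4": (-0.4323551, -0.4749700),
    "Q_5": (0.4405646, -0.5534692),
    "Q_6": (-0.6011248, -0.2631777),
    "Q_7": (-0.8418520, -0.2865288),
    "Q_8": (0.2607167, -0.6221956),
    "Q_9": (-0.4444183, -0.5042582),
    "Q_10": (-0.3667205, -0.3052960),
    "Q_11": (0.3347129, -0.6470117),
    "Q_12": (0.1587396, -0.5971343),
    "Q_13": (0.6893963, -0.6702294),
    "Q_14": (0.1228709, -0.7080154),
}

if __name__ == "__main__":
    flagged = [q for q, (k, s) in REPORTED.items() if not within_cutoffs(k, s)]
    print("Items outside the assumed cut-offs:", flagged or "none")
```

For example, `moment_skew_kurt([1, 2, 3, 4, 5])` returns a skewness of 0 and an excess kurtosis of −1.3 (up to floating-point rounding), as expected for a flat, symmetric sample. All 14 reported pairs fall inside the assumed cut-offs.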

References

- Ho, Y.Y.; Lim, L. Targeting student learning needs: The development and preliminary validation of the Learning Needs Questionnaire for a diverse university student population. High. Educ. Res. Dev. 2020, 40, 1452–1465.

- Knowles, M.S.; Holton, E.F.; Swanson, R.A. The Adult Learner: The Definitive Classic in Adult Education and Human Resource Development, 7th ed.; Elsevier Inc.: London, UK, 2011.

- Albert, A.; Hallowell, M.R. Revamping occupational safety and health training: Integrating andragogical principles for the adult learner. Constr. Econ. Build. 2013, 13, 128–140.

- Taylor, J.A. What is student centredness and is it enough? Int. J. First Year High. Educ. 2013, 4, 39–48.

- Lea, S.J.; Stephenson, D.; Troy, J. Higher Education Students’ Attitudes to Student-centred Learning: Beyond ‘educational bulimia’? Stud. High. Educ. 2003, 28, 321–334.

- Tangney, S. Student-centred learning: A humanist perspective. Teach. High. Educ. 2014, 19, 266–275.

- White, G. Adaptive Learning Technology Relationship with Student Learning Outcomes. J. Inf. Technol. Educ. Res. 2020, 19, 113–130.

- O’Donnell, E.; Lawless, S.; Sharp, M.; Wade, V. A review of personalised e-learning: Towards supporting learner diversity. Int. J. Distance Educ. Technol. 2015, 13, 22–47.

- Yazon, J.M.; Mayer-Smith, J.; Redfield, R.R. Does the medium change the message? The impact of web-based genetics course on university students’ perspectives on learning and teaching. Comput. Educ. 2002, 38, 267–285.

- Walkington, C.A. Using adaptive learning technologies to personalize instruction to student interests: The impact of relevant contexts on performance and learning outcomes. J. Educ. Psychol. 2013, 105, 932–945.

- Abuhassna, H.; Al-Rahmi, W.M.; Yahya, N.; Zakaria, M.A.Z.M.; Kosnin, A.B.M.; Darwish, M. Development of a new model on utilizing online learning platforms to improve students’ academic achievements and satisfaction. Int. J. Educ. Technol. High. Educ. 2020, 17, 38.

- DeLone, W.H.; McLean, E.R. Information Systems Success: The Quest for the Dependent Variable. Inf. Syst. Res. 1992, 3, 60–95.

- Yakubu, N.; Dasuki, S. Assessing eLearning Systems Success in Nigeria: An Application of the DeLone and McLean Information Systems Success Model. J. Inf. Technol. Educ. Res. 2018, 17, 183–203.

- Zogheib, B.; Rabaa’i, A.; Zogheib, S.; Elsaheli, A. University Student Perceptions of Technology Use in Mathematics Learning. J. Inf. Technol. Educ. Res. 2015, 14, 417–438.

- Lim, L.; Lim, S.H.; Lim, W.Y.R. A Rasch analysis of students’ academic motivation toward Mathematics in an adaptive learning system. Behav. Sci. 2022, 12, 244.

- Ramayah, T.; Lee, J.W.C. System characteristics, satisfaction and e-learning usage: A structural equation model (SEM). Turk. Online J. Educ. Technol. 2012, 11, 196–206. Available online: https://files.eric.ed.gov/fulltext/EJ989028.pdf (accessed on 30 May 2022).

- Salam, M. A Technology Integration Framework and Co-Operative Reflection Model for Service Learning. Ph.D. Thesis, University Malaysia Sarawak, Kota Samarahan, Malaysia, 2020. Available online: https://ir.unimas.my/id/eprint/28754/ (accessed on 30 May 2022).

- Xu, F.; Du, J.T. Examining differences and similarities between graduate and undergraduate students’ user satisfaction with digital libraries. J. Acad. Libr. 2019, 45, 102072.

- DeLone, W.H.; McLean, E.R. The DeLone and McLean Model of Information Systems Success: A Ten-Year Update. J. Manag. Inf. Syst. 2003, 19, 9–30.

- Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.F.; Keinath, A. What and how to tell beforehand: The effect of user education on understanding, interaction and satisfaction with driving automation. Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 316–335.

- Ojo, A.I. Validation of the DeLone and McLean Information Systems Success Model. Health Inform. Res. 2017, 23, 60–66.

- Wixom, B.H.; Todd, P.A. A Theoretical Integration of User Satisfaction and Technology Acceptance. Inf. Syst. Res. 2005, 16, 85–102.

- Mardiana, S.; Tjakraatmadja, J.H.; Aprianingsih, A. DeLone-McLean information system success model revisited: The separation of intention to use and the integration of technology acceptance models. Int. J. Econ. Financ. Issues 2015, 5, 172–182. Available online: https://www.econjournals.com/index.php/ijefi/article/view/1362 (accessed on 30 May 2022).

- Tsai, W.-H.; Lee, P.-L.; Shen, Y.-S.; Lin, H.-L. A comprehensive study of the relationship between enterprise resource planning selection criteria and enterprise resource planning system success. Inf. Manag. 2012, 49, 36–46.

- DeLone, W.H.; McLean, E.R. Measuring e-Commerce Success: Applying the DeLone & McLean Information Systems Success Model. Int. J. Electron. Commer. 2004, 9, 31–47.

- Liao, P.W.; Hsieh, J.Y. What influences Internet-based learning? Soc. Behav. Personal. 2011, 39, 887–896.

- Debourgh, G. Technology is the Tool, Teaching is the Task: Student Satisfaction in Distance Learning. In Proceedings of the Society for Information Technology & Teacher Education International Conference; San Antonio, TX, USA, 28 February 1999. Available online: http://files.eric.ed.gov/fulltext/ED432226.pdf (accessed on 30 May 2022).

- Ali, A.; Ahmad, I. Key Factors for Determining Student Satisfaction in Distance Learning Courses: A Study of Allama Iqbal Open University. Contemp. Educ. Technol. 2011, 2, 118–134.

- Yukselturk, E.; Yildirim, Z. Investigation of interaction, online support, course structure and flexibility as the contributing factors to students’ satisfaction in an online certificate program. Educ. Technol. Soc. 2008, 11, 51–65. Available online: https://eric.ed.gov/?redir=http%3a%2f%2fwww.ifets.info%2fabstract.php%3fart_id%3d889 (accessed on 30 May 2022).

- Jung, H. Ubiquitous learning: Determinants impacting learners’ satisfaction and performance with smartphones. Lang. Learn. Technol. 2014, 18, 97–119. Available online: https://eric.ed.gov/?redir=http%3a%2f%2fllt.msu.edu%2fissues%2foctober2014%2fjung.pdf (accessed on 30 May 2022).

- Naranjo-Zolotov, M.; Oliveira, T.; Casteleyn, S. Citizens’ intention to use and recommend e-participation. Inf. Technol. People 2019, 32, 364–386.

- Martins, J.; Branco, F.; Gonçalves, R.; Au-Yong-Oliveira, M.; Oliveira, T.; Naranjo-Zolotov, M.; Cruz-Jesus, F. Assessing the success behind the use of education management information systems in higher education. Telemat. Inform. 2018, 38, 182–193.

- Al-Samarraie, H.; Teng, B.K.; Alzahrani, A.I.; Alalwan, N. E-learning continuance satisfaction in higher education: A unified perspective from instructors and students. Stud. High. Educ. 2017, 43, 2003–2019.

- Cidral, W.A.; Oliveira, T.; Di Felice, M.; Aparicio, M. E-learning success determinants: Brazilian empirical study. Comput. Educ. 2018, 122, 273–290.

- Abuhassna, H. Examining Students’ Satisfaction and Learning Autonomy through Web-Based Courses. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 356–370.

- Tawafak, R.M.; Romli, A.B.; Arshah, R.A. Continued Intention to Use UCOM: Four Factors for Integrating With a Technology Acceptance Model to Moderate the Satisfaction of Learning. IEEE Access 2018, 6, 66481–66498. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8513820 (accessed on 30 May 2022).

- Graven, O.H.; Helland, M.; MacKinnon, L. The influence of staff use of a virtual learning environment on student satisfaction. In Proceedings of the IEEE 7th International Conference on Information Technology Based Higher Education and Training, Ultimo, Australia, 10–13 July 2006; pp. 423–441. Available online: https://ieeexplore.ieee.org/document/4141657 (accessed on 30 May 2022).

- Bi, T.; Lyons, R.; Fox, G.; Muntean, G.-M. Improving Student Learning Satisfaction by Using an Innovative DASH-Based Multiple Sensorial Media Delivery Solution. IEEE Trans. Multimed. 2020, 23, 3494–3505. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9204841 (accessed on 30 May 2022).

- Salam, M.; Farooq, M.S. Does sociability quality of web-based collaborative learning information system influence students’ satisfaction and system usage? Int. J. Educ. Technol. High. Educ. 2020, 17, 26.

- Mtebe, J.S.; Raphael, C. Key factors in learners’ satisfaction with the e-learning system at the University of Dar es Salaam, Tanzania. Australas. J. Educ. Technol. 2018, 34, 107–122. Available online: https://ajet.org.au/index.php/AJET/article/view/2993/1502 (accessed on 30 May 2022).

- Virtanen, M.A.; Kääriäinen, M.; Liikanen, E.; Haavisto, E. The comparison of students’ satisfaction between ubiquitous and web-based learning environments. Educ. Inf. Technol. 2017, 22, 2565–2581.

- Asoodar, M.; Vaezi, S.; Izanloo, B. Framework to improve e-learner satisfaction and further strengthen e-learning implementation. Comput. Hum. Behav. 2016, 63, 704–716.

- Kuo, Y.-C.; Walker, A.E.; Schroder, K.E.; Belland, B.R. Interaction, Internet self-efficacy, and self-regulated learning as predictors of student satisfaction in online education courses. Internet High. Educ. 2014, 20, 35–50.

- Ladyshewsky, R.K. Instructor Presence in Online Courses and Student Satisfaction. Int. J. Sch. Teach. Learn. 2013, 7, 13.

- Paechter, M.; Maier, B.; Macher, D. Students’ expectations of, and experiences in e-learning: Their relation to learning achievements and course satisfaction. Comput. Educ. 2010, 54, 222–229.

- Wu, J.-H.; Tennyson, R.D.; Hsia, T.-L. A study of student satisfaction in a blended e-learning system environment. Comput. Educ. 2010, 55, 155–164.

- Wang, Y.-S. Assessment of learner satisfaction with asynchronous electronic learning systems. Inf. Manag. 2003, 41, 75–86.

- Terzis, V.; Economides, A. The acceptance and use of computer based assessment. Comput. Educ. 2011, 56, 1032–1044.

- Lim, L. Development and Initial Validation of the Computer-Delivered Test Acceptance Questionnaire for Secondary and High School Students. J. Psychoeduc. Assess. 2019, 38, 182–194.

- American Educational Research Association; American Psychological Association; National Council on Measurement in Education. Standards for Educational and Psychological Testing; American Educational Research Association: Washington, DC, USA, 2014.

- Frey, B. The SAGE Encyclopedia of Educational Research, Measurement, and Evaluation; SAGE Publications: Thousand Oaks, CA, USA, 2018; Volumes 1–4.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 8th ed.; Cengage: Hampshire, UK, 2019.

- Wolf, E.J.; Harrington, K.M.; Clark, S.L.; Miller, M.W. Sample size requirements for structural equation models: An evaluation of power, bias, and solution propriety. Educ. Psychol. Meas. 2013, 73, 913–934.

- Kline, R.B. Principles and Practice of Structural Equation Modelling, 3rd ed.; Guilford Press: New York, NY, USA, 2010.

- West, S.G.; Finch, J.F.; Curran, P.J. Structural equation models with nonnormal variables: Problems and remedies. In Structural Equation Modelling: Concepts, Issues and Applications; Hoyle, R.H., Ed.; Sage: Thousand Oaks, CA, USA, 1995; pp. 56–75.

- Brown, T.A. Confirmatory Factor Analysis for Applied Research; The Guilford Press: New York, NY, USA, 2015.

- Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 1999, 6, 1–55.

- Lim, L.; Chapman, E. Development and Preliminary Validation of the Moral Reasoning Questionnaire for Secondary School Students. SAGE Open 2022, 12, 21582440221085271.

- Yale, R.N.; Jensen, J.D.; Carcioppolo, N.; Sun, Y.; Liu, M. Examining First- and Second-Order Factor Structures for News Credibility. Commun. Methods Meas. 2015, 9, 152–169.

- EdSurge. Decoding Adaptive; Pearson: London, UK, 2016.

| Author(s) | Participants | Antecedents of Learner Satisfaction | Learner Satisfaction Measure |
|---|---|---|---|
| [35] Abuhassna et al. (2020) | 243 higher education students | | Of the 27-item questionnaire across five subscales, one five-item subscale was used to measure learner satisfaction. Items were not reported, though the entire measurement model was validated by means of structural equation modelling. |
| [38] Bi et al. (2020) | 44 students who completed the activity as part of an online business management module | | Learner satisfaction was reflected by single items (e.g., 55.6% of participants were satisfied with the platform used to deliver content). |
| [39] Salam and Farooq (2020) | 120 undergraduate students using an online information-based system | | A four-item subscale (e.g., I like working with the platform; I find the platform useful for collaborative learning) from a 48-item questionnaire was used to measure learner satisfaction. The questionnaire was validated by means of structural equation modelling. |
| [33] Al-Samarraie et al. (2018) | 38 postgraduate students and 9 instructors with e-learning experience | | Meta-analysis via the Fuzzy Decision Making Trial and Evaluation Laboratory (DEMATEL) method. |
| [40] Mtebe and Raphael (2018) | 153 students using an e-learning platform (i.e., Moodle) | | A 25-item questionnaire across six subscales, with one three-item subscale on learner satisfaction. Validation was limited to exploratory factor analysis. |
| [36] Tawafak et al. (2018) | 295 undergraduates using an e-learning system | | A 35-item questionnaire across 12 subscales, with one two-item subscale on learner satisfaction. The questionnaire was validated by means of structural equation modelling. |
| [41] Virtanen et al. (2017) | 115 students using virtual and digital learning environments | | A 24-item questionnaire across five subscales. Validation was limited to a reliability measure (i.e., Cronbach’s alpha). |
| [42] Asoodar et al. (2016) | 600 undergraduates using an e-learning system (i.e., Moodle) | | A 132-item questionnaire across six subscales. Validation was limited to principal components and parallel analyses. |
| [43] Kuo et al. (2014) | 221 participants from undergraduate and graduate online classes | | A five-item subscale on learner satisfaction focussed on satisfaction with the course (e.g., this course contributed to my educational development; in the future, I would be willing to take a fully online course again). |
| [44] Ladyshewsky (2013) | Postgraduate participants from six online courses, with class sizes averaging around 35 students | | Learner satisfaction data (11 items) were collected using the university’s standardised course evaluation system. No validation of the learner satisfaction measure was reported. |
| [45] Paechter et al. (2010) | 2196 participants using an e-learning system | | Learner satisfaction was reflected by learner expectations and assessment of course outcomes. No validation of the learner satisfaction measure was reported. |
| [46] Wu et al. (2010) | 212 participants from a blended e-learning course | | A 21-item questionnaire across seven subscales, with one four-item subscale on learner satisfaction. The questionnaire was validated via confirmatory factor analysis. |
| [47] Wang (2003) | 116 adult learners using an e-learning system | | A 17-item questionnaire across four subscales. Validation was limited to exploratory factor analysis. |

| Course/Semester/Year | Number of Enrolled Students | Number of Students Who Volunteered and Completed the LSQ |
|---|---|---|
| Calculus I/2/2021 | 93 | 80 |
| Calculus II/2/2021 | 56 | 41 |

| Model | χ² | χ²diff | df | χ²/df | CFI | RMSEA | SRMR | AIC | SBC |
|---|---|---|---|---|---|---|---|---|---|
| One-factor | 160.91 * | − | 77 | 2.09 | 0.87 | 0.10 | 0.08 | 216.91 | 295.19 |
| Correlated 3-factor | 111.72 | | 74 | 1.74 | 0.94 | 0.07 | 0.07 | 173.72 | 260.39 |
| Second-order 3-factor | 111.72 | | 74 | 1.74 | 0.94 | 0.07 | 0.07 | 173.72 | 260.39 |
| Bifactor 3-factor | 96.71 | | 56 | 1.73 | 0.64 | 0.08 | 0.07 | 194.71 | 331.70 |
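The χ²/df, RMSEA, AIC, and SBC columns are functions of χ², df, the number of free parameters q, and the sample size N. A minimal sketch, assuming N = 121 (the 80 + 41 students who completed the questionnaire), the (N − 1) form of the RMSEA point estimate, AIC = χ² + 2q, and SBC = χ² + q ln N. The parameter counts (28, 31, and 49) are not reported in the article; they are inferred here from the gap between each model's AIC and χ², so treat them as assumptions.

```python
import math
from dataclasses import dataclass

# Assumption: N = 80 + 41 respondents, taken from the participants table.
N = 121


@dataclass
class ModelFit:
    name: str
    chi2: float
    df: int
    q: int  # free parameters; inferred as (AIC - chi2) / 2, not reported directly


def rmsea(chi2, df, n):
    """RMSEA point estimate using the (N - 1) convention."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def aic(chi2, q):
    """Chi-square-based Akaike information criterion."""
    return chi2 + 2 * q


def sbc(chi2, q, n):
    """Chi-square-based Schwarz Bayesian criterion (BIC)."""
    return chi2 + q * math.log(n)


MODELS = [
    ModelFit("One-factor", 160.91, 77, 28),
    ModelFit("Correlated 3-factor", 111.72, 74, 31),
    ModelFit("Bifactor 3-factor", 96.71, 56, 49),
]

if __name__ == "__main__":
    for m in MODELS:
        print(
            f"{m.name:<20} chi2/df={m.chi2 / m.df:.2f} "
            f"RMSEA={rmsea(m.chi2, m.df, N):.2f} "
            f"AIC={aic(m.chi2, m.q):.2f} SBC={sbc(m.chi2, m.q, N):.2f}"
        )
```

Under these assumptions, the RMSEA, AIC, and SBC values for all three models agree with the table to two decimal places. The second-order model is left out because, with only three first-order factors, its three second-order loadings simply take the place of the three factor correlations; the two models are statistically equivalent, which is why their rows in the table are identical.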

| Construct and Items | Standardised Loading | Average Variance Extracted | Construct Reliability |
|---|---|---|---|
| LI (F1) | | 0.64 | 0.98 |
| LI1 | 0.85 | | |
| LI2 | 0.85 | | |
| LI3 | 0.65 | | |
| LI4 | 0.82 | | |
| CONT (F2) | | 0.61 | 0.99 |
| CONT1 | 0.73 | | |
| CONT2 | 0.85 | | |
| CONT3 | 0.76 | | |
| CONT4 | 0.79 | | |
| CONT5 | 0.80 | | |
| CONT6 | 0.75 | | |
| PERS (F3) | | 0.54 | 0.97 |
| PERS1 | 0.75 | | |
| PERS2 | 0.81 | | |
| PERS3 | 0.59 | | |
| PERS4 | 0.78 | | |
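Average variance extracted (AVE) is conventionally the mean of the squared standardised loadings of a construct's items. Applied to the loadings above, it reproduces the tabled AVE values to within 0.01 (for LI the rounded loadings give 0.63 rather than 0.64, a gap consistent with the loadings being rounded to two decimals). The sketch also computes composite reliability with the widely used Fornell–Larcker formula, (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The article does not say which formula produced its construct reliability column, so this formula is an assumption, and its results (about 0.87, 0.90, and 0.82) differ from the tabled values.

```python
# First-order standardised loadings from the table above.
LOADINGS = {
    "LI": [0.85, 0.85, 0.65, 0.82],
    "CONT": [0.73, 0.85, 0.76, 0.79, 0.80, 0.75],
    "PERS": [0.75, 0.81, 0.59, 0.78],
}


def ave(loadings):
    """Average variance extracted: mean of squared standardised loadings."""
    return sum(x * x for x in loadings) / len(loadings)


def composite_reliability(loadings):
    """Fornell-Larcker composite reliability (assumed formula).

    Treats each item's error variance as 1 - loading**2 and assumes
    uncorrelated errors.
    """
    total = sum(loadings)
    error = sum(1 - x * x for x in loadings)
    return total ** 2 / (total ** 2 + error)


if __name__ == "__main__":
    for name, lam in LOADINGS.items():
        print(f"{name}: AVE={ave(lam):.2f}  CR={composite_reliability(lam):.2f}")
```

The same `ave` function applied to the second-order loadings of LI, CONT, and PERS on LS (0.81, 1.00, 0.81) gives about 0.77, the AVE tabled for LS in the second-order model.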

| Construct | LI | CONT | PERS |
|---|---|---|---|
| LI | 0.80 * | | |
| CONT | 0.81 | 0.78 * | |
| PERS | 0.66 | 0.81 | 0.74 * |
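The starred diagonal entries are consistent with the square roots of the AVE values in the preceding table, which suggests a Fornell–Larcker layout with inter-construct correlations below the diagonal. Under that reading (an assumption, since the table's footnote is missing), discriminant validity for a construct is usually judged by whether its √AVE exceeds its correlation with every other construct. A minimal check, with hypothetical names:

```python
CONSTRUCTS = ["LI", "CONT", "PERS"]

# Starred diagonal entries, read here as the square root of each construct's AVE.
SQRT_AVE = {"LI": 0.80, "CONT": 0.78, "PERS": 0.74}

# Below-diagonal entries, read here as inter-construct correlations.
CORRELATIONS = {
    frozenset({"LI", "CONT"}): 0.81,
    frozenset({"LI", "PERS"}): 0.66,
    frozenset({"CONT", "PERS"}): 0.81,
}


def fornell_larcker(constructs, sqrt_ave, correlations):
    """Return, per construct, whether its sqrt(AVE) exceeds all its correlations."""
    verdict = {}
    for c in constructs:
        others = [correlations[frozenset({c, o})] for o in constructs if o != c]
        verdict[c] = sqrt_ave[c] > max(others)
    return verdict


if __name__ == "__main__":
    print(fornell_larcker(CONSTRUCTS, SQRT_AVE, CORRELATIONS))
```

With the tabled values every entry comes out `False`, because a correlation of 0.81 exceeds each construct's diagonal value.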

| Construct and Items | Standardised Loading | Average Variance Extracted | Construct Reliability |
|---|---|---|---|
| LS | | 0.77 | 0.98 |
| LI | 0.81 | | |
| CONT | 1.00 | | |
| PERS | 0.81 | | |
| LI | | 0.64 | 0.98 |
| LI1 | 0.85 | | |
| LI2 | 0.85 | | |
| LI3 | 0.82 | | |
| LI4 | 0.65 | | |
| CONT | | 0.61 | 0.99 |
| CONT1 | 0.73 | | |
| CONT2 | 0.85 | | |
| CONT3 | 0.76 | | |
| CONT4 | 0.75 | | |
| CONT5 | 0.80 | | |
| CONT6 | 0.79 | | |
| PERS | | 0.54 | 0.97 |
| PERS1 | 0.75 | | |
| PERS2 | 0.81 | | |
| PERS3 | 0.78 | | |
| PERS4 | 0.59 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lim, L.; Lim, S.H.; Lim, R.W.Y. Measuring Learner Satisfaction of an Adaptive Learning System. Behav. Sci. 2022, 12, 264. https://doi.org/10.3390/bs12080264
