Visual Affective Stimulus Database: A Validated Set of Short Videos

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Video Materials

2.3. Sociodemographic Variables and Self-Administered Questionnaire

2.3.1. Beck Depression Inventory-II in Chinese Version (BDI-II-C)

2.3.2. Generalized Anxiety Disorder Scale (7-Item Generalized Anxiety Disorder Scale, GAD-7)

2.3.3. Emotion Rating Scale

2.4. Stimuli Presentation and Online Experiment Procedure

2.5. Statistical Analysis

3. Results

3.1. Rating of Emotional Videos

3.2. Internal Consistency

3.3. Ratings for Emotional Dimensions and Gender Differences

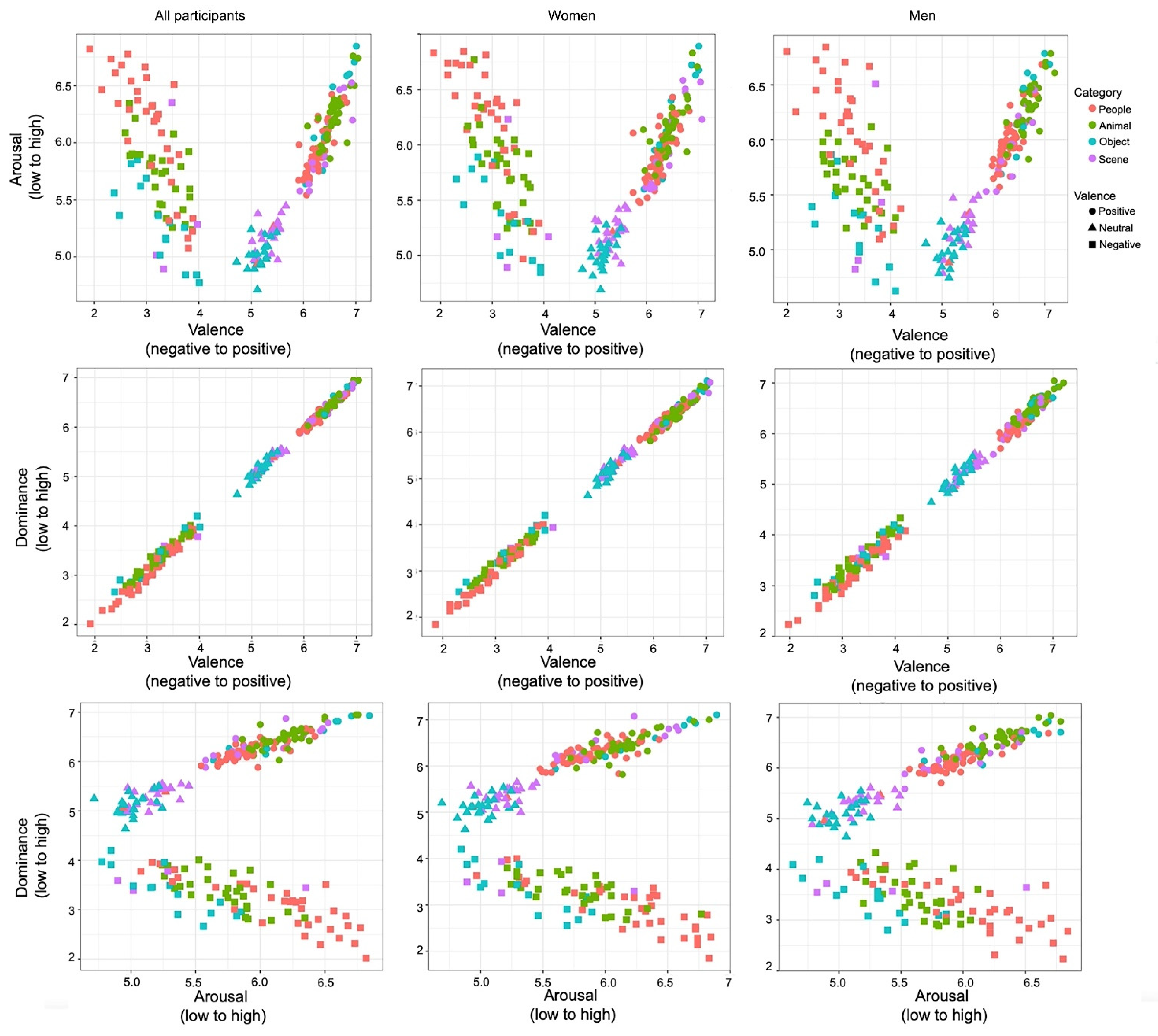

3.4. Scatter Plots of Rating Distribution for Emotional Dimensions

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

References

| | Valence | Arousal | Dominance |
|---|---|---|---|
| Total (n = 242) | 0.968 | 0.984 | 0.970 |
| Positive (n = 112) | 0.987 | 0.987 | 0.987 |
| Neutral (n = 47) | 0.923 | 0.937 | 0.920 |
| Negative (n = 83) | 0.973 | 0.982 | 0.978 |

| | Valence | Arousal | Dominance |
|---|---|---|---|
| People (n = 84) | 0.923 | 0.968 | 0.931 |
| Animals (n = 68) | 0.932 | 0.966 | 0.931 |
| Objects (n = 47) | 0.799 | 0.879 | 0.800 |
| Scene (n = 43) | 0.933 | 0.936 | 0.938 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Q.; Zhao, Y.; Gong, B.; Li, R.; Wang, Y.; Yan, X.; Wu, C. Visual Affective Stimulus Database: A Validated Set of Short Videos. Behav. Sci. 2022, 12, 137. https://doi.org/10.3390/bs12050137
