Trust, Media Credibility, Social Ties, and the Intention to Share towards Information Verification in an Age of Fake News

Abstract

1. Introduction

2. Literature Review

2.1. Internet Bots/Fake Accounts

2.2. Clickbait

2.3. Filter Bubbles

2.4. Internet Trolls

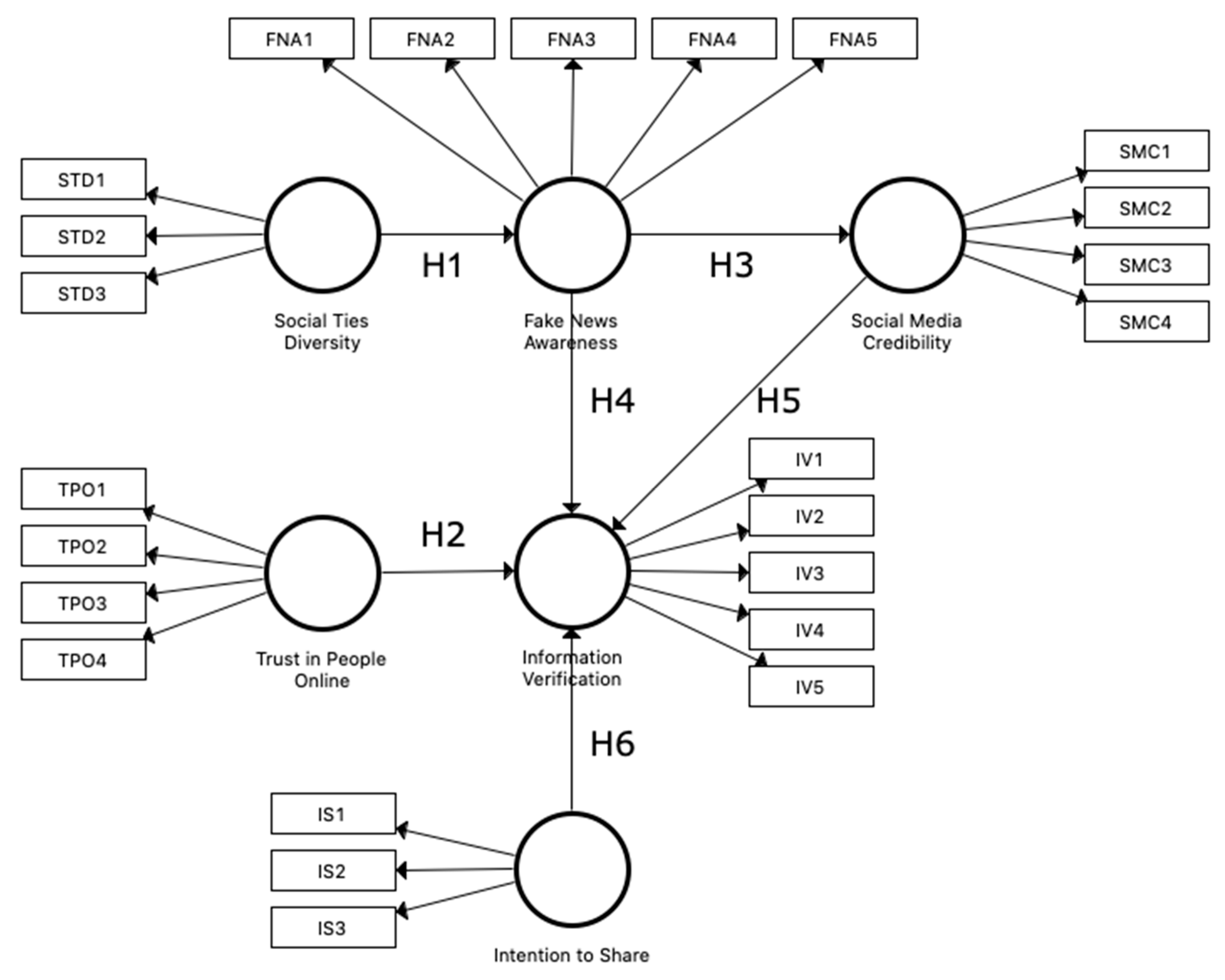

2.5. Hypotheses Development

3. Method

4. Results

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Variable | Item | Question |
|---|---|---|
| Social ties diversity | STD1 | The people I interact with through social media represent the different groups I am involved in. |
| | STD2 | The people I interact with through social media represent many stages of my life. |
| | STD3 | The people I interact with through social media are diverse in terms of how I met them. |
| Fake news awareness | FNA1 | I am aware of the existence of fake news and the social consequences it entails. |
| | FNA2 | I am concerned about the phenomenon of fake news. |
| | FNA3 | I am aware that I may come across fake news when using social media. |
| | FNA4 | I have sufficient knowledge about fake news and its social impact. |
| | FNA5 | I understand the concerns about fake news and its negative impact on society. |
| Social media credibility | SMC1 | I believe that most of the news that is published on social networks is credible. |
| | SMC2 | I believe that most of the news that is published on social networks is relevant/accurate. |
| | SMC3 | I believe that most of the news that is published on social networks is trustworthy. |
| | SMC4 | I believe that most of the news that is published on social networks contains all the information on a topic. |
| Trust in people online | TPO1 | It is easy for me to trust another person on the internet. |
| | TPO2 | My tendency to trust another person online is high. |
| | TPO3 | I tend to trust people who publish information on the internet even though I have little knowledge of the subject. |
| | TPO4 | Trusting someone or something on the internet is not difficult. |
| Information verification | IV1 | I check who the author is of the news I see on social media. |
| | IV2 | I look for official confirmation of information or a recommendation from someone I know to verify news that is published on social media. |
| | IV3 | I pay attention to whether published information on social media has a stated source. |
| | IV4 | I verify the author of published information or news I see. |
| | IV5 | I consider the purpose of the information published by an author. |
| Intention to share | IS1 | In the future, I intend to share news on social networks. |
| | IS2 | I intend to share news regularly on social networks. |
| | IS3 | I expect to share news with other users on social media. |


| Gender | Number of respondents | Percentage |
|---|---|---|
| Female | 143 | 58.4% |
| Male | 102 | 41.6% |

| Age | Number of respondents | Percentage |
|---|---|---|
| Less than 18 years | 7 | 2.9% |
| 18–24 years | 159 | 64.9% |
| 25–34 years | 60 | 24.5% |
| 35–44 years | 18 | 7.3% |
| 45–54 years | 1 | 0.4% |
| 55–64 years | 0 | 0% |
| 65 years and over | 0 | 0% |

| Education | Number of respondents | Percentage |
|---|---|---|
| Primary education | 11 | 4.5% |
| Vocational education | 6 | 2.4% |
| Secondary education | 132 | 53.9% |
| Higher education | 96 | 39.2% |

| Professional status | Number of respondents | Percentage |
|---|---|---|
| Pupil/student | 157 | 64.1% |
| Employed full-time | 63 | 25.7% |
| Part-time employee | 15 | 6.1% |
| Not employed | 10 | 4.1% |

| The social platform where you most often come across fake news | Number of respondents | Percentage |
|---|---|---|
| | 223 | 91.0% |
| | 92 | 37.6% |
| Snapchat | 15 | 6.1% |
| | 39 | 15.9% |
| Wykop.pl | 29 | 11.8% |
| | 10 | 4.1% |
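
Each single-choice block in the demographic tables sums to the 245 respondents, so every percentage is the count divided by 245 and rounded to one decimal place. The platform question behaves differently: its counts sum to 408 and its percentages to well over 100%, which suggests (the extracted table does not state it) that respondents could name more than one platform. The short Python sketch below checks this arithmetic using counts transcribed from the tables; the shortened category labels and the `shares` helper are illustrative only.

```python
# Counts transcribed from the demographic tables; N = 245 respondents.
N = 245

gender = {"Female": 143, "Male": 102}
age = {"Less than 18": 7, "18-24": 159, "25-34": 60, "35-44": 18,
       "45-54": 1, "55-64": 0, "65 and over": 0}
education = {"Primary": 11, "Vocational": 6, "Secondary": 132, "Higher": 96}
status = {"Pupil/student": 157, "Employed full-time": 63,
          "Part-time employee": 15, "Not employed": 10}

# Platform counts in table order; several platform names are missing from the
# extracted table, so the counts are kept as a plain list.
platforms = [223, 92, 15, 39, 29, 10]


def shares(counts, n=N):
    """Return each count as a percentage of n, rounded to one decimal place."""
    return {label: round(100 * c / n, 1) for label, c in counts.items()}


for block in (gender, age, education, status):
    # Single-choice questions: every respondent falls into exactly one category.
    assert sum(block.values()) == N
    print(shares(block))

# The platform counts exceed N, so these percentages sum to more than 100%.
print(sum(platforms), [round(100 * c / N, 1) for c in platforms])
```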

| Variable | Item | Loadings | Indicator Reliability | AVE |
|---|---|---|---|---|
| Threshold | | >0.7 | >0.5 | >0.5 |
| FNA | FNA2 | 0.891 | 0.794 | 0.584 |
| | FNA3 | 0.856 | 0.750 | |
| | FNA5 | 0.864 | 0.747 | |
| IS | IS1 | 0.959 | 0.920 | 0.887 |
| | IS2 | 0.981 | 0.963 | |
| SMC | SMC1 | 0.943 | 0.891 | 0.812 |
| | SMC2 | 0.948 | 0.900 | |
| | SMC3 | 0.954 | 0.912 | |
| STD | STD1 | 0.923 | 0.853 | 0.585 |
| | STD2 | 0.871 | 0.759 | |
| | STD3 | 0.819 | 0.672 | |
| TPO | TPO1 | 0.924 | 0.855 | 0.713 |
| | TPO2 | 0.918 | 0.843 | |
| | TPO3 | 0.913 | 0.834 | |
| IV | IV1 | 0.902 | 0.814 | 0.673 |
| | IV2 | 0.846 | 0.717 | |
| | IV3 | 0.916 | 0.840 | |
| | IV4 | 0.948 | 0.900 | |
| | IV5 | 0.904 | 0.818 | |
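
The measurement-model columns follow standard PLS-SEM definitions: indicator reliability is the squared standardized loading, average variance extracted (AVE) is the mean of the squared loadings of a construct's items, and composite reliability divides the squared sum of loadings by that same quantity plus the summed error variances (1 − λ² per item). The squared-loading relation reproduces most rows of the indicator-reliability column to rounding (for example, 0.891² ≈ 0.794 for FNA2). The Python functions below are a minimal sketch of these textbook formulas under illustrative names; they are not claimed to reproduce the reported AVE or composite-reliability columns.

```python
from typing import Sequence


def indicator_reliability(loading: float) -> float:
    """Share of an indicator's variance explained by its construct (loading squared)."""
    return loading ** 2


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized loadings of one construct's items."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    # With standardized indicators, each item's error variance is 1 - loading^2.
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Example: squared loading of FNA2 as listed in the table.
print(round(indicator_reliability(0.891), 3))
```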

| Construct | Cronbach’s Alpha | Reliability ρA (rho_A) | Composite Reliability |
|---|---|---|---|
| Threshold | 0.7–0.9 | >0.7 | >0.7 |
| FNA | 0.701 | 0.754 | 0.808 |
| IS | 0.877 | 0.963 | 0.940 |
| SMC | 0.884 | 0.887 | 0.928 |
| STD | 0.703 | 0.755 | 0.808 |
| TPO | 0.806 | 0.840 | 0.881 |
| IV | 0.877 | 0.891 | 0.911 |
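
Cronbach's alpha, reported in the first column against a 0.7–0.9 range, is computed from raw item scores as α = k/(k − 1) × (1 − sum of item variances / variance of the summed score), where k is the number of items. The respondent-level data are not part of this document, so the Python sketch below runs on a small hypothetical set of answers; `cronbach_alpha` is an illustrative helper, not the authors' code.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k items, each given as a column of respondent scores.

    Population variances are used throughout; using sample variances instead
    gives the same result because the n - 1 factor cancels in the ratio.
    """
    k = len(items)
    n = len(items[0])
    if k < 2 or any(len(item) != n for item in items):
        raise ValueError("need at least two items with equal numbers of respondents")
    # Each respondent's summed score across all items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


# Hypothetical answers of four respondents to two Likert items.
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # 0.75
```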

| | FNA | IS | SMC | STD | TPO |
|---|---|---|---|---|---|
| IS | 0.049 | | | | |
| SMC | 0.232 | 0.219 | | | |
| STD | 0.372 | 0.085 | 0.182 | | |
| TPO | 0.274 | 0.225 | 0.470 | 0.176 | |
| IV | 0.451 | 0.155 | 0.347 | 0.407 | 0.393 |
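
The extracted matrix carries no caption; its lower-triangular layout without a diagonal is consistent with heterotrait-monotrait (HTMT) ratios, a common discriminant-validity check in PLS-SEM, and every entry is below the frequently used 0.85 cut-off. Assuming that reading, the HTMT of two constructs is the mean correlation between their indicators divided by the geometric mean of the average within-construct indicator correlations. The Python sketch below illustrates the formula on hypothetical correlations; `htmt` and the item names are illustrative, and each construct needs at least two indicators.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean


def htmt(corr, items_a, items_b):
    """Heterotrait-monotrait ratio between two constructs.

    corr maps frozenset({x, y}) to the correlation between indicators x and y.
    Each construct must have at least two indicators.
    """
    def r(x, y):
        return corr[frozenset((x, y))]

    # Average correlation between indicators of different constructs.
    hetero = mean(r(x, y) for x, y in product(items_a, items_b))
    # Average correlation among indicators of the same construct.
    mono_a = mean(r(x, y) for x, y in combinations(items_a, 2))
    mono_b = mean(r(x, y) for x, y in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical correlations for two constructs with two indicators each.
corr = {frozenset(("a1", "a2")): 0.64, frozenset(("b1", "b2")): 0.81}
for x, y in product(["a1", "a2"], ["b1", "b2"]):
    corr[frozenset((x, y))] = 0.36
print(round(htmt(corr, ["a1", "a2"], ["b1", "b2"]), 3))  # 0.5
```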

| Hypothesis | Path | Path Coefficient | t-Statistic | f² | p-Value | Supported (p < 0.05) |
|---|---|---|---|---|---|---|
| H1 | STD → FNA | 0.270 | 3.715 | 0.079 | <0.001 | Yes |
| H2 | TPO → IV | −0.238 | 3.561 | 0.061 | <0.001 | Yes |
| H3 | FNA → SMC | −0.168 | 2.356 | 0.029 | 0.018 | Yes |
| H4 | FNA → IV | 0.267 | 4.084 | 0.091 | <0.001 | Yes |
| H5 | SMC → IV | −0.205 | 2.859 | 0.046 | 0.004 | Yes |
| H6 | IS → IV | 0.191 | 2.534 | 0.046 | 0.011 | Yes |
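
In PLS-SEM, t-statistics for path coefficients are usually obtained by bootstrapping, and this table reports their absolute values (H2, for instance, has a negative coefficient but a positive t). With many bootstrap samples the reference distribution is close to standard normal, so the two-tailed p-value is P(|Z| > |t|) = erfc(|t|/√2). The Python sketch below applies this normal approximation (an assumption, since the bootstrap settings are not given here) to the six t-statistics and reproduces the reported p-values to three decimals; `two_tailed_p` is an illustrative name.

```python
from math import erfc, sqrt


def two_tailed_p(t: float) -> float:
    """Two-tailed p-value of a t-statistic under a standard normal approximation.

    P(|Z| > |t|) = 2 * (1 - Phi(|t|)) = erfc(|t| / sqrt(2)).
    """
    return erfc(abs(t) / sqrt(2))


# t-statistics from the hypothesis table.
t_stats = {"H1": 3.715, "H2": 3.561, "H3": 2.356,
           "H4": 4.084, "H5": 2.859, "H6": 2.534}
for hypothesis, t in t_stats.items():
    print(hypothesis, f"{two_tailed_p(t):.3f}")
```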

| Variable | R² | Q² |
|---|---|---|
| IV | 0.262 | 0.249 |
| SMC | 0.028 | 0.024 |
| FNA | 0.073 | 0.069 |
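
The f² effect sizes in the hypothesis table can be cross-checked against these R² values. Cohen's f² for a predictor is (R²included − R²excluded)/(1 − R²included). In the hypothesis table, FNA has a single incoming path (STD → FNA) and SMC a single incoming path (FNA → SMC); assuming the estimated model has no further predictors of these two constructs, R²excluded is 0. With one standardized predictor, R² also equals the squared path coefficient (0.270² ≈ 0.073, 0.168² ≈ 0.028). The Python sketch below, with an illustrative helper name, recovers the reported f² values.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f^2: change in R^2 when one predictor is dropped, scaled by 1 - R^2."""
    return (r2_included - r2_excluded) / (1 - r2_included)


# FNA and SMC each have a single predictor, so dropping it leaves R^2 = 0.
print(round(f_squared(0.073, 0.0), 3))  # STD -> FNA: 0.079
print(round(f_squared(0.028, 0.0), 3))  # FNA -> SMC: 0.029
```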

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Majerczak, P.; Strzelecki, A. Trust, Media Credibility, Social Ties, and the Intention to Share towards Information Verification in an Age of Fake News. Behav. Sci. 2022, 12, 51. https://doi.org/10.3390/bs12020051