Assessing Teaching Effectiveness in Blended Learning Methodologies: Validity and Reliability of an Instrument with Behavioral Anchored Rating Scales

Abstract

1. Introduction

1.1. Behavioral Anchored Rating Scales (BARS) and the Assessment of Teaching Effectiveness

1.2. Validity and Reliability of the Instruments Used to Assess Teaching Effectiveness

1.2.1. Validity

1.2.2. Reliability

1.3. Objective

- RQ1: Is the BARS questionnaire under examination a valid instrument for assessing teaching effectiveness in blended learning methodologies?

- RQ2: Is the BARS questionnaire under examination a reliable instrument for assessing teaching effectiveness in blended learning methodologies?

2. Materials and Methods

2.1. Instrument

2.2. Participants

2.3. Phases of the Analysis

3. Results

3.1. Comprehension Validity Analysis

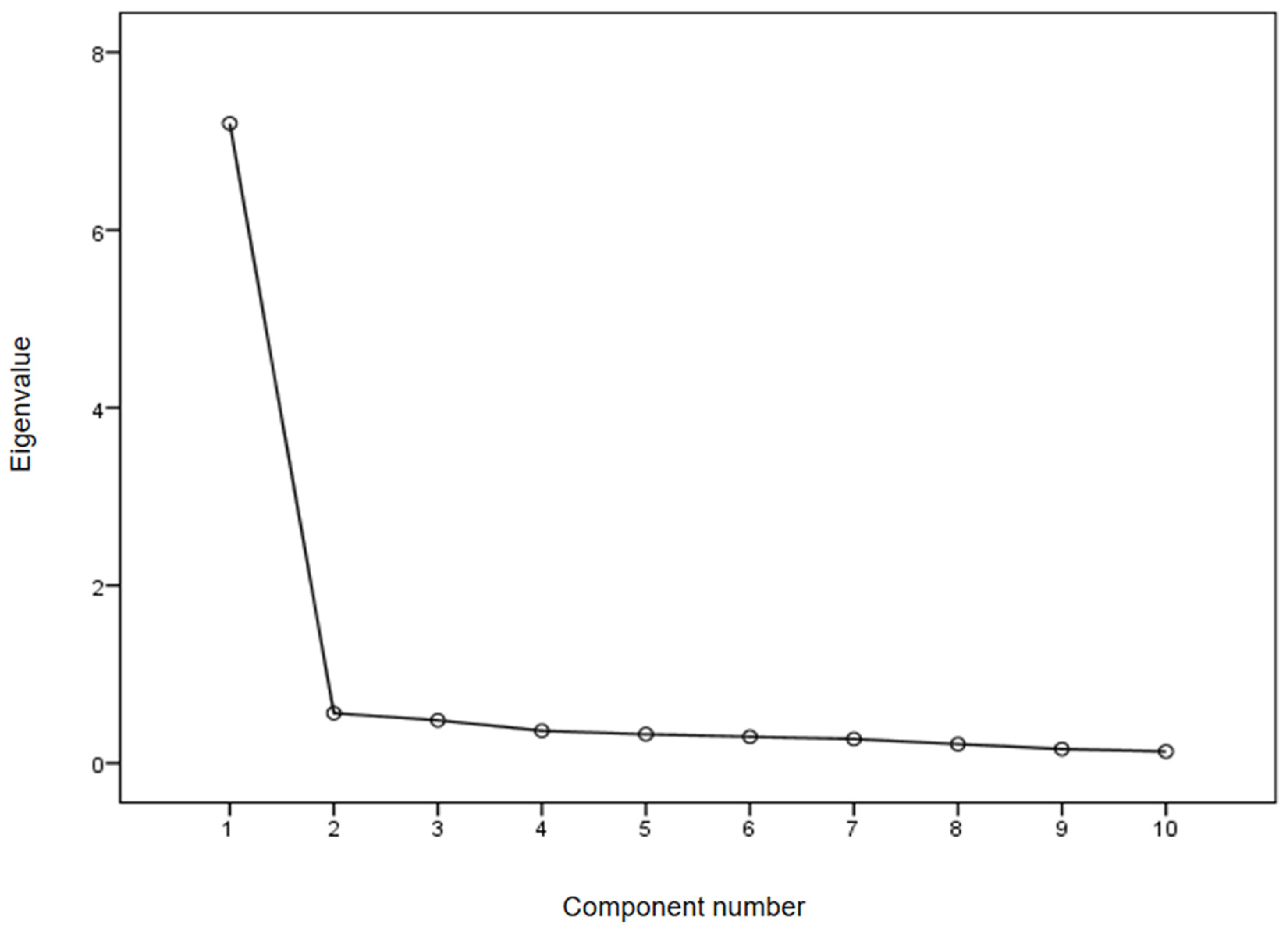

3.2. Construct Validity Analysis

- Factor 1. The construct with six items (General satisfaction, Follow-up easiness, Dealing with doubts, General availability, Explicative capacity, and Time management) explains 42.92% of the variance. It encompasses aspects related to the teacher’s skills (for example, dealing with doubts or explicative capacity), as well as others that refer to the teacher’s attitude during the course (for example, follow-up easiness or availability). The authors name this factor Teacher’s Aptitude and Attitude.

- Factor 2. The construct with four items (Evaluation system implementation, Course introduction, Evaluation system description, and Organizational consistency) explains 34.68% of the variance. This construct involves aspects pertaining to the presentation and organization of the course, as well as those related to the evaluation system. The researchers name this factor Structure and Evaluation.
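As a quick arithmetic check on the two factors described above, the following minimal sketch sums the reported shares of variance. The dictionary name `explained_variance` is hypothetical and used only for illustration; the percentages are the ones stated in the two bullets.

```python
# Percentage of variance explained by each factor, as reported above.
# The variable names are illustrative and do not come from the source.
explained_variance = {
    "Teacher's Aptitude and Attitude": 42.92,
    "Structure and Evaluation": 34.68,
}

# Cumulative variance explained by the two-factor solution.
total_variance = round(sum(explained_variance.values()), 2)

for factor, share in explained_variance.items():
    print(f"{factor}: {share:.2f}%")
print(f"Cumulative: {total_variance:.2f}%")
```

Taken together, the two factors account for 77.60% of the variance.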

3.3. Confirmation of Construct Validity

3.4. Analysis of the Instrument Reliability

3.5. Descriptive Results Obtained with the Instrument

4. Discussion and Conclusions

Limitations and Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

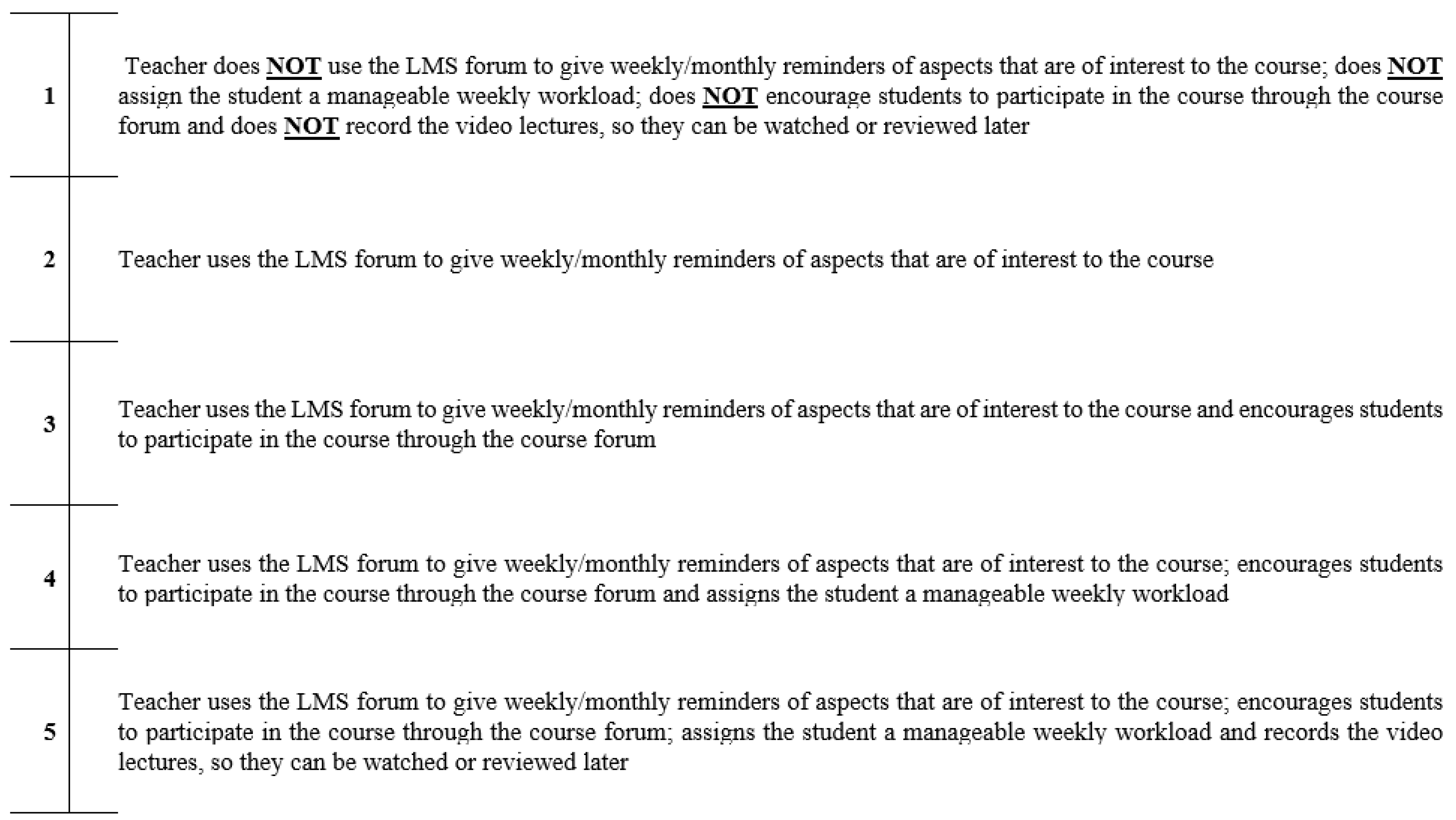

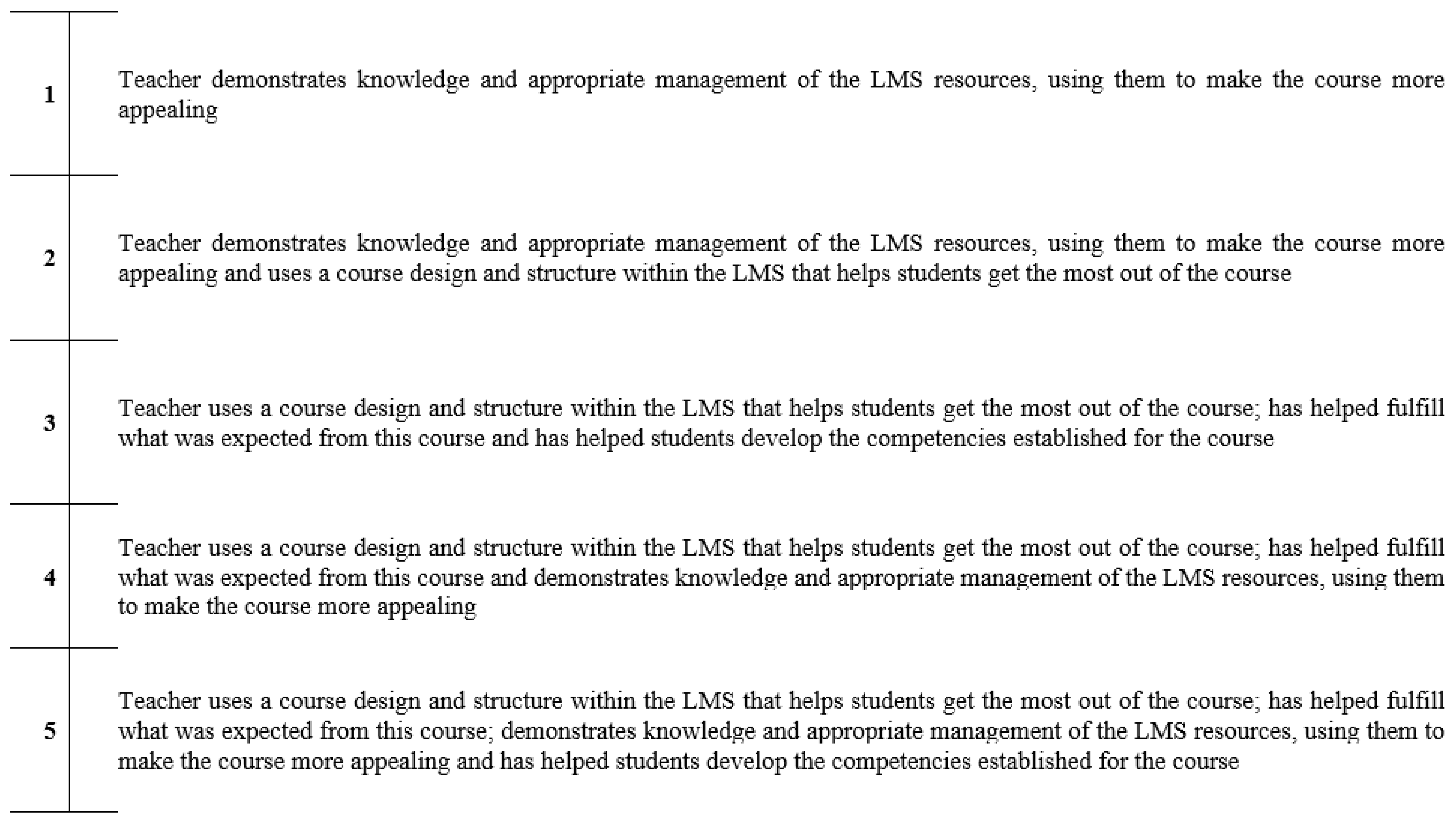

Appendix A

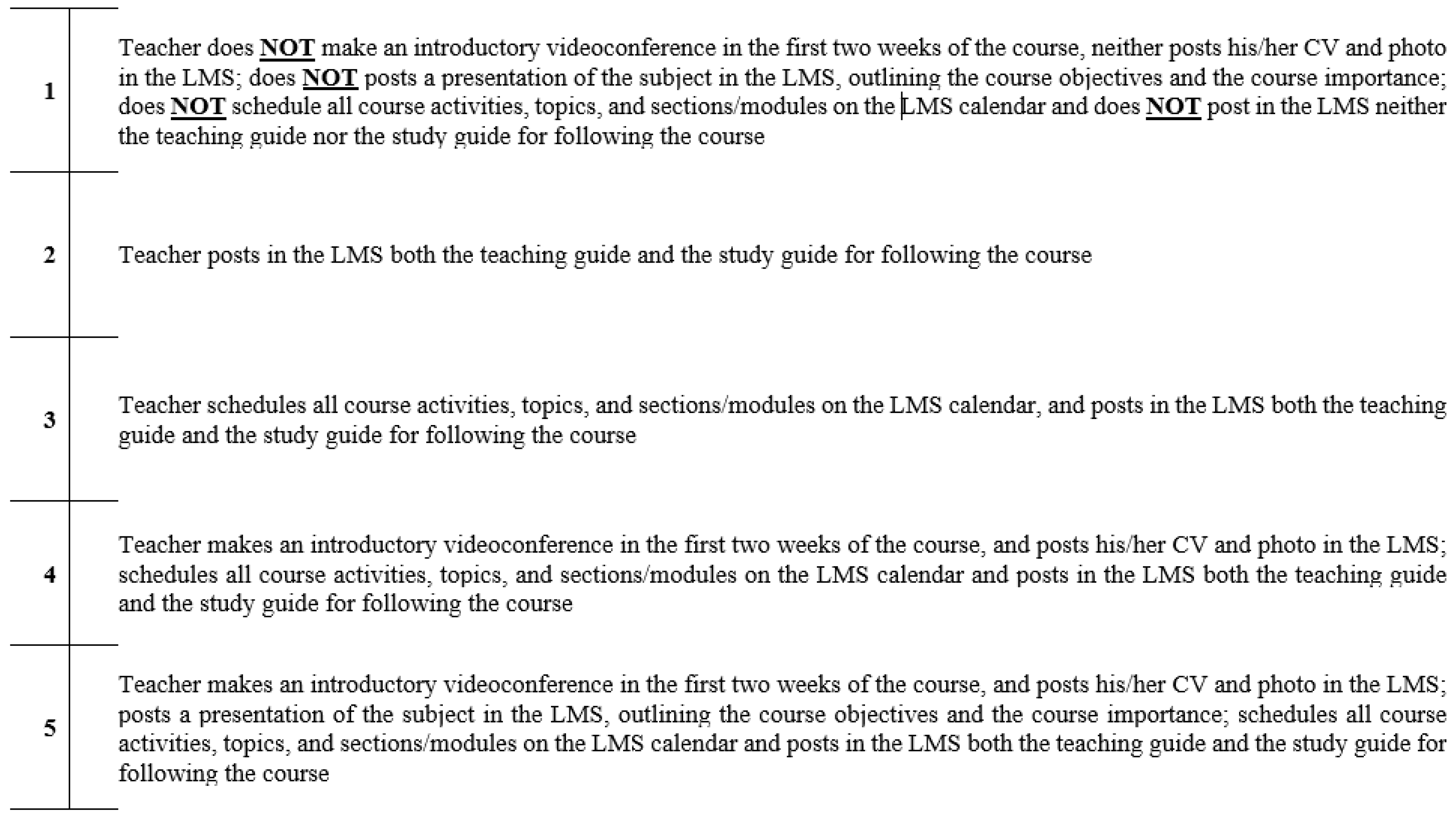

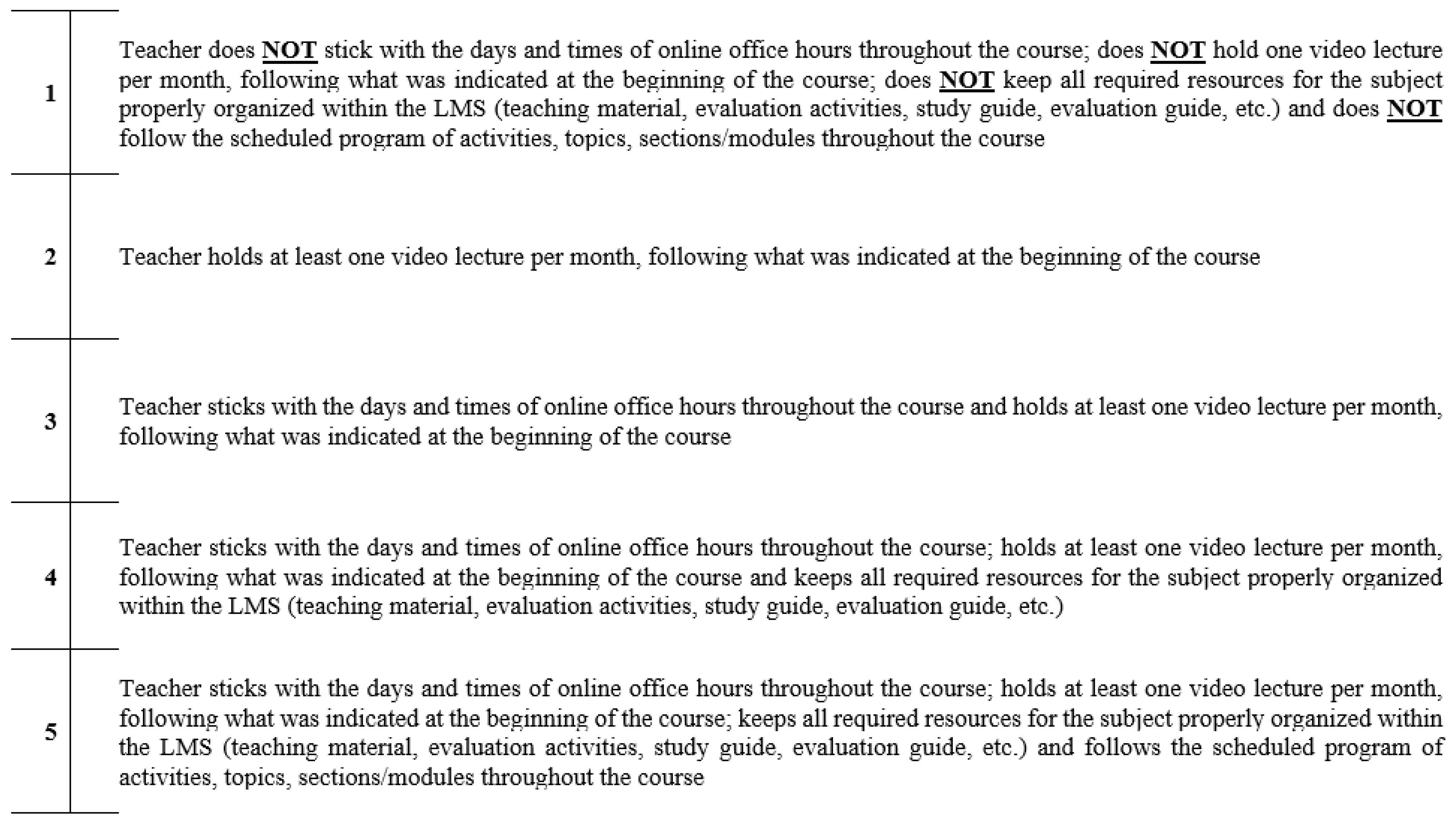

Appendix A.1. Course Introduction

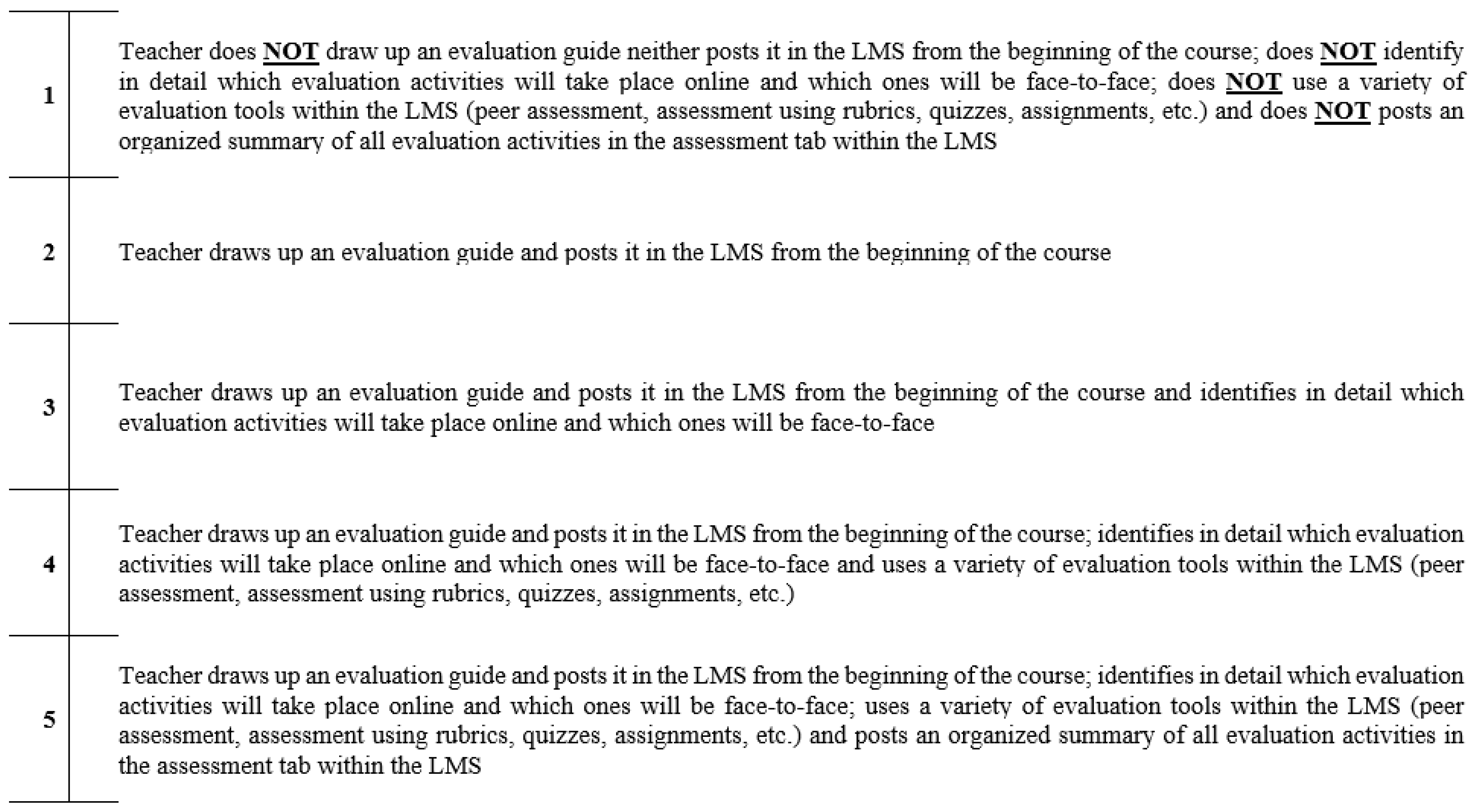

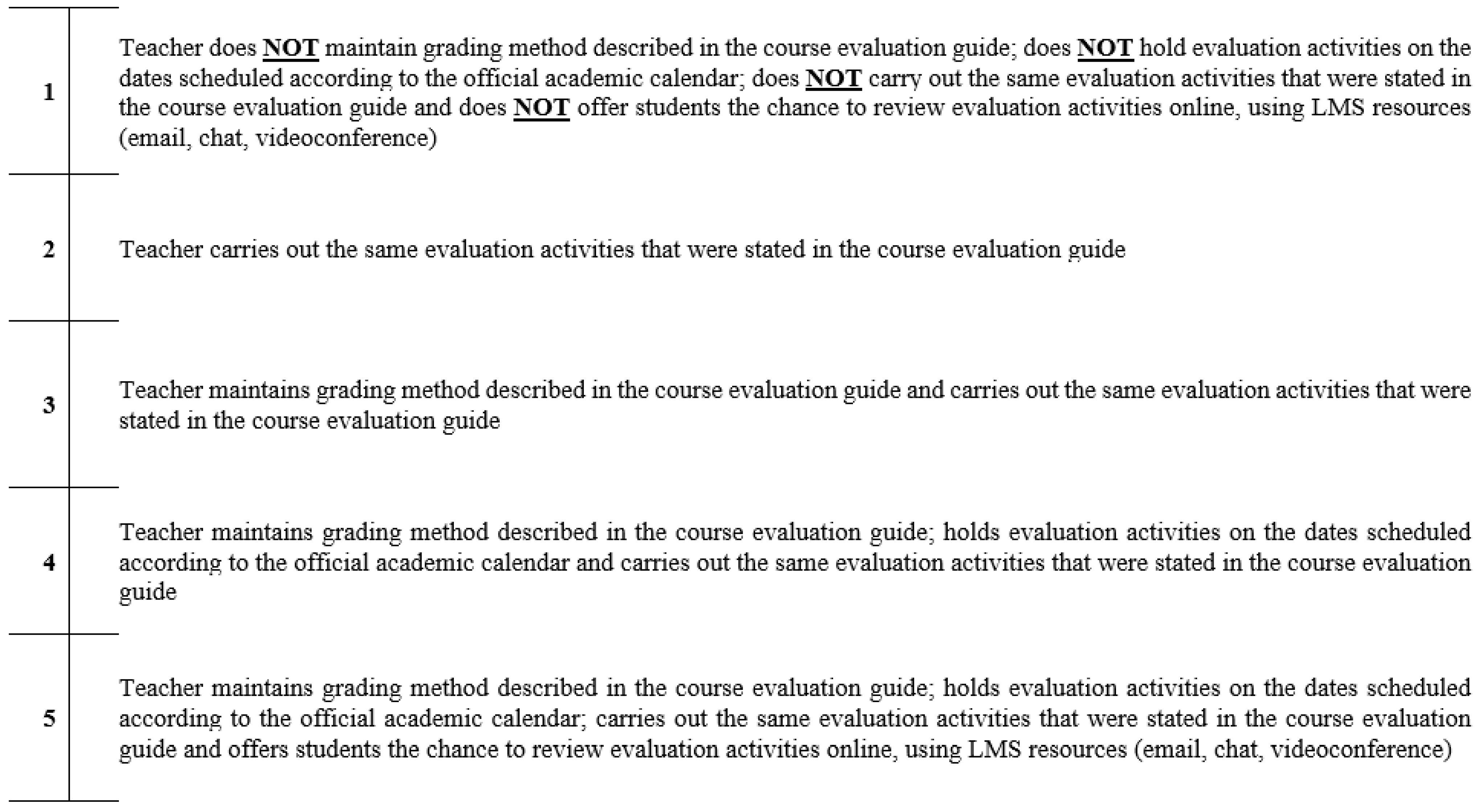

Appendix A.2. Evaluation System Description

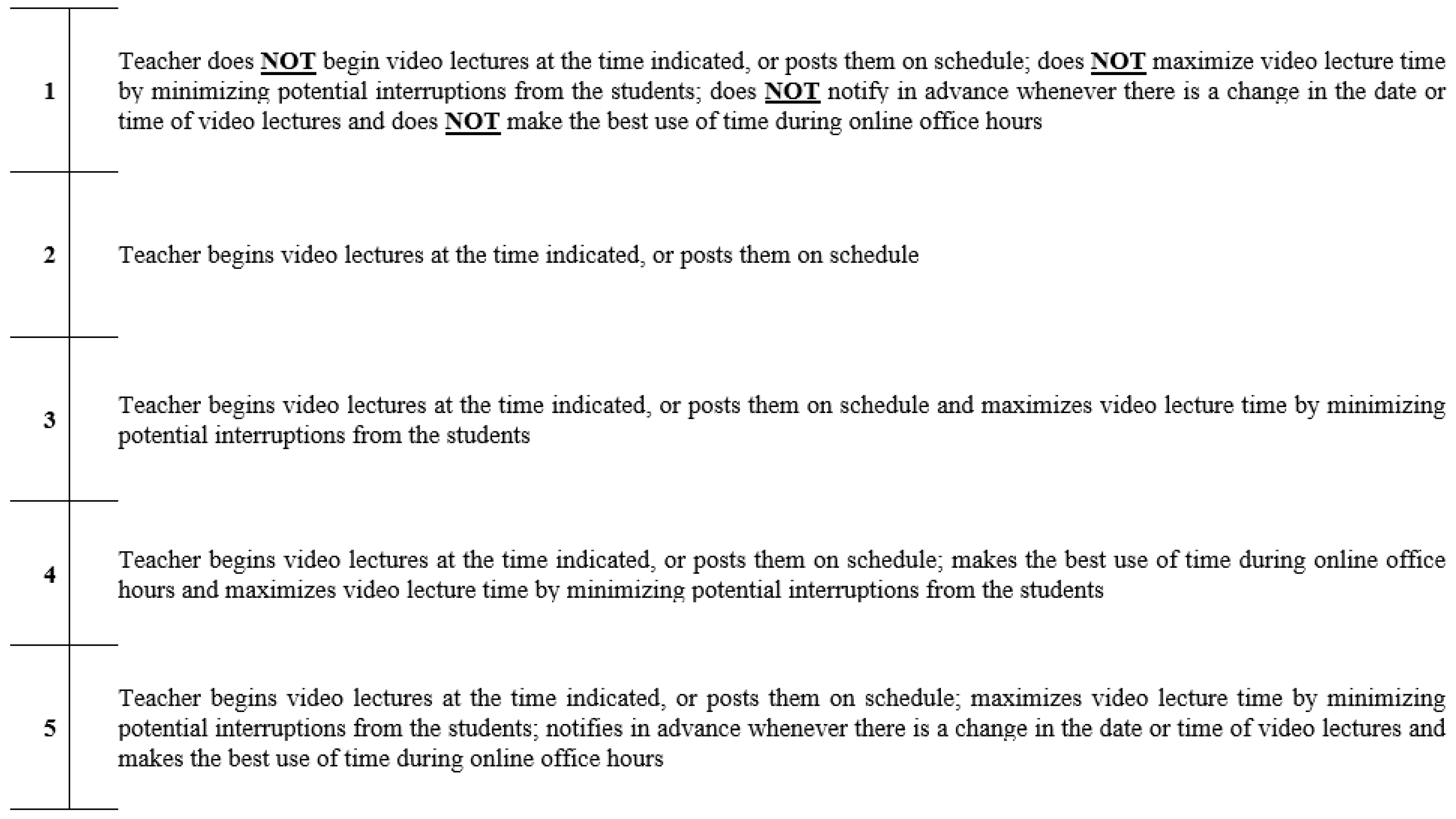

Appendix A.3. Time Management

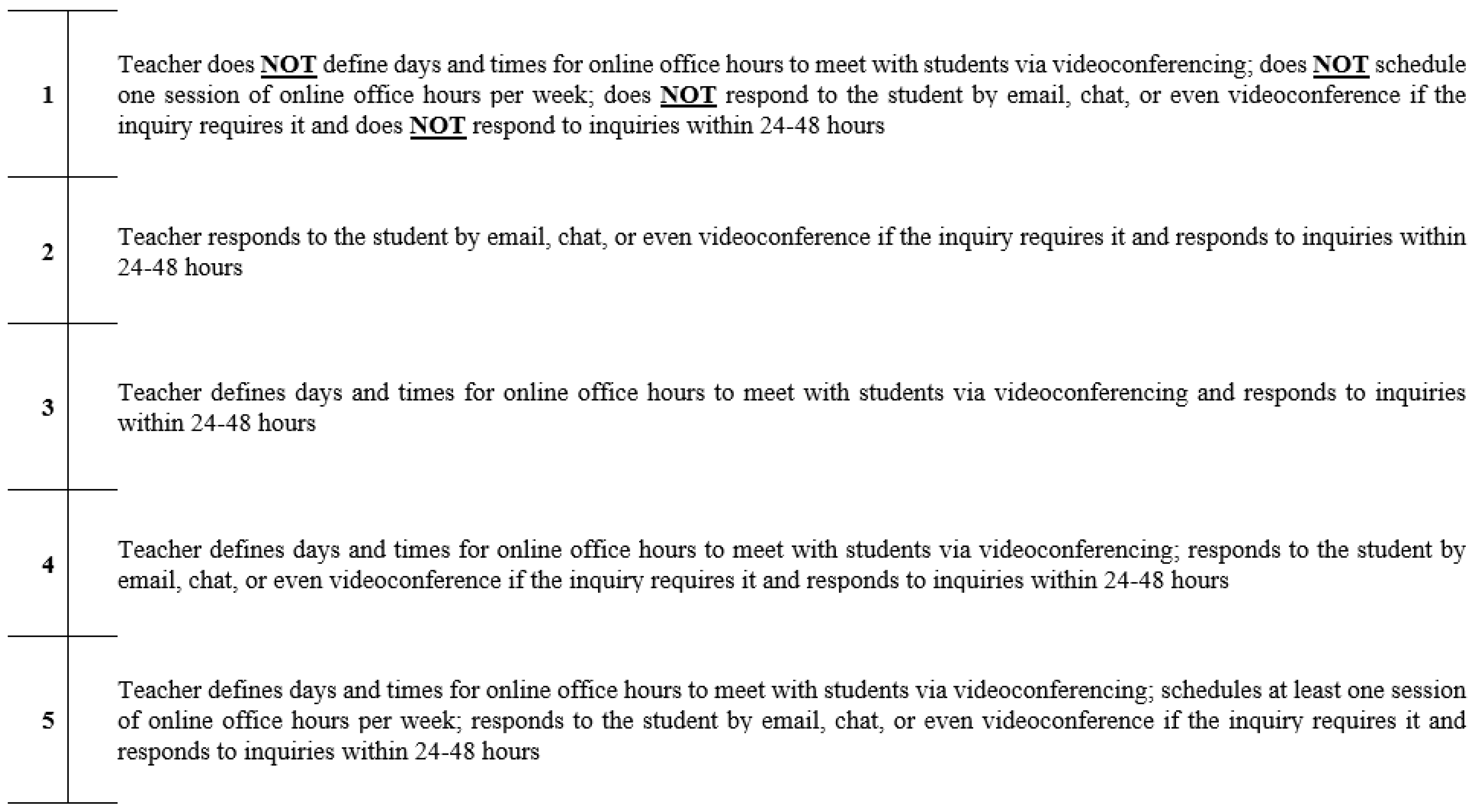

Appendix A.4. General Availability

Appendix A.5. Organizational Consistency

Appendix A.6. Evaluation System Implementation

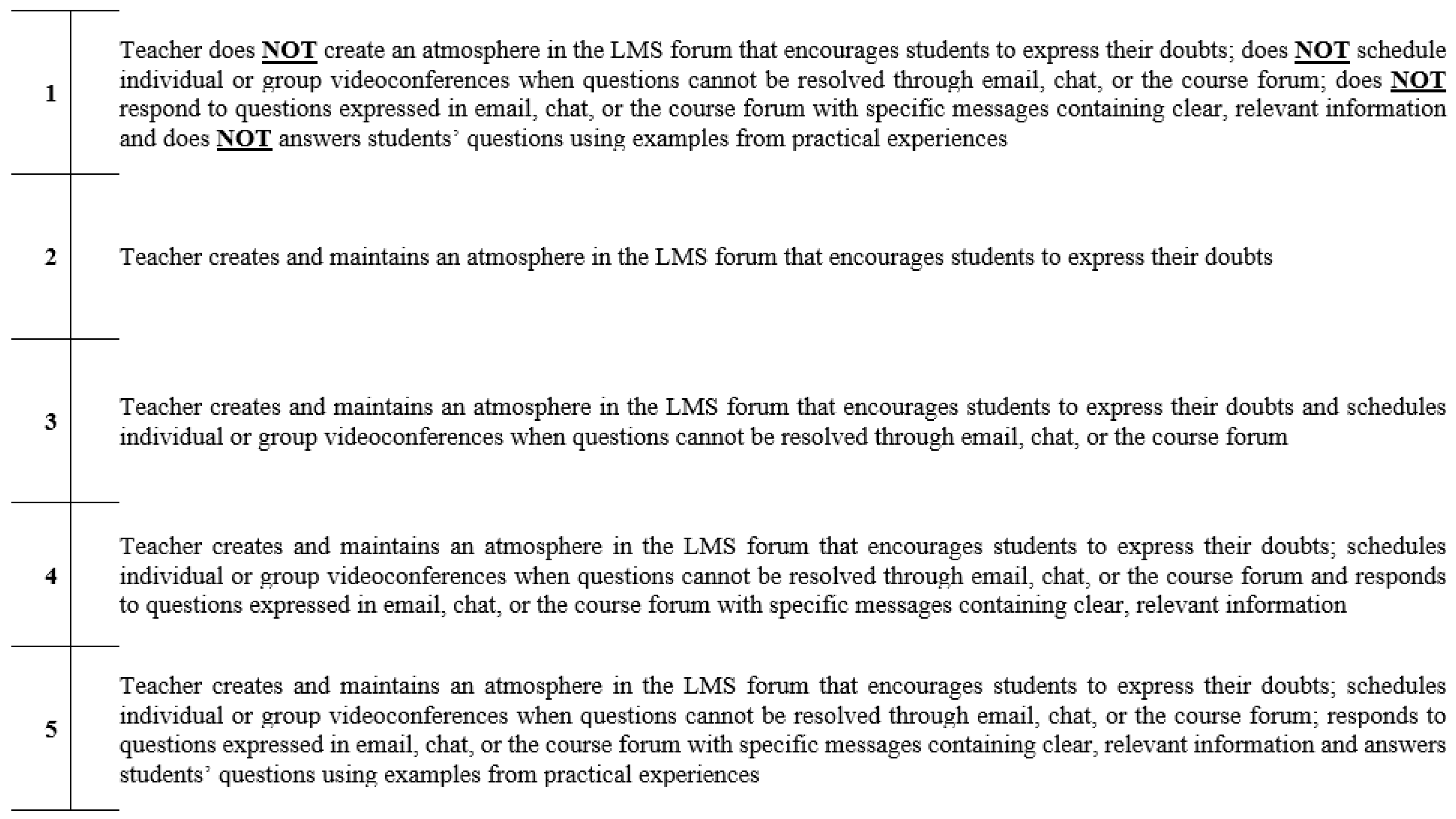

Appendix A.7. Dealing with Doubts

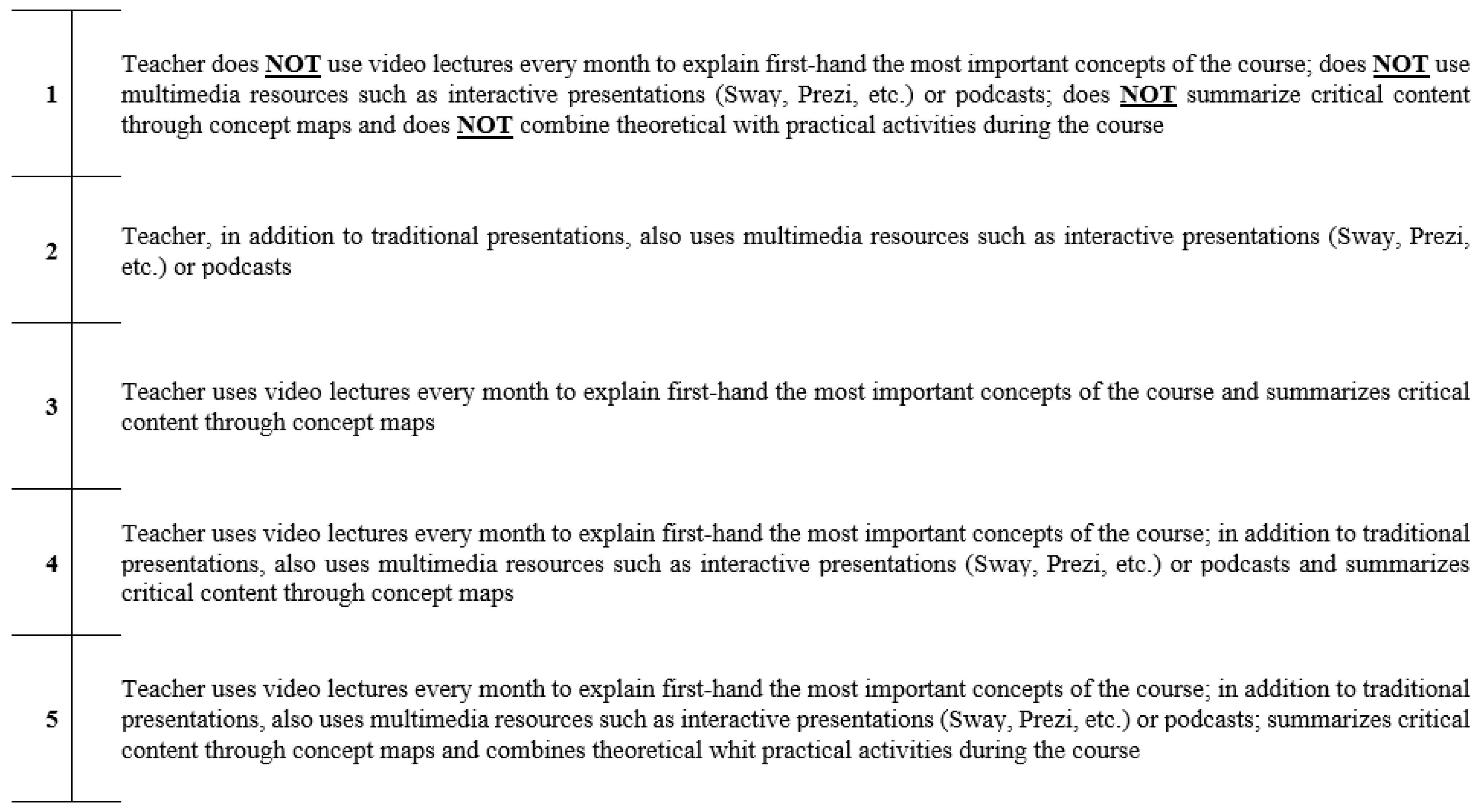

Appendix A.8. Explicative Capacity

Appendix A.9. Follow-Up Easiness

Appendix A.10. General Satisfaction

| Item | Asymmetry | Kurtosis | Corrected Item-Total Correlation |
|---|---|---|---|
| Course introduction | −0.441 | −0.424 | 0.754 |
| Evaluation system description | −0.245 | −0.232 | 0.793 |
| Time management | −0.062 | −0.730 | 0.874 |
| General availability | 0.187 | −0.433 | 0.833 |
| Organizational consistency | 0.054 | −0.627 | 0.830 |
| Evaluation system implementation | −0.145 | −0.465 | 0.718 |
| Dealing with doubts | 0.236 | −0.594 | 0.874 |
| Explicative capacity | 0.435 | −0.276 | 0.830 |
| Follow-up easiness | 0.161 | −0.559 | 0.791 |
| General satisfaction | 0.393 | −0.571 | 0.797 |

| Item | Factor 1 | Factor 2 |
|---|---|---|
| General satisfaction | 0.828 | |
| Follow-up easiness | 0.799 | |
| Dealing with doubts | 0.785 | |
| General availability | 0.737 | |
| Explicative capacity | 0.736 | |
| Time management | 0.701 | |
| Evaluation system implementation | | 0.783 |
| Course introduction | | 0.775 |
| Evaluation system description | | 0.769 |
| Organizational consistency | | 0.659 |

| Item | | Factor | Regression Coefficient: Estimate | SE | CR | Standardized Coefficient: Estimate |
|---|---|---|---|---|---|---|
| General satisfaction | <=> | Teacher’s Aptitude and Attitude | 0.908 | 0.04 | 23.82 | 0.831 |
| Follow-up easiness | <=> | Teacher’s Aptitude and Attitude | 0.863 | 0.04 | 23.62 | 0.827 |
| Dealing with doubts | <=> | Teacher’s Aptitude and Attitude | 0.983 | 0.03 | 29.05 | 0.906 |
| General availability | <=> | Teacher’s Aptitude and Attitude | 0.858 | 0.03 | 25.02 | 0.850 |
| Explicative capacity | <=> | Teacher’s Aptitude and Attitude | 0.867 | 0.04 | 25.06 | 0.851 |
| Time management | <=> | Teacher’s Aptitude and Attitude | 1 | | | 0.899 |
| Evaluation system implementation | <=> | Structure and Evaluation | 0.786 | 0.04 | 18.79 | 0.759 |
| Course introduction | <=> | Structure and Evaluation | 0.879 | 0.04 | 2.22 | 0.795 |
| Evaluation system description | <=> | Structure and Evaluation | 0.825 | 0.04 | 21.93 | 0.833 |
| Organizational consistency | <=> | Structure and Evaluation | 1 | | | 0.866 |
| Teacher’s Aptitude and Attitude | <=> | Structure and Evaluation | 1.999 | 0.16 | 12.27 | 0.947 |

| Indicator | Usual Threshold | Value Obtained |
|---|---|---|
| χ²/df (Ratio of chi-squared to degrees of freedom) | <3.00 | 2.091 |
| CFI (Comparative fit index) | >0.90 | 0.940 |
| GFI (Goodness of fit index) | >0.90 | 0.920 |
| AGFI (Adjusted goodness of fit index) | >0.90 | 0.902 |
| RMSEA (Root mean square error of approximation) | <0.05 | 0.042 |
| SRMR (Standardized root mean square residual) | <0.08 | 0.027 |
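The comparison in the fit-index table can be expressed as a short check. This is a minimal sketch: the thresholds and obtained values are copied from the table, while the names `FIT_INDICES`, `meets_threshold`, and `fit_results` are hypothetical and introduced only for illustration.

```python
# Each entry: indicator -> (comparison direction, usual threshold, value obtained).
# Numbers are copied from the table above; names are illustrative.
FIT_INDICES = {
    "chi2/df": ("<", 3.00, 2.091),
    "CFI": (">", 0.90, 0.940),
    "GFI": (">", 0.90, 0.920),
    "AGFI": (">", 0.90, 0.902),
    "RMSEA": ("<", 0.05, 0.042),
    "SRMR": ("<", 0.08, 0.027),
}


def meets_threshold(direction: str, threshold: float, value: float) -> bool:
    """Return True when the value lies on the acceptable side of the threshold."""
    if direction == "<":
        return value < threshold
    if direction == ">":
        return value > threshold
    raise ValueError(f"Unknown comparison direction: {direction!r}")


# Evaluate every indicator against its usual threshold.
fit_results = {
    name: meets_threshold(direction, threshold, value)
    for name, (direction, threshold, value) in FIT_INDICES.items()
}

for name, ok in fit_results.items():
    print(f"{name}: {'within threshold' if ok else 'outside threshold'}")
```

All six indicators in the table fall on the acceptable side of their usual thresholds.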

| Factor | Cronbach’s Alpha | AVE | Composite Reliability |
|---|---|---|---|
| Teacher’s Aptitude and Attitude | 0.945 | 0.586 | 0.894 |
| Structure and Evaluation | 0.886 | 0.560 | 0.835 |
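The AVE and composite reliability columns can be illustrated with the standard formulas: AVE as the mean of the squared loadings, and composite reliability as (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The sketch below is an assumption-laden illustration, not the authors' stated procedure: it assumes the loadings from the factor-loading table are the inputs, and the names `LOADINGS`, `average_variance_extracted`, and `composite_reliability` are hypothetical.

```python
# Loadings copied from the factor-loading table, grouped by factor.
# Assumption: these loadings are the inputs to AVE and composite reliability.
LOADINGS = {
    "Teacher's Aptitude and Attitude": [0.828, 0.799, 0.785, 0.737, 0.736, 0.701],
    "Structure and Evaluation": [0.783, 0.775, 0.769, 0.659],
}


def average_variance_extracted(loadings):
    """Mean of the squared loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """(sum of loadings)^2 divided by itself plus the summed error variances."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_variance)


for factor, lams in LOADINGS.items():
    ave = average_variance_extracted(lams)
    cr = composite_reliability(lams)
    print(f"{factor}: AVE = {ave:.3f}, CR = {cr:.3f}")
```

Under this assumption, the computed values round to 0.586 and 0.894 for Teacher's Aptitude and Attitude and to 0.560 and 0.835 for Structure and Evaluation, which agrees with the table.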

| Item | Mean (Values from 1 to 5) | SD |
|---|---|---|
| Teacher’s Aptitude and Attitude | | |
| General satisfaction | 3.01 | 1.279 |
| Follow-up easiness | 3.11 | 1.395 |
| Dealing with doubts | 2.75 | 1.248 |
| General availability | 2.98 | 1.173 |
| Explicative capacity | 2.79 | 1.279 |
| Time management | 3.14 | 1.189 |
| Structure and Evaluation | | |
| Evaluation system implementation | 3.53 | 1.022 |
| Course introduction | 3.48 | 1.089 |
| Evaluation system description | 3.39 | 1.072 |
| Organizational consistency | 3.55 | 1.101 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Matosas-López, L.; Cuevas-Molano, E. Assessing Teaching Effectiveness in Blended Learning Methodologies: Validity and Reliability of an Instrument with Behavioral Anchored Rating Scales. Behav. Sci. 2022, 12, 394. https://doi.org/10.3390/bs12100394