Mapping the Featural and Holistic Face Processing of Bad and Good Face Recognizers

Abstract

1. Introduction

2. Material and Methods

2.1. Participants

2.1.1. Participant Selection: IFA-Q and CFMT

The Italian Face Ability Questionnaire (IFA-Q)

Cambridge Face Memory Test (CFMT)

2.2. Stimuli

2.3. Task

2.4. EEG Data Recording

2.5. EEG Data Analysis

3. Results

3.1. Behavioral Results

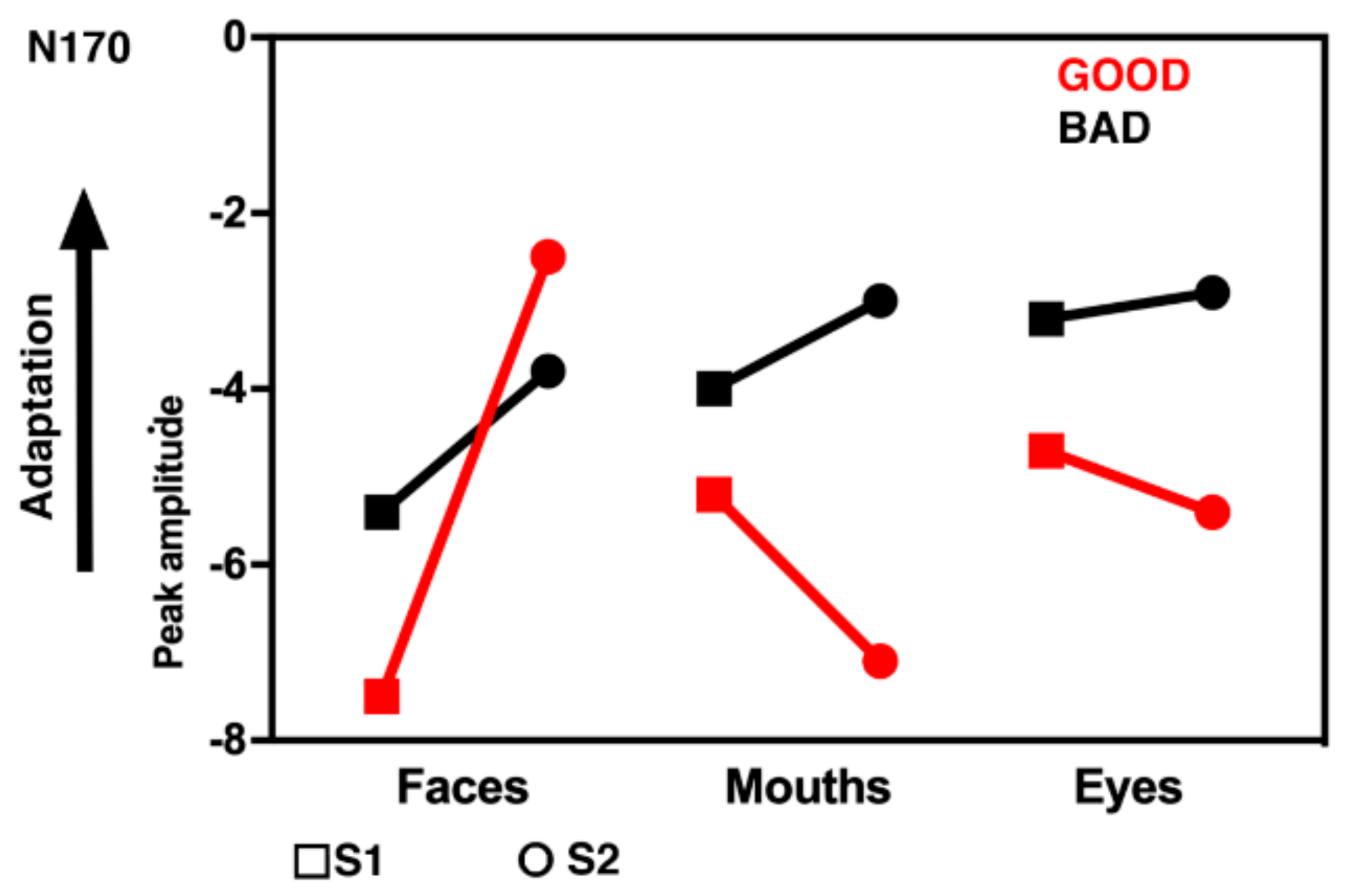

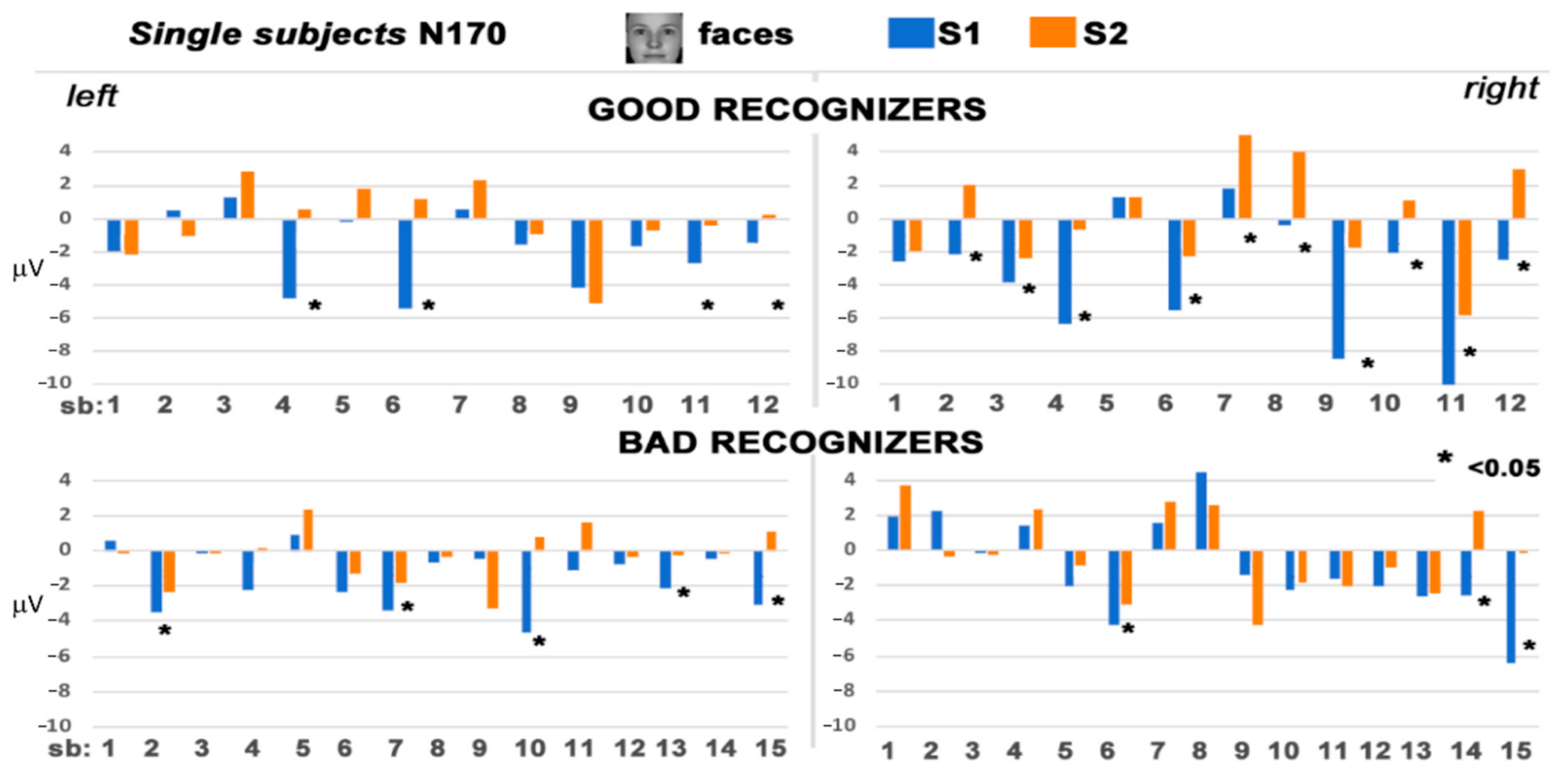

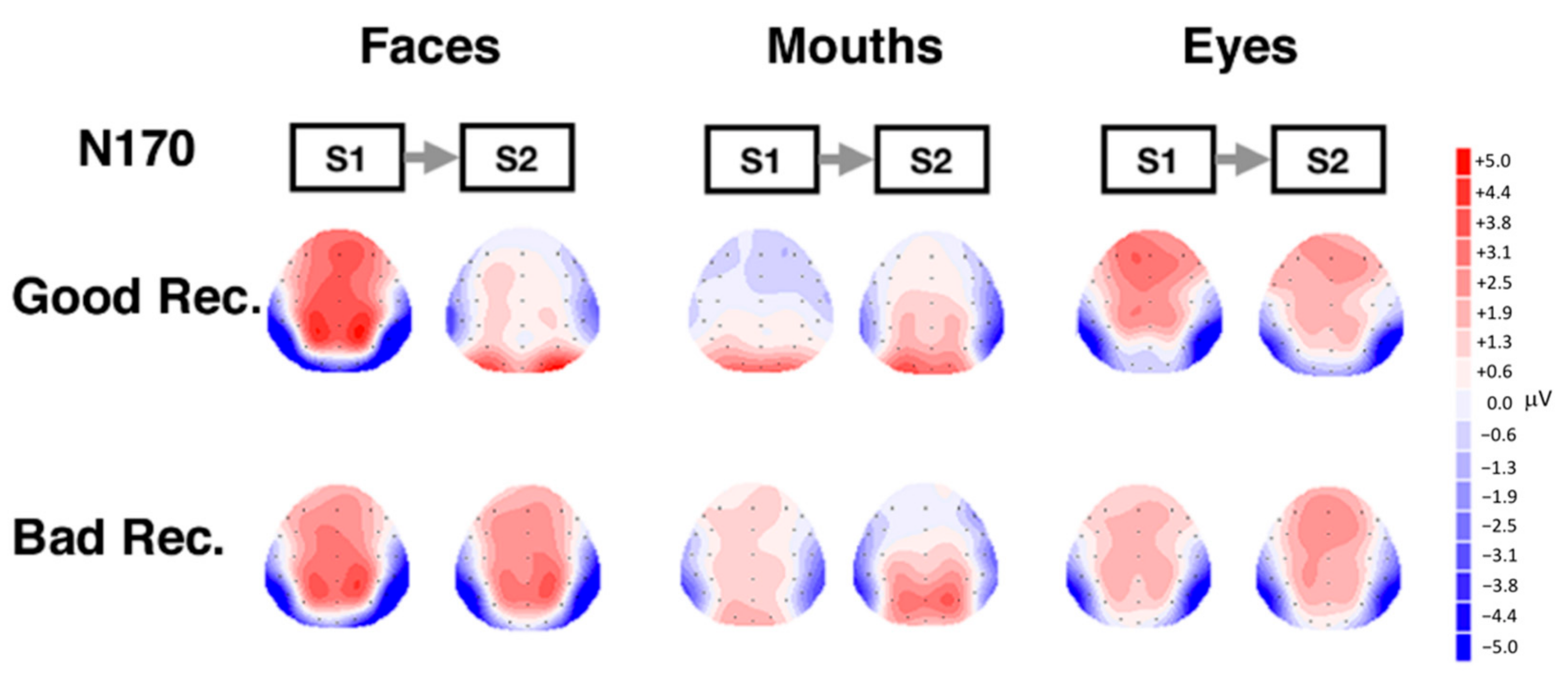

3.2. Electrophysiological Results: Responses to S2 Stimuli

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Marzi, T.; Gronchi, G.; Turano, M.T.; Giovannelli, F.; Giganti, F.; Rebai, M.; Viggiano, M.P. Mapping the Featural and Holistic Face Processing of Bad and Good Face Recognizers. Behav. Sci. 2021, 11, 75. https://doi.org/10.3390/bs11050075
