Assessing Mothers’ Postpartum Depression From Their Infants’ Cry Vocalizations

Abstract

1. Introduction

1.1. Postpartum Depression Identification

1.2. Infant Cry

1.3. Cloud-Based Model

1.4. Aim and Hypothesis

2. Methods

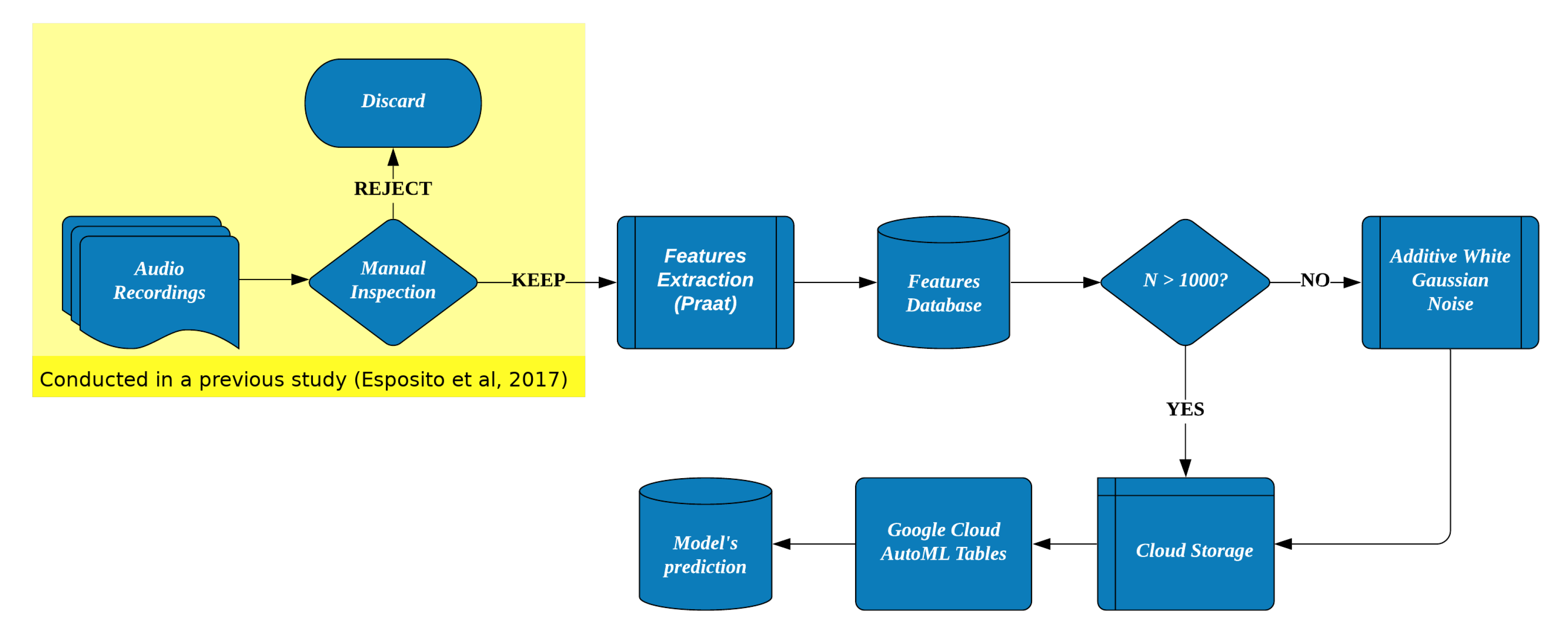

2.1. Analytic Plan

2.2. Data

2.3. Feature Extraction

2.4. Classification

Data Augmentation

3. Results

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PPD | Postpartum Depression |
| CNS | Central Nervous System |
| DSM | Diagnostic and Statistical Manual of Mental Disorders |
| SCID | Structured Clinical Interview for DSM |
| SaaS | Software as a Service |
| SSL | Secure Sockets Layer |
| AWGN | Additive White Gaussian Noise |
| LTAS | Long-Term Average Spectrum |
| AUC PR | Area Under the Curve: Precision-Recall |
| AUC ROC | Area Under the Curve: Receiver Operating Characteristic |

| Metric | Score |
|---|---|
| AUC PR | 0.954 |
| AUC ROC | 0.969 |
| Logarithmic Loss | 0.250 |
| Accuracy | 89.5% |
| Precision | 90.4% |
| True positive rate (Recall) | 88.8% |
| False positive rate | 0.090 |

| True label (rows) / Predicted label (columns) | False | True |
|---|---|---|
| False | 88% | 12% |
| True | 9% | 91% |
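The two tables above report standard binary-classification metrics and a confusion matrix whose rows (true labels) each sum to 100%. As a minimal sketch of how such figures are usually derived, the Python below computes them from true labels and predicted probabilities. It is not the authors' pipeline: the function names, the 0.5 decision threshold and the toy labels and probabilities are hypothetical, chosen only for illustration, and AUC PR is left out for brevity.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical helper names: a sketch of standard metric definitions,
# not the pipeline used in the study. Assumes both classes are present.


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count true/false positives and negatives (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),
    }


def threshold_metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Accuracy, precision, recall (true positive rate) and false positive rate."""
    total = c["tp"] + c["fp"] + c["tn"] + c["fn"]
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        "precision": c["tp"] / (c["tp"] + c["fp"]),
        "recall": c["tp"] / (c["tp"] + c["fn"]),
        "fpr": c["fp"] / (c["fp"] + c["tn"]),
    }


def row_normalized(c: Dict[str, int]) -> List[List[float]]:
    """Confusion matrix as proportions; rows are true labels [False, True]."""
    neg = c["tn"] + c["fp"]
    pos = c["fn"] + c["tp"]
    return [[c["tn"] / neg, c["fp"] / neg],
            [c["fn"] / pos, c["tp"] / pos]]


def log_loss(y_true: Sequence[int], probs: Sequence[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)
        total += t * math.log(p) + (1 - t) * math.log(1 - p)
    return -total / len(y_true)


def roc_auc(y_true: Sequence[int], probs: Sequence[float]) -> float:
    """AUC ROC as the share of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [p for t, p in zip(y_true, probs) if t == 1]
    neg = [p for t, p in zip(y_true, probs) if t == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy, made-up data for illustration only (1 = positive class).
    y_true = [1, 1, 1, 0, 0, 0]
    probs = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1]
    y_pred = [1 if p >= 0.5 else 0 for p in probs]  # assumed 0.5 threshold
    counts = confusion_counts(y_true, y_pred)
    print(threshold_metrics(counts))
    print(row_normalized(counts))
    print(round(log_loss(y_true, probs), 3), round(roc_auc(y_true, probs), 3))
```

The pairwise AUC computation is quadratic in the number of samples and is meant only to make the definition explicit; for larger sets, a library routine such as scikit-learn's `roc_auc_score` is the practical choice.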

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gabrieli, G.; Bornstein, M.H.; Manian, N.; Esposito, G. Assessing Mothers’ Postpartum Depression From Their Infants’ Cry Vocalizations. Behav. Sci. 2020, 10, 55. https://doi.org/10.3390/bs10020055
