An Improved Single Shot Multibox Detector Method Applied in Body Condition Score for Dairy Cows

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

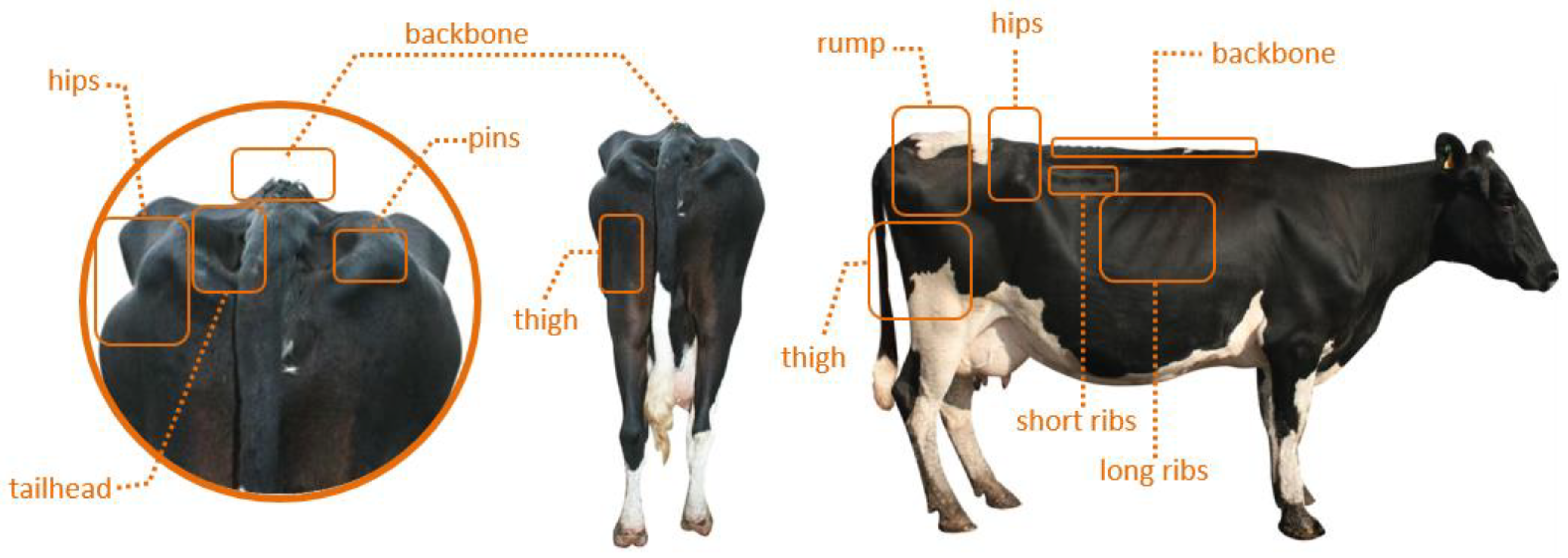

2.1. Principle of BCS Assessing

2.2. Data Collection Platform

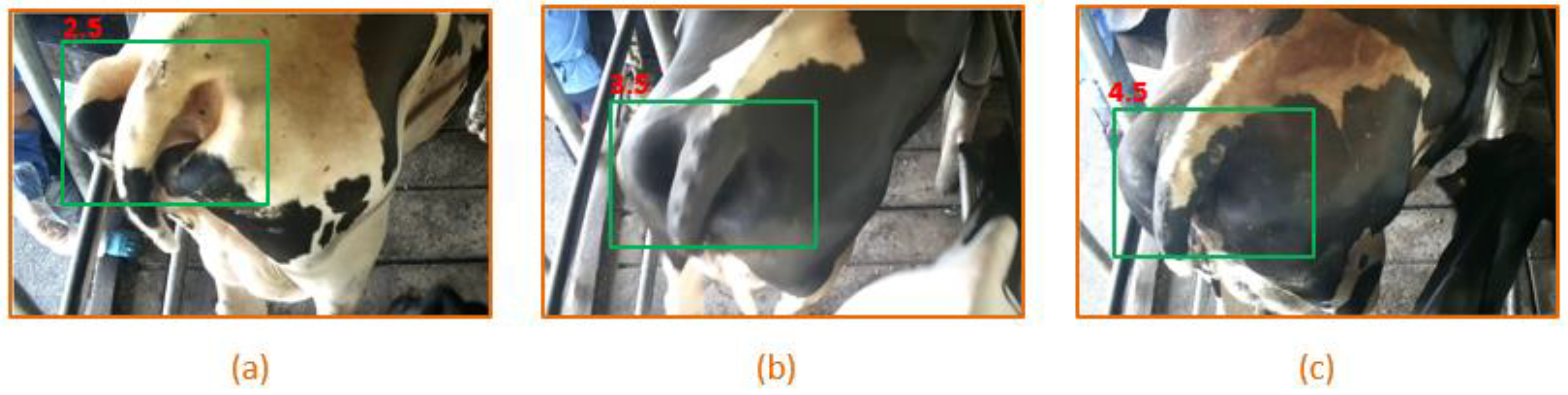

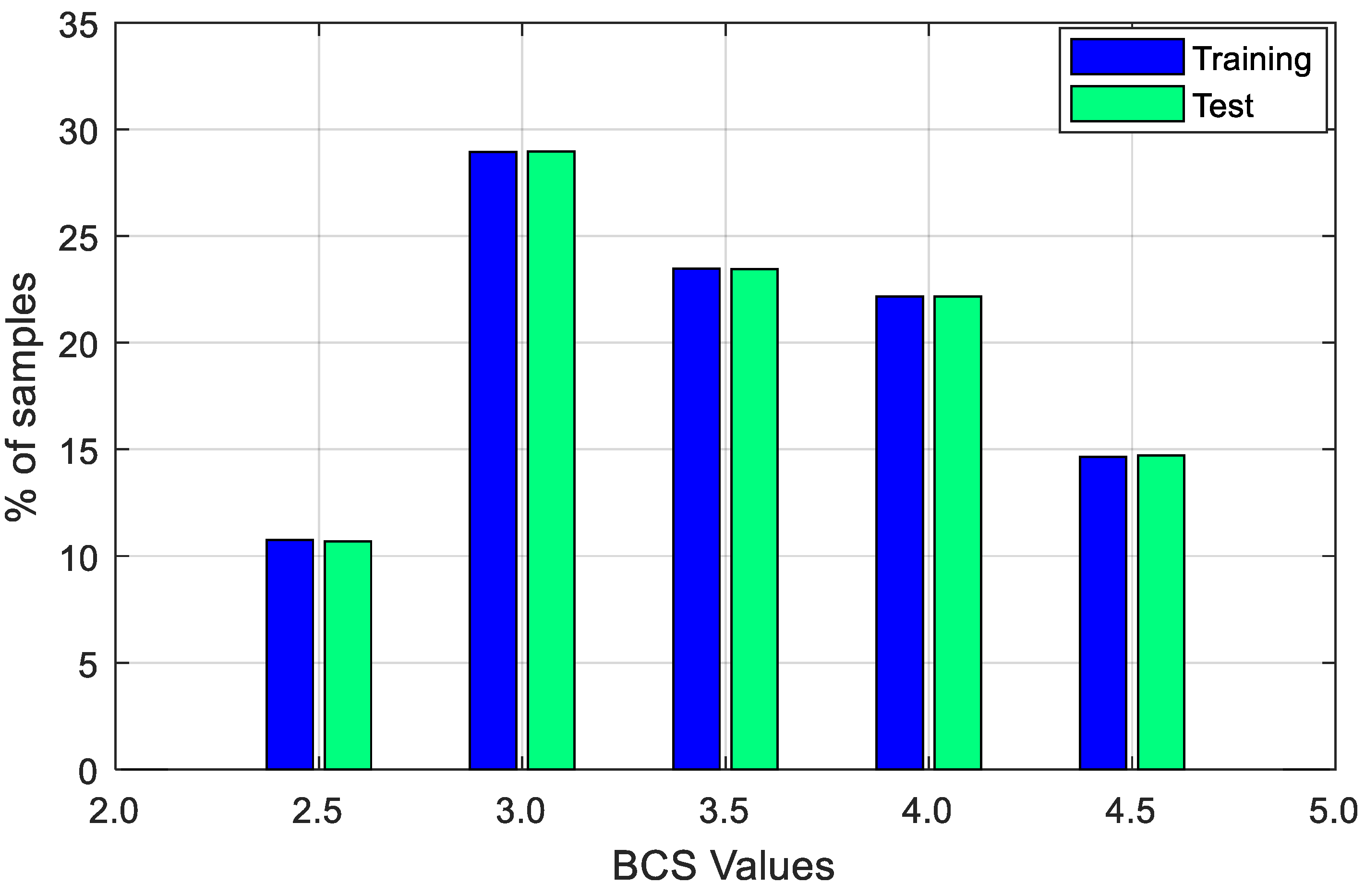

2.3. Image Samples and Dataset

2.4. BCS Estimation Models

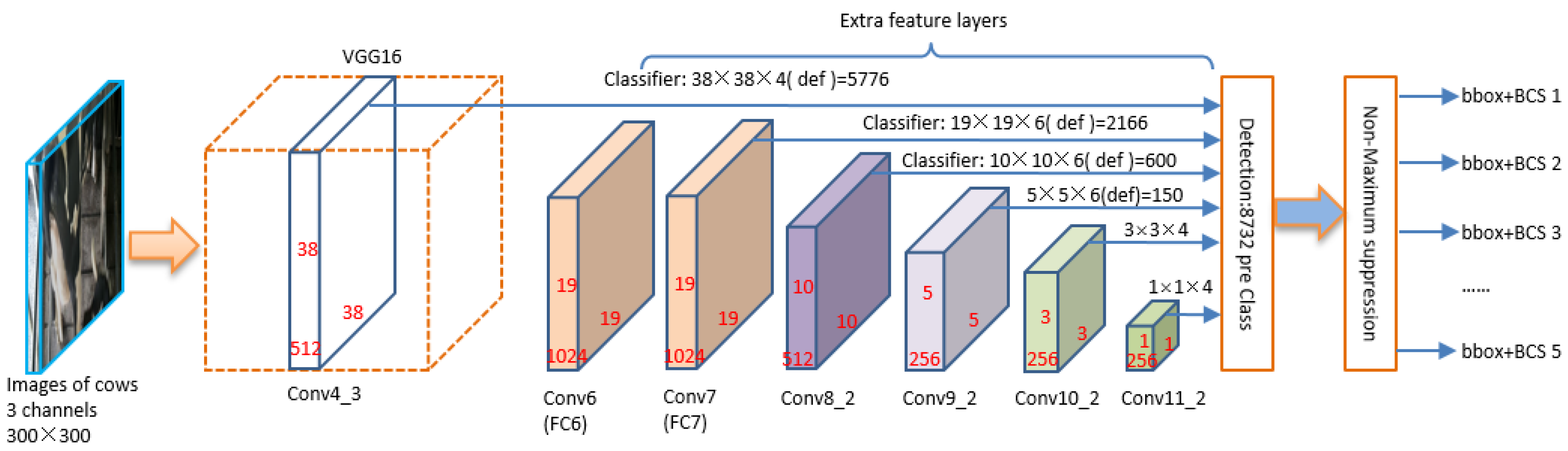

2.4.1. Original SSD Model for BCS Assessing

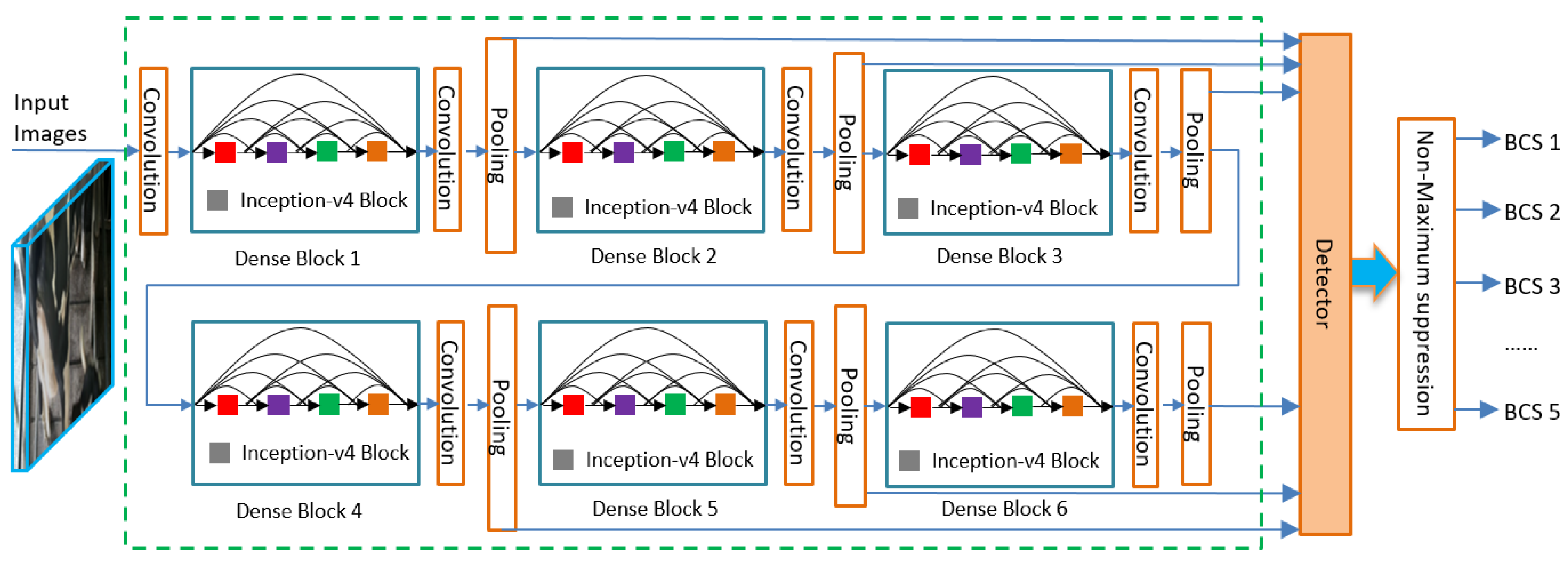

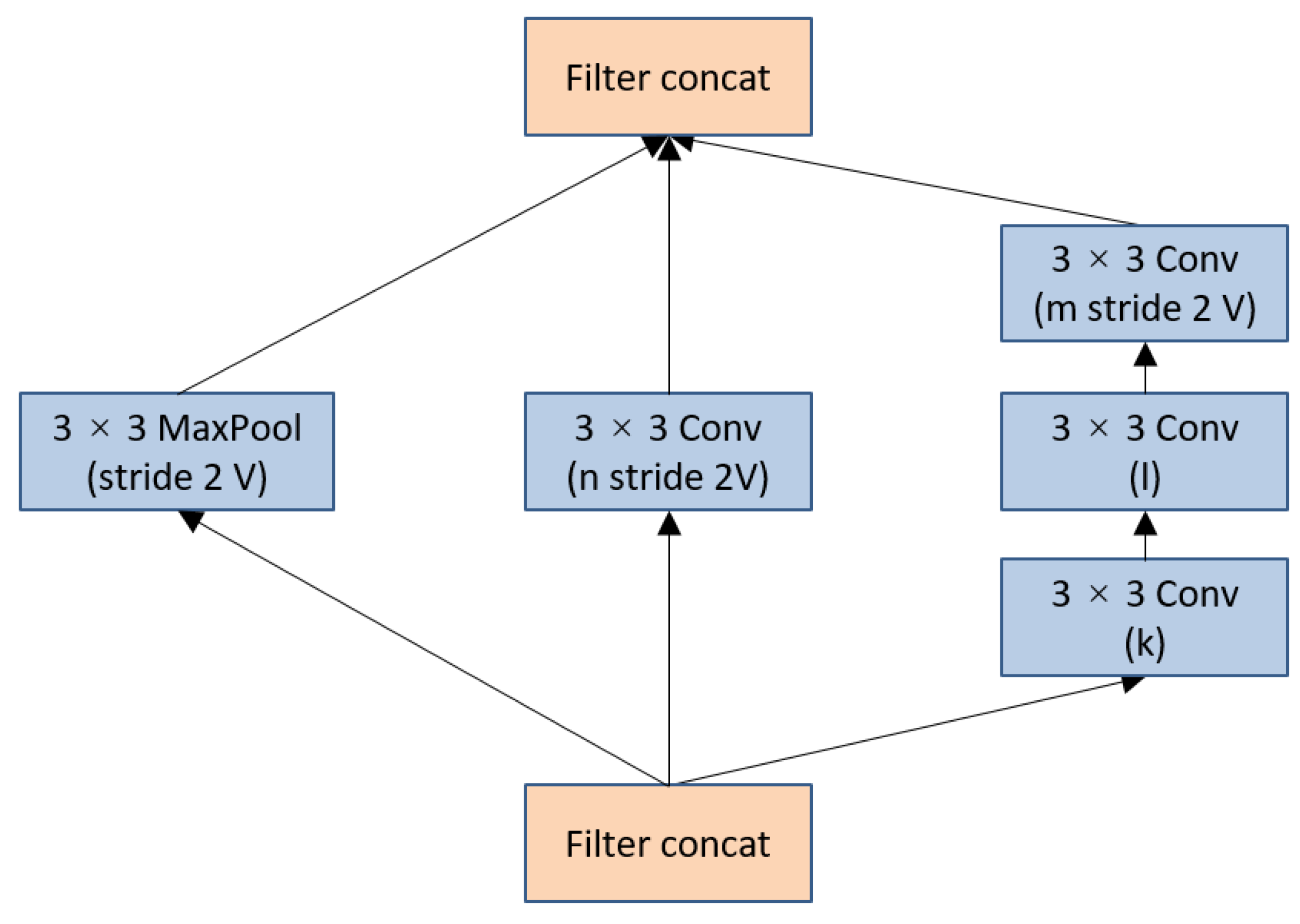

2.4.2. The Improved SSD Model

2.5. Performance Evaluation

- Intersection over Union (IoU): it evaluates the overlap between two bounding boxes. It requires a ground truth bounding box (the labeled rectangle, as shown in Figure 3) and a predicted bounding box (the rectangle predicted by the algorithm).

- Mean IoU: it is the mean value of IoU over all the test sample images.

- Classification Accuracy (CA): it represents the effectiveness of a classifier. Its calculation is given in Equation (4), where TP is the number of true positives, TN is true negatives, FP is false positives, and FN is false negatives.

- Mean Average Precision (mAP): it measures the mean accuracy over all N classes (N is the total number of classes), in contrast to AP, which is computed for a single class.

- Confusion Matrix: creating a confusion matrix is common practice for assessing the accuracy of an image classification. It identifies the nature of the classification errors, as well as their quantities.

- Frames Per Second (FPS): it is a speed measure of how many image frames the network model processes per second. Seconds per frame (SPF), its reciprocal, is another speed metric for evaluating the performance of the models.

- Model Size: it is the storage space (in MB) occupied by the validated model after training. This parameter is important when the network model is deployed on an embedded system. The smaller the model is, the lower the system cost.
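The IoU and mean IoU metrics listed above can be illustrated with a minimal sketch. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; that coordinate convention and the function names `iou` and `mean_iou` are illustrative choices, not taken from the paper.

```python
from typing import Iterable, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; degenerate boxes count as zero."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(ground_truth: Box, predicted: Box) -> float:
    """Intersection over Union of a labeled box and a predicted box."""
    # Corners of the overlapping rectangle (if any).
    ix_min = max(ground_truth[0], predicted[0])
    iy_min = max(ground_truth[1], predicted[1])
    ix_max = min(ground_truth[2], predicted[2])
    iy_max = min(ground_truth[3], predicted[3])

    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    union = box_area(ground_truth) + box_area(predicted) - intersection
    return intersection / union if union > 0 else 0.0


def mean_iou(pairs: Iterable[Tuple[Box, Box]]) -> float:
    """Mean IoU over (ground truth, prediction) pairs, one pair per test image."""
    scores = [iou(gt, pred) for gt, pred in pairs]
    return sum(scores) / len(scores) if scores else 0.0
```

For example, two 2 × 2 boxes offset by one pixel in each direction share an area of 1 out of a union of 7, so `iou((0, 0, 2, 2), (1, 1, 3, 3))` evaluates to 1/7.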

3. Training

4. Results and Analysis

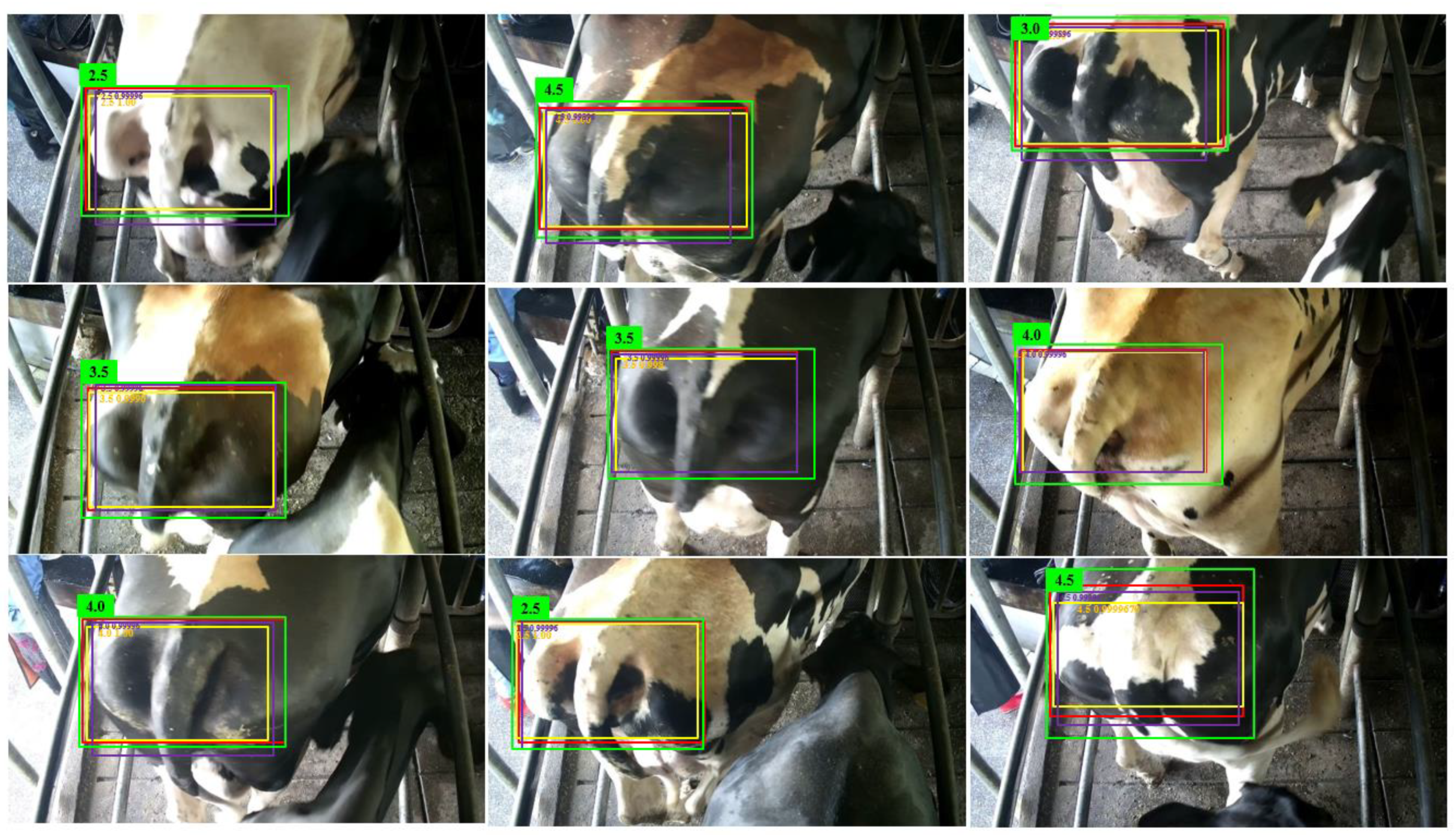

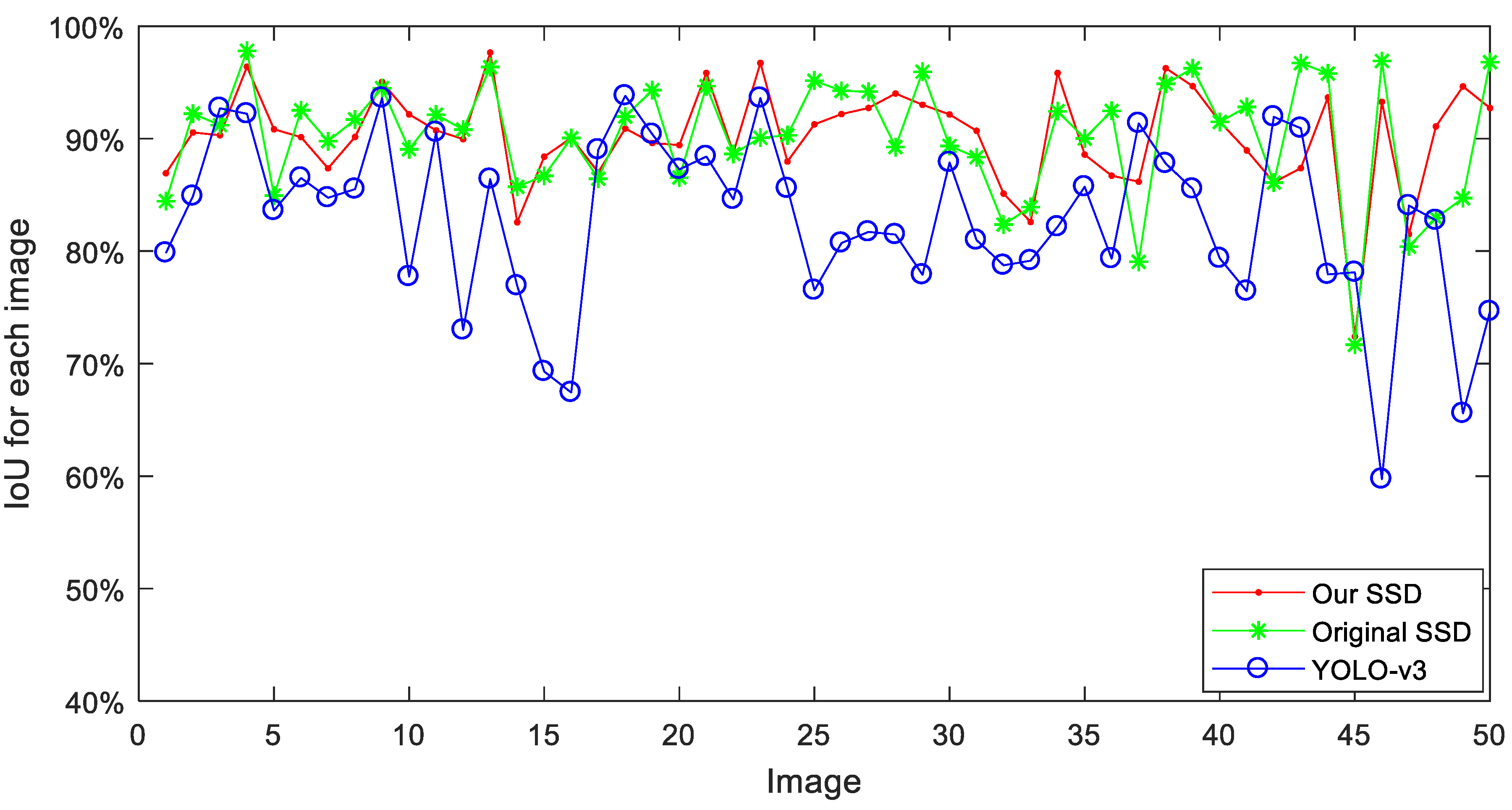

4.1. Position Results and Comparison

4.2. BCS Assessing Results and Comparison

4.3. Running Speed and Model Size Analysis

5. Discussion

5.1. Why Are the Detection Totals Different from the Test Data Set?

5.2. Can Veterinarians Score on Images?

5.3. More Training Data vs. More Accurate Data

5.4. Future Work

- Because unhealthy or over-fed dairy cows were lacking, samples with BCS below 2.5 and with BCS 5 are missing. The dataset needs to be extended so that the model covers the complete 5-point BCS scale.

- Speed and accuracy still need further improvement, especially for BCS from 3.0 to 4.0. Cows in this range have similar body shapes and are easily confused when experts score the image samples. If more veterinary experts joined the scoring work to reduce subjective error, the CA of each BCS level might increase. Although the CA of our SSD is 98.46%, it can still be improved slightly. An effective way to do so is to run the monitoring system over a long period and expand the image sample size.

- Because individual identification is unresolved, the BCS of an individual cow cannot be retrieved from the database. To link each BCS to the ID of a cow, combining the camera with wearable sensors, such as a pedometer or an electronic ear tag, may be a good solution in the future.

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| BCS Value | Training Images | Test Images | Total Images |
|---|---|---|---|
| 2.5 | 865 | 99 | 964 |
| 3.0 | 2343 | 254 | 2597 |
| 3.5 | 1894 | 212 | 2106 |
| 4.0 | 1782 | 207 | 1989 |
| 4.5 | 1190 | 126 | 1316 |
| Total | 8074 | 898 | 8972 |

| Layers | Structure | Output Shape |
|---|---|---|
| Input | 448 × 448 | 448 × 448 |
| Dense Block (1) | Inception block × 6 | 224 × 224 × 32 |
| Transition Layer (1) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Dense Block (2) | Inception block × 6 | 112 × 112 × 64 |
| Transition Layer (2) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Dense Block (3) | Inception block × 6 | 56 × 56 × 128 |
| Transition Layer (3) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Dense Block (4) | Inception block × 6 | 28 × 28 × 128 |
| Transition Layer (4) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Dense Block (5) | Inception block × 6 | 14 × 14 × 128 |
| Transition Layer (5) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Dense Block (6) | Inception block × 6 | 7 × 7 × 128 |
| Transition Layer (6) | 1 × 1 conv; 2 × 2 max pool, stride 1 | |
| Prediction Layers | Plain/Dense | – |

| Our SSD | Original SSD | YOLO-v3 |
|---|---|---|
| 89.63% | 90.55% | 83.64% |

| BCS | YOLO-v3 | Original SSD | Our SSD |
|---|---|---|---|
| 2.5 | 98.99% | 98.99% | 99.0% |
| 3.0 | 90.49% | 99.60% | 98.83% |
| 3.5 | 87.75% | 98.58% | 98.14% |
| 4.0 | 79.37% | 98.56% | 97.67% |
| 4.5 | 99.10% | 100% | 99.21% |
| mAP | 88.84% | 99.10% | 98.46% |

| Algorithm | BCS | 2.5 | 3.0 | 3.5 | 4.0 | 4.5 |
|---|---|---|---|---|---|---|
| Our SSD | 2.5 | 99 | 0 | 0 | 0 | 0 |
| | 3.0 | 1 | 254 | 0 | 0 | 0 |
| | 3.5 | 0 | 1 | 211 | 5 | 0 |
| | 4.0 | 0 | 2 | 4 | 205 | 1 |
| | 4.5 | 0 | 0 | 0 | 0 | 126 |
| Original SSD | 2.5 | 98 | 1 | 0 | 0 | 0 |
| | 3.0 | 1 | 252 | 1 | 0 | 0 |
| | 3.5 | 0 | 0 | 209 | 3 | 0 |
| | 4.0 | 0 | 0 | 2 | 205 | 0 |
| | 4.5 | 0 | 0 | 0 | 0 | 126 |
| YOLO-v3 | 2.5 | 98 | 3 | 0 | 0 | 0 |
| | 3.0 | 0 | 257 | 11 | 1 | 0 |
| | 3.5 | 1 | 24 | 179 | 30 | 0 |
| | 4.0 | 0 | 0 | 12 | 200 | 1 |
| | 4.5 | 0 | 0 | 2 | 21 | 110 |
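The overall accuracy of each model can be recomputed from the confusion matrices above. The sketch assumes the standard definition of accuracy, CA = (TP + TN) / (TP + TN + FP + FN), which for a multi-class confusion matrix reduces to the diagonal sum divided by the sum of all cells; the original Equation (4) is presumed to have this form. The matrices are copied from the table, and the function name `overall_accuracy` is a hypothetical one.

```python
from typing import List

Matrix = List[List[int]]


def overall_accuracy(matrix: Matrix) -> float:
    """Correct detections (the diagonal) divided by all detections."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Confusion matrices copied from the table; BCS classes 2.5, 3.0, 3.5, 4.0, 4.5.
OUR_SSD = [
    [99, 0, 0, 0, 0],
    [1, 254, 0, 0, 0],
    [0, 1, 211, 5, 0],
    [0, 2, 4, 205, 1],
    [0, 0, 0, 0, 126],
]
YOLO_V3 = [
    [98, 3, 0, 0, 0],
    [0, 257, 11, 1, 0],
    [1, 24, 179, 30, 0],
    [0, 0, 12, 200, 1],
    [0, 0, 2, 21, 110],
]

for name, matrix in (("Our SSD", OUR_SSD), ("YOLO-v3", YOLO_V3)):
    detections = sum(sum(row) for row in matrix)
    print(f"{name}: {detections} detections, "
          f"accuracy {100 * overall_accuracy(matrix):.2f}%")
```

Under this assumption the sketch gives 895 correct out of 909 detections (98.46%) for our SSD and 844 out of 950 (88.84%) for YOLO-v3, which agrees with the reported summary row. Both detection totals exceed the 898 test images, the discrepancy that Section 5.1 addresses.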

| Performance | Our SSD | Original SSD | YOLO-v3 |
|---|---|---|---|
| Running speed | 115 FPS | 39 FPS | 17 FPS |
| Model size | 23.1 MB | 97.1 MB | 246 MB |
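FPS and SPF are reciprocals, so the running speeds in the table convert directly into per-frame latency. The following sketch shows the conversion together with one simple way to time a model; `measure_fps` and its `run_model` argument are hypothetical stand-ins for whatever inference call is being benchmarked, not the authors' measurement procedure.

```python
import time
from typing import Callable, Iterable


def seconds_per_frame(fps: float) -> float:
    """SPF is the reciprocal of FPS."""
    return 1.0 / fps


def measure_fps(run_model: Callable[[object], object],
                frames: Iterable[object]) -> float:
    """Average frames per second of `run_model` over the given frames."""
    frame_list = list(frames)  # materialize first so loading is not timed
    start = time.perf_counter()
    for frame in frame_list:
        run_model(frame)
    elapsed = time.perf_counter() - start
    return len(frame_list) / elapsed if elapsed > 0 else float("inf")


# Running speeds copied from the table.
REPORTED_FPS = {"Our SSD": 115, "Original SSD": 39, "YOLO-v3": 17}

for name, fps in REPORTED_FPS.items():
    print(f"{name}: {1000 * seconds_per_frame(fps):.1f} ms per frame")
```

With the reported speeds this gives roughly 8.7 ms per frame for our SSD, 25.6 ms for the original SSD, and 58.8 ms for YOLO-v3.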

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Huang, X.; Hu, Z.; Wang, X.; Yang, X.; Zhang, J.; Shi, D. An Improved Single Shot Multibox Detector Method Applied in Body Condition Score for Dairy Cows. Animals 2019, 9, 470. https://doi.org/10.3390/ani9070470
