A Lightweight Model for Small-Target Pig Eye Detection in Automated Estrus Recognition

Simple Summary

Abstract

1. Introduction

- (1) A comprehensive analysis of porcine eye features across different estrus stages, leading to the establishment of a dataset encompassing eye images of sows in pre-estrus, mid-estrus, and post-estrus phases;

- (2) Algorithmic improvements, including the introduction of the MSCA module to enhance small-object detection efficiency, the PPA and GAM modules to strengthen feature extraction capabilities, and the adaptive threshold focal loss (ATFL) function to improve model focus on hard-to-classify samples;

- (3) A comparative analysis of ECA–YOLO against YOLOv5n, YOLOv7tiny, YOLOv8n, YOLOv10n, YOLOv11n, and Faster R-CNN using the estrus sow eye dataset.

2. Materials and Methods

2.1. Materials

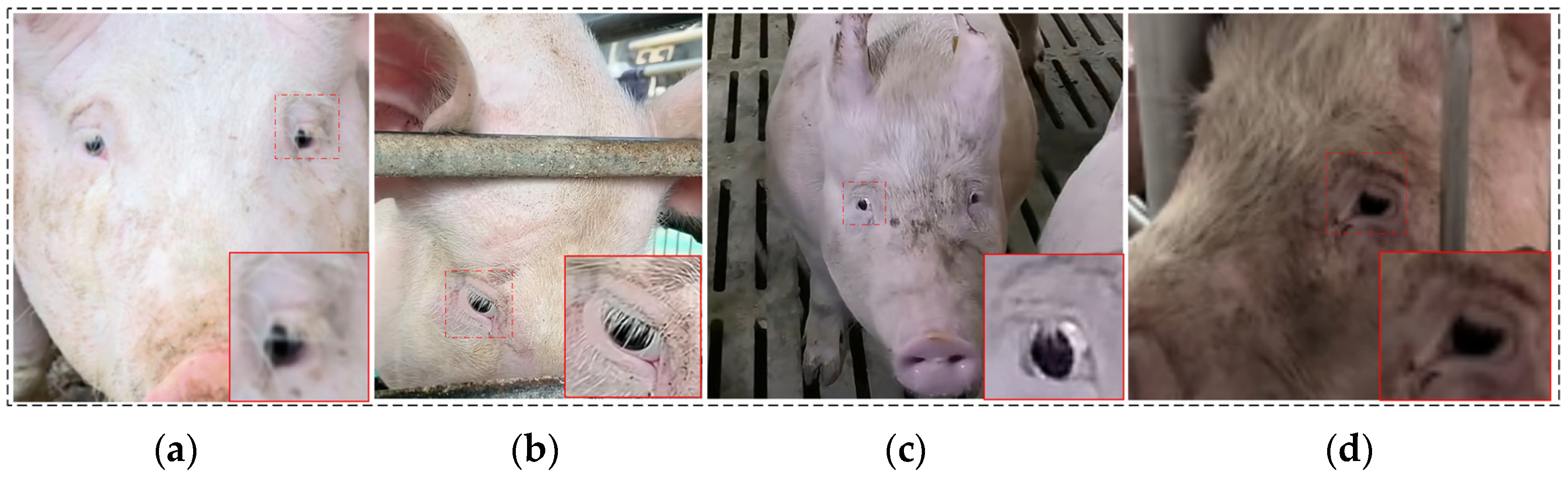

2.1.1. Data Collection

2.1.2. Data Set Construction

2.2. Methods

2.2.1. YOLOv11

2.2.2. MSCA Module

2.2.3. PPA Module

2.2.4. GAM Module

2.2.5. Adaptive Threshold Focal Loss (ATFL)

2.3. Experimental Platform

2.4. Evaluation Metrics

- (1) Accuracy
- (2) Precision
- (3) Recall
- (4) F1-Score
- (5) GFLOPs
- (6) Parameters
- (7) Detection Speed
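The accuracy-related metrics listed above follow their standard object-detection definitions. The sketch below is a minimal illustration, not the authors' evaluation code: it computes precision, recall, and F1 from true-positive, false-positive, and false-negative counts, and average precision (AP) as the area under an all-point interpolated precision-recall curve. The all-point (Pascal VOC 2010+ style) interpolation and the function names are assumptions; this section does not state the interpolation scheme or the IoU threshold used for matching.

```python
import numpy as np


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), F1 = their harmonic mean."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


def average_precision(recall, precision) -> float:
    """All-point interpolated AP for one class.

    `recall` and `precision` are cumulative values obtained after sorting the
    detections by descending confidence (recall must be non-decreasing).
    """
    r = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    p = np.concatenate(([0.0], np.asarray(precision, dtype=float), [0.0]))
    # Precision envelope: make precision non-increasing as recall grows.
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas at every point where recall changes.
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))
```

For instance, `f1_from_pr(0.9116, 0.9020)` returns about 0.9068, consistent (up to rounding of the inputs) with the 90.67% F1-score reported in the validation table. mAP is then the mean of `average_precision` over the four estrus classes.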

3. Results

3.1. Ablation Experiment

3.2. ECA–YOLO Training Results

3.3. Comparison of Similar Models

4. Model Verification and Discussion

4.1. Model Validation

4.1.1. Validation Data Collection

4.1.2. Validation Experiment Procedure

4.2. Discussion

4.2.1. Analysis of Model Limitations

4.2.2. Discussion of the Impact of Confidence Thresholds on ECA–YOLO Performance

5. Conclusions

- (1) The study investigates ocular appearance variations across estrus stages and establishes a dataset of sow eye images covering the pre-estrus, estrus, and post-estrus periods. On validation, ECA–YOLO achieves a mean average precision (mAP) of 93.2% and an F1-score of 88.0% with 5.31 M parameters, running at 75.53 frames per second (FPS).

- (2) Experimental results indicate significant phenotypic changes in the eye region across estrus stages, confirming that ocular features can serve as reliable indicators for estrus detection.

- (3) Compared with YOLOv5n, YOLOv7tiny, YOLOv8n, YOLOv10n, YOLOv11n, and Faster R-CNN, ECA–YOLO achieves the highest detection accuracy (mAP 0.932, F1-score 0.88) while keeping inference fast: its 6.62 ms per frame is second only to the 6.20 ms of the YOLOv11n baseline.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Raw Images | Total Raw Images | Expansion Method | Expanded Images | Total Expanded Images |
|---|---|---|---|---|---|
| Non-estrus | 382 | 1487 | 1. Brightness increase; 2. Image flip; 3. Rotation | 1146 | 4461 |
| Pre-estrus | 369 | | | 1107 | |
| Mid-estrus | 371 | | | 1113 | |
| Late-estrus | 365 | | | 1095 | |
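In the table, each expanded count is exactly three times the raw count (e.g., 382 × 3 = 1146 and 1487 × 3 = 4461), i.e., one new image per expansion method. A minimal NumPy sketch of such an expansion is given below. The brightness offset (+40) and the 90° counter-clockwise rotation are assumptions, since the exact parameters are not stated here, and the label handling assumes YOLO-format boxes (class, normalized centre x/y, width, height). For detection data, geometric transforms must move the boxes together with the pixels.

```python
from typing import List, Tuple

import numpy as np

# YOLO-format label: (class_id, cx, cy, w, h), all coordinates normalised to [0, 1].
Box = Tuple[int, float, float, float, float]
Sample = Tuple[np.ndarray, List[Box]]


def brighten(img: np.ndarray, boxes: List[Box], delta: int = 40) -> Sample:
    """Raise brightness by a fixed offset (assumed value); boxes are unchanged."""
    out = np.clip(img.astype(np.int16) + delta, 0, 255).astype(np.uint8)
    return out, list(boxes)


def hflip(img: np.ndarray, boxes: List[Box]) -> Sample:
    """Mirror left-right; only each box's x-centre changes."""
    out = np.ascontiguousarray(img[:, ::-1])
    return out, [(c, 1.0 - cx, cy, w, h) for c, cx, cy, w, h in boxes]


def rotate90_ccw(img: np.ndarray, boxes: List[Box]) -> Sample:
    """Rotate 90 degrees counter-clockwise (assumed angle).

    Pixel (row, col) moves to (W - 1 - col, row), so in normalised coordinates
    (x, y) -> (y, 1 - x) and the box width and height swap.
    """
    out = np.ascontiguousarray(np.rot90(img, k=1))
    return out, [(c, cy, 1.0 - cx, h, w) for c, cx, cy, w, h in boxes]


def expand(img: np.ndarray, boxes: List[Box]) -> List[Sample]:
    """Produce three augmented samples per labelled image, one per method."""
    return [brighten(img, boxes), hflip(img, boxes), rotate90_ccw(img, boxes)]
```

A 90° step keeps boxes exactly axis-aligned; an arbitrary rotation angle would instead require rotating the four box corners and taking their enclosing rectangle.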

| Category | Item | Specification |
|---|---|---|
| Core hardware configuration | Processor | Intel Core i5-12450H |
| | Graphics card | NVIDIA GeForce RTX 3060 Laptop GPU |
| | Memory | 16 GB |
| | Hard disk | 512 GB SSD |
| Core software configuration | Anaconda | Anaconda3 2019.10 (64-bit) |
| | Python | 3.8 |
| | CUDA | 11.2 |
| | PyTorch (torch) | 1.8.0 |
| | TorchVision | 0.9.0 |
| | LabelImg | 1.8.6 |
| | Operating system | Windows 11 (24H2) |

| Model | mAP | F1-Score | Parameters (M) | Detection Speed (ms/frame) |
|---|---|---|---|---|
| YOLOv11n (baseline) | 0.904 | 0.850 | 4.95 | 6.20 |
| YOLOv11n + PPA | 0.915 | 0.860 | 5.05 | 6.33 |
| YOLOv11n + MSCA | 0.910 | 0.860 | 5.02 | 6.31 |
| YOLOv11n + GAM | 0.908 | 0.850 | 5.00 | 6.28 |
| YOLOv11n + ATFL | 0.907 | 0.850 | 4.97 | 6.27 |
| YOLOv11n + PPA + GAM + ATFL | 0.925 | 0.870 | 5.20 | 6.46 |
| YOLOv11n + MSCA + GAM + ATFL | 0.920 | 0.870 | 5.18 | 6.43 |
| YOLOv11n + MSCA + PPA + ATFL | 0.928 | 0.870 | 5.22 | 6.49 |
| YOLOv11n + MSCA + PPA + GAM | 0.930 | 0.870 | 5.25 | 6.55 |
| YOLOv11n + MSCA + PPA + GAM + ATFL (ECA–YOLO) | 0.932 | 0.880 | 5.31 | 6.62 |
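Per-frame detection times such as those in the table are usually obtained by timing many inference calls after a warm-up phase. The sketch below is a generic, framework-agnostic timer built on `time.perf_counter`; it is not the authors' benchmarking code. The warm-up count is an assumption, and `infer` stands for any hypothetical callable that runs the detector on one frame. On a GPU, pass a synchronisation function (for example `torch.cuda.synchronize` in PyTorch) as `sync`, because asynchronous kernel launches otherwise make the measured time look shorter.

```python
import time
from typing import Any, Callable, Optional, Sequence


def ms_per_frame(
    infer: Callable[[Any], Any],
    frames: Sequence[Any],
    warmup: int = 10,
    sync: Optional[Callable[[], None]] = None,
) -> float:
    """Average wall-clock latency of `infer` in milliseconds per frame."""
    if not frames:
        raise ValueError("need at least one frame")
    # Untimed warm-up runs absorb lazy initialisation and caching effects.
    for i in range(warmup):
        infer(frames[i % len(frames)])
    if sync:
        sync()
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    if sync:
        sync()  # wait for any queued device work before stopping the clock
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(frames)
```

Whether pre-processing (resizing, normalisation) and post-processing (NMS) fall inside `infer` changes the result, so the timed scope should be stated alongside the numbers.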

| Model | mAP | F1-Score | GFLOPs | Parameters (M) | Detection Speed (ms/frame) |
|---|---|---|---|---|---|
| YOLOv5n | 0.865 | 0.86 | 7.2 | 4.79 | 6.89 |
| YOLOv7tiny | 0.855 | 0.83 | 9.6 | 8.83 | 11.46 |
| YOLOv8n | 0.896 | 0.84 | 8.2 | 5.75 | 7.53 |
| YOLOv10n | 0.873 | 0.82 | 8.4 | 5.17 | 7.84 |
| YOLOv11n | 0.904 | 0.85 | 6.4 | 4.95 | 6.20 |
| Faster R-CNN | 0.840 | 0.80 | 27 | 31.49 | 25.35 |
| ECA–YOLO | 0.932 | 0.88 | 6.7 | 5.31 | 6.62 |

| Model | mAP Gain (%) | F1-Score Gain (%) | GFLOPs Reduction (%) | Parameter Reduction (%) | Detection Time Reduction (%) |
|---|---|---|---|---|---|
| YOLOv5n | 7.7457 | 2.3256 | 6.9444 | −10.8559 | 3.9187 |
| YOLOv7tiny | 9.0058 | 6.0241 | 30.2083 | 39.8641 | 42.2339 |
| YOLOv8n | 4.0179 | 4.7619 | 18.2927 | 7.6522 | 12.0850 |
| YOLOv10n | 6.7583 | 7.3171 | 20.2381 | −2.7079 | 15.5612 |
| YOLOv11n | 3.0973 | 3.5294 | −4.6875 | −7.2727 | −6.7742 |
| Faster R-CNN | 10.9524 | 10.0000 | 75.1852 | 83.1375 | 73.8856 |

All values give ECA–YOLO's change relative to the listed model, e.g., the mAP gain over YOLOv5n is (0.932 − 0.865)/0.865 × 100 ≈ 7.7457%. Negative values mean ECA–YOLO is more costly than that model (more GFLOPs or parameters, or a longer detection time).
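The relative rates above can be recomputed from the model comparison table: gains use (ECA–YOLO − other)/other for accuracy metrics, and reductions use (other − ECA–YOLO)/other for cost metrics. The short sketch below re-uses only the table's numbers; the dictionary keys and the function name are illustrative.

```python
from typing import Dict

# Metrics copied from the model comparison table:
# mAP, F1-score, GFLOPs, parameters (M), detection time (ms per frame).
ECA_YOLO = {"map": 0.932, "f1": 0.88, "gflops": 6.7, "params": 5.31, "ms": 6.62}
OTHERS = {
    "YOLOv5n": {"map": 0.865, "f1": 0.86, "gflops": 7.2, "params": 4.79, "ms": 6.89},
    "YOLOv7tiny": {"map": 0.855, "f1": 0.83, "gflops": 9.6, "params": 8.83, "ms": 11.46},
    "YOLOv8n": {"map": 0.896, "f1": 0.84, "gflops": 8.2, "params": 5.75, "ms": 7.53},
    "YOLOv10n": {"map": 0.873, "f1": 0.82, "gflops": 8.4, "params": 5.17, "ms": 7.84},
    "YOLOv11n": {"map": 0.904, "f1": 0.85, "gflops": 6.4, "params": 4.95, "ms": 6.20},
    "Faster R-CNN": {"map": 0.840, "f1": 0.80, "gflops": 27.0, "params": 31.49, "ms": 25.35},
}
# True: a larger value is better (report a gain); False: smaller is better (report a reduction).
HIGHER_IS_BETTER = {"map": True, "f1": True, "gflops": False, "params": False, "ms": False}


def relative_rates(ours: Dict[str, float], theirs: Dict[str, float]) -> Dict[str, float]:
    """Percentage gain (accuracy metrics) or reduction (cost metrics) of `ours` vs `theirs`."""
    rates = {}
    for key, higher in HIGHER_IS_BETTER.items():
        diff = ours[key] - theirs[key] if higher else theirs[key] - ours[key]
        rates[key] = 100.0 * diff / theirs[key]
    return rates


if __name__ == "__main__":
    for name, metrics in OTHERS.items():
        row = relative_rates(ECA_YOLO, metrics)
        print(name, {k: round(v, 4) for k, v in row.items()})
```

Because every rate is normalised by the other model's value, the rates are not symmetric: a 3.0973% mAP gain over YOLOv11n is not the same as a 3.0973% loss for YOLOv11n relative to ECA–YOLO.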

| Model | AP (Pre-estrus) | AP (Mid-estrus) | AP (Late-estrus) | AP (Non-estrus) | mAP (All Classes) |
|---|---|---|---|---|---|
| YOLOv5n | 0.939 | 0.732 | 0.932 | 0.855 | 0.865 |
| YOLOv7tiny | 0.893 | 0.826 | 0.956 | 0.747 | 0.855 |
| YOLOv8n | 0.957 | 0.844 | 0.897 | 0.887 | 0.896 |
| YOLOv10n | 0.931 | 0.832 | 0.904 | 0.826 | 0.873 |
| YOLOv11n | 0.953 | 0.945 | 0.898 | 0.822 | 0.904 |
| Faster R-CNN | 0.929 | 0.770 | 0.766 | 0.893 | 0.840 |
| ECA–YOLO | 0.966 | 0.968 | 0.888 | 0.905 | 0.932 |
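The all-class column is consistent with mAP being the unweighted mean of the four per-class APs; for ECA–YOLO, (0.966 + 0.968 + 0.888 + 0.905)/4 = 0.93175 ≈ 0.932. The check below reproduces the last column from the per-class values to within rounding (±0.0005); it uses only the numbers in the table.

```python
from typing import List

# Per-class AP values copied from the table,
# in the order pre-estrus, mid-estrus, late-estrus, non-estrus.
PER_CLASS_AP = {
    "YOLOv5n": [0.939, 0.732, 0.932, 0.855],
    "YOLOv7tiny": [0.893, 0.826, 0.956, 0.747],
    "YOLOv8n": [0.957, 0.844, 0.897, 0.887],
    "YOLOv10n": [0.931, 0.832, 0.904, 0.826],
    "YOLOv11n": [0.953, 0.945, 0.898, 0.822],
    "Faster R-CNN": [0.929, 0.770, 0.766, 0.893],
    "ECA–YOLO": [0.966, 0.968, 0.888, 0.905],
}
# Reported all-class values (last column of the table).
REPORTED_MAP = {
    "YOLOv5n": 0.865, "YOLOv7tiny": 0.855, "YOLOv8n": 0.896, "YOLOv10n": 0.873,
    "YOLOv11n": 0.904, "Faster R-CNN": 0.840, "ECA–YOLO": 0.932,
}


def mean_ap(per_class: List[float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_class) / len(per_class)


if __name__ == "__main__":
    for name, aps in PER_CLASS_AP.items():
        print(f"{name}: computed {mean_ap(aps):.4f}, reported {REPORTED_MAP[name]:.3f}")
```

An unweighted mean gives each estrus stage equal influence regardless of how many test images it has, so a weak class (e.g., YOLOv5n's 0.732 on mid-estrus) pulls the mAP down noticeably.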

| Indicator | Value (%) |
|---|---|
| Precision | 91.16 |
| Recall | 90.20 |
| F1-Score | 90.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, M.; Duan, Y.; Gao, T.; Gao, X.; Hu, G.; Cao, R.; Liu, Z. A Lightweight Model for Small-Target Pig Eye Detection in Automated Estrus Recognition. Animals 2025, 15, 1127. https://doi.org/10.3390/ani15081127
