Generative AI Meets Animal Welfare: Evaluating GPT-4 for Pet Emotion Detection

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

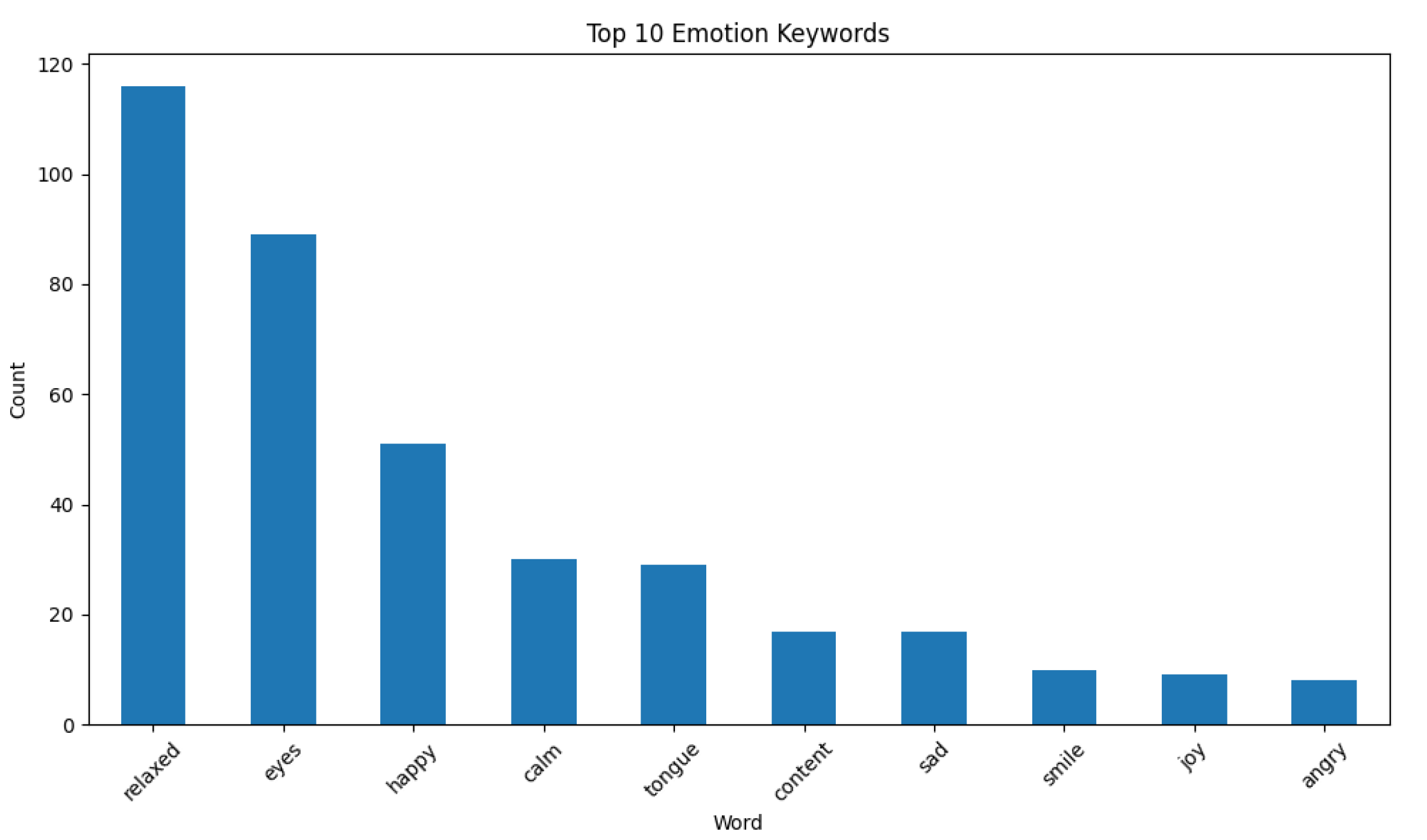

2.1. Data Collection

2.2. Experimental Setup

2.2.1. General Pet/Animal Emotion Classification

2.2.2. Dog Emotion Classifications and Sentiment Analysis

2.3. Generative AI Model and Evaluation Metrics

3. Results

3.1. Phase 1—General Pet Emotion Classification Results

3.2. Phase 2—Dog Emotion Classification Results

3.3. Discussion of Successful and Failed Cases

4. Discussion

4.1. General Pet Emotion Classification

4.2. Dog-Specific Emotion Classification

4.3. Insights from Comparative Emotion Detection

4.4. Limitations, Challenges, and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ekman, P. Are there basic emotions? Psychol. Rev. 1992, 99, 550–553.

- Mota-Rojas, D.; Marcet-Rius, M.; Ogi, A.; Hernández-Ávalos, I.; Mariti, C. Current advances in the assessment of dog’s emotions, facial expressions, and their use for clinical recognition of pain. Animals 2021, 11, 3334.

- Caeiro, C.C.; Burrows, A.M.; Waller, B.M. Development and application of CatFACS: Are human cat adopters influenced by cat facial expressions? Appl. Anim. Behav. Sci. 2017, 189, 74–88.

- Kaminski, J.; Hynds, J.; Morris, P.; Waller, B.M. Human attention affects facial expressions in domestic dogs. Sci. Rep. 2017, 7, 12914.

- Waller, B.M.; Peirce, K.; Caeiro, C.C.; Scheider, L.; Burrows, A.M.; McCune, S.; Kaminski, J. DogFACS: A coding system for identifying facial action units in dogs. Behav. Process. 2013, 96, 1–10.

- Boneh-Shitrit, T.; Feighelstein, M.; Bremhorst, A.; Amir, S.; Distelfeld, T.; Dassa, Y.; Yaroshetsky, S.; Riemer, S.; Shimshoni, I.; Mills, D.S.; et al. Explainable automated recognition of emotional states from canine facial expressions: The case of positive anticipation and frustration. Sci. Rep. 2022, 12, 22611.

- Franzoni, V.; Biondi, G.; Milani, A. Advanced techniques for automated emotion recognition in dogs from video data through deep learning. Neural Comput. Appl. 2024, 36, 17669–17688.

- Guo, K.; Correia-Caeiro, C.; Mills, D.S. Category-dependent contribution of dog facial and bodily cues in human perception of dog emotions. Appl. Anim. Behav. Sci. 2024, 280, 106427.

- Narkhede, P.; Thakare, A.; Shitole, S.; Dongardive, A.; Guldagad, V. Intelligent Application for Dog Emotion Detection using EfficientNet-B0. In Proceedings of the 2024 8th International Conference on Computing, Communication, Control and Automation (ICCUBEA), Pune, India, 23–24 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Lee, J.W.; Lee, H.R.; Shin, D.J.; Weon, I.Y. A Detection of Behavior and Emotion for Companion animal using AI. In Proceedings of the Korea Information Processing Society Conference, Gangwon-Chuncheon, Republic of Korea, 3–5 November 2022; Korea Information Processing Society: Seoul, Republic of Korea, 2022; pp. 580–582.

- Bhave, A.; Hafner, A.; Bhave, A.; Gloor, P.A. Unsupervised Canine Emotion Recognition Using Momentum Contrast. Sensors 2024, 24, 7324.

- Lian, Z.; Sun, L.; Chen, K.; Wen, Z.; Gu, H.; Liu, B.; Tao, J. GPT-4V with emotion: A zero-shot benchmark for generalized emotion recognition. Inf. Fusion 2024, 108, 102367.

- Wang, J.; Luo, J.; Yang, G.; Hong, A. Is GPT powerful enough to analyze the emotions of memes? In Proceedings of the 2023 International Conference on Machine Learning and Applications (ICMLA), Jacksonville, FL, USA, 15–17 December 2023.

- Iyer, A.; Vojjala, S.; Andrew, J. Augmenting sentiments into Chat-GPT using facial emotion recognition. In Proceedings of the 2024 10th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 14–15 March 2024.

- Hasani, A.M.; Singh, S.; Zahergivar, A.; Ryan, B.; Nethala, D.; Bravomontenegro, G.; Mendhiratta, N.; Ball, M.; Farhadi, F.; Malayeri, A. Evaluating the performance of Generative Pre-trained Transformer-4 (GPT-4) in standardizing radiology reports. Eur. Radiol. 2024, 34, 3566–3574.

- Anwar, A. Pet’s Facial Expression Image Dataset. Kaggle Repository. 2023. Available online: https://www.kaggle.com/datasets/anshtanwar/pets-facial-expression-dataset (accessed on 10 December 2024).

- Balico, D.S. Dog Emotion Image Classification Dataset. Kaggle Repository. 2023. Available online: https://www.kaggle.com/datasets/danielshanbalico/dog-emotion (accessed on 15 January 2025).

- Bloom, T. Human Ability to Recognize Dogs’ (Canis familiaris) Facial Expressions: From Cross Cultural to Cross Species Research on the Universality of Emotions. Ph.D. Thesis, Walden University, Minneapolis, MN, USA, 2011.

- Ferres, K.; Schloesser, T.; Gloor, P.A. Predicting dog emotions based on posture analysis using DeepLabCut. Future Internet 2022, 14, 97.

- Mendl, M.; Burman, O.H.; Paul, E.S. An integrative and functional framework for the study of animal emotion and mood. Proc. R. Soc. B Biol. Sci. 2010, 277, 2895–2904.

- Burza, L.B.; Bloom, T.; Trindade, P.H.E.; Friedman, H.; Otta, E. Reading emotions in Dogs’ eyes and Dogs’ faces. Behav. Process. 2022, 202, 104752.

- Diogo, R.; Wood, B.A.; Aziz, M.A.; Burrows, A. On the origin, homologies and evolution of primate facial muscles, with a particular focus on hominoids and a suggested unifying nomenclature for the facial muscles of the Mammalia. J. Anat. 2009, 215, 300–319.

- Panksepp, J. The basic emotional circuits of mammalian brains: Do animals have affective lives? Neurosci. Biobehav. Rev. 2011, 35, 1791–1804.

- Caeiro, C.; Guo, K.; Mills, D. Dogs and humans respond to emotionally competent stimuli by producing different facial actions. Sci. Rep. 2017, 7, 15525.

| | Labeled “Angry” | Labeled “Happy” | Labeled “Sad” |
|---|---|---|---|
| Predicted “Angry” | 96 | 3 | 9 |
| Predicted “Happy” | 147 | 235 | 188 |
| Predicted “Sad” | 2 | 9 | 47 |
| Predicted “Unsure” | 5 | 3 | 6 |

Precision = 0.556; Recall = 0.512; F1 score = 0.533; Accuracy = 0.502.
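In the Phase 1 matrix the rows are GPT-4’s predictions, including an “Unsure” row that matches no dataset label, and the columns are the dataset labels, so accuracy depends on how those abstentions are treated. The sketch below recomputes accuracy, coverage, and per-class recall directly from the printed counts, assuming that an “Unsure” answer counts as an error; the function name `abstention_summary` and the dictionary layout are illustrative choices, not the authors’ code. Under this convention the diagonal gives 378 correct labels out of 750 images; the reported summary scores may follow a different convention, so treat this as an illustration rather than a reproduction of the paper’s computation.

```python
# Phase 1 confusion matrix as printed: rows are GPT-4 predictions,
# columns are dataset labels. The extra "Unsure" row holds abstentions.
LABELS = ["Angry", "Happy", "Sad"]
PREDICTED_ROWS = {
    "Angry": [96, 3, 9],
    "Happy": [147, 235, 188],
    "Sad": [2, 9, 47],
    "Unsure": [5, 3, 6],
}


def abstention_summary(rows, labels):
    """Accuracy, coverage and per-class recall when some answers are 'Unsure'.

    Assumption (illustrative): an 'Unsure' answer is counted as wrong
    for both accuracy and recall.
    """
    # Column sums (including the Unsure row) give the images per true label.
    support = [sum(r[j] for r in rows.values()) for j in range(len(labels))]
    total = sum(support)
    # Diagonal cells: the predicted label equals the dataset label.
    correct = sum(rows[lab][j] for j, lab in enumerate(labels))
    answered = total - sum(rows["Unsure"])
    return {
        "total": total,
        "accuracy": correct / total,  # abstentions count as errors
        "coverage": answered / total,  # share of images given a label
        "selective_accuracy": correct / answered,  # accuracy on labeled images only
        "recall": {lab: rows[lab][j] / support[j] for j, lab in enumerate(labels)},
    }


summary = abstention_summary(PREDICTED_ROWS, LABELS)
print(summary["total"], round(summary["accuracy"], 3), round(summary["coverage"], 3))
print({lab: round(r, 3) for lab, r in summary["recall"].items()})
```

The per-class recall (0.384 for “Angry”, 0.94 for “Happy”, 0.188 for “Sad”) makes the strong pull toward “Happy” in the “Predicted Happy” row explicit in a way a single averaged score does not.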

| | Predicted “Angry” | Predicted “Happy” | Predicted “Relaxed” | Predicted “Sad” |
|---|---|---|---|---|
| Labeled “Angry” | 254 | 3 | 6 | 8 |
| Labeled “Happy” | 91 | 331 | 7 | 4 |
| Labeled “Relaxed” | 18 | 47 | 292 | 111 |
| Labeled “Sad” | 10 | 0 | 50 | 284 |

Precision = 0.768; Recall = 0.788; F1 score = 0.767; Accuracy = 0.766.
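The Phase 2 summary scores can be checked against the matrix counts. The sketch below reads the rows as dataset labels and the columns as GPT-4 predictions, and computes macro-averaged precision, recall, and F1 (each class weighted equally) plus overall accuracy; under that reading the recomputed values agree with the reported ones to three decimals. The function name `macro_metrics` is an illustrative choice, not taken from the paper.

```python
# Phase 2 confusion matrix: rows are dataset labels, columns are GPT-4 predictions.
LABELS = ["Angry", "Happy", "Relaxed", "Sad"]
MATRIX = [
    [254, 3, 6, 8],
    [91, 331, 7, 4],
    [18, 47, 292, 111],
    [10, 0, 50, 284],
]


def macro_metrics(matrix):
    """Macro precision, recall, F1 and accuracy for a square confusion matrix
    whose rows are true labels and whose columns are predictions."""
    n = len(matrix)
    row_sums = [sum(row) for row in matrix]  # images per true class
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # predictions per class
    tp = [matrix[k][k] for k in range(n)]  # correctly classified images per class
    precision = [tp[k] / col_sums[k] for k in range(n)]
    recall = [tp[k] / row_sums[k] for k in range(n)]
    # Per-class F1 = 2TP / (predicted + actual); macro F1 averages these values.
    f1 = [2 * tp[k] / (col_sums[k] + row_sums[k]) for k in range(n)]
    return {
        "precision": sum(precision) / n,
        "recall": sum(recall) / n,
        "f1": sum(f1) / n,
        "accuracy": sum(tp) / sum(row_sums),
    }


for name, value in macro_metrics(MATRIX).items():
    print(f"{name}: {value:.3f}")
```

Running it prints precision 0.768, recall 0.788, F1 0.767, and accuracy 0.766. Note that macro F1 here is the mean of the per-class F1 values; taking the harmonic mean of the macro precision and macro recall instead would give a slightly different number, so the averaging convention matters when comparing runs.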

| Case | Dataset label and GPT-4 prediction | Model explanation |
|---|---|---|
| (A) | Labeled “Angry”; predicted “Angry” | This prediction is based on the dog’s facial expression and body language. The dog has bared teeth, which is a typical sign of aggression or anger. Its ears may be pulled back tightly, and the overall posture suggests it is feeling threatened or upset. Additionally, the tense muscles and glaring eyes further reinforce the idea of anger. |
| (B) | Labeled “Angry”; predicted “Happy” | The prediction of “Happy” is based on the dog’s open mouth, which looks like it might be smiling or playfully panting. The wide eyes and relaxed facial expression contribute to this impression of joy and contentment. Additionally, the overall posture and fur style suggest a well-groomed and cared-for pet, which often reflects a positive emotional state. |
| (C) | Labeled “Happy”; predicted “Happy” | The puppy appears to have a relaxed body posture and is standing in a playful position. Its tongue is out, which often indicates excitement and a jovial mood in dogs. The lush green grass adds to the cheerful atmosphere, suggesting that it is enjoying its environment. Overall, these visual cues suggest a state of happiness. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Cetintav, B.; Guven, Y.S.; Gulek, E.; Akbas, A.A. Generative AI Meets Animal Welfare: Evaluating GPT-4 for Pet Emotion Detection. Animals 2025, 15, 492. https://doi.org/10.3390/ani15040492

AMA Style: Cetintav B, Guven YS, Gulek E, Akbas AA. Generative AI Meets Animal Welfare: Evaluating GPT-4 for Pet Emotion Detection. Animals. 2025; 15(4):492. https://doi.org/10.3390/ani15040492

Chicago/Turabian Style: Cetintav, Bekir, Yavuz Selim Guven, Engincan Gulek, and Aykut Asım Akbas. 2025. "Generative AI Meets Animal Welfare: Evaluating GPT-4 for Pet Emotion Detection" Animals 15, no. 4: 492. https://doi.org/10.3390/ani15040492

APA Style: Cetintav, B., Guven, Y. S., Gulek, E., & Akbas, A. A. (2025). Generative AI Meets Animal Welfare: Evaluating GPT-4 for Pet Emotion Detection. Animals, 15(4), 492. https://doi.org/10.3390/ani15040492