Simple Summary

Robust pig detection in complex farming environments requires a unified representation of both global semantics and local details, which remains a challenge. This paper proposes VM-RTDETR, an enhanced RT-DETR (transformer-based real-time object detector) model that addresses this by synergizing a Vision State-Space Duality (VSSD) backbone with a Multi-scale Encoder (M-Encoder). The VSSD module breaks through the causal constraints of traditional state-space models (efficiently capturing long-range dependencies and global context within an image) to capture long-range dependencies and global context, while the M-Encoder extracts parallel multi-scale features to handle appearance variations. This collaboration yields a detector that robustly handles scale changes, occlusions, and complex backgrounds. On challenging datasets, VM-RTDETR elevates the state of the art, surpassing strong baselines like RT-DETR by significant margins. It provides a reliable and efficient vision solution for automated livestock monitoring.

Abstract

Pig detection is a fundamental yet challenging task in intelligent livestock farming, primarily due to difficulties in capturing both global contextual information and multi-scale features within complex environments. To address this, we propose VM-RTDETR, a novel detection model based on an enhanced RT-DETR architecture. The model incorporates a Vision State-Space Duality (VSSD) backbone, leveraging a novel Non-Causal State-Space Duality (NC-SSD) mechanism to overcome the limitations of traditional SSMs by enabling efficient modeling of long-range dependencies and global context. Furthermore, we design a Multi-Scale Efficient Hybrid Encoder (M-Encoder) that employs parallel convolutional kernels to capture both local details and global contours, effectively addressing scale variations. The synergistic design of the VSSD backbone and the M-Encoder enables our model to achieve more comprehensive feature representation. Experimental results on a custom dataset of 8070 images from a pig farm (with 6955 images for training and 1115 for testing) demonstrate that VM-RTDETR significantly outperforms existing mainstream detectors, improving , , and by up to 2.35%, 0.63%, and 2.76%, respectively, over the strong R50-RTDETR baseline. Our model significantly enhances detection robustness in complex scenarios, offering an efficient and accurate solution for intelligent livestock farming.

1. Introduction

Pig detection is a core technology for automated and standardized livestock farming, playing a crucial role in individual animal management, disease monitoring, and optimized resource allocation [1]. However, this task is particularly challenging in complex farm environments. Issues such as occlusions, varying lighting conditions, and overlapping among pigs significantly degrade detection performance [2,3,4]. These challenges often lead to two key limitations in existing methods: insufficient capture of global contextual features and inadequate extraction of discriminative multi-scale representations [5,6].

With the advancement of deep learning, a variety of detection models have been applied to this domain. Early research primarily focused on two-stage detectors. Yang et al., for instance, employed Faster R-CNN [7] to localize pigs and their heads for recognizing feeding behavior [8]. Tu et al. later introduced PigMS R-CNN, integrating ResNet-101 with a Feature Pyramid Network (FPN) and soft-NMS to mitigate false detections in dense scenarios [9]. However, their reliance on region proposal generation leads to slow inference speeds, limiting their practicality for real-time monitoring. This computational bottleneck has driven a shift in research focus toward more efficient single-stage detectors [10].

For instance, Shen et al. demonstrated that YOLOv3 achieved comparable accuracy to Faster R-CNN in piglet detection while being 1.5 times faster, highlighting the superior speed–accuracy trade-off of single-stage detectors [11]. Subsequent research has largely focused on improving YOLO-series architectures for pig detection [12], incorporating attention mechanisms [13,14,15,16], advanced feature pyramids [14,17], and other specialized modules to enhance performance in complex scenarios.

The evolution of single-stage detectors has continued with the recent introduction of the Real-Time Detection Transformer (RT-DETR) [18]. This end-to-end model leverages the transformer architecture [19] to directly predict bounding boxes without anchors or complex post-processing, offering promising performance and real-time capability. Therefore, we adopt RT-DETR as our baseline. Nonetheless, when applied to the specific challenges of pig detection, such as distinguishing individuals in dense groups under complex conditions, we identify two potential limitations in its default architecture: its capacity for global feature capture and the richness of its multi-scale feature extraction could be further enhanced.

The core research gap lies in the lack of a unified model that effectively integrates long-range global semantics with hierarchical multi-scale local details. While some methods excel in local feature extraction, they often fail to model the broader contextual relationships necessary for disambiguating occluded or overlapping pigs. Conversely, global modeling approaches may overlook fine-grained details critical for precise localization under scale variations. This gap becomes especially pronounced in densely populated and visually cluttered farming environments [20,21].

To address these specific limitations, we propose VM-RTDETR, a novel detection model that synergistically enhances the RT-DETR framework. The main contributions of this work are summarized as follows:

- We introduce a Vision State-Space Duality (VSSD) module into the backbone network. Its core, a novel Non-Causal State-Space Duality (NC-SSD) mechanism, overcomes the unidirectional constraint of traditional state-space models by enabling bidirectional contextual modeling. This allows for highly efficient parallel computation while significantly strengthening the model’s ability to capture global structural features and long-range dependencies within an image.

- We design a Multi-Scale Efficient Hybrid Encoder (M-Encoder). This module utilizes parallel multi-scale depth-wise convolutional kernels to perform hierarchical feature extraction, simultaneously capturing fine-grained local details and broader contour information. This design effectively enriches the feature representation, improving the model’s robustness to scale variations caused by differing viewing distances and individual pig sizes.

- Through extensive experiments, we demonstrate that our VM-RTDETR model achieves state-of-the-art performance on challenging pig detection datasets, significantly boosting key metrics such as , , and compared to existing mainstream approaches. The model provides a robust and efficient solution for intelligent livestock farming.

2. Materials and Methods

2.1. Dataset

The dataset utilized in this study was collected from a large-scale commercial pig farm located in Linfen City, Shanxi Province. The pig house features an enclosed structure where pigs at different growth stages are physically separated by railings. The flooring is constructed with concrete and textured cement panels, providing both ventilation and liquid drainage capabilities. Video data were acquired using six Hikvision cameras (Hangzhou, China) strategically installed at various locations within the facility. All cameras were configured to record at a resolution of 1920 × 1080 pixels (1080p) and a frame rate of 25 fps. Each camera was mounted approximately 1.7 m above the ground, an optimal height and angle for obtaining comprehensive and clear footage of the pigs. Data collection was conducted over a two-month period from August to October 2022, yielding approximately 2 TB of raw video data. An obtained dataset sample is shown in Figure 1.

Figure 1.

Collected data samples for pigs.

To mitigate the impact of low-quality data on model training, a structured processing pipeline was implemented. Initially, video segments affected by insufficient lighting or extreme weather conditions were manually filtered out. The specific criteria for exclusion were as follows: Insufficient lighting was defined as scenes where the overall illumination was too dark to distinguish the contours of pigs clearly without digital enhancement, typically corresponding to nighttime hours or periods of severe overcast. Extreme weather conditions primarily referred to heavy rain or snow that visibly obscured the camera lens or significantly degraded image clarity. Subsequently, frames were extracted at 20 s intervals from the validated videos to construct a preliminary image set in JPG format. Subsequently, a manual visual inspection was conducted to remove any remaining blurred or low-quality images, resulting in a refined dataset of 8070 unique, high-quality original images for model training and validation. This set of 8070 original images constitutes our final curated dataset before any augmentation.

The selected images were annotated using the Make Sense.ai online annotation tool (https://www.makesense.ai/). The annotation process was conducted by two annotators. To ensure consistency and accuracy, all annotations were cross-validated, meaning that each annotation created by one annotator was reviewed and verified by the other. As this study focuses solely on pig detection, a single class label, “pig”, was used for all bounding boxes. The bounding boxes were stored in the YOLO format, where each annotation in the TXT files contains the values <class_id>, <x_center>, <y_center>, <width>, and <height>, with all coordinates being normalized relative to the image dimensions. The annotations from the TXT files were subsequently converted into a structured JSON format for ease of use. The final JSON format follows the standard COCO dataset schema, containing keys for “images”, “annotations”, and “categories”. In this COCO-compliant JSON, the bounding box for each object is stored as a list [x_min, y_min, width, height], where the coordinates (x_min, y_min) are the absolute pixel values of the top-left corner of the bounding box, and width and height are the absolute dimensions in pixels. Some annotation examples are shown in Figure 2.

Figure 2.

Some examples of annotated images.

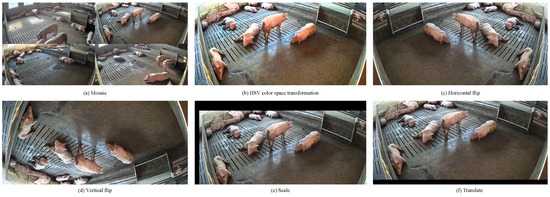

To enhance model performance and prevent overfitting, several data augmentation techniques were employed during the model training process. These included Mosaic (randomly combining four images into one), HSV color space enhancement (increasing saturation by 50% and brightness by 30%), horizontal flipping, vertical flipping, scaling, and translation (shifting images by 50 pixels along the x-axis and 100 pixels along the y-axis). Examples of augmented images are shown in Figure 3.

Figure 3.

Some examples of data augmentation.

To ensure the generalization ability of the model and avoid bias introduced by random splitting, it was ensured that the training and test sets had similar distributions. Furthermore, to ensure the rigor of model validity evaluation and enhance the reproducibility of the experiments, the dataset of 8070 original images was randomly split with a fixed random seed to guarantee consistency in the partitioning. The value of the random seed used for this split was 42. This process resulted in a training set (6955 images) and a held-out test set (1115 images). No separate validation set was created. The test set was used exclusively for the final performance evaluation reported in this paper and was not used for model selection, early stopping, or hyperparameter tuning at any stage.

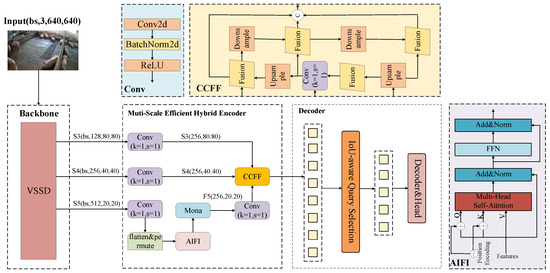

2.2. The Proposed Model, VM-RTDETR

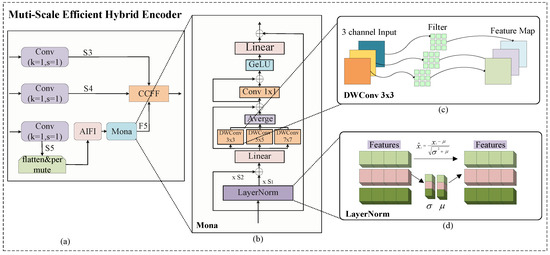

The overall architecture of the proposed pig detection model, VM-RTDETR, is illustrated in Figure 4. It consists of three main components: a feature extraction backbone, a Multi-Scale Efficient Hybrid Encoder (M-Encoder), and a decoder with a prediction head. The core innovations include the introduction of a VSSD [22] module into the feature extraction backbone and the novel design of the Multi-Scale Efficient Hybrid Encoder (M-Encoder). These enhancements effectively address the limitations of existing models in capturing global features and extracting rich multi-scale representations under complex farming environments.

Figure 4.

VM-RTDETR network structure diagram. The AIFI indicates Attention-based Intra-scale Feature Interaction module, the CCFF denotes the CNN-based Cross-scale Feature Fusion module, and the Mona indicates Multi-cognitive Visual Adapter module.

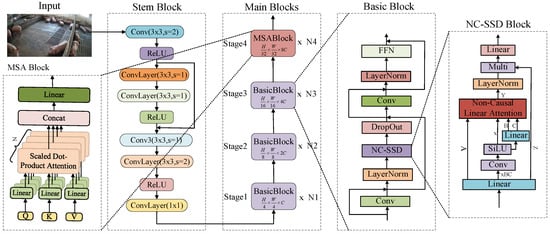

2.2.1. Backbone

The RT-DETR model employs ResNet [23] as its backbone network, which exhibits certain limitations in pig detection tasks. Since ResNet’s convolutional operations primarily focus on local feature extraction, the resulting feature representations lack global contextual information, thereby compromising detection performance. Furthermore, during deep feature extraction, ResNet tends to lose high-frequency details, making precise localization challenging. Although RT-DETR [18] incorporates an Attention-based Intra-scale Feature Interaction (AIFI) module and CNN-based Cross-scale Feature Fusion (CCFF) module in its later stages, the initial feature representation inadequacy of the backbone hinders these modules from fully compensating for the lost information, ultimately affecting overall detection accuracy. To address these issues, the original ResNet backbone of the standard RT-DETR was replaced with our novel Vision State-Space Duality (VSSD) backbone in the proposed VM-RTDETR model. The structure of this new VSSD backbone is illustrated in Figure 5. This complete replacement is crucial, as the VSSD backbone is specifically designed to overcome the limitations of ResNet by effectively capturing global structural features with long-range dependencies in images through its core NC-SSD mechanism.

Figure 5.

The feature extraction backbone network architecture of the VSSD module.

As illustrated in Figure 5, the VSSD module is designed to overcome the limitations of ResNet by incorporating a novel Non-Causal State-Space Duality (NC-SSD) mechanism as its core component. This mechanism breaks through the causal constraints of traditional state-space models (SSMs) and enables efficient modeling of global image features. Its core improvement lies in reformulating the state transition logic. By discarding magnitude information in the interaction between hidden states and inputs while preserving relative weights, it eliminates the limitation of causal masking on information flow.

In sequential models, causal masking ensures that the state at time step t only depends on past inputs, which is crucial for tasks like language modeling. This causal constraint is a fundamental characteristic of many traditional state-space models (SSMs), including the selective scan mechanism (SSM) that underpins recent vision models like VMamba [24]. However, this constraint is unnatural for images, where pixels are spatially correlated without an inherent temporal order. Applying such causal SSMs to image patches serialized into a 1D sequence thus unnecessarily restricts the model’s ability to integrate information from all parts of the image, as the representation at any position cannot be informed by subsequent tokens.

The core improvement of our proposed NC-SSD mechanism lies in its fundamental reformulation of the state transition logic to explicitly break this causal dependency. In contrast to the sequential, unidirectional processing of causal SSMs, the NC-SSD transforms the traditional recursive process into a global, non-causal accumulation operation (as formalized in Equation (4)). This key distinction allows every token in the sequence to independently and directly contribute to the hidden state of every other token, enabling truly bidirectional and parallel contextual modeling. This design is inherently more aligned with the physics of image data, where the understanding of a local feature can be refined by global contextual information from any other part of the image, leading to more effective global feature representation compared to the directionally constrained scans of prior SSM-based vision approaches.

In the traditional State-Space Duality (SSD) model [25], “SSD” refers specifically to State-Space Duality and should not be confused with the “Single Shot Detector” in object detection. The hidden state updates rely on historical input (causality), formulated as Equation (1):

where denotes a scalar transformation coefficient at time t, which functions to adjust the contribution weight of the current input feature to the hidden state. represents the input projection matrix at time t, which linearly transforms the current input feature into the dimension space of the hidden state. In an SSM, is used to control the retention ratio of historical hidden states; h(t−1) denotes the historical hidden state, carrying the knowledge and features accumulated from previous input information; and corresponds to the image token information input at the current moment, serving as the source of the latest information. This formula clearly shows that in traditional SSD, the current hidden state heavily depends on the previous hidden state h(t−1) and the current input . Such a causal update mechanism limits the model’s ability to integrate global information efficiently. The matrix C is the output projection matrix, which transforms the high-dimensional hidden state into the model’s output .

To overcome the causal constraints inherent in the traditional State-Space Duality (SSD), this study formally derives the Non-Causal State-Space Duality (NC-SSD) mechanism through a rigorous recursive expansion process, thereby revealing the global cumulative nature of the hidden state. Based on the hidden state update rule of traditional SSD, to deconstruct its temporal dependencies, this study performs a step-by-step expansion of the aforementioned recursive equation. By successively substituting and subsequent states, the following expansion is obtained:

When the recursive expansion reaches the initial state and setting , the above expansion simplifies to a pure accumulation form:

In this expression, the term represents the multiplicative accumulation effect of the scalar coefficients from time step to t. This derivation mathematically confirms that the hidden state at any time step t is essentially an explicit weighted sum of all historical inputs in the sequence. The weights are jointly determined by the model parameters and the sequential coefficients .

Based on this mathematical essence, the NC-SSD mechanism further reconstructs the complex cross-time-step dependencies encapsulated by the product term into an adaptive importance weight applied to the current input . This reformulation embodies its core innovation: transforming the sequential, state-dependent update into a parallelizable, input-weighted aggregation.

Mathematically, this reformulation leads to an equivalent, global representation of the hidden state, as shown in Equation (4):

Here, serves as a learnable importance weight that directly and independently governs the contribution intensity of the i-th token to the final state, thereby completely eliminating the causal constraints of the traditional model. represents the input projection weight (a scalar learnable parameter) of the i-th token, used to linearly scale the current input feature , mapping it to a base value that directly influences the contribution to the hidden state. The tensor Z is introduced in the derivation of the Non-Causal SSD (NC-SSD) and represents the projected input token. It is defined as , meaning that each token is first transformed by the input-dependent projection matrix .

The essence of the accumulation process is the weighted aggregation of all sequence features. In visual tasks, after an image is flattened into a 1D sequence, the correlation between adjacent pixels or regions may be disrupted due to serialization (for example, adjacent pixels in a 2D image might be far apart in the 1D sequence). NC-SSD enables the features of each pixel to directly participate in the construction of the final hidden state through global accumulation. For instance, the pixel feature at the edge of the image and the pixel feature at the center can directly contribute to through their respective weights of and . This mechanism captures long-range spatial dependencies more efficiently without relying on the sequential transmission of intermediate token states.

2.2.2. M-Encoder

The , , and features extracted by VM-RTDETR in the backbone network module were fed into the improved Multi-Scale Efficient Hybrid Encoder (M-Encoder). Compared to the shallower and features, the feature contains deeper, higher-level, and semantically richer information. The newly designed M-Encoder employs parallel multi-scale depth-wise convolutional filters with kernel sizes of 3 × 3, 5 × 5, and 7 × 7. This multi-scale design is grounded in the cognitive principle that visual information is processed at varying receptive fields to capture both local details and global context. It effectively overcomes the limitations of traditional linear adapters in visual signal processing and breaks through the constraint of single-scale feature extraction. The selected kernel sizes effectively balance feature richness and parameter efficiency. The 3 × 3 kernels capture fine-grained local features (e.g., textures and edges of pigs), the 5 × 5 kernels extract intermediate structural patterns (e.g., limbs and head contours), and the 7 × 7 kernels integrate broader contextual information (e.g., overall body posture and spatial relationships among multiple pigs). This configuration enables a comprehensive representation of porcine features across varying scales, thereby significantly enhancing the model’s robustness in complex environments. The overall structure of the M-Encoder is shown in Figure 6.

Figure 6.

The architecture of the Multi-Scale Efficient Hybrid Encoder module M-Encoder. Here, (a) is the architecture of the Multi-Scale Efficient Hybrid Encoder M-Encoder we proposed, where the outputs of the three parallel depth-wise convolutional branches are fused using an element-wise average operation. (b) is the core module Mona [26] in the M-Encoder, used for multi-scale feature extraction. (c) is the detailed structure diagram of DWConv 3 × 3 . (d) is the detailed part of LayerNorm.

The M-Encoder addresses the limitations of traditional single-scale encoding by incorporating parallel multi-scale convolutions, feature normalization, and cross-scale fusion. It accurately captures multi-dimensional visual patterns, providing more discriminative feature representations for pig detection.

The module first applies a hybrid normalization operation to the input feature , comprising Layer Normalization (LN) and learnable scaling factors. The definition is as follows:

LN is applied to the input feature along the channel dimension, bringing the distribution of each channel closer to a standard normal distribution, and enhancing the learning consistency of the model across different channels. Here, and are learnable scalar parameters that are shared across all channels and adaptively adjust the global fusion weights between the LN features and the original feature during training. This design mitigates the loss of detail commonly associated with conventional normalization methods, allowing the model to flexibly balance stabilizing feature distributions and preserving original information. In the context of pig detection, this mechanism not only regularizes the feature distribution to facilitate model optimization but also preserves critical details such as the original appearance and texture of the pigs.

The normalized feature is then processed through three parallel depth-wise convolution (DWConv) branches with kernel sizes of 3 × 3, 5 × 5, and 7 × 7. This process is defined as

Here, denotes a depth-wise convolution with a kernel size of k. This operation first performs spatial convolution channel-wise on to capture within-channel spatial patterns (such as local textures and edges of pigs), followed by point-wise convolution (1 × 1 convolution) to fuse information across channels. The Avg operation performs an element-wise averaging of the three multi-scale feature maps to integrate their multi-scale features, enabling the collaborative combination of detailed features from 3 × 3 convolution, medium-scale structural features from 5 × 5 convolution, and global contextual features from 7 × 7 convolution. To clearly illustrate the internal structure, a detailed schematic of the DWConv 3 × 3 operation is provided in Figure 6c. The architectural principles for the DWConv 5 × 5 and DWConv 7 × 7 branches are analogous to those of the DWConv 3 × 3 branch, differing only in the kernel size of the depth-wise convolution operation. Therefore, separate sub-figures for these similar structures are omitted for conciseness. The residual connection helps alleviate gradient vanishing and ensures effective propagation of multi-scale features to subsequent processing stages.

To meet the input requirements of the downstream detection, the multi-scale features are dimensionally expanded and fused with the original information. The mathematical expression is as follows:

The 1 × 1 convolution operation enables preliminary integration of multi-scale features along the channel dimension. The operation is defined as a linear (fully connected) layer that restores the channel dimension of the features. Specifically, it projects the feature map processed by the 1 × 1 convolution and activation back to the original channel depth of , ensuring dimensional consistency for the subsequent residual addition.

By incorporating a residual connection from , essential details in the original input, such as distinctive color patterns and limb structures of pigs, are preserved, preventing the loss of critical information during multi-scale encoding. This design allows the original and multi-scale enhanced features to complement each other, significantly improving the richness and completeness of feature representation.

Finally, the Mona module performs cross-scale feature fusion to effectively integrate multi-source information, combining its processed features with other scale features from the backbone network. This leverages multi-scale information in the image comprehensively. Specifically, the and features are first pre-processed; a dedicated 1 × 1 convolution (without weight sharing between scales) is applied to each to unify their channel dimensions to match those of the feature map processed by the Mona module. This is followed by an upsampling operation, implemented using bilinear interpolation, to align their spatial sizes with the feature map. Finally, the processed and feature maps are element-wise summed with to obtain the final multi-scale feature fusion result . The mathematical expression can be described as follows:

Here, denotes the feature processed by the Mona module, and Upsample refers to the upsampling operation that adjusts the spatial size of feature maps through interpolation or other methods to achieve dimensional alignment.

2.2.3. Decoder

The configuration of the decoder, including the number of queries, the parameters of the -aware query selection mechanism, and the absence of NMS (as it is an end-to-end detector), is identical to that of the original RT-DETR [18]. For specific values and implementation details, we direct the reader to the aforementioned reference, as these are core components of the baseline model which we utilized without modification.

2.2.4. Synergistic Design of VSSD and M-Encoder

The architectural innovation of VM-RTDETR extends beyond the independent enhancements of the VSSD backbone and the M-Encoder. The most significant contribution lies in their synergistic collaboration, which was deliberately designed to address the two intertwined fundamental challenges in pig detection: modeling long-range contextual dependencies and capturing discriminative features across multiple scales.

As illustrated in Figure 4, the VSSD backbone and the M-Encoder form two complementary feature processing pathways:

The VSSD backbone, empowered by the NC-SSD mechanism, establishes a global contextual understanding of the entire image. By breaking through the causal constraints of traditional SSMs, it allows any pixel or region in the image to directly influence the representation of any other, regardless of distance. This capability is crucial for reasoning in complex scenarios, such as inferring the presence of an occluded pig based on the overall herd structure or understanding spatial relationships between distant individuals.

The M-Encoder operates as a powerful local multi-scale feature extractor. Its parallel convolutional kernels with varying receptive fields (3 × 3, 5 × 5, 7 × 7) are specialized in capturing hierarchical visual patterns. The 3 × 3 kernels focus on fine-grained textures, the 5 × 5 kernels aggregate these into intermediate contours, and the 7 × 7 kernels integrate broader contextual shapes.

The synergy emerges from the fusion of these pathways’ outputs. The VSSD pathway provides a “top-down” semantic guide, informing the model about the global scene layout and the likely locations and relationships of pig entities. The M-Encoder pathway provides “bottom-up”, detailed, multi-scale evidence about local appearances and boundaries. The subsequent feature fusion, particularly in the CCFF module and the decoder, integrates these two streams of information.

This synergistic design ensures that the final feature representation used for detection is both semantically coherent and locally precise. For instance, the global context from VSSD can help resolve ambiguities in crowded regions, while the multi-scale details from the M-Encoder enable precise localization of individual pigs, even when their sizes vary greatly or they are partially hidden. This collaborative mechanism is the cornerstone of VM-RTDETR’s robustness and superior performance, effectively tackling the core limitations of prior methods that often excelled in only one of these two aspects.

2.3. Evaluation Metric

To comprehensively evaluate the performance of our pig detection model, we adopt several standard metrics in object detection. The primary metrics are based on Average Precision () at different Intersection-over-Union () thresholds. The measures the overlap between a predicted bounding box and the ground truth.

Specifically, we report the following:

- (abbreviated as ): The mean averaged over thresholds from 0.5 to 0.95 with a step size of 0.05. This is the primary metric for comprehensive performance evaluation.

- : The at an threshold of 0.5.

- : The at a stricter threshold of 0.75.

The for a single class is defined as the area under the precision–recall curve, as shown in Equation (9):

where denotes precision as a function of recall. Accordingly, the for multiple categories is defined by Equation (10):

where N is the number of categories and is the for the n-th category.

Since our dataset contains only one class (pig), the mean Average Precision () across all classes is equivalent to the for the pig class.

To analyze the model’s performance across objects of different sizes, we also report

- : for medium objects ( pixel area < 96 × 96);

- : for large objects (pixel area ).

It is noteworthy that the standard metric for small objects (, area < 32 × 32 pixels) is not applicable to our dataset. The distribution of bounding box areas confirmed the absence of a significant population of “small” objects as per the COCO standard, as the pigs in our images, even at a young age, predominantly fall into the medium or large size categories due to the fixed camera perspective and resolution.

Besides detection accuracy, we evaluate the model’s efficiency utilizing two metrics:

- Params: The total number of trainable parameters, indicating the model’s size.

- GFLOPs (Giga Floating-Point Operations): The total number of floating-point operations required for a single forward pass, measured in billions. It reflects the model’s computational complexity. A lower value indicates higher computational efficiency.

3. Experimental Results and Analyses

To thoroughly evaluate the effectiveness of the proposed VM-RTDETR model for pig detection, we conducted a series of comprehensive experiments. The following sections detail the experimental setup, comparative results with mainstream models, and an in-depth analysis of the findings.

3.1. Experimental Setup

The experiments were conducted on a server running Ubuntu 18.04, equipped with eight NVIDIA Tesla P100 GPUs (each with 16GB memory). We utilized the PyTorch 2.1.1 deep learning framework with CUDA 11.8 and Python 3.10.17. The input image size was set to 640 × 640, with a batch size of 4 for 100 training epochs. The Adam optimizer was adopted with an initial learning rate of 0.0001, a weight decay of 0.0001, and beta parameters (, ) set to (0.9, 0.999). A standard random sampler was used for constructing training batches. Detailed hardware and software configurations are listed in Table 1.

Table 1.

Configuration of hardware and software environment for experiments.

3.2. Comparison of Different Models

To validate the effectiveness of VM-RTDETR, we compared it against current mainstream detectors, including Faster R-CNN [7], YOLOv5s/n, YOLOv6s/n, YOLOv7 [27], YOLOv8s/n, YOLOv10n, YOLOv12s/n, and R50-RTDETR [18]. The quantitative results are summarized in Table 2.

Table 2.

Performance comparison of different models.

As shown in Table 2, VM-RTDETR achieves state-of-the-art performance across core metrics. It attains an of 60.9%, significantly outperforming all compared models. We highlight the following key comparisons: Our model surpasses the widely used YOLOv7 by a relative improvement of 1.67% in and, more importantly, exceeds its baseline R50-RTDETR by a relative improvement of 2.35%. Similar leading trends are observed in (95.5%) and (63.3%), confirming comprehensive improvements in both overall detection quality and precise localization accuracy. Significantly, VM-RTDETR achieves these superior results with enhanced efficiency, requiring only 97.9 GFLOPs and 28.4 M parameters, a significant reduction compared to R50-RTDETR (137.7 GFLOPs, 42.7 M parameters). Additionally, a single dash (-) indicates that the metric was not reported in the evaluation output for that specific model ( for YOLOv7). A double dash (–) indicates that the metrics were not available or not computed due to architectural incomparability with the standard evaluation framework.

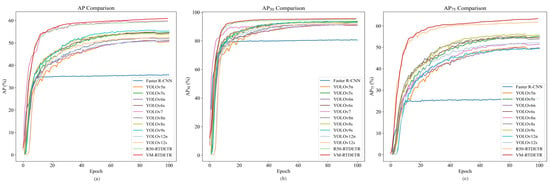

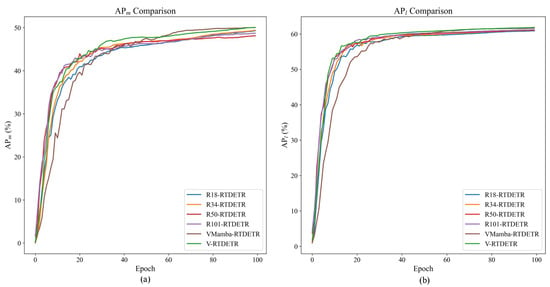

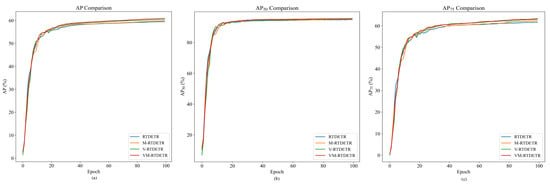

The convergence behavior and performance stability of different models during training are compared in Figure 7.

Figure 7.

Performance comparison of curves of different models.

As shown in Figure 7a, the curve of VM-RTDETR not only converges to the highest value but also exhibits a faster and more stable rise compared to other models. Similarly, its curve in Figure 7b maintains a clear leading advantage, indicating robust localization capability from the early stages. Most notably, the curve in Figure 7c shows a substantial performance gap, especially in later epochs, underscoring the model’s enhanced capability for high-precision bounding box regression under stringent thresholds.

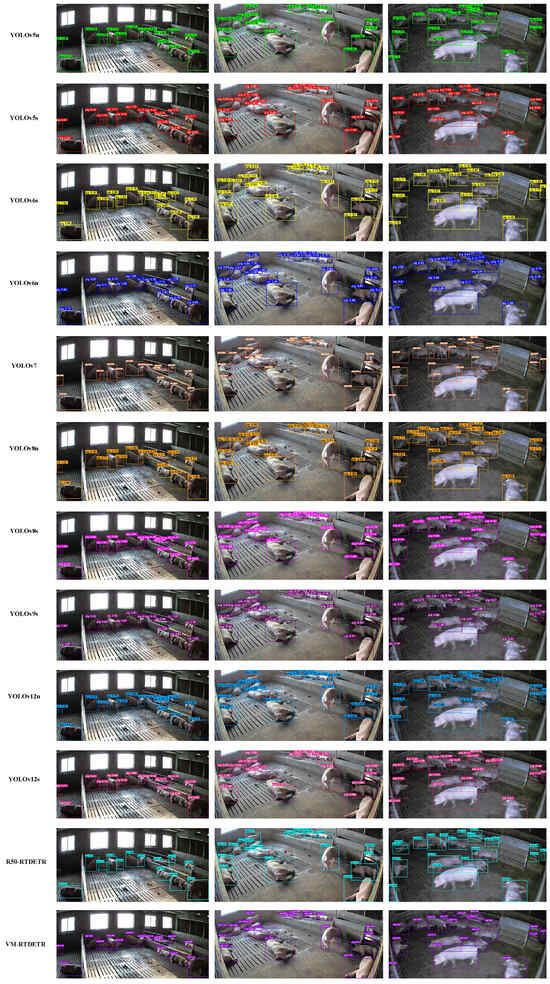

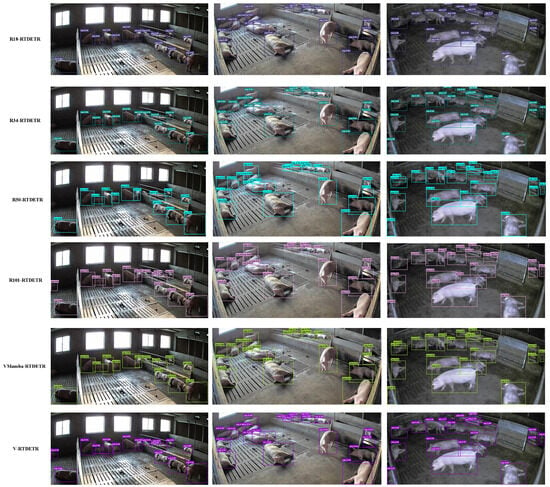

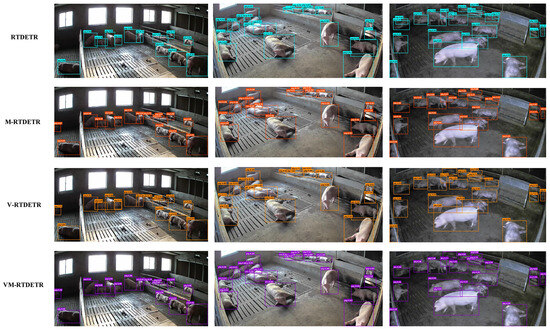

We further compare the bounding box quality and detection accuracy qualitatively in Figure 8. The results demonstrate that VM-RTDETR produces the most precise and complete bounding boxes, indicating a superior ability to localize pig targets and delineate their boundaries accurately in complex scenes.

Figure 8.

Comparison of detection boxes and accuracy of different models.

The superior performance of VM-RTDETR can be attributed to its synergistic architectural design. First, the VSSD backbone, empowered by the NC-SSD mechanism, breaks through the causal constraints of traditional state-space models. This enables efficient modeling of long-range dependencies and significantly enhances the extraction of global structural features from the image. Second, the M-Encoder tackles the challenge of scale variation by replacing single-scale processing with a parallel architecture employing 3 × 3, 5 × 5, and 7 × 7 convolutional kernels. This design simultaneously captures fine-grained details (via 3 × 3 kernels), intermediate contours (via 5 × 5 kernels), and broader spatial contexts (via 7 × 7 kernels). The subsequent fusion of these multi-scale features constructs a comprehensive hierarchical representation. Consequently, through the synergistic optimization of the VSSD backbone and M-Encoder, VM-RTDETR demonstrates stronger robustness and higher detection accuracy in complex scenarios (such as occlusion and lighting variations), providing an efficient and precise solution for automated pig detection.

3.3. Ablation Studies

3.3.1. Verification of the VSSD Module

For the sake of simplicity in writing, we will represent the backbone as B and the encoder as E, represent the encoder as a transformer with T, and represent the M-Encoder as ME. To validate the superiority of the VSSD module, we integrated it into the RT-DETR framework and compared it against several established backbones, including ResNet-18, ResNet-34, ResNet-50, ResNet-101, and VMamba [24]. The results are summarized in Table 3.

Table 3.

Performance comparison of the VSSD module.

As shown in Table 3, the V-RTDETR model achieves the best overall performance, demonstrating comprehensive advantages across all core metrics. Specifically, it attains an of 60.4%, achieving a relative improvement over R18-RTDETR, R34-RTDETR, R50-RTDETR, R101-RTDETR, and VMamba-RTDETR of 1.51%, 1.34%, 1.51%, 1.17%, and 0.33%, respectively. Similarly, its of 95.3% is 0.42%, 0.11%, 0.42%, and 0.21% higher than those of the R18-RTDETR, R34-RTDETR, R50-RTDETR, and VMamba-RTDETR, respectively, comparable to that of the R101-RTDETR. The advantage is even more pronounced on the stricter metric (62.9%), which shows relative improvements of 1.94%, 1.78%, 2.11%, 1.29%, and 0.64% over the comparison models. Notably, the V-RTDETR achieves an excellent balance between computational complexity and parameter count. It is important to highlight that the VSSD backbone alone brings a substantial reduction in computational cost, reducing GFLOPs by 28.9% (from 137.7 G to 97.9 G) compared to the R50-RTDETR baseline. While its GFLOPs (97.9 G) and parameters (28.4 M) are slightly higher than those of VMamba-RTDETR (87.0 G, 26.3 M) and R18-RTDETR (61.1 G, 20.0 M), they are substantially lower than R50-RTDETR (137.7 G, 42.7 M) and R101-RTDETR (260.6 G, 76.4 M), while delivering comprehensively superior performance. Therefore, V-RTDETR achieves the optimal trade-off between accuracy and efficiency.

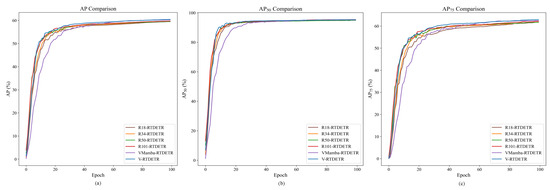

The convergence advantages of the VSSD backbone are visualized in Figure 9 and Figure 10, which plot the evaluation metrics against training iterations. Figure 9 shows that the curves for V-RTDETR for , , and consistently dominate those of all baseline models throughout the training process, while Figure 10 further confirms that its curves for and also maintain a steady leading position compared to the same set of baselines. This demonstrates that our model not only achieves a higher final accuracy but also maintains a stable and leading performance advantage from the early stages of training.

Figure 9.

Comparison curves of values between R18-RTDETR, R34-RTDETR, R50-RTDETR, R101-RTDETR, VMamba-RTDETR, and V-RTDETR models.

Figure 10.

Comparison curves of and values between R18-RTDETR, R34-RTDETR, R50-RTDETR, R101-RTDETR, VMamba-RTDETR, and V-RTDETR models.

Qualitative results are provided in Figure 11. V-RTDETR generates more precise bounding boxes and exhibits stronger robustness in challenging scenarios, such as occlusion, compared to the other backbone variants.

Figure 11.

Comparison of the detection boxes and accuracy between R18-RTDETR, R34-RTDETR, R50-RTDETR, R101-RTDETR, VMamba-RTDETR, and V-RTDETR models.

The superior performance of the model with the VSSD backbone can be attributed to the following reason: As the feature extraction backbone, the VSSD module adopts the NC-SSD mechanism. It breaks through the limitation of traditional SSMs, where hidden states only depend on historical inputs, and constructs a unified global hidden state. This enables each token to directly access complete contextual information, thus allowing the model to effectively capture global structural features with long-range dependencies in images.

3.3.2. Verification of the M-Encoder Module

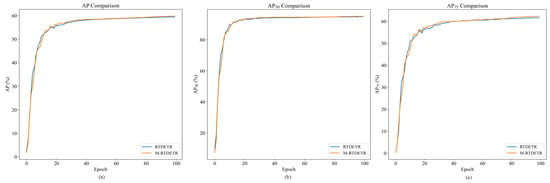

To assess the effectiveness of the proposed M-Encoder module, we integrate it into the RT-DETR encoder, forming the M-RTDETR model. A comparative analysis between the original RTDETR and M-RTDETR is presented in Table 4.

Table 4.

Performance comparison of the M-Encoder module.

As shown in Table 4, the M-RTDETR model outperforms the baseline RTDETR across all evaluation metrics. Specifically, it achieves an of 59.8% (a gain of +0.50%), an of 95.1% (+0.21%), an of 62.3% (+1.14%), an of 48.2% (+0.42%), and an of 61.4% (+0.66%). These consistent improvements validate the contribution of the M-Encoder module. Importantly, these performance gains are achieved without increasing computational costs, as both models maintain identical GFLOPs (137.7 G) and parameters (42.7 M), demonstrating the efficiency of the M-Encoder design.

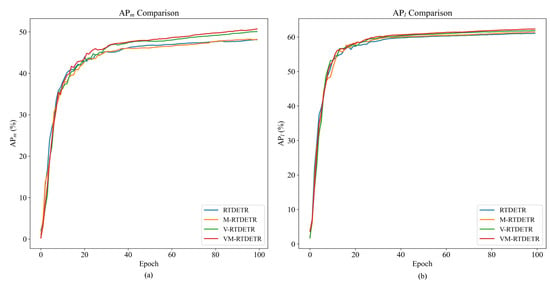

The training dynamics of M-RTDETR are further visualized in Figure 12 and Figure 13. Figure 12 shows that the convergence curves for , , and of M-RTDETR consistently lie above those of RT-DETR across training epochs. This advantage is also evident in the medium- and large-object detection metrics ( and ), as shown in Figure 13. The observed improvements for both medium and large objects underscore that the multi-scale fusion capability of the M-Encoder effectively handles pigs at various sizes and distances, which is a key requirement for robust herd monitoring in practical farming environments. Together, these figures demonstrate that the performance gain from the M-Encoder module is consistent and stable throughout the entire training process.

Figure 12.

Comparison of values between RTDETR and M-RTDETR models.

Figure 13.

Comparison curves of and values between RTDETR and M-RTDETR models.

The performance contribution of the M-Encoder module is mainly reflected in its ability to extract parallel multi-scale features. By synchronously using convolution kernels of different scales such as 3 × 3, 5 × 5, and 7 × 7, this module constructs a hierarchical feature extraction system. Specifically, the 3 × 3 convolution kernel focuses on capturing local fine-grained features, the 5 × 5 convolution kernel is responsible for extracting medium-range contour information, and the 7 × 7 convolution kernel covers a broader spatial context. The parallel extraction and deep fusion of these multi-scale features enable the model to effectively deal with complex scenarios such as inconsistent target scales and mutual occlusion, thereby significantly improving the accuracy of recognition and localization for targets.

3.3.3. Verification of the VM-RTDETR Model

To validate the effectiveness of our proposed modules, we design a series of ablation baselines: RTDETR (original architecture), V-RTDETR (with our VSSD backbone only), M-RTDETR (with our M-Encoder module only), and our full model, VM-RTDETR (integrating both the VSSD backbone and the M-Encoder module). This progressive comparison allows us to isolate the contribution of each component. The results, presented in Table 5, conclusively demonstrate the superiority of our complete VM-RTDETR model.

Table 5.

Performance comparison between RTDETR, M-RTDETR, V-RTDETR, and VM-RTDETR models.

As shown in Table 5, our VM-RTDETR model achieves state-of-the-art performance across all metrics, demonstrating clear advantages over the baseline models (RTDETR, V-RTDETR, and M-RTDETR). Specifically, VM-RTDETR attains an of 60.9%, which is 2.35%, 0.83%, and 1.84% higher than RTDETR, V-RTDETR, and M-RTDETR, respectively. The performance gap is even more pronounced on more stringent metrics: a 63.3% (representing a relative lead of 2.76%, 0.64%, and 1.61%) and a 50.7% (representing a relative gain of 5.63%, 1.40%, and 5.19%). Consistent relative improvements are also observed in (95.5%, +0.63%/+0.21%/+0.42%) and (62.3%, +2.13%/+0.81%/+1.47%). Remarkably, VM-RTDETR achieves these performance gains with only 97.9 GFLOPs and 28.4 M parameters, substantially more efficient than both RTDETR and M-RTDETR (137.7 GFLOPs, 42.7 M parameters) while matching the efficiency of V-RTDETR, demonstrating an optimal balance between accuracy and computational cost.

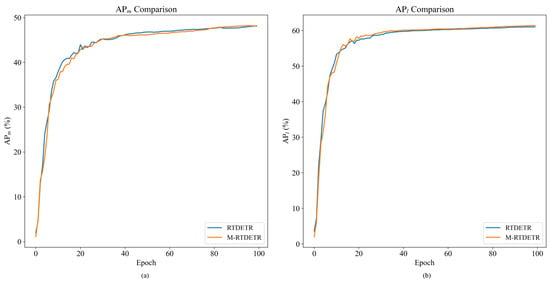

The performance advantages of our proposed VM-RTDETR are further visualized in Figure 14 and Figure 15, which plot the evaluation metrics against training iterations. As shown, the curves for VM-RTDETR (across , , , , and ) consistently dominate those of the baseline models (RTDETR, V-RTDETR, and M-RTDETR) throughout the training process. This demonstrates that our model not only achieves superior final performance but also maintains a stable and leading convergence advantage from start to finish.

Figure 14.

Comparison of values between RTDETR, M-RTDETR, V-RTDETR, and VM-RTDETR models.

Figure 15.

Comparison curves of and values between RTDETR, M-RTDETR, V-RTDETR, and VM-RTDETR models.

Figure 16 compares the detection boxes and detection accuracy of four models, RTDETR-R50, M-RTDETR, RTDETR-VSSD, and VM-RTDETR. As clearly shown in the first column of images, VM-RTDETR successfully detected pigs in the upper-left area that were missed by RTDETR-R50, while achieving higher detection accuracy compared to other models. In the second column, the original model failed to detect the seven overlapping pigs at the top, whereas VM-RTDETR accurately identified all pigs under occlusion. In the third column, VM-RTDETR detected the top pig under challenging lighting conditions, while other models failed, demonstrating superior detection accuracy and significantly reducing the missed detection rate. These comparative results visually corroborate the quantitative performance gains reported in Table 2 and Table 5, demonstrating VM-RTDETR’s practical advantages in handling real-world complexities such as occlusion, dense grouping, and lighting variations.

Figure 16.

Comparison of detection boxes and accuracy of RTDETR, M-RTDETR, V-RTDETR, and VM-RTDETR models.

The superior performance of the VM-RTDETR model stems not only from the independent enhancements of its core components but, more critically, from their effective synergy. Specifically, the VSSD backbone establishes a comprehensive global contextual understanding of the image, while the M-Encoder module captures fine-grained local details through its multi-scale convolutional architecture. The subsequent fusion of these complementary representations, integrating high-level semantics with low-level spatial details, enables a more discriminative and robust feature representation. This synergistic design empowers the model to maintain high accuracy under challenging conditions, including illumination variations, significant scale differences, orientational diversity, and partial occlusions.

4. Discussion

This study proposes VM-RTDETR, a model that achieves significant performance gains in pig detection by synergistically enhancing global context modeling and multi-scale local feature extraction. The ablation studies confirm that the VSSD backbone and M-Encoder module are mutually reinforcing. The comparative analysis further elucidates the source of VM-RTDETR’s advantages. When compared to convolution-heavy architectures like the YOLO series [12,27], our model exhibits superior capability in capturing global contextual information, thanks to the VSSD module. Conversely, against the original RTDETR baseline [18], VM-RTDETR demonstrates a significantly enhanced capacity for multi-scale feature extraction due to the M-Encoder. This dual optimization of both global and local feature pathways distinguishes our approach and underpins its state-of-the-art performance.

Notwithstanding these promising results, we acknowledge certain limitations that provide valuable directions for future research. Firstly, the current study is constrained by its dataset, which was collected from a single commercial indoor farm in Shanxi Province using a fixed overhead-ish camera angle and primarily consists of grower-finisher pigs. This limits the model’s validated performance for other production systems (e.g., outdoor), geographical locations, camera viewpoints, or distinct animal classes like piglets. Secondly, while VM-RTDETR demonstrates excellent performance on pig detection, its scalability to multi-category livestock detection remains to be verified. The model’s generalization capability across species with diverse morphological characteristics (e.g., cattle, sheep, or poultry) requires further investigation through cross-species validation. Thirdly, although our model shows improved computational efficiency compared to the baseline R50-RTDETR, its practical deployment on resource-constrained edge devices commonly used in farm environments warrants additional optimization. Future work should explore model compression techniques and specialized optimization for edge deployment. Fourthly, while the current model exhibits robustness to environmental variations within our dataset, its performance under more extreme conditions—such as severe weather, drastically different farm architectures, or highly challenging lighting situations—needs further validation. To address these limitations, future work will prioritize expanding the dataset’s diversity in terms of farm types, locations, viewpoints, and animal categories. Furthermore, investigating multi-modal sensing approaches, particularly the fusion of RGB with thermal imaging, represents a promising research direction to enhance model robustness in adverse conditions.

Future work will therefore focus on three key aspects: cross-species generalization to extend the model’s applicability beyond porcine detection, efficient edge deployment to enable real-world implementation, and multi-modal robustness enhancement to ensure reliable performance across diverse environmental conditions. Through these investigations, we aim to transition VM-RTDETR from a powerful proof-of-concept to a versatile and practical solution for intelligent livestock farming.

5. Conclusions

This paper proposes a novel pig detection model, VM-RTDETR, to address the critical challenges of global feature capture and multi-scale feature extraction in pig detection. By integrating the innovative VSSD module into the backbone network, our model utilizes a Non-Causal State-Space Duality mechanism to overcome the causal constraints of traditional state-space models, enabling effective modeling of long-range dependencies within images and significantly enhancing the extraction of global structural features of pigs in complex farming environments. Furthermore, the novel Multi-Scale Efficient Hybrid Encoder M-Encoder employs parallel multi-scale convolutional kernels to simultaneously capture local detailed features and global contour information of pigs. This design effectively alleviates the problem of insufficient multi-scale feature extraction caused by varying observation distances and individual size differences among pigs. Compared to traditional models, VM-RTDETR demonstrates significant performance improvements in key metrics: increased by 2.35% to 10.93%, improved by 0.63% to 2.82%, and rose by 2.76% to 16.36%. Therefore, the experimental results show that VM-RTDETR provides an efficient and accurate monitoring method for intelligent breeding in animal husbandry.

Author Contributions

Conceptualization, W.H. and S.-A.X.; Methodology, W.H. and S.-A.X.; Software, W.H., M.H. and Y.L.; Validation, W.H., S.-A.X., M.H. and H.S.; Formal Analysis, S.-A.X., Y.L. and H.L.; Investigation, F.L. and H.L.; Data Curation, S.-A.X. and H.S.; Writing—Original Draft Preparation, W.H.; Writing—Review and Editing, W.H., S.-A.X. and H.S.; Visualization, S.-A.X. and H.L.; Supervision, W.H., M.H., Y.L. and F.L.; Project Administration, F.L.; Funding Acquisition, W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the GHfund D [ghfund202407042032]; Shanxi Province Basic Research Program [202203021212444]; Shanxi Agricultural University Science and Technology Innovation Enhancement Project [CXGC2023045]; Key Research and Development Program of Zhejiang Province under Grants 2024C01104 and 2024C01026; Shanxi Agricultural University Academic Restoration Research Project [2020xshf38]; Young and Middle-aged Top-notch Innovative Talent Cultivation Program of the Software College, Shanxi Agricultural University [SXAUKY2024005]; Teaching Reform Project of Shanxi Agricultural University [JG-202523]; and Shanxi Postgraduate Education and Teaching Reform Project Fund [2025YZLJC039].

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of Institutional Animal Care (SXAU-EAW-2022P.OP.011022001, date of approval 23 November 2022).

Informed Consent Statement

Informed consent was obtained from the owner of the commercial pig farm for the use of the farm’s data and facilities in this study. A blank copy of the consent form is available.

Data Availability Statement

The dataset was developed by our research team and will be made publicly accessible upon reasonable request.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rowe, E.; Dawkins, M.S.; Gebhardt-Henrich, S.G. A systematic review of precision livestock farming in the poultry sector: Is technology focussed on improving bird welfare? Animals 2019, 9, 614. [Google Scholar] [CrossRef] [PubMed]

- Okinda, C.; Nyalala, I.; Korohou, T.; Okinda, C.; Wang, J.; Achieng, T.; Wamalwa, P.; Mang, T.; Shen, M. A review on computer vision systems in monitoring of poultry: A welfare perspective. Artif. Intell. Agric. 2020, 4, 184–208. [Google Scholar] [CrossRef]

- Lee, S.; Ahn, H.; Seo, J.; Chung, Y.; Park, D.; Pan, S. Practical monitoring of undergrown pigs for iot-based large-scale smart farm. IEEE Access 2019, 7, 173796–173810. [Google Scholar] [CrossRef]

- Plà-Aragonès, L.M. The evolution of dss in the pig industry and future perspectives. In EURO Working Group on DSS: A Tour of the DSS Developments Over the Last 30 Years; Springer: Berlin/Heidelberg, Germany, 2021; pp. 299–323. [Google Scholar]

- Elizar, E.; Zulkifley, M.A.; Muharar, R.; Zaman, M.H.M.; Mustaza, S.M. A review on multiscale-deep-learning applications. Sensors 2022, 22, 7384. [Google Scholar] [CrossRef] [PubMed]

- Jiao, L.; Wang, M.; Liu, X.; Li, L.; Liu, F.; Feng, Z.; Yang, S.; Hou, B. Multiscale deep learning for detection and recognition: A comprehensive survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 5900–5920. [Google Scholar] [CrossRef] [PubMed]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Yang, Q.; Xiao, D.; Lin, S. Feeding behavior recognition for group-housed pigs with the faster r-cnn. Comput. Electron. Agric. 2018, 155, 453–460. [Google Scholar] [CrossRef]

- Tu, S.; Yuan, W.; Liang, Y.; Wang, F.; Wan, H. Automatic detection and segmentation for group-housed pigs based on pigms r-cnn. Sensors 2021, 21, 3251. [Google Scholar] [CrossRef] [PubMed]

- Hosain, M.T.; Zaman, A.; Abir, M.R.; Akter, S.; Mursalin, S.; Khan, S.S. Synchronizing object detection: Applications, advancements and existing challenges. IEEE Access 2024, 12, 154129–154167. [Google Scholar] [CrossRef]

- Shen, M.; Tai, M.; Cedric, O. Real-time detection method of newborn piglets based on deep convolution neural network [j/ol]. Trans. Chin. Soc. Agric. Mach. 2019, 50, 270–279. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Peng, N.; Li, F.; Luo, X. Plm-yolov5: Improved yolov5 for pig detection in livestock monitoring. In Proceedings of the 2024 International Conference on Intelligent Robotics and Automatic Control (IRAC), Guangzhou, China, 29 November–1 December 2024; pp. 619–625. [Google Scholar]

- Li, G.; Shi, G.; Jiao, J. Yolov5-kcb: A new method for individual pig detection using optimized k-means, ca attention mechanism and a bi-directional feature pyramid network. Sensors 2023, 23, 5242. [Google Scholar] [CrossRef] [PubMed]

- Huang, M.; Li, L.; Hu, H.; Liu, Y.; Yao, Y.; Song, R. Iat-yolo: A black pig detection model for use in low-light environments. In Proceedings of the 2024 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Zhuhai, China, 22–24 November 2024; pp. 1–5. [Google Scholar]

- Liao, Y.; Qiu, Y.; Liu, B.; Qin, Y.; Wang, Y.; Wu, Z.; Xu, L.; Feng, A. Yolov8a-sd: A segmentation-detection algorithm for overlooking scenes in pig farms. Animals 2025, 15, 1000. [Google Scholar] [CrossRef] [PubMed]

- He, P.; Zhao, S.; Pan, P.; Zhou, G.; Zhang, J. Pdc-yolo: A network for pig detection under complex conditions for counting purposes. Agriculture 2024, 14, 1807. [Google Scholar] [CrossRef]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11. [Google Scholar]

- Chen, Y.; Wang, Y.; Xiong, S.; Lu, X.; Zhu, X.X.; Mou, L. Integrating detailed features and global contexts for semantic segmentation in ultrahigh-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14. [Google Scholar] [CrossRef]

- Li, Z.; Hu, J.; Wu, K.; Miao, J.; Zhao, Z.; Wu, J. Local feature acquisition and global context understanding network for very high-resolution land cover classification. Sci. Rep. 2024, 14, 12597. [Google Scholar] [CrossRef] [PubMed]

- Shi, Y.; Dong, M.; Li, M.; Xu, C. Vssd: Vision mamba with non-causal state space duality. arXiv 2024, arXiv:2407.18559. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063. [Google Scholar]

- Dao, T.; Gu, A. Transformers are ssms: Generalized models and efficient algorithms through structured state space duality. arXiv 2024, arXiv:2405.21060. [Google Scholar] [CrossRef]

- Yin, D.; Hu, L.; Li, B.; Zhang, Y.; Yang, X. 5%> 100%: Breaking performance shackles of full fine-tuning on visual recognition tasks. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 20071–20081. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).