1. Introduction

In modern animal husbandry, the swine industry is a critical pillar of the global food supply and agricultural economy [1]. A pig’s body weight is a vital physiological metric linked to growth rate, feed conversion efficiency, nutritional requirements, and overall health [2]. Therefore, achieving accurate, unobtrusive, real-time monitoring of pig body weight has immense practical value. However, traditional methods for measuring pig weight primarily rely on contact-based mechanical or electronic scales. These approaches not only require considerable labor for herding and weighing, but also readily induce stress responses in the animals. With rapid advances in computer vision and artificial intelligence, image-based, contactless livestock weight estimation has emerged as a highly promising research direction. This approach substantially reduces disturbance and stress to the animals, allowing measurements in a more natural state, while providing data with far greater real-time continuity compared to conventional methods.

In current studies, three primary approaches—3D point clouds, depth maps, and 2D RGB images—are used to extract features from pigs, which are then combined with machine learning or deep learning models to estimate body weight.

3D point-cloud–based techniques capture detailed morphological features of pigs and provide richer body-size parameters; these methods are highly robust to complex surface geometry and partial occlusions and can achieve high-precision measurements in dynamic environments, thereby substantially improving weight-estimation accuracy [3]. He et al. [4] employed 3D imaging and a regression network for contactless pig weight measurement; their study introduced an image-enhancement preprocessing pipeline and a BotNet-based regression network to accurately predict pig body weight. Li et al. [5] conducted experiments using morphometric measurements from 50 pigs as predictor variables in regression models, and their results demonstrated the accuracy and reliability of the Kinect v2 sensor for capturing body dimensions and estimating weight. Nguyen et al. [6] used a handheld, portable RGB-D imaging system to generate point clouds for each pig and estimate weight, subsequently comparing the performance of machine learning and deep learning models. Kwon et al. [7] proposed a deep-learning–based approach to rapidly reconstruct mesh models from pig point-cloud data and extract various measurements for real-time weight estimation, and they developed DNN models for weight prediction. Selle et al. [8] presented a 3D data-modeling method for swine production; by constructing a statistical shape model they quantitatively and visually analyzed variations in body shape, morphology, and posture, and using linear regression with volume as the sole predictor they achieved accurate weight prediction. However, 3D point cloud devices are relatively expensive, and the acquisition and preprocessing of point cloud data are more complex, requiring operations such as filtering, registration, and denoising, and impose substantial computational demands, which limits their suitability for large-scale, low-cost field deployment.

A depth camera can capture scene depth information in a single exposure, thereby generating a depth map corresponding to the RGB image. Condotta et al. [9] utilized depth images to predict the weight of live animals, employing a linear equation for estimation. Cang et al. [10] proposed an intelligent method for pig weight estimation using deep learning; they designed a deep neural network that takes a top-view depth image of a pig’s back as input and outputs the estimated weight. Fernandes et al. [11] developed a system that uses a depth camera to acquire pig body measurements and automatically predict body weight. Na et al. [12] developed a Raspberry Pi–based pig weight prediction system, in which a Raspberry Pi module segments pigs from captured depth images and extracts features from the segmented images for weight prediction. However, depth cameras have limited ranging accuracy and effective measurement range; when the pig’s surface texture is uniform or highly reflective, depth data are prone to missing values or noise, necessitating interpolation and depth-map restoration, which increases algorithmic complexity.

Weight estimation methods based on conventional planar RGB images employ deep learning or traditional image processing techniques to extract contour area, shape features, and color–texture information from top-view or side-view images of pigs. These methods require minimal equipment (only a standard camera), offering low cost and ease of large-scale deployment. Kaewtapee et al. [13] captured dorsal images of pigs using a camera and developed a pig weight estimation model based on regression analysis and artificial neural networks (ANN). Banhazi et al. [14] developed a single-camera system to detect pig body width, length, and area, and estimated weight using these parameters. Thapar et al. [15] acquired top-view and side-view images of pigs to measure body dimensions; the technique was specifically applied to Ghoongroo pigs, successfully predicting their body weights. da et al. [16] presented a method for pig weight prediction using 2D images in conjunction with computer vision and machine learning algorithms. They developed a multivariate linear regression model capable of automatically extracting morphometric data from images to predict pig body weight. Wan et al. [17] employed a monocular vision approach based on an improved EfficientVit-C model, integrating image segmentation, depth estimation, and an adaptive regression network to achieve rapid, accurate, and non-invasive pig weight estimation. Ji et al. [18] proposed a machine learning–based method for real-time pig weight estimation by extracting image features; the approach maintains high accuracy while reducing computational demands through on-the-fly image segmentation for feature extraction. However, pure 2D images cannot directly capture depth information and are highly sensitive to changes in lighting, background interference, and variations in pig posture, often requiring strict imaging conditions and calibration procedures; otherwise, feature extraction may become unstable, thereby affecting the accuracy of weight estimation.

The most prominent characteristics of traditional machine learning methods are their simplicity, broad applicability, and ease of use. With the advancement of mathematical regression techniques, feature parameters have evolved from one-dimensional representations to multidimensional forms. Integrating machine learning methods into the regression prediction process can significantly enhance prediction accuracy. Compared with traditional machine learning, deep learning algorithms can accurately and efficiently extract high-value features from high-dimensional and complex data, potentially yielding more accurate and reliable results [19].

Building upon the aforementioned studies and considering the practical requirements of agricultural engineering, both 3D point cloud and depth image methods, although demonstrating excellent performance, suffer from high equipment costs, stringent constraints on data acquisition environments, and significant computational resource demands. These limitations hinder their applicability in capturing pigs in their most natural states within complex environments and make lightweight deployment challenging. Therefore, this study adopts standard planar RGB images for predicting pig body weight. Using RGB images eliminates the need for expensive acquisition devices, simplifies data processing, ensures high data availability, and results in a low proportion of noisy data, thereby facilitating practical application in agricultural engineering. This study aims to address the following issues. In previous research utilizing planar RGB images, the prevailing approach has been a “one-step” strategy, in which images are directly subjected to recognition or segmentation without additional preprocessing. Moreover, the employed methods are relatively outdated, resulting in limited predictive accuracy [20,21]. In contrast, certain methods that achieve higher accuracy often do so by constraining the background environment and the posture of pigs [22], which reduces robustness and transferability. To overcome these limitations, this study proposes a “two-step” image processing pipeline: first, the background is removed to eliminate irrelevant interference, and subsequently, a state-of-the-art instance segmentation model is applied to the target. In addition, camera height is incorporated as an independent feature, compensating for the lack of depth information in planar RGB images. Furthermore, a novel feature parameter, “body curvature,” is introduced to characterize pig posture; its interaction with other features enhances both accuracy and robustness. Finally, eight mainstream models, including traditional machine learning and deep learning approaches, are systematically compared, and the best-performing model for this task is identified.

The contributions of this work are as follows:

Utilizing the publicly available dataset provided by [18], the study enables easy reproducibility and comparison, breaking the limitation of widespread reliance on proprietary datasets in the field of automatic livestock weight estimation.

Innovatively integrating the latest high-resolution segmentation network (BiRefNet) [23] with the instance segmentation model (YOLOv11-seg), this approach enables precise extraction of the pig’s dorsal region under free-moving conditions in complex scenes without confining the animal to a fixed area.

Based on previous studies and our analytical discussions, a total of 17 relevant features were identified and validated through ablation experiments. This not only enriches the feature set but also provides valuable references for future research in this field.

A comparative analysis of eight mainstream models, encompassing both traditional machine learning and deep learning methods, ultimately confirms the superiority of ensemble tree models for this task.

This paper is organized as follows. Section 2 describes the dataset, presents the proposed method, analyzes the extracted features, and discusses the models. Section 3 presents the experimental results. Section 4 discusses these results and compares them with those of other studies. Section 5 concludes the paper.

3. Experiments and Results

In this experiment, we used a laptop equipped with a 2.10 GHz Intel(R) Core(TM) i7-14700HX processor, 32 GB of DDR5 6000 MHz RAM, and an NVIDIA GeForce RTX 4070 Laptop GPU, running Windows 11 Home Edition 23H2. The experimental setup is cost-effective and readily accessible, making it suitable for practical deployment in small- to medium-sized farms and other agricultural engineering contexts.

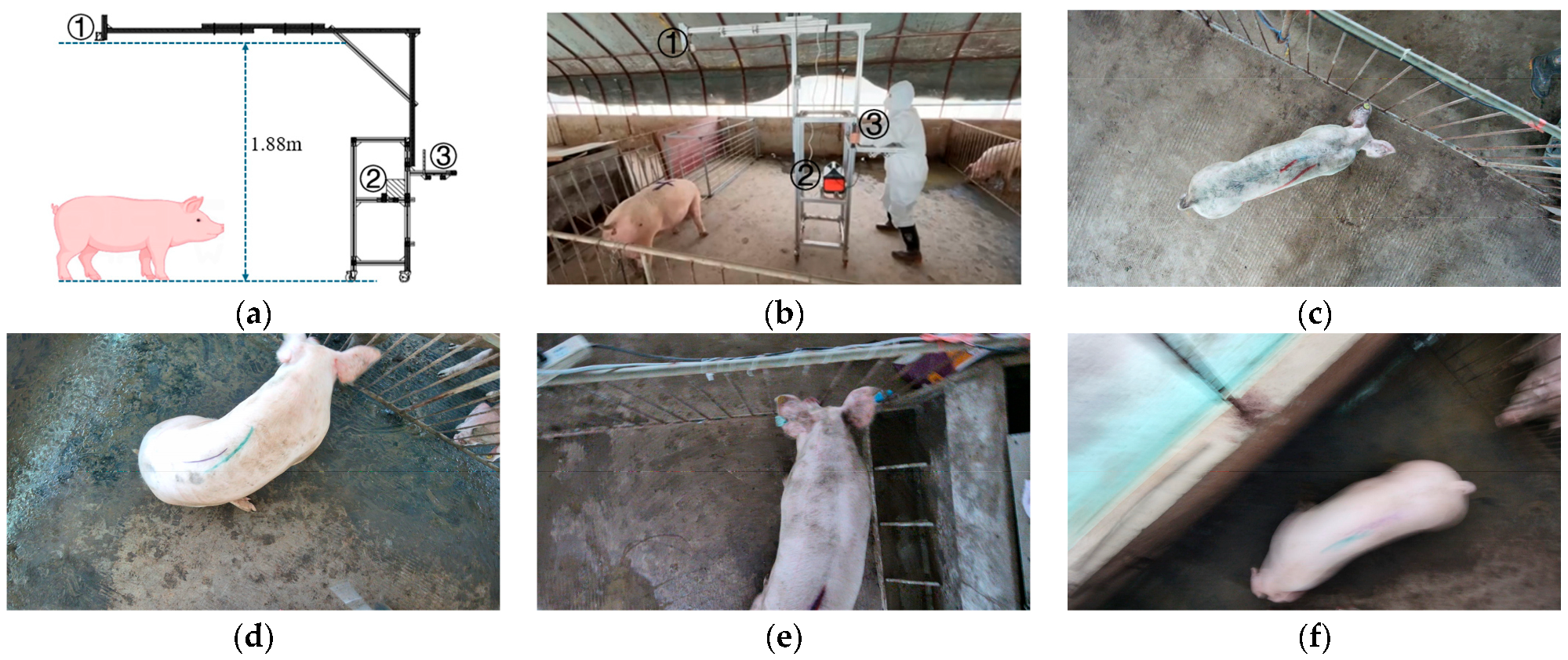

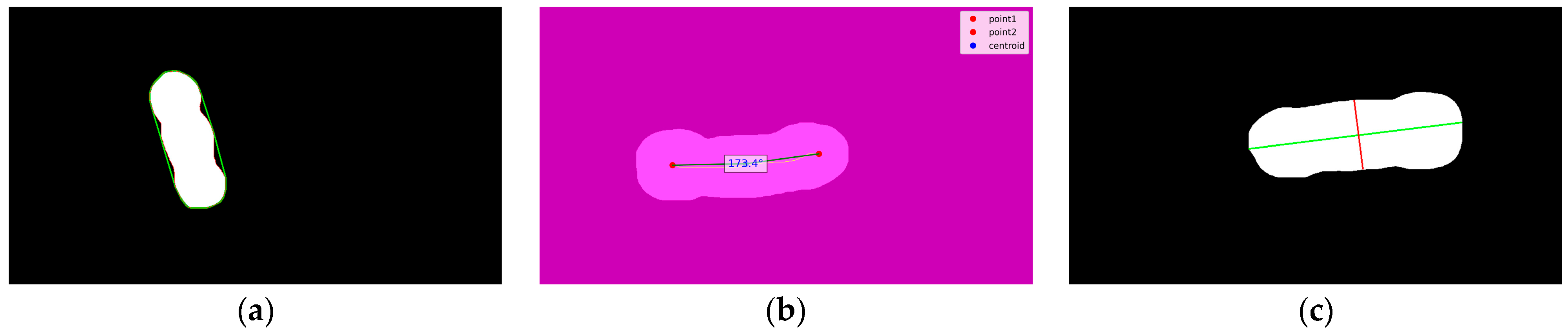

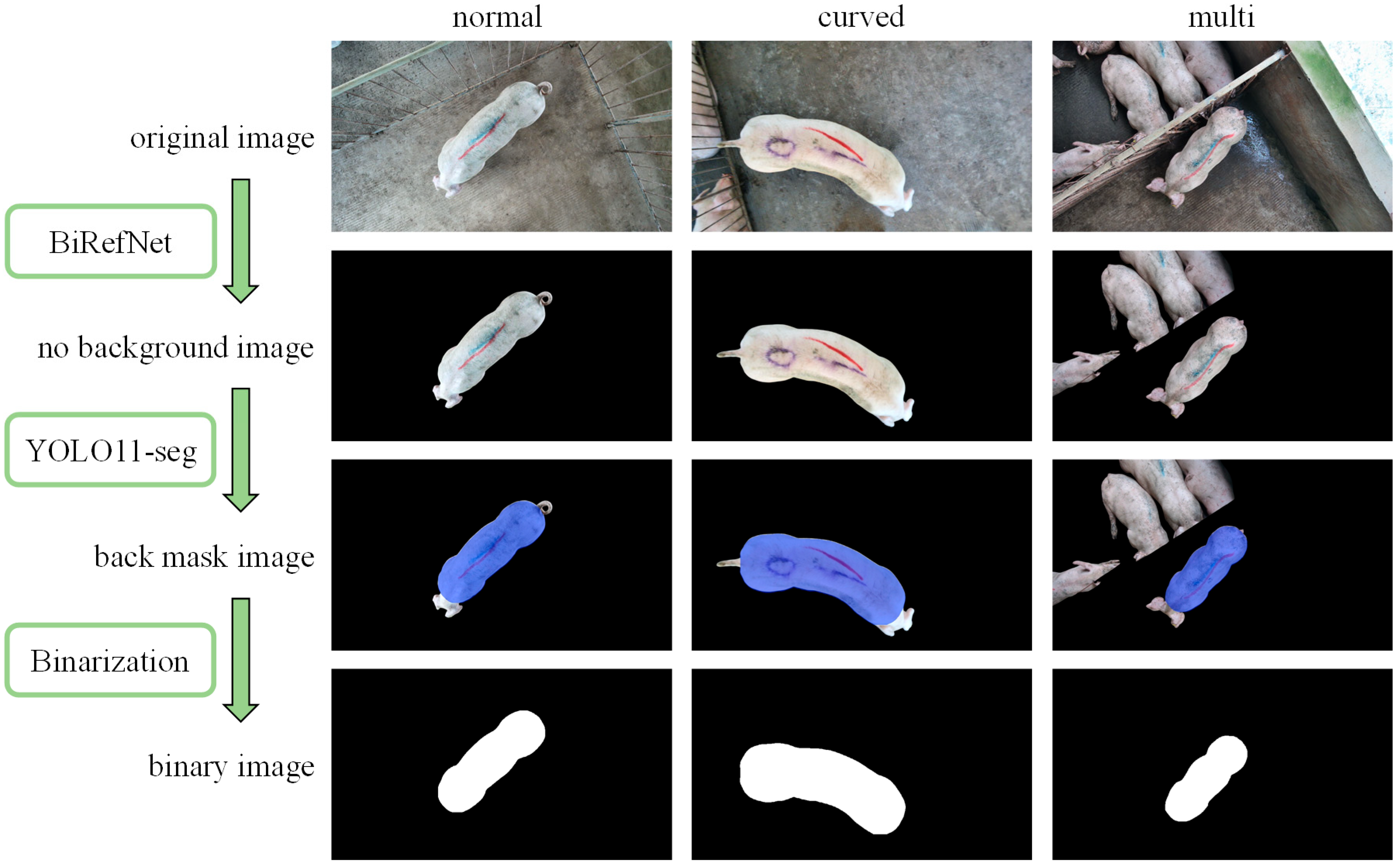

First, the BiRefNet model pre-trained on the DIS dataset was applied directly to remove the background from the original images. Because the pre-trained model performs well in complex, fine-grained scenes and produced satisfactory segmentation results in this study, no additional training was necessary. Subsequently, 1500 background-removed foreground images were randomly selected for manual annotation of the back region and split into training and validation sets at an 8:2 ratio. The YOLOv11-seg framework was then employed for back region learning and segmentation. The training was conducted in a Python 3.8 and PyTorch 2.4.1 environment, using the official YOLOv11-seg pre-trained model, with an input resolution of 960 × 540, 100 training epochs, the optimizer set to “auto,” and all other parameters kept at default values. Upon completion of training, the back segmentation achieved an mAP50-95 of 0.995 on the validation set, which satisfied the requirements of this experiment. The results of data processing are illustrated in Figure 4, while the YOLOv11-seg training results for back segmentation are presented in Figure 5.
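The random selection of 1500 images and the 8:2 training/validation split described above can be sketched as follows. This is a minimal illustration rather than the authors' script: the function name, the fixed random seed, and the file names are assumptions made for the example.

```python
import random


def sample_and_split(paths, n_samples=1500, train_ratio=0.8, seed=0):
    """Randomly pick n_samples items, then split them into train/val lists."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatability
    chosen = rng.sample(list(paths), n_samples)
    n_train = int(round(n_samples * train_ratio))
    return chosen[:n_train], chosen[n_train:]


# Hypothetical file names standing in for the background-removed images.
all_images = [f"pig_{i:05d}.png" for i in range(5000)]
train_set, val_set = sample_and_split(all_images)
print(len(train_set), len(val_set))
```

Sampling without replacement guarantees that no image appears in both subsets.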

3.1. Evaluation Metrics

In this experiment, the evaluation metrics selected were the Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and the coefficient of determination (R²).

MAE: Intuitively reflects the average deviation between predicted and actual values, using the same units and being easy to interpret. The calculation of MAE is given in Formula (14).

MSE: Assigns greater penalty to larger errors, helping the model focus on reducing substantial deviations. The calculation of MSE is given in Formula (15).

RMSE: Shares the same units as the original variables, making it easier to interpret compared to MSE. The calculation of RMSE is given in Formula (16).

R²: Indicates the proportion of variance explained by the model. It takes values in (−∞, 1], with values closer to 1 indicating a better fit. The calculation of R² is given in Formula (17).
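For reference, the four metrics can be computed with the standard textbook definitions, as in the sketch below. The function name and the example weights are illustrative assumptions; the values are not taken from the dataset.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R^2 for two equal-length sequences."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


# Made-up body weights in kg, used only to exercise the function.
y_true = [50.0, 80.0, 110.0, 140.0]
y_pred = [52.0, 78.0, 113.0, 139.0]
metrics = regression_metrics(y_true, y_pred)
print(metrics)
```

Note that MAE and RMSE are in kg, whereas MSE is in kg².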

3.2. Experimental Results

To comprehensively evaluate the effectiveness of the feature set proposed in this study, we compared eight mainstream regression models. All models were trained, validated, and tested on the same dataset partitioned in a 6:2:2 ratio, with Grid Search employed to obtain optimal hyperparameters. The performance evaluation results of these models on the test set are shown in Table 1.
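The 6:2:2 partition and the grid search can be illustrated with a small, self-contained sketch. It is a sketch under stated assumptions, not the authors' pipeline: the toy one-parameter ridge model, the `lam` grid, the synthetic data, and the choice to score each candidate on the validation split (rather than by cross-validation) are all hypothetical.

```python
import itertools
import random


def split_622(rows, seed=0):
    """Shuffle rows and split them 60% / 20% / 20%."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = len(rows) * 6 // 10
    n_val = len(rows) * 2 // 10
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]


def fit_ridge_slope(train, lam):
    """Toy model: slope of y = w * x with an L2 penalty lam on w."""
    sxy = sum(x * y for x, y in train)
    sxx = sum(x * x for x, _ in train)
    return sxy / (sxx + lam)


def mae(slope, rows):
    return sum(abs(y - slope * x) for x, y in rows) / len(rows)


def grid_search(param_grid, train, val):
    """Try every parameter combination; keep the one with the lowest val MAE."""
    names = sorted(param_grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(param_grid[k] for k in names)):
        params = dict(zip(names, values))
        score = mae(fit_ridge_slope(train, **params), val)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Synthetic data: y = 2x exactly, so the unpenalised model should win.
data = [(float(x), 2.0 * x) for x in range(1, 101)]
train, val, test = split_622(data)
best_params, best_score = grid_search({"lam": [0.0, 10.0, 1000.0]}, train, val)
test_mae = mae(fit_ridge_slope(train, **best_params), test)
print(best_params, best_score, test_mae)
```

The test split is touched only once, after the hyperparameters have been fixed, so the reported test error is not influenced by the search.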

The experimental results clearly demonstrate that ensemble tree-based models, such as Random Forest, XGBoost, and LightGBM, as well as deep learning models specifically designed for tabular data, like TabNet, significantly outperform traditional linear regression and support vector machine (SVM) models across all evaluation metrics. This indicates the existence of a complex nonlinear relationship between the phenotypic features extracted from pig images and their body weight. The four best-performing models achieved comparable results. Among them, the TabNet model yielded the lowest MAE (3.7711 kg), while the XGBoost model achieved the lowest MSE (27.4287 kg²) and RMSE (5.2372 kg), as well as the highest R² (0.9814). Based on these outcomes, XGBoost was selected as the optimal model in this study. To further investigate its performance, we conducted visual analyses of feature importance, prediction results, and residual distribution, as shown in Figure 6.

3.3. Preserve the Original Body Shape of the Pig

To verify the hypothesis proposed in Section 2.2.2 that “removing the head and tail regions and retaining only the main body of the pig’s back can improve prediction accuracy,” we conducted a comparative experiment. In this experiment, we re-extracted all 17 features using mask images that included the pig’s complete contour and trained and evaluated them with the same 8 models. The experimental results on the test set are presented in Table 2.

The results indicate that when the head and tail regions are retained, all models exhibit a consistent decrease in performance. Taking the optimal XGBoost model as an example, its R² decreased from 0.9814 to 0.9757. We attribute this to the fact that the posture of the pig’s head, ears, and tail varies greatly and has weak correlation with body weight; their inclusion introduces substantial noise into feature extraction. For instance, features such as maximum contour curvature and convex hull area are heavily affected by head rotation or tail movement, thereby weakening their correlation with the pig’s core body shape. This comparative experiment supports the strategy of segmenting only the back region, as proposed in this study: it reduces interference from irrelevant variables and improves the accuracy and robustness of weight estimation.
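The sensitivity of the convex hull area to a protruding tail can be illustrated with a toy contour. The sketch below uses a standard monotone-chain convex hull and the shoelace formula; the rectangular "body" and the thin "tail" are hypothetical shapes chosen for easy arithmetic, not real pig contours.

```python
def cross(o, a, b):
    """z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula for a simple polygon given as ordered vertices."""
    total = 0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


# Hypothetical contours in pixels: a 100 x 40 body, then the same body
# with a thin 30 x 4 tail attached to the right-hand side.
body = [(0, 0), (100, 0), (100, 40), (0, 40)]
body_with_tail = [(0, 0), (100, 0), (100, 18), (130, 18),
                  (130, 22), (100, 22), (100, 40), (0, 40)]

for name, contour in (("body", body), ("body + tail", body_with_tail)):
    area = polygon_area(contour)
    hull_area = polygon_area(convex_hull(contour))
    print(name, area, hull_area)
```

In this toy case the tail adds 3% to the contour area (4000 to 4120 px²) but 16.5% to the convex hull area (4000 to 4660 px²), which is the kind of posture-dependent inflation the paragraph above describes.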

3.4. Removing Low-Importance Features

To investigate the contribution of each feature and validate the completeness of the selected 17 features, we designed further ablation experiments based on the feature importance ranking shown in Figure 6a. First, we removed the 8 least important features and retained only the top 9 features for model training, with the results on the test set presented in Table 3.

The experimental results demonstrate that, after removing these low-importance features, the performance of most models declined slightly. This trend supports our hypothesis that, although some features have relatively low importance scores, they play a fine-tuning role in the final prediction by providing subtle information that highly important features fail to capture. Notably, there is one exception: when the eight least important features were removed, the performance of the support vector regression (SVR) model improved. We suggest three possible explanations. First, SVR, particularly with an RBF kernel, depends critically on Euclidean distances in feature space. Irrelevant or noisy features dilute pairwise distances and thus degrade the discriminative structure of the kernel matrix; removing such features can therefore restore the kernel’s sensitivity to meaningful signal and improve generalization. Second, SVR is vulnerable to the curse of dimensionality in low-sample regimes: eliminating noise dimensions reduces variance and can substantially improve out-of-sample performance. Third, features with measurement error or extreme values can adversely affect the effective kernel scale (gamma); after removing these features, previously chosen hyperparameters may become more appropriate for the reduced-dimensional space, yielding better performance.
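The first explanation, distance dilution in an RBF kernel, can be made concrete with a small numerical sketch. The feature vectors, the number of noise dimensions, and the value of `gamma` below are hypothetical and chosen only to show the effect; they are not taken from the experiments.

```python
import math


def rbf_kernel(x, y, gamma):
    """k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


GAMMA = 0.5  # hypothetical kernel scale

# Two samples that are nearly identical on the informative features.
a_info = [1.0, 0.2]
b_info = [1.1, 0.2]

# Eight hypothetical noise features on which the samples happen to differ.
a_noise = [0.0] * 8
b_noise = [1.0] * 8

k_clean = rbf_kernel(a_info, b_info, GAMMA)
k_noisy = rbf_kernel(a_info + a_noise, b_info + b_noise, GAMMA)
print(round(k_clean, 4), round(k_noisy, 4))
```

With the noise dimensions included, the kernel treats two samples that agree on the informative features as almost unrelated, so the regressor can no longer exploit their similarity.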

Subsequently, we conducted a more extreme test by retaining only the four most important features. This evaluation was carried out on the four best-performing models, as well as the model that showed improved performance after the removal of low-importance features in the previous experiment. The results on the test set are presented in Table 4.

The experimental results indicate that, whether for the models that originally achieved the best performance or for those that improved after the removal of low-importance features, retaining only the four most important features led to a decline in performance. This finding further validates our hypothesis that low-importance features play a critical fine-tuning role in prediction by providing subtle information that highly important features fail to capture. When only the high-importance features are preserved, the loss of such details results in reduced predictive performance.

3.5. Feature Correlation Analysis

To gain a deeper understanding of the relationships between each feature and body weight, as well as the interactions among features, we calculated the Pearson correlation coefficients between all 17 input features and the real_weight, with the results presented in Figure 7.
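The Pearson coefficient used here follows the standard definition and can be computed as in the sketch below. The small arrays are made-up values for illustration only; the feature names merely echo those in the text.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Made-up values: area grows with weight, while the third series decreases.
real_weight = [40.0, 80.0, 120.0, 160.0]
mask_area = [1000.0, 2000.0, 3000.0, 4000.0]
decreasing = [4.0, 3.0, 2.0, 1.0]

r_area = pearson_r(mask_area, real_weight)
r_dec = pearson_r(decreasing, real_weight)
print(r_area, r_dec)
```

Because the coefficient measures only linear association, a feature with a value near zero can still be informative to a nonlinear model.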

Features that directly reflect pig size, such as mask_area (0.95), Convex_Hull_Area (0.94), perimeter (0.94), longest (0.92), and shortest (0.92), exhibit very strong positive correlations with actual body weight, which aligns with intuitive expectations and serves as the cornerstone for weight prediction. Additionally, height (−0.51) shows a moderate negative correlation, further confirming the necessity of including camera height as a key calibration parameter. Features such as body_curve and dif/mask have weak direct linear correlations with actual body weight, but they show significant associations with other features (e.g., the correlation between body_curve and difference is −0.53). This validates the design rationale discussed in Section 2.2.4: these “correction features” are intended to quantify deviations in core size features caused by postural variations such as body curvature, thereby indirectly improving model accuracy. The outline_curve and Hu Moment series (Hu_1 to Hu_7) generally show low linear correlations with body weight. However, considering the feature importance analysis in Figure 6a, these features, particularly outline_curve, contribute substantially to the final model. This indicates that these features may capture nonlinear shape information related to body weight, which cannot be measured by linear correlation analysis but is crucial for tree-based models such as XGBoost.

In summary, the feature correlation analysis further validates the rationality of the feature engineering in this study: the selected 17 features form a multidimensional and information-complementary feature space that encompasses direct predictors, indirect correction features, and nonlinear supplementary features, laying a solid foundation for subsequent high-precision modeling.

4. Discussion

For the XGBoost model, which achieved the best performance in the experiments, we applied it to predict the outcomes on the designated test set. Among the 2222 test samples, the body weights ranged from 33.64 kg to 192.39 kg, covering a broad spectrum and avoiding a focus solely on any specific weight range.

For these 2222 samples, the average difference between the true and predicted weights was 3.93 kg, meeting the requirements for agricultural engineering applications. Specifically, 416 samples had a predicted weight within 1 kg of the true weight, 1546 samples within 5 kg, and 2115 samples within 10 kg. Meanwhile, the average ratio of the difference to the true weight across all samples was 0.044, with 443 samples showing a predicted weight within 1% of the true weight and 1627 samples within 5%.
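Tolerance counts of this kind can be reproduced from any pair of true and predicted weight lists, as in the following sketch. The function name, the default thresholds, and the four example values are assumptions for illustration; they are not the 2222 test samples.

```python
def error_summary(y_true, y_pred, abs_tols=(1.0, 5.0, 10.0), rel_tols=(0.01, 0.05)):
    """Mean absolute/relative error plus counts of samples within each tolerance."""
    abs_err = [abs(t - p) for t, p in zip(y_true, y_pred)]
    rel_err = [e / t for e, t in zip(abs_err, y_true)]
    n = len(y_true)
    return {
        "mean_abs_kg": sum(abs_err) / n,
        "mean_rel": sum(rel_err) / n,
        "within_kg": {tol: sum(e <= tol for e in abs_err) for tol in abs_tols},
        "within_rel": {tol: sum(r <= tol for r in rel_err) for tol in rel_tols},
    }


# Made-up weights in kg.
y_true = [50.0, 100.0, 150.0, 200.0]
y_pred = [50.4, 104.0, 158.0, 215.0]
summary = error_summary(y_true, y_pred)
print(summary)
```

Reporting both absolute and relative tolerances is useful because a 5 kg error matters far more for a 35 kg pig than for a 190 kg one.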

From Figure 6b, which shows the prediction results of the best-performing model, it can be seen that the predicted values exhibit a strong linear relationship with the true values, indicating a high degree of model fit. From the residual plot in Figure 6c, it is observed that the distribution of errors is not entirely random. In the regions where the actual weight is around 60 kg and above 170 kg, the residuals exhibit concentrated overestimation and underestimation, indicating that the model shows certain biases in weight estimation for pigs within these specific ranges. This phenomenon may suggest that the relationship between body shape features and weight varies subtly across different growth stages. This provides a direction for future work, such as developing stage-specific weight estimation models to further improve overall accuracy.

As shown in the feature importance plot in Figure 6a, the area of the back region accounts for an importance score of 0.474 in weight estimation. Therefore, the accuracy of calculating the back area directly affects the precision of weight prediction. The method proposed in this study, which combines a high-resolution segmentation network (BiRefNet) with an instance segmentation model (YOLOv11-seg), achieves an mAP50-95 of 0.995 for the back region on the validation set, which supports accurate weight prediction. It is also observed that camera height, short axis length, and convex hull area strongly influence the weight estimate. Due to perspective scaling, the camera height directly influences the captured area of the pig’s back, while the length of the short axis can indicate the pig’s fatness, with heavier pigs generally being fatter. The convex hull area additionally reflects the roundness of the pig’s body contour.
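The perspective-scaling effect can be illustrated with an idealized pinhole camera model, in which the projected area of a flat patch facing the camera falls off with the square of its distance. This is a sketch under strong assumptions (a flat back parallel to the image plane, no lens distortion, a hypothetical focal length and dorsal area); the study itself supplies camera height to the model as a feature and does not apply this correction explicitly.

```python
def apparent_area_px(real_area_m2, distance_m, focal_px):
    """Projected area (pixels^2) of a flat patch facing a pinhole camera."""
    scale = focal_px / distance_m  # pixels per metre at this distance
    return real_area_m2 * scale ** 2


FOCAL_PX = 1000.0  # hypothetical focal length expressed in pixels
BACK_AREA = 0.5    # hypothetical dorsal area in m^2

near = apparent_area_px(BACK_AREA, 2.0, FOCAL_PX)
far = apparent_area_px(BACK_AREA, 2.5, FOCAL_PX)
print(near, far, far / near)
```

Under these assumptions, raising the camera from 2.0 m to 2.5 m shrinks the same back to 64% of its former pixel area, which is why a pixel area alone cannot determine body size without some measure of camera distance.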

Considering the four parameters that have the greatest impact on the weight estimate, their relationships are not simply linear. For example, the relationship between camera height and back area requires complex mathematical functions to fit. Therefore, ensemble tree-based models (Random Forest, XGBoost, LightGBM) and the neural network-based TabNet model outperform traditional linear models overall. This provides a new direction for future research, suggesting that the adoption of such models may also yield favorable results in estimating the body weight of other livestock and poultry.

The performance of our method compared to other approaches using planar RGB images for pig weight estimation is shown in Table 5. First, compared with the article from which our dataset originates [18], under the same dataset conditions, our method achieves better MAE, MSE, RMSE, and R². Specifically, MAE, MSE, and RMSE decreased by 10.31%, 22.60%, and 11.89%, respectively, while R² increased by 1.70%, demonstrating the effectiveness of our BiRefNet + YOLOv11-seg image processing approach. Among the other compared models, three achieved higher R² values than our method. In the study [22], the proposed method requires pigs to pass through a narrow corridor for image collection. First, driving pigs through this corridor may induce stress responses. Additionally, the method discards images where the pigs’ bodies are slightly bent, keeping only fully straightened postures, which greatly increases the workload. In contrast, our approach does not require driving the pigs; data are collected while the pigs are in their most natural state, without imposing specific postures, resulting in higher robustness. In the study [35], the estimation is based on the weekly average weight per pen rather than individual pigs. Since pig weight trends follow a certain regular pattern, averaging the predicted weights per pen yields excellent results. In the study [36], a series of methods produced good results; however, the dataset was very small, with only 39 pigs concentrated between 104 kg and 138 kg, resulting in low model robustness and potential overfitting risks. In contrast, our study involves 124 pigs, with weights ranging from 33.1 kg to 192 kg, covering a wide range. This ensures high model robustness and strong resistance to overfitting.

Table 6 compares the performance of our planar RGB image-based pig weight estimation method with depth-image-based approaches. From the comparison, although our method does not reach the top performance reported, it consistently exceeds the average level. Additionally, our approach benefits from easy data collection, low cost, and high reproducibility, making it more practical for agricultural engineering applications.

In Table 7, we compare the performance of our planar RGB image-based pig weight estimation method with 3D point cloud-based approaches. Considering the differences in datasets, our method achieves performance nearly comparable to the best results in the table. Although 3D point cloud methods can capture additional features and parameters unavailable in planar RGB images, their data acquisition and preprocessing are relatively complex, requiring filtering, registration, and denoising operations, as well as stringent environmental conditions. This makes the collected samples susceptible to noise, potentially leading to biased data and suboptimal outcomes. In contrast, our method is more robust, resistant to interference, and yields more stable results.

This study also has certain limitations. The first limitation is related to the data. The pig images used in this study all come from a single breed; however, different breeds exhibit variations in body fat percentage, body shape, and other characteristics [51]. Using the same model to predict the weight of pigs from other breeds may result in poor performance and limited generalizability. The second limitation concerns posture. The system cannot automatically identify “dirty data” such as extremely twisted pigs or incomplete back regions, which were manually removed in this study. Failure to exclude such data could lead to poor prediction results. Next, environmental conditions pose a limitation. The pigs in the dataset were generally clean, resulting in high recognition accuracy. If pigs have mud on their backs or if lighting conditions vary drastically, the accuracy of segmentation and feature extraction could be adversely affected. Finally, regarding camera height, variations in this parameter in practical farming scenarios may pose challenges, potentially leading to a sudden drop in recognition accuracy.

Based on this, we have the following plans for future work. First, we plan to expand the dataset by collecting data from pigs of different breeds to enhance the generalizability of our study. Additionally, at the beginning of the processing pipeline, a lightweight classification network could first identify the breed, and then switch to the corresponding model, thereby eliminating manual intervention in subsequent steps. Second, we plan to add an automatic “dirty data” removal step after the BiRefNet process. For example, images with pig detection confidence below a certain threshold could be removed (addressing extreme distortions or blurred images), and images where pigs touch the frame could also be removed (addressing potential incomplete pig regions). Then, since a high-accuracy back region mask extraction model has already been trained, transfer learning using only a small amount of additional pig data from other environments would suffice to adapt the model. Also, we plan to deploy the model on edge computing devices for on-site farm testing to verify its real-time performance and stability. Finally, regarding the issue of camera height, we propose the following considerations. If there is a global change in camera height, the model can be updated by retraining with modified feature values to obtain new model weights. Furthermore, when sufficient data are available, camera height can be treated as a continuous rather than a discrete parameter in the model. This approach allows the model to effectively accommodate any variations in camera height, thereby improving its adaptability and robustness.
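The proposed automatic “dirty data” filter could take a form like the sketch below, which rejects a sample when the detection confidence is low or when the foreground mask touches the image border. This is a hypothetical illustration of the planned step, not an implemented component: the function names, the 0.5 threshold, and the tiny binary masks are assumptions.

```python
def touches_frame(mask):
    """True if any foreground (non-zero) pixel lies on the image border."""
    if any(mask[0]) or any(mask[-1]):
        return True
    return any(row[0] or row[-1] for row in mask)


def keep_sample(mask, confidence, min_conf=0.5):
    """Keep a sample only if it is confidently detected and fully in frame."""
    return confidence >= min_conf and not touches_frame(mask)


# Tiny binary masks (1 = pig, 0 = background) for illustration.
centered = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
cut_off = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0],
]

print(keep_sample(centered, 0.93))  # confident and fully in frame
print(keep_sample(centered, 0.30))  # low confidence
print(keep_sample(cut_off, 0.93))   # pig region reaches the right border
```

A border check of this kind is cheap enough to run on every frame, so it would not affect the real-time character of the pipeline.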

5. Conclusions

In this study, we propose a simple computer vision–based method for estimating pig body weight, allowing real-time weight measurement from top-view images of pigs in their normal state on farms, requiring only the recording of the camera height. First, we use BiRefNet to remove the background from the top-view pig images and then apply YOLOv11-seg to segment the pig’s back mask. From these mask images, we extract features including dorsal area, convex hull area, body curvature, contour perimeter, contour curvature, major axis, minor axis, and Hu Moments. Using these features for prediction, the XGBoost model achieves the best overall performance, with an MAE of 3.9350 kg, an MSE of 27.4287 kg², an RMSE of 5.2372 kg, and an R² of 0.9814. Compared with other methods on the same dataset, MAE, MSE, and RMSE were reduced by 10.31%, 22.60%, and 11.89%, respectively, while R² increased by 1.70%. Because our study relies solely on planar RGB images and employs only two simple networks for image processing alongside machine learning for weight prediction, the model architecture is compact, and both training and inference are computationally efficient. In summary, our method demonstrates good adaptability across pigs of different weights and can be easily and cost-effectively applied in practical agricultural settings.