1. Introduction

Broiler production plays a pivotal role in the global supply of animal protein. With continued population growth and accelerated urbanization, broiler chickens have become a primary source of animal protein because of their high productivity and low production cost [1]. The broiler industry not only contributes to food security and market supply but also plays an important role in promoting agricultural economic development and increasing farmers’ incomes [2,3]. In recent years, driven by rapid expansion of farming scale and growing demand for intelligent management, precision poultry farming has become a major development direction [4]. This approach integrates modern sensing and information technologies to enable automated and fine-grained monitoring of flocks, aiming to improve production efficiency while safeguarding animal welfare [5].

In precision farming scenarios, comprehensive collection of individual-level information supports the formulation of scientific breeding strategies, enables early disease warning, and effectively reduces systemic risks during production [4,6,7]. Precise detection of individual broilers within a flock is the essential first step for acquiring such detailed individual information [8]. Previous studies have shown that the facial region of chickens exhibits relatively stable texture and morphological patterns, making it a key anatomical site for individual identification and health monitoring [8,9]. Accordingly, chicken face detection not only provides a feasible pathway for identity recognition but also constitutes a core component of intelligent visual perception systems.

However, high-density rearing environments are often characterized by severe occlusion among individuals, frequent pose variation, and complex illumination conditions [10], which pose substantial challenges to chicken face detection [11,12]. At the same time, traditional manual observation and video replay methods are inefficient and costly, and they struggle to meet the real-time, large-scale processing requirements of modern farms [13]. These limitations have accelerated the adoption of deep learning-based object detection techniques for avian monitoring.

In the object detection field, the YOLO series has been widely applied to poultry monitoring because of its favorable trade-off between speed and accuracy [14,15]. Some studies have employed YOLO-based convolutional neural networks to detect broiler heads and to estimate feeding duration by detecting head entry into feeders [16]. Another study built a broiler leg disease detection model using YOLOv8 and demonstrated promising performance for early lesion diagnosis [17]. To address dense occlusion, researchers have proposed single-class dense detection networks such as YOLO-SDD to enhance robustness [11]. Other work has applied YOLOv5 to diseased bird detection and reported an accuracy of approximately 89.2% [18]. Nevertheless, these YOLO-based detectors typically rely on non-maximum suppression (NMS) for post-processing, which reduces inference speed and introduces additional hyperparameters (such as confidence and IoU thresholds), making the speed–accuracy trade-off unstable [14].
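To make the hyperparameter point concrete, here is a minimal greedy NMS sketch. It is a generic textbook version, not the post-processing of any particular YOLO release; `score_thr` and `iou_thr` are illustrative names for the two thresholds whose values decide which overlapping boxes survive.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               score_thr: float = 0.25, iou_thr: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then visit the rest by descending score.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap an already kept box too much.
        if all(iou(boxes[i], boxes[j]) < iou_thr for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Two strongly overlapping boxes (IoU = 80/120) plus one isolated box.
    boxes = [(0, 0, 10, 10), (2, 0, 12, 10), (30, 30, 40, 40)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores, iou_thr=0.5))  # [0, 2]
    print(greedy_nms(boxes, scores, iou_thr=0.7))  # [0, 1, 2]
```

In a dense flock, two adjacent heads can overlap as much as the pair above, so whether the second one is kept depends entirely on `iou_thr`.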

By contrast, DETR (DEtection TRansformer) employs a Transformer architecture and a set prediction mechanism to form an end-to-end detection pipeline, thereby eliminating the dependence on NMS [19]. However, although it removes post-processing, the standard DETR design incurs considerable computational overhead, and its inference speed often fails to meet real-time requirements [19]. To address this, RT-DETR retains the advantages of the DETR paradigm while introducing a convolutional backbone and an efficient hybrid encoder, achieving a more favorable balance between detection accuracy and speed [20].
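The set prediction mechanism removes NMS because, during training, each ground-truth object is assigned to exactly one query by bipartite (Hungarian) matching, so duplicate predictions are penalized as background instead of being filtered afterwards. The sketch below finds the optimal one-to-one assignment by brute force over permutations, which is only feasible for toy sizes; its cost (negative class score plus a weighted L1 box distance, with a hypothetical `l1_weight`) is a simplified stand-in for the DETR/RT-DETR matching cost, which also includes a GIoU term.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # normalized (cx, cy, w, h)


def match_cost(score: float, pred: Box, gt: Box, l1_weight: float = 5.0) -> float:
    """Simplified matching cost: reward confident queries, penalize box L1 distance."""
    l1 = sum(abs(p - g) for p, g in zip(pred, gt))
    return -score + l1_weight * l1


def one_to_one_match(scores: Sequence[float], preds: Sequence[Box],
                     gts: Sequence[Box]) -> List[Tuple[int, int]]:
    """Optimal (query, ground truth) pairs by exhaustive search.

    Assumes len(preds) >= len(gts); every unmatched query is trained as background.
    """
    best_cost, best = float("inf"), []
    for queries in permutations(range(len(preds)), len(gts)):
        cost = sum(match_cost(scores[q], preds[q], gts[g]) for g, q in enumerate(queries))
        if cost < best_cost:
            best_cost, best = cost, [(q, g) for g, q in enumerate(queries)]
    return best


if __name__ == "__main__":
    gts = [(0.2, 0.2, 0.1, 0.1), (0.7, 0.7, 0.1, 0.1)]
    preds = [(0.2, 0.2, 0.1, 0.1),   # query 0: on object 0
             (0.21, 0.2, 0.1, 0.1),  # query 1: near-duplicate of query 0
             (0.7, 0.7, 0.1, 0.1)]   # query 2: on object 1
    print(one_to_one_match([0.9, 0.85, 0.6], preds, gts))  # [(0, 0), (2, 1)]
```

The near-duplicate query 1 is left unmatched, so training pushes its score down; at inference no suppression step is needed. Real implementations use the Hungarian algorithm rather than enumeration.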

Although RT-DETR offers structural simplicity and global modeling capability, its original ResNet backbone has limited representational power for targets with highly similar structures and subtle texture variations, making it difficult to capture fine inter-individual differences [21]. In addition, its built-in Attention-based Intra-scale Feature Interaction (AIFI) module shows insufficient synergy between global modeling and local detail recovery in the chicken face detection context, and its normalization procedures are inefficient under parallel computation. These issues are accompanied by high computational and memory costs, loss of fine detail information, and inconsistent behavior across scales [22,23,24,25]. Meanwhile, the Varifocal Loss (VFL) used in RT-DETR is insensitive to low-quality matching samples, which results in insufficient discrimination between positive and negative samples and thereby limits the effectiveness of matching optimization and the speed of training convergence [26].

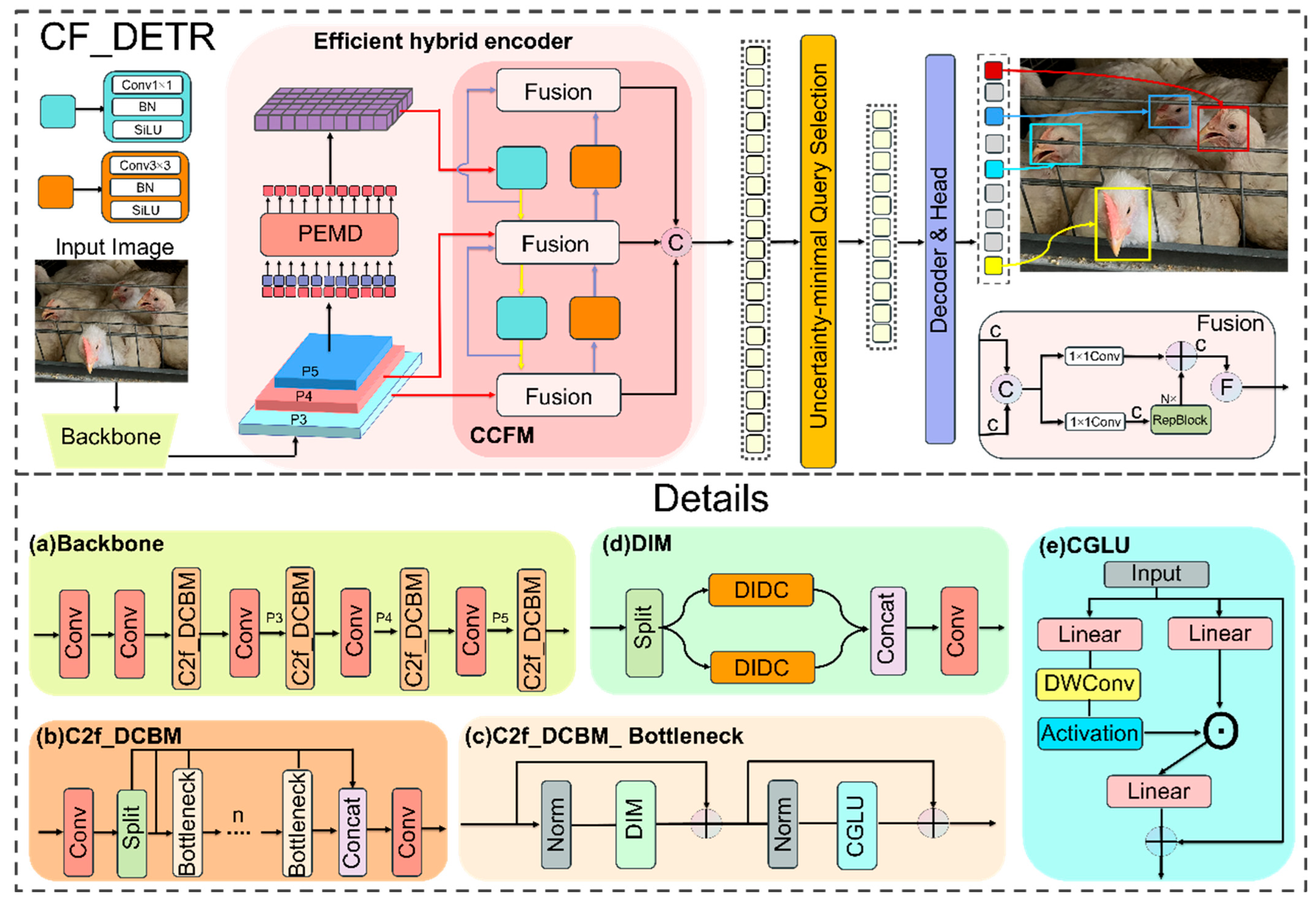

In summary, although RT-DETR offers the benefits of end-to-end detection, it still faces multiple bottlenecks in backbone design, encoding mechanisms, and loss formulation that hinder its direct application to chicken face detection, a task involving complex structural patterns and subtle textures. To meet the accuracy and real-time requirements of chicken face detection in complex farm environments, this paper proposes a systematic set of structural optimizations to the RT-DETR framework that target three critical aspects of the detection pipeline: feature extraction, information encoding, and loss design. By improving these components jointly, we construct an end-to-end detection model, CF-DETR, with enhanced structural adaptability and expressive capacity. The main contributions are summarized as follows:

- (i)

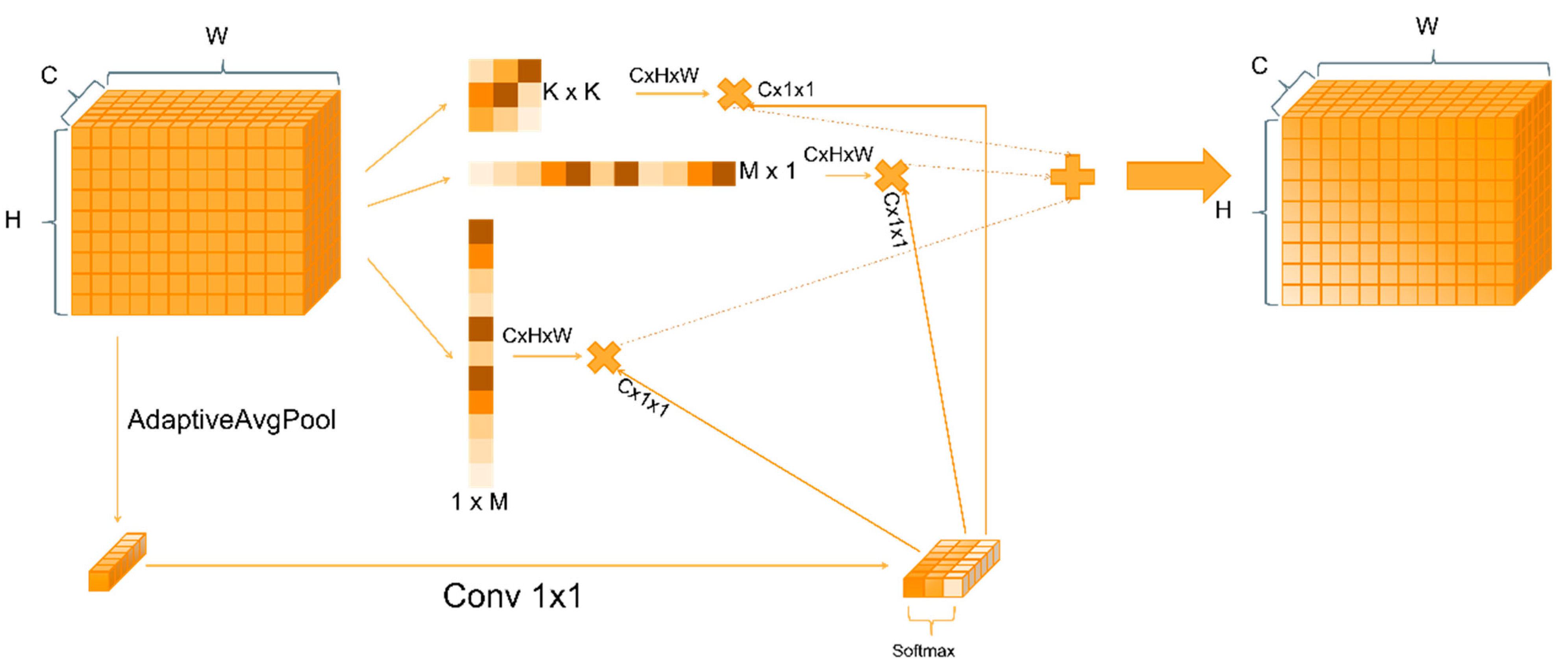

Dynamic Inception Depthwise Convolution DIDC. To overcome limitations of conventional backbones in representing diverse spatial structures, we design a convolutional operator based on multi path depthwise separable convolutions and a dynamic fusion mechanism to strengthen feature extraction under complex poses and occlusion.

- (ii)

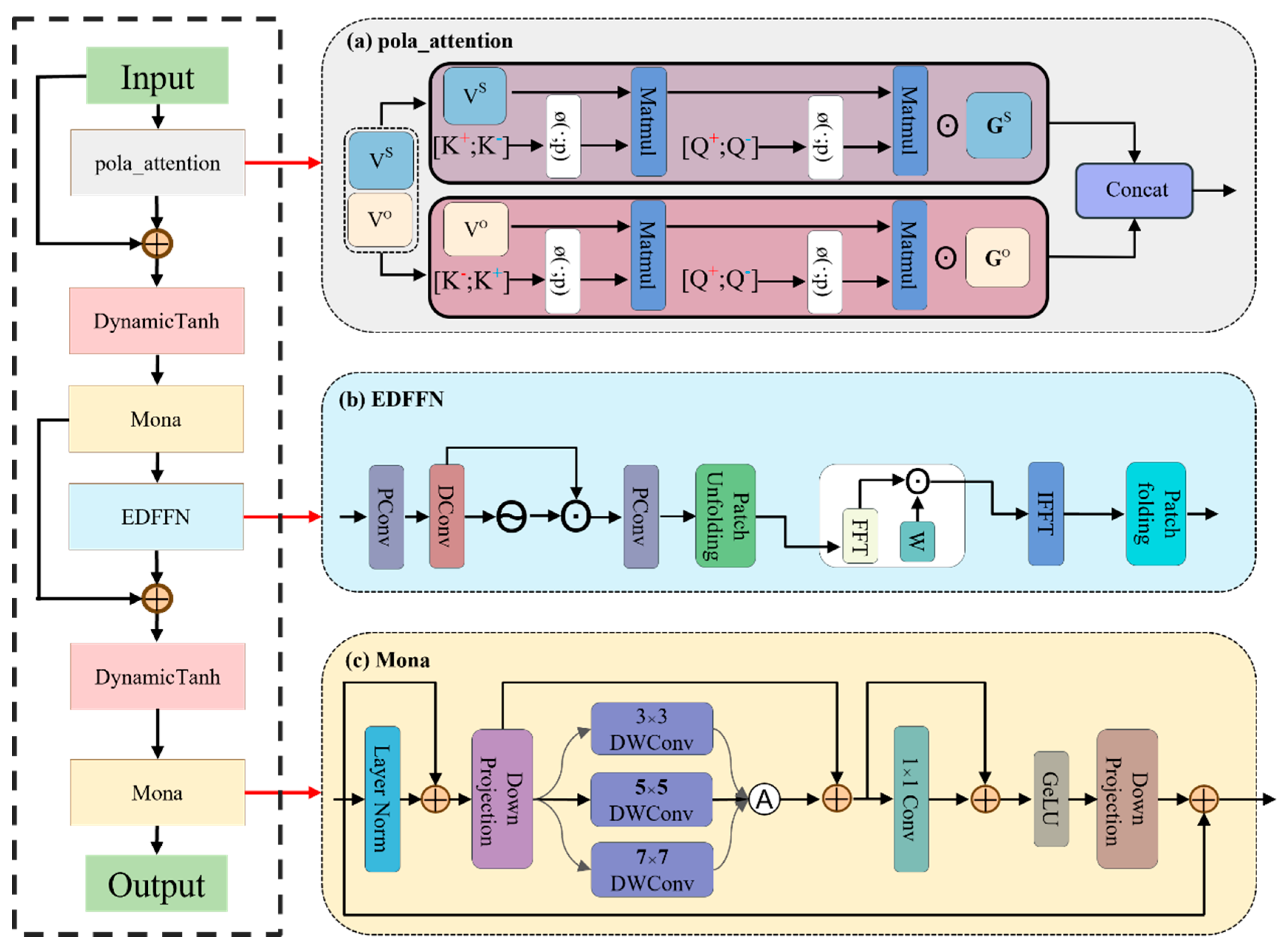

Polar Embedded Multi-scale Encoder PEMD. To address key issues of global modeling, normalization efficiency, multi-scale fusion, and detail compensation, we propose a structurally closed loop encoder module that improves coordination and stability in representing global context and local texture.

- (iii)

Matchability Aware Loss MAL. In the design of training objectives, we introduce a dynamic label weighting mechanism, so that predicted confidence adapts to matching strength, thereby optimizing training convergence and enhancing discriminability between targets and background.
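Contribution (i) relies on depthwise separable convolutions. As a back-of-the-envelope illustration (the 256-channel width and kernel sizes are hypothetical, not taken from CF-DETR), the weight count of a standard convolution can be compared with a depthwise convolution followed by a 1×1 pointwise projection:

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(c_in: int, c_out: int, kh: int, kw: int) -> int:
    """Depthwise kh x kw conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    return kh * kw * c_in + c_in * c_out


if __name__ == "__main__":
    c = 256  # hypothetical channel width
    print(standard_conv_params(c, c, 3))    # 589824
    print(dw_separable_params(c, c, 3, 3))  # 67840  (square branch)
    print(dw_separable_params(c, c, 1, 7))  # 67328  (horizontal stripe branch)
```

The pointwise term dominates, so if a multi-branch design shares one 1×1 projection after fusion, each extra depthwise branch adds only kh·kw·C_in weights (1,792 for a 1×7 stripe at 256 channels). Whether DIDC shares the projection in this way is not stated here.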

3. Results

3.1. Backbone Comparison Experiment

To verify the effectiveness of the DIDC module, we compared four backbones under the same experimental settings: the original RT-DETR backbone, a backbone based on the C2f structure, a backbone based on C2f integrated with the PKI module, and a backbone based on DIDC, i.e., C2f_DCMB (denoted as DIDC(C2f) in the table). With lightweight design and embedded deployment in mind, the DIDC module employs three parallel depthwise separable convolution branches (square, horizontal stripe, and vertical stripe) together with a dynamic weight generation mechanism based on global context. This reduces the model parameters from 19.9 M to 13.2 M and FLOPs from 56.9 G to 43.5 G while maintaining an inference speed of 73.8 FPS, indicating that the lower computational cost does not noticeably affect real-time performance and better suits subsequent embedded deployment.
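The three-branch design described above can be sketched in NumPy for a single feature map. This is a hypothetical reading of the text, not the authors' C2f_DCMB code: the kernel sizes (3×3 square, 1×7 and 7×1 stripes), the projection `w_fc`, and the choice of a softmax over per-branch scores computed from globally average-pooled features are all assumptions made for illustration.

```python
from typing import List, Tuple

import numpy as np


def depthwise_conv2d(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """'Same'-padded depthwise cross-correlation (the 'convolution' of DL frameworks).

    x: (C, H, W) feature map; w: (C, kh, kw) with odd kh and kw, one filter per channel.
    """
    _, h, wd = x.shape
    _, kh, kw = w.shape
    xp = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            # Add the contribution of kernel tap (i, j) for every channel at once.
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def didc_block(x: np.ndarray, kernels: List[np.ndarray],
               w_fc: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Hypothetical DIDC-style fusion of square / horizontal / vertical depthwise branches.

    kernels: three (C, kh, kw) filters; w_fc: (3, C) assumed projection from the
    pooled channel descriptor to one score per branch.
    """
    branches = [depthwise_conv2d(x, k) for k in kernels]
    context = x.mean(axis=(1, 2))          # global average pooling -> (C,)
    scores = w_fc @ context                # one score per branch -> (3,)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()               # softmax: input-dependent branch weights
    fused = sum(wt * br for wt, br in zip(weights, branches))
    return fused, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W = 8, 16, 16
    x = rng.standard_normal((C, H, W))
    kernels = [rng.standard_normal((C, 3, 3)),   # square branch
               rng.standard_normal((C, 1, 7)),   # horizontal stripe branch
               rng.standard_normal((C, 7, 1))]   # vertical stripe branch
    y, weights = didc_block(x, kernels, rng.standard_normal((3, C)))
    print(y.shape, weights.round(3))
```

Because the branch weights are computed from the input itself, two images with different global content can emphasize different receptive-field shapes, which is the "dynamic fusion" idea in a minimal form.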

From the perspective of feature extraction capability, the DIDC module uses multi-branch convolutions covering different directions and receptive fields and adaptively fuses the outputs of each branch using global semantic signals, enhancing the model’s adaptability to chicken face regions of varying scales and complex shapes. As shown in Table 3, after introducing the DIDC module, Precision increased from 93.8% to 96.2%, F1-Score from 92.9% to 94.3%, mAP50 from 95.4% to 96.3%, and mAP50:95 from 60.2% to 61.4%, surpassing all other compared backbones on multiple key metrics and supporting the design of the DIDC module for multi-scale spatial feature extraction and dynamic fusion.

3.2. Classification Loss Comparison Experiment

To meet the dual requirements of high accuracy and fast convergence in chicken face detection, this study compared four classification loss functions, VariFocal Loss (VFL) [30], Slide Loss [31], EMASlide Loss [32], and MAL [26], to determine the best choice.
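Neither VFL nor MAL is written out in this section, so the sketch below contrasts only their matched-query (positive) branches under stated assumptions: VFL as defined in VarifocalNet (binary cross-entropy against the IoU target q, weighted by q), and MAL in the form we understand from the DEIM work that introduced the name (unweighted binary cross-entropy against the sharpened target q**gamma; unmatched queries use a focal-style p**gamma weight, omitted here). The value gamma = 1.5 is illustrative. Gradients are taken with respect to the classification logit by central finite differences.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def bce(p: float, t: float) -> float:
    """Binary cross-entropy of probability p against a soft target t in [0, 1]."""
    eps = 1e-12  # guards log(0)
    return -(t * math.log(p + eps) + (1.0 - t) * math.log(1.0 - p + eps))


def vfl_positive(z: float, q: float) -> float:
    """Varifocal Loss for a matched query: BCE against target q, weighted by q."""
    return q * bce(sigmoid(z), q)


def mal_positive(z: float, q: float, gamma: float = 1.5) -> float:
    """Assumed MAL for a matched query: unweighted BCE against target q**gamma."""
    return bce(sigmoid(z), q ** gamma)


def dloss_dlogit(loss_fn, z: float, h: float = 1e-6) -> float:
    """Central finite-difference gradient of a loss with respect to the logit z."""
    return (loss_fn(z + h) - loss_fn(z - h)) / (2.0 * h)


if __name__ == "__main__":
    z = math.log(9.0)  # logit with sigmoid(z) = 0.9: a confident prediction
    for q in (0.9, 0.5, 0.2):  # IoU of the matched box: good, fair, poor
        g_vfl = dloss_dlogit(lambda v: vfl_positive(v, q), z)
        g_mal = dloss_dlogit(lambda v: mal_positive(v, q), z)
        print(f"q={q}: VFL grad {g_vfl:.3f}, MAL grad {g_mal:.3f}")
```

Analytically the VFL gradient is q(p − q) and the assumed MAL gradient is p − q**gamma. For a poorly localized match (q = 0.2) predicted at p = 0.9, that is 0.14 versus about 0.81, so the overconfident low-quality match is pushed down several times harder under MAL, which is one way to read "confidence adapts to matching strength".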

As shown in Table 4, MAL outperformed the other three classification loss functions on all key detection metrics. Specifically, MAL achieved 95.2% Precision, 93.1% Recall, and 94.1% F1-Score, exceeding VFL’s 93.8%, 92.1%, and 92.9%. MAL also reached 96.3% mAP50 and 61.1% mAP50:95, an improvement of 0.9 percentage points each over VFL, and outperformed Slide Loss (95.6%/60.7%) and EMASlide Loss (96.0%/59.3%). The mAP50 curves for each loss function in Figure 7b show that the MAL curve remains the highest and declines the least during later iterations, further indicating its advantages in accuracy and robustness.
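The F1-Score values above are the harmonic mean of Precision and Recall; recomputing them from the reported VFL and MAL values reproduces the table entries. Because the reported P and R are themselves rounded, recomputing F1 for other rows may differ from the tables by 0.1 percentage point.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision / Recall pairs reported in Table 4.
    for name, p, r in [("VFL", 93.8, 92.1), ("MAL", 95.2, 93.1)]:
        print(name, round(f1_score(p, r), 1))  # VFL 92.9, MAL 94.1
```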

In terms of training convergence speed, the loss curves in Figure 7a indicate that MAL reaches a convergence plateau at around 31 epochs, whereas VFL and Slide Loss require 37 and 39 epochs, respectively. Although EMASlide Loss also converges at approximately 31 epochs, its final mAP50 and mAP50:95 still lag behind those of MAL. Considering MAL’s leading Precision, Recall, F1, and mAP values in Table 4, together with its fastest convergence (shared with EMASlide Loss) and lowest training oscillation in Figure 7a, MAL both shortens the training cycle and improves detection performance. Based on this comparison, MAL was selected as the classification loss function for the CF-DETR model.
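The plateau epochs above are read from Figure 7a, but the criterion is not stated. One reproducible rule, offered only as a hypothetical example, is to report the first epoch from which every later loss value stays within a relative tolerance `tol` of the final loss:

```python
from typing import Sequence


def plateau_epoch(losses: Sequence[float], tol: float = 0.02) -> int:
    """First (1-based) epoch after which all losses stay within `tol` (relative)
    of the final loss. Hypothetical convergence criterion for illustration."""
    final = losses[-1]
    epoch = len(losses)
    # Walk backwards while the curve remains inside the tolerance band.
    for i in range(len(losses) - 1, -1, -1):
        if abs(losses[i] - final) > tol * abs(final):
            break
        epoch = i + 1
    return epoch


if __name__ == "__main__":
    smooth = [1.0, 0.6, 0.45, 0.41, 0.40, 0.40, 0.40]
    oscillating = [1.0, 0.40, 0.50, 0.40]
    print(plateau_epoch(smooth), plateau_epoch(oscillating))  # 5 4
```

Under such a rule, a late oscillation moves the reported plateau epoch later, which is how training oscillation and convergence speed become linked in a comparison like the one above.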

3.3. Ablation Experiment

To verify the effectiveness of the systematic structural optimizations proposed in this study within the RT-DETR framework, which focus on three key aspects (feature extraction with DIDC, information encoding with PEMD, and loss design with MAL), we conducted ablation experiments based on RT-DETR under the same experimental settings. The experiments considered not only common detection accuracy metrics (mAP50, mAP50:95, Precision, Recall, F1) but also model parameters, FLOPs, and inference speed, enabling a comprehensive evaluation of both detection performance and embedded deployment feasibility.

As shown in Table 5, introducing the DIDC module alone reduces the model parameters from 19.9 M to 13.2 M (a 33.7% decrease) and FLOPs from 56.9 G to 43.5 G (a 23.5% decrease) while maintaining an inference speed of 73.8 FPS, which suits embedded deployment. In addition, its multi-scale convolutional branches and global context-based dynamic weight fusion enable finer extraction of local textures and edge information of chicken faces, increasing Precision to 96.2% (+2.4 percentage points) and mAP50:95 to 61.4% (+1.2 percentage points), while Recall changes by only 0.5 percentage points (to 92.6%), supporting its efficient feature extraction capability. Although the PEMD encoding module increases the model parameters to 20.0 M and FLOPs to 57.2 G, its components work together: PolarAttention reconstructs global dependencies in the polar coordinate domain with linear complexity, the Mona branch adaptively fuses multi-scale pooling and convolutional branches, and EDFFN with DynamicTanh compensates high-frequency details and stabilizes feature distributions. As a result, Recall increases to 93.6% (+1.5 percentage points) and mAP50:95 rises to 61.2% (+1.0 percentage point), indicating its advantage in global–local collaborative perception for small and occluded chicken face targets.
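The linear-complexity claim for PolarAttention rests on reordering the attention product so that no N×N matrix is formed. The NumPy sketch below shows that reordering for generic kernelized linear attention with the feature map φ(x) = elu(x) + 1. This is a swapped-in textbook technique for illustration, not the polar-coordinate formulation used in PEMD, which this section does not specify.

```python
import numpy as np


def phi(x: np.ndarray) -> np.ndarray:
    """Positive feature map elu(x) + 1 (clipping avoids overflow in the unused branch)."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def quadratic_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Kernelized attention computed naively: builds an N x N matrix, O(N^2 d)."""
    s = phi(q) @ phi(k).T                    # (N, N) similarities
    return (s @ v) / s.sum(axis=1, keepdims=True)


def linear_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Same output via associativity: phi(Q) (phi(K)^T V), O(N d d_v)."""
    kv = phi(k).T @ v                        # (d, d_v): size independent of N
    z = phi(q) @ phi(k).sum(axis=0)          # (N,) row normalizers
    return (phi(q) @ kv) / z[:, None]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_tokens = 400  # e.g. a flattened 20 x 20 feature map
    q = rng.standard_normal((n_tokens, 16))
    k = rng.standard_normal((n_tokens, 16))
    v = rng.standard_normal((n_tokens, 16))
    print(np.allclose(quadratic_attention(q, k, v), linear_attention(q, k, v)))
```

Both functions return the same tokens; only the order of matrix products differs, which is what makes the cost grow linearly with the number of tokens.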

When the DIDC and PEMD modules are applied simultaneously, the model further balances accuracy and speed while maintaining efficient computation, achieving 94.9% Precision and 93.1% Recall, with mAP50 increasing to 96.7% and mAP50:95 rising to 62.0%, while parameters remain at 13.3 M, FLOPs at 43.8 G, and the frame rate improves to 80.6 FPS. On this basis, by integrating the Matchability Aware Loss (MAL) into CF-DETR, the model’s learning signal becomes more focused on low-quality matched samples, resulting in a more balanced performance improvement. The final CF-DETR achieves 95.5% Precision, 94.6% Recall, and 95.1% F1-Score, representing a 1.1 percentage point improvement in F1 compared to the combination model without MAL; mAP50 reaches 96.9%, mAP50:95 further increases to 62.8%, while parameters and FLOPs remain at 13.3 M and 43.8 G, and the frame rate stabilizes at 81.4 FPS.

The training curves in Figure 8 illustrate the combined advantages of the DIDC–PEMD–MAL configuration in convergence speed, training stability, and final plateau height. First, the DIDC module enhances the representation of fine-grained and direction-sensitive features, improving the quality of candidate boxes in the early training stages and accelerating the rise of mAP. Second, PEMD, through polar-coordinate attention, distribution stabilization, and high-frequency detail compensation, mitigates performance decline in the middle and later stages, resulting in a smoother convergence curve and a higher plateau. Finally, MAL strengthens the gradient response to low-quality matched samples through its match-driven loss design, suppressing training oscillations and improving the consistency between localization and confidence. The steeper rise, earlier stabilization, and higher plateau shown in the figure are therefore consistent with the three modules complementing each other during training, indicating that DIDC–PEMD–MAL outperforms the other compared configurations in convergence and generalization capability.

This combined configuration outperforms every single- and dual-module combination in both detection accuracy and deployment efficiency, supporting the synergistic optimization of DIDC, PEMD, and MAL in structural design, information encoding, and loss modeling. Overall, the modules complement each other at different task levels, providing robust support for high-performance, low-resource, real-time embedded chicken face detection.

3.4. Visualization Analysis

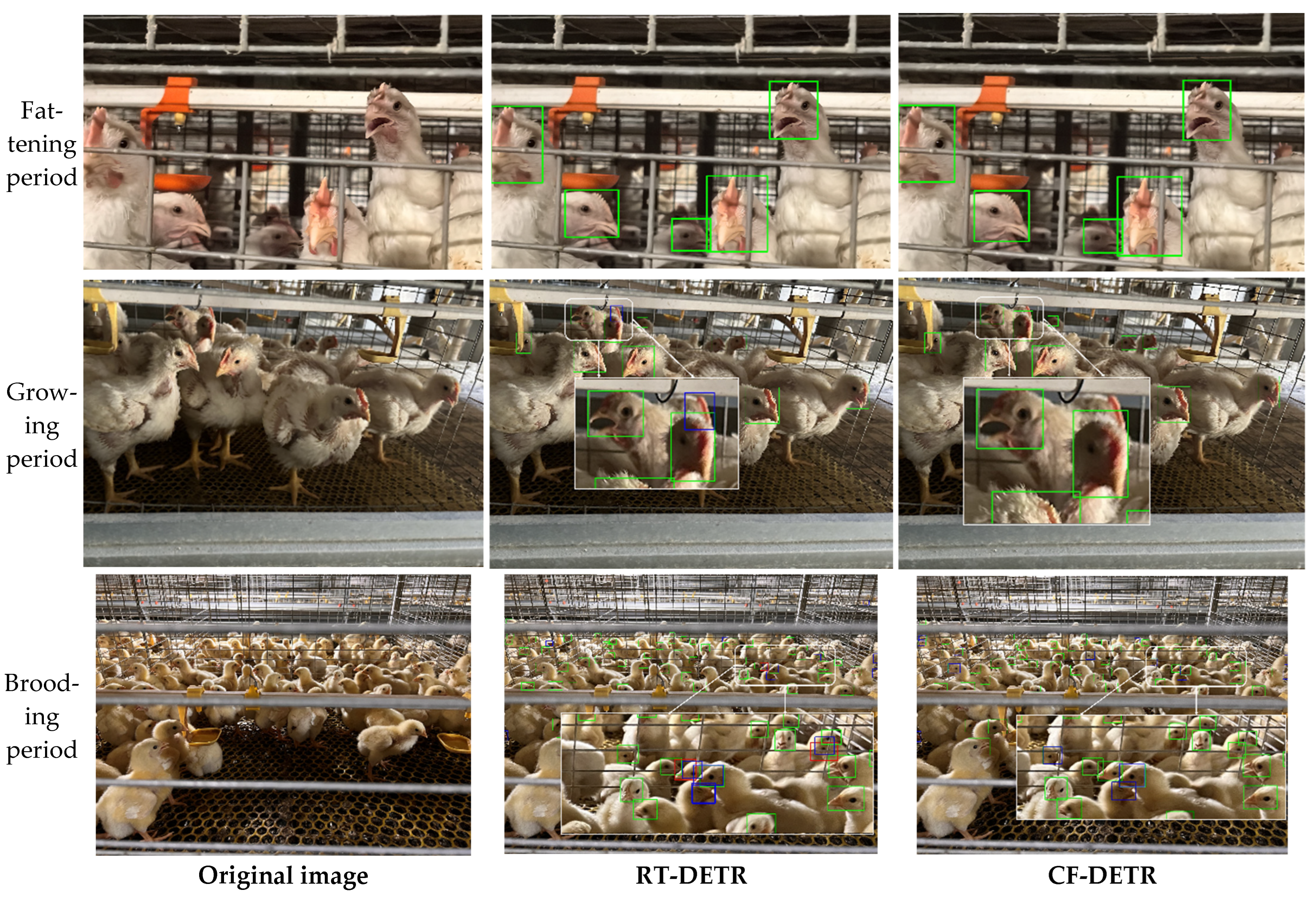

To intuitively evaluate the detection robustness and interpretability of the baseline RT-DETR and the proposed CF-DETR in complex farming environments across different growth stages, we selected representative samples from the fattening, growing, and brooding periods for visual comparison. As shown in Figure 9, during the fattening period the targets are relatively large and their features are distinct, allowing both models to detect stably with almost no false positives or false negatives. In the growing period, as target size decreases and neighborhood interference increases, the baseline model produces false positives due to the similarity of adjacent head features, whereas CF-DETR remains stable, with no missed detections and markedly fewer false positives. In the most challenging brooding period, targets are smaller, stocking density is higher, and occlusion is severe, causing the baseline model to exhibit both missed detections and false positives. The missed detections result from feature weakening and lost candidate boxes, while the false positives mainly arise from background textures and interference from neighboring birds. In contrast, CF-DETR has zero missed detections during this stage and fewer false positives than the baseline RT-DETR, illustrating its advantages in feature extraction, occlusion handling, and interference suppression.
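Missed detections and false positives such as those discussed for Figure 9 are usually counted by matching predictions to ground truth at an IoU threshold. A minimal greedy sketch follows, assuming an IoU threshold of 0.5 and predictions processed in descending score order; the paper does not state its exact counting protocol.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_errors(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                 iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """Return (true positives, false positives, missed detections)."""
    matched = [False] * len(gts)
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda d: d[1], reverse=True):
        # Match to the best still-unmatched ground truth at or above the threshold.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # duplicate, background texture, or neighbor confusion
    return tp, fp, matched.count(False)


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
    preds = [((0, 0, 10, 10), 0.9), ((1, 0, 11, 10), 0.8),
             ((20, 0, 30, 10), 0.7), ((60, 0, 70, 10), 0.6)]
    print(count_errors(preds, gts))  # (2, 2, 1)
```

In the example, the second box is a duplicate on an already matched head and the fourth lies on background, so both count as false positives, while the third head is missed.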

To further reveal the internal mechanism behind the performance differences in the brooding period, we visualized heatmaps for brooding-period samples. As shown in Figure 10, CF-DETR exhibits clear and stable activation responses for more targets than the baseline model, which lacks identifiable activation on several true targets. Moreover, the unactivated targets in the heatmaps largely coincide with the detector’s missed detections, indicating that the absence of internal responses is an important cause of missed detections. The heatmaps therefore provide explanatory evidence that CF-DETR has stronger feature responses and higher recall in small-scale, high-density, and heavily occluded brooding-period scenarios, offering an intuitive visual confirmation of the performance improvement. It should be emphasized that heatmaps are an interpretability tool rather than direct detection outputs; this section aims to provide an intuitive understanding of model behavior and supplementary evidence.
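The Discussion identifies these heatmaps as Grad-CAM. Its core computation is sketched below in NumPy: channel weights are the spatially averaged gradients of a target score with respect to a feature map, and the heatmap is the ReLU of the weighted channel sum. Which layer and which detection score the authors used is not stated, so `activations` and `grads` are hypothetical inputs that a deep-learning framework would normally supply through hooks.

```python
import numpy as np


def grad_cam(activations: np.ndarray, grads: np.ndarray) -> np.ndarray:
    """Grad-CAM map for one layer.

    activations, grads: (C, H, W) feature map A and d(score)/dA for that map.
    Returns an (H, W) map scaled to [0, 1].
    """
    alpha = grads.mean(axis=(1, 2))                       # per-channel importance
    cam = (alpha[:, None, None] * activations).sum(axis=0)
    cam = np.maximum(cam, 0.0)                            # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam                # normalize for display


if __name__ == "__main__":
    A = np.zeros((2, 4, 4))
    A[0, 1, 1] = 2.0   # channel that supports the score
    A[1, 2, 2] = 3.0   # channel that argues against it
    G = np.stack([np.ones((4, 4)), -np.ones((4, 4))])
    print(grad_cam(A, G))
```

A target whose location ends up at zero in such a map receives no positive evidence for the chosen score, which corresponds to the "unactivated targets" described above.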

3.5. Comparison with Other Models

To comprehensively and objectively evaluate the practical performance of the proposed CF-DETR in chicken face detection, we conducted a horizontal comparison under the same dataset and evaluation criteria with representative single-stage detectors (YOLOv10m, YOLOv11m) [33,34], an anchor-based single-stage detector (TOOD) [35], a two-stage detector (Faster R-CNN) [36], and Transformer-based detectors (DETR, RT-DETR, RT-DETR-r34). The comparison metrics include detection accuracy (Precision, Recall, mAP@0.5, mAP@0.5:0.95), real-time performance (FPS), and model complexity (Parameters, FLOPs), aiming to reveal the strengths and limitations of each method in terms of accuracy, speed, and resource efficiency. The results are shown in Table 6.
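For reference, mAP@0.5 averages per-class average precision (AP) at an IoU threshold of 0.5, and mAP@0.5:0.95 further averages AP over the ten thresholds 0.50, 0.55, ..., 0.95. The sketch below computes all-point interpolated AP for one class from score-ranked match flags. Evaluation toolkits differ in interpolation (COCO, for example, samples 101 recall points), so this illustrates the metric rather than necessarily the protocol behind Table 6.

```python
from typing import List, Sequence


def average_precision(is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    is_tp: match flags of detections sorted by descending confidence, e.g. from
    IoU matching at a fixed threshold; num_gt: number of ground-truth objects (> 0).
    """
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing when read from right to left (interpolation).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision over each recall increment.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


# IoU thresholds averaged by mAP@0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

if __name__ == "__main__":
    print(average_precision([True, False, True], num_gt=2))  # 0.8333...
    print(IOU_THRESHOLDS)
```

mAP@0.5:0.95 for one class is then the mean of `average_precision` over `IOU_THRESHOLDS`, with the match flags recomputed at each threshold; stricter thresholds explain why mAP50:95 values sit well below mAP50.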

The models exhibit clear performance differences. YOLOv11m slightly leads in Precision (P = 95.8%), but its inference speed is only 31.6 FPS: although post-processing suppresses false positives, the heavy computation and post-processing overhead limit its real-time performance for this task. Similarly, YOLOv10m achieves a near-leading mAP50 (96.5%) but an FPS of only 37.7, indicating that model complexity still limits single-stage networks on this dataset, even though YOLOv10 is designed to operate without NMS. The two-stage Faster R-CNN and the single-stage TOOD show moderate performance on some accuracy metrics but at the cost of substantially more parameters and FLOPs (e.g., Faster R-CNN has ≈41.3 M parameters and ≈208 G FLOPs), resulting in low FPS (below 34), which is unfavorable for embedded and real-time applications. The original DETR performs poorly in this high-density scenario (mAP50 = 88.6%), indicating that the standard DETR architecture struggles with tiny and occluded chicken faces without targeted local and multi-scale enhancement. RT-DETR provides a good speed–accuracy trade-off (mAP50 = 95.4%, FPS = 74.1), but compared with the proposed CF-DETR it still lags in Recall (92.1% vs. 94.6%) and mAP50 (95.4% vs. 96.9%). Overall, CF-DETR achieves a better balance between accuracy and efficiency, attaining the highest mAP50 (96.9%) and mAP50:95 (62.8%) with the lowest parameter count (13.3 M) and computational cost (43.8 G), and leading all compared models with an inference speed of 81.4 FPS. This demonstrates the practical benefits of the synergistic optimization of the backbone (DIDC), encoder (PEMD), and loss design (MAL) in this study.

In summary, although some YOLO-series models show advantages in individual metrics (such as Precision or mAP50), their real-time performance or computational resource requirements limit their feasibility for practical deployment. The heavier two-stage and single-stage detectors, as well as the original DETR, are also constrained in this task by model complexity or a lack of adaptation mechanisms. In contrast, CF-DETR, through its lightweight structural design and targeted encoding and loss strategies, delivers the most balanced performance in chicken face detection under high-density and heavily occluded real-world scenarios.

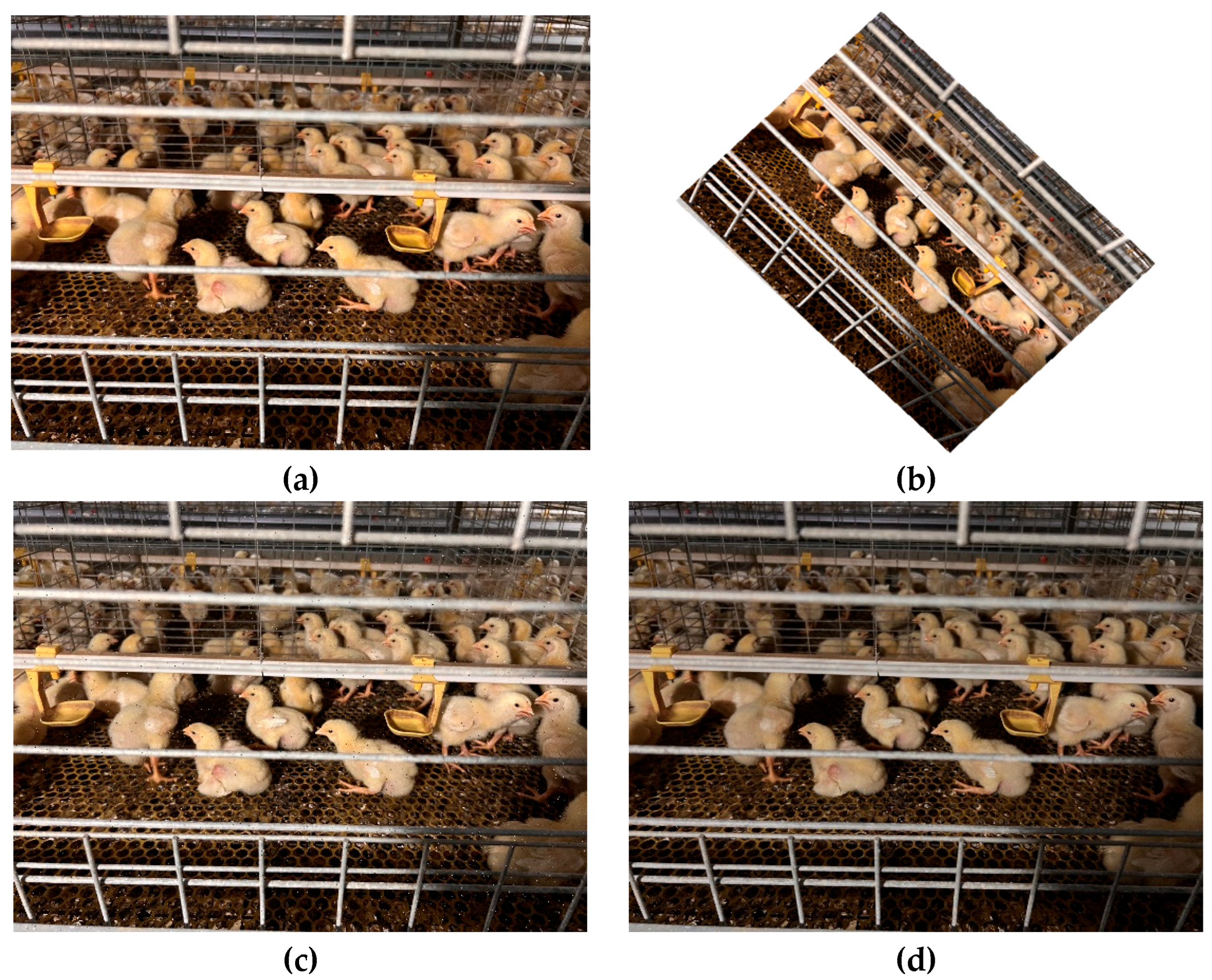

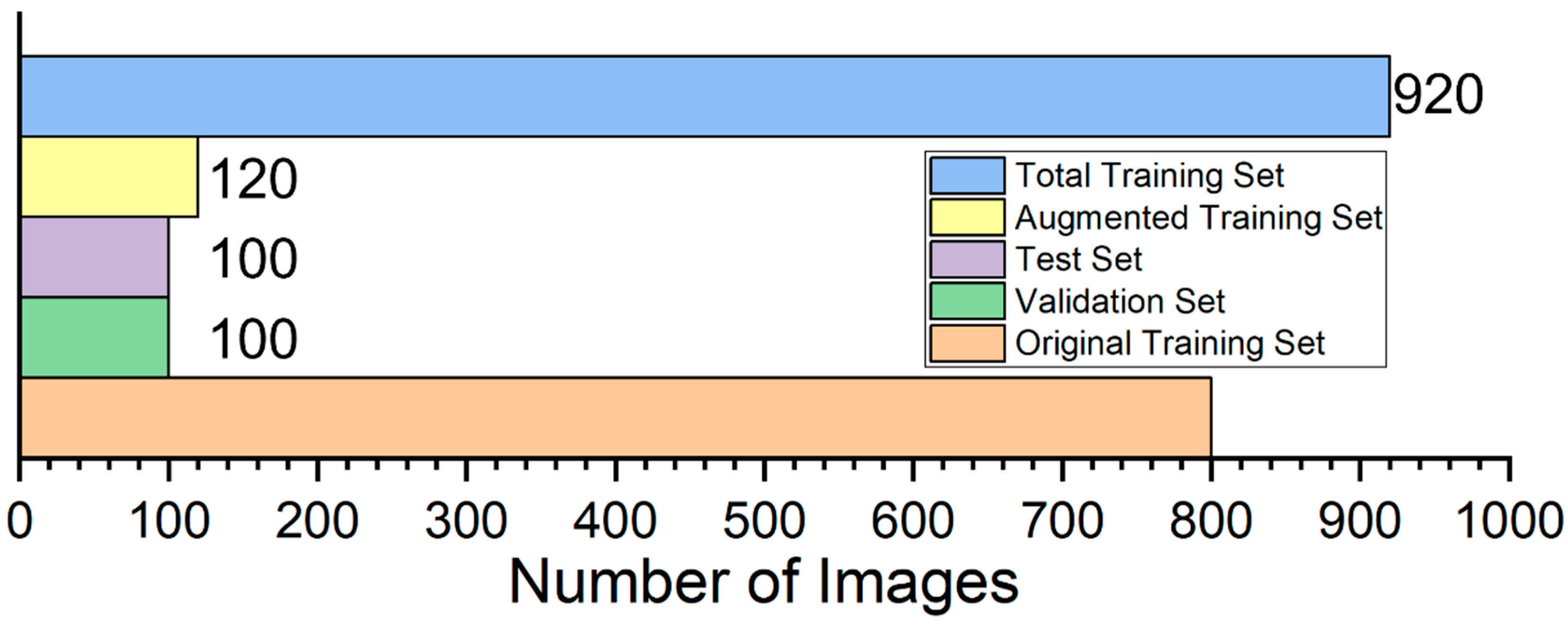

4. Discussion

This study constructed a chicken face detection dataset covering the entire growth cycle of chickens, from the chick stage to the fattening stage, with significant variations in facial size and texture across stages (see Figure 1). During the early chick stage, the facial region is extremely small, features are difficult to discern, and high stocking density results in severe occlusion. In the growing stage, the facial area increases but interference from neighboring birds also rises. In the fattening stage, the facial region is largest and features are most stable. Such high-density, fine-grained detection scenarios pose significant challenges to detectors [10,11]. To address this, we selected RT-DETR as the starting point. RT-DETR offers end-to-end detection without NMS, while balancing accuracy and speed through a convolutional backbone and an efficient hybrid encoder. However, the native RT-DETR structure has shortcomings in chicken face scenarios. The ResNet backbone has limited capability to express subtle texture differences [37]. The AIFI module provides sufficient global modeling ability but insufficient local detail recovery [25]; its attention, which relies on normalization and Softmax, introduces parallelism and efficiency bottlenecks [23,24]; and it lacks parallel structural interaction and multi-receptive-field collaboration [22]. In addition, the VFL classification loss limits the effectiveness of matching optimization and the speed of training convergence [26], and previous work has pointed out that the DETR framework struggles to capture fine-grained information [38]. Therefore, this study proposes a systematic improvement scheme addressing three major bottlenecks of RT-DETR: feature extraction, information encoding, and loss design.

First, at the backbone level, we designed the DIDC module, which employs multi-branch depthwise separable convolutions (including stripe convolutions in different orientations) combined with global semantic dynamic fusion, enhancing the perception of chicken faces of varying scales and complex shapes. As shown in Table 3, after introducing DIDC, both Precision and mAP improved (Precision from 93.8% to 96.2%, mAP50 from 95.4% to 96.3%), while the model parameters decreased by over one-third, indicating that the multi-branch convolutions preserve fine-grained texture features while improving efficiency. Second, at the encoder level, we proposed the PEMD module, which incorporates polar-coordinate attention and a high-frequency detail compensation mechanism. Polar-coordinate attention enables global context capture with linear complexity, while the Mona multi-scale fusion branch together with EDFFN and DynamicTanh compensates for high-frequency information. In the ablation experiments shown in Table 5, introducing PEMD alone increased Recall by 1.5 percentage points (from 92.1% to 93.6%) and raised mAP50:95 from 60.2% to 61.2%. These results indicate that PEMD enhances the model’s global–local collaborative perception under occlusion and background interference, thereby improving detection robustness and overall performance. Furthermore, the Matchability Aware Loss (MAL) makes the training signal more sensitive to hard samples through dynamic label weighting. The comparative experiments in Table 4 show that MAL outperforms traditional losses such as VariFocal Loss in both Precision and mAP (Precision from 93.8% to 95.2%, mAP50 from 95.4% to 96.3%), and, as shown in Figure 7, it converges faster with minimal training oscillation. Specifically, MAL converges in approximately 31 epochs, whereas VariFocal Loss requires 37 epochs, and the mAP50 curves in Figure 7b show that MAL consistently maintains the highest level, further supporting its effectiveness in training stability and performance improvement.
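DynamicTanh (DyT), mentioned above as part of PEMD, replaces a normalization layer with an element-wise squashing function, so it needs no mean or variance statistics across tokens or channels; that is the property relevant to the parallel-efficiency argument. A minimal NumPy sketch, assuming the usual form DyT(x) = γ ⊙ tanh(αx) + β with a learnable scalar α and per-channel γ and β (the initial values below are illustrative, and how PEMD wires DyT into EDFFN is not specified here):

```python
import numpy as np


class DynamicTanh:
    """Element-wise normalization substitute: gamma * tanh(alpha * x) + beta."""

    def __init__(self, channels: int, alpha_init: float = 0.5):
        self.alpha = alpha_init             # learnable scalar in practice
        self.gamma = np.ones(channels)      # learnable per-channel scale
        self.beta = np.zeros(channels)      # learnable per-channel shift

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (..., channels). Each element is transformed independently,
        # so no reduction over tokens or channels is required.
        return self.gamma * np.tanh(self.alpha * x) + self.beta


if __name__ == "__main__":
    dyt = DynamicTanh(channels=4)
    x = np.array([[0.0, 1.0, -1.0, 100.0]])  # one token with an outlier activation
    print(dyt(x))
```

The tanh bounds extreme activations (the outlier maps close to 1), which plays the stabilizing role that LayerNorm usually plays, while remaining a purely element-wise operation.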

Based on the ablation results (Table 5) and the convergence curves (Figure 7 and Figure 8), the synergistic effects of the three mechanisms are evident. DIDC accelerated the early-stage rise of mAP during training, PEMD mitigated the late-stage performance decline, and MAL smoothed oscillations during training, resulting in steeper convergence curves and higher performance plateaus. In the visualization analysis of representative samples, CF-DETR exhibited more robust detection than the baseline RT-DETR. For growing-stage samples, RT-DETR produced false positives due to similar neighboring head features, whereas CF-DETR showed almost no missed or false detections. In the most challenging chick-stage samples, RT-DETR exhibited obvious missed and false detections (missed detections resulting from weakened features and false detections caused by background interference), while CF-DETR maintained zero missed detections and markedly reduced false detections. Grad-CAM heatmaps (Figure 10) further confirmed this: CF-DETR generated clear and stable activation responses for more true chicken face targets, whereas RT-DETR lacked identifiable responses for some targets, and these weak-response regions largely corresponded to missed detections. This indicates that the modules in CF-DETR enhance the model’s feature response for small-scale and occluded targets, providing visual evidence for the performance improvement. In comparison with other mainstream models (Table 6), CF-DETR achieved the highest mAP and the fastest inference speed while maintaining the lowest parameter count and computational load, demonstrating the practical benefits of the design optimizations.

Although this study demonstrates significant advantages in chicken face detection across the full growth cycle, two limitations should be noted. First, the data source is relatively homogeneous, covering only WOD168 white-feather broilers, which limits the demonstrated generalization of the model to other breeds and rearing conditions. Second, in chick-stage scenarios, although overall performance is markedly better than that of the baseline model, CF-DETR still produces some false positives due to extremely small target sizes and frequent occlusion, as illustrated in Figure 9. To address these issues, future work will focus on expanding cross-breed and cross-scenario data validation and on incorporating targeted small-object enhancement and temporal information modeling into the model design to further improve robustness and generalizability. Moreover, considering potential on-farm deployment, future research will investigate hardware adaptability, energy efficiency, and seamless integration with existing farm management systems to ensure practical applicability in real-world poultry farming environments. Finally, because the dataset used in this study is part of an ongoing collaboration with relevant enterprises and has not been publicly released, we plan to make it available upon project completion to facilitate subsequent research.

In summary, the experimental analysis demonstrates that the proposed multi-branch convolutional feature extraction module (DIDC), closed-loop encoder (PEMD), and Matchability Aware Loss (MAL) effectively address the adaptation bottlenecks of RT-DETR in chicken face detection, significantly enhancing performance in high-density and heavily occluded scenarios. Each mechanism has been validated through ablation and visualization experiments: the DIDC module enriches spatial multi-scale feature representation, the PEMD module compensates high-frequency details and strengthens global–local fusion, and the MAL loss focuses the learning signal on low-quality matched samples, improving recall and the consistency between localization quality and confidence. The proposed components are complementary, in line with recent Transformer improvement strategies that emphasize stronger feature representation and more complete multi-scale information aggregation to enhance a model’s adaptability to occlusion, background interference, and scale variation [37,39]. CF-DETR therefore demonstrates clear advantages in accurately and efficiently detecting broiler chicken faces, providing a solid foundation for real-time intelligent poultry monitoring.