1. Introduction

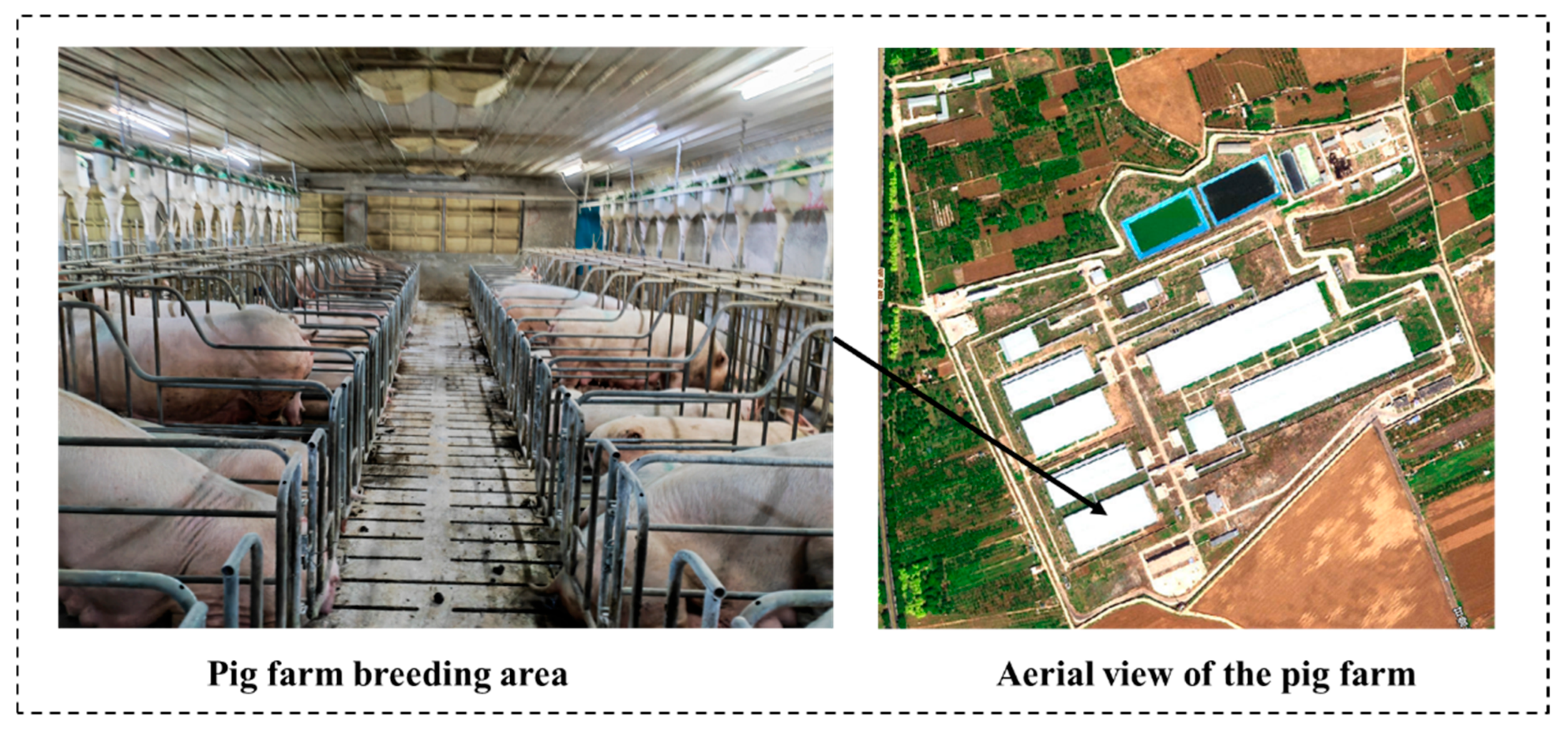

With the intensification of the livestock industry, improving sows’ reproductive efficiency has become key to ensuring a stable meat supply [1,2]. Although estrus synchronization techniques are widely used for production management in large-scale pig farms, they can induce group stress responses that may lead to pregnancy failure and compromise animal welfare [3,4]. Traditional estrus detection relies primarily on human observation of sows’ standing reflex, vulvar swelling, and mucosal coloration; however, this method has inherent limitations [5,6]. Inspection is labor- and time-intensive, making round-the-clock monitoring difficult [7], and the results are highly subjective because expertise varies between individuals [8]. Furthermore, existing automated devices often rely on contact sensors, which involve complex installation and high maintenance costs [9], restricting the widespread adoption of the technology.

Accurate detection of the sow estrus cycle directly affects reproductive efficiency. Studies indicate that the sow estrus cycle averages 21 days, whereas the effective estrus period lasts only 40–60 h [10], with ovulation typically occurring during the final two-thirds of the estrus period [11]. To achieve optimal conception rates, artificial insemination must be timed precisely within the 0–24 h before ovulation [9], which places stringent requirements on the timeliness of detection. Failure to recognize estrus promptly may cause the number of piglets produced per sow per year (PSY) to fall below the economic threshold of 20–30 piglets [12]. Under such conditions, the risk of culling sows for substandard reproductive performance increases significantly, accompanied by reduced farrowing rates and prolonged non-productive days (NPDs), which greatly raise the operating costs of pig farms [13]. Therefore, there is an urgent need for an objective, real-time, non-contact estrus detection device to support modern sow estrus monitoring.

Advances in computer vision have opened new approaches to estrus detection. Previous studies have used deep learning to analyze behavioral features, such as erect ears during estrus [14] or changes in activity level [15,16], but these features are often affected by individual variation, making them unstable across breeds and growth stages. In contrast, vulvar changes, as a direct physiological indicator of estrus, have a stronger biological basis and greater potential for quantitative analysis [17]. Existing vision-based methods have focused mainly on texture, size changes, or temperature distribution. For example, Seo et al. used vulvar texture analysis to determine estrus status [18]; however, owing to complex environments and contamination in practical pig farms, the detection accuracy was only about 70%. Zhang et al. employed YOLOv4 to measure vulvar dimensions and convert them into millimeter-level measurements, achieving an accuracy of 97% for size detection [19]. Nevertheless, because reliable vulvar size measurements are difficult to obtain in practice, this metric is hard to adopt as a stable indicator for estrus detection. Xu et al. combined LiDAR-acquired 3D information with behavioral data to achieve a detection accuracy of 95.2 ± 4.8% [20], but high hardware costs and complex maintenance limited the method’s practical application. Similarly, Xue et al. used infrared thermography to monitor temperature changes [21], but contamination on the sow’s body degraded image quality and detection stability, and the high cost was prohibitive for many farms. Although computer vision offers new ideas for sow estrus detection, existing methods still face several challenges in practice [22]. Moreover, current mainstream end-to-end deep learning methods, although capable of directly outputting estrus judgments, operate as “black boxes” whose decision processes are difficult to interpret, and differences among pig groups, environments, and lighting conditions can easily reduce their generalization and detection stability. These challenges stem primarily from the lack of explicit modeling of key morphological cues and from poor robustness in region localization. Most existing methods rely solely on RGB appearance features without incorporating multi-scale spatial semantics or attention-based refinement, which leads to inaccurate localization of the vulvar region under complex lighting and occlusion.

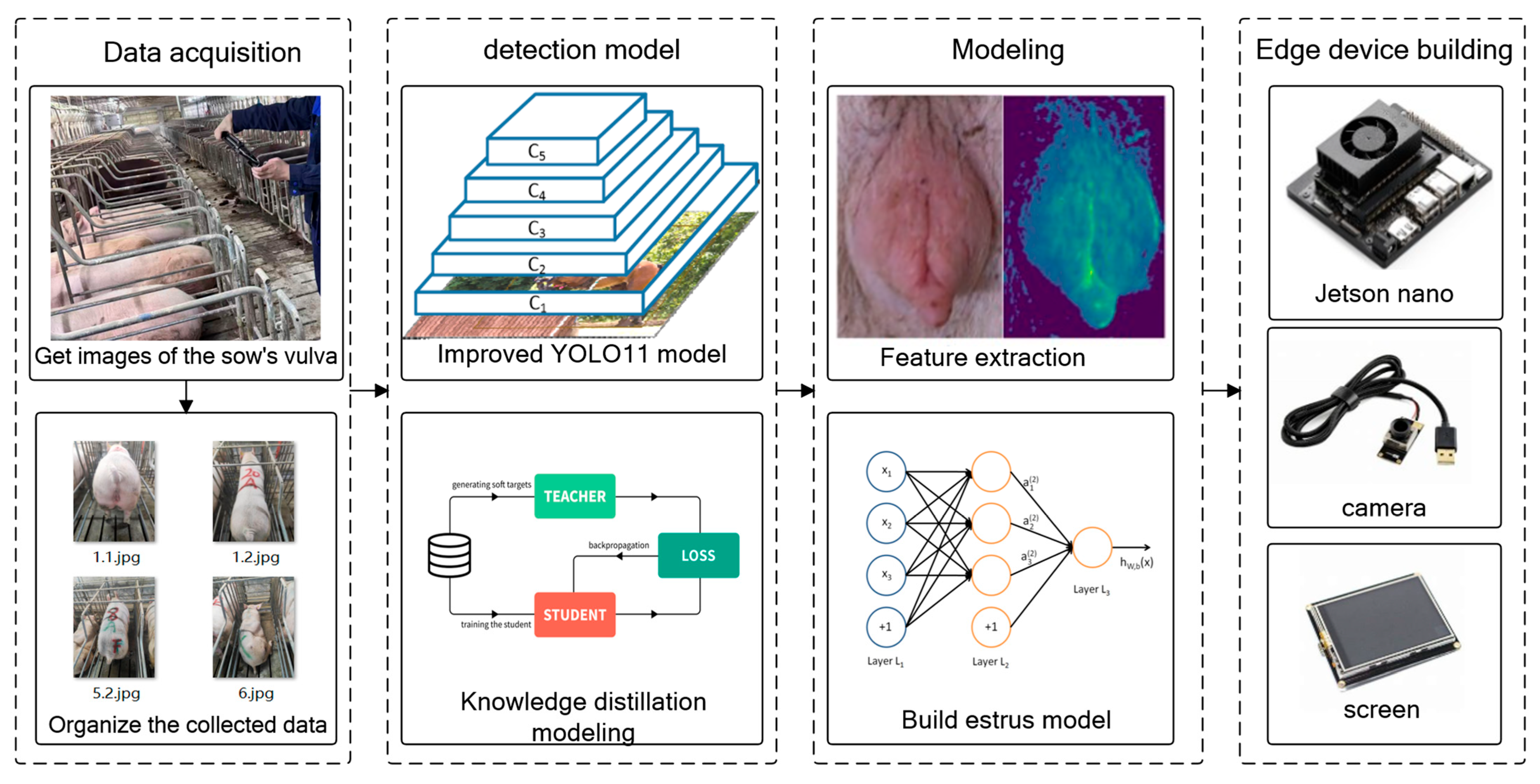

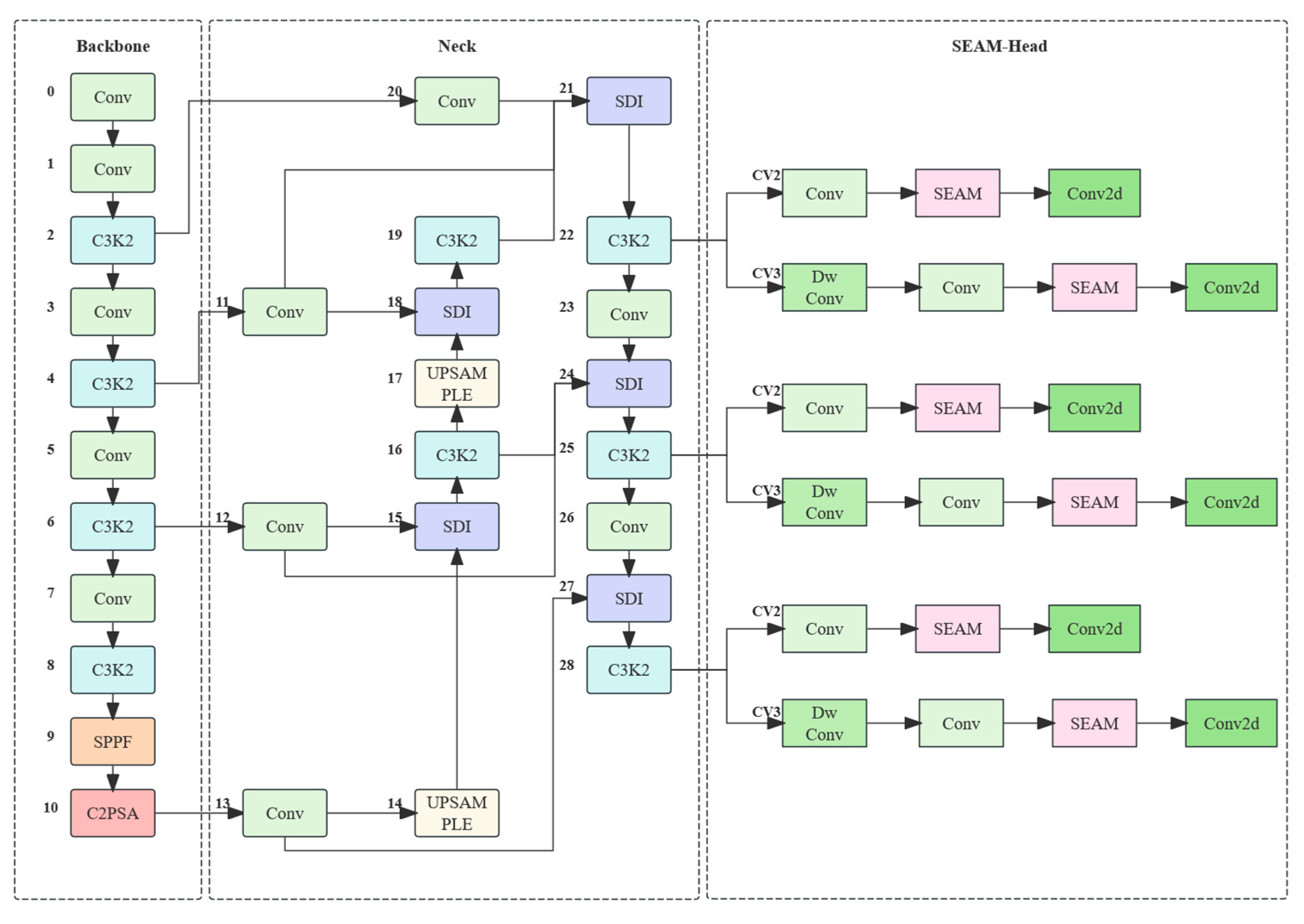

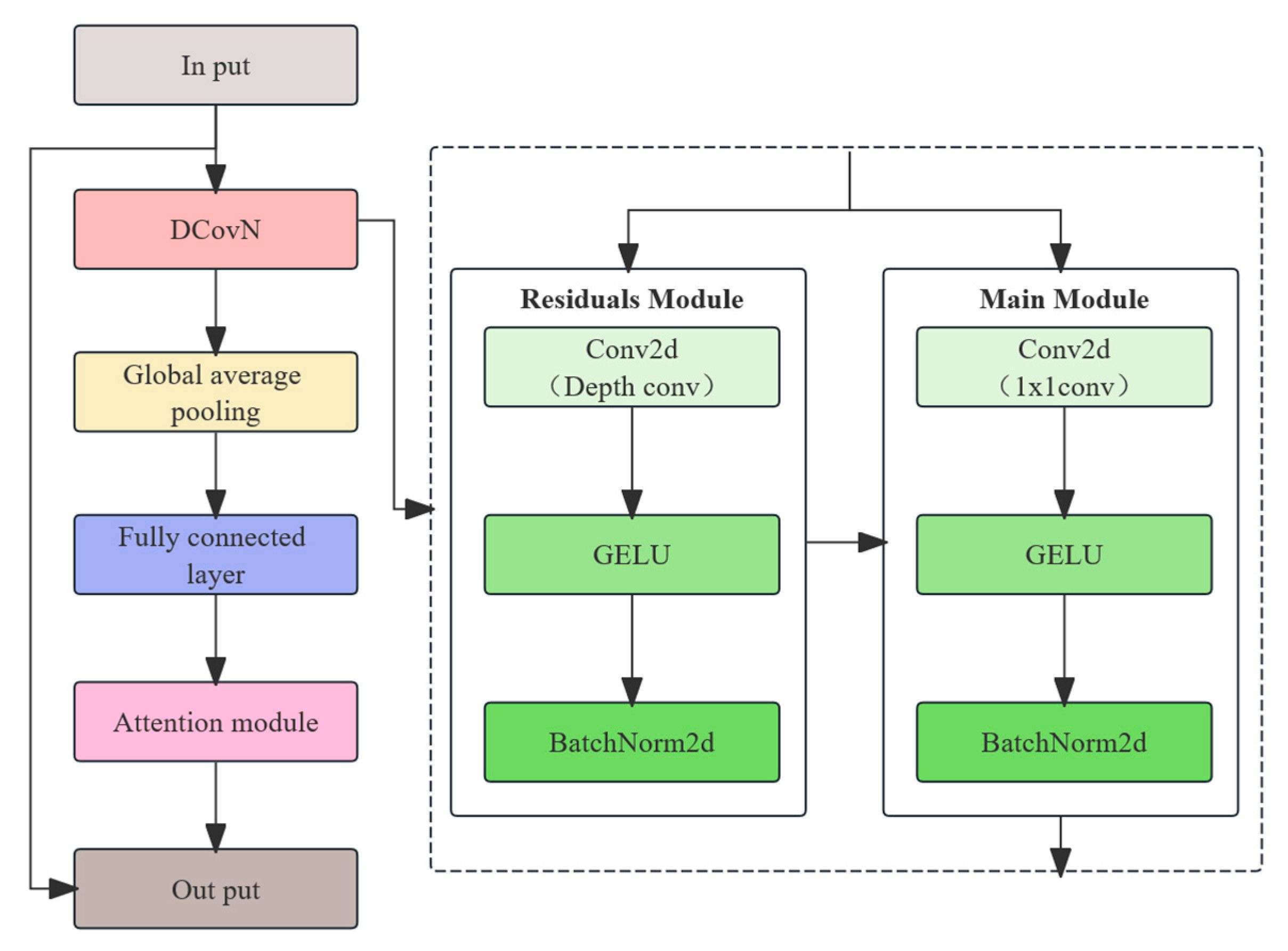

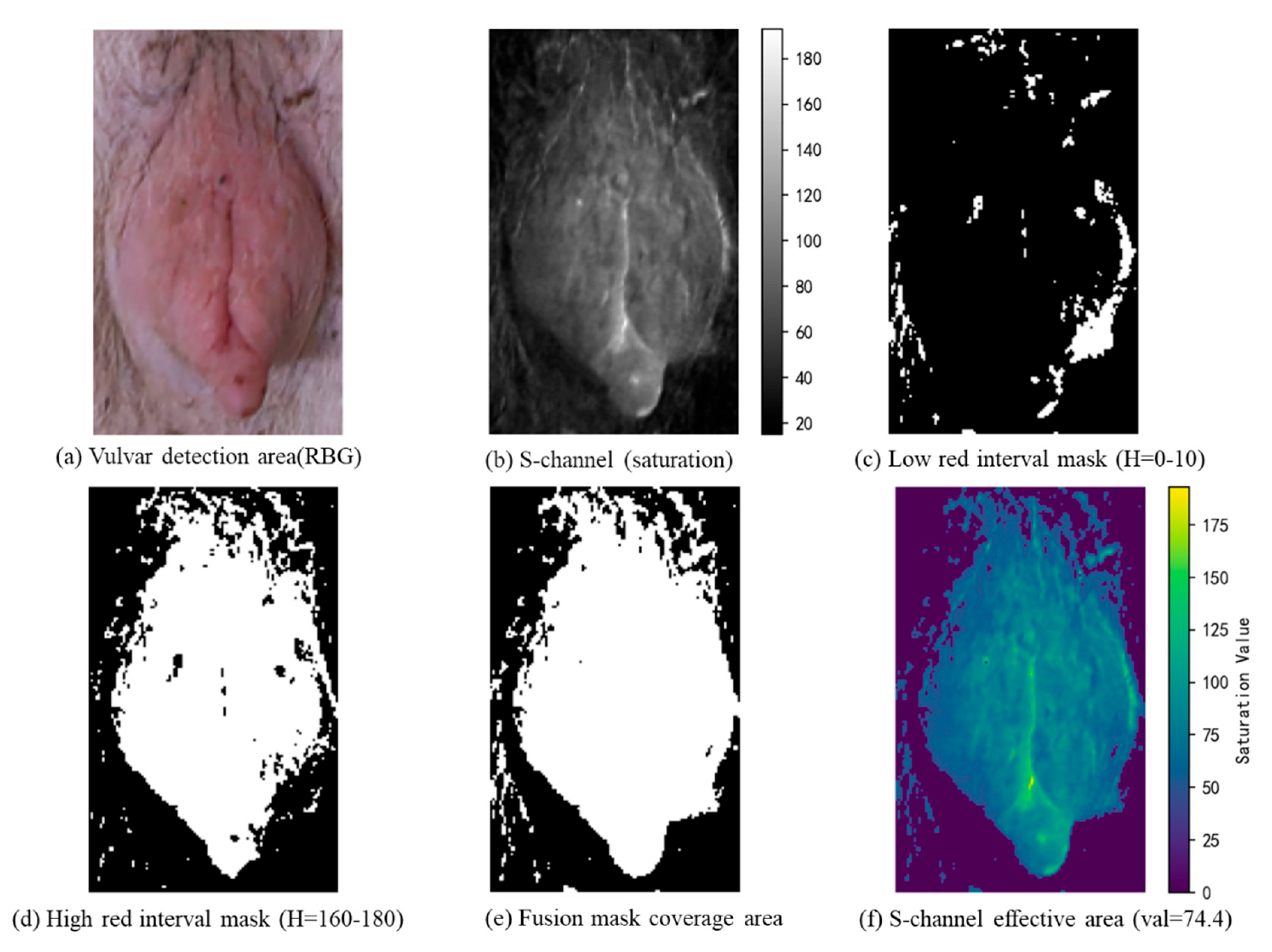

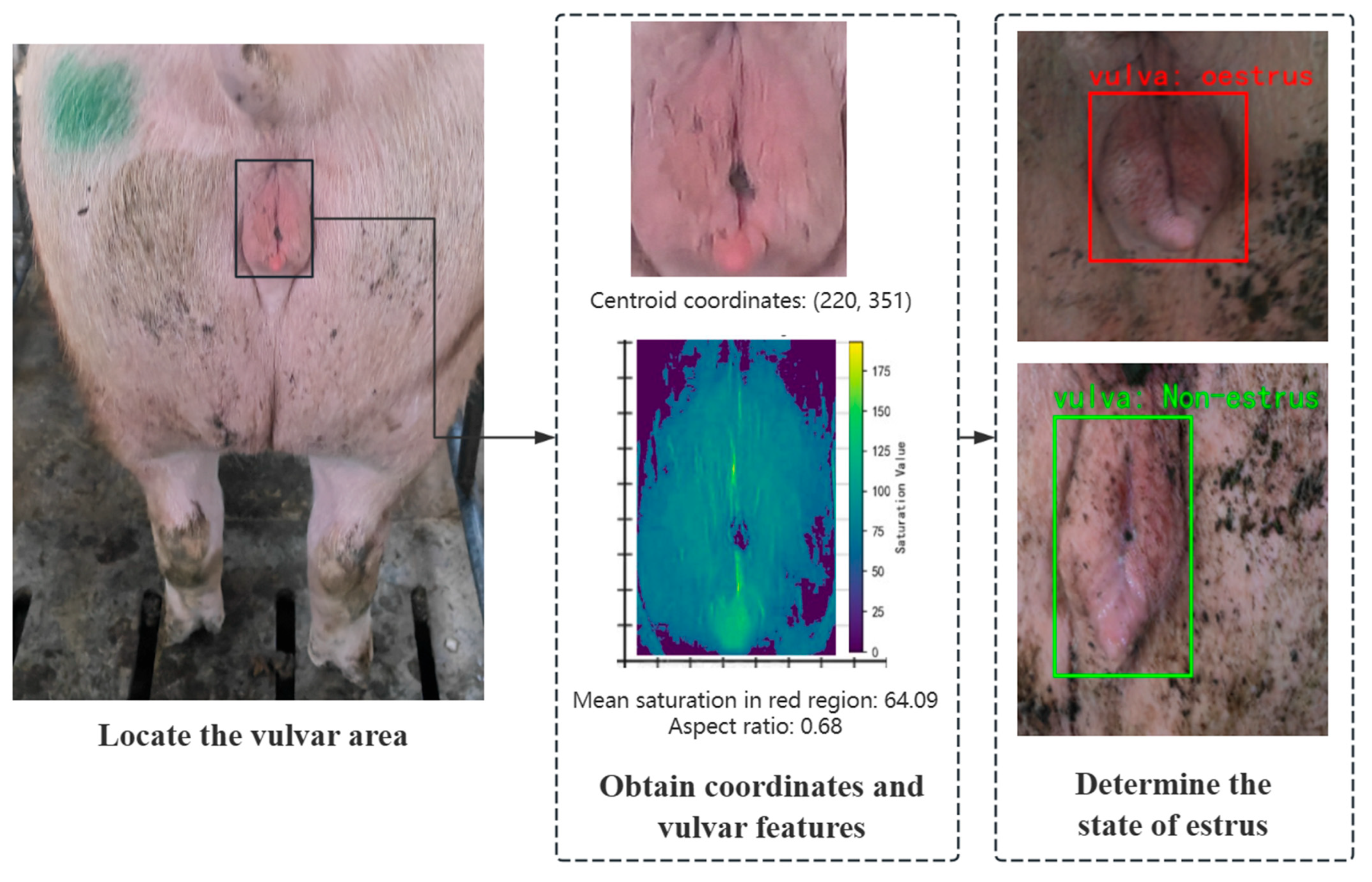

This study focuses on the efficient detection of sows’ vulvar features and proposes a lightweight estrus detection method based on an improved YOLOv11 model. Unlike approaches that output estrus results directly, our method embeds an explicit feature selection step in the detection framework: YOLOv11 first locates the vulvar region accurately, and biological features extracted from that region are then used for classification. To enhance both detection accuracy and robustness, two key features are employed: the aspect ratio and saturation. The aspect ratio, a normalized morphological parameter, quantifies changes in vulvar shape while mitigating the effects of individual differences and variations in camera distance. Saturation increases the model’s sensitivity to the changes in blood flow that accompany estrus and reduces the influence of varying lighting conditions on color features. The detector and the classifier are optimized separately and then combined, so that the machine learning components are guided by physiological knowledge; the resulting outputs are more interpretable and better suited to complex farming environments.
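The two features can be illustrated with a short sketch. It assumes that the detector returns an axis-aligned box (x1, y1, x2, y2) in pixel coordinates, that the aspect ratio is height divided by width, and that saturation is the mean HSV saturation of all pixels inside the box. The box format, the orientation of the ratio, and the helper names (`crop`, `aspect_ratio`, `mean_saturation`, `vulvar_features`) are illustrative assumptions, not the exact definitions used in this study, which may, for example, restrict saturation to red hues.

```python
import colorsys
from statistics import mean


def crop(image, box):
    """Return the RGB pixels of a row-major image inside box = (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return [px for row in image[y1:y2] for px in row[x1:x2]]


def aspect_ratio(box):
    """Height-to-width ratio of the box (orientation is an assumption).

    A ratio is unitless, so it does not change when a different
    camera-to-sow distance scales the box uniformly.
    """
    x1, y1, x2, y2 = box
    width, height = x2 - x1, y2 - y1
    if width <= 0 or height <= 0:
        raise ValueError("degenerate bounding box")
    return height / width


def mean_saturation(pixels):
    """Mean HSV saturation in [0, 1] of 8-bit RGB pixels.

    Per pixel, saturation = (max - min) / max, so a brightness change that
    scales R, G and B by the same factor leaves it unchanged.
    """
    return mean(colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1]
                for r, g, b in pixels)


def vulvar_features(image, box):
    """Hypothetical feature vector [aspect_ratio, mean_saturation]."""
    return [aspect_ratio(box), mean_saturation(crop(image, box))]


# Toy example: a uniform reddish 4 x 6 image and a 2-wide, 6-tall box.
image = [[(200, 60, 60)] * 4 for _ in range(6)]
print(vulvar_features(image, (1, 0, 3, 6)))  # aspect ratio 3.0, saturation ~0.7
```

Both quantities are ratios, which is why they are less sensitive than absolute size or raw color values to camera distance and uniform illumination changes.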

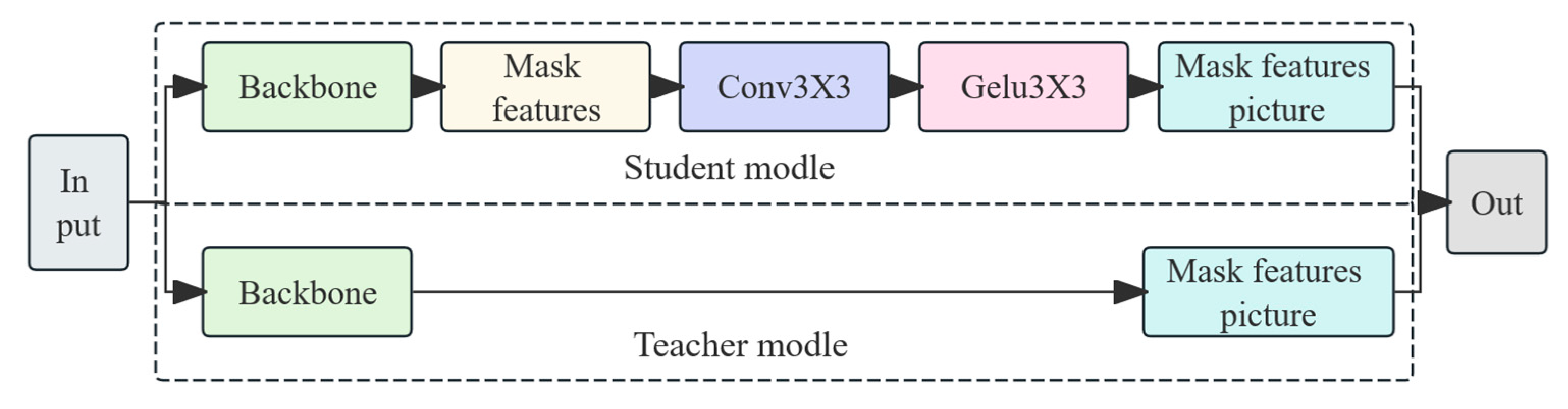

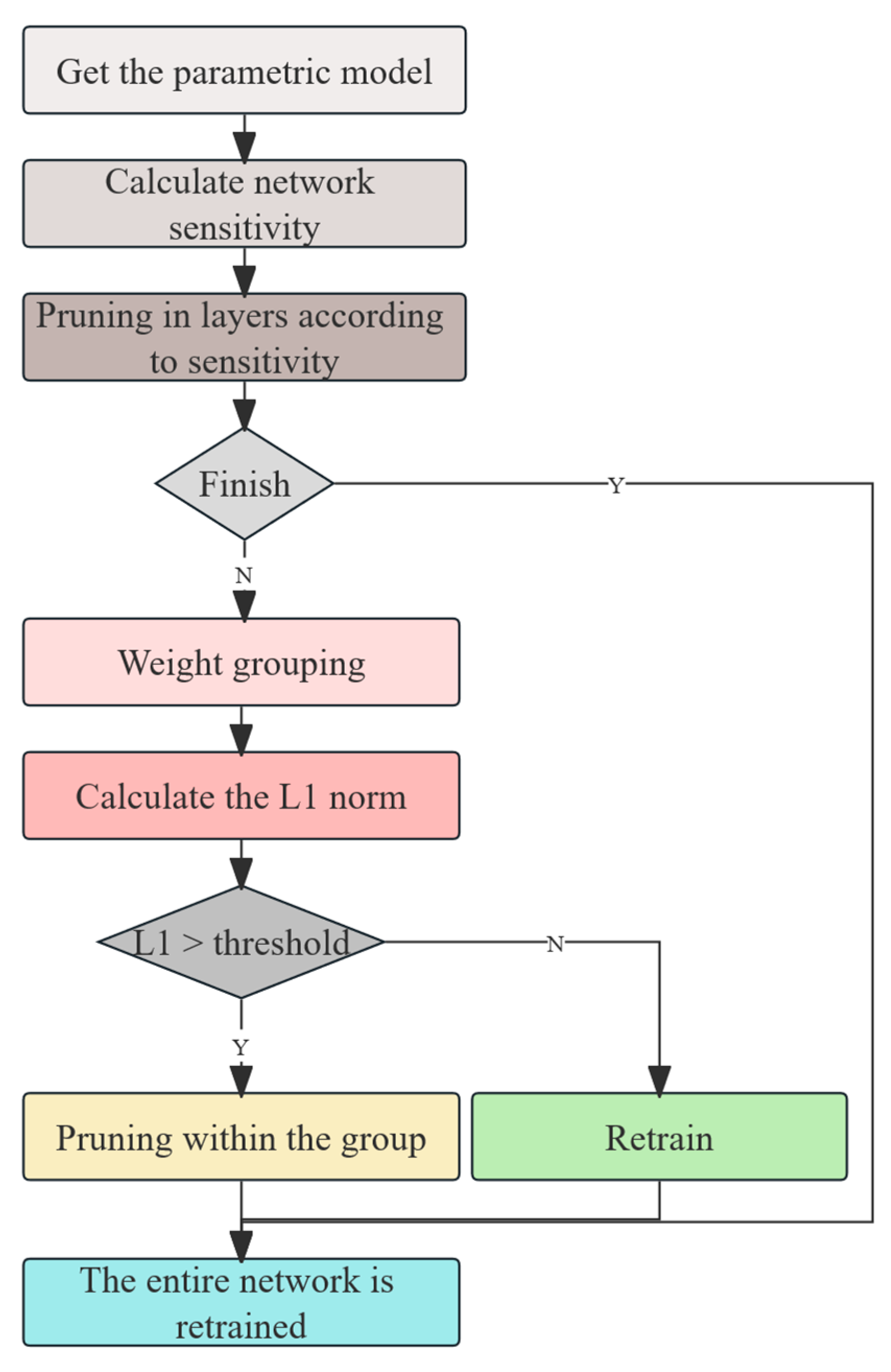

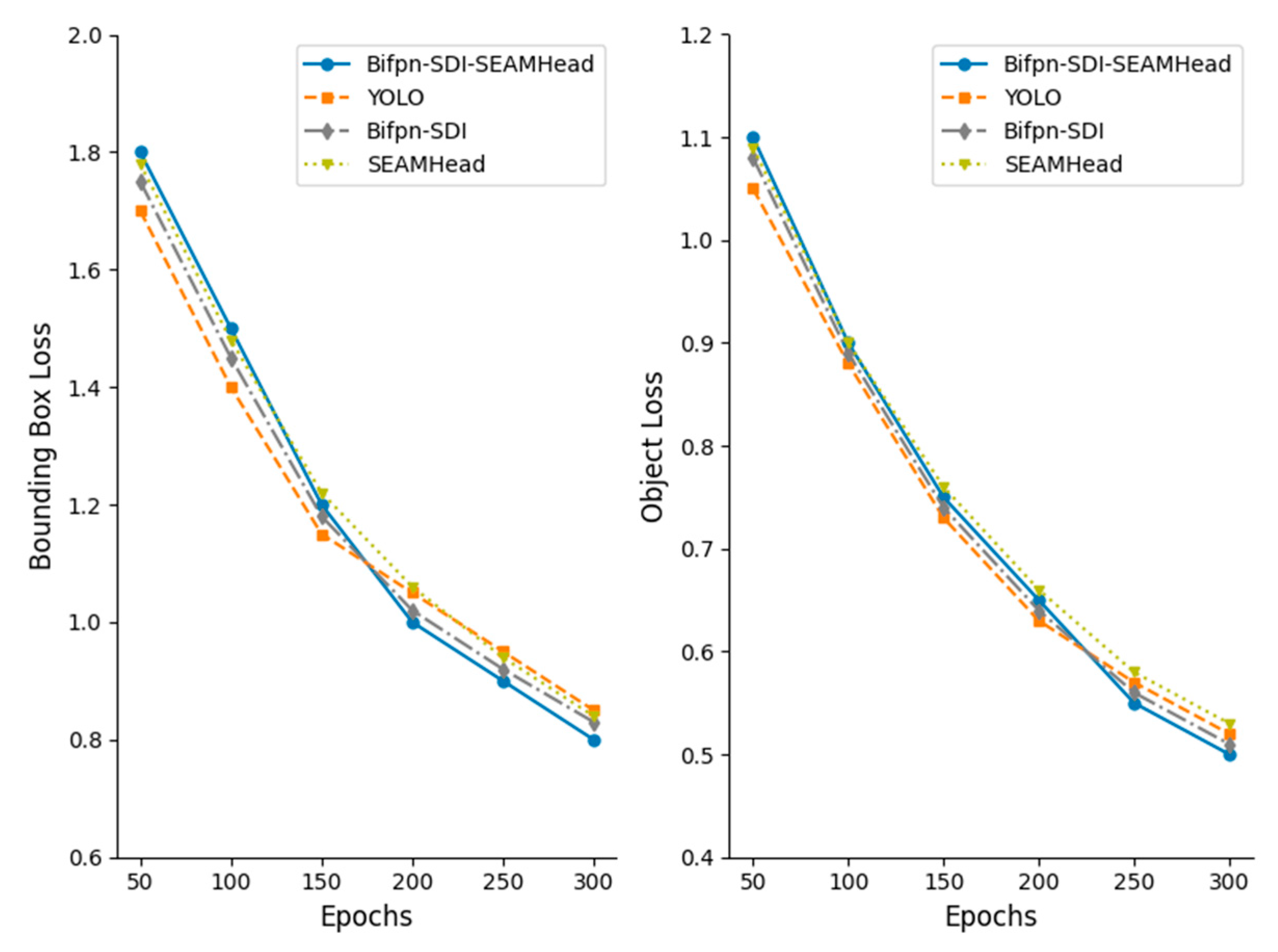

In terms of model optimization, this study introduces a BiFPN-SDI feature fusion module to improve multi-scale feature extraction and a SEAM-Head channel attention mechanism to strengthen the expression of key features. To reduce computational cost, MGD knowledge distillation is used to compress the model, reducing its size by 91% to 3.96 MB and achieving a per-frame detection time of 18.87 ms, which enables real-time operation on low-cost edge devices such as the Jetson Nano. Experimental results indicate that the proposed method maintains high detection accuracy even under complex conditions. The device helps caretakers quickly identify suspected estrus sows during routine inspections, reducing missed mating opportunities and improving breeding efficiency, and is especially suitable for farms with limited manpower. Compared with existing YOLOv4-based detection methods, our approach not only improves adaptability but also reduces the computational burden, making the estrus detection system more viable for practical deployment in large-scale pig farms.
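The weighted fusion at the core of BiFPN can be sketched as follows. The sketch follows the fast normalized fusion rule published with EfficientDet, O = Σ_i w_i·I_i / (ε + Σ_j w_j), where each learnable weight w_i is clipped at zero by a ReLU. It covers only this fusion step, not the SDI component or the resampling, convolutions, and weight training of the actual module; feature maps are flattened Python lists purely for illustration, and the function name is hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-shaped feature maps (given as flat lists) BiFPN-style.

    Computes O = sum_i w_i * I_i / (eps + sum_j w_j), where each learnable
    weight is first passed through ReLU so that it stays non-negative and
    the normalized weights lie in [0, 1].
    """
    if not features or len(features) != len(weights):
        raise ValueError("need one weight per input feature map")
    size = len(features[0])
    if any(len(f) != size for f in features):
        raise ValueError("resample inputs to a common resolution first")
    w = [max(0.0, wi) for wi in weights]  # ReLU on the raw weights
    norm = eps + sum(w)
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm
            for k in range(size)]


# A coarse (upsampled) map and a fine map fused with weights 1.0 and 3.0.
coarse = [0.0, 4.0, 8.0]
fine = [4.0, 4.0, 4.0]
print(fast_normalized_fusion([coarse, fine], [1.0, 3.0], eps=0.0))  # [3.0, 4.0, 5.0]
```

EfficientDet motivates this normalization as a cheaper alternative to softmax-based weighting, since it avoids exponentials while still letting the network learn how much each resolution contributes.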

Figure 1 shows the overall technical workflow of this study.

4. Discussion

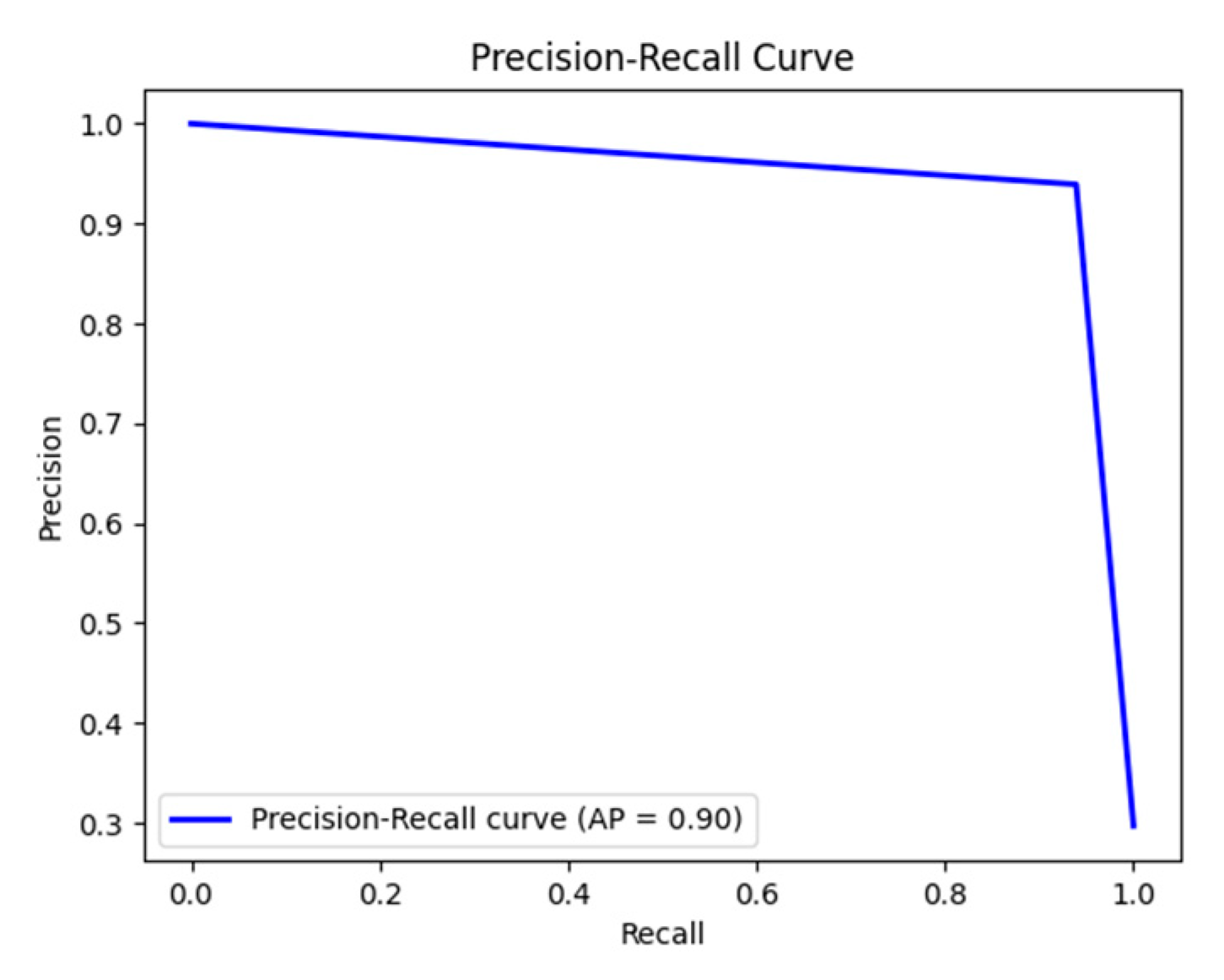

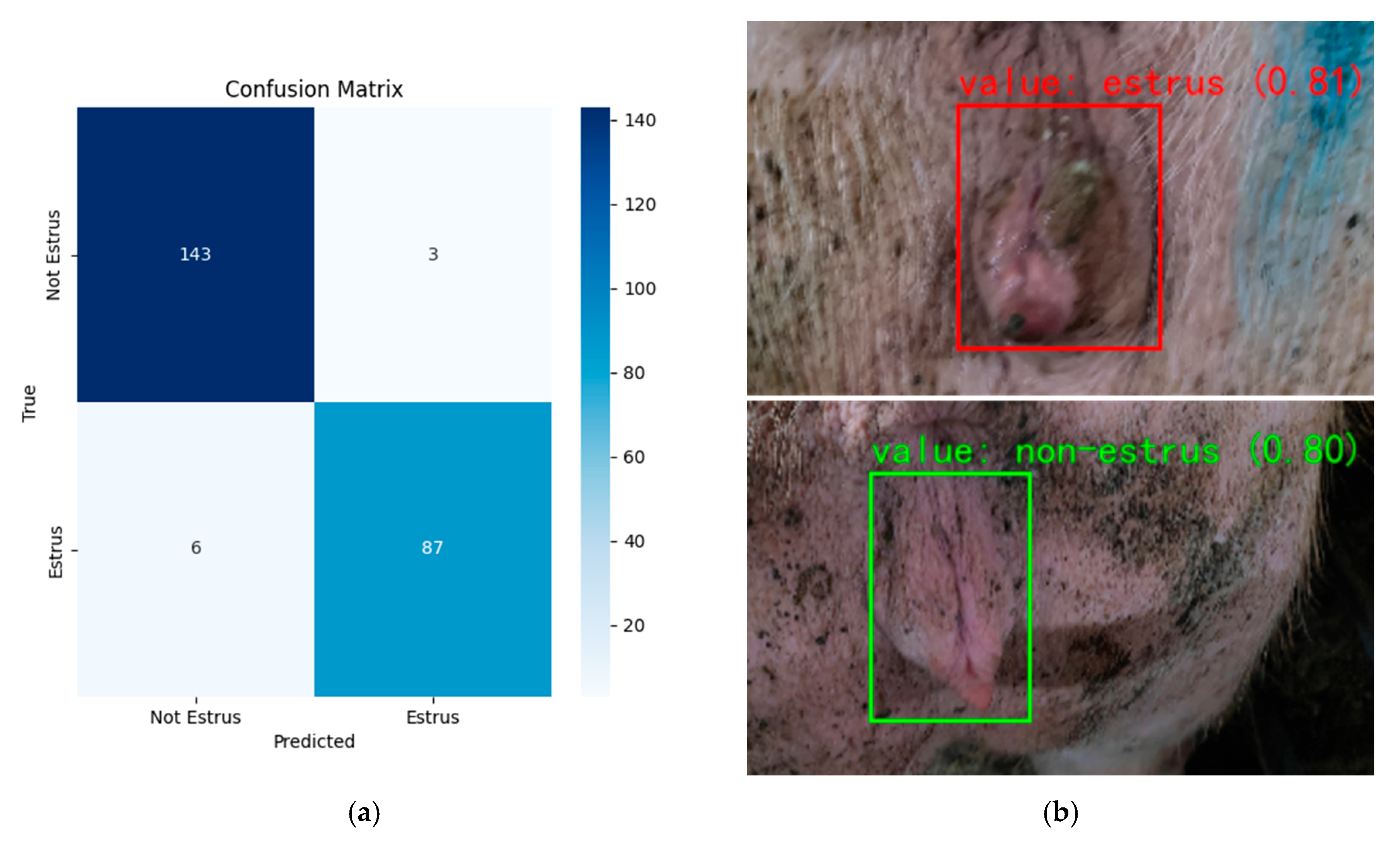

The present study demonstrates that our improved YOLOv11-BiFPN-SDI-SEAMHead detector, combined with a lightweight MLP classifier, enables accurate and interpretable estrus prediction based on vulvar localization and feature fusion. By adding the BiFPN-SDI and SEAM-Head modules and applying mask-guided knowledge distillation, we achieved ~91% model compression (from 67 GFLOPs to 6 GFLOPs; ~3.96 MB) while maintaining high accuracy (mAP50 ≈ 0.94, AUC ≈ 0.96) and real-time performance (~16 fps) on a Jetson Nano.
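As a quick arithmetic check, the relative reduction in computation and the per-frame time budget implied by the reported frame rate can be computed directly from the figures above; no other data are assumed.

```python
def relative_reduction(before, after):
    """Fraction of the original cost removed, e.g. for GFLOPs."""
    return 1.0 - after / before


def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


print(f"{relative_reduction(67, 6):.1%}")  # 91.0%  (67 -> 6 GFLOPs)
print(frame_budget_ms(16))                 # 62.5   (ms per frame at ~16 fps)
```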

4.1. Evaluation of the Estrus Detection Models

As summarized in Table 9, previous studies have explored different sensing and inference strategies. Texture-based methods using gray-level co-occurrence matrices (GLCM) and neural networks [18] offered limited accuracy (~70%) and lacked real-time capability. YOLOv4-based size estimation [19] achieved high object detection performance (>97%) but required geometric calibration and included no estrus classification mechanism, limiting its practical utility. Robotic imaging approaches combining 3D vulva volume reconstruction with 1D-CNNs [20] achieved strong test accuracy (~98%) by integrating behavioral and structural features, but their reliance on LiDAR sensors and overhead robotic platforms substantially increases system complexity and deployment cost. Infrared-based systems such as that of Xue et al. [21] combined YOLOv5s-based segmentation with ensemble regression to predict rectal temperature from vulvar thermal images, achieving high precision (MSE = 0.114 °C and IoU = 91.5%). However, that study did not address estrus detection or classification; it focused solely on non-contact physiological monitoring. Its segmentation and regression components were functionally separate, and no model was trained to infer estrus status.
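Intersection over union (IoU), reported for Xue et al.’s segmentation and also underlying the mAP50 metric used to evaluate our detector, is the overlap area of a prediction and its ground truth divided by their combined area. A minimal sketch for axis-aligned boxes, assuming (x1, y1, x2, y2) coordinates, is given below; for segmentation masks the same ratio is taken over pixel sets. The helper names are illustrative, and `matches_at_50` shows only the matching criterion of mAP50 (a prediction counts as a hit when IoU ≥ 0.5), not the full precision-recall averaging.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def matches_at_50(pred, truth):
    """True if the prediction would count as a hit at the IoU = 0.5 threshold."""
    return box_iou(pred, truth) >= 0.5


truth = (0, 0, 10, 10)
print(box_iou((5, 0, 15, 10), truth))        # 0.333... (box shifted by half its width)
print(matches_at_50((2, 0, 12, 10), truth))  # True (IoU = 80 / 120)
```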

In contrast, our approach directly extracts biologically grounded morphological and color features (aspect ratio and red saturation) from RGB images, enabling efficient estrus classification using low-cost, scalable hardware. The system runs in real time on edge devices without the need for sensor calibration, 3D reconstruction, or behavior fusion, offering a practical balance of accuracy, interpretability, and deployment feasibility for commercial sow estrus monitoring.

This study also found that YOLO alone is insufficient for reliable estrus classification. Although the modified YOLO module improved localization of the vulvar region, it did not capture subtle physiological signals. In contrast, combining biologically meaningful features with a lightweight MLP classifier significantly improved accuracy and interpretability, supporting the need for a two-stage design.
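The two-stage design can be summarized in a short sketch: a detector proposes the vulvar box, features are computed from that box, and a small MLP maps them to an estrus probability. Everything here is illustrative. `detect_stub` and `extract_stub` are hypothetical stand-ins for the trained YOLO model and the feature step, the single hidden layer with ReLU and sigmoid output is an assumed architecture, and the weights are arbitrary placeholders rather than trained values.

```python
import math


def mlp_probability(x, w1, b1, w2, b2):
    """One-hidden-layer MLP: ReLU hidden units, sigmoid output (assumed architecture)."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))


def two_stage_predict(image, detect, extract, classify, threshold=0.5):
    """Stage 1 localizes the vulva; stage 2 classifies its features.

    Returns None when no region is detected, else (is_estrus, probability).
    """
    box = detect(image)
    if box is None:
        return None
    p = classify(extract(image, box))
    return p >= threshold, p


# Placeholder weights for inputs [aspect_ratio, saturation]; NOT trained values.
W1, B1 = [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.0]
W2, B2 = [1.0, 1.0], -2.0


def classify(x):
    return mlp_probability(x, W1, B1, W2, B2)


def detect_stub(image):
    return (0, 0, 2, 2)  # hypothetical stand-in for the YOLO detector


def extract_stub(image, box):
    return [1.0, 0.5]  # hypothetical stand-in for the feature step


print(two_stage_predict(None, detect_stub, extract_stub, classify))  # (True, 0.5)
```

Keeping localization and classification separate means the classifier sees only a handful of named, physiologically motivated inputs, which is what makes its decisions easier to inspect than those of an end-to-end model.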

4.2. Current Deficiencies and Future Studies

Figure 16 shows the test results. Despite the overall strong performance, the system still fails in some challenging conditions. Partial occlusion of the sow’s vulva or motion blur in the images can cause the detector to miss or mislocalize the target, reducing recognition accuracy. In addition, if the vulva’s red coloration is not pronounced because of suboptimal lighting or limited camera sensitivity, the color cue on which the classifier relies is weakened, which may lead to missed estrus detections. Certain non-estrus conditions, such as inflammation or postpartum changes, can also cause vulvar swelling or redness that the model may mistake for estrus.

While the optimized model performed well in our tests, it still faces limitations in more complex, real-world farm environments. First, because the training data were collected under relatively uniform conditions, the model’s generalizability to other breeds, group housing systems, and variable lighting remains to be verified. Second, vulvar changes caused by factors unrelated to estrus, such as infections or injuries, could confuse the feature-based diagnosis, indicating a need for more robust discrimination of estrus-specific cues. To further enhance robustness, future work will expand the diversity of the dataset by incorporating sows of various breeds, ages, and housing conditions, and will explore multi-modal data fusion to mitigate the effects of lighting variation and occlusion. We also plan to add temporal analysis by tracking the same sow’s vulvar changes across multiple estrus cycles, which could help distinguish true estrus signals from anomalous variations. Addressing these issues through cross-modal integration and longitudinal monitoring should improve the model’s versatility and reliability, paving the way for deployment in more complex farm settings.