Simple Summary

Individual cow identification is a prerequisite for automatically monitoring the behavior patterns, health status, and growth data of each cow. Image-based methods adapt poorly to different environments and target sizes, and their detection accuracy is low in complex scenes. To solve these issues, this study designs a Dy_Conv (i.e., dynamic convolution) module and constructs a Dynamic_Bottleneck module based on Dy_Conv and the S2Attention (Sparse-shift Attention) mechanism. On this basis, we replace the first and fourth bottleneck layers of ResNet50 with the Dynamic_Bottleneck to accurately extract both local features and global information of cows. Furthermore, the QAConv (i.e., query adaptive convolution) module is introduced at the front end of the backbone network to adapt to scale changes in cow targets in the input images, and NAM (i.e., normalization-based attention module) attention is embedded at the back end of the network to better distinguish visually similar individual cows. Experiments on public datasets collected from different cowsheds show that the proposed model improves the accuracy of individual cow identification in complex scenes.

Abstract

Individual cow identification is a prerequisite for automatically monitoring the behavior patterns, health status, and growth data of each cow, and it can assist in selecting excellent individuals for breeding. Despite their high recognition accuracy, traditional implantable electronic devices such as RFID (i.e., Radio Frequency Identification) tags can cause some degree of harm or stress reactions in cows. Image-based methods are widely used because they are non-invasive, but they adapt poorly to different environments and target sizes, and their detection accuracy is low in complex scenes. To solve these issues, this study designs a Dy_Conv (i.e., dynamic convolution) module and constructs a Dynamic_Bottleneck module based on Dy_Conv and the S2Attention (Sparse-shift Attention) mechanism. On this basis, we replace the first and fourth bottleneck layers of ResNet50 with the Dynamic_Bottleneck to accurately extract both local features and global information of cows. Furthermore, the QAConv (i.e., query adaptive convolution) module is introduced at the front end of the backbone network; it adjusts the parameters and sizes of the convolution kernels to adapt to scale changes in cow targets across input images. In addition, NAM (i.e., normalization-based attention module) attention is embedded at the back end of the network to fuse features in the channel and spatial dimensions, which helps distinguish visually similar individual cows. Experiments were conducted on public datasets collected from different cowsheds, and the Rank-1, Rank-5, and mAP metrics reached 96.8%, 98.9%, and 95.3%, respectively. These results indicate that the proposed model can effectively capture and integrate multi-scale features of cow body appearance, improving the accuracy of individual cow identification in complex scenes.

1. Introduction

Individual identification of cows is a key technology for refined farm management. It not only enables automatic monitoring of the behavior patterns, health status, and growth data of each cow, but also provides strong technical support for selecting excellent breeding cows. Therefore, in the intelligent management of modern farms and ranches, automatic identification of individual cows is of great significance for ensuring scientific feeding and improving economic benefits [1,2].

Most cow identification technologies use portable electronic devices such as RFID ear tags to establish cow profiles [3,4]. However, these invasive electronic tags may cause a certain degree of harm and stress responses in cows [5,6]. In recent years, computer vision technologies have been widely applied in many fields [7,8,9], including agriculture and livestock management [10,11,12].

Machine-vision-based cow identification methods mainly adopt deep learning algorithms to extract features of the cow's back pattern [13,14] or face [15,16,17] in order to distinguish individual cows. Some studies have focused on coping with challenges such as lighting changes, varied animal postures, and partial occlusions. For example, Xiao et al. [18] enhanced low-light images with the image enhancement algorithm MSRCP (i.e., multi-scale Retinex with chromaticity preservation) and used a spatial pyramid pooling (SPP) structure to strengthen the model's feature extraction ability; these improvements significantly increased recognition accuracy under complex lighting conditions. Wang et al. [19] presented a spatial transformation deep feature extraction module integrating the SimAM attention mechanism and the ArcFace loss function to improve the model's adaptability to varied animal postures, partial occlusions, and complex lighting conditions.

Some studies have used multimodal image fusion technologies for individual cow identification [20]. For example, Andrew et al. [21] extracted local features of cows' back patterns from RGB-D images for cow identification. Zhao et al. [22] improved the point cloud model PointNet++ to exploit anchor point detection and surface pattern features in depth images for the individual identification of Holstein cows. Human re-identification technology has also been applied to individual cow identification [23]. Chen et al. [17] proposed a deep network model, GPN, that combines three branch modules (middle, global, and local) to extract facial features of cows at different dimensions, and further optimized local feature extraction with a spatial transformer network (STN). In addition, the spatio-temporal model CNN-BiLSTM has been applied to individual cattle recognition [24].

However, the above methods are usually designed for specific cowsheds. When applied to other cowsheds with complex environments, they may suffer from low recognition accuracy and poor generalization. In addition, as breeding scale and herd size grow, the high similarity in appearance between individual cows becomes more prominent, which poses greater challenges for cow identification [25]. Existing models struggle to capture enough discriminative features to distinguish individual cows, resulting in low recognition accuracy.

To address these issues, this paper proposes a novel cow identification model based on an improved ResNet50. First, QAConv was embedded at the front of the ResNet50 structure to adaptively adjust the size and parameters of the convolution kernels according to the feature map of the input query image, thereby focusing on the cow's back features and suppressing irrelevant backgrounds. Then, in the bottleneck structure, we designed a Dy_Conv module and constructed a Dynamic_Bottleneck module based on Dy_Conv and the S2Attention mechanism. On this basis, we replaced the first and fourth bottleneck layers of ResNet50 with the Dynamic_Bottleneck to accurately extract local detail features and global information of cows. Finally, the NAM attention mechanism was introduced at the back end of the ResNet50 structure to fuse features in the channel and spatial dimensions, which helps distinguish visually similar individual cows.

The primary contributions of the study can be summarized as follows:

- To effectively extract multi-scale features, we designed a dynamic convolution module, Dy_Conv, with a parallel multi-branch structure. Dy_Conv generates feature maps with receptive fields of different sizes, allowing the model to attend simultaneously to the global features and back pattern features of cows.

- We constructed the Dynamic_Bottleneck module by combining Dy_Conv with S2Attention and used it to replace the first and fourth bottleneck layers of ResNet50, reducing computational complexity while efficiently fusing multi-scale features. This helps the model generalize to different cowsheds with complex environments.

- We embedded QAConv at the front of ResNet50; it adjusts the parameters and sizes of the convolution kernels to adapt to scale changes in cow targets in the input images.

- To enhance the perception of local details, the NAM attention mechanism was introduced at the back end of ResNet50 to fuse features in the channel and spatial dimensions, which helps distinguish visually similar individual cows.

2. Materials and Methods

2.1. Experimental Dataset

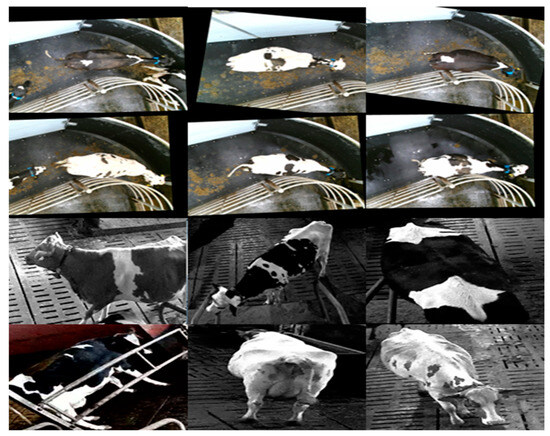

The images in the experimental dataset are derived from two public datasets. One, provided by the University of Bristol, contains 940 images of 89 individual cows in the milking room (https://data.bris.ac.uk/data/dataset/2yizcfbkuv4352pzc32n54371r (accessed on 6 December 2024)). The other consists of cow surveillance videos from different cowsheds (available at https://doi.org/10.5281/zenodo.3981400 (accessed on 20 June 2024)). In this second dataset, all images in a video recorded by a fixed camera share the same background, and each video is short, so most individual cows are captured from only one fixed angle. To validate the model more convincingly, we manually cropped 206 images of 30 individual cows taken from various angles. Example images are displayed in Figure 1.

Figure 1.

The example images of the dataset. The samples in the top two rows are taken from the milking room; the bottom rows are taken from the cowshed.

Following the dataset partitioning protocol used in pedestrian re-identification, we divided the dataset into a training set and a testing set. The testing set is further divided into a Gallery set and a Query set: the Gallery set contains images of cows with known identity numbers, and the Query set contains images of the cows to be identified by the model. The ratio of the training set, Gallery set, and Query set is 6:3:1.
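A minimal Python sketch of such a 6:3:1 split is shown below. It assumes the ratio is applied to each cow's images separately, so that every identity appears in all three subsets; the function name `split_reid` and the `(image_path, cow_id)` input format are hypothetical and not taken from the paper.

```python
import random
from collections import defaultdict


def split_reid(samples, ratios=(6, 3, 1), seed=0):
    """Split (image_path, cow_id) pairs into train / gallery / query lists.

    Assumption: the ratio is applied per identity, image by image.
    """
    by_id = defaultdict(list)
    for path, cow_id in samples:
        by_id[cow_id].append(path)

    rng = random.Random(seed)           # fixed seed for a reproducible split
    total = sum(ratios)
    train, gallery, query = [], [], []
    for cow_id, paths in sorted(by_id.items()):
        rng.shuffle(paths)
        n = len(paths)
        n_train = round(n * ratios[0] / total)
        n_gallery = round(n * ratios[1] / total)
        train += [(p, cow_id) for p in paths[:n_train]]
        gallery += [(p, cow_id) for p in paths[n_train:n_train + n_gallery]]
        query += [(p, cow_id) for p in paths[n_train + n_gallery:]]  # remainder
    return train, gallery, query


# Usage: two cows with ten images each -> 12 / 6 / 2 images.
samples = [(f"cow{c}_{i}.jpg", c) for c in range(2) for i in range(10)]
train, gallery, query = split_reid(samples)
print(len(train), len(gallery), len(query))
```

Splitting per identity keeps every query cow represented in the Gallery set, which closed-set matching requires; a split by disjoint identities (common in pedestrian re-identification benchmarks) would be the alternative reading of the protocol.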

2.2. Methods

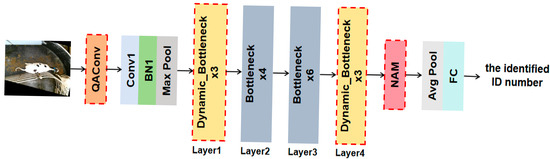

We designed the dynamic convolution module Dy_Conv and constructed the dynamic bottleneck block Dynamic_Bottleneck based on Dy_Conv and the S2Attention mechanism. On this basis, we replaced the first and fourth bottleneck layers of ResNet50 with the Dynamic_Bottleneck. The overall framework of the individual cow recognition model is shown in Figure 2. First, the QAConv module is introduced at the front end of the network to adapt to scale changes in the targets by adaptively adjusting the parameters and size of the convolution kernels based on the input cow images. Next, a four-layer bottleneck structure gradually extracts high-level semantic features of cows. In the Dynamic_Bottleneck structure, Dy_Conv consists of a depthwise convolution and two dilated convolutions with different dilation rates; this parallel branch structure accurately extracts both local features and global information of cows. The S2Attention mechanism adopts feature channel compression and a regional sparse sampling strategy to achieve multi-scale feature interaction and fusion. Then, the NAM attention mechanism at the back end of the network strengthens the local feature representation of the cow's back pattern in the spatial dimension, so that the channel-spatial feature fusion can better distinguish visually similar individual cows. Finally, a global average pooling layer compresses the spatial dimensions to obtain feature vectors with global representation ability, which a fully connected layer maps to the classification space.

Figure 2.

Overall framework of the cow individual recognition model. The QAConv module is introduced to adapt to the scale changes in the target. The proposed Dynamic_Bottleneck replaces the first and fourth bottleneck layers for the accurate extraction of local features and global information of cows. NAM is embedded for the feature fusion in the channel-spatial dimension.

2.2.1. Low-Level Feature Extraction Based on QAConv

Convolution kernels with fixed parameters and sizes are limited when facing challenging images, such as those with occlusions or targets of different sizes (Figure 3), because a fixed receptive field cannot easily adapt to scale changes in targets. The size and parameters of QAConv's convolution kernels are adjusted adaptively according to the feature maps of the input query image, which strengthens the model's attention to the cow's back characteristics while suppressing interference from irrelevant backgrounds.

Figure 3.

The challenging cow targets.

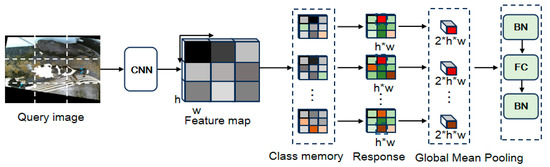

The structure of QAConv [26] is shown in Figure 4. A query image of an individual cow is input into the network, and a CNN backbone extracts feature maps of the query image and the candidate Gallery images. Class Memory extracts a fixed-size local region at each spatial position of the feature map to adaptively set the convolution kernel parameters. In the Response phase, local matching scores are calculated by pointwise convolution of the adaptive kernels with the feature map. Next, Global Mean Pooling aggregates the local matching scores into a global matching score while reducing dimensionality. Finally, BN-FC-BN converts the matching score into a similarity measure used to match the query image against the candidate images.

Figure 4.

The structure of QAConv.
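The query-adaptive matching idea can be illustrated with a small NumPy sketch. It assumes 1 × 1 local regions (so each query location's feature vector acts as a kernel), L2-normalized features, and taking the best gallery match for each query location before mean pooling; the learned BN-FC-BN head and the Class Memory of [26] are omitted, and `qaconv_style_score` is a hypothetical name, not the API of any QAConv code release.

```python
import numpy as np


def l2_normalize(f, axis=0, eps=1e-12):
    """Scale feature vectors along `axis` to unit length."""
    return f / (np.linalg.norm(f, axis=axis, keepdims=True) + eps)


def qaconv_style_score(query_fmap, gallery_fmap):
    """Similarity of two (C, H, W) feature maps using query features as kernels."""
    c = query_fmap.shape[0]
    q = l2_normalize(query_fmap.reshape(c, -1))    # (C, Nq): one 1x1 kernel per query location
    g = l2_normalize(gallery_fmap.reshape(c, -1))  # (C, Ng): gallery locations
    # Response: sliding a 1x1 kernel over the gallery map is a dot product with
    # every gallery location, so all responses form one matrix product.
    response = q.T @ g                              # (Nq, Ng) local matching scores
    best_local = response.max(axis=1)               # best gallery match per query location
    return float(best_local.mean())                 # mean pooling -> global score


rng = np.random.default_rng(0)
a = rng.normal(size=(64, 6, 3))
similar = a + 0.01 * rng.normal(size=a.shape)
unrelated = rng.normal(size=(64, 6, 3))
print(qaconv_style_score(a, similar) > qaconv_style_score(a, unrelated))  # True
```

Because the kernels come from the query image itself, the matcher adapts to whatever local patterns (here, stand-ins for back-pattern patches) the query contains, rather than relying on a fixed learned filter bank.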

2.2.2. Dynamic_Bottleneck for Multi-Scale Feature Extraction

The Bottleneck of the original ResNet50 has limited ability to capture global features because of its fixed receptive fields. In addition, its feature fusion relies solely on simple residual connections and cannot dynamically adjust the weights of feature channels. Compared with standard convolution, dynamic convolution adjusts its kernel parameters based on the input feature maps, which enhances the feature expression ability of the model and makes it more suitable for complex tasks.
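For reference, a common formulation of dynamic convolution aggregates K candidate kernels $W_k$ with input-dependent weights $\pi_k(x)$, typically produced by a softmax over a small network applied to the globally pooled input. This general form is shown only for illustration; the paper does not state that Dy_Conv is parametrized exactly this way.

$$
\tilde{W}(x) = \sum_{k=1}^{K} \pi_k(x)\, W_k, \qquad \pi_k(x) \ge 0, \qquad \sum_{k=1}^{K} \pi_k(x) = 1
$$

$$
y = \tilde{W}(x) * x = \sum_{k=1}^{K} \pi_k(x)\,\big(W_k * x\big)
$$

Here $*$ denotes convolution, and the second equality follows from its linearity. The right-hand form, a weighted sum of branch outputs, is also a plausible reading of the multi-branch Dy_Conv: kernels with different sizes or dilation rates cannot be summed directly as kernels, but their outputs can.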

In the individual cow recognition network, we constructed the Dynamic_Bottleneck and used it to replace the first and fourth bottleneck layers of ResNet50, as shown in Figure 2. Compared with the original bottleneck, the Dynamic_Bottleneck in Layer1 preserves more low-level visual features and avoids the loss of local detail, and the Dynamic_Bottleneck in Layer4 expands the receptive fields through the multi-branch Dy_Conv, enhancing the model's ability to integrate global information. The number of channels in Layer2 and Layer3 is relatively small, so the performance improvement that Dynamic_Bottleneck would bring there is limited. Moreover, the flexibility of dynamic convolution is not conducive to stable context modeling, so the standard bottleneck structure is retained in Layer2 and Layer3.

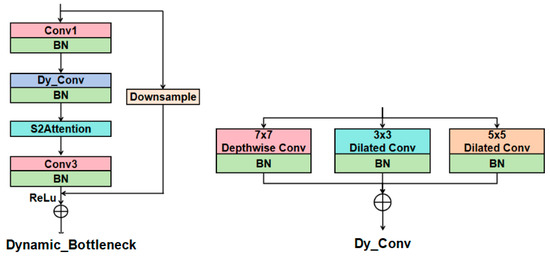

The structure of the proposed Dynamic_Bottleneck is shown in Figure 5. Dynamic_Bottleneck is constructed by introducing Dy_Conv and S2Attention into the bottleneck of ResNet50. Dy_Conv is a parallel structure consisting of one depthwise convolution branch and two dilated convolution branches: the depthwise convolution captures local features, the 5 × 5 dilated convolution expands the receptive field to capture large-scale contextual information, and the 3 × 3 dilated convolution enhances local details. Dy_Conv therefore generates feature maps with receptive fields of different sizes. The weighted fusion of these branches contains both local features and contextual information, allowing the model to attend simultaneously to the global features and the back pattern features of cows, which benefits individual cow identification.

Figure 5.

The structure of Dynamic_Bottleneck. Dy_Conv and S2Attention are embedded into the Bottleneck. Dy_Conv is a parallel structure consisting of a depthwise convolution branch and two dilated convolution branches.
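A minimal NumPy sketch of the parallel-branch fusion is given below. The kernel sizes follow the text, but the dilation rate of 2 for both dilated branches, the use of depthwise (per-channel) kernels in every branch, and the softmax gate computed from global average pooling (`gate_w`) are assumptions; all function names are hypothetical.

```python
import numpy as np


def depthwise_conv2d(x, w, dilation=1):
    """Per-channel 2-D cross-correlation with 'same' zero padding and stride 1.

    x: (C, H, W) feature map; w: (C, k, k) kernels with odd k.
    """
    c, h, wd = x.shape
    k = w.shape[-1]
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c, h, wd))
    for i in range(k):
        for j in range(k):
            # Add one (dilated) kernel tap for all channels at once.
            tap = w[:, i, j][:, None, None]
            out += tap * xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + wd]
    return out


def effective_kernel_size(k, dilation):
    """Side length of the neighbourhood covered by one dilated k x k kernel."""
    return dilation * (k - 1) + 1


def dy_conv(x, branches, gate_w):
    """Weighted fusion of parallel depthwise branches.

    branches: list of (kernels of shape (C, k, k), dilation) pairs.
    gate_w: hypothetical (num_branches, C) gating matrix; the branch weights are a
    softmax over gate_w @ GAP(x), so they change with the input.
    """
    logits = gate_w @ x.mean(axis=(1, 2))
    alpha = np.exp(logits - logits.max())
    alpha /= alpha.sum()
    return sum(a * depthwise_conv2d(x, w, d) for a, (w, d) in zip(alpha, branches))


rng = np.random.default_rng(0)
C = 4
x = rng.normal(size=(C, 8, 8))
branches = [
    (rng.normal(size=(C, 3, 3)), 1),  # depthwise 3x3: local features
    (rng.normal(size=(C, 5, 5)), 2),  # dilated 5x5: large-scale context
    (rng.normal(size=(C, 3, 3)), 2),  # dilated 3x3: local detail
]
y = dy_conv(x, branches, rng.normal(size=(3, C)))
print(y.shape, [effective_kernel_size(w.shape[-1], d) for w, d in branches])  # (4, 8, 8) [3, 9, 5]
```

With dilation 2, the 5 × 5 and 3 × 3 branches cover 9 × 9 and 5 × 5 neighbourhoods, so the branches see different receptive fields without adding weights.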

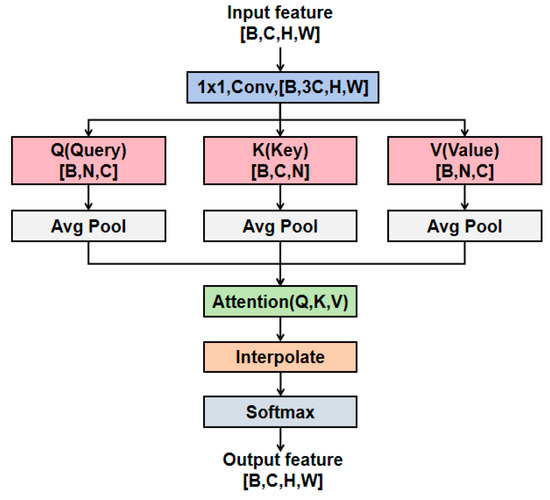

The S2Attention module [27] models attention over key regions using a sparse sampling strategy. In addition, multi-scale feature interaction based on down-sampling and up-sampling operations enhances the model's perception of cow targets of different sizes. As shown in Figure 6, S2Attention first uses a 1 × 1 convolution to triple the number of channels of the feature map and splits the result into Query, Key, and Value tensors, followed by average pooling to construct a multi-scale feature representation. Attention is then calculated in the down-sampled feature space. Finally, an up-sampling operation restores the original spatial dimensions.

Figure 6.

The structure of S2Attention. B is the batch size, C represents the number of channels, and H and W represent the height and width of the feature maps, respectively; the down-sampled feature maps have size H/r × W/r, where the down-sampling ratio r is set as 2 to balance feature representation and computational cost.
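The steps described for S2Attention (1 × 1 channel expansion, Q/K/V split, average pooling, attention in the down-sampled space, up-sampling) can be sketched in NumPy as follows. This is a generic pooled self-attention written from the description above, not the reference implementation of [27]; the function name, the scaled dot-product softmax, and nearest-neighbour up-sampling are assumptions.

```python
import numpy as np


def pooled_self_attention(x, w_qkv, r=2):
    """x: (C, H, W) with H and W divisible by r; w_qkv: (3C, C) 1x1-conv weights."""
    c, h, w = x.shape
    qkv = np.einsum('oc,chw->ohw', w_qkv, x)        # 1x1 convolution: C -> 3C channels
    q, k, v = np.split(qkv, 3, axis=0)               # each (C, H, W)

    def avg_pool(t):                                  # r x r average pooling, flattened
        return t.reshape(c, h // r, r, w // r, r).mean(axis=(2, 4)).reshape(c, -1)

    q, k, v = avg_pool(q), avg_pool(k), avg_pool(v)  # each (C, N) with N = HW / r^2
    scores = q.T @ k / np.sqrt(c)                    # (N, N) attention in down-sampled space
    scores -= scores.max(axis=1, keepdims=True)      # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    out = (v @ attn.T).reshape(c, h // r, w // r)    # out[:, n] = sum_m attn[n, m] * v[:, m]
    return out.repeat(r, axis=1).repeat(r, axis=2)   # nearest up-sampling back to H x W


rng = np.random.default_rng(1)
y = pooled_self_attention(rng.normal(size=(8, 6, 6)), 0.1 * rng.normal(size=(24, 8)))
print(y.shape)  # (8, 6, 6)
```

With r = 2 the attention matrix has (HW/4)² entries instead of (HW)², a 16-fold reduction, which illustrates the representation/cost trade-off mentioned in the caption.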

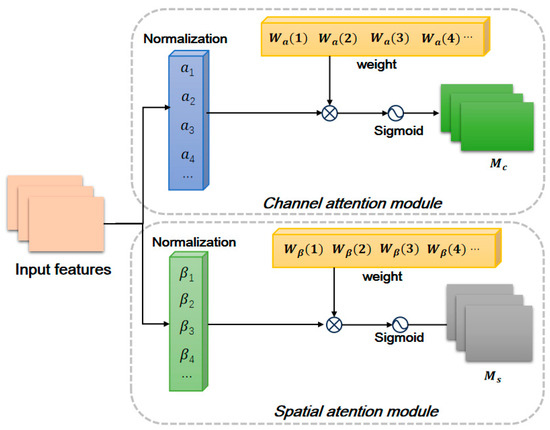

2.2.3. NAM for the Fusion of Channels and Spatial Features

The NAM attention mechanism [28] computes channel attention and spatial attention in parallel. The channel attention sub-module dynamically adjusts the weight of each channel to emphasize important channels. The spatial attention sub-module generates pixel-wise attention weights to focus on the pixels carrying the most relevant information in the cow image; this fine-grained spatial attention strengthens the local feature representation of the cow's back patterns. By integrating features in the channel and spatial dimensions, NAM helps distinguish visually similar individual cows and improves the accuracy of individual cow recognition.

As shown in Figure 7, NAM uses the scale factors of batch normalization (BN) to measure the importance of each channel. The channel attention module first normalizes the input feature map:

$$
B_{out} = BN(B_{in}) = \gamma \frac{B_{in} - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^{2} + \epsilon}} + \beta \tag{1}
$$

where $B_{in}$ and $B_{out}$ denote the input and output feature maps, respectively; $\mu_{\mathcal{B}}$ and $\sigma_{\mathcal{B}}^{2}$ are the mean and variance of the mini-batch, respectively; $\epsilon$ is a small constant for numerical stability; and $\gamma$ and $\beta$ are the trainable affine transformation parameters (i.e., scale factor and shift factor) of each channel [28,29]. The scale factors are then normalized to reduce the numerical differences between channels. The normalized channel weight is given by Equation (2):

$$
W_{\gamma,i} = \frac{\gamma_i}{\sum_{j} \gamma_j} \tag{2}
$$

where $\gamma_i$ is the scale factor of the $i$-th channel and $W_{\gamma,i}$ is its weight. The weighted, normalized features are passed through the Sigmoid function to obtain the output $M_c$ of the NAM channel attention module, as given by Equation (3):

$$
M_c = \mathrm{sigmoid}\big(W_{\gamma}(BN(F_1))\big) \tag{3}
$$

where $F_1$ is the input feature map of the channel attention module.

Figure 7.

The structure of NAM.

Similarly, the spatial attention module applies the same normalization pixel-wise to obtain normalized pixel weights, and the Sigmoid function is then used to compute the output of the NAM spatial attention module.
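A NumPy sketch of the channel attention branch following Equations (1) to (3) is shown below. The (N, C, H, W) input layout, the use of |γ| in the weight normalization (which keeps the weights non-negative), and multiplying the attention map back onto the input are assumptions for illustration; `nam_channel_attention` is a hypothetical name, and the trainable γ and β are passed in as fixed arrays.

```python
import numpy as np


def nam_channel_attention(x, gamma, beta, eps=1e-5):
    """x: (N, C, H, W) mini-batch; gamma, beta: (C,) BN affine parameters."""
    mu = x.mean(axis=(0, 2, 3), keepdims=True)                         # per-channel batch mean
    var = x.var(axis=(0, 2, 3), keepdims=True)                          # per-channel batch variance
    bn = gamma[None, :, None, None] * (x - mu) / np.sqrt(var + eps) \
        + beta[None, :, None, None]                                     # Eq. (1)
    w_gamma = np.abs(gamma) / np.abs(gamma).sum()                       # Eq. (2), |gamma| assumed
    m_c = 1.0 / (1.0 + np.exp(-w_gamma[None, :, None, None] * bn))      # Eq. (3): sigmoid
    return m_c * x                                                      # re-weight the input


rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 4, 4))
out = nam_channel_attention(x, gamma=np.array([1.0, 0.5, 2.0]), beta=np.zeros(3))
print(out.shape)  # (2, 3, 4, 4)
```

Channels whose BN scale factor γ is small receive a small weight in Equation (2), so their normalized responses contribute less to the attention map; this is how NAM reuses BN statistics instead of adding extra fully connected layers.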

3. Results

3.1. Experimental Details

The experiments were implemented on an NVIDIA RTX 3060 GPU with the PyTorch 1.7.1 framework. Input images were resized to 384 × 192. Several data augmentation methods were applied to increase the number of training samples, including random cropping, masking, horizontal flipping, and mirroring. To compare all models fairly, we trained them with the same hyper-parameters: an image padding of 10, a batch size of 16, and a momentum of 0.9. Training ran for 80 epochs with an initial learning rate of 0.0001, using the Adam optimizer and the triplet loss function. Cumulative Matching Characteristics (CMC) and mean Average Precision (mAP) were used to evaluate the results, with Rank-1 and Rank-5 accuracy reported for CMC.
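Both metrics can be computed from a query-gallery distance matrix, as in the sketch below. It follows the usual re-identification definitions (Rank-k: fraction of queries whose top-k Gallery results contain the correct identity; AP: mean precision at the positions of the true matches); the function name and the choice to skip queries with no true match in the Gallery are assumptions.

```python
import numpy as np


def evaluate_reid(dist, query_ids, gallery_ids, ranks=(1, 5)):
    """dist: (num_query, num_gallery) distances; smaller means more similar."""
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)
    order = np.argsort(dist, axis=1)                     # gallery indices, best first
    matches = gallery_ids[order] == query_ids[:, None]  # (Q, G) True where IDs agree
    matches = matches[matches.any(axis=1)]               # drop queries with no true match
    cmc = {k: float(matches[:, :k].any(axis=1).mean()) for k in ranks}
    aps = []
    for row in matches:
        hits = np.flatnonzero(row)                       # 0-based ranks of the true matches
        precision_at_hits = np.arange(1, hits.size + 1) / (hits + 1)
        aps.append(precision_at_hits.mean())
    return cmc, float(np.mean(aps))


dist = np.array([[0.1, 0.5, 0.3],
                 [0.4, 0.2, 0.6]])
cmc, m_ap = evaluate_reid(dist, query_ids=[1, 1], gallery_ids=[1, 2, 1])
print(cmc, round(m_ap, 4))  # {1: 0.5, 5: 1.0} 0.7917
```

In this toy example the second query's nearest Gallery image belongs to another cow, so it misses at Rank-1 but is recovered at Rank-5, and its AP is (1/2 + 2/3)/2; mAP averages this with the first query's AP of 1.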

3.2. Ablation Experiments

To validate the effectiveness of each improvement for individual cow recognition, we conducted ablation experiments on QAConv, NAM attention, Dy_Conv, and S2Attention. As seen in Table 1, the baseline ResNet50 achieves a Rank-1 of only 89.7% and an mAP of 90.8%. Introducing QAConv, whose kernel size and parameters adapt to the input, improved the model's adaptability to target scale changes across images and raised Rank-1 and mAP by 4.9% and 2.9%, respectively. However, when the bottleneck blocks of Layer1 and Layer4 were replaced with Dynamic_Bottleneck, Rank-1 decreased by 0.3%, although Rank-5 and mAP improved slightly. The drop in Rank-1 indicates that the model became worse at distinguishing individual cows with similar appearance, because Dy_Conv did not fuse the features extracted from its different receptive fields well. Consistent with this, performance decreased further when all four bottleneck layers were replaced with Dynamic_Bottleneck. After S2Attention was incorporated into Dynamic_Bottleneck to fuse these features effectively, all three metrics improved, with Rank-1 increasing by 1.4%. Furthermore, using Dynamic_Bottleneck with S2Attention in all four layers gave lower performance than using it in two layers, despite having fewer parameters. The NAM attention mechanism strengthened the local feature representation of cow back patterns through spatial attention, improving Rank-1, Rank-5, and mAP by 1.1%, 1.3%, and 0.6%, respectively. To reduce the number of parameters, we down-sampled the Layer2 and Layer3 bottlenecks of ResNet50.

Table 1.

Ablation experiment.

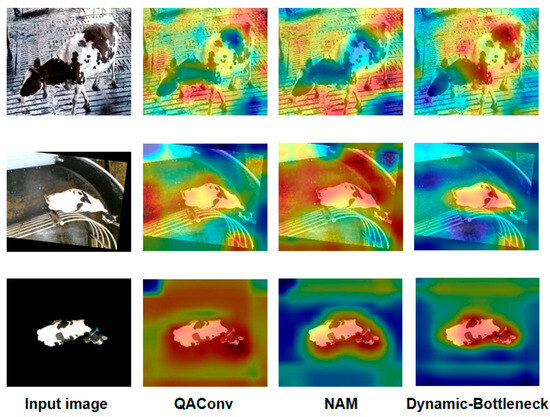
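The Rank-1 increments reported in the ablation text can be chained as a consistency check. This short sketch assumes each stated increment is in percentage points and is measured against the configuration before it; the intermediate values it prints are inferred from the text, not read from Table 1.

```python
# Rank-1 (%) after each modification, chained from the increments stated in the text.
# Assumption: each increment is in percentage points relative to the previous row.
baseline = 89.7
steps = [
    ("+ QAConv", 4.9),
    ("+ Dynamic_Bottleneck in Layer1/Layer4 (Dy_Conv only)", -0.3),
    ("+ S2Attention inside Dynamic_Bottleneck", 1.4),
    ("+ NAM", 1.1),
]
rank1 = baseline
for name, delta in steps:
    rank1 = round(rank1 + delta, 1)  # round to one decimal to avoid float drift
    print(f"{name:<55} Rank-1 = {rank1:.1f}%")
```

Under this reading the chain passes through 94.6%, 94.3%, and 95.7% and ends at 96.8%, which matches the Rank-1 reported for the full model in Table 2.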

Figure 8 visualizes the feature heatmaps of the three modules (QAConv, Dynamic_Bottleneck, and NAM) introduced into ResNet50. As seen in Figure 8, the QAConv module focuses more on the cow's back pattern features but also attends to many irrelevant background regions. Embedding NAM reduces background attention to a certain extent, especially in the third row of Figure 8, but it can also cause the model to pay insufficient attention to the key features of cows, as shown in the first row. Furthermore, after the bottleneck of ResNet50 is replaced with Dynamic_Bottleneck, Dy_Conv extracts multi-scale features from different receptive fields, and the S2Attention module enables multi-scale feature interaction during fusion. As a result, the output heatmap attends strongly to the cows' back patterns while paying significantly less attention to irrelevant backgrounds.

Figure 8.

Visualization of the feature heatmaps.

3.3. Model Evaluation

To validate the performance of the proposed individual cow recognition model, we compared it with mainstream animal re-identification models (TigerReID, Part-Pose ReID) and pedestrian re-identification models (BDB and OSNet-AIN). All experiments were conducted on the two datasets described in Section 2.1, partitioned according to the same protocol, so that all models are compared fairly. As shown in Table 2, the proposed method outperforms the comparison methods on all evaluation metrics. Compared with the best competing method, OSNet-AIN, the proposed method achieves Rank-1, Rank-5, and mAP values that are 3.1%, 1.1%, and 1.4% higher, respectively. The experimental data come from different cowsheds, including actual cowshed scenes where individual cows look very similar and the lighting is relatively dark, which also supports the generalization ability of our method across application scenarios.

Table 2.

Comparison with advanced identification methods.


| Method | Rank-1 | Rank-5 | mAP |
|---|---|---|---|
| TigerReID [30] | 86.1% | 93.3% | 87.5% |
| Part-Pose ReID [31] | 89.7% | 94.7% | 91.7% |
| BDB [32] | 89.7% | 95.7% | 89.7% |
| OSNet-AIN [33] | 93.7% | 97.8% | 93.9% |
| Ours | 96.8% | 98.9% | 95.3% |

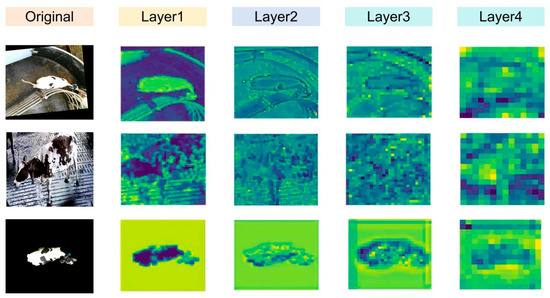

3.4. Visualization of Feature Maps

We visualized the feature map of each bottleneck layer of the proposed model (shown in Figure 2). Layer1 and Layer4 used the Dynamic_Bottleneck proposed in this study, while Layer2 and Layer3 used the bottleneck of ResNet50. The feature maps output by the last convolution in each layer are shown in Figure 9. Layer1 and Layer2 extracted low-level features such as cow contours and fine-grained features such as back pattern texture; Layer3 and Layer4 extracted high-level semantic information, such as the topological structure and spatial distribution of cow back patterns, which is used to distinguish the feature differences between individual cows. This process forms a hierarchical mapping from low-level visual patterns to high-level semantic abstractions.

Figure 9.

Visualization of the feature map of bottleneck structure. The feature maps are output by the last convolution in each layer. Layer1 and Layer4 used the Dynamic_Bottleneck proposed in this study, while Layer2 and Layer3 used the bottleneck of Resnet50.

4. Discussion

4.1. Advantage of Dynamic_Bottleneck Design

Dynamic_Bottleneck combines the dynamic convolution module Dy_Conv and S2Attention. As shown in Table 1, after Dynamic_Bottleneck is used in the bottleneck structure, the model’s metrics Rank-1, Rank-5, and mAP are significantly improved. From the visualization feature maps in Figure 8 and Figure 9, it can be seen that the model simultaneously focuses on the global features and back pattern features of cows. This indicates that the Dynamic_Bottleneck module effectively extracts and integrates local detail features and global contextual information, providing rich feature representations for individual cow recognition.

Compared with the original bottleneck of ResNet50, Dynamic_Bottleneck requires fewer parameters in practice. This is because the Dy_Conv block in Dynamic_Bottleneck uses depthwise separable convolution and a parallel branch design: within each of the three parallel branches, every channel is convolved independently, which needs far fewer parameters than a standard convolution that mixes all channels. Therefore, Dy_Conv requires fewer parameters than a 3 × 3 convolution, and the reduction is especially large for the Layer4 Dynamic_Bottleneck, which has many channels.
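The parameter argument can be made concrete with a quick count. The sketch assumes a hypothetical Dy_Conv layout of three depthwise branches (3 × 3, 5 × 5, 3 × 3) followed by a 1 × 1 pointwise fusion, and compares it with the 3 × 3 convolution inside a ResNet50 Layer4 bottleneck (512 channels); biases, BN, and S2Attention are ignored.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Number of weights in a k x k 2-D convolution (bias ignored)."""
    return (c_in // groups) * c_out * k * k


C = 512  # width of the 3 x 3 convolution inside a ResNet50 Layer4 bottleneck
standard = conv_params(C, C, 3)

# Hypothetical Dy_Conv layout: three depthwise branches plus a 1 x 1 pointwise fusion.
# Dilation rates do not appear here because dilation does not add weights.
dy_conv_params = (conv_params(C, C, 3, groups=C)    # depthwise 3 x 3 branch
                  + conv_params(C, C, 5, groups=C)  # dilated depthwise 5 x 5 branch
                  + conv_params(C, C, 3, groups=C)  # dilated depthwise 3 x 3 branch
                  + conv_params(C, C, 1))           # pointwise channel mixing
print(standard, dy_conv_params, round(standard / dy_conv_params, 1))  # 2359296 284160 8.3
```

Under these assumptions the depthwise branches contribute only 22,016 weights; most of the remaining cost is the pointwise layer, and the whole block is roughly 8 times smaller than the standard 3 × 3 convolution.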

To verify the feature interaction and fusion ability of S2Attention, we replaced S2Attention with the SE (Squeeze-and-Excitation) attention mechanism. The results are shown in the third and fourth rows of Table 3. Although the S2Attention module in Dynamic_Bottleneck has slightly more parameters than the SE module, it increased Rank-1, Rank-5, and mAP by 1.1%, 2.1%, and 1.2%, respectively. This is because S2Attention models attention over key regions through a sparse sampling strategy, and its down-sampling and up-sampling operations enable multi-scale feature interaction, enhancing the model's perception of multi-scale features.

Table 3.

Comparison of Attention Mechanisms.

4.2. Comparison Between NAM and Mainstream Attentions

We also compared the effects of NAM and two other mainstream attention mechanisms, GAM and CBAM, on individual cow recognition. To ensure a fair comparison, all tests used the same hyper-parameter settings. As shown in Table 3, NAM improves model performance more than the other attention mechanisms. By computing channel attention and spatial attention in parallel, NAM effectively integrates feature information in the channel and spatial dimensions, thereby better distinguishing visually similar individual cows. In addition, NAM has relatively few parameters.

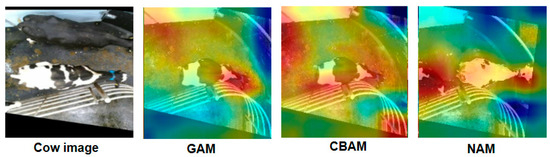

Figure 10 visualizes the heatmaps of different attention modules. GAM and CBAM attend to both the cows' bodies and large background areas, which hinders the capture of key cow features and thus reduces the accuracy of individual cow identification. Compared with GAM and CBAM, NAM focuses more on the torso area of cows and reduces interference from irrelevant backgrounds.

Figure 10.

The heatmaps of different attention modules.

4.3. Application on Cowsheds

For the milking-room dataset, we directly apply the proposed method for individual cow recognition. In the other dataset, each video frame contains multiple cows. We labeled a small portion of the images and fine-tuned a pretrained YOLOv8 model to detect cow targets. Next, we cropped the cow targets within the bounding boxes generated by YOLOv8 and used the cropped images as the Query set; the individual cow recognition model then matched each cropped image against the images in the Gallery set to identify the ID number of each cow in the video frame. Because we selected 30 individual cows, only these 30 cows in the dataset can be assigned ID numbers, as shown in Figure 11.

Figure 11.

Sample images of identifying multiple individual cows in a video frame. The cows are located by YOLOv8, and their ID numbers are identified by the proposed model.
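The cropping and matching stages of this pipeline can be sketched as follows. The sketch assumes the YOLOv8 detections are already available as pixel boxes `(x1, y1, x2, y2)` and that the recognition model has already turned each crop and each Gallery image into an embedding vector; detection and embedding are not shown, and the helper names are hypothetical. Each query receives the ID of its most similar Gallery image, so only enrolled cows can be assigned an ID.

```python
import numpy as np


def crop_boxes(frame, boxes):
    """frame: (H, W, 3) image; boxes: iterable of (x1, y1, x2, y2) pixel coordinates."""
    h, w = frame.shape[:2]
    crops = []
    for x1, y1, x2, y2 in boxes:
        # Clip each box to the frame before slicing.
        x1, y1 = max(0, int(x1)), max(0, int(y1))
        x2, y2 = min(w, int(x2)), min(h, int(y2))
        crops.append(frame[y1:y2, x1:x2])
    return crops


def assign_ids(query_emb, gallery_emb, gallery_ids):
    """Return, for each query embedding, the ID of the most similar Gallery embedding."""
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    g = gallery_emb / np.linalg.norm(gallery_emb, axis=1, keepdims=True)
    best = (q @ g.T).argmax(axis=1)  # cosine similarity, nearest Gallery image
    return [gallery_ids[i] for i in best]


frame = np.zeros((100, 200, 3))
crops = crop_boxes(frame, [(10, 20, 60, 80), (-5, 0, 250, 50)])
print([c.shape for c in crops])  # [(60, 50, 3), (50, 200, 3)]
print(assign_ids(np.array([[0.1, 0.9, 0.0], [0.0, 0.0, 2.0]]), np.eye(3), ["Cow1", "Cow2", "Cow3"]))
```

Nearest-neighbour assignment is a closed-set rule: a cow outside the Gallery would still receive the closest enrolled ID unless a similarity threshold (not described in the paper) were added.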

4.4. Accuracy and Limitations

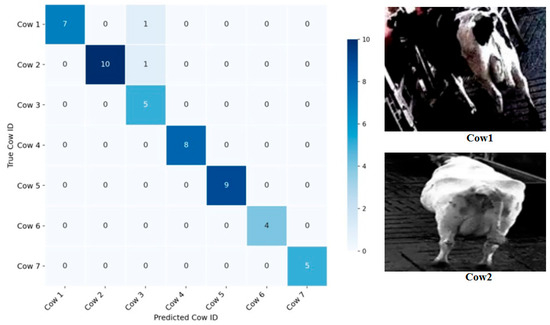

Accuracy is also used to evaluate the performance of the proposed model; the confusion matrix is shown in Figure 12. We randomly selected seven cow IDs, totaling 50 cow images; the number of images for each cow is listed in Table 4. As the left panel of Figure 12 shows, 48 of the 50 images were correctly identified, giving an identification accuracy of 96%. One image of Cow1 and one image of Cow2 were each misidentified as Cow3. The two misidentified images are shown on the right side of Figure 12. The black areas of Cow1's body are difficult to distinguish from the background because of the shooting angle and dim lighting. The body of Cow2 is perpendicular to the camera lens plane, which prevents its back pattern features from being extracted.

Figure 12.

Confusion matrix for individual identification of cows. (Left): confusion matrix; (Right): two mis-identified cow images.

Table 4.

The number of images for each individual cow.
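The accuracy figure follows directly from the confusion matrix: the trace (correct predictions) divided by the total number of images. The sketch below uses hypothetical per-ID image counts (Table 4's actual counts are not reproduced here) and mirrors the two errors described above, one Cow1 image and one Cow2 image predicted as Cow3.

```python
import numpy as np


def confusion_and_accuracy(y_true, y_pred, labels):
    """Rows are true IDs, columns are predicted IDs."""
    index = {lab: i for i, lab in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1
    return cm, cm.trace() / cm.sum()  # accuracy = correct / total


labels = [f"Cow{i}" for i in range(1, 8)]
counts = [8, 7, 7, 7, 7, 7, 7]        # hypothetical per-ID counts summing to 50
y_true = [lab for lab, n in zip(labels, counts) for _ in range(n)]
y_pred = list(y_true)
y_pred[0] = "Cow3"                    # one Cow1 image predicted as Cow3
y_pred[8] = "Cow3"                    # first Cow2 image predicted as Cow3
cm, acc = confusion_and_accuracy(y_true, y_pred, labels)
print(acc)  # 0.96
```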

5. Conclusions

This article presented a novel individual cow recognition method that adapts to complex scenarios in different cowsheds and distinguishes visually similar individual cows. The QAConv module was introduced at the front end of the backbone network to address scale changes in cow targets in the input images. The Dynamic_Bottleneck module, based on Dy_Conv and the S2Attention mechanism, accurately extracted local detail features and global information of cows. NAM attention was embedded at the back end of the network to fuse features in both the channel and spatial dimensions. Experiments verified that the proposed method achieved better performance with fewer parameters than state-of-the-art re-identification methods. The proposed method reduces interference from complex backgrounds and improves the recognition accuracy of visually similar individual cows. In addition, it can be extended to other livestock breeding scenarios.

However, the proposed method has some limitations. Owing to the shooting angle, the black patches on a cow's body almost blend into the dark background in low-light environments, so the model cannot accurately extract these local features. In addition, when a cow's body is perpendicular to the camera lens plane, most of the patterns on its torso are occluded and only the face or tail is visible; cows captured at this angle are often misidentified.

In future research, we will incorporate advanced image restoration techniques into the individual cow recognition method to address individual cow identification in low-light images. We will also use multiple cameras to capture images of the same cow from different angles for model training, and explore fusion strategies for multi-angle input images to improve the accuracy of individual cow recognition.

Author Contributions

Conceptualization, H.Q. and T.S.; methodology, H.Q. and Y.Z.; software, T.S. and H.Q.; data curation, H.Q.; writing—review and editing, T.S. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 32371583, and by the Student Practice Innovation and Training Program of Jiangsu Province, grant number 202210298018Z.

Institutional Review Board Statement

The data come from two public datasets, and the videos were captured by cameras. The experimental results were obtained by running the algorithms on these data. The research does not involve animal ethics issues.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hossain, M.E.; Kabir, M.A.; Zheng, L.; Swain, D.L.; McGrath, S.; Medway, J. A systematic review of machine learning techniques for cattle identification: Datasets, methods and future directions. Artif. Intell. Agric. 2022, 6, 138–155.

- Garcia, R.; Aguilar, J.; Toro, M.; Pinto, A.; Rodriguez, P. A systematic literature review on the use of machine learning in precision livestock farming. Comput. Electron. Agric. 2020, 179, 105826.

- Ramudzuli, Z.R.; Malekian, R.; Ye, N. Design of a RFID system for real-time tracking of laboratory animals. Wirel. Pers. Commun. 2017, 95, 3883–3903.

- Mora, M.; Piles, M.; David, I.; Rosa, G.J. Integrating computer vision algorithms and RFID system for identification and tracking of group-housed animals: An example with pigs. J. Anim. Sci. 2024, 102, skae174.

- Yukun, S.; Pengju, H.; Yujie, W.; Ziqi, C.; Yang, L.; Baisheng, D.; Runze, L.; Yonggen, Z. Automatic monitoring system for individual dairy cows based on a deep learning framework that provides identification via body parts and estimation of body condition score. J. Dairy Sci. 2019, 102, 10140–10151.

- Parivendan, S.; Sailunaz, K.; Neethirajan, S. Socializing AI: Integrating Social Network Analysis and Deep Learning for Precision Dairy Cow Monitoring—A Critical Review. Animals 2025, 15, 1835.

- Sun, Y.P.; Jiang, Y.; Wang, Z.; Zhang, Y.; Zhang, L.L. Wild Bird Species Identification Based on a Lightweight Model With Frequency Dynamic Convolution. IEEE Access 2023, 11, 54352–54362.

- Zhao, Y.; Zhu, S. Occluded pedestrian re-identification via Res-ViT double-branch hybrid network. Multimed. Syst. 2024, 30, 5.

- Tang, H.; Fei, L.; Zhu, H.; Tao, H.; Xie, C. A Two-Stage Network for Zero-Shot Low-Illumination Image Restoration. Sensors 2023, 23, 792.

- Kumar, S.; Pandey, A.; Satwik, K.S.R.; Kumar, S.; Singh, S.K.; Singh, A.K.; Mohan, A. Deep learning framework for recognition of cattle using muzzle point image pattern. Measurement 2018, 116, 1–17.

- Weng, Z.; Meng, F.; Liu, S.; Zhang, Y.; Zheng, Z.; Gong, C. Cattle face recognition based on a Two-Branch convolutional neural network. Comput. Electron. Agric. 2022, 196, 106871.

- Shen, W.; Hu, H.; Dai, B.; Wei, X.; Sun, J.; Jiang, L.; Sun, Y. Individual identification of dairy cows based on convolutional neural networks. Multimed. Tools Appl. 2020, 79, 14711–14724.

- Hu, H.; Dai, B.; Shen, W.; Wei, X.; Sun, J.; Li, R.; Zhang, Y. Cow identification based on fusion of deep parts features. Biosyst. Eng. 2020, 192, 245–256.

- Zhang, H.; Zheng, L.; Tan, L.; Gao, J.; Luo, Y. YOLOX-S-TKECB: A Holstein Cow Identification Detection Algorithm. Agriculture 2024, 14, 1982.

- Weng, Z.; Liu, S.; Zheng, Z.; Zhang, Y.; Gong, C. Cattle facial matching recognition algorithm based on multi-view feature fusion. Electronics 2022, 12, 156.

- Yang, L.; Xu, X.; Zhao, J.; Song, H. Fusion of retinaface and improved facenet for individual cow identification in natural scenes. Inf. Process. Agric. 2024, 11, 512–523.

- Chen, X.; Yang, T.; Mai, K.; Liu, C.; Xiong, J.; Kuang, Y.; Gao, Y. Holstein cattle face re-identification unifying global and part feature deep network with attention mechanism. Animals 2022, 12, 1047.

- Qiao, Y.; Kong, H.; Clark, C.; Lomax, S.; Su, D.; Eiffert, S.; Sukkarieh, S. Intelligent perception for cattle monitoring: A review for cattle identification, body condition score evaluation, and weight estimation. Comput. Electron. Agric. 2021, 185, 106143.

- Wang, B.; Li, X.; An, X.; Duan, W.; Wang, Y.; Wang, D.; Qi, J. Open-Set Recognition of Individual Cows Based on Spatial Feature Transformation and Metric Learning. Animals 2024, 14, 1175.

- Ferreira, R.E.; Bresolin, T.; Rosa, G.J.; Dórea, J.R. Using dorsal surface for individual identification of dairy calves through 3D deep learning algorithms. Comput. Electron. Agric. 2022, 201, 107272.

- Andrew, W.; Hannuna, S.; Campbell, N.; Burghardt, T. Automatic individual holstein friesian cattle identification via selective local coat pattern matching in RGB-D imagery. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; IEEE: New York, NY, USA; pp. 484–488.

- Zhao, K.; Wang, J.; Chen, Y.; Sun, J.; Zhang, R. Individual Identification of Holstein Cows from Top-View RGB and Depth Images Based on Improved PointNet++ and ConvNeXt. Agriculture 2025, 15, 710.

- Andrew, W.; Gao, J.; Mullan, S.; Campbell, N.; Dowsey, A.W.; Burghardt, T. Visual identification of individual Holstein-Friesian cattle via deep metric learning. Comput. Electron. Agric. 2021, 185, 106133.

- Qiao, Y.; Clark, C.; Lomax, S.; Kong, H.; Su, D.; Sukkarieh, S. Automated individual cattle identification using video data: A unified deep learning architecture approach. Front. Anim. Sci. 2021, 2, 759147.

- Du, Y.; Kou, Y.; Li, B.; Qin, L.; Gao, D. Individual identification of dairy cows based on deep learning and feature fusion. Anim. Sci. J. 2022, 93, e13789.

- Liao, S.; Shao, L. Interpretable and generalizable person re-identification with query-adaptive convolution and temporal lifting. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Part XI 16. Springer International Publishing: Cham, Switzerland, 2020; pp. 456–474.

- Yu, T.; Li, X.; Cai, Y.; Sun, M.; Li, P. S2-MLPv2: Improved spatial-shift MLP architecture for vision. arXiv 2021, arXiv:2108.01072.

- Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based attention module. arXiv 2021.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning 2015, Lille, France, 7–9 July 2015; pp. 448–456.

- Yu, J.; Su, H.; Liu, J.; Yang, Z.; Zhang, Z.; Zhu, Y.; Yang, L.; Jiao, B. A strong baseline for tiger re-id and its bag of tricks. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Liu, C.; Zhang, R.; Guo, L. Part-pose guided amur tiger re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Dai, Z.; Chen, M.; Gu, X.; Zhu, S.; Tan, P. Batch dropblock network for person re-identification and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3691–3701.

- Zhou, K.; Yang, Y.; Cavallaro, A.; Xiang, T. Learning generalisable omni-scale representations for person re-identification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5056–5069.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).