SDGTrack: A Multi-Target Tracking Method for Pigs in Multiple Farming Scenarios

Simple Summary

Abstract

1. Introduction

- (1) An environment-aware adaptive module was proposed to enhance the model’s performance across various scenarios.

- (2) A target association strategy was designed to effectively reduce target mismatches and misassignments.

- (3) Comparative experiments with other leading trackers on MOT data across multiple scenarios validated the ability of SDGTrack to extend from a single environment to multiple scenarios.

2. Materials and Methods

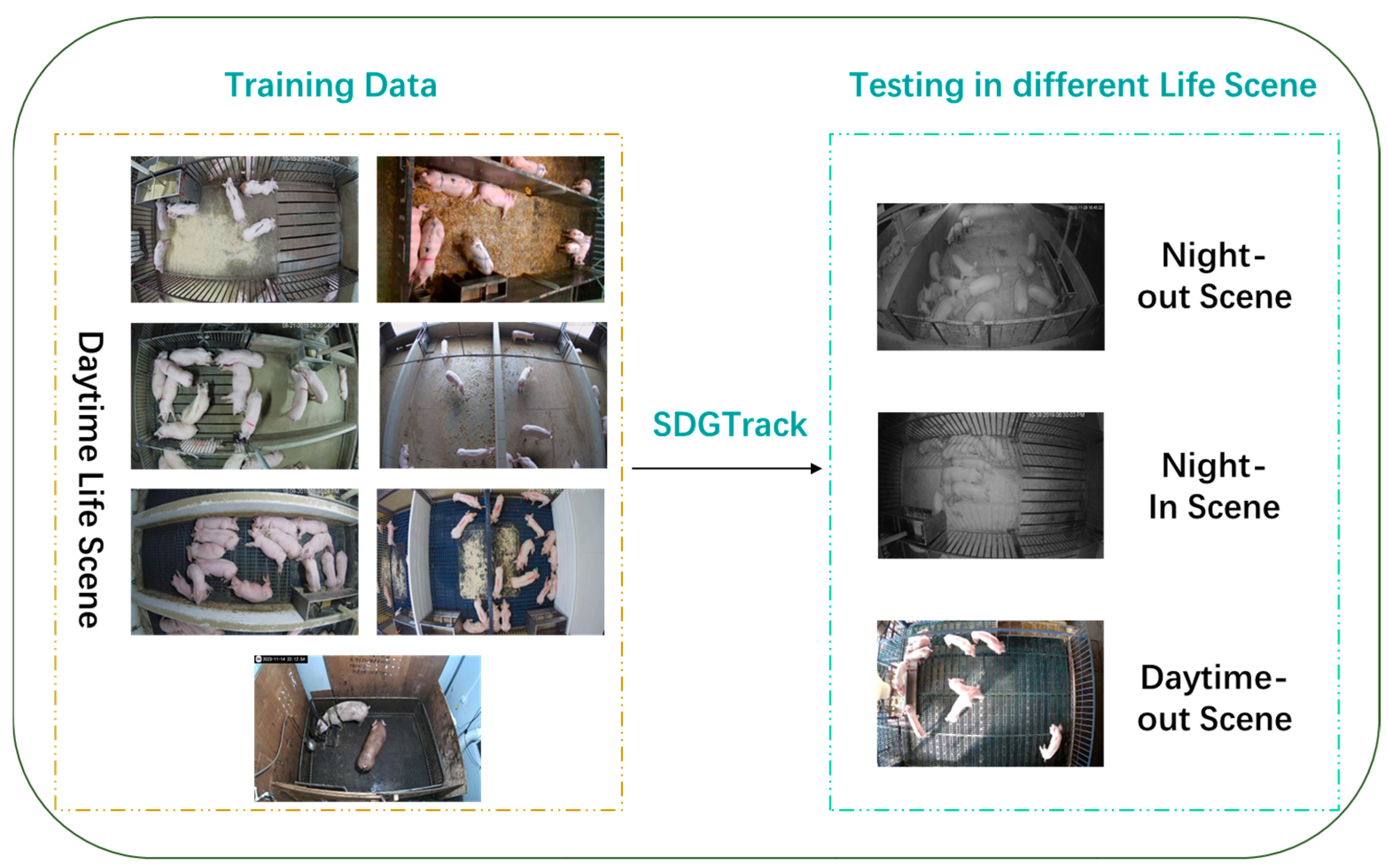

2.1. Materials

2.1.1. Data Acquisition

2.1.2. Dataset Construction

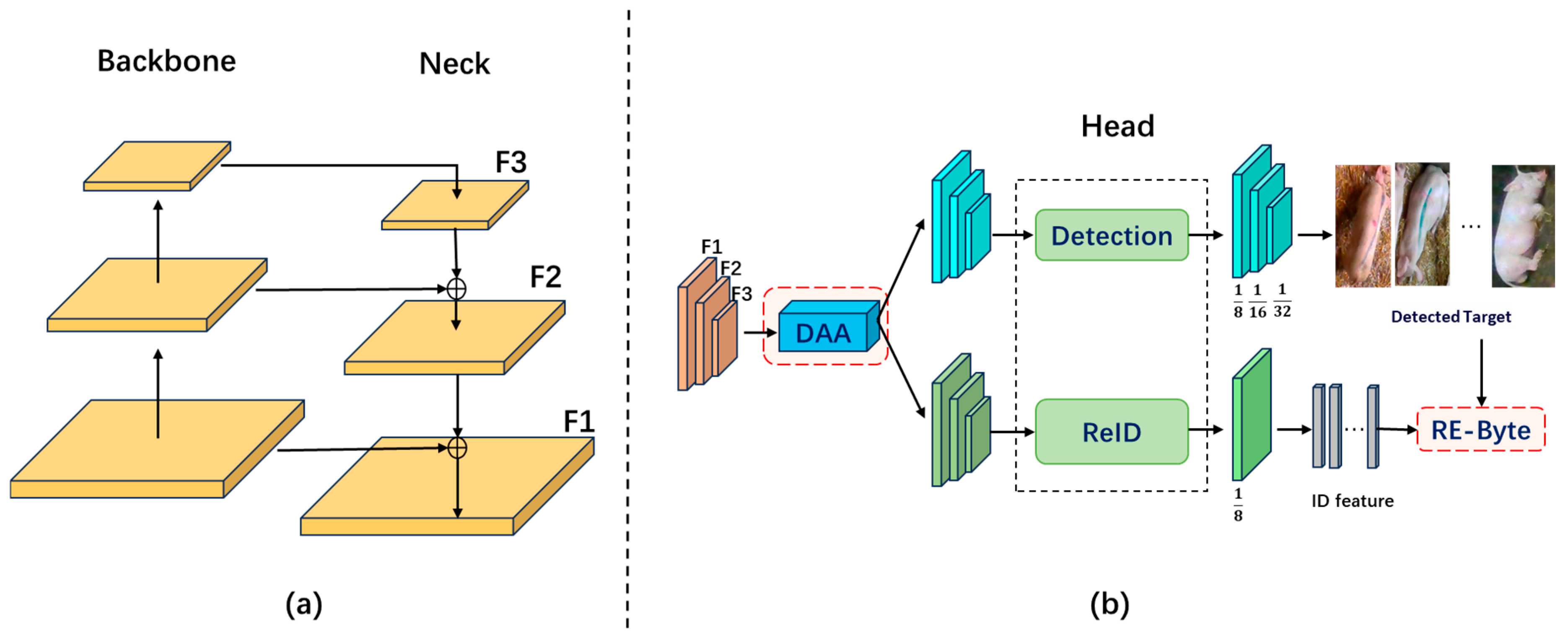

2.2. Methods

2.2.1. Basic JDE and CSTrack Methods

2.2.2. SDGTrack Tracking Model

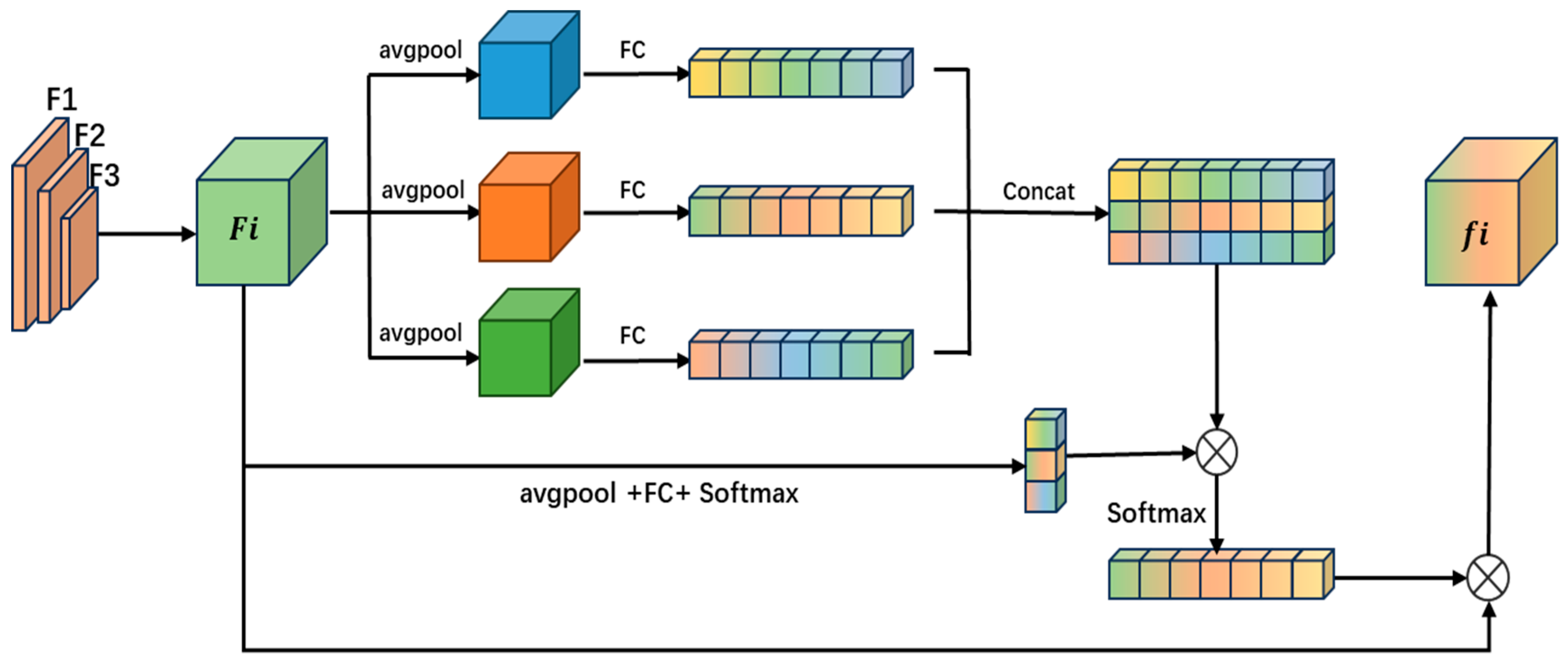

2.2.3. Domain-Aware Attention Module

2.2.4. Re-Byte

2.2.5. Evaluation Metrics

3. Results and Analysis

3.1. Experimental Platforms

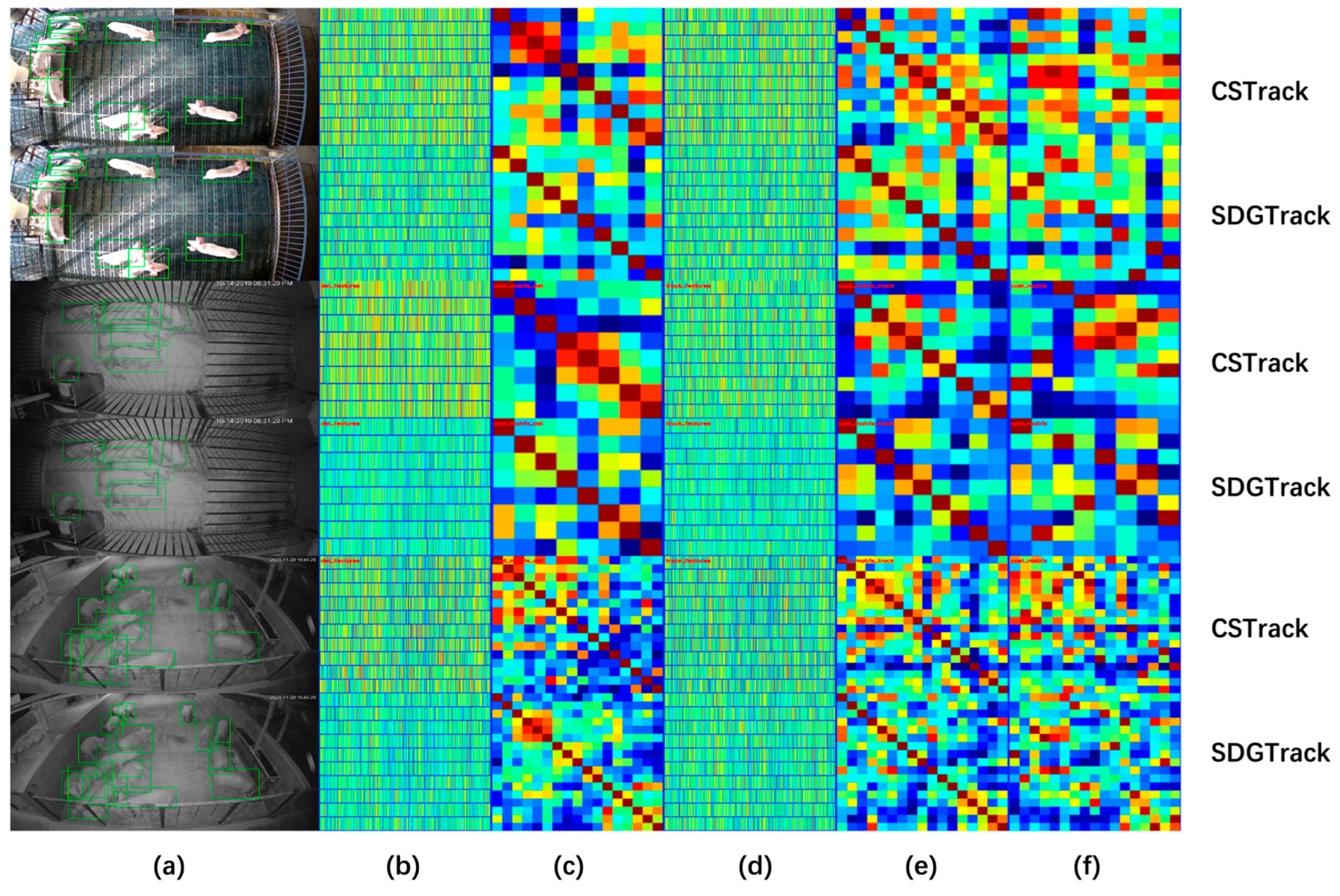

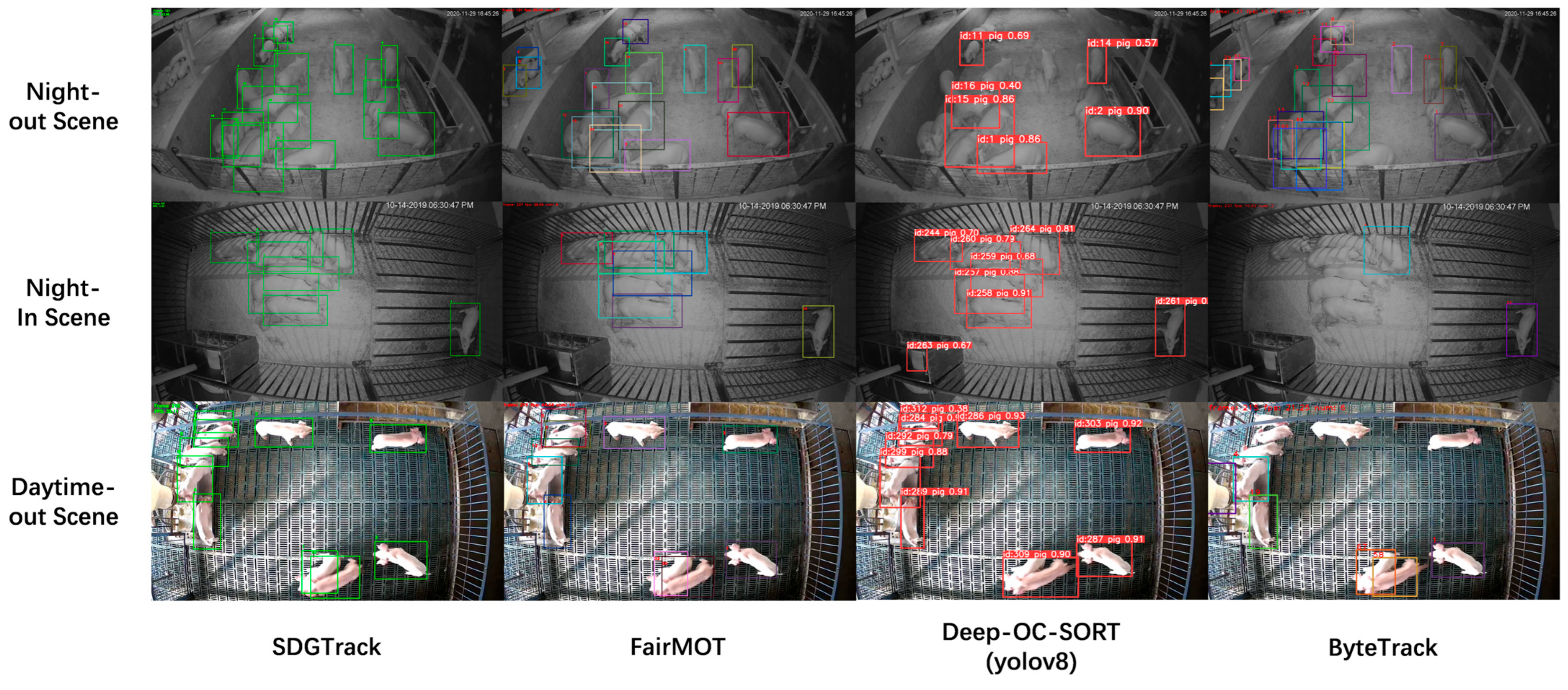

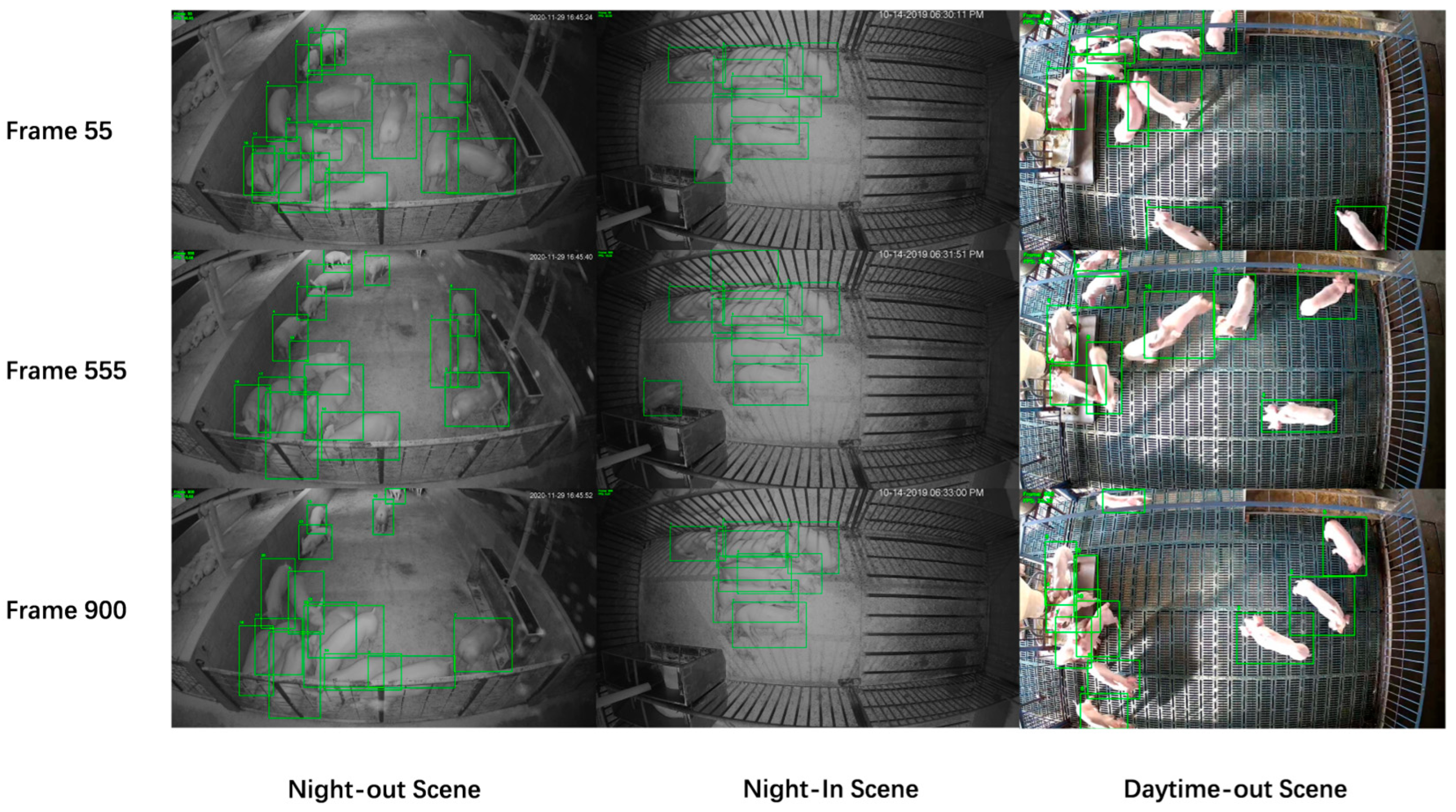

3.2. Comparative Experiments with Different MOT Algorithms

3.3. Ablation Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Scenario Type | Scene Count | Total Images | Description |
|---|---|---|---|---|
| Training | Daytime | 7 | 12,600 | Data from 7 different daytime pig life scenarios. |
| Test | Night-In Scene | 1 | 1800 | A nighttime sequence captured in the same location as the training set. |
| Test | Night-Out Scene | 1 | 1800 | Nighttime data recorded in a farming scenario completely different from the training set. |
| Test | Daytime-Out Scene | 1 | 1800 | A daytime recording from another farm not included in the training. |

| Metric | Description |
|---|---|
| HOTA↑ | Combined accuracy of detection and identity tracking. |
| MOTA↑ | Overall accuracy of the multi-object tracking algorithm, penalizing false positives, false negatives, and ID switches. |
| IDF1↑ | Combines identity true positives (IDTP), identity false positives (IDFP), and identity false negatives (IDFN) into a single F1 score. |
| MOTP↑ | Precision of the tracker in estimating the positions of targets. |
| MT↑ | Proportion of targets that are tracked consistently throughout the process. |
| ML↓ | Proportion of targets that are lost during the multi-object tracking process. |
| IDS↓ | Total number of ID switches. |
| FP↓ | Number of false positives: negative samples incorrectly predicted as positive (false alarms). |
| FN↓ | Number of false negatives: positive samples incorrectly predicted as negative (misses). |
| FPS↑ | Frame rate of the entire tracking framework. |
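As a rough illustration of how the MOTA and IDF1 entries in the metric table are usually computed, the sketch below applies the standard CLEAR-MOT and identity-F1 formulas to raw counts. The class name `TrackingCounts`, its field names, and the numbers in the example are hypothetical and are not taken from the paper's experiments; the ground-truth box count (`num_gt`) in particular is not reported in the tables here.

```python
from dataclasses import dataclass


@dataclass
class TrackingCounts:
    """Raw counts accumulated over all frames of a sequence (hypothetical container)."""
    num_gt: int  # total number of ground-truth boxes
    fp: int      # false positives
    fn: int      # false negatives (missed targets)
    ids: int     # identity switches
    idtp: int    # identity true positives
    idfp: int    # identity false positives
    idfn: int    # identity false negatives


def mota(c: TrackingCounts) -> float:
    """CLEAR-MOT accuracy: 1 - (FN + FP + IDS) / GT. Can be negative."""
    if c.num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (c.fn + c.fp + c.ids) / c.num_gt


def idf1(c: TrackingCounts) -> float:
    """Identity F1 score: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    denom = 2 * c.idtp + c.idfp + c.idfn
    if denom == 0:
        raise ValueError("no identity matches or errors to score")
    return 2 * c.idtp / denom


# Made-up counts, for illustration only.
counts = TrackingCounts(num_gt=1000, fp=50, fn=100, ids=5,
                        idtp=850, idfp=100, idfn=150)
print(f"MOTA = {mota(counts):.3f}")
print(f"IDF1 = {idf1(counts):.3f}")
```

Because MOTA sums all three error types against the ground-truth count, a tracker with few ID switches can still score poorly if it misses many targets, which is why the tables report IDF1 and IDS alongside it.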

| Method | HOTA↑ (%) | MOTA↑ (%) | IDF1↑ (%) | MT↑ (%) | ML↓ (%) | FP↓ | FN↓ | IDS↓ | FPS↑ |
|---|---|---|---|---|---|---|---|---|---|
| SORT (YOLOX) | 50.8 | 45.4 | 56.8 | 17 | 9 | 7019 | 27,579 | 126 | 37.5 |
| DeepSORT (YOLOX) | 48.8 | 44.2 | 53.9 | 18 | 9 | 8693 | 26,650 | 171 | 12.3 |
| Deep-OC-SORT (YOLOv8) | 55.4 | 51.5 | 59.5 | 19 | 11 | 575 | 29,722 | 567 | 24.1 |
| BoT-SORT (YOLOv8) | 51.5 | 49.6 | 53.5 | 16 | 11 | 275 | 31,188 | 596 | 23.2 |
| OC-SORT (YOLOv8) | 55.3 | 51.5 | 59.4 | 18 | 12 | 575 | 29,720 | 572 | 20.2 |
| ByteTrack | 47.3 | 46.6 | 48.0 | 17 | 9 | 5498 | 28,128 | 358 | 37.4 |
| FairMOT | 55.1 | 55.6 | 54.6 | 25 | 5 | 7561 | 20,337 | 338 | 40.5 |
| CSTrack | 57.7 | 64.3 | 51.8 | 26 | 6 | 1476 | 20,719 | 447 | 21.6 |
| SDGTrack (Ours) | 83.0 | 80.9 | 85.1 | 38 | 3 | 3394 | 13,163 | 24 | 27.5 |

| Method | Dataset | HOTA↑ (%) | MOTA↑ (%) | IDF1↑ (%) | MT↑ (%) | ML↓ (%) | FP↓ | FN↓ | IDS↓ | FPS↑ |
|---|---|---|---|---|---|---|---|---|---|---|
| Deep-OC-SORT (YOLOv8) | night-in scene | 67.9 | 64.1 | 71.9 | 5 | 3 | 0 | 6436 | 25 | 15.7 |
| Deep-OC-SORT (YOLOv8) | night-out scene | 33.0 | 28.2 | 38.5 | 1 | 9 | 0 | 22,759 | 298 | 27.9 |
| Deep-OC-SORT (YOLOv8) | daytime-out scene | 83.9 | 90.0 | 78.2 | 13 | 0 | 575 | 527 | 244 | 36.0 |
| FairMOT | night-in scene | 62.5 | 68.8 | 56.8 | 6 | 1 | 760 | 4780 | 85 | 22.0 |
| FairMOT | night-out scene | 39.0 | 33.5 | 45.5 | 7 | 4 | 6266 | 14,972 | 92 | 25.7 |
| FairMOT | daytime-out scene | 80.0 | 90.5 | 70.7 | 12 | 0 | 535 | 585 | 161 | 40.5 |
| CSTrack | night-in scene | 56.2 | 64.7 | 48.8 | 5 | 3 | 1 | 6191 | 79 | 16.8 |
| CSTrack | night-out scene | 48.4 | 52.8 | 44.4 | 10 | 4 | 1142 | 13,849 | 174 | 23.2 |
| CSTrack | daytime-out scene | 79.5 | 91.1 | 69.4 | 11 | 0 | 333 | 679 | 194 | 45.6 |
| SDGTrack | night-in scene | 80.0 | 78.1 | 81.9 | 9 | 0 | 1558 | 3371 | 11 | 17.2 |
| SDGTrack | night-out scene | 75.8 | 69.6 | 82.6 | 17 | 2 | 1678 | 9342 | 10 | 41.3 |
| SDGTrack | daytime-out scene | 92.9 | 95.0 | 90.8 | 12 | 0 | 176 | 500 | 3 | 48.8 |

| Method | Dataset | DAA | Re-Byte | HOTA↑ (%) | MOTA↑ (%) | IDF1↑ (%) | IDS↓ |
|---|---|---|---|---|---|---|---|
| CSTrack | night-in scene | 🗴 | 🗴 | 56.2 | 64.7 | 48.8 | 79 |
| CSTrack | night-out scene | 🗴 | 🗴 | 48.4 | 52.8 | 44.4 | 174 |
| CSTrack | daytime-out scene | 🗴 | 🗴 | 79.5 | 91.1 | 69.4 | 194 |
| CSTrack | Total | 🗴 | 🗴 | 57.7 | 64.3 | 51.8 | 447 |
| SDGTrack | night-in scene | ✓ | 🗴 | 75.9 | 75.6 | 76.2 | 90 |
| SDGTrack | night-out scene | ✓ | 🗴 | 70.1 | 67.6 | 72.6 | 166 |
| SDGTrack | daytime-out scene | ✓ | 🗴 | 87.5 | 93.2 | 82.2 | 208 |
| SDGTrack | Total | ✓ | 🗴 | 77.6 | 76.7 | 78.6 | 464 |
| SDGTrack | night-in scene | 🗴 | ✓ | 75.4 | 71.3 | 79.7 | 20 |
| SDGTrack | night-out scene | 🗴 | ✓ | 68.1 | 62.3 | 74.4 | 29 |
| SDGTrack | daytime-out scene | 🗴 | ✓ | 93.2 | 93.0 | 93.5 | 5 |
| SDGTrack | Total | 🗴 | ✓ | 78.4 | 74.3 | 82.7 | 54 |
| SDGTrack | night-in scene | ✓ | ✓ | 80.0 | 78.1 | 81.9 | 11 |
| SDGTrack | night-out scene | ✓ | ✓ | 75.8 | 69.6 | 82.6 | 10 |
| SDGTrack | daytime-out scene | ✓ | ✓ | 92.9 | 95.0 | 90.8 | 3 |
| SDGTrack | Total | ✓ | ✓ | 82.9 | 80.9 | 85.1 | 24 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, T.; Jie, D.; Zhuang, J.; Zhang, D.; He, J. SDGTrack: A Multi-Target Tracking Method for Pigs in Multiple Farming Scenarios. Animals 2025, 15, 1543. https://doi.org/10.3390/ani15111543
