Simple Summary

Poultry locomotion is an important indicator of animal health, welfare, and productivity. This research introduced an innovative approach that employs an enhanced track anything model (TAM) to track chickens in various experimental settings for locomotion analysis. The model demonstrated notable accuracy in speed detection, as evidenced by a root mean square error (RMSE) value of 0.02 m/s, offering a technologically advanced, consistent, and non-intrusive method for tracking and estimating the locomotion speed of chickens.

Abstract

Poultry locomotion is an important indicator of animal health, welfare, and productivity. Traditional methods such as manual observation or wearable devices face significant challenges, including the potential to induce stress and alter animal behavior. This research introduced an innovative approach that employs an enhanced track anything model (TAM) to track chickens in various experimental settings for locomotion analysis. Using a dataset of both dyed and undyed broilers and layers, TAM was adapted and rigorously evaluated for its capability to non-intrusively track and analyze poultry movement, using the mean intersection over union (mIoU) and the root mean square error (RMSE) as metrics. The findings underscore TAM’s superior segmentation and tracking capabilities, particularly its performance against other state-of-the-art models, namely the you only look once (YOLO) models YOLOv5 and YOLOv8, and its high mIoU (93.12%) across diverse chicken categories. Moreover, the model demonstrated notable accuracy in speed detection, with an RMSE of 0.02 m/s, offering a technologically advanced, consistent, and non-intrusive method for tracking and estimating the locomotion speed of chickens. This research not only substantiates TAM as a potent tool for detailed poultry behavior analysis and monitoring but also illuminates its potential applicability in broader livestock monitoring scenarios, thereby contributing to improved animal welfare and management in poultry farming through automated, non-intrusive monitoring and analysis.

1. Introduction

Precision livestock farming (PLF) has rapidly evolved into a key field, merging modern technology with traditional animal farming to improve animal welfare and streamline production processes [1]. In poultry farming, it is essential to monitor and understand bird movement and behavior closely. This not only ensures the well-being of the animals but also helps improve production efficiency in a sustainable environment [2,3,4]. Chickens display a variety of behaviors, including different movement patterns, social interactions, and reactions to their surroundings. This requires advanced systems to track and analyze them effectively.

Deep learning, an advanced form of machine learning, is becoming a key tool for analyzing and predicting patterns in large and complex datasets [5,6]. In animal behavior studies, deep learning provides a detailed understanding of movement, interactions between species, and overall health [7]. In poultry farming, deep learning offers more than a glimpse into bird behaviors; it is a powerful tool for closely observing and tracking their activities [8]. For post-observational monitoring, many algorithms have been applied in this domain, with you only look once (YOLO) at the forefront. In large-scale poultry farms, for instance, surveillance of thousands of chickens for health, activity, and behavioral patterns becomes pivotal [9]. YOLO’s rapid detection can help identify early signs of disease or distress by recognizing subtle behavioral changes, thereby aiding farmers in timely interventions [5]. In addition, the previously proposed ChickTrack model uses deep learning to detect and count chickens and measure their movement paths, providing spatiotemporal data and identifying behavioral anomalies from videos and images [10]. However, while YOLO has shown commendable performance in a variety of scenarios, it has limitations. For effective use in poultry farming, it demands rigorous training on domain-specific data to fine-tune its detection and tracking capabilities. The nuances of poultry behavior, bird interactions, and variations in physical appearance require YOLO to be trained with large and diverse datasets. Even with comprehensive training, the model may still struggle to track individual birds within dense flocks, especially under varying environmental conditions [11]. In this context, the track anything model (TAM) emerges as a promising candidate.
This research aims to harness the potential of TAM, enhancing its capabilities not only to track individual chickens in a flock but also to analyze their complex locomotion patterns in real time [12,13,14]. By addressing the gaps left by previous models while drawing on the strengths of YOLO’s detection capabilities, TAM has the potential to offer a holistic solution to the multifaceted challenges of poultry behavior analysis.

In this research, an innovative approach involving the strategic dyeing of chickens was adopted to augment the model’s capability to distinctly identify and track individual entities within the flock. The dyed chickens, exhibiting distinct and consistent coloration, serve as a unique identifier, facilitating improved tracking and identity preservation by the algorithms. The research further explores the adaptation of TAM, integrating a speed detection function, thereby providing a comprehensive tool for detailed poultry behavior analysis and monitoring. Through rigorous evaluations and comparative analyses, this research aims to underscore the efficacy and potential of TAM and its adaptation, TAM-speed, in providing a multifaceted solution for real-time poultry behavior tracking and analysis, thereby contributing to the advancement of precision livestock farming.

The objectives of this study were to: (1) develop a tracker model for monitoring the locomotion speed of individual chicks based on the TAM; (2) compare the TAM-speed model with state-of-the-art models such as YOLO, which are trained using images of chickens; and (3) test the performance of these newly developed models under various production conditions.

2. Materials and Methods

2.1. Data Acquisition

The dataset was obtained from two different experimental chicken houses (i.e., broiler and layer houses) in the Poultry Research Center at the University of Georgia (UGA), USA. Chickens were dyed to assess the detection differences between dyed and undyed samples. Broilers were dyed green, red, and blue, and laying hens green, red, and black. Figure 1 illustrates the experimental chicken houses alongside their dyed counterparts. HD cameras (PRO-1080MSFB, Swann Communications, Santa Fe Springs, CA, USA) were mounted at a height of 3 m on the ceilings and walls of each room, capturing chicken behavior at 18 FPS with a resolution of 1440 × 1080. The lenses were cleaned weekly to maintain image clarity [15]. Image data were initially stored on Swann video recorders and subsequently transferred to hard disk drives (HDDs; Western Digital Corporation, San Jose, CA, USA) at UGA’s Department of Poultry Science.

Figure 1.

Contrast of dyed and undyed broilers and layers in experimental settings.

2.2. Marking Approach

Chickens were first randomly selected for the experiment. The selected chickens were then dyed with all-weather Quick Shot dye (LA-CO INDUSTRIES, INC, Elk Grove Village, IL, USA). The dye colors were chosen to reduce feather pecking in dyed chickens [16]. Application required a two-person team: one person gently held and restrained the bird for its safety and ease of application, while the other applied the spray dye to the targeted areas of the chicken’s body, ensuring consistent and even coverage. This procedure was designed to minimize stress to the chickens while achieving uniform dye application.

2.3. Model Innovation for Tracking Chickens

In our study, we used the track anything model (TAM) to monitor chicken locomotion. Building on the versatility of TAM, we enhanced it with a speed detection function that enables real-time measurement of each chicken’s velocity [17]. In the preprocessing phase, we used the XMem video object segmentation (VOS) technique to propagate chicken masks across subsequent video frames [18]. XMem, which is efficient in standard scenarios, generates a predicted mask for each frame. When this prediction was suboptimal, our system retained both the prediction and key intermediate parameters, namely the probe and affinity. In instances where the mask quality fell below expectations, the segment anything model (SAM) was used to refine the XMem-proposed mask, with these parameters as guidance. Recognizing the limitations of automated systems in intricate situations, we also incorporated human oversight, allowing manual mask adjustments during real-time tracking to ensure optimal accuracy (Figure 2). The TAM architecture took preprocessed frames of size 1440 × 1080 as input. Within the model, convolutional neurons were dedicated to extracting essential features such as shape and color patterns. Crucially, by integrating TAM’s inherent capabilities with our modifications, we developed a layer that not only estimated chicken trajectories but also calculated speed from the change in positional coordinates across frames and the associated time differential [19]. The output comprised both the chicken’s position and speed.
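The refinement logic described above can be sketched as a loop in which a propagator proposes a mask and a refiner is invoked only when a quality score falls below a threshold. This is a minimal, hypothetical sketch: `propagate`, `score`, `refine`, and `threshold` are illustrative stand-ins for XMem, a mask-quality estimate, and SAM, not the actual TAM or XMem APIs, and the manual-correction step is omitted. Feeding the refined mask forward as the seed for the next frame is also an assumption.

```python
from typing import Any, Callable, List, Sequence, Tuple

Mask = Any  # placeholder type: a real pipeline would use an image-sized binary array


def track_with_refinement(
    frames: Sequence[Any],
    initial_mask: Mask,
    propagate: Callable[[Any, Mask], Tuple[Mask, Any, Any]],
    score: Callable[[Mask], float],
    refine: Callable[[Any, Mask, Any, Any], Mask],
    threshold: float = 0.5,
) -> List[Mask]:
    """Propagate a mask through a video, refining predictions judged to be poor.

    propagate: stand-in for XMem; returns (mask, probe, affinity) for a frame.
    score:     hypothetical mask-quality estimate, higher is better.
    refine:    stand-in for SAM, guided by the probe and affinity.
    """
    masks: List[Mask] = []
    previous = initial_mask
    for frame in frames:
        mask, probe, affinity = propagate(frame, previous)
        if score(mask) < threshold:
            # Suboptimal prediction: hand it, plus the intermediate
            # parameters, to the refiner for correction.
            mask = refine(frame, mask, probe, affinity)
        masks.append(mask)
        previous = mask  # assumption: the refined mask seeds the next frame
    return masks


# Toy usage with stub callables: frame 1 gets a low score and is refined.
toy = track_with_refinement(
    frames=[0, 1, 2],
    initial_mask="seed",
    propagate=lambda frame, prev: (f"mask{frame}", "probe", "affinity"),
    score=lambda m: 0.1 if m == "mask1" else 0.9,
    refine=lambda frame, m, p, a: m + "-refined",
)
print(toy)
```

Passing the components in as plain callables keeps the control flow testable without model weights; in a real pipeline each callable would wrap a model.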
We then benchmarked our enhanced TAM with speed detection (TAM-speed) against several state-of-the-art trackers derived from simple online and real-time tracking (SORT), including observation-centric SORT (OC-SORT) [20], SORT with a deep association metric (DeepSORT) [21], ByteTrack [22], and StrongSORT [23], focusing on tracking accuracy, speed measurement accuracy, frame processing rate, and robustness in dense poultry populations. For OC-SORT, DeepSORT, ByteTrack, and StrongSORT, the comparison with TAM was based primarily on tracking. The comparison of TAM with you only look once version 5 (YOLOv5) and version 8 (YOLOv8), however, had a different motivation. YOLOv5 and YOLOv8 are known for their advanced segmentation capabilities, which are crucial for detailed object recognition and delineation in complex environments [24]. By comparing TAM with these YOLO versions, we aimed to evaluate our model’s segmentation accuracy, efficiency, and reliability. Given the intricate patterns and overlapping birds often observed in poultry behavior, robust segmentation can significantly enhance tracking precision. Understanding how TAM compares with YOLOv5 and YOLOv8 in segmentation can therefore reveal potential areas of improvement and adaptation for our model. This adaptation of TAM aims to provide a comprehensive solution for real-time poultry behavior tracking, potentially paving the way for broader applications in livestock monitoring.

Figure 2.

Pipeline of the track anything model (TAM) applied to chicken tracking [17].

2.4. Methods of Speed Calculation in Chicken Tracking

Measuring chicken velocity from video is often complicated by distortions from the camera perspective. Because a video comprises continuous frames, a “pixel speed” can be calculated from the chicken’s pixel displacement between consecutive frames, which are 55.56 milliseconds (ms) apart at 18 FPS. However, the chicken’s motion can appear distorted in 2D frames because the scene is three-dimensional. Our solution transforms each video frame to a top-down perspective using the perspective transformation functions of the Open Source Computer Vision Library (OpenCV), based on the known coordinates of a rectangle in the original frame (Figure 3) [25]. This transformation eliminates horizontal discrepancies and relates vertical pixel shifts to the chicken’s actual distance traveled. Using this mapping and the time between frames, we estimated each chicken’s average velocity, which, over closely spaced frames, approximates its real-time walking or running speed.

Figure 3.

An illustration of a perspective transformation utilizing the OpenCV library.
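The perspective transformation maps the four corners of a known floor rectangle in the camera frame to the corners of an upright rectangle, defining a 3 × 3 matrix whose bottom-right entry is fixed to 1. In OpenCV this matrix is what `cv2.getPerspectiveTransform(src, dst)` computes, and `cv2.perspectiveTransform` applies it to points. The dependency-free sketch below solves the same eight-unknown linear system directly so the arithmetic is visible; the corner coordinates in the example are hypothetical, not the study’s calibration values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def _solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate points: three may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def perspective_matrix(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography H (with H[2][2] = 1) mapping four src points onto four dst points."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = _solve(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def warp_point(h: Matrix, x: float, y: float) -> Point:
    """Map an image point into the top-down view."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical example: a floor trapezoid seen by the camera -> 200 x 400 px top-down canvas.
src = [(100.0, 100.0), (300.0, 100.0), (350.0, 300.0), (50.0, 300.0)]
dst = [(0.0, 0.0), (200.0, 0.0), (200.0, 400.0), (0.0, 400.0)]
H = perspective_matrix(src, dst)
```

In the top-down canvas, one pixel then corresponds to a fixed floor distance (the rectangle’s real length divided by its length in pixels), which plays the role of W in the speed equation.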

The actual speed V of a chicken is therefore computed as:

$$ V = \frac{\Delta Y \times W}{(N - M)/18} $$

where:

- ΔY is the vertical pixel displacement of the chicken in the top-down view.

- W is the actual physical distance represented by one pixel in the top-down view.

- M and N are the frame numbers where the chicken’s position was recorded.
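The speed equation can be written as a small function. It assumes ΔY has already been measured in the top-down view and that W has been calibrated from the known rectangle; the function and parameter names are illustrative. The absolute value is taken because speed is a magnitude, whereas ΔY may be signed depending on the walking direction.

```python
FPS = 18.0  # camera frame rate reported in Section 2.1


def chicken_speed(delta_y_px: float, metres_per_px: float,
                  frame_m: int, frame_n: int, fps: float = FPS) -> float:
    """Speed V (m/s) from the vertical top-down displacement between frames M and N."""
    if frame_n <= frame_m:
        raise ValueError("frame N must come after frame M")
    distance_m = abs(delta_y_px) * metres_per_px  # pixels -> metres using W
    elapsed_s = (frame_n - frame_m) / fps         # each frame step is 1/18 s (about 55.56 ms)
    return distance_m / elapsed_s


# Hypothetical values: 5 px at 0.01 m/px over 9 frames (0.5 s) gives approximately 0.1 m/s
print(chicken_speed(5, 0.01, 10, 19))
```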

2.5. Model Evaluation Metrics

To optimize the track anything models for monitoring chicken locomotion, model evaluation centered on specific metrics that ensure precise and consistent tracking of individual chickens across video sequences. Multiple object tracking accuracy (MOTA) gauges the accuracy of the tracking model, accounting for errors such as false positives, misses, and identity switches. The identification F1 score (IDF1) assesses the model’s proficiency in recognizing and consistently maintaining the identity of each chicken throughout a sequence. IDF1 is the harmonic mean of identification precision (IDP) and identification recall (IDR): IDP is the fraction of predicted detections that are assigned the correct chicken identity, while IDR is the fraction of ground-truth detections that are correctly identified. The identity switches (IDS) metric counts instances in which the system erroneously changes a chicken’s identity. The frames per second (FPS) metric reflects the model’s processing speed and hence its suitability for real-time monitoring [26]. When comparing TAM with YOLO, the mean intersection over union (mIoU) becomes essential; it evaluates the overlap between the predicted segmentation and the ground truth, providing insight into segmentation accuracy. For TAM-speed, the root mean square error (RMSE) quantifies the accuracy of the predicted chicken speeds [27]. RMSE is the square root of the average squared difference between the observed actual speed and the speed predicted by the model.
Through these metrics, TAM-speed was evaluated both on its ability to track chickens and on its ability to gauge their speed, as required of a comprehensive tool for detailed poultry behavior analysis and monitoring. For each model, every metric was averaged over the results on the test dataset, and these averages were used to compare performance across models.
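The tracking metrics follow standard definitions: MOTA = 1 − (FN + FP + IDS)/GT, with each count summed over all frames and GT the number of ground-truth objects; and IDF1 = 2·IDTP/(2·IDTP + IDFP + IDFN), which equals the harmonic mean of IDP = IDTP/(IDTP + IDFP) and IDR = IDTP/(IDTP + IDFN), where IDTP, IDFP, and IDFN are identity-level true positives, false positives, and false negatives. The sketch below computes both from already-aggregated counts; it assumes the matching of predictions to ground truth, which evaluation libraries such as py-motmetrics perform, has already been done, and the counts in the example are hypothetical.

```python
def mota(false_negatives: int, false_positives: int, id_switches: int,
         num_ground_truth: int) -> float:
    """Multiple object tracking accuracy; negative when errors exceed ground-truth objects."""
    if num_ground_truth <= 0:
        raise ValueError("need at least one ground-truth object")
    return 1.0 - (false_negatives + false_positives + id_switches) / num_ground_truth


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """Identification F1: harmonic mean of IDP and IDR."""
    if idtp == 0:
        return 0.0
    idp = idtp / (idtp + idfp)  # share of predicted detections with the correct identity
    idr = idtp / (idtp + idfn)  # share of ground-truth detections correctly identified
    return 2 * idp * idr / (idp + idr)


# Hypothetical counts for one test sequence
print(round(mota(5, 3, 2, 100), 4), round(idf1(90, 10, 30), 4))
```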

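$$ \mathrm{mIoU} = \frac{1}{N} \sum_{i=1}^{N} \frac{\lvert P_i \cap G_i \rvert}{\lvert P_i \cup G_i \rvert} $$

where N is the number of classes, P_i is the predicted segmentation for class i, and G_i is the ground truth for class i.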

$$ \mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2} $$

where n is the total number of observations, y_i is the actual speed of the chicken in the ith observation, and ŷ_i is the predicted speed of the chicken in the ith observation.
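Both metrics translate directly into code. In this sketch each mask is a set of (row, column) pixel coordinates, a simplification chosen to keep the example dependency-free (array-based masks are more common in practice), and a class that is empty in both prediction and ground truth is assigned an IoU of 1, a convention assumed here rather than taken from the study. The numbers in the example are hypothetical.

```python
import math
from typing import Sequence, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def mean_iou(predictions: Sequence[Set[Pixel]], ground_truths: Sequence[Set[Pixel]]) -> float:
    """mIoU over N classes: average of |P_i ∩ G_i| / |P_i ∪ G_i|."""
    if not predictions or len(predictions) != len(ground_truths):
        raise ValueError("need one prediction per ground-truth class")
    total = 0.0
    for pred, truth in zip(predictions, ground_truths):
        union = pred | truth
        total += len(pred & truth) / len(union) if union else 1.0
    return total / len(predictions)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error between observed and predicted speeds."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need equally long, non-empty sequences")
    squared = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


# Hypothetical example: two classes, and speeds in m/s
pred = [{(0, 0), (0, 1)}, {(2, 2)}]
truth = [{(0, 1), (1, 1)}, {(2, 2)}]
print(mean_iou(pred, truth))             # (1/3 + 1) / 2
print(rmse([0.05, 0.05], [0.07, 0.03]))  # errors of ±0.02 m/s
```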

3. Results

3.1. Comparison of Segmentation Approaches

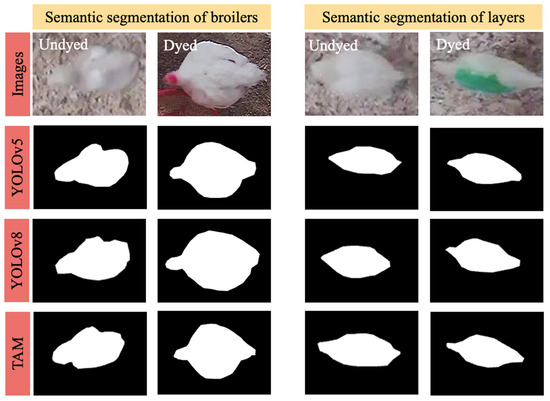

In our comparative analysis of segmentation methods for chicken tracking, we evaluated YOLOv5, YOLOv8, and TAM on a chicken dataset of 1000 images. For the models requiring training, namely YOLOv5 and YOLOv8, 600 images were used for training, 200 for validation, and the remaining 200 for testing. Training was performed in a Python 3.7 environment with the PyTorch deep learning library on an NVIDIA graphics card with 16 GB of memory. Segmentation performance was evaluated across four chicken categories: undyed broilers, undyed layers, dyed broilers, and dyed layers. A recurring theme was the higher segmentation precision for dyed chickens, attributable to the pronounced color contrast introduced by dyeing, which counteracted the similarity in color between the chickens’ white plumage and the light brown litter. Despite the differences between broilers and layers, no significant difference in segmentation performance was observed between them, suggesting that the challenges were driven predominantly by color rather than morphology. Among the methods, TAM, using its pre-trained model, consistently outperformed both YOLOv5 and YOLOv8. This superiority can be attributed to TAM’s architectural robustness, its capacity for high-dimensional feature extraction, and the efficacy of its pre-trained model [17], which may align better with the challenges presented by the chicken dataset. The mIoU values in Table 1 detail TAM’s segmentation performance, and the visual comparison in Figure 4 underscores its potential as a leading choice for future chicken segmentation research.
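The 600/200/200 partition used for YOLOv5 and YOLOv8 can be reproduced with a seeded shuffle. The study does not state how images were assigned to each subset, so this sketch assumes a simple random split; the function name, seed, and file names are hypothetical. Stratifying by the four chicken categories would be a reasonable alternative if each subset should contain all of them.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], n_train: int = 600, n_val: int = 200,
                  n_test: int = 200, seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle reproducibly and cut into disjoint train/validation/test subsets."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("subset sizes must add up to the dataset size")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # a local RNG leaves the global state untouched
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


images = [f"chicken_{i:04d}.jpg" for i in range(1000)]  # hypothetical file names
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))
```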

Table 1.

A comparison of TAM, YOLOv5, and YOLOv8 in terms of mean intersection over union (mIoU).

Figure 4.

Visual comparison of segmentation results. YOLOv5 and YOLOv8 are compared with the TAM approach applied to diverse chicken datasets.

3.2. Assessing the Performance of Chicken Tracking

To assess chicken tracking, we compared several algorithms, each combining different detection and tracking capabilities: YOLOv5+DeepSORT, YOLOv5+ByteTrack, YOLOv8+OC-SORT, YOLOv8+StrongSORT, and TAM. Each YOLO detector was paired with a tracker to combine YOLO’s object detection with the tracking strengths of the respective algorithm. YOLOv5 was paired with both DeepSORT and ByteTrack, combining its detection capabilities with DeepSORT’s deep association metric and ByteTrack’s association of both high- and low-confidence detection boxes, respectively, to maintain persistent chicken identities, especially amid occlusions and flock interactions. The dyed chickens, whose coloration serves as an inherent identifier, provided a scenario for evaluating how well these algorithms exploit color cues. In experiments, dyed chickens consistently yielded higher MOTA and IDF1 scores across all algorithms, indicating better tracking accuracy and identity preservation, respectively. For instance, YOLOv5+DeepSORT achieved a MOTA of 92.13% and an IDF1 of 90.25% for dyed chickens, compared with slightly lower percentages for undyed ones. This trend was consistent across all algorithms, underscoring the pivotal role of distinct coloration in tracking performance [28].

YOLOv8 was paired with OC-SORT and StrongSORT to evaluate their potential to minimize identity switches and maintain tracking accuracy in the dynamic, interactive poultry house environment. The algorithms were evaluated against TAM using metrics such as MOTA, IDF1, and IDS, ensuring a balanced assessment of both accuracy and computational efficiency. For dyed chickens, YOLOv5+DeepSORT exhibited commendable tracking accuracy by exploiting color features effectively, yet struggled to maintain identities during occlusions. YOLOv5+ByteTrack handled identity switches robustly but at a computational cost, reflected in a lower FPS. YOLOv8+OC-SORT achieved better tracking accuracy during chicken interactions and occlusions owing to its observation-centric approach, whereas YOLOv8+StrongSORT, while maintaining a high MOTA, struggled in dense chicken populations, leading to a higher IDS [29]. Based on the experimental values in Table 2, TAM emerges as the best model among those evaluated. It achieves the highest MOTA, indicating the most accurate tracking with the fewest misses and false positives; the highest IDF1, showing its proficiency in maintaining consistent identities throughout the tracking period; and the lowest number of identity switches (IDS), reflecting its ability to preserve identities accurately across frames. This combination of accurate tracking and stable identity preservation underscores TAM’s utility for comprehensive poultry behavior analysis.
This assessment reveals a trade-off between tracking accuracy and computational efficiency, suggesting that further advancements in TAM could enhance poultry tracking in future applications.

Table 2.

Comparative analysis of tracking algorithms for dyed and undyed chickens.

3.3. Evaluating Velocity Measurement

To assess TAM-speed beyond tracking, we evaluated its capability to accurately detect and quantify the speed of chickens in a controlled environment. In our experiments, the average measured speed of the chickens was 0.05 m/s, so the precision of TAM-speed’s speed predictions was paramount. RMSE was used as the primary metric to quantify the discrepancies between the speeds predicted by TAM-speed and the actual observed speeds. Because RMSE squares each error before averaging, it penalizes large individual deviations more heavily than small ones, making it a stringent metric that rewards predictions that are accurate on average and also free of large outliers. In our analysis, dyed chickens, with their distinct and consistent coloration, provided a relatively stable basis for the tracking algorithm, potentially minimizing instances where tracking was lost or inaccurately assigned. The RMSE for dyed chickens was 0.02 m/s, indicating a high degree of accuracy in speed detection. The distinct coloration likely helped the model maintain a consistent track, enabling more accurate speed calculations over a sequence of frames. Conversely, undyed chickens, with their more variable and less distinct visual features, posed a more complex scenario for TAM-speed, and their RMSE was marginally higher at 0.025 m/s. This slightly higher error might be attributed to the difficulty of maintaining consistent tracks among visually similar undyed chickens, potentially leading to brief losses of tracking or misidentifications, which in turn could skew the speed calculations [30].
Moreover, in the dynamic and somewhat unpredictable environment of a poultry house, numerous variables can influence the chickens’ speed, such as their age, size, and overall health, as well as external factors such as lighting and noise levels. Despite these challenges, TAM-speed showed a commendable capability in speed detection, providing predictions that, while subject to error, still offer valuable insight into the locomotion and behavior of the chickens. Beyond speed detection, such a model may offer insights into the health and well-being of the poultry by monitoring their mobility and activity levels [31]. While TAM-speed demonstrates notable accuracy in speed detection, it should be refined continually to account for the many variables that can influence chicken speed and behavior. Future iterations of the model might benefit from additional training data covering a wider range of scenarios and conditions, further enhancing its predictive accuracy and reliability in diverse poultry house environments. Table 3 summarizes the velocity results for dyed and undyed chickens, and Figure 5 visualizes the speeds and tracks detected by TAM-speed.

Table 3.

Comparative analysis of velocity for dyed and undyed chickens.

Figure 5.

Tracks and detected speeds of broilers (the green number indicates the tracking number, and the black number represents speed).

4. Discussion

4.1. Chicken Segmentation Approaches

In the present study, TAM notably outperformed both YOLOv5 and YOLOv8 across a variety of tracking tasks, particularly those involving dyed chickens. Specifically, TAM’s integrated mode, which combines tracking and speed measurement, showed high precision across diverse tracking scenarios. This performance can be attributed to several factors. First, TAM uses a specialized tracking mechanism that captures intricate movement patterns and complex trajectories, enabling it to focus on pertinent features and thereby track more accurately. Moreover, TAM surpassed the other models without additional training or extensive fine-tuning. This implies that TAM’s architecture and design inherently possess robust tracking capabilities, making it a practical and effective option that could be applied in various poultry tracking scenarios without exhaustive model adjustments or specialized training datasets. In addition, segmentation of dyed chickens consistently outperformed that of undyed chickens across all algorithms. This can be attributed to the distinct colors of the dyed chickens, which provide a more discernible feature for the model to track, thereby reducing identity switches and enhancing tracking accuracy [32]. TAM’s ability to handle such variations in object features further supports its position as a versatile and reliable model for chicken tracking applications. Likewise, when whole chickens were tracked, dyed chickens yielded better performance across all metrics.
This is likely because dyeing provides additional distinctive features for the model to latch onto, and tracking a dyed chicken captures the bird’s overall shape and structure more completely, which facilitates improved tracking results. Table 4 compares the segmentation accuracy of TAM with that reported in other computer vision studies related to chicken tracking. For instance, EfficientNet-B0 achieved an mIoU of 89.34% for segmenting meat carcasses using a dataset of 108,296 images [6]. Similarly, MSAnet achieved an mIoU of 87.7% for segmenting caged poultry on a 300-image dataset [2], while Mask R-CNN achieved an mIoU between 83.6% and 88.8% for segmenting hens in a 1700-image dataset [33]. By contrast, TAM achieved an mIoU of 93.12% for poultry tracking, potentially outperforming these methods even without a specialized target dataset, although the studies used different datasets. This highlights TAM’s ability to accurately trace chicken movements within images and underscores its potential applicability in broader computer vision tasks related to chicken tracking.

Table 4.

Comparison of different methods on segmentation accuracy.

4.2. The Precision of Velocity Measurement in Poultry Tracking

In poultry tracking, speed detection, particularly through computer vision, remains relatively unexplored. The TAM-speed model offers a new approach in this domain for estimating the velocity of broilers and layers. While primarily focused on tracking, the model also measures speed, providing a dual functionality that is both innovative and useful for comprehensive poultry behavior analysis. In contrast, vehicle speed detection has advanced substantially, with numerous methods developed and refined over the years. A common approach in that field uses a perspective transformation to estimate vehicle speed from the change in a vehicle’s position over consecutive frames while accounting for the camera’s perspective [26,34]. While effective for vehicles, this method presents unique challenges when applied to poultry because of the erratic, non-linear movement patterns of chickens. Other methods of speed detection in poultry have traditionally relied on wearable equipment or radio-based detection. Wearable devices, while providing accurate data, may influence the natural behavior and movement of the chickens owing to the physical burden and potential stress they impose [35,36]. Radio-based detection, which typically tracks radio frequency identification (RFID) tags attached to the chickens, may offer valuable data but is often constrained by the proximity and orientation of the tags, potentially limiting the accuracy and consistency of the data collected [37,38,39].
In this context, TAM-speed offers a non-intrusive, consistent, and technologically advanced method for both tracking chickens and estimating their speed without physical contact or proximity-based technology. It uses computer vision to analyze movement and estimate speed, providing accurate and comprehensive data without influencing the natural behavior of the poultry.

4.3. Limitations and Future Works

A notable limitation of TAM and its derivative, TAM-speed, is their substantial computational and memory demands, especially when tracking many individuals over extended durations. In specific test cases, even on an NVIDIA A100 GPU with 96 GB of memory, the models had difficulty sustaining tracking for more than 2 min, particularly when tracking more than 20 chickens simultaneously. This computational demand restricts the duration and scale of tracking and poses significant barriers to application in real-world, large-scale poultry farms, where continuous monitoring of larger flocks is imperative for effective management and research.

In future work, distributed computing could mitigate TAM’s computational demands, enabling the analysis of larger poultry populations and longer tracking durations. Additionally, edge computing strategies, in which initial data processing occurs on local devices, could reduce the computational load on the central model, ensuring efficient and timely poultry behavior analysis. Furthermore, adaptive sampling techniques, which dynamically adjust TAM’s sampling rate based on scene complexity, could optimize the allocation of computational resources, supporting detailed analysis during complex behaviors while conserving resources during simpler scenes [40].

5. Conclusions

The track anything model (TAM) and its adaptation, TAM-speed, proved to be effective tools for analyzing chicken locomotion and behavior, outperforming YOLOv5 and YOLOv8 in tracking and segmenting dyed chickens. TAM achieved a mean intersection over union (mIoU) of up to 95.13%, reflecting its robust architecture and effective pre-trained model. TAM-speed detected speed with an RMSE of 0.02 m/s for dyed chickens, providing insight into poultry behavior and potential health indicators. Because TAM requires no extensive training or fine-tuning, this research highlights its potential as a multifaceted tool for comprehensive poultry behavior analysis and for advanced applications in precision livestock farming.
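For reference, mIoU can be computed as sketched below. The IoU of one mask pair is the number of pixels labeled as chicken in both the predicted and the ground-truth mask divided by the number labeled in either. In this sketch the mean is taken over a flat list of mask pairs; that is an assumption, since averaging could also be done per bird, per frame, or per chicken category. Treating two empty masks as a perfect match is likewise a convention chosen only for this sketch.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # binary mask: 1 = chicken pixel, 0 = background


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two same-sized binary masks."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Convention for this sketch: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mean_iou(preds: Sequence[Mask], truths: Sequence[Mask]) -> float:
    """Unweighted mean IoU over paired predicted and ground-truth masks."""
    if len(preds) != len(truths) or not preds:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(iou(p, t) for p, t in zip(preds, truths)) / len(preds)


if __name__ == "__main__":
    pred = [[1, 1], [0, 0]]
    truth = [[1, 0], [0, 0]]
    print(iou(pred, truth))                         # 0.5
    print(mean_iou([pred, truth], [truth, truth]))  # 0.75
```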

Author Contributions

Conceptualization, L.C.; Methodology, X.Y. and L.C.; Formal analysis, X.Y.; Investigation, X.Y., R.B.B. and B.P.; Resources, L.C.; Writing—original draft, X.Y., L.C., R.B.B. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

USDA-NIFA AFRI CARE (2023-68008-39853); Egg Industry Center; Georgia Research Alliance (Venture Fund); UGA COVID Recovery Research Fund; and USDA-NIFA Hatch Multistate projects: Fostering Technologies, Metrics, and Behaviors for Sustainable Advances in Animal Agriculture (S1074).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on reasonable request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Morrone, S.; Dimauro, C.; Gambella, F.; Cappai, M.G. Industry 4.0 and Precision Livestock Farming (PLF): An up to Date Overview across Animal Productions. Sensors 2022, 22, 4319.

- Li, W.; Xiao, Y.; Song, X.; Lv, N.; Jiang, X.; Huang, Y.; Peng, J. Chicken Image Segmentation via Multi-Scale Attention-Based Deep Convolutional Neural Network. IEEE Access 2021, 9, 61398–61407.

- Yang, X.; Chai, L.; Bist, R.B.; Subedi, S.; Wu, Z. A Deep Learning Model for Detecting Cage-Free Hens on the Litter Floor. Animals 2022, 12, 1983.

- Siriani, A.L.R.; Kodaira, V.; Mehdizadeh, S.A.; de Alencar Nääs, I.; de Moura, D.J.; Pereira, D.F. Detection and Tracking of Chickens in Low-Light Images Using YOLO Network and Kalman Filter. Neural Comput. Appl. 2022, 34, 21987–21997.

- Liu, H.-W.; Chen, C.-H.; Tsai, Y.-C.; Hsieh, K.-W.; Lin, H.-T. Identifying Images of Dead Chickens with a Chicken Removal System Integrated with a Deep Learning Algorithm. Sensors 2021, 21, 3579.

- Gorji, H.T.; Shahabi, S.M.; Sharma, A.; Tande, L.Q.; Husarik, K.; Qin, J.; Chan, D.E.; Baek, I.; Kim, M.S.; MacKinnon, N.; et al. Combining Deep Learning and Fluorescence Imaging to Automatically Identify Fecal Contamination on Meat Carcasses. Sci. Rep. 2022, 12, 2392.

- Bist, R.B.; Yang, X.; Subedi, S.; Chai, L. Mislaying Behavior Detection in Cage-Free Hens with Deep Learning Technologies. Poult. Sci. 2023, 102, 102729.

- Ben Sassi, N.; Averós, X.; Estevez, I. Technology and Poultry Welfare. Animals 2016, 6, 62.

- Tong, Q.; Zhang, E.; Wu, S.; Xu, K.; Sun, C. A Real-Time Detector of Chicken Healthy Status Based on Modified YOLO. SIViP 2023, 17, 4199–4207.

- Neethirajan, S. ChickTrack–A Quantitative Tracking Tool for Measuring Chicken Activity. Measurement 2022, 191, 110819.

- Elmessery, W.M.; Gutiérrez, J.; Abd El-Wahhab, G.G.; Elkhaiat, I.A.; El-Soaly, I.S.; Alhag, S.K.; Al-Shuraym, L.A.; Akela, M.A.; Moghanm, F.S.; Abdelshafie, M.F. YOLO-Based Model for Automatic Detection of Broiler Pathological Phenomena through Visual and Thermal Images in Intensive Poultry Houses. Agriculture 2023, 13, 1527.

- Lu, G.; Li, S.; Mai, G.; Sun, J.; Zhu, D.; Chai, L.; Sun, H.; Wang, X.; Dai, H.; Liu, N.; et al. AGI for Agriculture. arXiv 2023, arXiv:2304.06136.

- Yang, X.; Dai, H.; Wu, Z.; Bist, R.; Subedi, S.; Sun, J.; Lu, G.; Li, C.; Liu, T.; Chai, L. SAM for Poultry Science. arXiv 2023, arXiv:2305.10254.

- Ahmadi, M.; Lonbar, A.G.; Sharifi, A.; Beris, A.T.; Nouri, M.; Javidi, A.S. Application of Segment Anything Model for Civil Infrastructure Defect Assessment. arXiv 2023, arXiv:2304.12600.

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking Pecking Behaviors and Damages of Cage-Free Laying Hens with Machine Vision Technologies. Comput. Electron. Agric. 2023, 204, 107545.

- Shi, H.; Li, B.; Tong, Q.; Zheng, W.; Zeng, D.; Feng, G. Effects of LED Light Color and Intensity on Feather Pecking and Fear Responses of Layer Breeders in Natural Mating Colony Cages. Animals 2019, 9, 814.

- Yang, J.; Gao, M.; Li, Z.; Gao, S.; Wang, F.; Zheng, F. Track Anything: Segment Anything Meets Videos. arXiv 2023, arXiv:2304.11968.

- Cheng, H.K.; Schwing, A.G. XMem: Long-Term Video Object Segmentation with an Atkinson-Shiffrin Memory Model. In Computer Vision–ECCV 2022; Springer: Cham, Switzerland, 2022.

- Sun, Z.; Bebis, G.; Miller, R. On-Road Vehicle Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711.

- Cao, J.; Pang, J.; Weng, X.; Khirodkar, R.; Kitani, K. Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9686–9696.

- Wojke, N.; Bewley, A.; Paulus, D. Simple Online and Realtime Tracking with a Deep Association Metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022, Proceedings, Part XXII; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–21.

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. StrongSORT: Make DeepSORT Great Again. IEEE Trans. Multimed. 2023, 25, 8725–8737.

- Wei, Y.; Zhang, D.; Gao, M.; Tian, Y.; He, Y.; Huang, B.; Zheng, C. Breast Cancer Prediction Based on Machine Learning. J. Softw. Eng. Appl. 2023, 16, 348–360.

- Culjak, I.; Abram, D.; Pribanic, T.; Dzapo, H.; Cifrek, M. A Brief Introduction to OpenCV. In Proceedings of the 35th International Convention MIPRO, Opatija, Croatia, 21–25 May 2012; pp. 1725–1730.

- Zhang, D.; Zhou, F.; Yang, X.; Gu, Y. Unleashing the Power of Self-Supervised Image Denoising: A Comprehensive Review. arXiv 2023, arXiv:2308.00247.

- Li, C.; Peng, Q.; Wan, X.; Sun, H.; Tang, J. C-Terminal Motifs in Promyelocytic Leukemia Protein Isoforms Critically Regulate PML Nuclear Body Formation. J. Cell Sci. 2017, 130, 3496–3506.

- Bidese Puhl, R. Precision Agriculture Systems for the Southeast US Using Computer Vision and Deep Learning; Auburn University: Auburn, AL, USA, 2023.

- Early Warning System for Open-Beaked Ratio, Spatial Dispersion, and Movement of Chicken Using CNNs. Available online: https://elibrary.asabe.org/abstract.asp?JID=5&AID=54230&CID=oma2023&T=1 (accessed on 15 October 2023).

- Okinda, C.; Nyalala, I.; Korohou, T.; Okinda, C.; Wang, J.; Achieng, T.; Wamalwa, P.; Mang, T.; Shen, M. A Review on Computer Vision Systems in Monitoring of Poultry: A Welfare Perspective. Artif. Intell. Agric. 2020, 4, 184–208.

- Fang, C.; Huang, J.; Cuan, K.; Zhuang, X.; Zhang, T. Comparative Study on Poultry Target Tracking Algorithms Based on a Deep Regression Network. Biosyst. Eng. 2020, 190, 176–183.

- Wang, J.; Liu, Z.; Zhao, L.; Wu, Z.; Ma, C.; Yu, S.; Dai, H.; Yang, Q.; Liu, Y.; Zhang, S.; et al. Review of Large Vision Models and Visual Prompt Engineering. Meta-Radiol. 2023, 1, 100047.

- Li, G.; Hui, X.; Lin, F.; Zhao, Y. Developing and Evaluating Poultry Preening Behavior Detectors via Mask Region-Based Convolutional Neural Network. Animals 2020, 10, 1762.

- Wu, L.; Wang, Y.; Liu, J.; Shan, D. Developing a Time-Series Speed Prediction Model Using Transformer Networks for Freeway Interchange Areas. Comput. Electr. Eng. 2023, 110, 108860.

- Fujinami, K.; Takuno, R.; Sato, I.; Shimmura, T. Evaluating Behavior Recognition Pipeline of Laying Hens Using Wearable Inertial Sensors. Sensors 2023, 23, 5077.

- Siegford, J.M.; Berezowski, J.; Biswas, S.K.; Daigle, C.L.; Gebhardt-Henrich, S.G.; Hernandez, C.E.; Thurner, S.; Toscano, M.J. Assessing Activity and Location of Individual Laying Hens in Large Groups Using Modern Technology. Animals 2016, 6, 10.

- Chien, Y.-R.; Chen, Y.-X. An RFID-Based Smart Nest Box: An Experimental Study of Laying Performance and Behavior of Individual Hens. Sensors 2018, 18, 859.

- Feiyang, Z.; Yueming, H.; Liancheng, C.; Lihong, G.; Wenjie, D.; Lu, W. Monitoring Behavior of Poultry Based on RFID Radio Frequency Network. Int. J. Agric. Biol. Eng. 2016, 9, 139–147.

- Doornweerd, J.E.; Kootstra, G.; Veerkamp, R.F.; de Klerk, B.; Fodor, I.; van der Sluis, M.; Bouwman, A.C.; Ellen, E.D. Passive Radio Frequency Identification and Video Tracking for the Determination of Location and Movement of Broilers. Poult. Sci. 2023, 102, 102412.

- Xie, L.; Wei, L.; Zhang, X.; Bi, K.; Gu, X.; Chang, J.; Tian, Q. Towards AGI in Computer Vision: Lessons Learned from GPT and Large Language Models. arXiv 2023, arXiv:2306.08641.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).