SheepInst: A High-Performance Instance Segmentation of Sheep Images Based on Deep Learning

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Dataset

2.1.1. Data Collection and Labelling

2.1.2. Data Augmentation

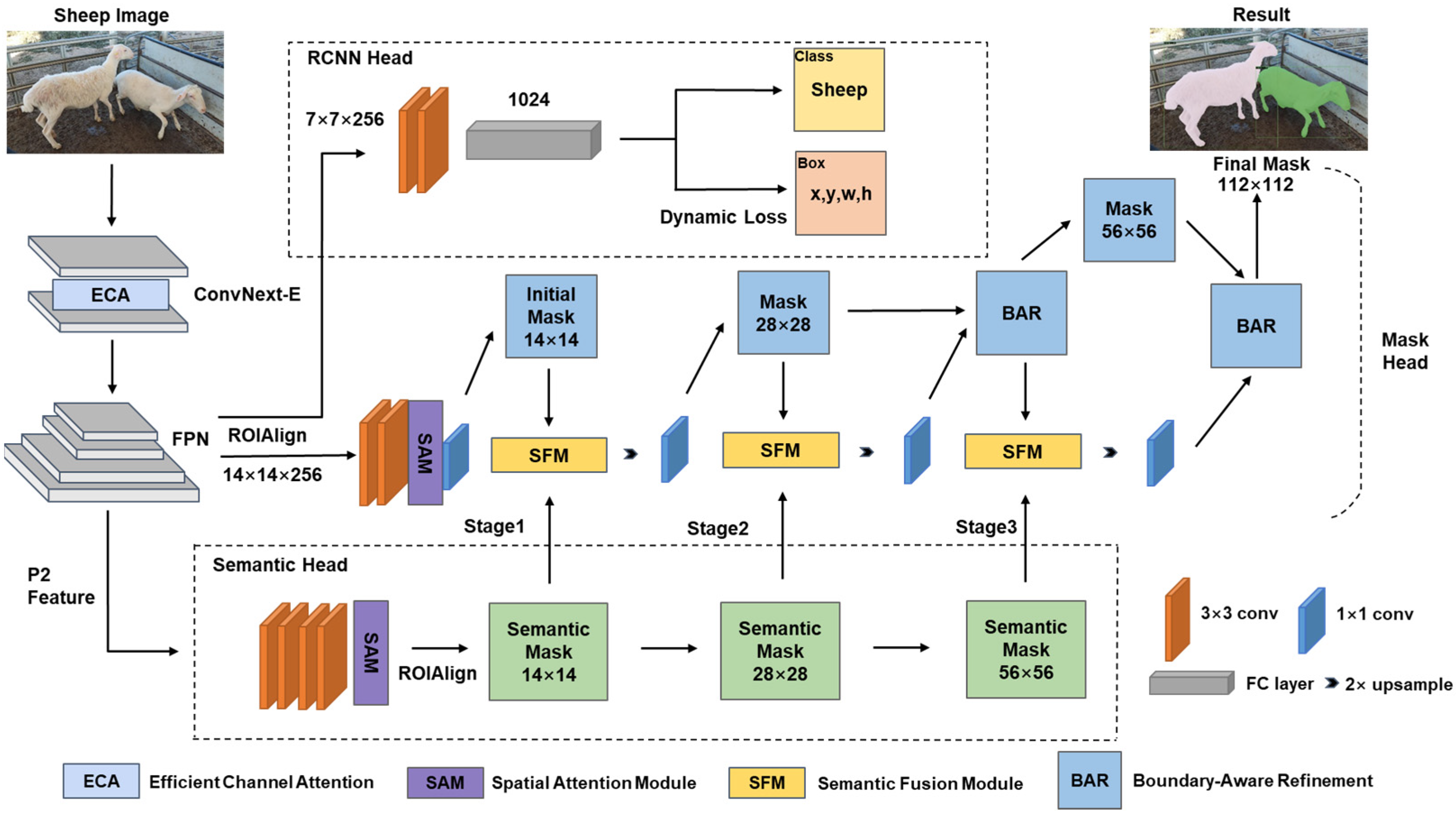

2.2. SheepInst

2.2.1. Overview

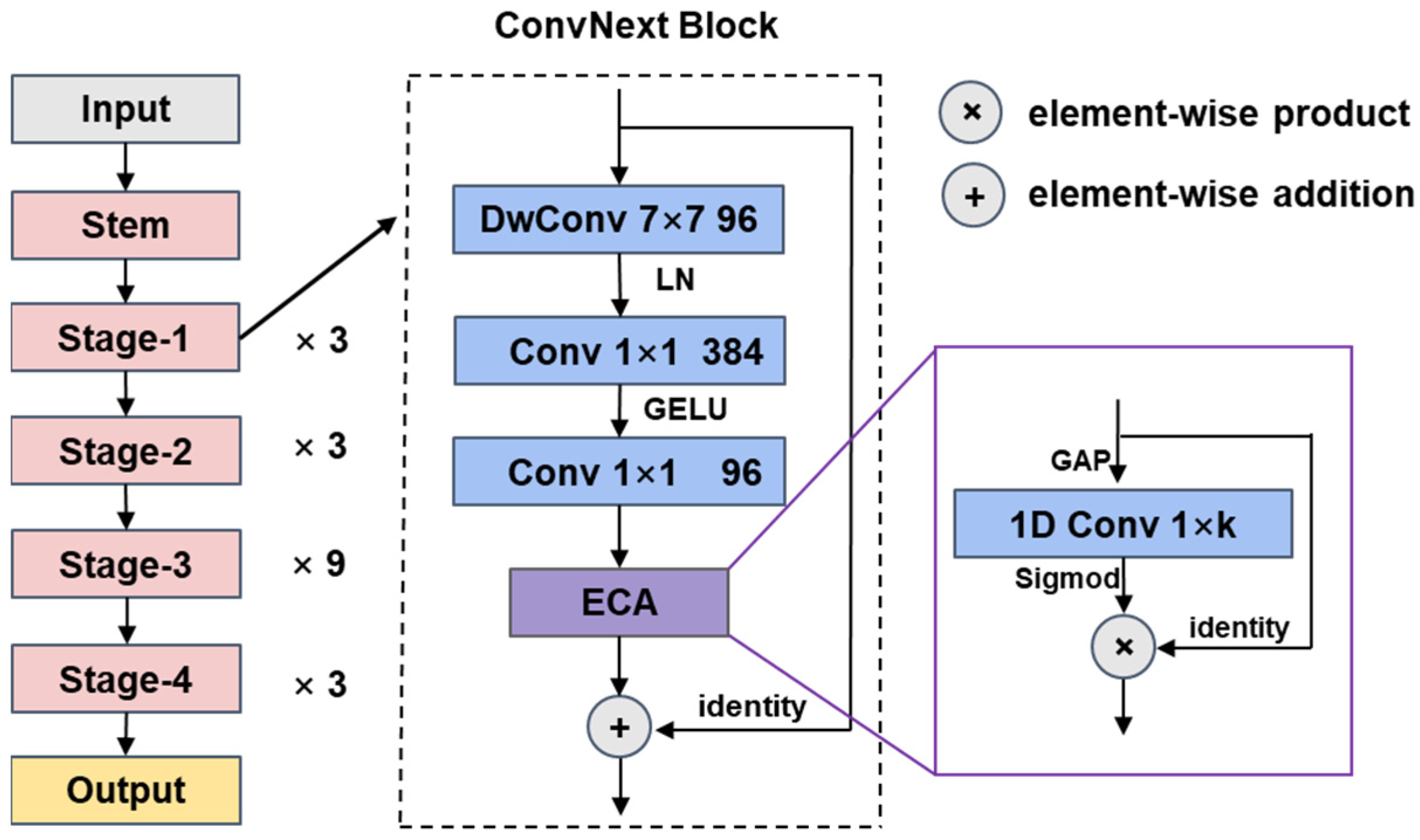

2.2.2. Feature Acquisition with ConvNeXt-E

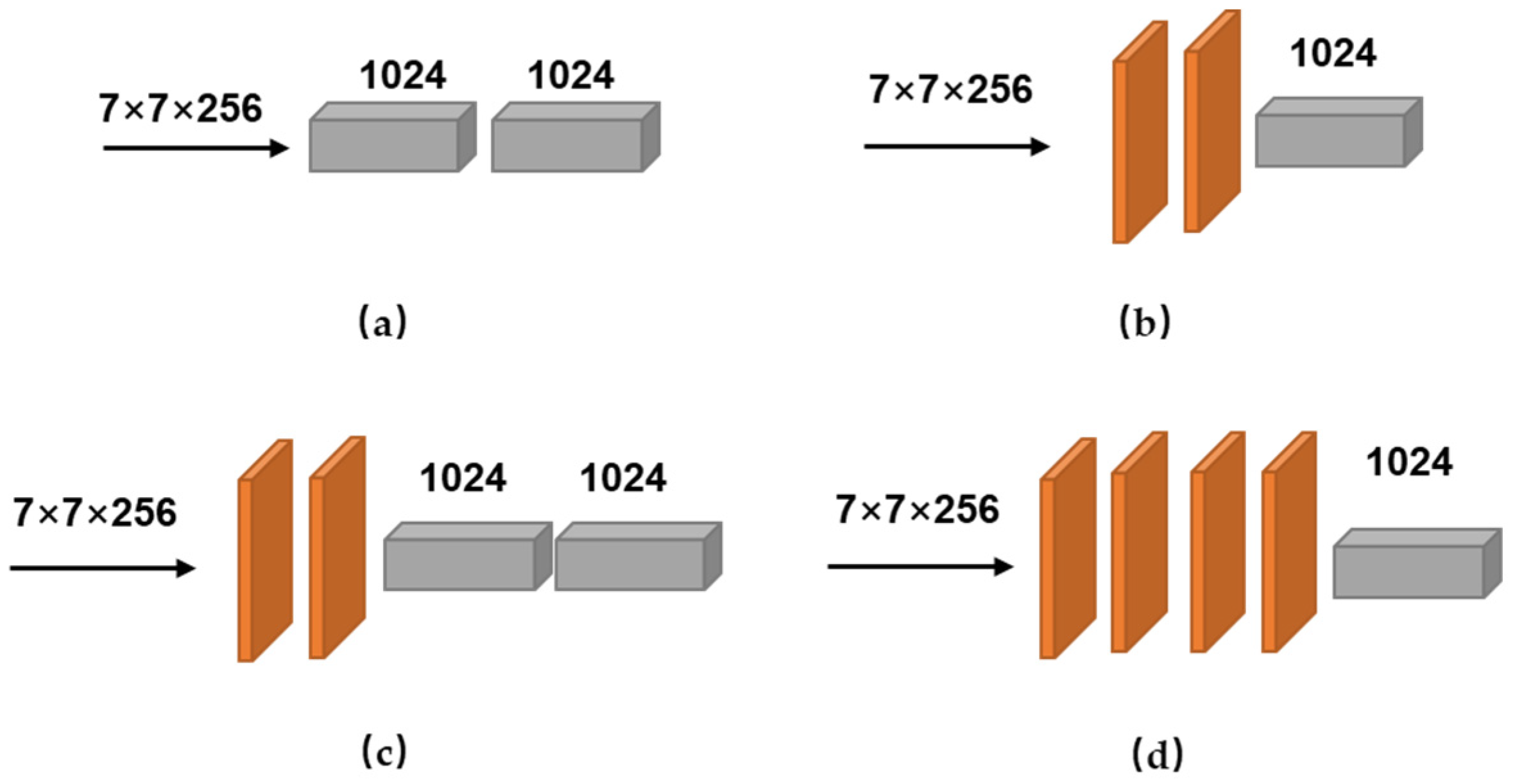

2.2.3. Detecting Sheep with Improved Dynamic R-CNN

2.2.4. Sheep Segmentation with RefineMask-SAM

2.3. Implementation Details

3. Results

3.1. Evaluation Metrics

3.1.1. COCO Metrics

3.1.2. Boundary AP

3.2. Comparison with Other Methods

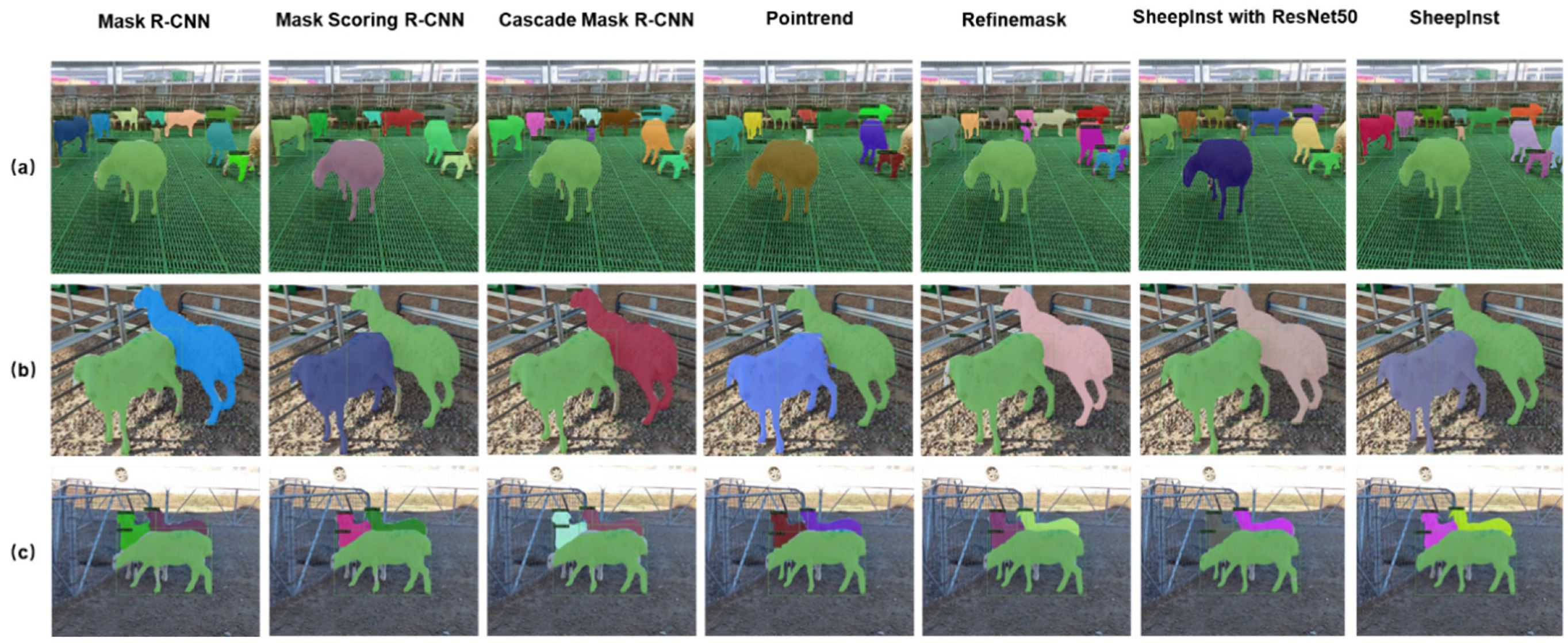

3.3. Qualitative Results

3.4. Ablation Study

3.4.1. Effectiveness of Data Augmentation

3.4.2. Effectiveness of ConvNeXt-E

3.4.3. Effectiveness of Attention Modules in ConvNeXt

3.4.4. Effectiveness of Improved Structure of Detector

3.4.5. Effectiveness of SAM in Segmentation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Berckmans, D. Precision livestock farming technologies for welfare management in intensive livestock systems. Rev. Sci. Tech. 2014, 33, 189–196.

- García, R.; Aguilar, J.; Toro, M.; Pinto, A.; Rodríguez, P. A systematic literature review on the use of machine learning in precision livestock farming. Comput. Electron. Agric. 2020, 179, 105826.

- Wathes, C.M.; Kristensen, H.H.; Aerts, J.M.; Berckmans, D. Is precision livestock farming an engineer’s daydream or nightmare, an animal’s friend or foe, and a farmer’s panacea or pitfall? Comput. Electron. Agric. 2008, 64, 2–10.

- Hu, H.; Dai, B.; Shen, W.; Wei, X.; Sun, J.; Li, R.; Zhang, Y. Cow identification based on fusion of deep parts features. Biosyst. Eng. 2020, 192, 245–256.

- Shang, C.; Zhao, H.; Wang, M.; Wang, X.; Jiang, Y.; Gao, Q. Individual identification of cashmere goats via method of fusion of multiple optimization. Comput. Animat. Virtual Worlds 2022, e2048.

- Yang, A.; Huang, H.; Zheng, B.; Li, S.; Gan, H.; Chen, C.; Yang, X.; Xue, Y. An automatic recognition framework for sow daily behaviours based on motion and image analyses. Biosyst. Eng. 2020, 192, 56–71.

- He, C.; Qiao, Y.; Mao, R.; Li, M.; Wang, M. Enhanced LiteHRNet based sheep weight estimation using RGB-D images. Comput. Electron. Agric. 2023, 206, 107667.

- Suwannakhun, S.; Daungmala, P. Estimating Pig Weight with Digital Image Processing using Deep Learning. In Proceedings of the 2018 14th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 320–326.

- Chen, C.; Zhu, W.; Norton, T. Behaviour recognition of pigs and cattle: Journey from computer vision to deep learning. Comput. Electron. Agric. 2021, 187, 106255.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6402–6411.

- Carvalho, O.L.F.d.; Júnior, O.A.d.C.; Albuquerque, A.O.d.; Santana, N.C.; Guimarães, R.F.; Gomes, R.A.T.; Borges, D.L. Bounding Box-Free Instance Segmentation Using Semi-Supervised Iterative Learning for Vehicle Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 3403–3420.

- Li, Y.; Zhao, H.; Qi, X.; Wang, L.; Li, Z.; Sun, J.; Jia, J. Fully Convolutional Networks for Panoptic Segmentation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 214–223.

- Xiong, Y.; Liao, R.; Zhao, H.; Hu, R.; Bai, M.; Yumer, E.; Urtasun, R. UPSNet: A Unified Panoptic Segmentation Network. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8810–8818.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Qiao, Y.; Truman, M.; Sukkarieh, S. Cattle segmentation and contour extraction based on Mask R-CNN for precision livestock farming. Comput. Electron. Agric. 2019, 165, 104958.

- Salau, J.; Krieter, J. Instance Segmentation with Mask R-CNN Applied to Loose-Housed Dairy Cows in a Multi-Camera Setting. Animals 2020, 10, 2402.

- Dohmen, R.; Catal, C.; Liu, Q. Image-based body mass prediction of heifers using deep neural networks. Biosyst. Eng. 2021, 204, 283–293.

- Xu, J.; Wu, Q.; Zhang, J.; Tait, A. Automatic Sheep Behaviour Analysis Using Mask R-CNN. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021; pp. 1–6.

- Sant’Ana, D.A.; Carneiro Brito Pache, M.; Martins, J.; Astolfi, G.; Pereira Soares, W.; Neves de Melo, S.L.; da Silva Heimbach, N.; de Moraes Weber, V.A.; Gonçalves Mateus, R.; Pistori, H. Computer vision system for superpixel classification and segmentation of sheep. Ecol. Inform. 2022, 68, 101551.

- Sant’Ana, D.A.; Pache, M.C.B.; Martins, J.; Soares, W.P.; de Melo, S.L.N.; Garcia, V.; de Moraes Weber, V.A.; da Silva Heimbach, N.; Mateus, R.G.; Pistori, H. Weighing live sheep using computer vision techniques and regression machine learning. Mach. Learn. Appl. 2021, 5, 100076.

- Zhang, G.; Lu, X.; Tan, J.; Li, J.; Zhang, Z.; Li, Q.; Hu, X. RefineMask: Towards High-Quality Instance Segmentation with Fine-Grained Features. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6857–6865.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Zhang, H.; Chang, H.; Ma, B.; Wang, N.; Chen, X. Dynamic R-CNN: Towards High Quality Object Detection via Dynamic Training. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 260–275.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple Copy-Paste is a Strong Data Augmentation Method for Instance Segmentation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2917–2927.

- Hao, Y.; Liu, Y.; Wu, Z.; Han, L.; Chen, Y.; Chen, G.; Chu, L.; Tang, S.; Yu, Z.; Chen, Z.; et al. EdgeFlow: Achieving Practical Interactive Segmentation with Edge-Guided Flow. In Proceedings of the 2021 IEEE International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 1551–1560.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 4 May 2021.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 9992–10002.

- Ramachandran, P.; Parmar, N.; Vaswani, A.; Bello, I.; Levskaya, A.; Shlens, J. Stand-Alone Self-Attention in Vision Models. In Proceedings of the Advances in Neural Information Processing Systems 32, Vancouver, BC, Canada, 8–14 December 2019.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Hendrycks, D.; Gimpel, K. Gaussian Error Linear Units (GELUs). arXiv 2016, arXiv:1606.08415.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017, arXiv:1711.05101.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Raghu, M.; Poole, B.; Kleinberg, J.M.; Ganguli, S.; Sohl-Dickstein, J. On the Expressive Power of Deep Neural Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; pp. 2847–2854.

- Chatterjee, S. Coherent Gradients: An Approach to Understanding Generalization in Gradient Descent-based Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155.

- Cheng, B.; Girshick, R.; Dollar, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving Object-Centric Image Segmentation Evaluation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15329–15337.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- Kirillov, A.; Wu, Y.; He, K.; Girshick, R. PointRend: Image Segmentation As Rendering. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9796–9805.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

- Zhong, Z.; Lin, Z.Q.; Bidart, R.; Hu, X.; Daya, I.B.; Li, Z.; Zheng, W.-S.; Li, J.; Wong, A. Squeeze-and-Attention Networks for Semantic Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13062–13071.

| Methods | Backbone | Box AP | Box AP50 | Box AP75 | Mask AP | Mask AP50 | Mask AP75 | Boundary AP | FPS a |
|---|---|---|---|---|---|---|---|---|---|
| Mask R-CNN [12] | ResNet-50 + FPN | 77.3 | 93.7 | 88.6 | 75.4 | 93.6 | 87.2 | 55.6 | 12.4 |
| Mask Scoring R-CNN [13] | ResNet-50 + FPN | 77.2 | 93.6 | 86.6 | 75.9 | 92.8 | 86.5 | 55.0 | 12.1 |
| Cascade Mask R-CNN [47] | ResNet-50 + FPN | 81.4 | 82.9 | 87.9 | 74.6 | 90.9 | 85.7 | 54.9 | 10.7 |
| PointRend [48] | ResNet-50 + FPN | 78.1 | 93.9 | 87.3 | 82.9 | 93.8 | 89.8 | 70.0 | 11.4 |
| RefineMask [25] | ResNet-50 + FPN | 69.4 | 93.3 | 82.4 | 83.0 | 93.4 | 89.0 | 69.4 | 8.7 |
| Ours w/o Aug * | ResNet-50 + FPN | 78.4 | 93.6 | 88.0 | 85.1 | 93.6 | 89.5 | 73.9 | 7.9 |
| Mask R-CNN | ResNet-101 + FPN | 79.8 | 93.7 | 89.5 | 76.6 | 93.5 | 86.4 | 57.7 | 11.5 |
| Mask Scoring R-CNN | ResNet-101 + FPN | 80.0 | 92.8 | 89.7 | 76.5 | 91.8 | 88.7 | 55.7 | 10.6 |
| Cascade Mask R-CNN | ResNet-101 + FPN | 84.2 | 92.9 | 90.9 | 76.3 | 91.9 | 86.9 | 56.5 | 9.3 |
| PointRend | ResNet-101 + FPN | 81.3 | 92.9 | 89.7 | 84.0 | 93.9 | 90.6 | 71.6 | 9.8 |
| RefineMask | ResNet-101 + FPN | 81.2 | 92.8 | 89.8 | 87.3 | 93.7 | 91.6 | 76.0 | 8.6 |
| Ours w/o Aug * | ResNet-101 + FPN | 82.5 | 93.6 | 90.5 | 88.4 | 93.7 | 91.7 | 76.7 | 7.1 |
| Ours w/Aug * | ConvNeXt-E + FPN | 89.1 | 93.9 | 93.9 | 91.3 | 94.8 | 93.7 | 79.5 | 8.2 |

| Methods | | | | | | |
|---|---|---|---|---|---|---|
| SheepInst w/CP * | 86.9 | 87.2 | 89.8 | 88.9 | 79.3 | 78.2 |
| SheepInst w/CP&SSJ | 89.0 | 88.6 | 91.7 | 90.8 | 80.6 | 79.8 |
| SheepInst w/CP&LSJ | 89.8 | 89.1 | 91.8 | 91.3 | 80.8 | 79.5 |

| Backbone | Box AP | Mask AP | Boundary AP | Parameters * |
|---|---|---|---|---|
| ResNet-50 | 85.1 | 88.8 | 77.5 | 23.283 M |
| ResNet-101 | 86.8 | 89.6 | 78.3 | 42.275 M |
| Swin-T | 82.3 | 88.6 | 75.7 | 27.497 M |
| ResNeXt-50 | 85.3 | 88.8 | 76.9 | 22.765 M |
| ResNeXt-101 | 87.7 | 90.0 | 79.0 | 41.913 M |
| ConvNeXt | 88.4 | 91.0 | 79.2 | 27.797 M |
| ConvNeXt-E (ours) | 89.1 | 91.3 | 79.5 | 27.797 M |

| Backbone | Box AP | Mask AP | Boundary AP | Parameters * |
|---|---|---|---|---|
| ConvNeXt | 88.4 | 91.0 | 79.2 | 27.797 M |
| ConvNeXt + SE | 88.6 | 91.0 | 79.2 | 27.899 M |
| ConvNeXt + CA | 88.5 | 90.7 | 78.9 | 28.122 M |
| ConvNeXt + CBAM | 88.7 | 91.2 | 79.3 | 28.203 M |
| ConvNeXt + ECA (ours) | 89.1 | 91.3 | 79.5 | 27.797 M |
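
As a reading aid for the attention-module comparison above, the following minimal Python sketch recomputes each variant's change relative to plain ConvNeXt. The numbers are copied from the table rows. The metric names (box AP, mask AP, boundary AP) are an assumption inferred from the section headings on COCO metrics and Boundary AP, and `deltas` is a hypothetical helper written for illustration, not part of the authors' code.

```python
# Rows copied from the attention-module ablation table above.
# Assumption: the three metric columns are box AP, mask AP and boundary AP;
# the fourth value is the parameter count in millions.
ROWS = {
    "ConvNeXt": (88.4, 91.0, 79.2, 27.797),
    "ConvNeXt + SE": (88.6, 91.0, 79.2, 27.899),
    "ConvNeXt + CA": (88.5, 90.7, 78.9, 28.122),
    "ConvNeXt + CBAM": (88.7, 91.2, 79.3, 28.203),
    "ConvNeXt + ECA": (89.1, 91.3, 79.5, 27.797),
}


def deltas(rows, baseline="ConvNeXt"):
    """Return per-column differences of every row against the baseline row."""
    base = rows[baseline]
    return {
        name: tuple(round(value - ref, 3) for value, ref in zip(values, base))
        for name, values in rows.items()
        if name != baseline
    }


if __name__ == "__main__":
    header = ("box AP", "mask AP", "boundary AP", "params (M)")
    for name, diff in deltas(ROWS).items():
        cells = ", ".join(f"{h}: {d:+.3f}" for h, d in zip(header, diff))
        print(f"{name}: {cells}")
```

On these rows, ECA is the only variant that improves all three metrics with no change in the parameter count at the table's three-decimal precision.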

| Backbone | RCNN Structure | Box AP | Mask AP | Boundary AP | Parameters * |
|---|---|---|---|---|---|
| Faster R-CNN | 2FC | 85.2 | 90.4 | 78.2 | - |
| Dynamic R-CNN | 2FC | 87.1 | 90.6 | 78.7 | - |
| Improved Dynamic R-CNN | 2Conv + 2FC | 89.3 | 91.2 | 79.7 | +1.18 M |
| Improved Dynamic R-CNN | 4Conv + 1FC | 88.6 | 91.0 | 79.4 | +1.31 M |
| Improved Dynamic R-CNN | 2Conv + 1FC (ours) | 89.1 | 91.3 | 79.5 | +0.13 M |

| Methods | Kernel Size | Mask AP | Mask AP50 | Mask AP75 | Boundary AP |
|---|---|---|---|---|---|
| RefineMask | - | 90.7 | 94.9 | 93.6 | 79.3 |
| +SA | - | 90.8 | 94.9 | 93.7 | 78.9 |
| +SAM | 3 | 91.1 | 94.9 | 92.9 | 79.3 |
| +SAM (ours) | 7 | 91.3 | 94.8 | 93.7 | 79.5 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, H.; Mao, R.; Li, M.; Li, B.; Wang, M. SheepInst: A High-Performance Instance Segmentation of Sheep Images Based on Deep Learning. Animals 2023, 13, 1338. https://doi.org/10.3390/ani13081338