1. Introduction

Accurate wildlife density and abundance monitoring assists in analysis of the causes of biodiversity loss and assessment of the impacts of conservation measures [

1]. According to the International Union for Conservation of Nature (IUCN), up to 17,000 species are considered as “data deficient” [

2]. Therefore, there is an urgent need for effective large-scale wildlife monitoring systems with great spatiotemporal resolution. Camera traps have become an essential tool for wildlife monitoring in the recent decades, collecting huge amounts of data every day [

3]. Because manual annotation of such huge amounts of data is time-consuming, automatic wildlife recognition is an appealing method for analyzing these data [

4,

5]. Deep learning methods have recently emerged as the dominant method for automatically recognizing wildlife. Xie et al. [

6] introduced the SE-ResNeXt model for recognizing 26 wildlife species in the Snapshot Serengeti dataset, with the highest Top-1 and Top-5 accuracy levels of 95.3% and 98.8%, respectively. Silva et al. [

7] utilized ResNet50 to classify different species of “bush pigs”, with a best accuracy of 98.33%. Nguyen et al. [

8] designed Lite AlexNet to identify the three most common species in South Central Victoria, Australia, with an accuracy of 90.4%. Tan et al. [

5] compared three mainstream detection models, YOLOv5, FCOS, and Cascade R-CNN, on the Northeast Tiger and Leopard National Park wildlife image dataset (NTLNP dataset). YOLOv5, FCOS, and Cascade R-CNN all obtained high average precision values: >97.9% at mAP_0.5 and >81.2% at mAP_0.5: 0.95. These studies indicate that deep convolutional neural networks (CNNs) can perform well in wildlife recognition.

Although existing automatic wildlife recognition methods have achieved higher and higher accuracy, these models’ capacity to generalize across diverse datasets is not as strong as it could be. Geirhos et al. [

9] suggested that the aforementioned issue might be attributed to shortcut learning, in which these models tend to learn simple decision rules during training. These learned decision rules can only perform well on datasets that are independent and identically distributed (i.i.d.). In a non-independent and identically distributed (non-IID) dataset, however, performance deteriorated. Because camera traps are typically deployed in fixed locations, wildlife monitoring images appear to have similar backgrounds over time. This demonstrates a strong coupling link between wildlife and their backgrounds, providing shortcuts for a deep learning model to recognize wildlife via the backgrounds. These models may fail to recognize the same species with different backgrounds given the shortcut-learned decision rules. To increase the accuracy and generalization capabilities of wildlife recognition models, shortcut learning must be avoided.

Many strategies for mitigating shortcut learning have been investigated. Szegedy et al. [

10] proved that data distribution has a direct impact on deep neural network learning and generated adversarial samples by adding perturbations to the data to avoid shortcut learning. Cubuk et al. [

11] improved the generalization of object recognition models by augmenting data with information, geometric distortion, and color distortion. Arjovsky et al. [

12] used causality to distinguish the false correlation and the interest region in the sample and then proposed invariant risk minimization (IRM), a novel learning framework that can estimate nonlinear, invariant, causal predictors across different training environments, allowing for out-of-distribution generalization. Finn et al. [

13] utilized meta-learning to train a model on multiple learning tasks, resulting in high generalization performance with only a modest number of new training samples. Overall, data augmentation is a a simple and effective technique for avoiding shortcut learning.

Furthermore, it is beneficial to conduct recognition directly on a camera trap to improve the effectiveness of wildlife monitoring [

14]. Due to the limited computing capability and memory of camera traps, a lightweight recognition model is required. Model compression is a commonly used method to generate a lightweight model [

15]. Knowledge distillation [

16] transfers the knowledge gained by a large teacher network to a small student network without losing validity, allowing model compression to be realized. The structure of the student model is crucial to the success of compression and the performance of the compressed model. Wen et al. [

17] suggested a structural sparse learning approach for obtaining the student network from a large CNN, which accelerated AlexNet by 5.1 and 3.1 times on CPU and GPU, respectively, with only a 1% drop in accuracy. Rather than compressing the teacher network, Du et al. [

18] selected a shallow reference model as the student network, which was then combined with a random forest model to generate more precise probability values for each class. Crowley et al. [

19] separated the normal convolution of the teacher model into different groups of point-wise convolutions to construct several student models with test errors ranging from 5.0% to 7.87%.

Model pruning is another popular model compression method that can significantly reduce the number of parameters by removing certain parts of the model [

20]. It is regarded as an effective method for achieving a student network. There are two types of pruning, i.e., unstructured pruning and structured pruning. Unstructured pruning approaches remove weights on a case-by-case basis, e.g., HashedNet [

20], which uses a hash function to randomly group network weights, then allows weights in the same group to share parameters to minimize model complexity. Structured pruning methods remove weights in groups, such as a channel or a layer, and are often more efficient than unstructured pruning methods. Li et al. [

21] removed convolution kernels with low effect on network accuracy and then retrained the pruned model to boost accuracy. Luo et al. [

22] developed a pruning technique for ThiNet based on a greedy strategy. By considering network pruning to be an optimization issue, the statistical information obtained from the next layer’s input–output relationship is utilized to determine how to prune the present layer. ThiNet can reduce the parameters and FLOPs of ResNet-50 by more than half, whereas top-5 can only reduce them by 1%. He et al. [

23] utilized a channel pruning approach to reduce the channel information in the input feature map before retaining it in the output feature map by adjusting the weights. Under a scenario with five-fold acceleration, the approach only degrades the accuracy of a VGG16 network by 0.3%. To achieve a high compression ratio, Jin et al. [

24] proposed a hybrid pruning strategy that integrated kernel pruning and weight pruning. Aghli et al. [

25] first employed the weight pruning method to create a student model, then knowledge distillation was achieved by minimizing the cosine similarity between the layers of the teacher and student networks. When compared to the state-of-the-art methods, higher compression rates can be achieved with comparable accuracy. All in all, it appears that the combination method of model pruning and knowledge distillation is more suited for generating a lightweight student network with acceptable accuracy.

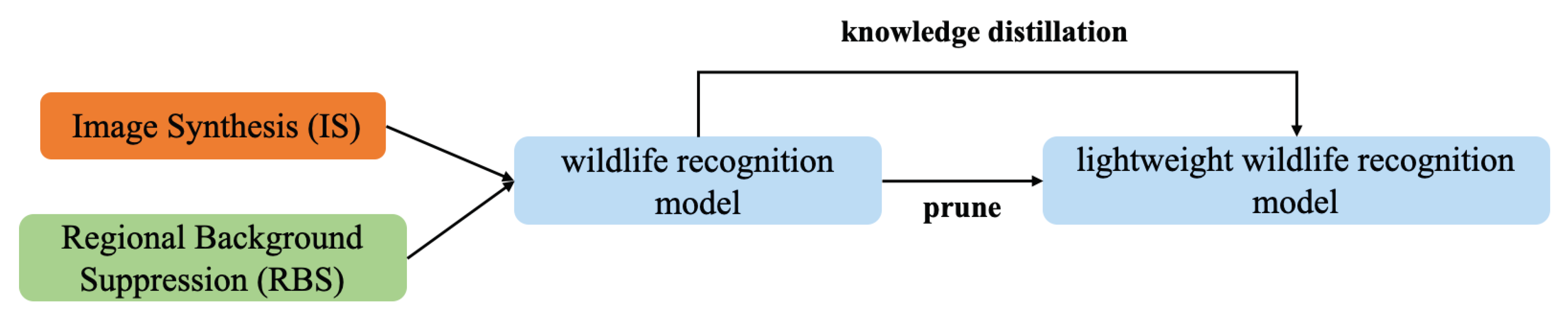

In this paper, we propose a lightweight automatic wildlife recognition model design method that avoids shortcut learning. To the best of our knowledge, this is the first work that focuses on the shortcut learning of camera trap image recognition. First, two data augmentation strategies—image synthesis (IS) and regional background suppression (RBS)—are introduced in order to prevent the wildlife recognition model from shortcut learning and improve its performance. The Resnet50-based wildlife recognition model is then pruned with the genetic algorithm and adaptive BN (GA-ABN) to construct the student model. Finally, utilizing the Resnet50-based wildlife recognition model as a teacher model, knowledge distillation is employed to fine-tune the student model, yielding a lightweight automatic wildlife recognition model. The technological framework for the design of the lightweight automatic wildlife recognition model is depicted in

Figure 1.

To summarize, the contribution of this work is two-fold:

- (1)

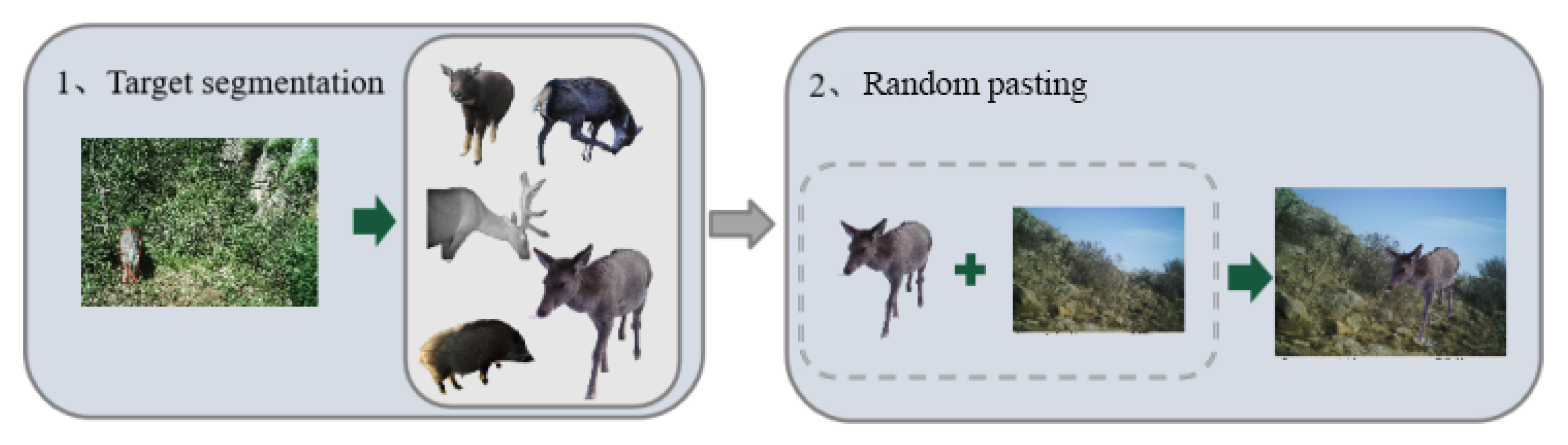

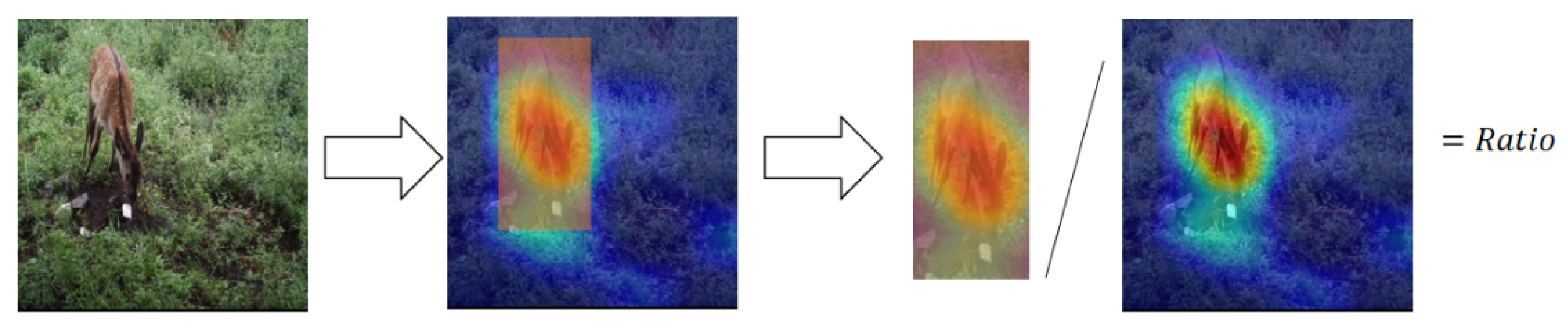

We introduce a novel mixed data augmentation method that combines IS and RBS to mitigate shortcut learning in wildlife recognition.

- (2)

We propose an effective model compression strategy based on GA-ABN for adaptively reducing the redundant parameters of a large wildlife recognition model while maintaining accuracy.

5. Conclusions

This study proposed a lightweight wildlife recognition model design method that combines a mixed data augmentation strategy, as well as a model compression method with integrated pruning and knowledge distillation.

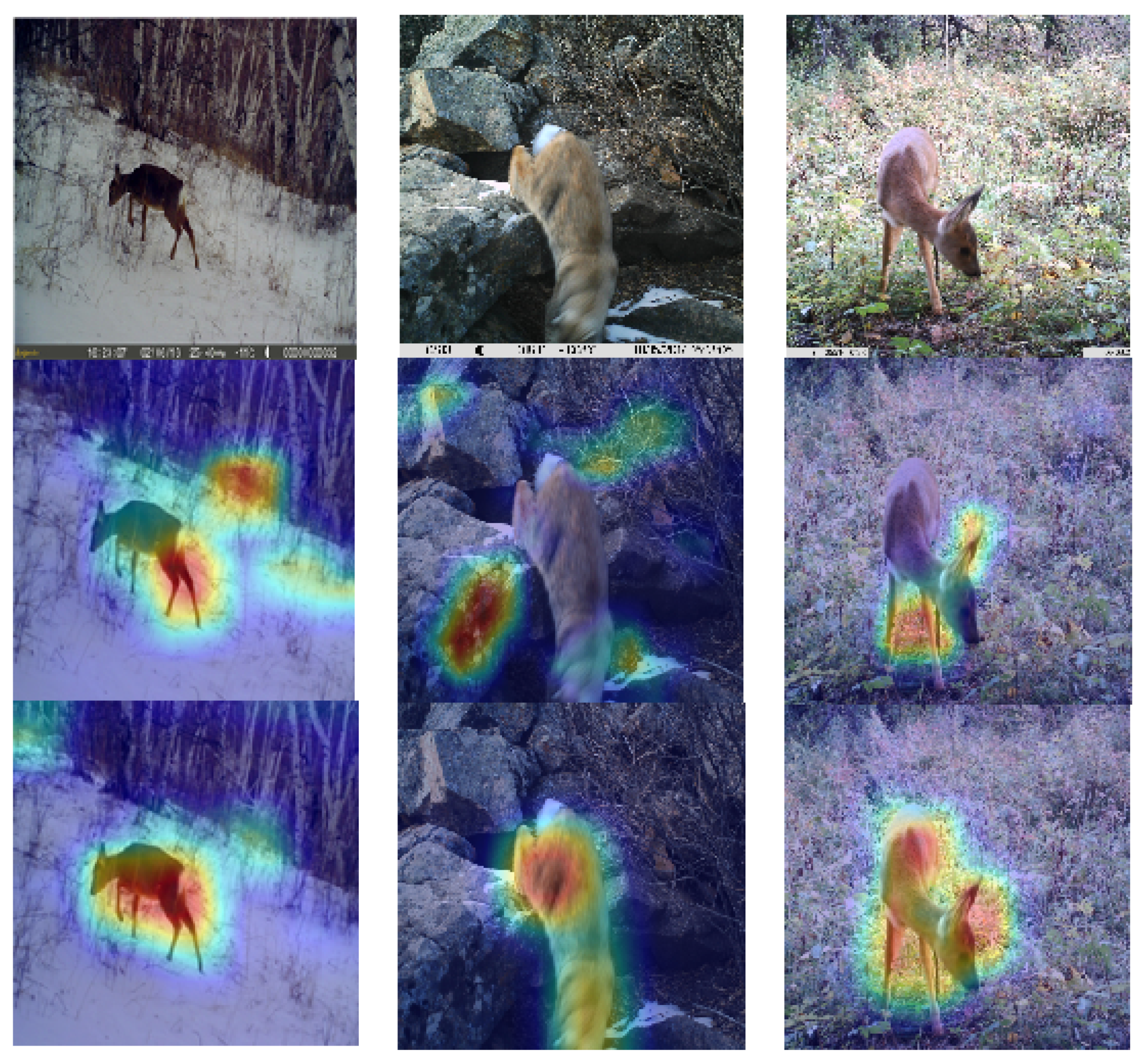

The mixed data augmentation method, combined with IS and RBS, was introduced to modify the data distribution of the dataset, guiding the model to focus more on the wildlife feature. The and FRoH were both improved after using the mixed data augmentation method, demonstrating that shortcut learning was mitigated.

Furthermore, the lightweight model was constructed using an integrated method that first employed the structural pruning technique based on GA-ABN, then fine-tuned the pruned model with KD-MSE. Using GA-ABN pruning and a compression rate of 50 ± 5%, the number of model parameters reduced by 57.4%, FLOPs decreased by 46.1%, FPS improved to 62.9 times, and accuracy decreased by just 4.73%. After fine-tuning with KD-MSE, the accuracy of the model improved by 2.12%.

Overall, this study provides a novel method for developing lightweight wildlife recognition models, which can result in lightweight models with relatively high accuracy and significantly decreased computing cost. Our method has important practical implications for utilizing deep learning models for wildlife monitoring on edge intelligent devices. This can help to support long-term wildlife monitoring, biodiversity resource assessments, and ecological conservation.

In the future, we intend to deploy our lightweight model to camera traps and augment them with wireless communication capabilities to create a real-time wildlife monitoring system. The performance of proposed system will be evaluated in the wild.