Detection of Pig Movement and Aggression Using Deep Learning Approaches

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

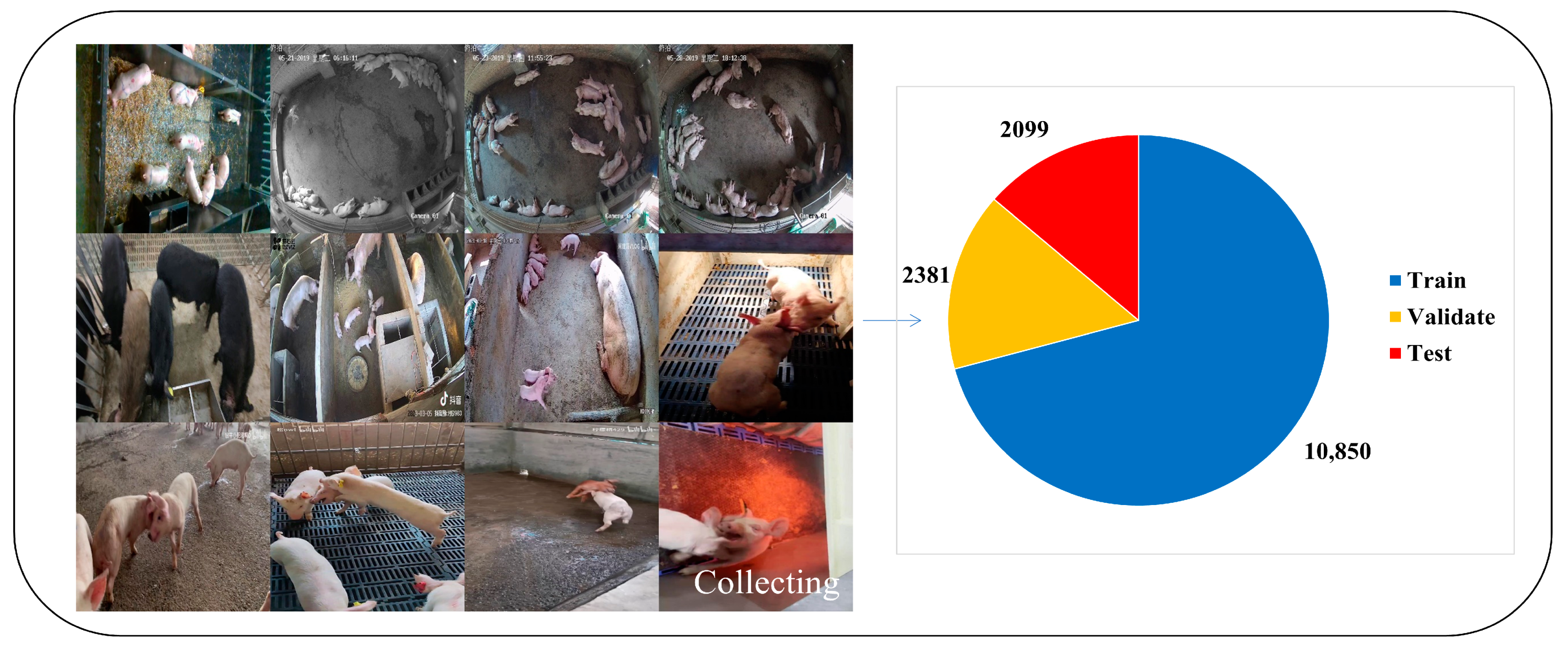

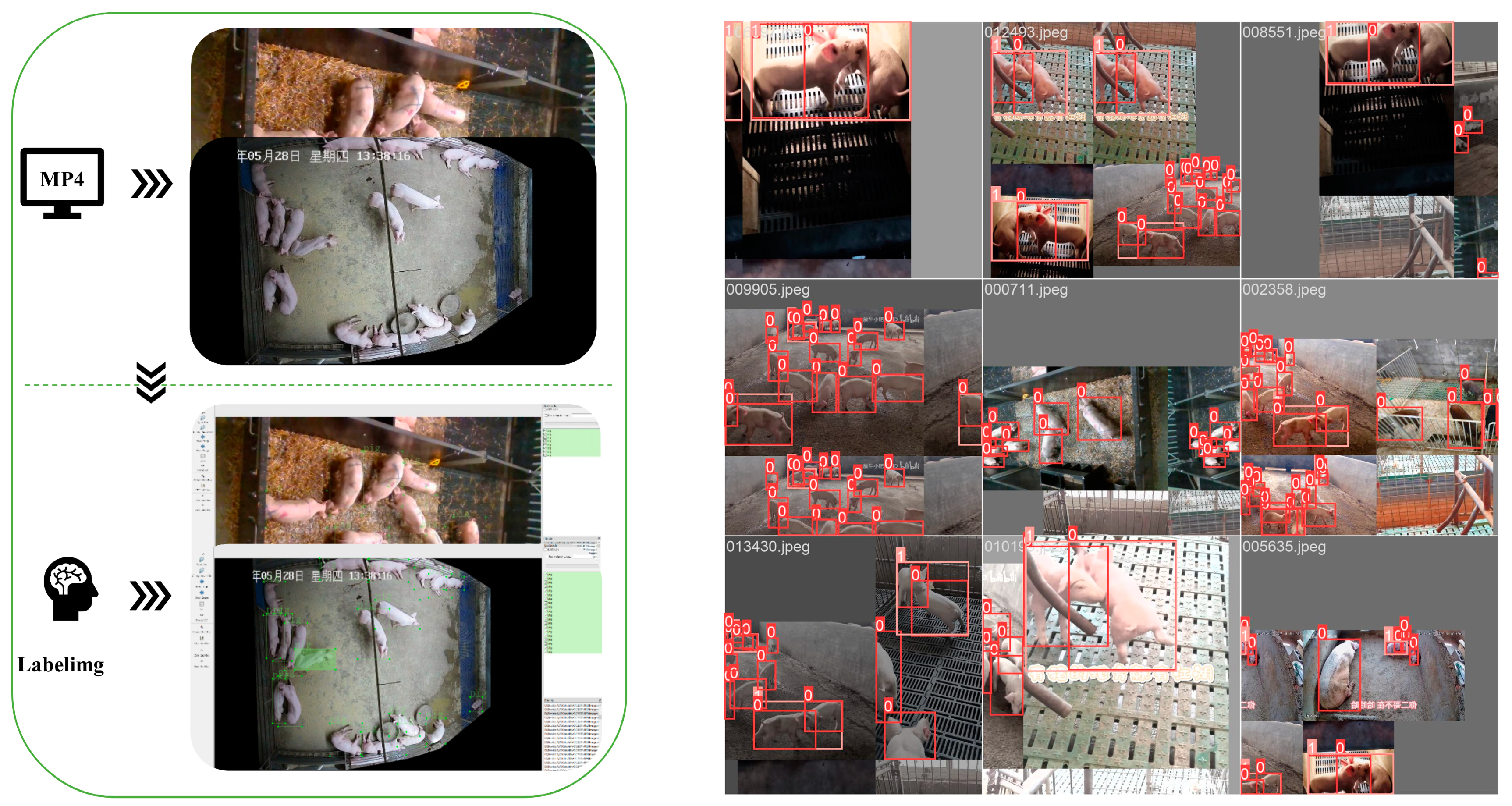

2.1. Data Acquisition and Pre-Processing Methods

2.2. Training for Individual Identification of Pigs

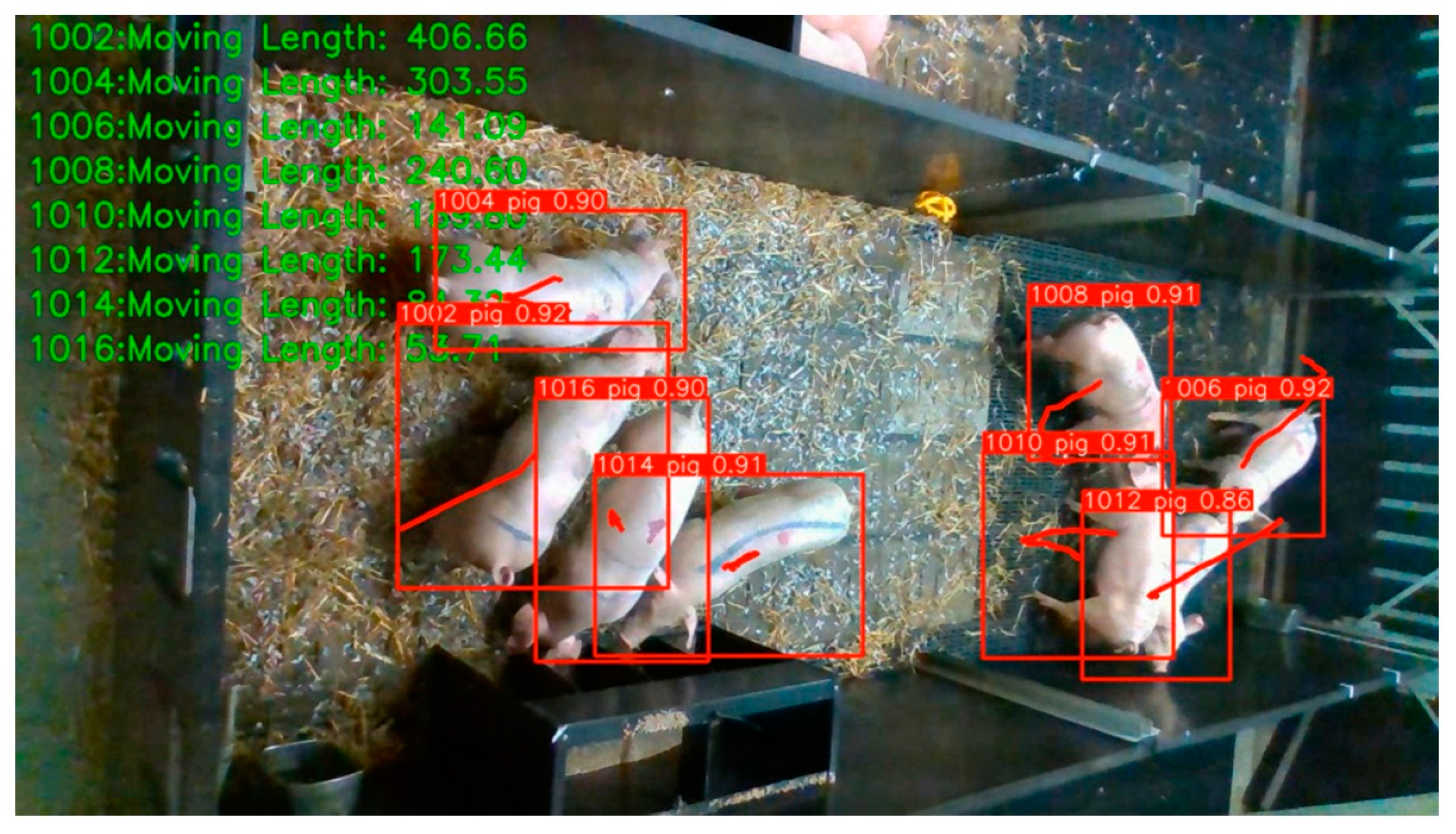

2.3. Tracking of Pig Motion

- Split the detection boxes into high-scoring and low-scoring boxes according to their detection scores.

- In the first association, match the high-scoring boxes to the existing tracks.

- In the second association, match the low-scoring boxes to the tracks left unmatched after the first association (e.g., objects whose score dropped because they were heavily occluded in the current frame).

- Create a new track for each box that matches no track but has a sufficiently high score. Tracks that match no box are kept for 30 frames so that they can be matched again when the object reappears.
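The association steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation or the ByteTrack reference code: the names `TwoStageTracker`, `greedy_match`, and `Track` are hypothetical, the thresholds (0.5, 0.1, 0.3) are placeholder values, and a simple greedy IoU match stands in for the Kalman-filter prediction and Hungarian assignment that ByteTrack uses. Only the 30-frame retention comes from the text.

```python
from dataclasses import dataclass


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: tuple
    missed: int = 0  # consecutive frames without a matching box


def greedy_match(tracks, dets, min_iou):
    """Pair tracks with (box, score) detections greedily by descending IoU."""
    pairs = sorted(
        ((iou(t.box, d[0]), ti, di)
         for ti, t in enumerate(tracks) for di, d in enumerate(dets)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, ti, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap even less
        if ti in used_t or di in used_d:
            continue
        used_t.add(ti)
        used_d.add(di)
        matches.append((ti, di))
    return matches, used_t, used_d


class TwoStageTracker:
    # All thresholds are illustrative assumptions except max_missed=30.
    def __init__(self, high=0.5, low=0.1, min_iou=0.3, max_missed=30):
        self.high, self.low = high, low
        self.min_iou, self.max_missed = min_iou, max_missed
        self.tracks = []
        self.next_id = 1

    def update(self, detections):
        """detections: list of (box, score). Returns {track_id: box} for tracks seen in this frame."""
        # Step 1: split boxes by detection score.
        high = [d for d in detections if d[1] >= self.high]
        low = [d for d in detections if self.low <= d[1] < self.high]

        # Step 2: first association, high-scoring boxes against all tracks.
        m1, used_t, used_d = greedy_match(self.tracks, high, self.min_iou)
        for ti, di in m1:
            self.tracks[ti].box, self.tracks[ti].missed = high[di][0], 0

        # Step 3: second association, low-scoring boxes against leftover tracks.
        rest = [t for i, t in enumerate(self.tracks) if i not in used_t]
        m2, used_r, _ = greedy_match(rest, low, self.min_iou)
        for ri, di in m2:
            rest[ri].box, rest[ri].missed = low[di][0], 0

        # Step 4: age unmatched tracks and drop those missing for too long.
        for ri, t in enumerate(rest):
            if ri not in used_r:
                t.missed += 1
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]

        # Unmatched high-scoring boxes start new tracks; low-scoring ones never do.
        for di, (box, _score) in enumerate(high):
            if di not in used_d:
                self.tracks.append(Track(self.next_id, box))
                self.next_id += 1

        return {t.track_id: t.box for t in self.tracks if t.missed == 0}


tracker = TwoStageTracker()
print(tracker.update([((0, 0, 10, 10), 0.9)]))  # confident detection starts a track
print(tracker.update([((1, 0, 11, 10), 0.3)]))  # occluded pig: low score, same track ID kept
```

The second call shows why the low-scoring boxes matter: a pig whose score drops under occlusion keeps its identity instead of losing its track, which is what makes continuous movement trajectories possible.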

2.4. Detection and Tracking of Aggressive Behavior and Movement Trajectories in Pigs

2.5. Model Training and Test Precision Evaluation

3. Results

3.1. Setting of Parameters of the YOLOV8 Model

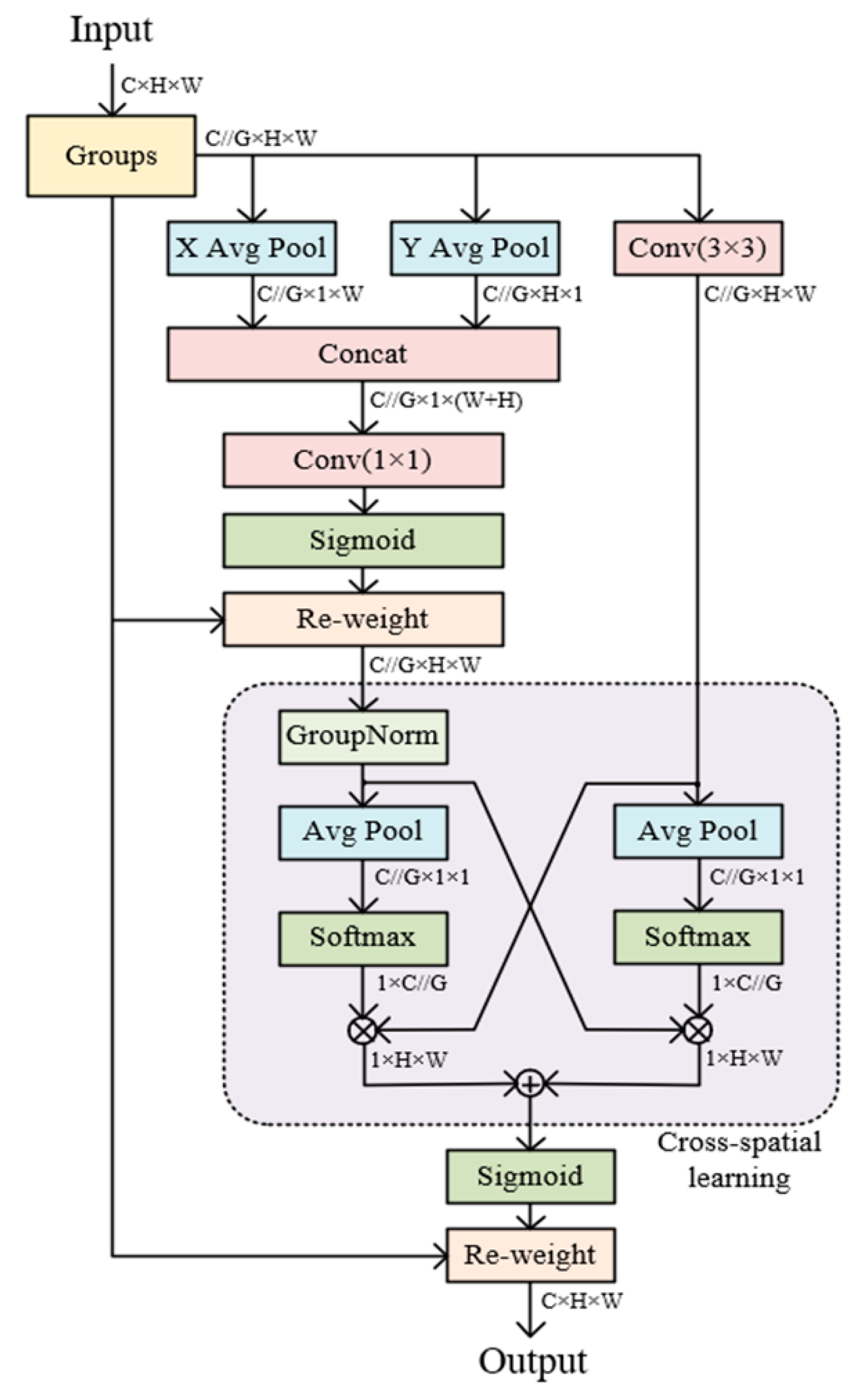

3.2. Improvements in Different Attention Mechanisms in Model Training

3.3. Evaluation of Different Models for Detection of Pig and Aggressive Behavior

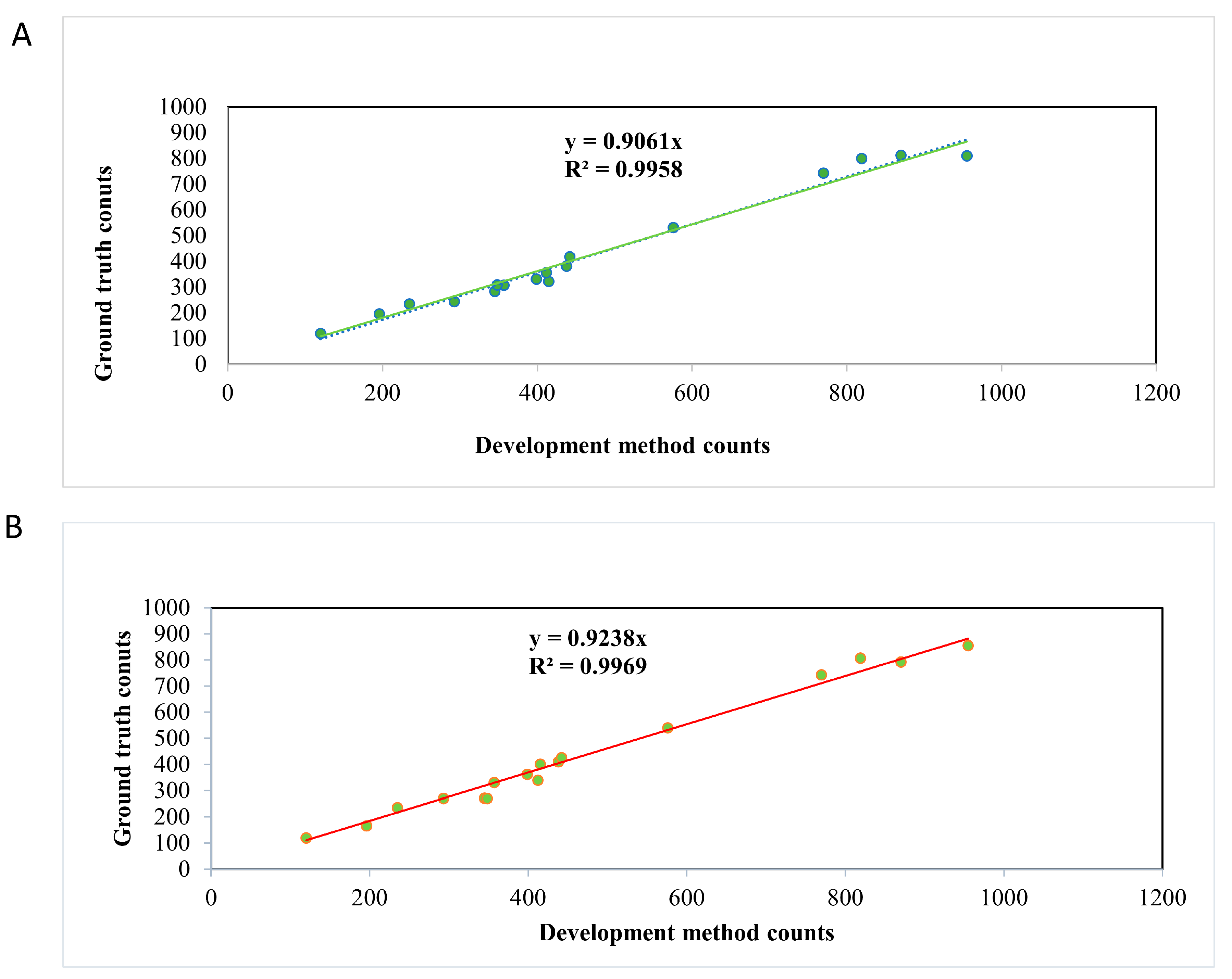

3.4. Evaluation of Model Generalization Capability

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Models | Class | mAP@0.5 (%) | Parameters | FLOPs (G) |
|---|---|---|---|---|
| YOLOV8n | pig | 95.6 | 3.01 M | 8.1 |
| | pig_fighting | 95.9 | | |
| YOLOV8s | pig | 96.2 | 11.13 M | 28.4 |
| | pig_fighting | 95.9 | | |
| YOLOV8m | pig | 96.2 | 25.84 M | 78.7 |
| | pig_fighting | 96.6 | | |

| Models | Class | mAP@0.5 (%) | Parameters | FLOPs (G) |
|---|---|---|---|---|
| YOLOV8n | pig | 95.6 | 3.01 M | 8.1 |
| | pig_fighting | 95.9 | | |
| SE-YOLOV8n | pig | 95.8 | 3.01 M | 8.1 |
| | pig_fighting | 95.8 | | |
| CBAM-YOLOV8n | pig | 96.0 | 3.01 M | 8.1 |
| | pig_fighting | 95.9 | | |
| EMA-YOLOV8n | pig | 96.1 | 3.02 M | 8.1 |
| | pig_fighting | 96.6 | | |
| GAM-YOLOV8n | pig | 96.1 | 4.65 M | 12.5 |
| | pig_fighting | 96.2 | | |

| Models | Class | mAP@0.5 (%) | Parameters | FLOPs (G) |
|---|---|---|---|---|
| SSD | pig | 94.1 | 23.88 M | 343.3 |
| | pig_fighting | 96.4 | | |
| Faster R-CNN | pig | 52.4 | 41.13 M | 193.78 |
| | pig_fighting | 46.5 | | |
| YOLOV5n | pig | 95.9 | 1.76 M | 4.1 |
| | pig_fighting | 94.9 | | |
| EMA-YOLOV8n | pig | 96.1 | 3.02 M | 8.1 |
| | pig_fighting | 96.6 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, J.; Tang, X.; Liu, J.; Zhang, Z. Detection of Pig Movement and Aggression Using Deep Learning Approaches. Animals 2023, 13, 3074. https://doi.org/10.3390/ani13193074
