Automatic Detection of Group Recumbency in Pigs via AI-Supported Camera Systems

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Animals

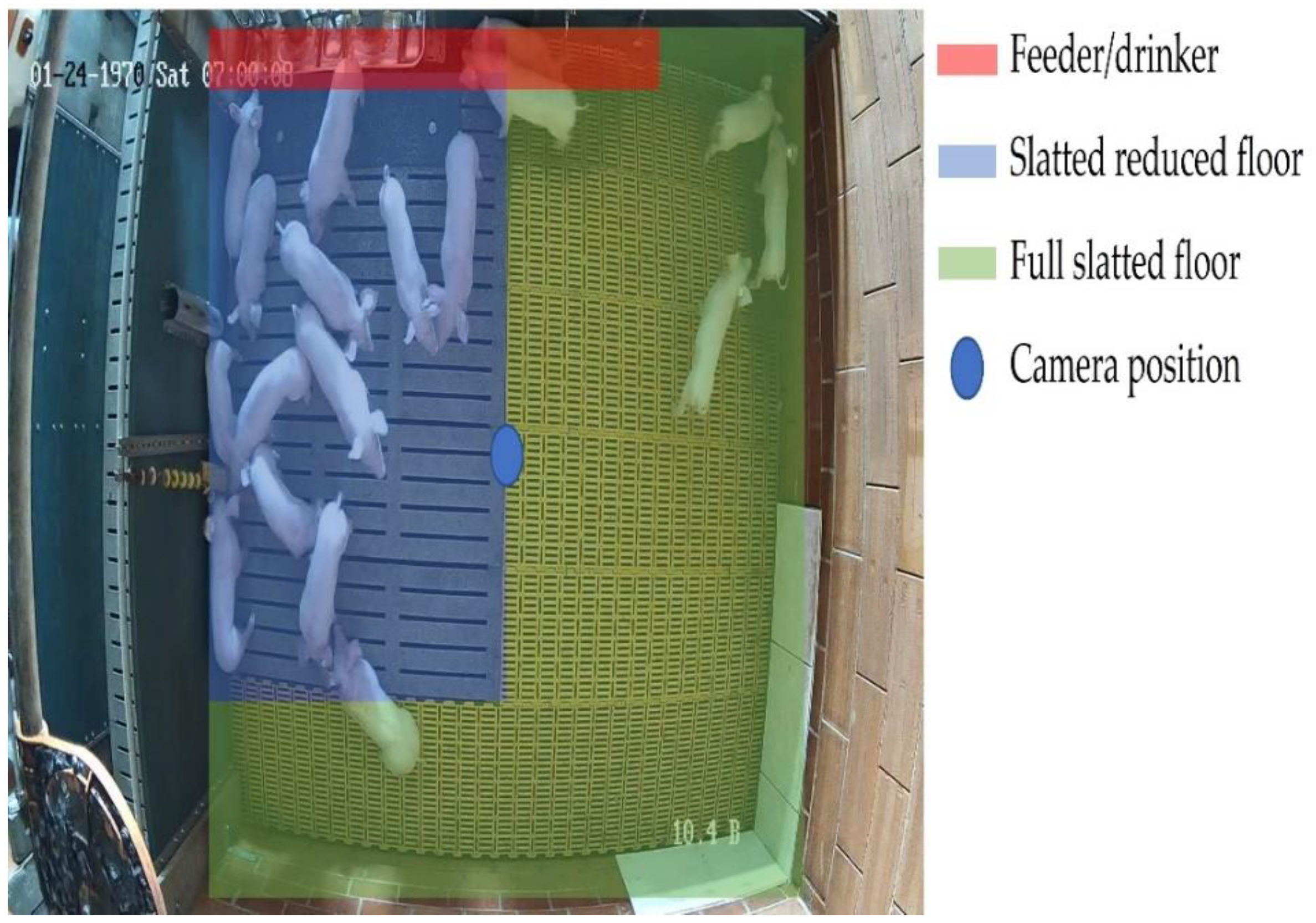

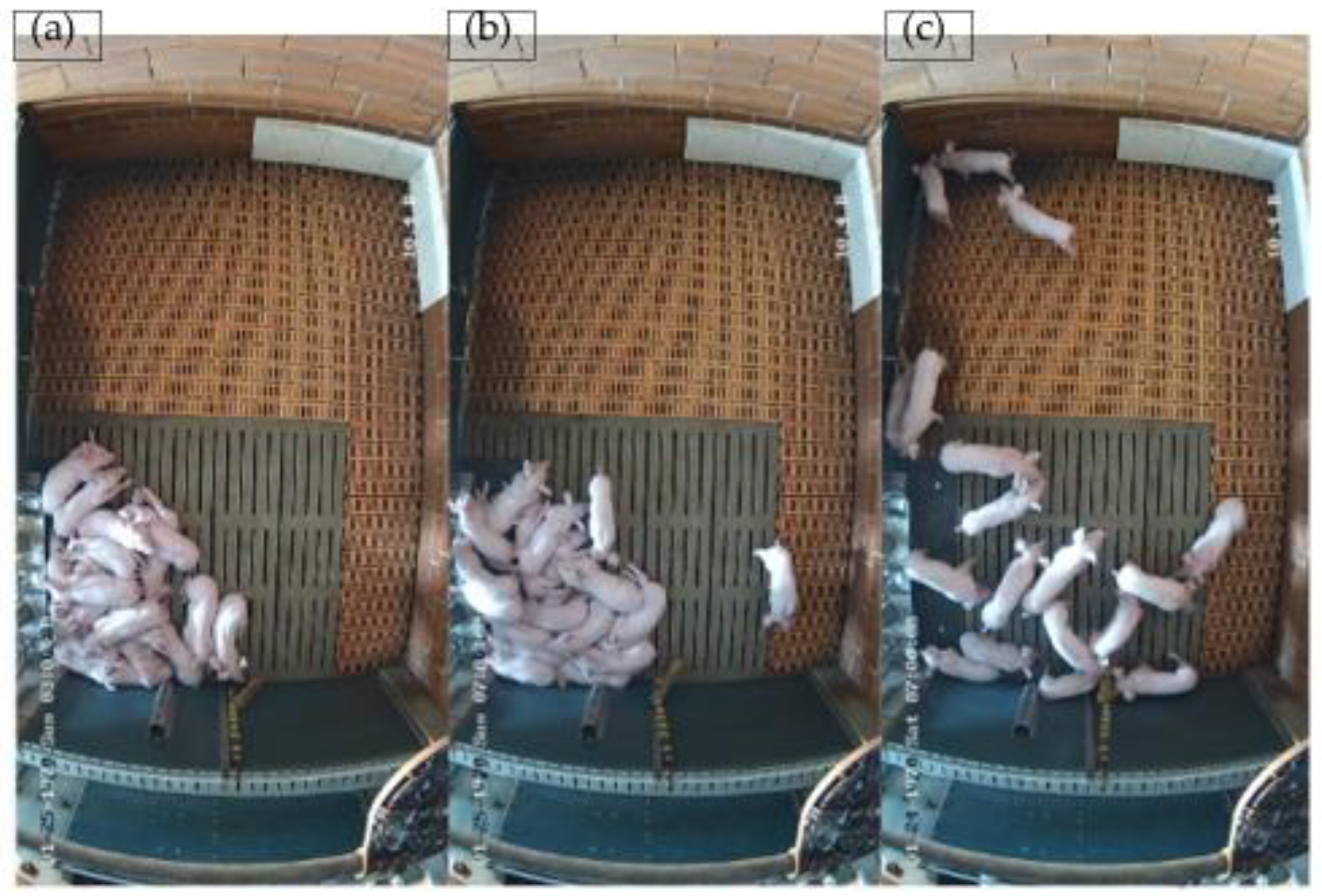

2.2. Experimental Design

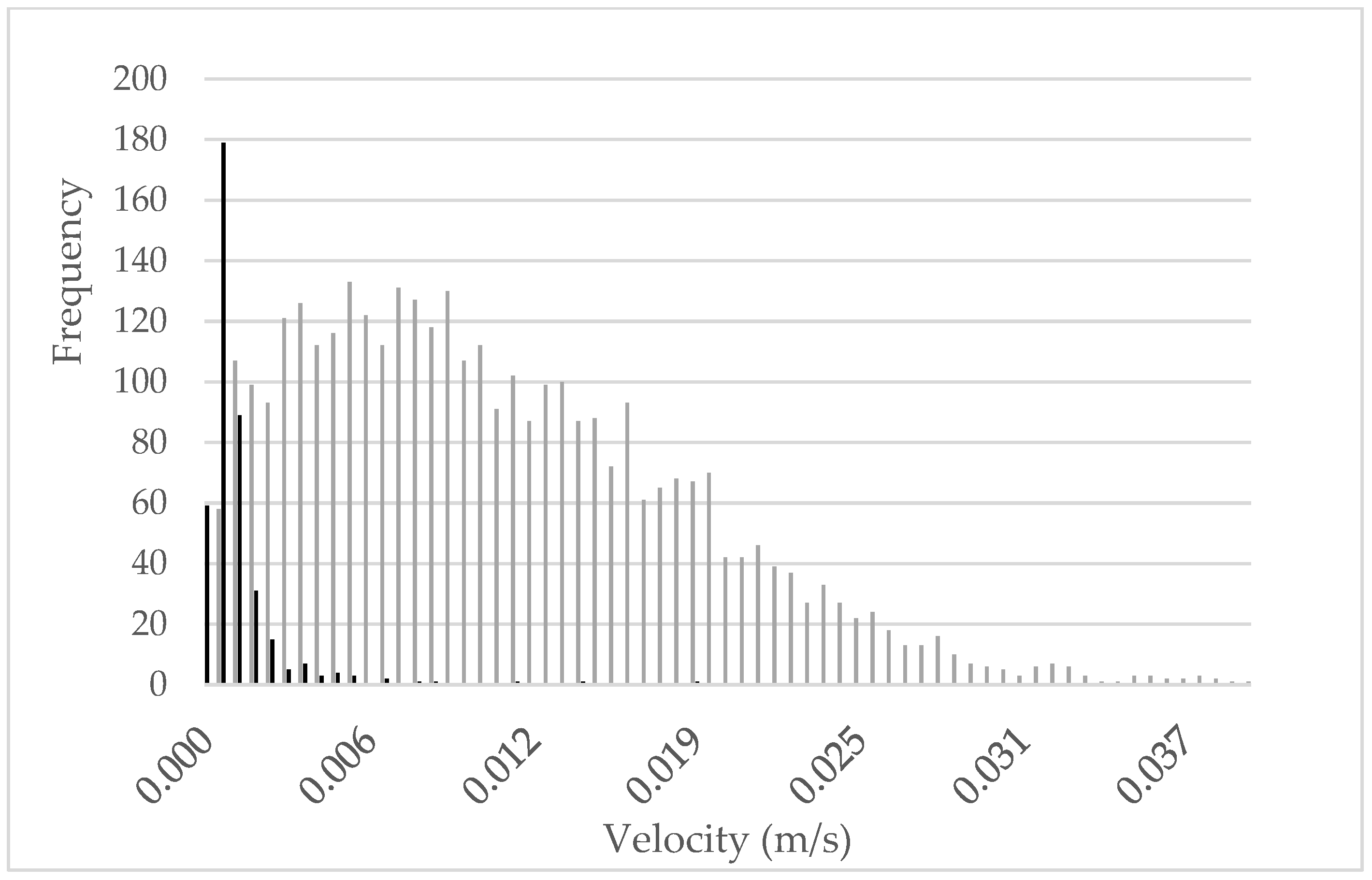

2.3. Measurements

2.4. Statistical Analyses

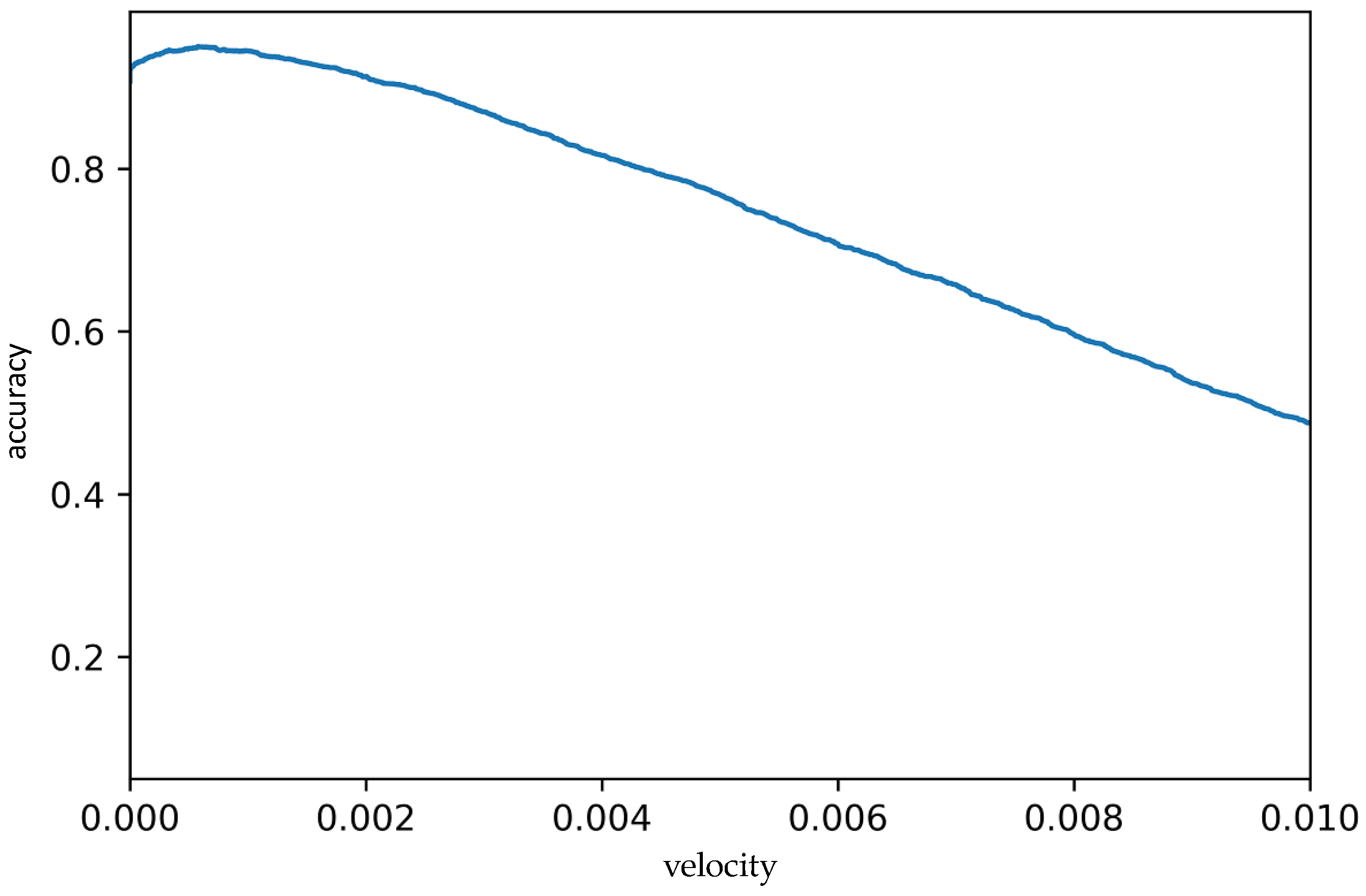

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Behavior | Code | Behavior Description |
|---|---|---|
| Recumbent | 0 | Every animal in the group is recumbent (lying in a sternal or lateral position). |
| Standing | 1 | At least one animal in the group is not recumbent (i.e., an animal is standing or sitting). |
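The group-level coding in the table reduces to a single rule: code 0 only if every animal is lying (sternal or lateral), otherwise code 1. The sketch below applies that rule to a list of per-animal postures. It assumes a hypothetical upstream detector that returns one posture per animal; the `Posture` names and the `group_code` function are illustrative and are not part of the described camera system.

```python
from enum import Enum
from typing import Iterable


class Posture(Enum):
    """Per-animal posture classes (hypothetical detector output)."""
    LYING_STERNAL = "lying_sternal"
    LYING_LATERAL = "lying_lateral"
    STANDING = "standing"
    SITTING = "sitting"


# Sternal and lateral lying both count as recumbent.
RECUMBENT = {Posture.LYING_STERNAL, Posture.LYING_LATERAL}


def group_code(postures: Iterable[Posture]) -> int:
    """Return 0 if every animal in the group is recumbent, 1 if at least one is not."""
    postures = list(postures)
    if not postures:
        # all() is True for an empty list, which would wrongly yield code 0.
        raise ValueError("no animals detected in the image")
    return 0 if all(p in RECUMBENT for p in postures) else 1
```

For example, `group_code([Posture.LYING_LATERAL, Posture.LYING_STERNAL])` returns 0, while a single sitting animal in the list makes the result 1. Rejecting an empty detection list is a design choice of this sketch: an image with no detected animals is treated as an error rather than as "all recumbent".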

| Performance Criteria for the Threshold Method | Calculation |
|---|---|
| Sensitivity (%) | $\frac{TP}{TP + FN} \times 100$ |
| Specificity (%) | $\frac{TN}{TN + FP} \times 100$ |
| Accuracy (%) | $\frac{TP + TN}{TP + TN + FP + FN} \times 100$ |

TP = true positive (standing position labeled as standing position); FP = false positive (recumbent position labeled as standing position); TN = true negative (recumbent position labeled as recumbent position); FN = false negative (standing position labeled as recumbent position).
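The three criteria follow directly from the four confusion counts, with "standing" (code 1) as the positive class. The sketch below computes them as percentages; the counts in the usage line are hypothetical and are not taken from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Confusion counts with 'standing' (code 1) as the positive class."""
    tp: int  # standing position labeled as standing position
    fp: int  # recumbent position labeled as standing position
    tn: int  # recumbent position labeled as recumbent position
    fn: int  # standing position labeled as recumbent position


def _percent(numerator: int, denominator: int) -> float:
    if denominator == 0:
        raise ValueError("metric undefined: denominator is zero")
    return 100.0 * numerator / denominator


def sensitivity(c: ConfusionCounts) -> float:
    """Share of standing images correctly labeled as standing."""
    return _percent(c.tp, c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Share of all-recumbent images correctly labeled as recumbent."""
    return _percent(c.tn, c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    """Share of all images labeled correctly."""
    return _percent(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)


if __name__ == "__main__":
    counts = ConfusionCounts(tp=90, fp=20, tn=30, fn=10)  # hypothetical counts
    print(sensitivity(counts), specificity(counts), accuracy(counts))
```

With these hypothetical counts the script prints `90.0 60.0 80.0`.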

| Pen | Number of Images: Standing (1) | Number of Images: All Recumbent (0) |
|---|---|---|
| 1 | 408 | 179 |
| 2 | 235 | 50 |
| 3 | 371 | 22 |
| 4 | 384 | 68 |
| 5 | 345 | 53 |
| 6 | 341 | 3 |
| 7 | 360 | 15 |
| 8 | 377 | 5 |
| 9 | 380 | 11 |
| 10 | 348 | 5 |
| Total | 3549 | 411 |
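The image counts show a strong class imbalance. The sketch below recomputes the column totals from the per-pen counts in the table and the share of images in which the whole group is recumbent; only the counts come from the table, and the function names are illustrative.

```python
# Image counts per pen, copied from the table: pen -> (standing, all recumbent)
IMAGES_PER_PEN = {
    1: (408, 179), 2: (235, 50), 3: (371, 22), 4: (384, 68), 5: (345, 53),
    6: (341, 3), 7: (360, 15), 8: (377, 5), 9: (380, 11), 10: (348, 5),
}


def class_totals(counts: dict[int, tuple[int, int]]) -> tuple[int, int]:
    """Sum the standing (1) and all-recumbent (0) images over all pens."""
    standing = sum(s for s, _ in counts.values())
    recumbent = sum(r for _, r in counts.values())
    return standing, recumbent


def recumbent_share(standing: int, recumbent: int) -> float:
    """Percentage of images in which the whole group is recumbent."""
    return 100.0 * recumbent / (standing + recumbent)


if __name__ == "__main__":
    for pen, (s, r) in sorted(IMAGES_PER_PEN.items()):
        print(f"Pen {pen:2d}: {recumbent_share(s, r):5.1f}% all recumbent")
    standing, recumbent = class_totals(IMAGES_PER_PEN)
    print(f"Total: {standing} standing, {recumbent} all recumbent "
          f"({recumbent_share(standing, recumbent):.1f}%)")
```

The totals reproduce the table (3549 and 411), and the all-recumbent class makes up about 10.4% of the 3960 images, ranging from 30.5% in pen 1 to 0.9% in pen 6. Because accuracy is a prevalence-weighted average of sensitivity and specificity, an imbalance of this size means accuracy largely tracks sensitivity.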

| Results of the Applied Optimal Threshold | Value |
|---|---|
| Sensitivity (%) | 97.8 |
| Specificity (%) | 60.8 |
| Accuracy (%) | 94.1 |
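How the optimal threshold was selected is not stated in this section. As an illustration only, the sketch below assumes that each image receives a hypothetical numeric score, that a score at or above the threshold is labeled "standing" (1), and that the threshold is chosen to maximize accuracy over the observed scores. The scoring, the selection criterion, and the function names are all assumptions, not the authors' procedure.

```python
from typing import Sequence


def confusion_at(scores: Sequence[float], labels: Sequence[int],
                 threshold: float) -> tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn), predicting standing (1) when score >= threshold."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1:  # label 0: recumbent labeled as standing
            fp += 1
        elif label == 0:
            tn += 1
        else:  # predicted 0, label 1: standing labeled as recumbent
            fn += 1
    return tp, fp, tn, fn


def best_threshold(scores: Sequence[float],
                   labels: Sequence[int]) -> tuple[float, float]:
    """Try each observed score as a cut-off and return (threshold, accuracy %).

    Assumption: accuracy is the selection criterion; ties keep the lowest threshold.
    """
    if not scores or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and of equal length")
    best_t, best_acc = None, -1.0
    for t in sorted(set(scores)):
        tp, fp, tn, fn = confusion_at(scores, labels, t)
        acc = 100.0 * (tp + tn) / len(labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc
```

On imbalanced data, an accuracy-maximizing cut-off tends to favor the majority class. Youden's J (sensitivity + specificity − 100) is a common alternative criterion when both classes matter equally.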

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kühnemund, A.; Götz, S.; Recke, G. Automatic Detection of Group Recumbency in Pigs via AI-Supported Camera Systems. Animals 2023, 13, 2205. https://doi.org/10.3390/ani13132205