Study on Poultry Pose Estimation Based on Multi-Parts Detection

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

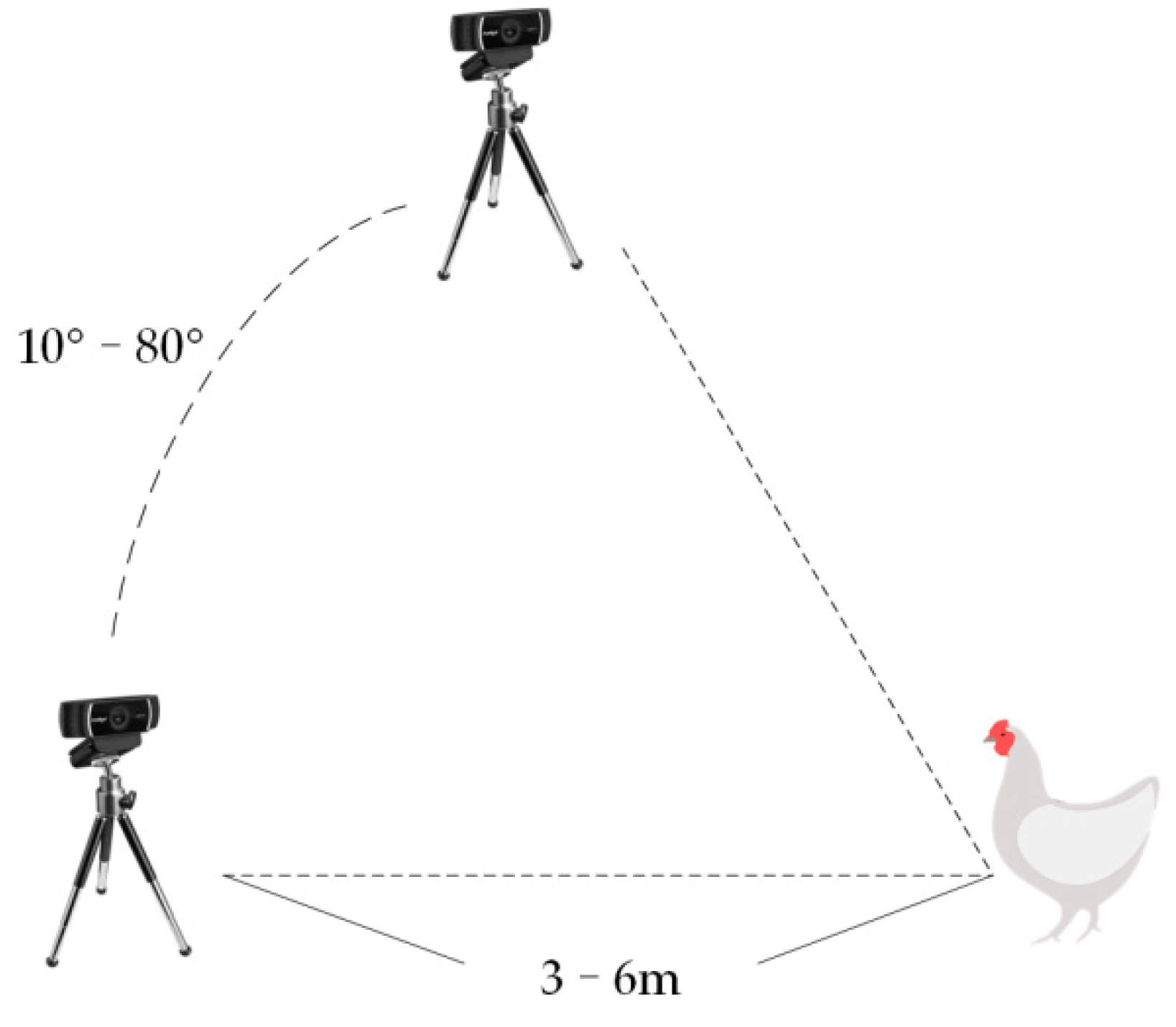

2.1. Experimental Environment

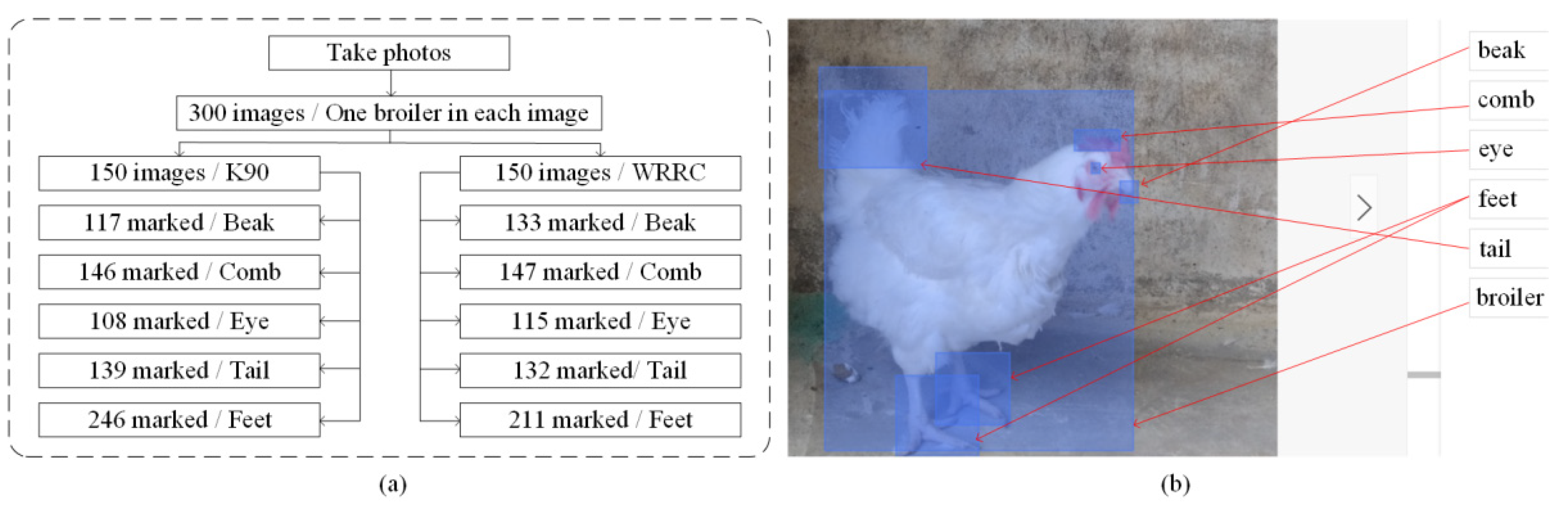

2.2. Data Processing and Labelling

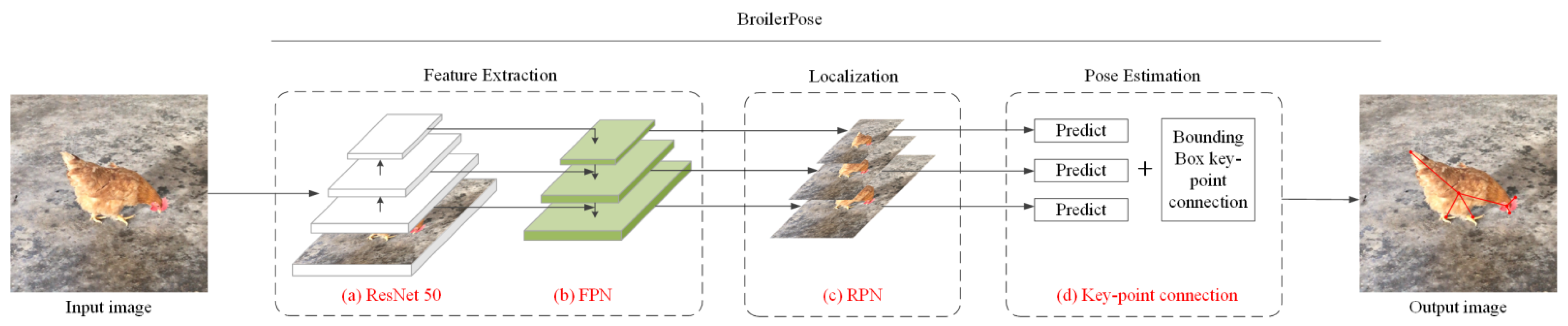

2.3. Algorithm Framework and Implementation Steps

2.4. Algorithm Training

2.5. Evaluation Metrics

3. Results

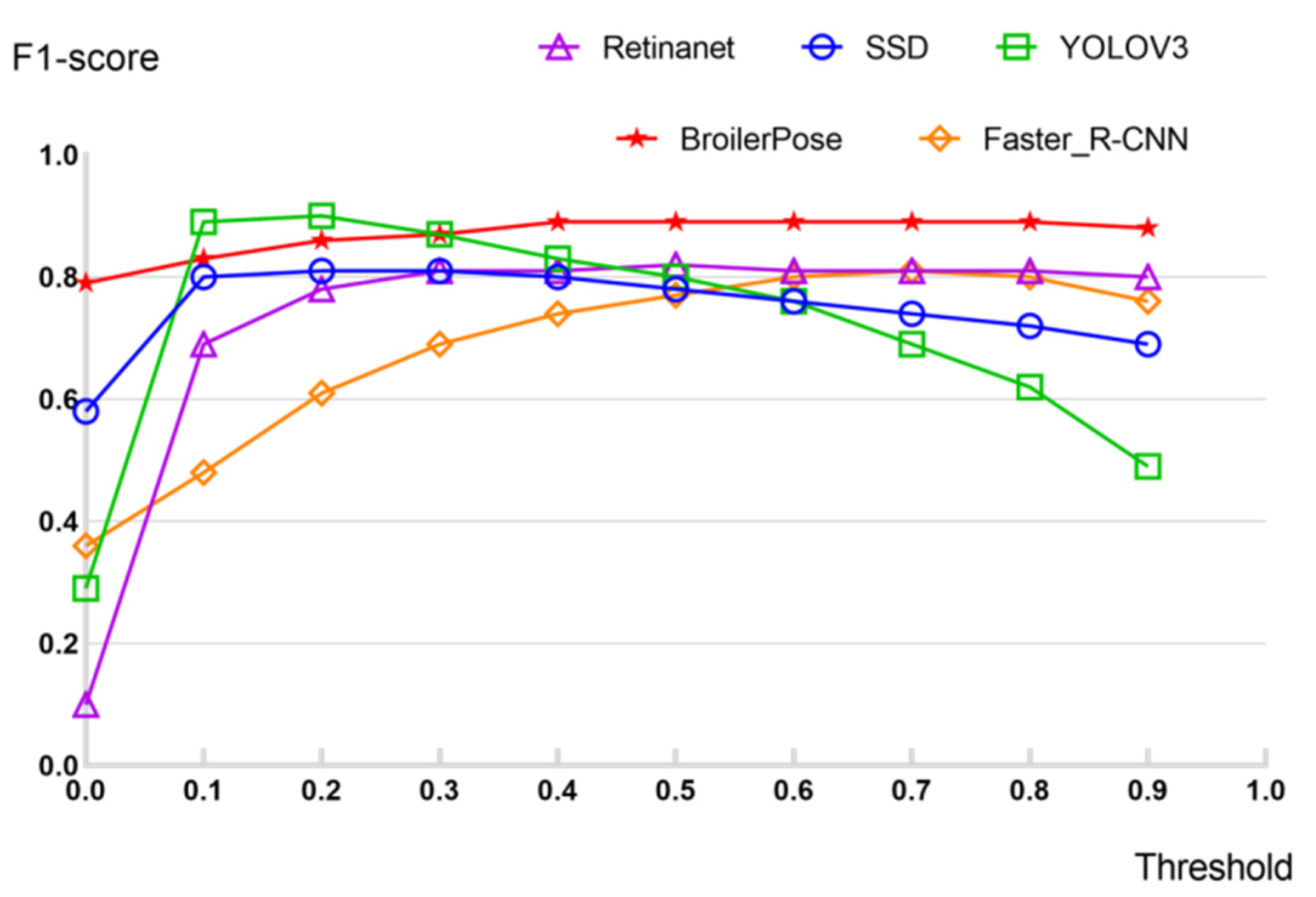

3.1. Effects of Different Detection Methods

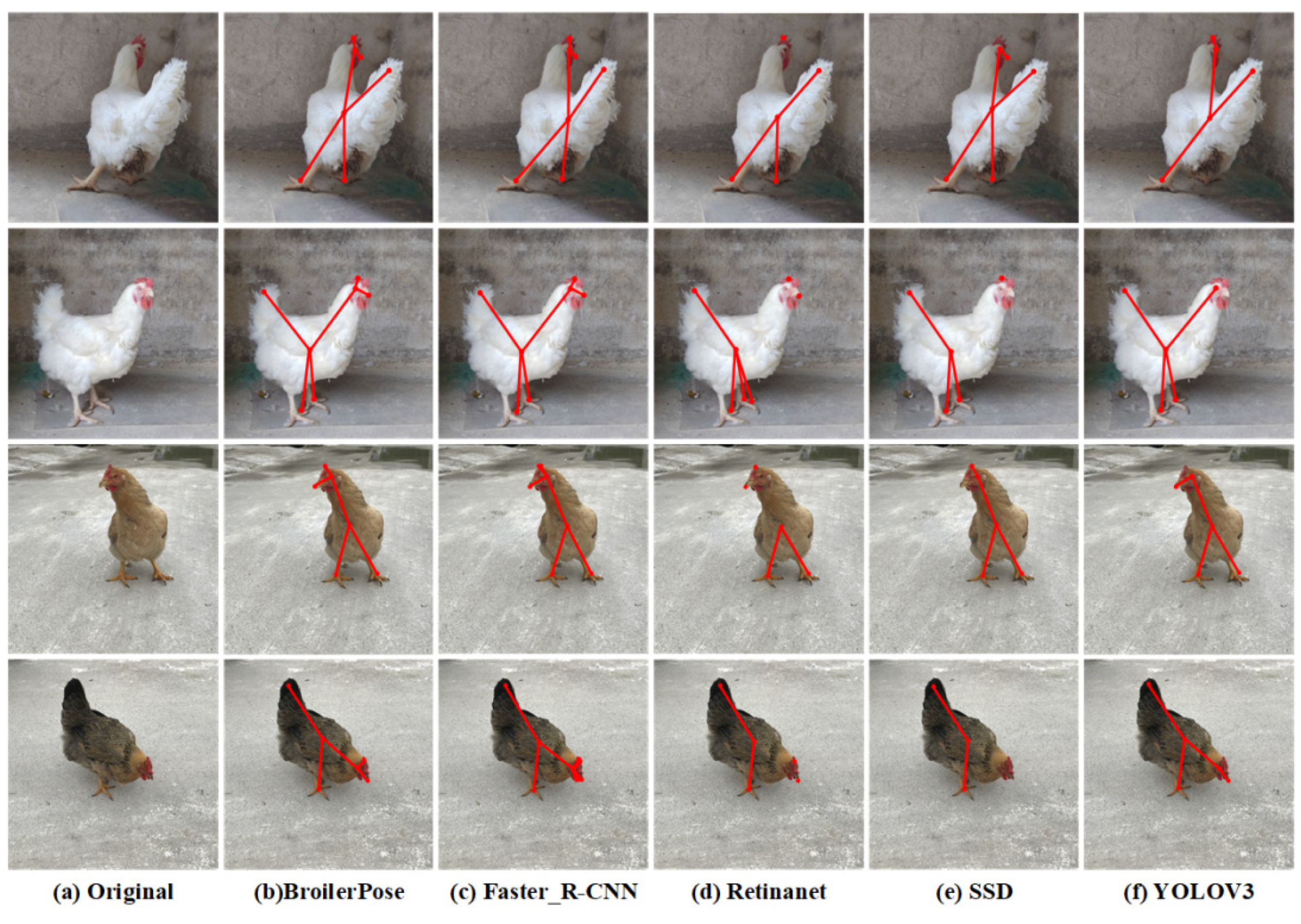

3.2. Comparison of Posture of Different Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Part | Key-Point | Combination |
|---|---|---|
| Broiler | 1 | (K1, K4) |
| Beak | 2 | (K2, K4) |
| Comb | 3 | (K3, K4) |
| Eye_left | 4 | (K4, K4) |
| Eye_right | 5 | (K5, K1) |
| Tail | 6 | (K6, K1) |
| Foot_left | 7 | (K7, K1) |
| Foot_right | 8 | (K8, K1) |
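The part-to-key-point table above can be read as a small skeleton definition: each detected part contributes one key-point, and each combination names a pair of key-points to join. The sketch below encodes the table as listed and builds line segments from part bounding boxes. Taking the key-point as the centre of the bounding box, the `(x1, y1, x2, y2)` box format, and the names `bbox_center` and `build_skeleton` are assumptions made for illustration and are not taken from the table itself.

```python
# Part name -> (key-point index, combination), copied from the table above.
PART_TABLE = {
    "Broiler": (1, (1, 4)),
    "Beak": (2, (2, 4)),
    "Comb": (3, (3, 4)),
    "Eye_left": (4, (4, 4)),
    "Eye_right": (5, (5, 1)),
    "Tail": (6, (6, 1)),
    "Foot_left": (7, (7, 1)),
    "Foot_right": (8, (8, 1)),
}


def bbox_center(box):
    """Centre of an (x1, y1, x2, y2) box. Assumption: key-point = box centre."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def build_skeleton(detections):
    """Turn part detections into key-points and skeleton segments.

    detections: dict mapping a part name to its (x1, y1, x2, y2) box.
    Returns (keypoints, segments), where keypoints maps the key-point
    index to an (x, y) position and segments is a list of point pairs.
    """
    keypoints = {
        PART_TABLE[part][0]: bbox_center(box)
        for part, box in detections.items()
        if part in PART_TABLE
    }
    segments = []
    for _, (a, b) in PART_TABLE.values():
        # Skip pairs with a missing end point and degenerate pairs such as (K4, K4).
        if a != b and a in keypoints and b in keypoints:
            segments.append((keypoints[a], keypoints[b]))
    return keypoints, segments


if __name__ == "__main__":
    # Hypothetical detections for one bird, in pixel coordinates.
    example = {
        "Broiler": (100, 100, 300, 260),
        "Eye_left": (120, 110, 130, 120),
        "Tail": (280, 150, 300, 190),
    }
    kps, segs = build_skeleton(example)
    print(kps)
    print(segs)
```

Parts that are not detected in a frame simply produce no key-point, so the segments that depend on them are left out rather than guessed.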

| Bbox | BroilerPose | YOLOV3 | Faster_R-CNN | RetinaNet | SSD |
|---|---|---|---|---|---|
| Broiler | 0.997 | 0.998 | 0.994 | 0.998 | 0.999 ¹ |
| Beak | 0.772 | 0.774 ¹ | 0.65 | 0.641 | 0.563 |
| Comb | 0.837 ¹ | 0.756 | 0.651 | 0.785 | 0.772 |
| Eye | 0.790 ¹ | 0.768 | 0.74 | 0.728 | 0.734 |
| Tail | 0.893 | 0.904 ¹ | 0.873 | 0.891 | 0.901 |
| Feet | 0.902 ¹ | 0.9 | 0.849 | 0.897 | 0.816 |

¹ Highest value in the row.

| Metric | BroilerPose | YOLOV3 | Faster_R-CNN | RetinaNet | SSD |
|---|---|---|---|---|---|
| Precision | 0.919 | 0.933 ¹ | 0.840 | 0.881 | 0.838 |
| Recall | 0.865 ¹ | 0.850 | 0.793 | 0.754 | 0.737 |

¹ Highest value in the row.

| Statistic | Metric | K90 | K90 (Indoor) | K90 (Outdoor) | WRRC | WRRC (Indoor) | WRRC (Outdoor) | All |
|---|---|---|---|---|---|---|---|---|
| Standard deviation | Precision | 0.0096 | 0.0106 | 0.0092 | 0.0147 | 0.0081 | 0.011 | 0.0128 |
| Standard deviation | Recall | 0.0267 | 0.0375 | 0.0183 | 0.0225 | 0.0173 | 0.0226 | 0.0266 |
| Confidence (95%) | Precision | 0.9255 ± 0.0053 | - | - | 0.9181 ± 0.0081 | - | - | 0.9218 ± 0.0048 |
| Confidence (95%) | Recall | 0.8888 ± 0.0148 | - | - | 0.9105 ± 0.0124 | - | - | 0.8996 ± 0.0099 |
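The relationship between the standard deviations and the 95% intervals in the table above can be checked with a short calculation. The sketch below uses a two-sided Student-t interval, half-width = t · s / √n. The sample sizes are not stated in the table: n = 15 for each breed and n = 30 for the pooled column are assumptions, chosen because they reproduce the reported half-widths to within rounding. The function name `ci_half_width` is likewise illustrative.

```python
import math

# Two-sided 95% Student-t critical values from a standard t-table,
# keyed by degrees of freedom (n - 1).
T_CRIT_95 = {14: 2.145, 29: 2.045}


def ci_half_width(std, n):
    """Half-width of a 95% t-interval for a mean, given the sample std and size."""
    return T_CRIT_95[n - 1] * std / math.sqrt(n)


# (label, standard deviation from the table, ASSUMED sample size)
rows = [
    ("K90 precision", 0.0096, 15),
    ("K90 recall", 0.0267, 15),
    ("WRRC precision", 0.0147, 15),
    ("WRRC recall", 0.0225, 15),
    ("All precision", 0.0128, 30),
    ("All recall", 0.0266, 30),
]

if __name__ == "__main__":
    for label, std, n in rows:
        print(f"{label}: +/- {ci_half_width(std, n):.4f}")
```

Under these assumed sample sizes the computed half-widths agree with the tabulated ones to about the fourth decimal place; small differences are expected because the standard deviations are themselves rounded.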

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fang, C.; Zheng, H.; Yang, J.; Deng, H.; Zhang, T. Study on Poultry Pose Estimation Based on Multi-Parts Detection. Animals 2022, 12, 1322. https://doi.org/10.3390/ani12101322