Exploring How White-Faced Sakis Control Digital Visual Enrichment Systems

Simple Summary

Abstract

1. Introduction

- RQ1: What visual enrichment do captive white-faced sakis trigger?

- RQ2: What are the behavioural effects of visual enrichment upon captive white-faced sakis?

2. Materials and Methods

2.1. Participants

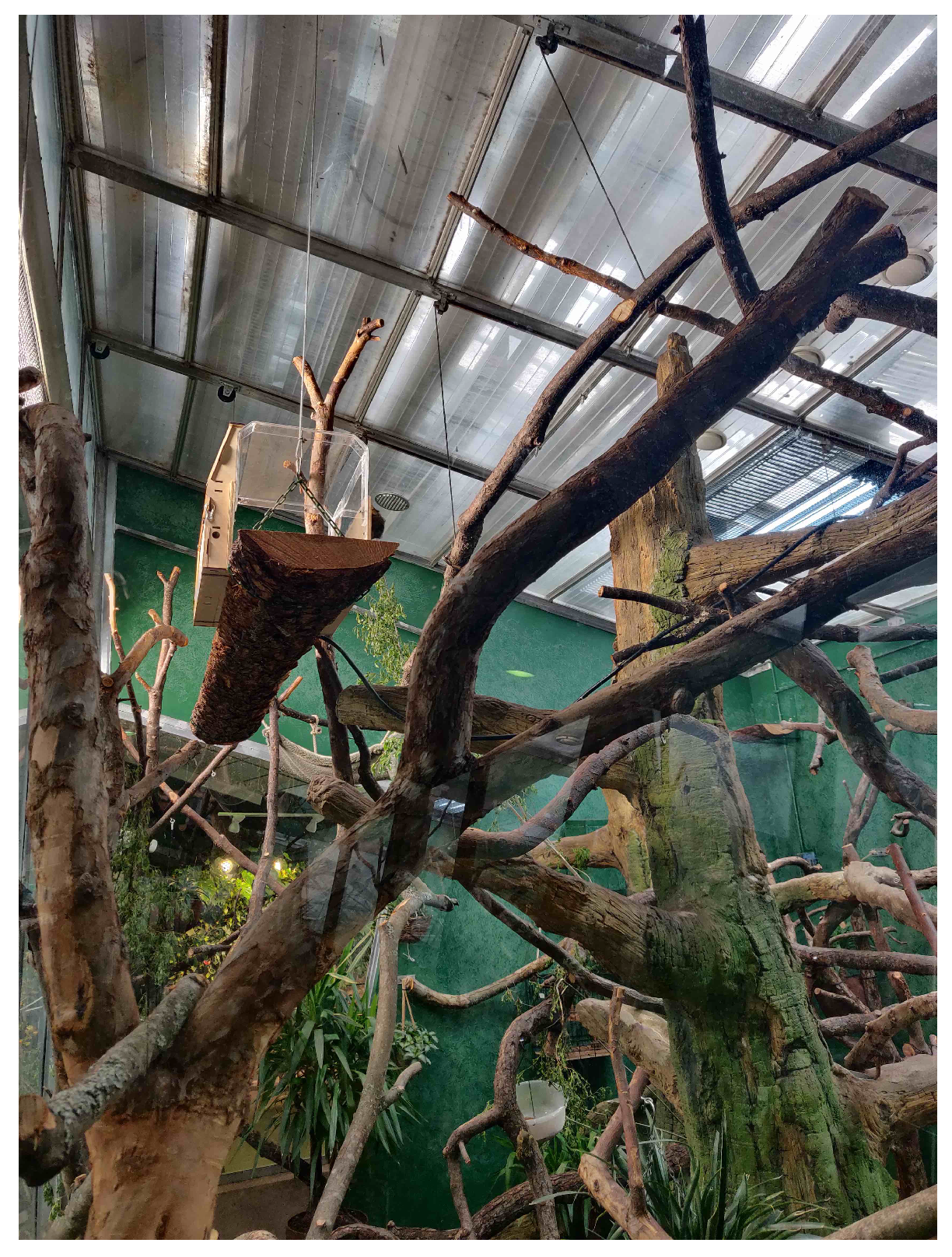

2.2. Design Requirements

2.3. Method

2.4. Hardware and Software

2.5. Visual Stimuli

2.6. Terminology of Interaction and Activation

2.7. Data Analysis

3. Results

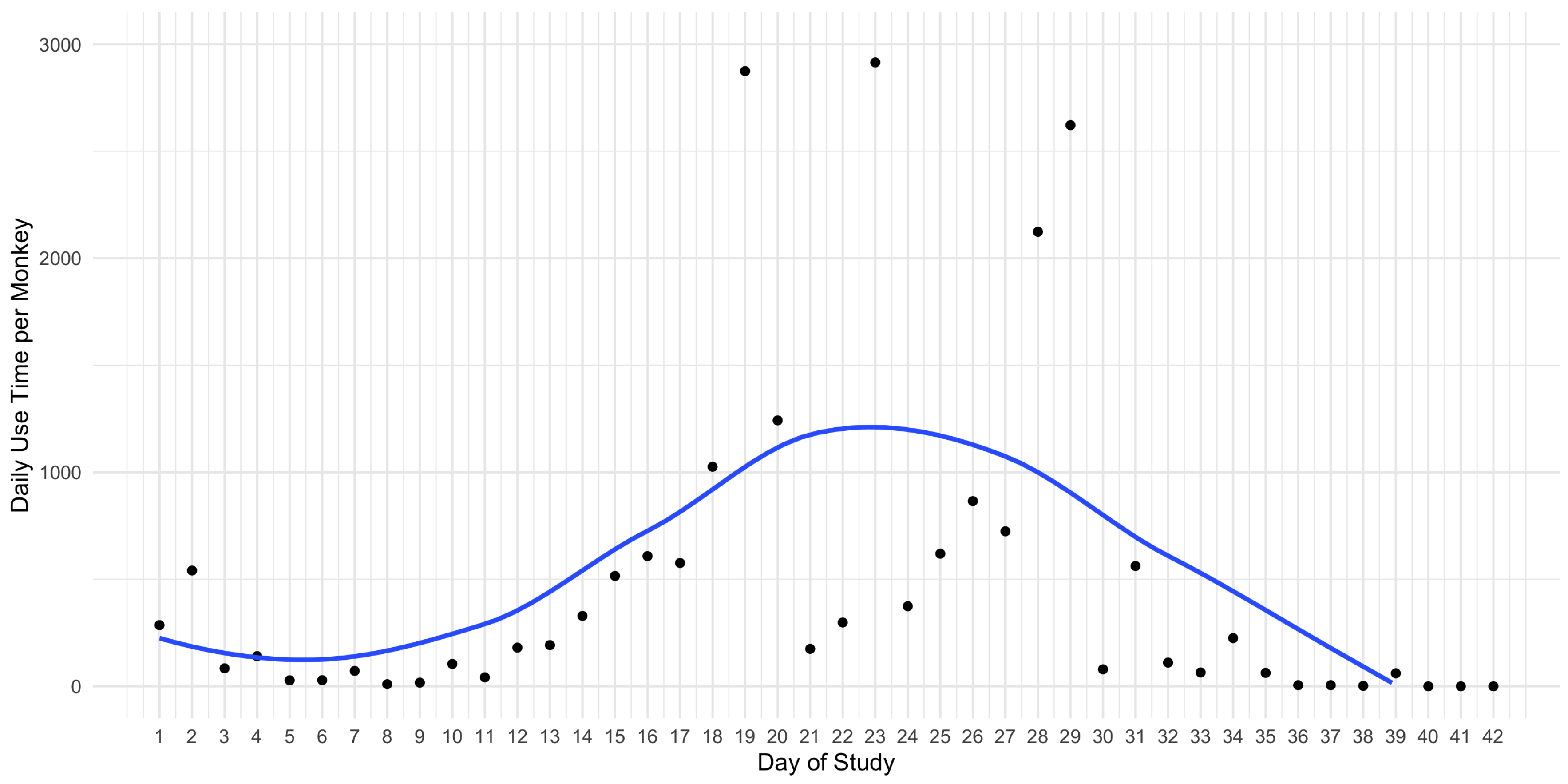

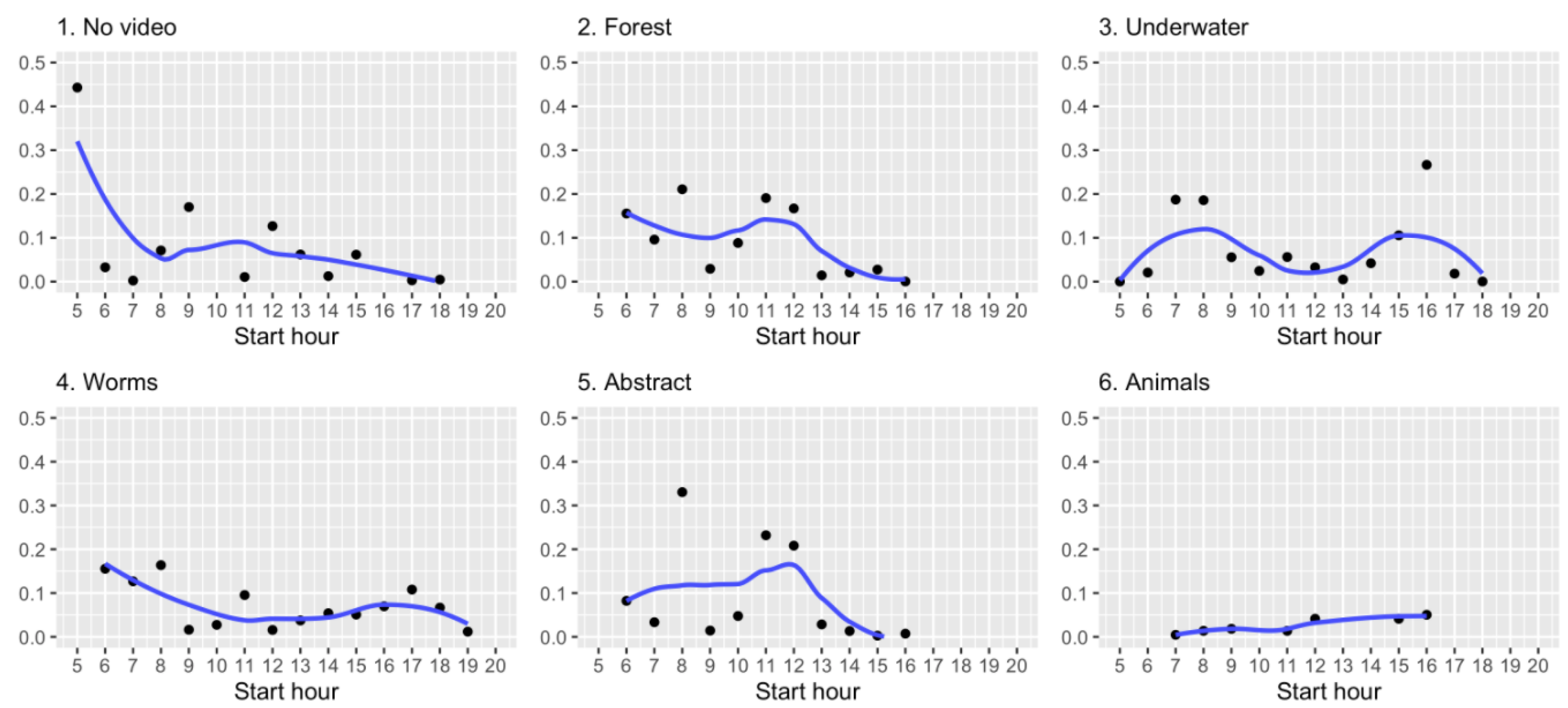

3.1. Sakis’ Interactions with the Visual Enrichment System

3.2. Sakis’ Behaviour with the Visual Enrichment System

4. Discussion

4.1. Sakis’ Interaction with Visual Enrichment Systems

4.2. What We Learned about Sakis’ Behaviours with Screen Enrichment Systems

4.3. Future Work and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

References


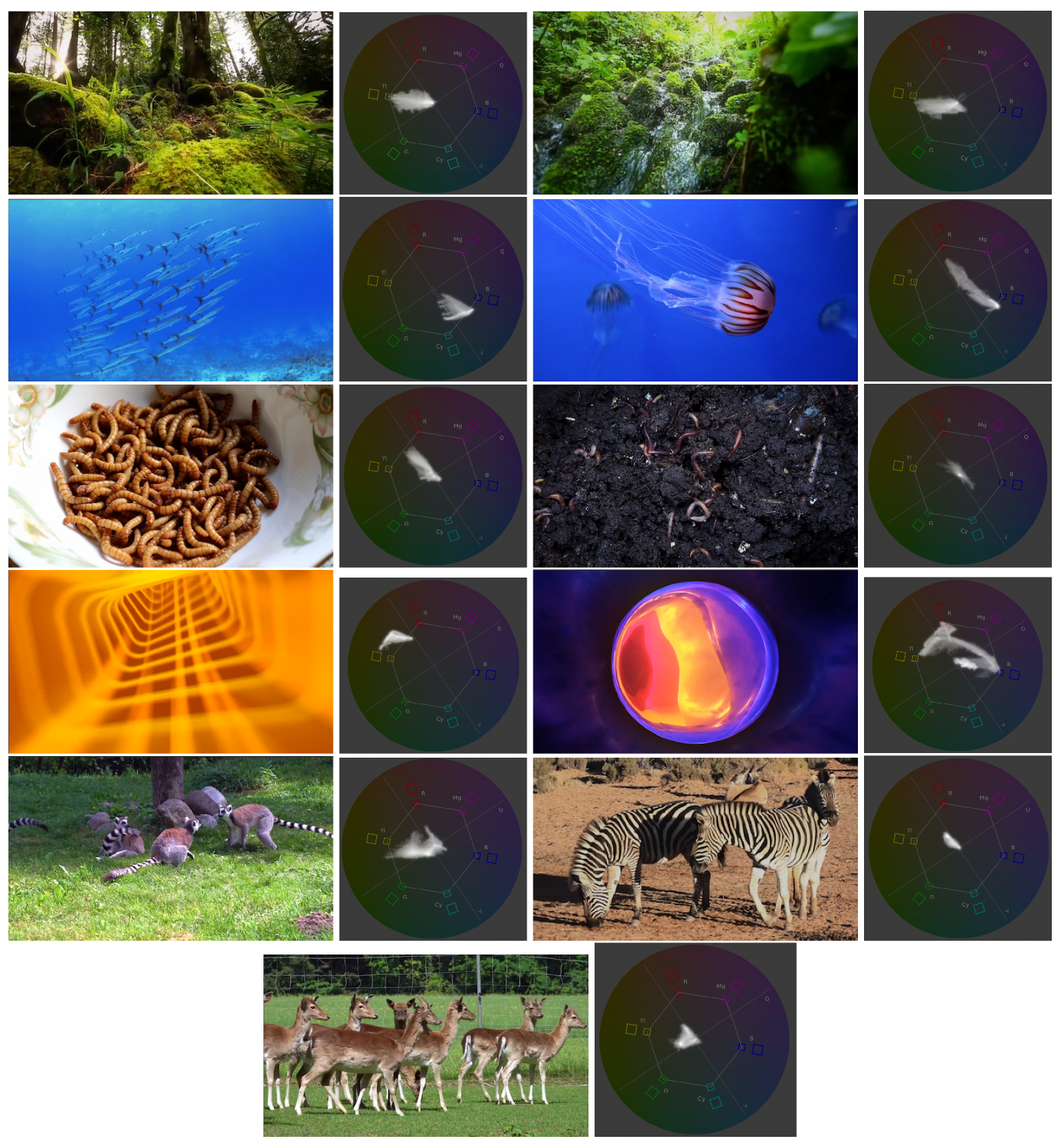

| Video Content | Description | Characteristics |
|---|---|---|
| Forest | Two clips filmed in a forest, showing scenery with slow movement. | Slow movement; Green colours; Natural habitat; Calm |
| Underwater | Two clips filmed underwater: one showing a school of barracudas, the other a brightly coloured jellyfish. | Active movement; Shades of blue and bright red colour; Unfamiliar environment; Target to follow |
| Worms | Two clips: earthworms in soil, and a white bowl full of mealworms. | Earthy colour shades; Recognisable objects for the sakis (part of their diet) |
| Abstract | Two clips: an orange-coloured simulation of moving through a structure, and a ball-shaped object with changing bright colours. | Colourful; Movement; Unrecognisable content |
| Animals | Three 10-s clips of different animals (zebras, lemurs, deer) interacting socially with each other. | Mild colours; Unfamiliar animals; Social behaviour |

| Behaviour | Description |
|---|---|
| Passing Through | The monkey passes through the device without stopping at any point or interacting in any form |
| Viewing out | While inside the device and located at one of its edges, the monkey looks out to other parts of the enclosure |
| Looking screen | The monkey is seen to look at the screen at some point during triggering |
| Tactile on wall | The monkey touches the screen, or anywhere on the wall where the screen is mounted, as an individual behaviour |
| Viewing window | The monkey pauses, sits and/or stops to look out through the Perspex to the rest of the enclosure |
| Social Usage | During interaction, multiple monkeys interact with each other, but only one is inside the device at a time |
| Social All Inside | During interaction, multiple monkeys are inside the device at once |
| Two Monkeys Usage | Two monkeys are inside the device at once |
| Three Monkeys Usage | Three monkeys are inside the device at once |
| Chasing Out | One monkey chases another out of the system |
| Grooming | Grooming together or alone |
| Sleeping | Seen sleeping, lying down with eyes closed |
| Scratching | Quickly rubs somewhere on its body with its fingers and nails |
| Stretching | Stretching |
| Sitting | Sitting down in the device |
| Sitting Facing Screen | Sitting down in the device, facing the screen |
| Sitting in the Middle | Sitting in the middle of the device |
| Sitting on the Edge | Sitting on one of the edges of the device |
| Sitting on Both | During the interaction, sitting in both the middle and edge locations |
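The results tables report each behaviour as a percentage of sessions, and a single column can sum to well over 100%. This is consistent with each session receiving every ethogram code that applied to it (for example, 59.70% of the 67 control sessions corresponds to 40 sessions coded as Passing through). The sketch below illustrates that kind of tally; the `sessions` data and the `behaviour_percentages` helper are hypothetical and are not the authors' analysis code.

```python
from collections import Counter

# Hypothetical coded sessions: each set holds the ethogram labels observed
# at least once during one activation of the device.
sessions = [
    {"Passing through"},
    {"Sitting", "Looking screen", "Scratching"},
    {"Viewing out", "Sitting", "Sitting on edge"},
    {"Passing through", "Looking screen"},
]

def behaviour_percentages(coded_sessions):
    """Percentage of sessions in which each behaviour was coded at least once."""
    total = len(coded_sessions)
    # Sets guarantee a behaviour is counted at most once per session.
    counts = Counter(label for session in coded_sessions for label in session)
    return {label: 100 * n / total for label, n in counts.items()}

for label, pct in sorted(behaviour_percentages(sessions).items()):
    print(f"{label}: {pct:.2f}%")
```

Because one session can carry several codes, the percentages are not expected to sum to 100%.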

| Condition 1 (N = 7) | Condition 2 (N = 7) | W | Adj. p-Value | Effect Size (r) |
|---|---|---|---|---|
| No video | Forest | 27 | 1.000 | ns |
| No video | Underwater | 3 | 0.041 * | 0.734 |
| No video | Worms | 2 | 0.029 * | 0.768 |
| No video | Abstract | 19 | 1.000 | ns |
| No video | Animals | 47 | 0.041 * | 0.773 |
| Forest | Underwater | 3 | 0.041 * | 0.734 |
| Forest | Worms | 1 | 0.018 * | 0.803 |
| Forest | Abstract | 16 | 1.000 | ns |
| Forest | Animals | 46 | 0.049 * | 0.738 |
| Underwater | Worms | 22 | 1.000 | ns |
| Underwater | Abstract | 40 | 0.265 | ns |
| Underwater | Animals | 49 | 0.029 * | 0.841 |
| Worms | Abstract | 41 | 0.227 | ns |
| Worms | Animals | 49 | 0.029 * | 0.841 |
| Abstract | Animals | 49 | 0.029 * | 0.841 |
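When reading the pairwise table, W can be treated as the Mann-Whitney statistic for Condition 1. With 7 observations per condition it ranges from 0 to 7 × 7 = 49 and has a null mean of 24.5, so values near 0 or 49 indicate that almost every observation in one condition exceeded every observation in the other. The sketch below assumes the common effect-size convention r = |Z| / sqrt(N), with a plain normal approximation and no continuity or tie correction; the function name is hypothetical. Under these assumptions it reproduces the tabulated r for W = 1, 2 and 3.

```python
import math

def rank_sum_effect_size(w, n1, n2):
    """Effect size r = |Z| / sqrt(n1 + n2) for a rank-sum statistic W.

    Assumption: plain normal approximation, no continuity or tie correction.
    """
    mean_w = n1 * n2 / 2                              # null mean of W
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)    # null SD of W (no ties)
    z = (w - mean_w) / sd_w
    return abs(z) / math.sqrt(n1 + n2)

# W values taken from the pairwise table; N = 7 per condition.
for pair, w in [("No video vs Underwater", 3), ("No video vs Worms", 2), ("Forest vs Worms", 1)]:
    print(f"{pair}: r = {rank_sum_effect_size(w, 7, 7):.3f}")
```

The comparisons involving Animals come out slightly lower with this formula than in the table (0.768 rather than 0.773 for W = 47, for instance), which would be consistent with a tie correction that the sketch omits.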

| Behaviour | Control | Visual Stimuli | Relative Difference | p-Value |
|---|---|---|---|---|
| Passing through | 59.70% | 48.75% | −18.34% | 0.397 |
| Viewing out | 35.82% | 39.50% | 10.27% | 0.673 |
| Looking screen | 20.90% | 17.34% | −17.03% | 0.488 |
| Tactile on wall | 16.42% | 7.51% | −54.26% | 0.193 |
| Viewing window | 16.42% | 19.85% | 20.89% | 0.646 |
| Social usage | 5.97% | 8.86% | 48.41% | 0.453 |
| Social all inside | 1.49% | 5.59% | 275.17% | 0.253 |
| Two monkeys | 7.46% | 9.06% | 21.45% | 0.555 |
| Three monkeys | 0.00% | 0.39% | NA | 0.552 |
| Chasing out | 0.00% | 0.19% | NA | 0.701 |
| Grooming | 5.97% | 15.03% | 151.76% | 0.138 |
| Sleeping | 4.48% | 8.29% | 85.04% | 0.694 |
| Scratching | 28.36% | 11.37% | −59.91% | 0.022 * |
| Stretching | 8.96% | 5.78% | −35.49% | 0.898 |
| Sitting | 38.81% | 45.47% | 17.16% | 0.879 |
| Sitting facing screen | 19.40% | 21.97% | 13.25% | 1.000 |
| Sitting on middle | 7.46% | 13.68% | 83.38% | 0.406 |
| Sitting on edge | 16.42% | 16.38% | −0.24% | 0.224 |
| Sitting on both | 14.93% | 15.41% | 3.22% | 0.619 |
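The difference column is the relative change from the control percentage, not a difference in percentage points: for Passing through, (48.75 − 59.70) / 59.70 ≈ −18.34%. A minimal sketch of that calculation follows; the `relative_difference` helper is hypothetical and returns `None` where the table reports NA (a control value of 0%).

```python
def relative_difference(control_pct, stimuli_pct):
    """Relative change from control to visual stimuli, in percent.

    Returns None when the control value is 0, since the ratio is undefined.
    """
    if control_pct == 0:
        return None  # shown as NA in the table
    return 100 * (stimuli_pct - control_pct) / control_pct

# Control and Visual Stimuli percentages copied from the table.
rows = {
    "Passing through": (59.70, 48.75),
    "Scratching": (28.36, 11.37),
    "Social all inside": (1.49, 5.59),
    "Three monkeys": (0.00, 0.39),
}
for behaviour, (control, stimuli) in rows.items():
    diff = relative_difference(control, stimuli)
    print(behaviour, "NA" if diff is None else f"{diff:.2f}%")
```

Relative changes become very large when the control percentage is small (Social all inside rises from 1.49% to 5.59%, a 275.17% increase), so this column is best read alongside the raw percentages.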

| Condition | Frequency: No. per Day | Avg Use Time: Weekly (s) | Avg Use Time: Daily (s) | Avg Use Time: Daily SD (s) | Duration: Mean (s) | Duration: Median (s) | Duration: SD (s) | Duration: Longest (s) | Duration: Shortest (s) |
|---|---|---|---|---|---|---|---|---|---|
| No video | 1.47 | 1179 | 168 | 186.63 | 115.22 | 5 | 429.23 | 3281 | 1 |
| Forest | 1.82 | 874 | 125 | 116.48 | 68.78 | 3 | 179.92 | 1065 | 1 |
| Underwater | 10.02 | 7016 | 1002 | 896.45 | 112.29 | 4 | 520.62 | 6001 | 1 |
| Worms | 5.61 | 7919 | 1131 | 994.39 | 202.01 | 27 | 432.82 | 2737 | 1 |
| Abstract | 5.67 | 3726 | 523 | 938.32 | 93.03 | 11 | 217.18 | 1073 | 1 |
| Animals | 0.71 | 73 | 10 | 22.29 | 14.53 | 3 | 44.17 | 174 | 1 |
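If each condition ran for seven days, the Daily use-time column should equal the Weekly column divided by 7. The sketch below checks that relationship; `DAYS_PER_CONDITION` is an assumed constant, not a value stated in the table. It reproduces the rounded Daily value for five of the six conditions; for Abstract it gives 532 s, whereas the table lists 523 s, so that row does not fit the assumption as stated.

```python
# Weekly average use time (s) per condition, copied from the table.
weekly_use = {
    "No video": 1179, "Forest": 874, "Underwater": 7016,
    "Worms": 7919, "Abstract": 3726, "Animals": 73,
}
DAYS_PER_CONDITION = 7  # assumption: each condition ran for one 7-day week

for condition, weekly in weekly_use.items():
    # Round to whole seconds, matching the precision of the Daily column.
    print(f"{condition}: {round(weekly / DAYS_PER_CONDITION)} s/day")
```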

| Behaviour | Control (Total 67) | Forest (Total 89) | Underwater (Total 235) | Worms (Total 119) | Abstract (Total 61) | Animals (Total 15) |
|---|---|---|---|---|---|---|
| Passing through | 59.70% | 59.55% | 51.49% | 36.97% | 39.34% | 73.33% |
| Viewing out | 35.82% | 21.35% | 39.15% | 52.94% | 44.26% | 26.67% |
| Looking screen | 20.90% | 23.60% | 13.19% | 19.33% | 22.95% | 6.67% |
| Tactile on wall | 16.42% | 8.99% | 5.96% | 11.76% | 3.28% | 6.67% |
| Viewing window | 16.42% | 17.98% | 16.60% | 27.73% | 24.59% | 0.00% |
| Social usage | 5.97% | 16.85% | 8.94% | 5.88% | 4.92% | 0.00% |
| Social all inside | 1.49% | 8.99% | 7.66% | 2.52% | 0.00% | 0.00% |
| Two monkeys | 7.46% | 16.85% | 9.36% | 5.88% | 4.92% | 0.00% |
| Three monkeys | 0.00% | 1.12% | 0.43% | 0.00% | 0.00% | 0.00% |
| Chasing out | 0.00% | 0.00% | 0.00% | 0.84% | 0.00% | 0.00% |
| Grooming | 5.97% | 6.74% | 9.79% | 29.41% | 22.95% | 0.00% |
| Sleeping | 4.48% | 5.62% | 5.96% | 15.97% | 8.20% | 0.00% |
| Scratching | 28.36% | 15.73% | 5.11% | 16.81% | 21.31% | 0.00% |
| Stretching | 8.96% | 13.48% | 4.26% | 3.36% | 6.56% | 0.00% |
| Sitting | 38.81% | 37.08% | 43.40% | 56.30% | 52.46% | 13.33% |
| Sitting facing screen | 19.40% | 22.47% | 17.87% | 30.25% | 24.59% | 6.67% |
| Sitting on middle | 7.46% | 13.48% | 11.06% | 17.65% | 19.67% | 0.00% |
| Sitting on edge | 16.42% | 11.24% | 17.45% | 20.17% | 14.75% | 6.67% |
| Sitting on both | 14.93% | 12.36% | 14.89% | 18.49% | 18.03% | 6.67% |
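The Visual Stimuli column in the control-versus-stimuli comparison is consistent with pooling the five video conditions: recovering each condition's session count from its percentage and total, summing the counts, and dividing by all 519 stimulus sessions. A simple average of the five percentages would instead give 52.14% for Passing through rather than the reported 48.75%. A sketch of that pooling for two behaviours follows; the names `totals`, `percentages` and `pooled_percentage` are hypothetical.

```python
# Session totals per visual-stimulus condition, from the table header.
totals = {"Forest": 89, "Underwater": 235, "Worms": 119, "Abstract": 61, "Animals": 15}

# Per-condition percentages for two example behaviours, from the table rows.
percentages = {
    "Passing through": {"Forest": 59.55, "Underwater": 51.49, "Worms": 36.97,
                        "Abstract": 39.34, "Animals": 73.33},
    "Scratching": {"Forest": 15.73, "Underwater": 5.11, "Worms": 16.81,
                   "Abstract": 21.31, "Animals": 0.00},
}

def pooled_percentage(per_condition_pct, condition_totals):
    """Recover integer session counts from rounded percentages, then pool them."""
    counts = {c: round(per_condition_pct[c] * n / 100) for c, n in condition_totals.items()}
    return 100 * sum(counts.values()) / sum(condition_totals.values())

for behaviour, pct in percentages.items():
    print(f"{behaviour}: {pooled_percentage(pct, totals):.2f}%")
```

Pooling weights each condition by its number of sessions, so Underwater (235 sessions) dominates the pooled figure while Animals (15 sessions) contributes little.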

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hirskyj-Douglas, I.; Kankaanpää, V. Exploring How White-Faced Sakis Control Digital Visual Enrichment Systems. Animals 2021, 11, 557. https://doi.org/10.3390/ani11020557
