Deep-Learning-Based Automatic Monitoring of Pigs’ Physico-Temporal Activities at Different Greenhouse Gas Concentrations

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

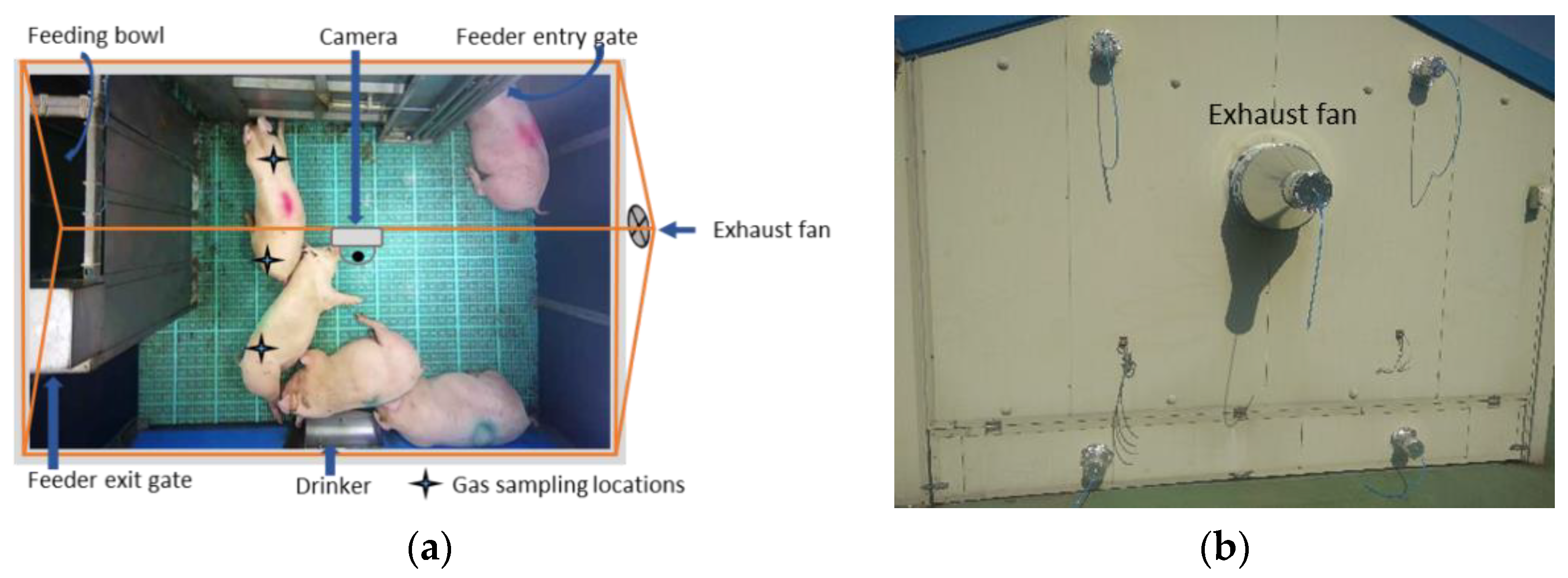

2.1. Experimental House and Animals

2.2. Experimental Setup and Data Collection

2.3. Image Pre-Processing and Dataset Preparation

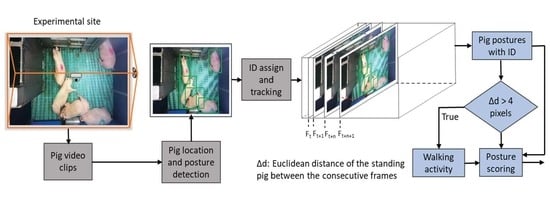

2.4. Proposed Methodology

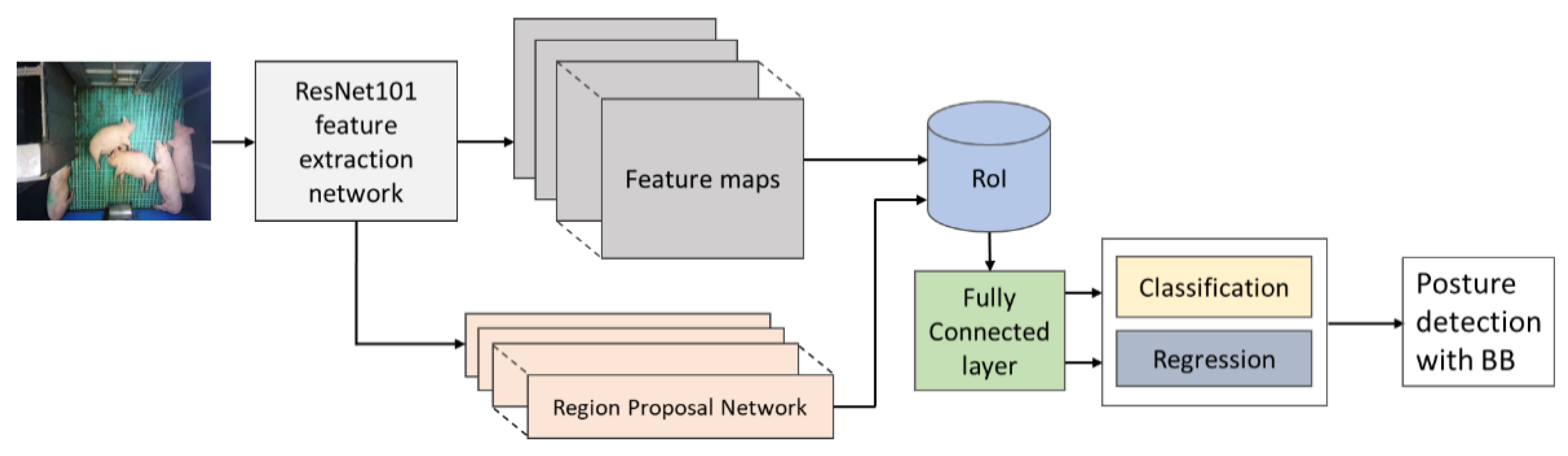

2.4.1. Pig Posture Activity Detection Model

2.4.2. Pig-Tracking Algorithm

2.4.3. Pig-Moving Detection and Activity-Scoring Algorithm

2.4.4. Training and Evaluation of the Model

3. Results

3.1. Greenhouse Gas Concentrations

3.2. Group-Wise Pig Posture and Walking Behavior Score

3.3. Individual Pig Posture and Walking Behavior

3.4. Pig-Activity Detection and Tracking Model Performance

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Pig Posture and Label | Identification Convention | Instances |
|---|---|---|
| Standing pig (standing_pig) | Only feet or feet and snout in contact with the floor | 10,124 |
| Sternal lying pig (sl_pig) | Belly and folded limbs in contact with the floor | 9,364 |
| Lateral lying pig (ll_pig) | Side trunk and extended limbs in contact with the floor | 10,745 |
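
The instance counts in the posture-label table can be turned into class shares to check how balanced the annotated dataset is. The following Python sketch does this; the names `instances` and `class_shares` are illustrative and do not come from the paper.

```python
# Instance counts copied from the posture-label table.
instances = {
    "standing_pig": 10124,
    "sl_pig": 9364,
    "ll_pig": 10745,
}


def class_shares(counts):
    """Return each label's fraction of the total annotated instances."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


shares = class_shares(instances)
for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

Each posture class accounts for roughly one third of the 30,233 annotated instances, so the three labels are close to balanced.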

| Hyperparameter | Value |
|---|---|
| Learning rate | 0.001 |
| Epochs | 500 |
| Optimizer | Adam |
| Batch size | 2 |
| Subdivisions | 1 |
| Activation | Mish |
| Input image size | [640, 640, 3] |
| Data augmentation | Horizontal and vertical flip; rotations by 90°, 180°, and 270°; mosaic augmentation |
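
The flip and rotation augmentations listed in the table can be illustrated with a minimal NumPy sketch. This is not the authors' pipeline: the function name `flip_and_rotate` is hypothetical, mosaic augmentation is not covered, and a real detection pipeline would also have to transform the bounding-box annotations to match each image variant.

```python
import numpy as np


def flip_and_rotate(image):
    """Return flipped and rotated copies of an H x W x C image array."""
    return {
        "hflip": np.fliplr(image),       # mirror left-right
        "vflip": np.flipud(image),       # mirror top-bottom
        "rot90": np.rot90(image, k=1),   # 90 degrees counterclockwise
        "rot180": np.rot90(image, k=2),  # 180 degrees
        "rot270": np.rot90(image, k=3),  # 270 degrees counterclockwise
    }


# Dummy frame with the input size listed in the table.
frame = np.zeros((640, 640, 3), dtype=np.uint8)
variants = flip_and_rotate(frame)
for name, img in variants.items():
    print(name, img.shape)
```

Because the input is square (640 × 640), every variant keeps the same shape, so the augmented images can be fed to the network without resizing.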

| Hyperparameter | Value |
|---|---|
| Learning rate | 0.004 |
| Iterations | 50,000 |
| Warmup learning rate | 0.0013333 |
| Momentum | 0.9 |
| Batch size | 2 |
| Score converter | Softmax |
| Input image size | [640, 640, 3] |
| Data augmentation | Horizontal and vertical flip; rotations by 90°, 180°, and 270° |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bhujel, A.; Arulmozhi, E.; Moon, B.-E.; Kim, H.-T. Deep-Learning-Based Automatic Monitoring of Pigs’ Physico-Temporal Activities at Different Greenhouse Gas Concentrations. Animals 2021, 11, 3089. https://doi.org/10.3390/ani11113089
