Automated Bird Counting with Deep Learning for Regional Bird Distribution Mapping

Abstract

Simple Summary


1. Introduction

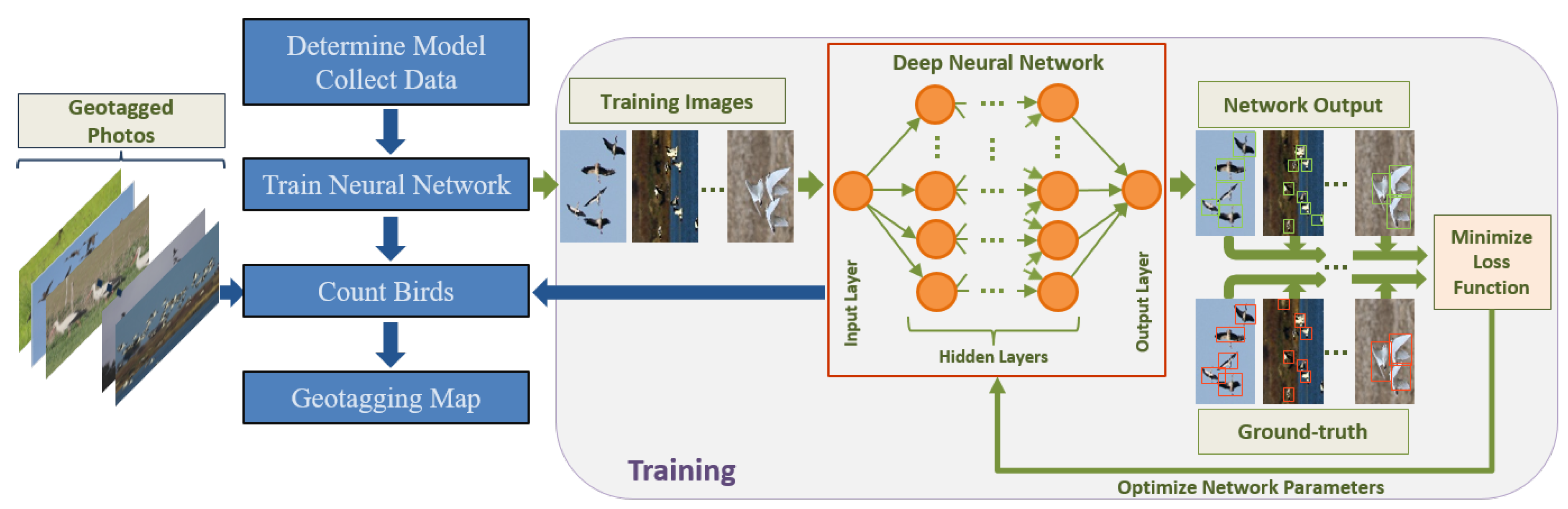

2. Materials and Methods

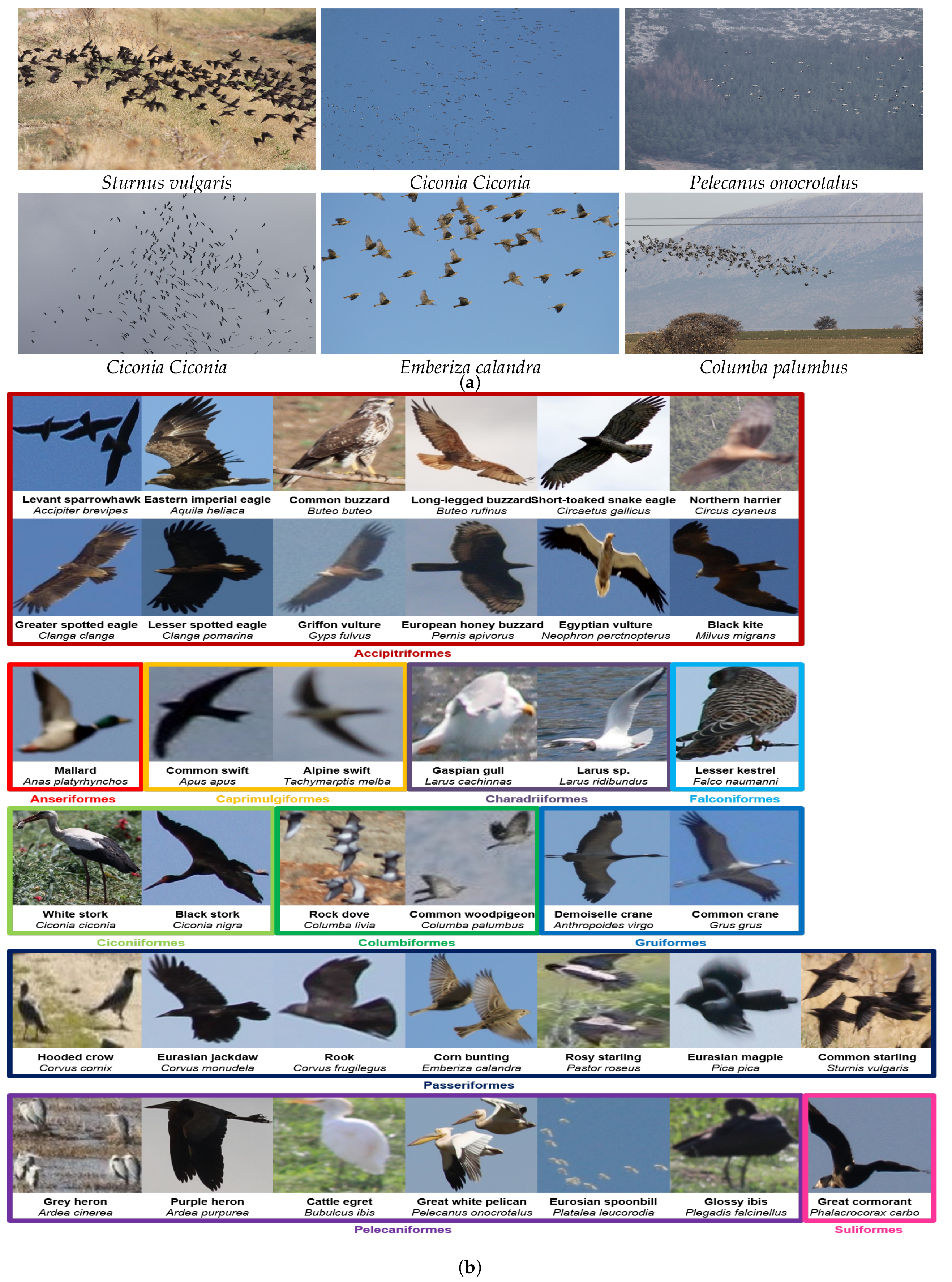

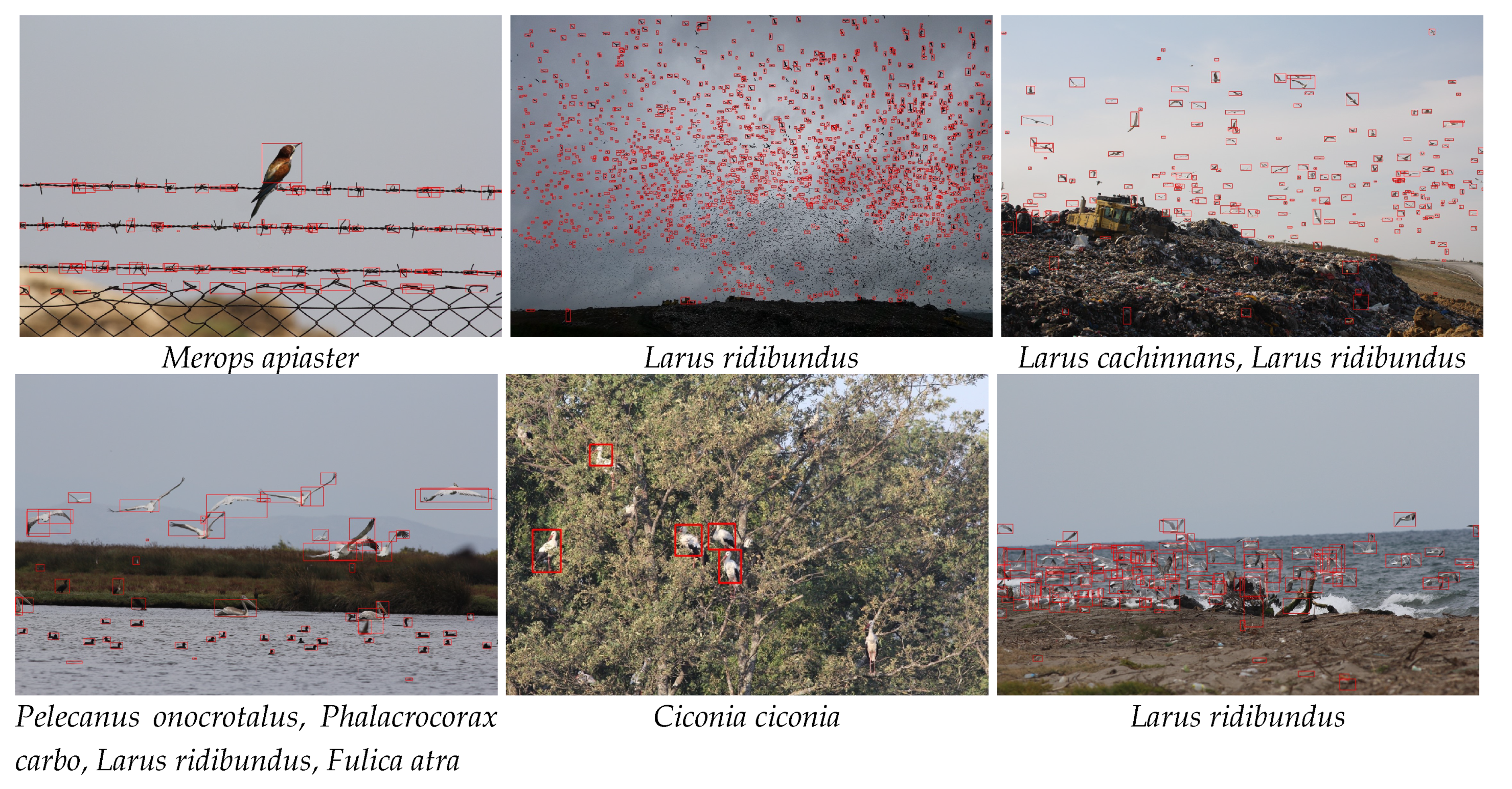

2.1. Data Collection

2.2. Automated Bird Detection

- convolutional layers, each of which convolves the previous layer's neuron outputs with several convolution filters (i.e., each filter computes a linear combination of neighboring neurons inside a fixed-size window) and then applies a non-linear function,

- max-pooling layers, each of which partitions its input neurons into non-overlapping rectangular grid cells and outputs the maximum value within each cell,

- fully connected (FC) layers, in which each neuron's output is a non-linear function applied to a linear combination of the outputs of all neurons in the previous layer.
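The three layer types listed above can be illustrated with a minimal NumPy sketch. It is not the Faster R-CNN feature extractor used in this study: the function names, the ReLU and tanh activations, stride 1 with no padding, and the 2 × 2 pooling window are assumptions chosen for illustration. As in common deep-learning frameworks, the "convolution" is computed as a cross-correlation (the filter is not flipped).

```python
import numpy as np

def conv2d_relu(x, filters, bias):
    """Convolutional layer (illustrative): x is (H, W, C_in), filters is (k, k, C_in, C_out).
    Each output neuron is a linear combination of the inputs inside a k x k window
    (stride 1, no padding), followed by a ReLU non-linearity (assumed choice)."""
    k = filters.shape[0]
    h_out, w_out = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.empty((h_out, w_out, filters.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            window = x[i:i + k, j:j + k, :]  # (k, k, C_in) neighborhood
            # Contract the window with every filter at once -> (C_out,)
            out[i, j, :] = np.tensordot(window, filters, axes=([0, 1, 2], [0, 1, 2])) + bias
    return np.maximum(out, 0.0)

def max_pool(x, size=2):
    """Max-pooling over non-overlapping size x size cells; leftover rows/columns are dropped."""
    h, w, c = x.shape
    h2, w2 = h // size, w // size
    x = x[:h2 * size, :w2 * size, :]
    return x.reshape(h2, size, w2, size, c).max(axis=(1, 3))

def fully_connected(x, weights, bias, activation=np.tanh):
    """FC layer: every output neuron depends on all outputs of the previous layer."""
    return activation(weights @ x.ravel() + bias)

# Toy forward pass with random weights (shapes only; nothing here is trained).
rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
feat = conv2d_relu(image, rng.standard_normal((3, 3, 3, 4)), np.zeros(4))   # (6, 6, 4)
pooled = max_pool(feat)                                                      # (3, 3, 4)
scores = fully_connected(pooled, rng.standard_normal((2, 36)), np.zeros(2))  # (2,)
```

Stacking these layers, with many more filters and learned weights, is what allows a network such as the one used here to map raw pixels to increasingly abstract features.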

2.2.1. Faster R-CNN Network Architecture

Feature Extraction Network

Region Proposal Networks

Fast R-CNN Detector

2.2.2. Data Augmentation

2.2.3. Performance Evaluation

Data Set

Experimental Protocol

Baselines for Comparison

Evaluation Criteria

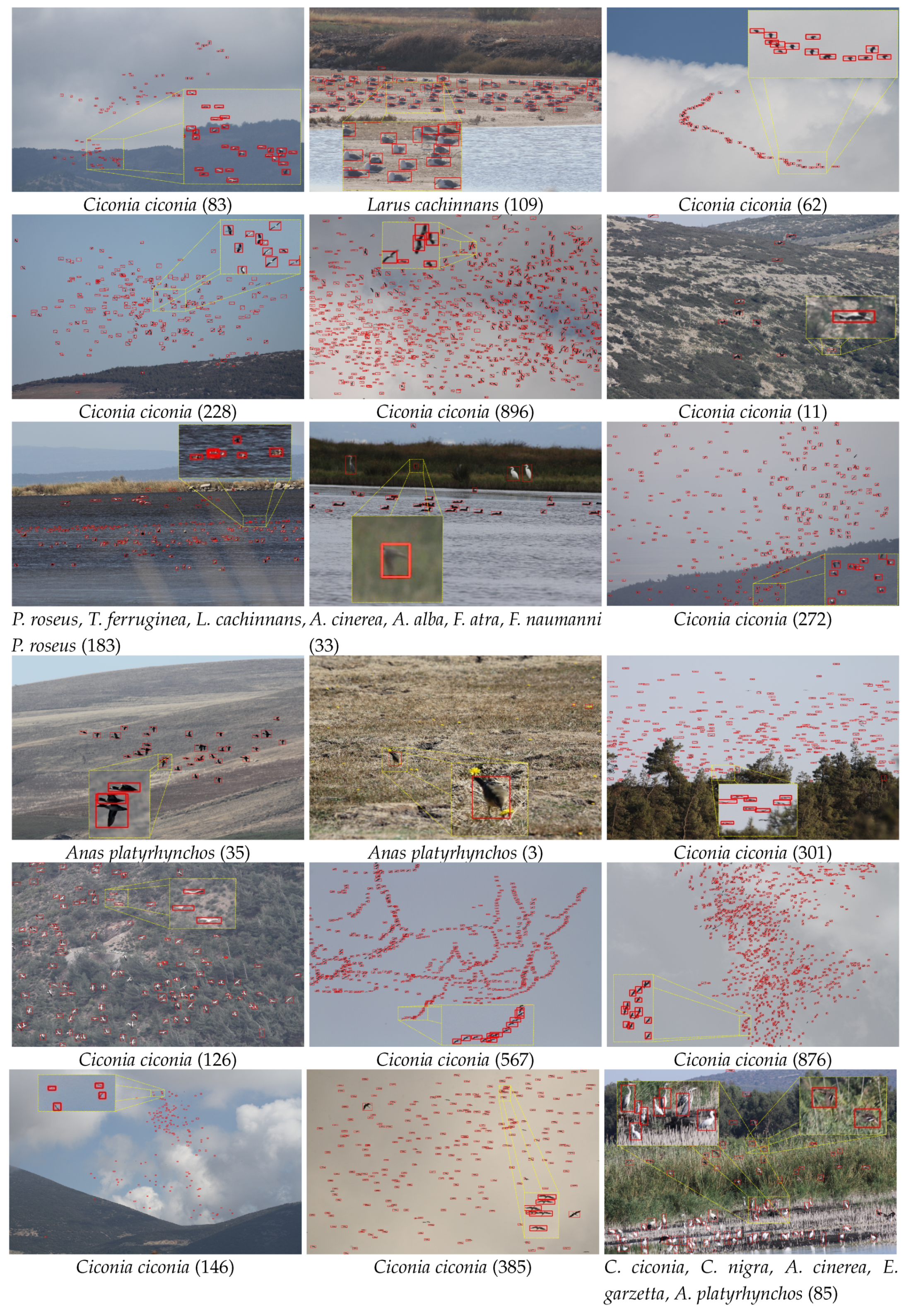

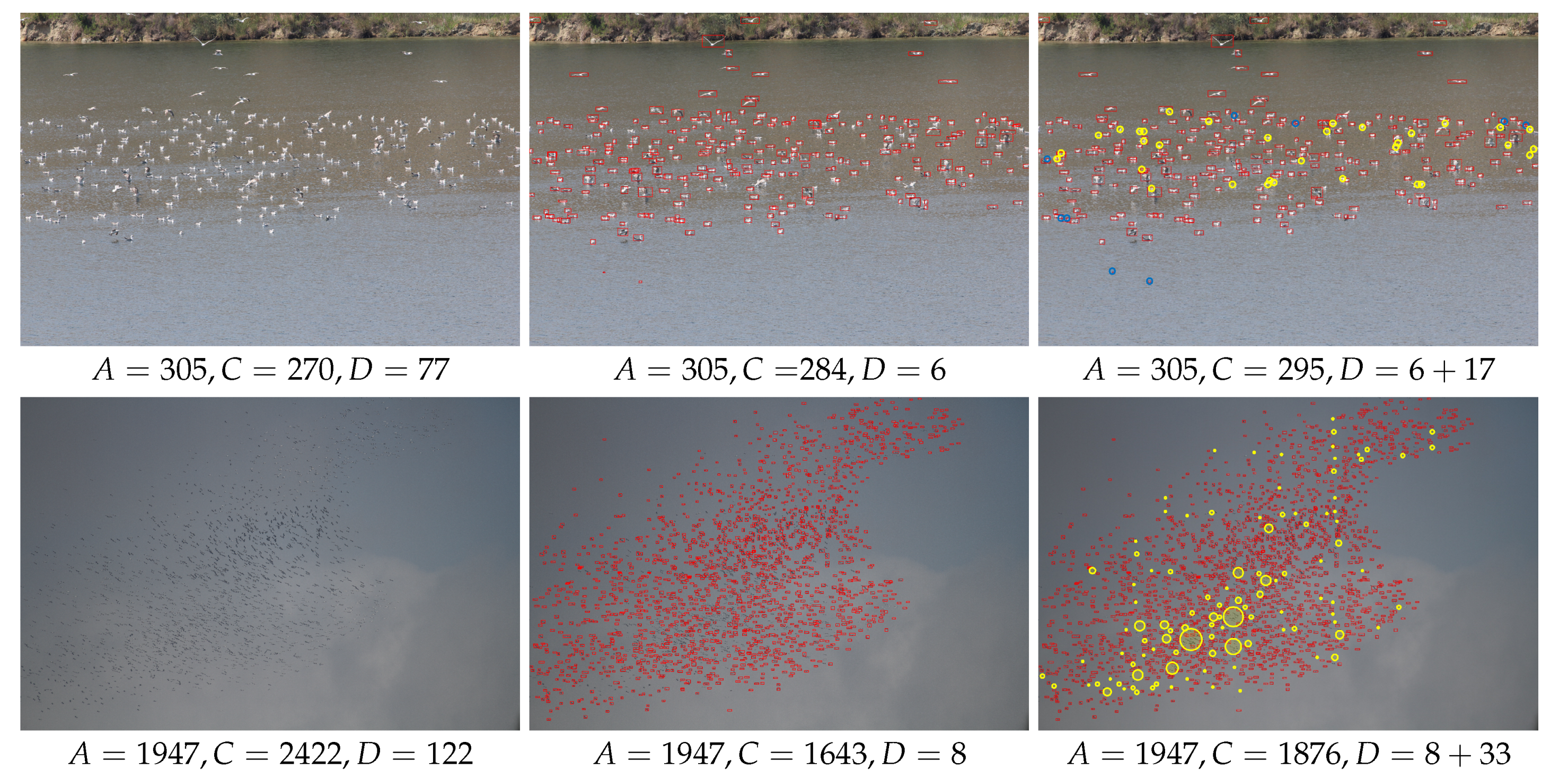

2.3. Bird Counting

- Manual: Two experts, Exp1 and Exp2, who hold PhDs in ornithology and have at least five years of field experience in bird counting, independently counted the birds in the photographs, which were presented in random order on a computer monitor. In this method, experts usually count birds in groups (i.e., in fives or tens rather than one by one). The maximum time allowed per photo was 3 min.

- Automated: This method corresponds to the Faster R-CNN model output.

- Computer-assisted: We also evaluated the two experts' counts when aided by the model outputs. For each photo, the expert was shown the model's detection result on screen and asked to correct the automated count by adding birds the model had missed and subtracting birds it had over-counted. These counts were conducted two weeks after the manual counts, under the same conditions as the manual method.
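The automated and computer-assisted counting methods can be sketched as follows. This is a minimal illustration under assumptions not stated in the text: the detector is assumed to return one bounding box and confidence score per detected bird, the 0.5 score threshold is hypothetical, and the relative-error formula is one common choice, not necessarily the definition behind the Error rows of the results table.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """One detected bird: box as (x_min, y_min, x_max, y_max) in pixels, plus a confidence score."""
    box: tuple
    score: float

def automated_count(detections, score_threshold=0.5):
    """Automated method: count the detections whose score reaches the threshold.
    The 0.5 default is a hypothetical value, not the study's setting."""
    return sum(1 for d in detections if d.score >= score_threshold)

def computer_assisted_count(auto_count, missed, over_counted):
    """Computer-assisted method: the expert adds birds the model missed and
    subtracts birds the model over-counted."""
    if min(missed, over_counted) < 0 or over_counted > auto_count:
        raise ValueError("corrections must be non-negative and cannot exceed the automated count")
    return auto_count + missed - over_counted

def relative_error(count, reference):
    """Assumed error measure: absolute deviation from a reference count, relative to it."""
    return abs(count - reference) / reference

# Hypothetical example photo with three candidate detections.
dets = [Detection((10, 12, 40, 38), 0.92),
        Detection((55, 20, 80, 44), 0.71),
        Detection((90, 60, 104, 70), 0.31)]
auto = automated_count(dets)                                        # 2 detections pass
assisted = computer_assisted_count(auto, missed=1, over_counted=0)  # expert adds one bird
```

Because the expert only corrects the model's output instead of counting from scratch, the assisted effort scales with the number of model errors rather than with flock size.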

2.4. Geospatial Bird Mapping

3. Results

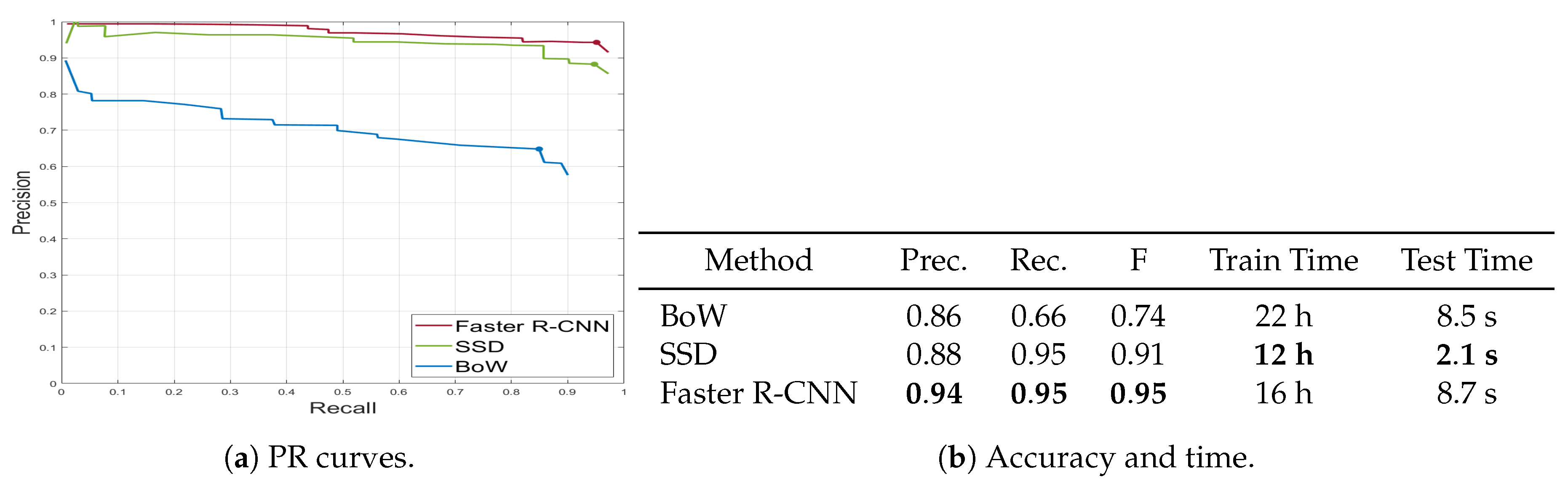

3.1. Automated Bird Detection Results

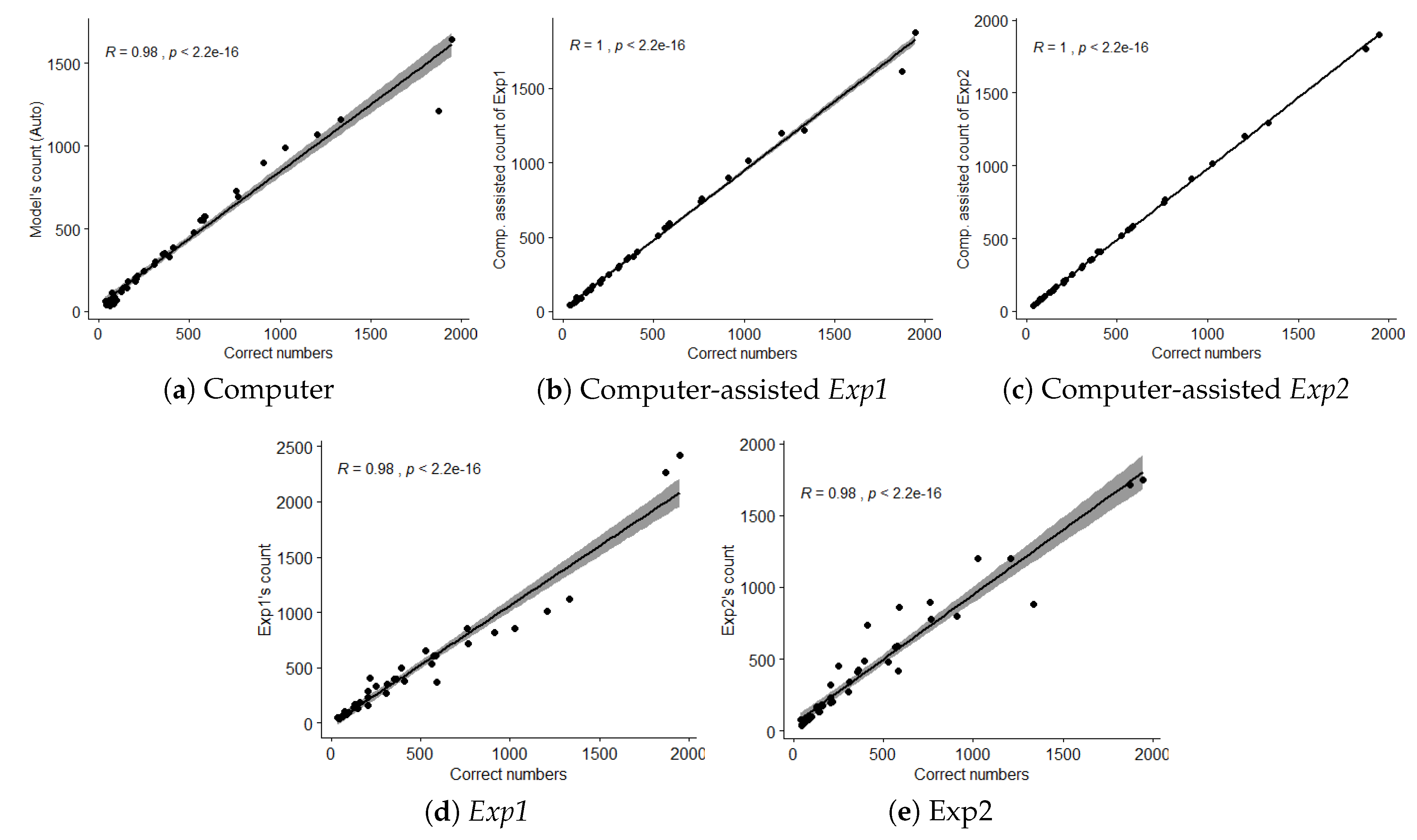

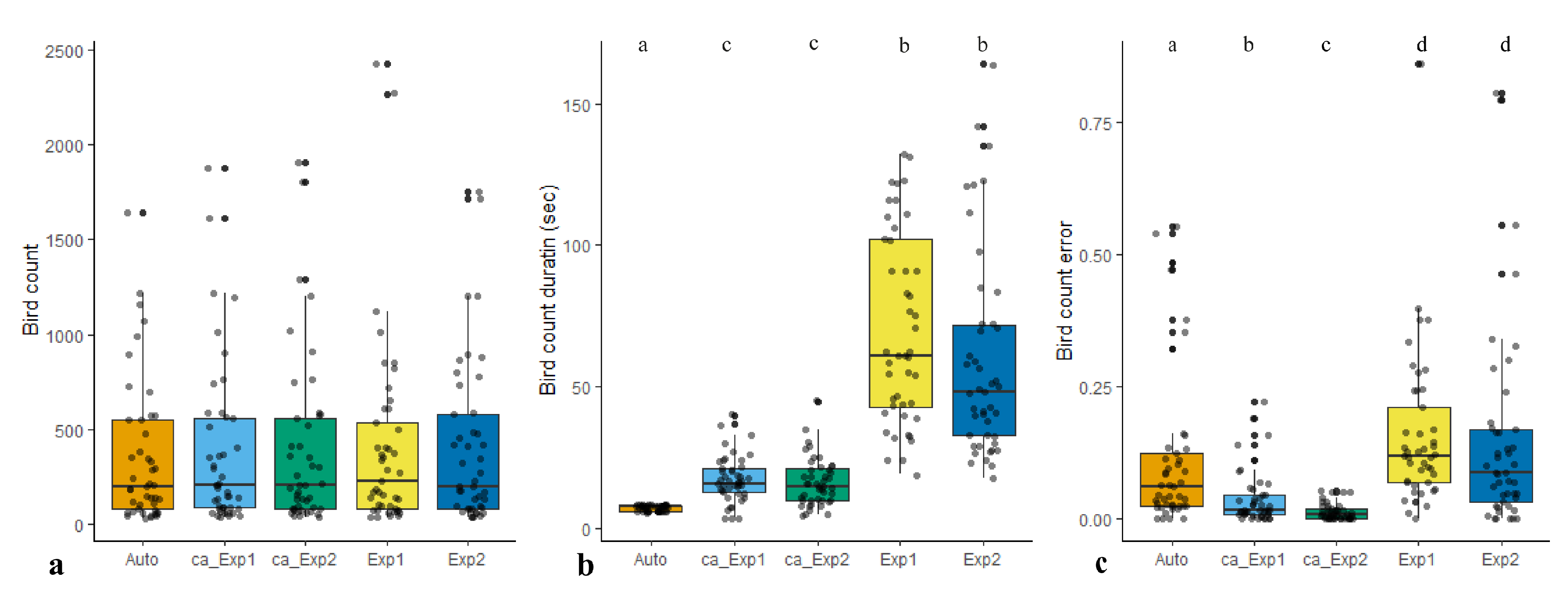

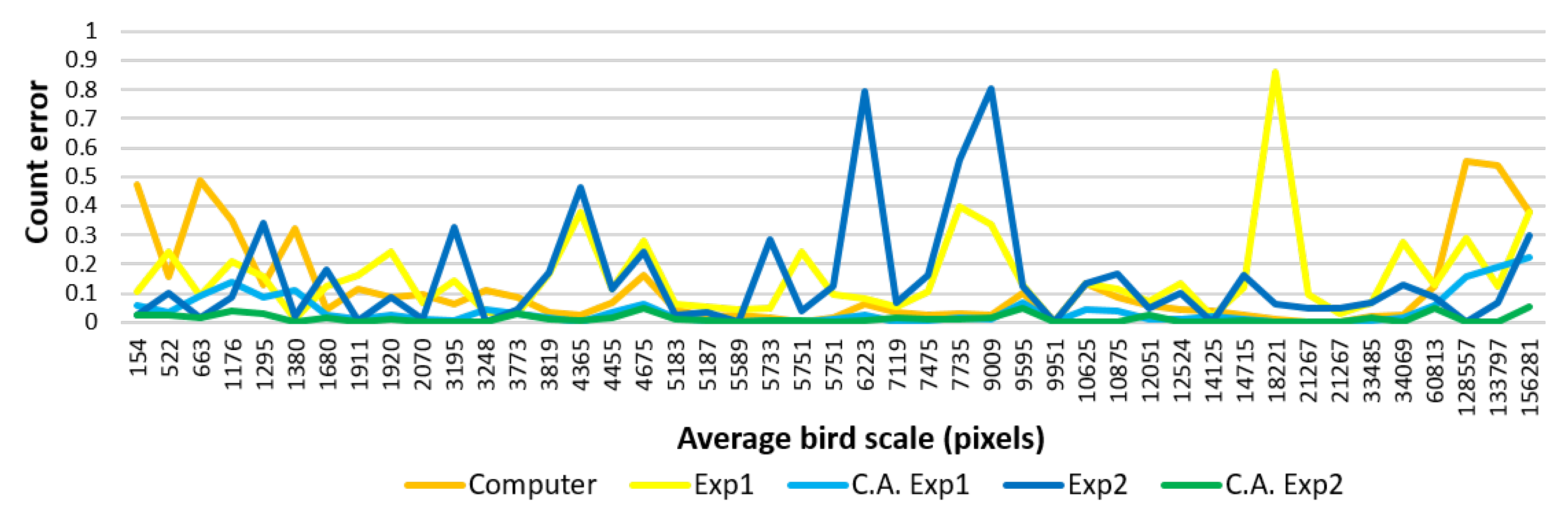

3.2. Bird Counting Results

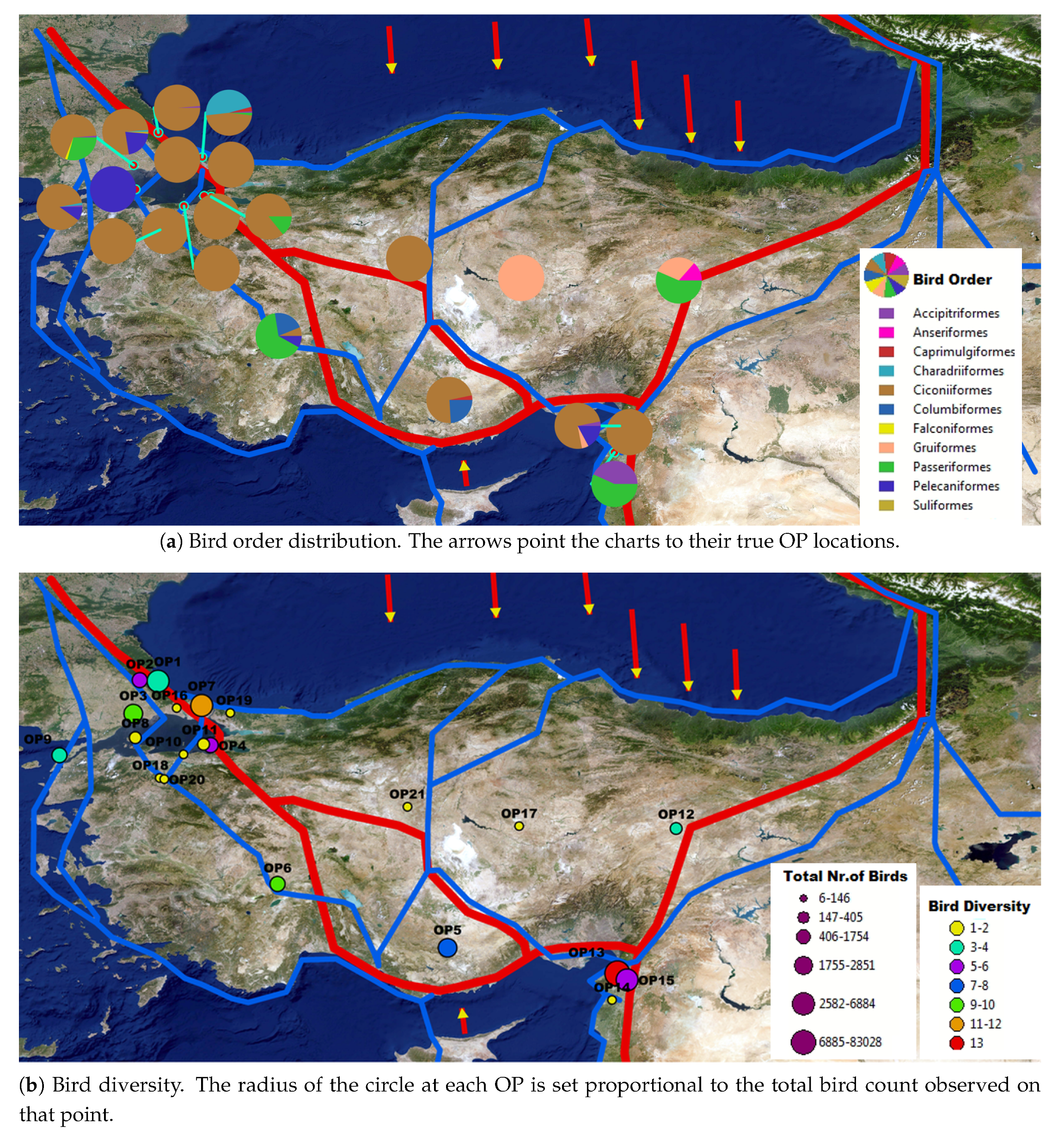

3.3. Bird Mapping Results

4. Discussion

4.1. Automated Bird Detection Discussion

4.2. Bird Counting Discussion

4.3. Bird Mapping Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Parameter | Value |
|---|---|
| Imaging camera | Canon 7D Mark II body + Canon EF 100–400 mm f/4.5–5.6 L IS II USM telephoto zoom lens |
| Resolution | pixels |
| Focal length | 200 mm |
| Sensor size | 22.4 mm × 15 mm |
| Shutter speed | s |
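Since the camera table gives the focal length and sensor size, the angular field of view of each photograph follows from the pinhole-camera relation $\theta = 2\arctan\left(\frac{d}{2f}\right)$, where $d$ is a sensor dimension and $f$ the focal length. The sketch below is for orientation only: the paper does not report these derived values, the lens is assumed to be focused at a distant subject, and the 1000 m subject distance is hypothetical.

```python
import math

# Values from the camera table; the 1000 m subject distance below is hypothetical.
FOCAL_LENGTH_MM = 200.0
SENSOR_W_MM, SENSOR_H_MM = 22.4, 15.0

def angle_of_view_deg(sensor_mm, focal_mm):
    """Angle of view along one sensor dimension (rectilinear lens focused at a distant subject)."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))

def footprint_m(sensor_mm, focal_mm, distance_m):
    """Scene extent covered along one sensor dimension at a given distance (pinhole model)."""
    return distance_m * sensor_mm / focal_mm

h_fov = angle_of_view_deg(SENSOR_W_MM, FOCAL_LENGTH_MM)           # about 6.41 degrees
v_fov = angle_of_view_deg(SENSOR_H_MM, FOCAL_LENGTH_MM)           # about 4.30 degrees
width_at_1km = footprint_m(SENSOR_W_MM, FOCAL_LENGTH_MM, 1000.0)  # about 112 m
```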

| Variable | Counter | Min | Max | Median | Q1 | Q3 | IQR | Mean | SD | SE | CI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Count | Exp1 | 39 | 2422 | 231 | 83 | 533 | 450 | 412.02 | 510.07 | 76.04 | 153.24 |
| | Exp2 | 38 | 1748 | 203 | 85 | 581 | 496 | 404 | 430.77 | 64.22 | 129.42 |
| | Automated | 34 | 1643 | 201 | 79 | 551 | 472 | 355.33 | 380.08 | 56.66 | 114.19 |
| | C.A. Exp1 | 42 | 1876 | 204 | 88 | 559 | 471 | 380.93 | 431.71 | 64.36 | 129.7 |
| | C.A. Exp2 | 42 | 1902 | 205 | 82 | 559 | 477 | 390.62 | 450.26 | 67.12 | 135.27 |
| Duration (s) | Exp1 | 19 | 132 | 61 | 43 | 102 | 59 | 70.13 | 33.49 | 4.99 | 10.06 |
| | Exp2 | 18 | 164 | 48 | 33 | 72 | 39 | 59.33 | 36.55 | 5.45 | 10.98 |
| | Automated | 6 | 8 | 8 | 6 | 8 | 2 | 7.29 | 0.97 | 0.14 | 0.29 |
| | C.A. Exp1 | 4 | 40 | 16 | 13 | 21 | 8 | 17.42 | 8.08 | 1.2 | 2.43 |
| | C.A. Exp2 | 5 | 45 | 15 | 10 | 21 | 11 | 16.4 | 8.46 | 1.26 | 2.54 |
| Error | Exp1 | 0 | 0.86 | 0.12 | 0.07 | 0.21 | 0.14 | 0.16 | 0.15 | 0.02 | 0.05 |
| | Exp2 | 0 | 0.81 | 0.09 | 0.03 | 0.17 | 0.14 | 0.15 | 0.19 | 0.03 | 0.06 |
| | Automated | 0 | 0.55 | 0.06 | 0.02 | 0.12 | 0.10 | 0.12 | 0.15 | 0.02 | 0.05 |
| | C.A. Exp1 | 0 | 0.22 | 0.01 | 0.01 | 0.04 | 0.04 | 0.04 | 0.05 | 0.01 | 0.02 |
| | C.A. Exp2 | 0 | 0.05 | 0.01 | 0 | 0.02 | 0.02 | 0.01 | 0.02 | 0 | 0.01 |
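The columns of the table above (median, quartiles, IQR, mean, SD, SE, CI) resemble the output of R summary helpers such as `rstatix::get_summary_stats`. The CI column appears to be the half-width of a 95% Student-t confidence interval for the mean: for the Exp1 counts, 510.07/76.04 ≈ √45 and 153.24/76.04 ≈ 2.015 ≈ t(0.975, 44), which is consistent with 45 photographs. The sketch below reproduces such statistics in standard-library Python under that inferred assumption; computing the t quantile by numerical integration and using R's default (type 7) quartile definition are also assumptions about how the table was produced.

```python
import math
import statistics

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)

def t_cdf(x, df, steps=2000):
    """P(T <= x) for x >= 0: 0.5 plus the density integrated from 0 to x (Simpson's rule)."""
    h = x / steps
    s = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + s * h / 3

def t_quantile(p, df):
    """Quantile t(p, df) for p > 0.5, found by bisection on the CDF."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def summary_stats(values, conf=0.95):
    """Descriptive statistics matching the table columns (needs at least two values)."""
    n = len(values)
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")  # same as R type 7
    sd = statistics.stdev(values)                       # sample SD, n - 1 denominator
    se = sd / math.sqrt(n)
    ci = t_quantile(1 - (1 - conf) / 2, n - 1) * se     # half-width of the t-interval
    return {"n": n, "min": min(values), "max": max(values),
            "median": statistics.median(values), "q1": q1, "q3": q3, "iqr": q3 - q1,
            "mean": statistics.mean(values), "sd": sd, "se": se, "ci": ci}
```

Applied to a list of per-photo counts, durations, or errors for one counter, `summary_stats` returns one row of the table; the 95% interval for the mean is then `mean ± ci`.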

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Akçay, H.G.; Kabasakal, B.; Aksu, D.; Demir, N.; Öz, M.; Erdoğan, A. Automated Bird Counting with Deep Learning for Regional Bird Distribution Mapping. Animals 2020, 10, 1207. https://doi.org/10.3390/ani10071207


Akçay, Hüseyin Gökhan, Bekir Kabasakal, Duygugül Aksu, Nusret Demir, Melih Öz, and Ali Erdoğan. 2020. "Automated Bird Counting with Deep Learning for Regional Bird Distribution Mapping" Animals 10, no. 7: 1207. https://doi.org/10.3390/ani10071207
