1. Introduction

Family dogs are now a widely accepted model of human socio-cognitive functioning [

1], and this role was recently extended to neurocognition, including sleep physiology [

2]. Due to ethical considerations, a non-invasive polysomnography (PSG) method had been developed for studying different aspects of sleep in pet dogs (see [

3]). This method has several advantages: (1) it can be easily applied without any previous training of the subjects, (2) it causes no pain or serious stress to the animal, (3) it provides comparable macrostructural and spectral results to data from both human and mammalian PSG studies, and (4) electrode placement and data acquisition are easy to learn and carry out by following the validated protocol (cf. [

3,

4]).

While dog PSG studies are relatively easy to carry out, the field of canine sleep research faces similar practical challenges regarding data processing as human studies do. The most time-consuming part of the process is manual sleep staging (i.e., identifying the sleep stage(s) in segments of an EEG-recorded signal) and artifact rejection (i.e., eliminating artifacts resulting from muscle tone or eye movements). Manual/visual scoring is still the gold standard in human sleep studies as well, wherein a human scorer codes the PSG recordings epoch-by-epoch (usually in 20–30 s intervals) and identifies the sleep stage according to standard criteria [

5]. Several attempts have been made to automate this part of the data processing and have the (human) PSG recordings scored by a computer program (e.g., [

6,

7]).

It would reduce the cost and improve reproducibility if the automated sleep stage scoring algorithms achieved comparable accuracy to manual scoring. Even the use of semi-automatic scoring, in which the sleep–wake distinctions are made automatically and provide some quality control over the procedure, would also be beneficial and would make this time-consuming process easier. In human PSG data, there are some promising results regarding computer-assisted or automated staging technologies [

8,

9,

10] using a wide range of signal processing methods and algorithms (for a review, see [

11]).

Most of the studies focusing on the development of automated sleep stage scoring algorithms are in need of feature selection since they are not capable of running on the raw dataset. Feature generation is usually the second step after the sufficient cleaning and processing of the raw dataset; it reduces dimensionality in the dataset, which improves the speed and performance. It is an important task for several supervised machine learning models, especially in time series data feature generation [

12]. These approaches first define the most common features such as frequency, amplitude or other time-based features of EEG waveforms. In the case of neural networks, raw data are used as inputs and feature selection is included in the algorithm. For other algorithms, feature selection/feature generation is usually applied for input-preprocessing after data-cleaning. [

13,

14]. However, there is no consensus in the literature about the training process or the feature generation, and most of the studies rely on prior knowledge to compute representative features to effectively characterize EEGs [

9,

15]. These prevent the generalizability to larger datasets from various patients with different sleep patterns, and the applicability is even more limited in non-human research.

Several technical and biological artifacts, such as active power line, impedance fluctuation, eyeblink, and muscle activity might interfere with EEG signals. Similarly to sleep staging, artifact rejection can be done manually or automatically, usually by independent component analysis. Some types of artifact cannot be easily eliminated with the application of preprocessing algorithms. Therefore, there is still no consensus about the efficiency of fully automatic artifact rejection (e.g., [

16]).

It is customary in both human and dog PSG studies to report an inter-rater reliability (IRR) measurement (e.g., agreement rate (%) or Cohen’s kappa (κ)) to prove that sleep stage scoring has been done by expert coders who highly agree in their assessments (for a review, see [

17]). Such IRR scores are usually based on a randomly selected sample of the whole dataset (i.e., 2%–20% of the whole dataset). However, some studies on human sleep, that aimed to explore the reliability of visual scoring, calculated IRR based on whole dataset (for a review, see [

17]). In general, the IRR score of human PSG studies depends largely on the sleep stage (e.g., [

18]), the rater’s scoring experience [

19] and the number of active electrodes placed during the recording [

20], and there is considerable variability between the individual records being scored [

21]. The average overall IRR (κ value) is usually 0.6–0.8 (for a review, see [

17]); however, much lower agreements (0.2–0.4) can be found in several studies, especially in the NREM1 phase, (non-rapid eye movement), while for wake and REM phases, the agreement is usually higher (0.8–0.9) [

18,

20]. Danker-Hopfe and colleagues investigated the IRR between scorers from different laboratories and found similar κ values as in previous studies; however, they also studied the macrostructural pattern of the hypnograms [

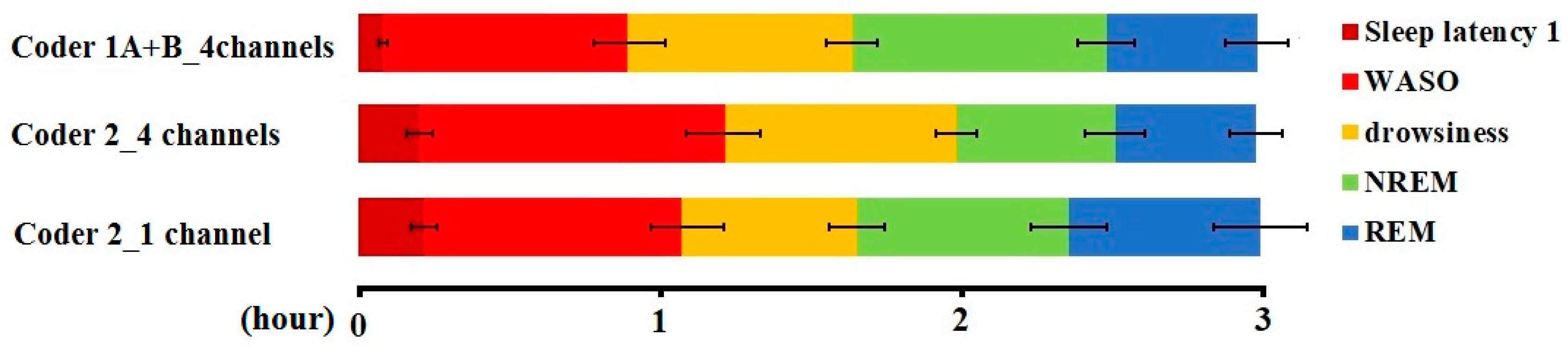

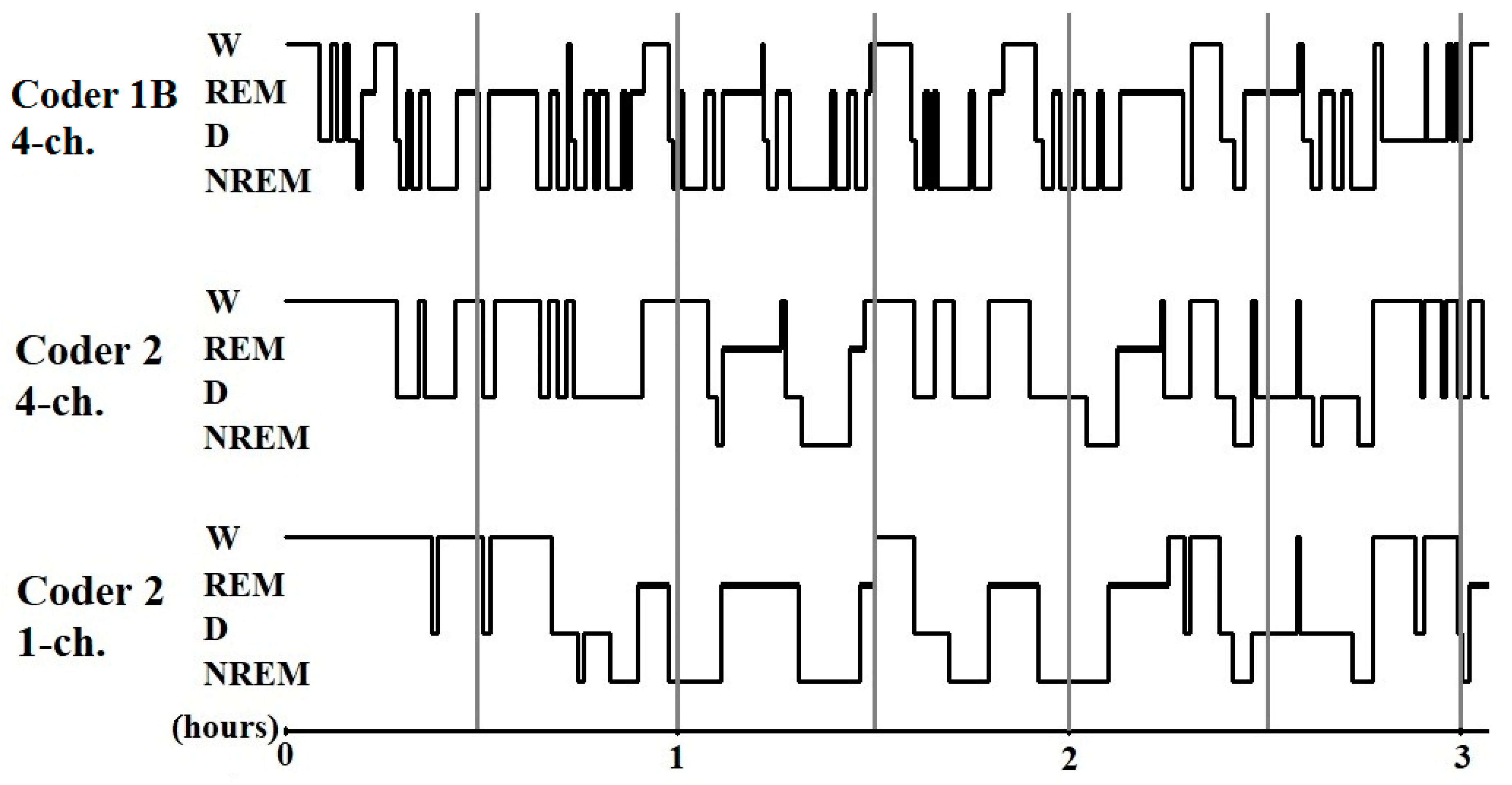

22]. They showed that despite a relatively low κ, which was found in the case of the NREM1 and NREM2 phases, the intraclass correlation of macrostructural variables (e.g., wake after sleep onset (WASO), sleep onset latency and total sleep time) was still very high (>0.86). This suggests that sleep macrostructure can be reliable even with a relatively low IRR.

Dog PSG studies so far have been conducted on relatively low samples sizes (between

N = 7 and

N = 16 individuals), and such datasets are typically scored by one coder (e.g., [

2,

23]). The reported inter-rater agreements are high (0.8–0.9), but have been performed on a very low number of randomly selected epochs. More recently, however, a dataset of over 150 dogs was used in one study [

24], with the recordings being analyzed by several different scorers with different scoring experience. There is, thus, a rising importance of carrying out in-depth analyses of within- and between-coder reliability for canine PSG scoring.

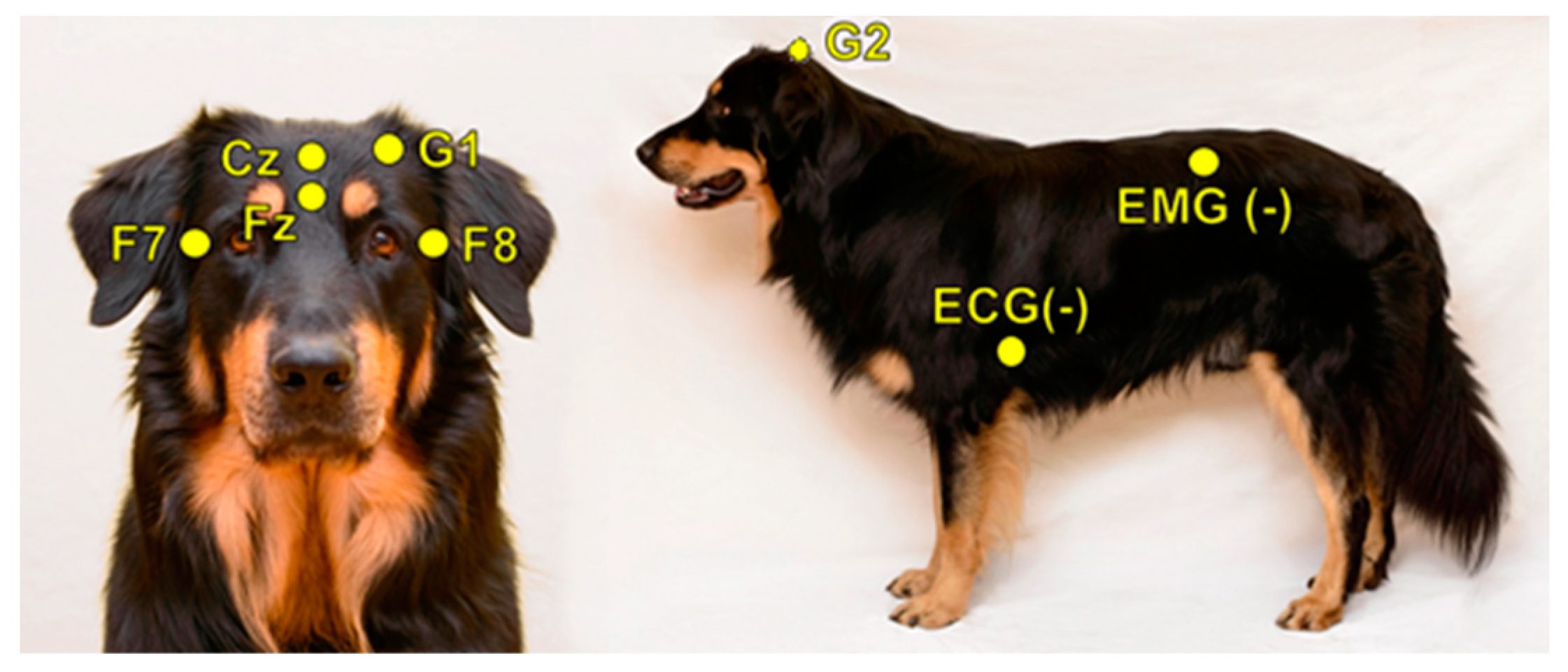

A further factor to be considered is that our canine PSG method evolved from its initial single-channel form (as described originally in [

3]) to a four channels protocol. In addition to Fz, channel Cz, F7 and F8 are also included (for a detailed description of the updated procedure, see [

24]). This four channels protocol not only allows for topographical comparisons [

24] but also provides more data for sleep stage identification. In humans, it has been shown that hypnogram scoring based on single-channel EEG, contrary to a full PSG protocol, yields different results [

20], thus the same question is highly relevant for canine sleep studies as well.

The aim of the present study is to provide a detailed descriptive analysis of reliability measures of dog sleep staging based on the non-invasive canine PSG method. Study 1 will focus on manual scoring by providing inter-rater agreement measures and error matrices for full 3 h-long recordings between coders with different scoring experience, as well as examining the possible effects of the number of visible channels used when scoring (within-coder). Importantly, the effects of any sleep stage scoring differences on the macrostructural and spectral results will also be reported. Study 2 will present the results of the first version of automatic scoring (wake versus sleep identification) of canine PSG recordings using machine learning algorithms.

3. Study 2

3.1. Materials and Methods

All available recordings were taken from the Family Dog Project database with the aim of testing the automated sleep–wake detection algorithm. All previous PSG recordings were used where the measurement was not preceded by any pre-treatment (this included recordings reported in previous publications [

3,

4,

23,

25,

30] as well as unpublished recordings, which had been scored by a total of six different scorers). A total of 304 recordings of a ca. 3 h duration each from 151 dogs (age range: 2 months–17 years, 6.44 ± 3.97 (mean ± SD); 79 females, 72 males; 97 neutered, 38 intact and 19 of unknown reproductive status; 96 purebred from 42 different breeds and 55 mongrels) were included in the final dataset. For all the recordings, the manually scored hypnogram was used as an annotation reference. PSG recordings in which the percentage of sleep label was less than 5% or the proportion of awake labels was less than 2% (

N = 3) were excluded from all analyses. Each PSG has an accompanying hypnogram containing expert annotations (visual scoring) of sleep stages. The sleep stages were scored as described in Study 1 as Wake (1), Drowsiness (2), NREM (3) and REM (4). During the modeling, the sleep stages were merged into a binary format: Sleeping (>1) and Wake (1).

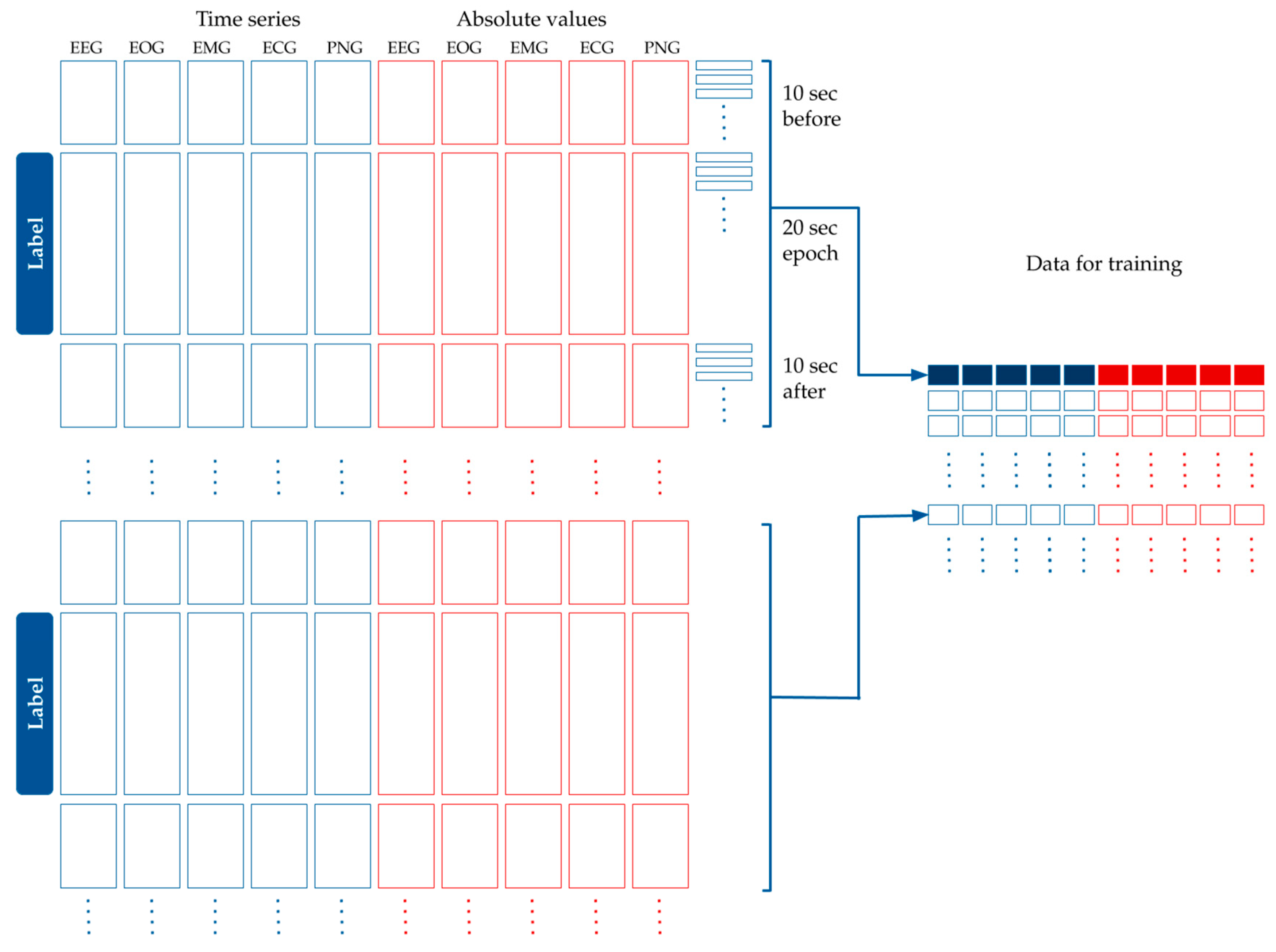

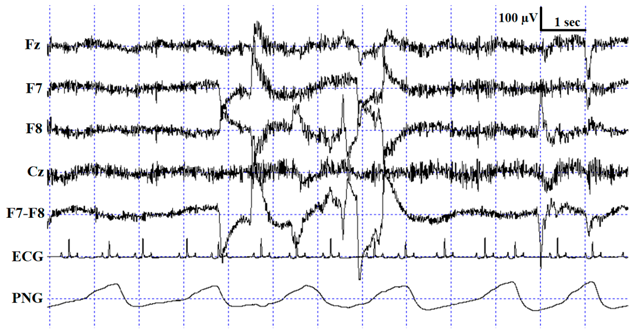

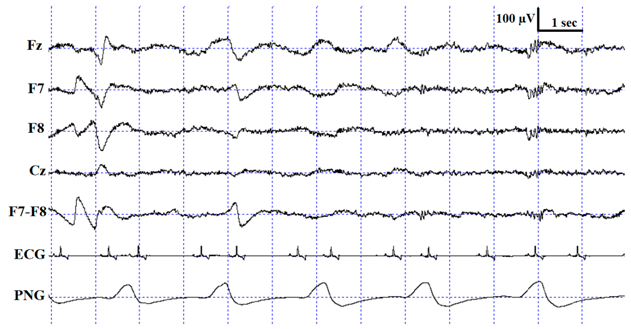

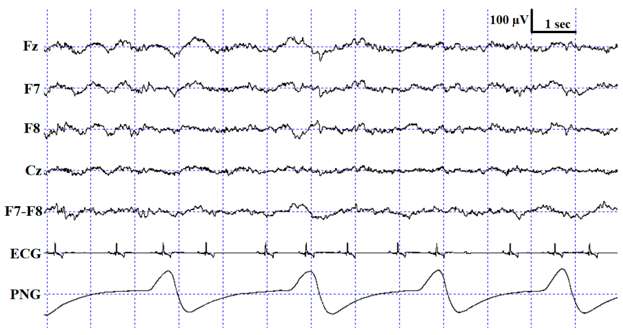

The algorithms used five time series including brain activity (EEG, Fz-G2 derivation), eye movements (EOG, F7-F8 derivation), muscle activity or skeletal muscle activation (EMG), heart rhythm (ECG) and respiration (PNG), all recorded simultaneously. The signals were obtained with a sampling rate of either 249 Hz (in case of 27 recordings) or 1024 Hz. Each recording was split into 20 s long epochs, and annotations for each epoch were added to the hypnograms.

Machine learning (ML) models were trained with the intention of using input features calculated from the five time series. The entire dataset was split randomly into training and test sets. Eighty-percent of the data were used for training, and 20%, for testing our models. Note that the data were split across recordings, i.e., a single recording either belonged to the training or the test sets. This ensured avoiding the overfitting of our models upon recording specific signals. In order to prepare the data for modeling, the following processing and feature generation steps were applied:

In order to reduce noise in the data, each time series was standardized and split into 100 ms intervals, and the mean value was used for each interval.

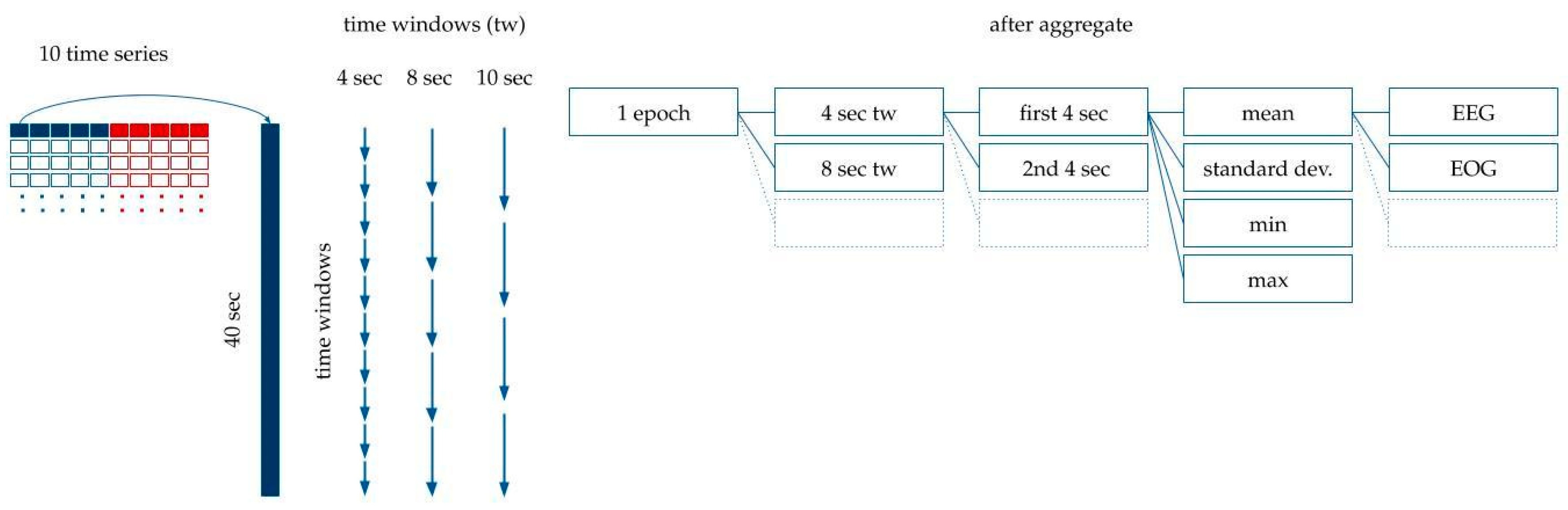

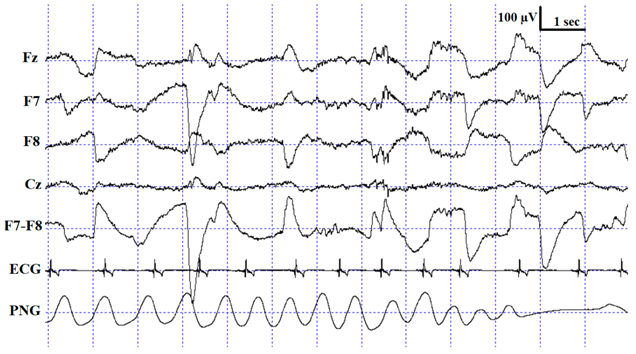

Five more time series were added that contained the absolute value of the original time series, as seen in

Figure 4.

Each label belonged to one 20 s-long epoch. We had calculated the features for modeling based on the assumption that adjacent epochs might contain information about the current epoch. Thus, we added the 10 s-long period before the epoch, and the 10 s-long period after the epoch to the 20 s-long period of the epoch. This 40 s-long interval was used for feature generation (see

Figure 4).

Since the initial averaging was done per 100 milliseconds, 10 s are represented by 100 data points. Each epoch label hence corresponds to 400 data points of the five time series from the raw data plus five time series for their absolute values (see

Figure 4).

Finally, features were generated based on the 400 data points per time series per epoch.

We split the 40 s extended epochs into time windows with a set of different lengths, tw, between 1 and 40. For example, in the case of tw = 4, we created 10 subintervals within the 40 s-long intervals belonging to the epoch.

The mean, standard deviation, maximum and minimum were calculated within each time window (see

Figure 5). For example, with

tw = 4 s, descriptive statistics of the 10 subintervals were generated.

The final dataset contains 138,962 records in the training dataset and 32,716 records in the test dataset.

To evaluate the quality of the prediction on the test set, the area under the curve (AUC) was used [

31]. AUC is a classification metric that shows how much the model is capable of distinguishing between classes. The higher the AUC (between 0.5 and 1), the better the model is at distinguishing between classes.

3.2. Modeling, Results and Discussion

The first machine learning model that we used is logistic regression (LogReg), a linear model for binary classification [

32]. The model fits a linear decision boundary between the classes. The logistic regression models were trained by using the scikit-learn Python library [

33] with default parameters. The feature importance values were calculated by taking the absolute values of the linear model coefficients. As indicated in

Table 8, the standard deviation calculated from the absolute values of the EEG signal and the PNG are the most important. The best performing model was found when all the attributes of the 5, 10, 20 and 40 s resolutions were used. The AUC of the best model was 0.864.

Our second learning machine was gradient boosting trees (GBTs), which recently became popular for supervised learning tasks [

34]. In a GBT, we are creating decision trees in an iterative way to incrementally improve its predictive performance. To reduce the risk of overfitting, a GBT classifier in this paper is implemented using the scikit-learn library using the following parameters: number of the decision trees, 200; learning rate, 0.1; maximum depth of each decision tree, 2. GBT achieved an AUC of 0.872 when trained on the same dataset as the logistic regression.

The GBT model outputs feature importance values based on the number of times it uses a given feature when building the decision trees. As we can see in

Table 9, the EEG signal is also an important attribute in the GBT, but in this model, the EOG is even more important. Contrarily to logistic regression, GBT uses statistics of the original signal.

As our final experiment, convolutional neural networks (CNN) were trained on the data. Time series can be naturally handled with CNN architectures. Hence, in case of the CNN, we were using the raw time series with the 400 data points without any feature generation. We used Keras [

35] to create the model and collect the experimental results, with Tensorflow [

36] as a backend. Our model used a three-layer convolutional neural network with ever shorter convolutional window sizes (10, 5 and 2) in successive convolutional layers. Thus, convolutional operations were performed first in 1 s intervals and then at 5 and 10 s intervals. The convolutional operations approximate the different aggregated statistics computed for logistic regression and GBT, but here, we let the model learn the operation (mean, min, max or any function) accurately.

The model was trained with the following parameters: kernel-sizes of the convolutional layers, 10, 5, 2; number of iterations, 9; batch size, 64; starting learning rate, 0.01; optimizer, Adam. The AUC of the CNN was 0.880.

Finally, the predictions of the above three models were averaged to gain the best predictive performance. The combination of the LogReg, GBT and the CNN performed better than the individual models and resulted in a final 0.890 AUC score.

4. Discussion

The present study provided reliability measures for both manual and automated sleep stage scoring in non-invasively recorded dog PSG. Previous studies using the protocol for canine PSG by Kis et al. [

3] all used manual scoring to obtain hypnograms, and the present findings confirm that despite disagreements at various levels, this is a valid approach resulting in reliable macrostructural and EEG spectral data.

Automated scoring is often preferred over visual inspection due to its objective nature (e.g., arguments in [

37], but see [

38]). In canine sleep research, sleep spindle detection has been successfully automated in recent years [

24,

39]; although the method was not validated against visual detection, external validity was proven at different levels. While there is no consensus regarding sleep spindle identification among human researchers either (both automated and manual methods are in use—e.g., [

37,

38]), sleep stage scoring is almost exclusively carried out manually, despite continued efforts to develop a fully automated method (e.g., [

7]). The machine learning algorithm presented in this paper performed relatively well for binary classification (distinction between sleep and awake), but the next step would be to explore the possibility of multiclass annotation (distinguishing the different sleep stages) by defining more elaborative features and building more accurate multiclass models. At the current stage, the model could be of practical use for accelerating data processing via a semi-automated method where sleep–wake distinctions are made automatically whereas sleep stages (D, NREM, REM) are scored manually. Given the huge individual variability that characterizes dogs at the physiological level (e.g., [

40,

41]), such semi-automated processes seem to be the best practice so far and have been implemented, e.g., for heart-rate analysis [

41,

42].

In addition, the current machine learning experiments have made little use of the fact that data consist of time series and approached the task solely as a classification problem. An interesting area of research would be to approach this machine learning task with an increased focus on time series analysis and to incorporate the regularities in the sequence of sleep stages into the algorithm. Since most dog sleep research studies apply a within-subject design (with an adaptation occasion followed by two pre-sleep treatments administered in a random order), advantage can be taken of the fact that the algorithms can be trained with the first recordings of the dogs and then be used for scoring later recordings of the same dogs.

Results from Study 1, showing that (manual) sleep staging is least reliable when transitions occur between stages, could also be taken into consideration for optimizing a semi-automated method. While cutting out the epochs before and after a transition seems to somewhat increase the reliability of the scoring, it also results in additional data loss, and this trade-off relationship should thus be further examined before implementing it into practice. In addition, considering the higher disagreement between coders with less experience, it might be optimal to train/adjust the learning algorithms separately for a given coder if a certain number of coded recordings are available. In line with our assumption, we found the highest agreement within coders despite the fact that the number of visible EEG channels differed between the two intra-observer scoring events. Presumably, this agreement would be even higher with an identical setup of visible channels during scoring. For future canine sleep studies, we therefore suggest one scorer only when coding PSG recordings for comparisons and using the same set of visible EEG channels during visible sleep staging.

There seems to be a difference in reliability between the different sleep stages. While the most optimal automated algorithm achieved a very high (AUC > 0.88) agreement between sleep and wake, manual scoring seems to struggle most with the distinction between W and D, which is in line with reports on human data, where Stage 1 NREM sleep seems to be the most problematic with regards to scoring (for a review, see [

17]). This, again, suggests that the implementation of an objective and automated method for sleep–wake distinction might cause a significant improvement in reliability. Alternatively, when in a human study authors decided to eliminate Stage 1 NREM completely from the PSG recordings, it resulted in an increased κ value [

20]. Eliminating D from dogs’ hypnograms in future studies might be a feasible option but needs further investigation. The mere existence of the D stage in carnivore and insectivore species is suggestive for the lack of a clear-cut distinction between sleep and awake [

43], thus a strict categorization during hypnogram scoring is likely not ideal. Furthermore, the current canine PSG protocol does not make it possible to record from occipital derivations where alpha activity is best observed in humans [

20], which would be crucial for recognizing quiet W/D stages. Previous studies using the same non-invasive EEG method and hypnogram scoring protocol seem to indicate that dogs’ D reacts to pre-sleep treatments mostly in the same ways as NREM, although the results are often less clear for that stage. For example, relative D and NREM durations were both higher after positive as opposed to negative social interaction, whereas relative REM duration clearly showed the opposite trend [

30]. Following a behaviorally active day, dogs showed a marked increase in NREM duration compared to that following a behaviorally passive day. Meanwhile, the same tendency was not significant for D in the two conditions [

3]. According to another study with a different protocol [

4], however, following an active day, dogs were more likely to have an earlier drowsiness and NREM. At the same time, dogs spent less time in D and more time in NREM and REM, compared to after a normal day. The spectral analysis of the D stage is additionally problematic due to the high proportion of muscle tone artifacts, which led to the exclusion of this stage from an analysis showing that learning new commands had an effect on the EEG spectrum in dogs [

23].

Our results show that similarly to in human studies on sleep, the IRR of canine PSG analysis is highly dependent on the scorers’ prior experience. It has been proposed that ongoing training and scoring experience at the time of the experiment has the potential to reduce differences between the scorers of human PSG recordings [

28]. While there are currently no standard criteria for how many PSG recordings a person needs to code in order to become a so-called expert, in this study, all three coders had coded at least 80 PSG recordings prior to the scorings included in the analysis. Some suggest, however, that this requirement may be not enough [

28]. In spite of these limitations, high agreement between expert scorers was found for the identification of NREM (

Table 3,

Table 4 and

Table 5). Some of the more recent work on dog PSG data exclusively examines NREM traces [

24,

26,

39], while comparisons of the relative ratio of REM to NREM duration [

4,

30] are also relatively safe from the difficulty of distinguishing D from W. This suggests that visual scoring in the dog can be sufficient with regard to many questions.

Another possible method for obtaining better agreement in canine sleep staging would be the application of an alternative scoring system, which has been proposed by the American Academy of Sleep Medicine (AASM) [

44]. Compared to the most widely accepted standard described by Rechtschaffen and Kales [

5], the AASM classification comprises alternative EEG derivations, merging non-REM Stages 3 and 4 into N3, and recommends different sampling rates and filter settings for PSG reporting. The AASM system has been revised and reviewed several times and might have the potential to revolutionize visible and automated canine PSG scoring as well. Alternatively to distinct sleep-staging, researchers frequently evaluate human PSG data based on quantitative sleep parameters (amplitude- and frequency-mapping etc.) or by feature-plots (arousals, slow-wave activity, spindle-plots etc.). Considering and describing sleep as a continuum can be a feasible alternative in canine sleep research as well, especially in automated wake–sleep identification (e.g., [

45]).

While there is evidence supporting the external validity of the canine PSG protocol [

3], as the data obtained have revealed human-like memory consolidation and emotion processing during sleep, there is still room for methodological improvement in the field. The present study explores this space by examining the reliability of the visual sleep stage scoring applied thus far and also by offering and evaluating a first step towards a semi-automated scoring method.