Developing and Evaluating Poultry Preening Behavior Detectors via Mask Region-Based Convolutional Neural Network

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Housing, Animals and Management

2.2. Data Acquisition

2.3. Preening Behavior Definition and Labelling

2.4. Network Description

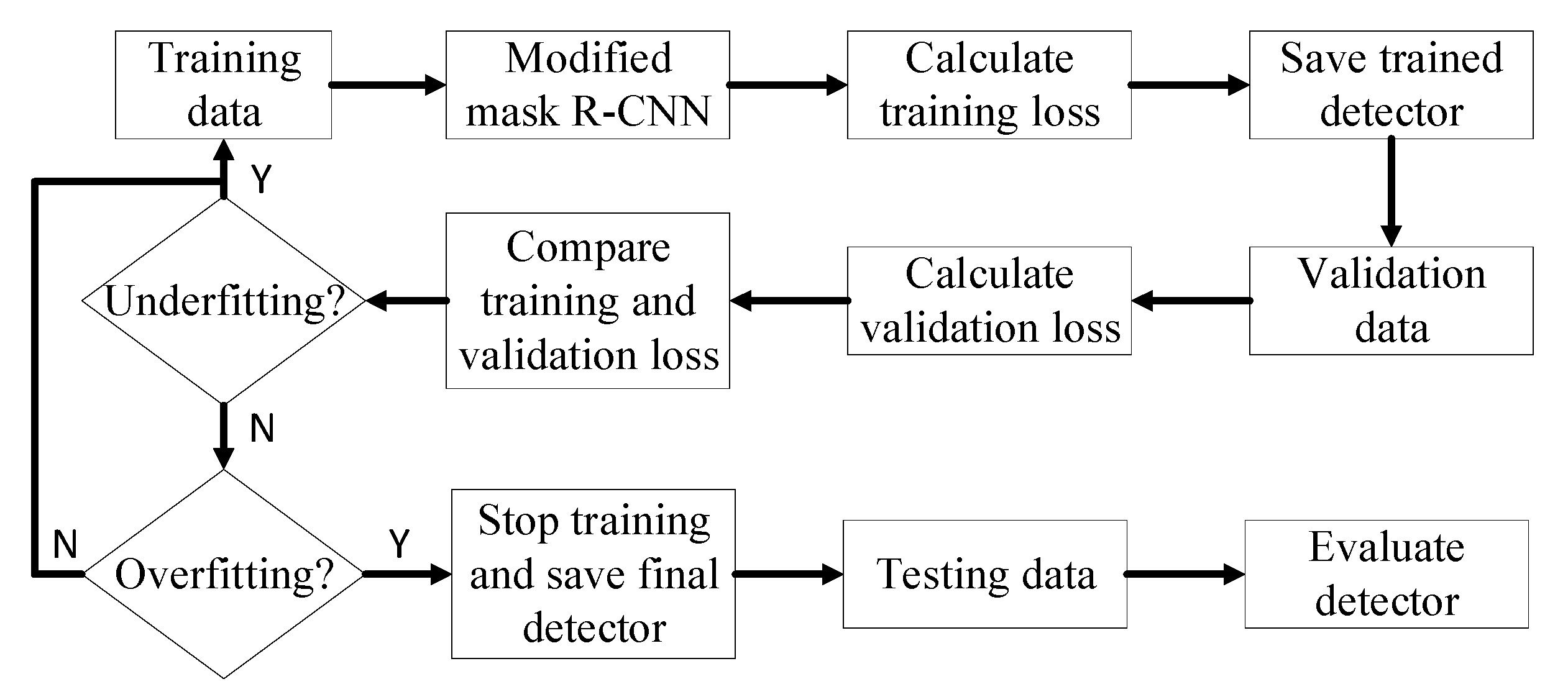

2.5. General Workflow of Detector Training, Validation and Testing

2.6. Modifications for Detector Development

2.6.1. Residual Network Architecture

2.6.2. Pre-Trained Weight

2.6.3. Image Resizer

2.6.4. Proposed Regions of Interest

2.7. Evaluation Metrics

2.8. Sample Detection

3. Results

3.1. Sample Detection

3.2. Performance of Various Residual Networks

3.3. Performance of the Detectors Trained with Various Pre-Trained Weights

3.4. Performance of Various Image Resizers

3.5. Performance of the Detectors Trained with Various Numbers of Regions of Interest

3.6. Preening Behavior Measurement via the Trained Detector

4. Discussion

4.1. Ambiguous Preening Behavior

4.2. Segmentation Method Comparison

4.3. Architecture Selection

4.4. Performance of Various Residual Networks

4.5. Performance of the Detectors Trained with Various Pre-Trained Weights

4.6. Performance of Various Image Resizers

4.7. Performance of the Detectors Trained with Various Numbers of Regions of Interest

4.8. Preening Behavior Measurement with the Trained Detector

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Definition | Abbreviation | Definition |
|---|---|---|---|
| AP | Average precision | MIOU | Mean intersection over union |
| ARS | Agricultural Research Service | MP | Max pooling |
| Bbox | Bounding box | NMS | Non-maximum suppression |
| Box1–Box5 | Proposed boxes with various scales and ratios after the region proposal network | | Interpolated precision in the precision-recall curve when recall is r |
| C1–C5 | Convolutional stages 1 to 5 in the residual network | | Measured precision at recall |
| COCO | Common objects in context | P2–P6 | Feature maps in the feature pyramid network |
| Conv. | Convolution | ReLU | Rectified linear unit |
| CNN | Convolutional neural network | ResNet | Residual network |
| Faster R-CNN | Faster region-based convolutional neural network | ResNet18–ResNet1000 | Residual network with 18–1000 layers of convolution |
| FC | Fully-connected | ROI | Regions of interest |
| FCN | Fully-connected network | RPN | Region proposal network |
| FN | False negative | TN | True negative |
| FP | False positive | TP | True positive |
| FPN | Feature pyramid network | Ups. | Upsampling |
| IOU | Intersection over union | USA | United States of America |
| Mask R-CNN | Mask region-based convolutional neural network | USDA | United States Department of Agriculture |
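Several of the abbreviations above (TP, FP, TN, FN, IOU, interpolated precision) are the building blocks of the evaluation metrics reported in the result tables. The following is a minimal sketch of how these quantities are commonly computed, assuming the standard textbook definitions and the Pascal VOC style of interpolation; the paper's own equations are not reproduced in this section, and the `Box` type and all function names are hypothetical helpers introduced only for illustration.

```python
from typing import NamedTuple, Sequence


class Box(NamedTuple):
    """Axis-aligned box (x1, y1, x2, y2); hypothetical helper, not from the paper."""
    x1: float
    y1: float
    x2: float
    y2: float


def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def box_iou(a: Box, b: Box) -> float:
    """IOU: overlap area divided by union area of two boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    return _ratio(inter, area_a + area_b - inter)


def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard metrics from true/false positive/negative counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": _ratio(tn, tn + fp),
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "f1": _ratio(2 * precision * recall, precision + recall),
    }


def interpolated_precision(
    recalls: Sequence[float], precisions: Sequence[float], r: float
) -> float:
    """Highest measured precision among points whose recall is >= r (0.0 if none)."""
    return max((p for rec, p in zip(recalls, precisions) if rec >= r), default=0.0)
```

In this sketch a detection would count as a TP when its IOU with a labeled preening hen exceeds a chosen threshold; the threshold itself is an assumption and is not stated in this section.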


| Items | Training | Validation | Testing |
|---|---|---|---|
| Hen age (day) | 266 | 267 | 268 |
| Images | 1175 | 102 | 423 |
| Number of preening hens | 8464 | 762 | 2788 |
| Number of non-preening hens | 26,786 | 2298 | 9902 |
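The counts in the dataset table above can be turned into split proportions and class balance with a few lines of arithmetic. The sketch below uses only the numbers from that table; the dictionary layout and the `summarize` function are hypothetical names chosen for illustration.

```python
# Counts copied from the dataset table (images and labeled hens per split).
SPLITS = {
    "training": {"images": 1175, "preening": 8464, "non_preening": 26786},
    "validation": {"images": 102, "preening": 762, "non_preening": 2298},
    "testing": {"images": 423, "preening": 2788, "non_preening": 9902},
}


def summarize(splits: dict) -> dict:
    """Per-split share of images, labeled hens per image, and preening share."""
    total_images = sum(s["images"] for s in splits.values())
    summary = {}
    for name, s in splits.items():
        hens = s["preening"] + s["non_preening"]
        summary[name] = {
            "image_share": s["images"] / total_images,
            "hens_per_image": hens / s["images"],
            "preening_share": s["preening"] / hens,
        }
    return summary


for name, row in summarize(SPLITS).items():
    print(
        f"{name:<10} images={row['image_share']:.1%} "
        f"hens/image={row['hens_per_image']:.1f} "
        f"preening={row['preening_share']:.1%}"
    )
```

With these counts, every split works out to 30 labeled hens per image, and preening hens make up roughly 22% to 25% of the labels, so the two classes are imbalanced by about three to one.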

| Stage of Convolution | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 | ResNet1000 |
|---|---|---|---|---|---|---|
| C1 | 7 × 7, 64, stride 2; 3 × 3 max pooling, stride 2 | | | | | |
| C2 | | | | | | |
| C3 | | | | | | |
| C4 | | | | | | |
| C5 | | | | | | |
| Average pooling, 1000-d FC, softmax | | | | | | |

| ResNet | MIOU (%) | Precision (%) | Recall (%) | Specificity (%) | Accuracy (%) | F1 Score (%) | AP (%) | Processing Speed (ms·image⁻¹) |
|---|---|---|---|---|---|---|---|---|
| ResNet18 | 87.4 | 87.2 | 87.1 | 96.7 | 94.7 | 87.1 | 83.6 | 364.8 |
| ResNet34 | 87.4 | 88.5 | 86.2 | 97.0 | 94.8 | 87.3 | 83.1 | 378.4 |
| ResNet50 | 87.8 | 84.4 | 85.3 | 95.7 | 93.5 | 84.9 | 81.4 | 342.9 |
| ResNet101 | 87.4 | 87.7 | 88.4 | 96.7 | 95.0 | 88.1 | 83.5 | 386.0 |
| ResNet152 | 87.4 | 83.1 | 90.1 | 95.1 | 94.1 | 86.5 | 85.7 | 387.7 |
| ResNet1000 | 87.4 | 84.5 | 90.8 | 95.6 | 94.6 | 87.6 | 85.6 | 393.2 |
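The F1 scores in the residual network table can be cross-checked against the precision and recall columns. The sketch below assumes the usual definition of F1 as the harmonic mean of precision and recall, which is not spelled out in this section; the tuple layout and the function name `f1_from_pr` are illustrative only.

```python
# (precision %, recall %, reported F1 %) copied from the ResNet comparison table.
RESNET_ROWS = {
    "ResNet18": (87.2, 87.1, 87.1),
    "ResNet34": (88.5, 86.2, 87.3),
    "ResNet50": (84.4, 85.3, 84.9),
    "ResNet101": (87.7, 88.4, 88.1),
    "ResNet152": (83.1, 90.1, 86.5),
    "ResNet1000": (84.5, 90.8, 87.6),
}


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for name, (p, r, reported) in RESNET_ROWS.items():
    recomputed = f1_from_pr(p, r)
    print(f"{name:<10} recomputed={recomputed:.2f} reported={reported:.1f}")
```

The recomputed values agree with the reported ones to within about 0.1 percentage points, which is consistent with the published precision and recall having been rounded to one decimal place.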

| Training | MIOU (%) | Precision (%) | Recall (%) | Specificity (%) | Accuracy (%) | F1 Score (%) | AP (%) | Processing Speed (ms·image⁻¹) |
|---|---|---|---|---|---|---|---|---|
| w/o pre-trained weights | 88.7 | 80.3 | 92.3 | 93.9 | 93.6 | 85.9 | 87.5 | 379.0 |
| w/ pre-trained COCO weights | 87.2 | 83.4 | 91.3 | 94.5 | 93.8 | 87.2 | 86.7 | 382.9 |
| w/ pre-trained ImageNet weights | 83.6 | 81.2 | 83.1 | 94.9 | 92.5 | 82.2 | 80.0 | 413.7 |

| Mode of Image Resizer | MIOU (%) | Precision (%) | Recall (%) | Specificity (%) | Accuracy (%) | F1 Score (%) | AP (%) | Processing Speed (ms·image⁻¹) |
|---|---|---|---|---|---|---|---|---|
| None | 87.2 | 85.3 | 88.4 | 96.0 | 94.4 | 86.8 | 84.6 | 377.7 |
| Square | 87.6 | 86.9 | 86.3 | 96.6 | 94.5 | 86.6 | 86.7 | 377.8 |
| Pad64 | 87.0 | 84.2 | 90.1 | 95.6 | 94.5 | 87.0 | 86.3 | 383.3 |

| Number of ROI | MIOU (%) | Precision (%) | Recall (%) | Specificity (%) | Accuracy (%) | F1 Score (%) | AP (%) | Processing Speed (ms·image⁻¹) |
|---|---|---|---|---|---|---|---|---|
| 30 | 87.5 | 92.5 | 79.3 | 98.2 | 94.2 | 85.4 | 75.8 | 378.2 |
| 100 | 87.1 | 84.2 | 89.1 | 95.5 | 94.2 | 86.6 | 84.9 | 379.4 |
| 200 | 86.9 | 85.8 | 89.5 | 96.0 | 94.6 | 87.6 | 85.4 | 378.1 |
| 300 | 87.2 | 85.7 | 87.2 | 96.3 | 94.4 | 86.4 | 83.8 | 390.7 |
| 400 | 87.6 | 82.7 | 90.3 | 95.0 | 94.0 | 86.3 | 86.5 | 382.0 |
| 500 | 87.0 | 82.8 | 90.7 | 95.0 | 94.1 | 86.6 | 86.2 | 378.8 |
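Processing speed is reported in milliseconds per image, which can be easier to compare with camera frame rates once converted to images per second. The sketch below performs that unit conversion on the values from the ROI table; the function name `ms_per_image_to_fps` is a hypothetical helper, and nothing here reflects how the authors measured timing.

```python
# Processing speed (ms per image) by number of ROI, copied from the table above.
ROI_SPEED_MS = {30: 378.2, 100: 379.4, 200: 378.1, 300: 390.7, 400: 382.0, 500: 378.8}


def ms_per_image_to_fps(ms_per_image: float) -> float:
    """Convert milliseconds per image into images (frames) per second."""
    if ms_per_image <= 0:
        raise ValueError("processing time must be positive")
    return 1000.0 / ms_per_image


for n_roi, ms in ROI_SPEED_MS.items():
    print(f"ROI={n_roi:<4} {ms:.1f} ms/image = {ms_per_image_to_fps(ms):.2f} images/s")
```

All six settings fall between roughly 2.5 and 2.7 images per second, so in this table the number of ROI changes throughput far less than it changes precision and recall.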

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, G.; Hui, X.; Lin, F.; Zhao, Y. Developing and Evaluating Poultry Preening Behavior Detectors via Mask Region-Based Convolutional Neural Network. Animals 2020, 10, 1762. https://doi.org/10.3390/ani10101762