1. Introduction

Microbial fermentation has become a cornerstone of modern biotechnology, enabling the production of probiotics, enzymes, amino acids, bioactive peptides, and pharmaceuticals on an industrial scale. The global microbial fermentation technology market is projected to surpass USD 36 billion by 2025, reflecting both rising consumer demand and expanding industrial applications [1]. Among probiotic strains, L. plantarum has emerged as one of the most widely utilized species in functional foods and nutraceuticals because of its strong acid tolerance, broad metabolic versatility, and health-promoting effects [2,3,4]. Despite its industrial significance, the fermentation of L. plantarum remains challenging to standardize due to nonlinear interactions among multiple parameters such as pH, temperature, inoculum density, and medium composition. This inherent complexity often results in poor reproducibility, inconsistent yields, and financial losses for manufacturers.

Conventional strategies for fermentation monitoring, such as offline sampling, endpoint CFU measurements, or physicochemical assessments, are inherently reactive and frequently fail to capture the dynamic evolution of microbial growth [5]. Although statistical approaches like Response Surface Methodology (RSM) have been applied to optimize culture conditions, they remain limited by assumptions of linearity and cannot adequately model the complex temporal behavior of microbial systems [6]. Consequently, there is an urgent need for advanced predictive tools that can provide early, actionable insights to prevent failure and maximize productivity.

Recent advances in artificial intelligence (AI) have demonstrated significant potential in biological process modeling. Artificial Neural Networks (ANNs) have been employed to optimize exopolysaccharide production [7], predict GABA synthesis in soymilk fermentation [8], and enhance cellulase yields under solid-state fermentation [9]. Hybrid optimization strategies, such as combining ANN with Genetic Algorithms (GA) or RSM, have further improved multi-variable optimization of lactic acid bacteria processes [10,11]. More recently, AI-driven approaches have extended to morphological classification of microbial images [12], the optimization of recombinant protein production [13], and even predictive models for food safety applications [14]. These studies collectively highlight the versatility of AI as a transformative tool in fermentation science.

Convolutional neural networks (CNNs), originally developed for image recognition, have proven particularly adept at extracting patterns from high-dimensional and structured data [15]. Their ability to capture local dependencies and temporal trends makes them highly suitable for analyzing fermentation time-series data. Preliminary studies have shown that CNNs outperform traditional statistical models and even recurrent neural networks in discriminating microbial growth trajectories and predicting process outcomes [9,16,17,18]. This suggests that CNNs may serve as effective “soft sensors” for bioprocess control, capable of delivering accurate early-stage predictions that traditional models cannot provide.

Despite these promising advances, most existing AI applications in fermentation rely on complete fermentation profiles or final-stage data, limiting their practical utility in real-time decision-making. Few studies have systematically investigated the use of CNNs for early-stage prediction of probiotic fermentation success and the implementation of timely corrective interventions. In particular, there is a lack of integrative frameworks that combine predictive modeling with experimental validation in industrially relevant probiotic strains such as L. plantarum.

In this study, we propose a CNN-based predictive framework that utilizes the first 24 h of L. plantarum fermentation data to classify process outcomes into successful, semi-successful, or failed categories. By integrating feature selection, time-series modeling, and validation experiments, we demonstrate the capacity of CNNs to provide robust early-stage predictions and enable effective real-time interventions. This work contributes a novel, scalable, and intelligent approach for probiotic fermentation control, with the potential to improve product consistency, reduce batch failure, and enhance overall industrial efficiency.

2. Materials and Methods

2.1. Bacterial Strain and Culture Conditions

The strain L. plantarum LP198 was obtained in lyophilized powder form from SYNBIO TECH, Inc. (Kaohsiung, Taiwan). The strain was rehydrated and activated in de Man–Rogosa–Sharpe (MRS) medium (Cyrus Bioscience, Taipei, Taiwan) at 37 °C for 48 h, and then subcultured at a 10% (v/v) inoculum into fresh MRS medium to generate sufficient biomass for fermentation. Viability was confirmed by colony-forming unit (CFU) enumeration using plate counts on MRS agar, according to the CNS 14760 N6371 protocol for lactic acid bacteria [17]. Long-term preservation was achieved by storage in 65% glycerol at −80 °C or under manufacturer-recommended lyophilized conditions, with activation steps performed prior to each experiment to ensure cell viability and consistency. As illustrated in Figure 1, this strain activation and culture process constituted the initial stage of the workflow, which subsequently integrated fermentation setup, data acquisition, preprocessing, and deep learning prediction.

2.2. Fermentation Setup and Data Acquisition

Fermentation experiments were conducted in 12 L bench-top bioreactors (BTF-A, Biotop, Nantou, Taiwan) with a working volume of 10 L, operated under batch conditions. Each vessel was equipped with embedded pH, temperature, and dissolved oxygen (DO) probes and connected to a computer-based data acquisition system for continuous signal logging. The fermentation process was maintained at 37 °C, with agitation at 200 rpm and an initial pH adjusted to 6.5. Activated seed cultures of L. plantarum LP198 were inoculated at 10% (v/v), corresponding to approximately 1 × 10⁷ CFU/mL at the start of fermentation. Dissolved oxygen, pH, and temperature were automatically monitored at 5 min intervals throughout the 48 h fermentation period.

Outlier values exceeding three standard deviations from the mean were removed, and missing values were linearly interpolated based on adjacent time points. To focus on early-stage predictive features, all datasets were truncated to the first 24 h (288 time points). Subsequently, the cleaned data were normalized to zero mean and unit variance to ensure comparability across runs. As shown in Figure 2, the preprocessing pipeline comprised raw signal acquisition, outlier removal, interpolation, truncation to 24 h, and normalization for subsequent model training.
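The four preprocessing steps can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function name `preprocess_signal`, the constants, and the choice to compute the 3-SD threshold over the whole logged trace are hypothetical, and the z-score normalization follows the description in this subsection.

```python
import numpy as np

SAMPLES_PER_HOUR = 12            # one reading every 5 min
WINDOW = 24 * SAMPLES_PER_HOUR   # first 24 h -> 288 time points


def preprocess_signal(raw, window=WINDOW, n_sd=3.0):
    """Clean one sensor trace: 3-SD outlier removal, linear interpolation,
    truncation to the early window, then z-score normalization."""
    x = np.asarray(raw, dtype=float).copy()

    # 1) Mark values more than n_sd standard deviations from the mean as missing.
    mean, sd = np.nanmean(x), np.nanstd(x)
    if sd > 0:
        x[np.abs(x - mean) > n_sd * sd] = np.nan

    # 2) Fill missing values by linear interpolation between valid neighbours.
    idx = np.arange(x.size)
    valid = ~np.isnan(x)
    x = np.interp(idx, idx[valid], x[valid])

    # 3) Keep only the first 24 h of the run.
    x = x[:window]

    # 4) Normalize to zero mean and unit variance (constant traces stay at 0).
    sd = x.std()
    return (x - x.mean()) / sd if sd > 0 else x - x.mean()
```

Applied to a 48 h trace of 576 readings, the function returns 288 normalized values. Stacking the outputs for each retained variable would give one model input per run.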

Software and data management. All bioprocess signals were digitally logged through the BTF-A controller (Biotop, Taiwan) and exported as comma-separated value (CSV) files for downstream analysis. Data management and preprocessing, including outlier filtering, linear interpolation, 24 h truncation, and normalization, were conducted in Python (v3.9) (Anaconda distribution) using the Pandas (v2.2.3), NumPy (v1.23.0), and SciPy (v1.16.2) libraries, with visualization performed in Matplotlib (v3.3.2). Feature ranking and statistical utilities were implemented in scikit-learn (v1.2.2), and model development was carried out using TensorFlow/Keras (v2.12) as described in Section 2.5. These tools collectively ensured standardized data handling, transparency, and full reproducibility of the analytical workflow.

2.3. Dataset Labeling and Classification Criteria

For each fermentation run, process data, including time, pH, temperature, dissolved oxygen, and agitation speed, were logged digitally. Final microbial counts were used to categorize the fermentation outcomes into four classes: (1) Successful: ≥3 × 10⁹ CFU/mL; (2) Semi-successful: 1–3 × 10⁹ CFU/mL; (3) Unsuccessful: <1 × 10⁹ CFU/mL; and (4) Complete failure: <1 × 10⁷ CFU/mL or undetectable. The classification was based on CFU counts after 48 h fermentation. The final dataset included 52 experiments (40 newly conducted and 12 from prior studies), with 38 used for training and 9 for testing. The complete failure group was excluded from training. The total of 52 fermentation runs was not derived from a formal design of experiments but from an empirical collection of available batch data under consistent operating parameters. Among them, 40 new runs were performed to encompass representative variations in early-stage process conditions (e.g., pH, temperature, dissolved oxygen), while 12 additional datasets from prior studies were incorporated to enhance diversity and model generalization. This approach reflects practical process variability rather than factorial-level combinations, ensuring that the CNN model learned from biologically and operationally realistic conditions.
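The labeling rule can be written as a small threshold function. The sketch below is illustrative only: the function name and the class strings are hypothetical, and the handling of boundary values (exactly 1 × 10⁹ CFU/mL counted as semi-successful, undetectable counts passed as `None`) is an assumption, since the text does not spell these cases out.

```python
def label_outcome(final_cfu_per_ml):
    """Map a 48 h CFU/mL count to one of the four outcome classes."""
    if final_cfu_per_ml is None or final_cfu_per_ml < 1e7:
        return "complete_failure"   # <1e7 CFU/mL or undetectable
    if final_cfu_per_ml < 1e9:
        return "unsuccessful"       # below 1e9 CFU/mL
    if final_cfu_per_ml < 3e9:
        return "semi_successful"    # 1e9 up to (not including) 3e9 CFU/mL
    return "successful"             # >= 3e9 CFU/mL
```

Checking the most severe class first keeps the overlapping thresholds for "unsuccessful" and "complete failure" unambiguous.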

2.4. Data Preprocessing and Feature Selection

Raw time-series data were preprocessed in four steps. Outliers, defined as observations exceeding ±3 SD from the batch-specific mean, were flagged and removed prior to downstream processing (Figure 2b). Missing values were imputed by linear interpolation along the time axis using adjacent time points, ensuring continuity of local signal dynamics (Figure 2c). To enable early prediction, signals were truncated to the first 24 h of fermentation (288 points at 5 min sampling; Figure 2d), corresponding to the model’s early decision window. Finally, each truncated sequence was normalized to the range [0–1] to standardize the scale across variables (Figure 2e).

For feature selection, Signal-to-Noise (S/N) ratio analysis was applied to identify features with the highest discriminatory power between outcome classes [18]. Features with minimal variation or constant values across all experiments (e.g., fixed setpoints) were removed. The final input dataset consisted of three key features: pH, actual temperature, and time.

Signal-to-Noise (S/N) Ratio for Feature Ranking

The S/N ratio was adopted as a feature-ranking metric because it provides a robust, model-independent measure of discriminative power under small-sample conditions. This approach, originating from Taguchi’s design-of-experiments methodology, quantifies the balance between inter-class signal strength and intra-class noise, thereby identifying variables that contribute most to class separation [19,20]. Compared with mutual information or recursive feature elimination (RFE), the S/N ratio avoids estimator bias and performs reliably even with limited experimental data. Specifically, mutual information can be sensitive to binning effects and small-sample variability, whereas RFE depends on model choice and parameter tuning, potentially introducing circularity in feature evaluation. In contrast, the S/N ratio offers computational simplicity, interpretability, and robustness in biological datasets characterized by stochastic fluctuations. In this study, the S/N ratio–derived feature order (pH, actual temperature, time) was consistent with that obtained from mutual information analysis (Spearman correlation = 0.91), supporting its validity as an efficient ranking criterion for fermentation datasets.

To evaluate the contribution of each recorded variable toward classification performance, we applied an S/N ratio method. This approach is commonly used in experimental design and feature selection to assess the discriminative capacity of input features. The S/N ratio for each variable was calculated using the following equation:

$$ \mathrm{S/N} = -10 \log_{10}\left( \frac{1}{n} \sum_{i=1}^{n} \frac{1}{y_i^{2}} \right) $$

where $y_i$ denotes the response value (e.g., CFU concentration or target classification label) for the $i$th observation, and $n$ is the total number of observations. This formulation, based on the “larger-the-better” criterion from Taguchi methods, emphasizes higher response values and penalizes variability.

After calculating the S/N ratios for all recorded variables, the top three features, pH value, actual fermentation temperature, and time, were selected based on their ranking scores. These variables exhibited the highest signal contributions and were retained as inputs for model training. Static parameters (e.g., setpoint temperature, RPM) and redundant signals were excluded from subsequent analysis.
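For reference, the Taguchi larger-the-better S/N ratio is conventionally computed as −10·log10 of the mean of 1/y². The sketch below shows only that calculation for a list of positive responses. The function name is hypothetical, and how responses are grouped per candidate variable before ranking is not specified here, so that step is left out.

```python
import math


def sn_larger_the_better(values):
    """Taguchi larger-the-better S/N ratio in dB: -10 * log10(mean(1 / y^2))."""
    ys = [float(y) for y in values]
    if not ys or any(y <= 0 for y in ys):
        raise ValueError("responses must be non-empty and strictly positive")
    mean_inv_sq = sum(1.0 / (y * y) for y in ys) / len(ys)
    return -10.0 * math.log10(mean_inv_sq)


# Same mean response (10), different spread: the steadier set scores higher.
steady = sn_larger_the_better([10, 10, 10])
spread = sn_larger_the_better([5, 10, 15])
```

Three identical responses of 10 give roughly 20 dB, and the more variable set with the same mean scores lower. This is the sense in which the criterion rewards high responses and penalizes variability.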

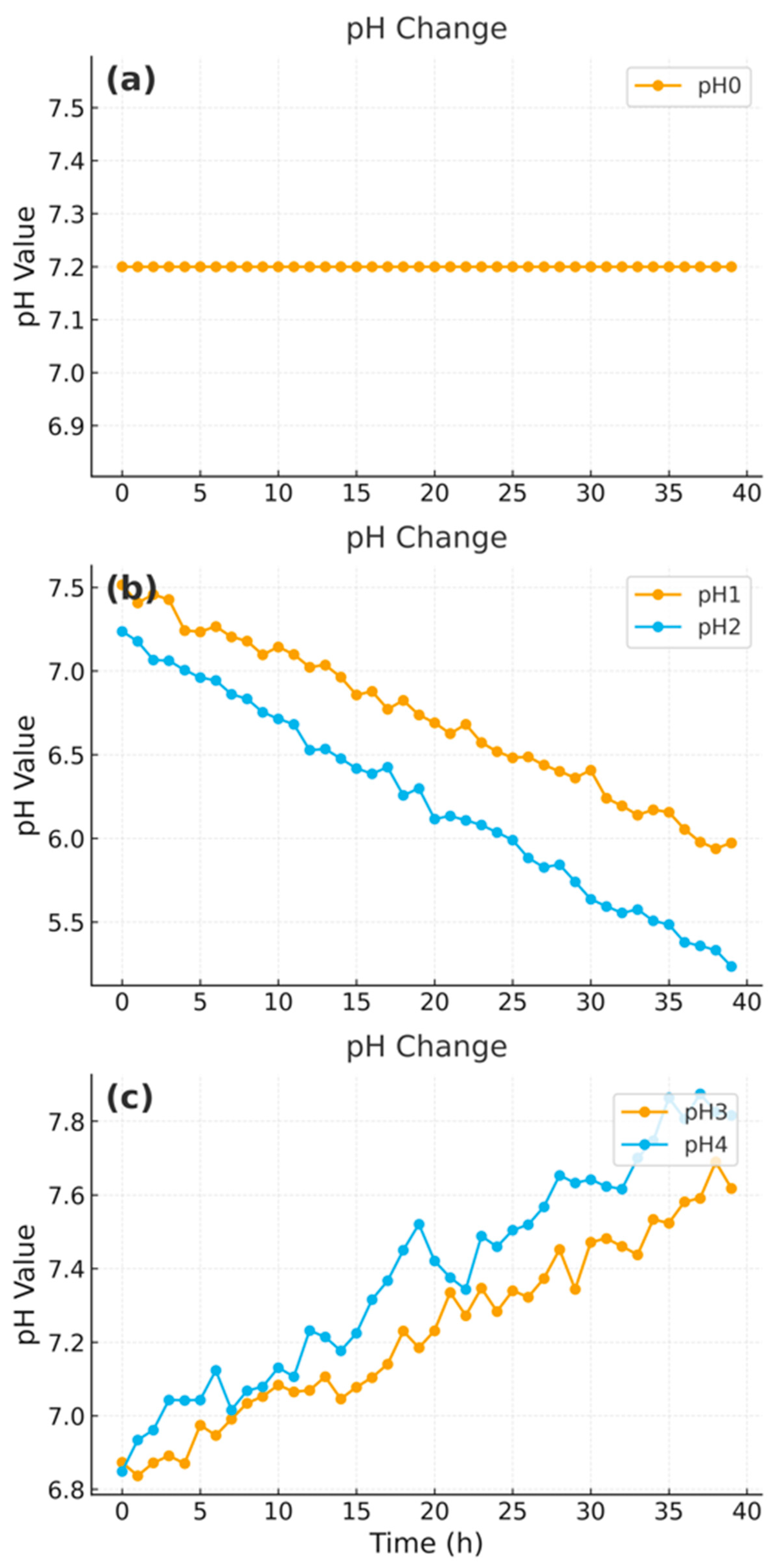

Figure 3 shows representative early-stage pH trajectories, which display informative dynamics and were consistently identified as among the most predictive variables by the S/N ratio method. Temperature and time followed similar monotonic trends in their S/N profiles, further supporting their selection as predictive features.

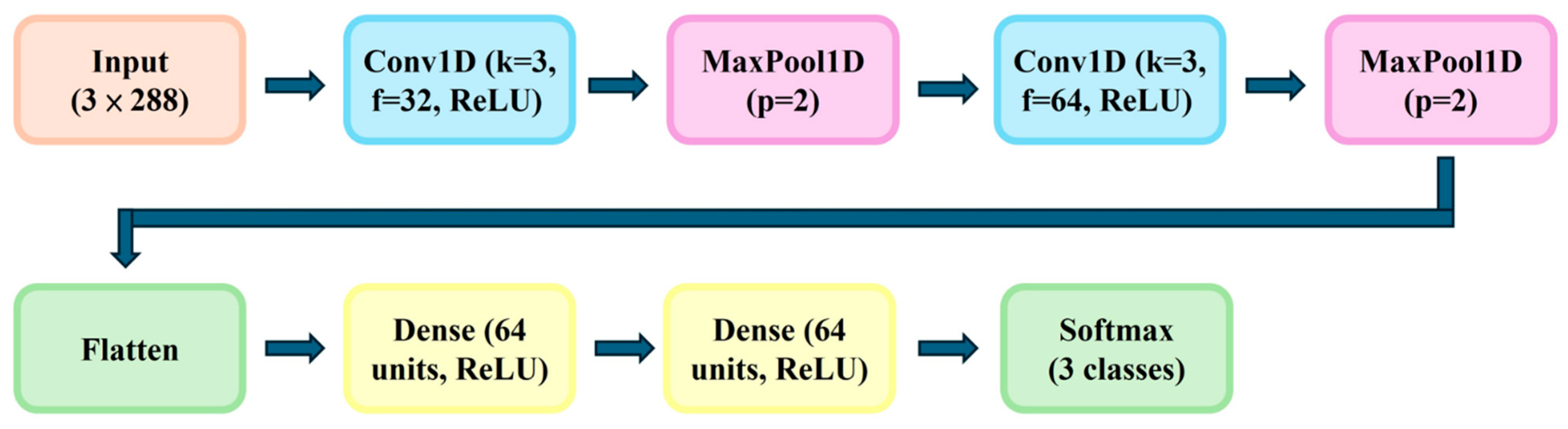

2.5. CNN Model Architecture and Training

A Convolutional Neural Network (CNN) model was constructed using one-dimensional time-series input vectors. The network architecture included: (1) Input layer: 3 × 288 features; (2) Two convolutional layers (kernel size = 3, filters = 32 and 64, activation = ReLU); (3) Max pooling layers (pool size = 2); (4) Flattening layer; (5) Two fully connected dense layers, each consisting of 64 units with ReLU activation; and (6) Output layer (softmax, 3 classes). This dual-layer dense configuration was chosen to enhance feature abstraction and improve classification stability. The model was trained for 500 epochs using categorical cross-entropy loss and the Adam optimizer (learning rate = 0.001), with 80% of the dataset for training and 20% for validation. Training and implementation were carried out using Python (v3.9), Pandas (v2.2.3), NumPy (v1.23.0), SciPy (v1.16.2), Matplotlib (v3.3.2), TensorFlow/Keras (v2.12), and scikit-learn (v1.2.2). As depicted in Figure 4, the architecture integrates convolution, pooling, and dense layers, enabling efficient extraction of temporal features for early prediction of fermentation outcomes.
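A rough Keras sketch of the layer stack listed above follows, together with a pure-Python helper that traces tensor shapes through it. Several details are assumptions rather than reported settings: a channels-last input of shape (288, 3), unpadded ("valid") convolutions, and one pooling layer after each convolution. The names `trace_shapes` and `build_cnn` are hypothetical.

```python
def trace_shapes(steps=288, channels=3, filters=(32, 64), kernel=3, pool=2):
    """Follow the tensor shape through each conv/pool pair (no padding)."""
    shapes = [(steps, channels)]
    for f in filters:
        steps = steps - kernel + 1           # Conv1D with 'valid' padding
        shapes.append((steps, f))
        steps = steps // pool                # MaxPooling1D
        shapes.append((steps, f))
    shapes.append((steps * filters[-1],))    # Flatten
    return shapes


def build_cnn(steps=288, channels=3, n_classes=3):
    """Build and compile the 1D CNN; TensorFlow is imported only when called."""
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        keras.Input(shape=(steps, channels)),
        layers.Conv1D(32, 3, activation="relu"),
        layers.MaxPooling1D(2),
        layers.Conv1D(64, 3, activation="relu"),
        layers.MaxPooling1D(2),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(64, activation="relu"),
        layers.Dense(n_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=0.001),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Under these assumptions the sequence length shrinks from 288 to 286, 143, 141, and 70 steps, so the flattened vector feeding the first dense layer has 70 × 64 = 4480 elements. Training would then be a call such as `model.fit(X, y_onehot, epochs=500, validation_split=0.2)`, with `X` of shape (runs, 288, 3).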

2.6. Model Validation and Intervention Protocol

To evaluate the real-time predictive capability of the model, two-stage validation was performed. In the first stage, the model classified fermentation outcomes based on data from the first 24 h. In the second stage, if a “semi-successful” or “failure” class was predicted, a manual intervention was carried out between 16 and 20 h, corresponding to the model’s earliest stable decision window. Corrective actions included (i) pH adjustment by aseptically adding 1 M NaOH in small aliquots to raise the medium from approximately pH 4.0–4.2 to 5.0 ± 0.1, and (ii) nutrient supplementation by introducing 1% (v/v) sterile-filtered MRS medium. Each fermentation received at most one corrective action. Comparative experiments were conducted with and without intervention across trials performed by novice and experienced operators. Improvement in microbial yield was assessed to validate the effectiveness of early prediction-based control.
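The trigger logic of this protocol can be expressed as a simple predicate. This is a hypothetical sketch: the class strings, constant names, and the `already_intervened` flag are illustrative, and the choice between pH adjustment and nutrient supplementation is left to the operator because no selection rule is stated.

```python
INTERVENTION_WINDOW_H = (16.0, 20.0)              # manual intervention window
FLAGGED_CLASSES = {"semi_successful", "failure"}  # predictions that warrant action


def should_intervene(predicted_class, elapsed_h, already_intervened=False):
    """Return True when one corrective action is allowed for this run."""
    start, end = INTERVENTION_WINDOW_H
    return (
        predicted_class in FLAGGED_CLASSES
        and start <= elapsed_h <= end
        and not already_intervened   # each fermentation gets at most one action
    )
```

A run predicted as "failure" at 18 h would be flagged, whereas the same prediction at 22 h, or a run that has already been corrected, would not.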

For external validation, four additional datasets were collected from independent fermentation batches conducted at separate times within the same pilot-scale facility. Each dataset comprised 10–12 runs performed by different operators using fresh MRS medium preparations and new inoculum cultures. Slight variations in environmental and process conditions were intentionally retained—specifically, ambient temperature (±1.5 °C), agitation rate (180–220 rpm), and initial pH (6.3–6.7)—to evaluate model robustness under realistic operational fluctuations. None of these data were used for model training or hyperparameter tuning.

4. Discussion

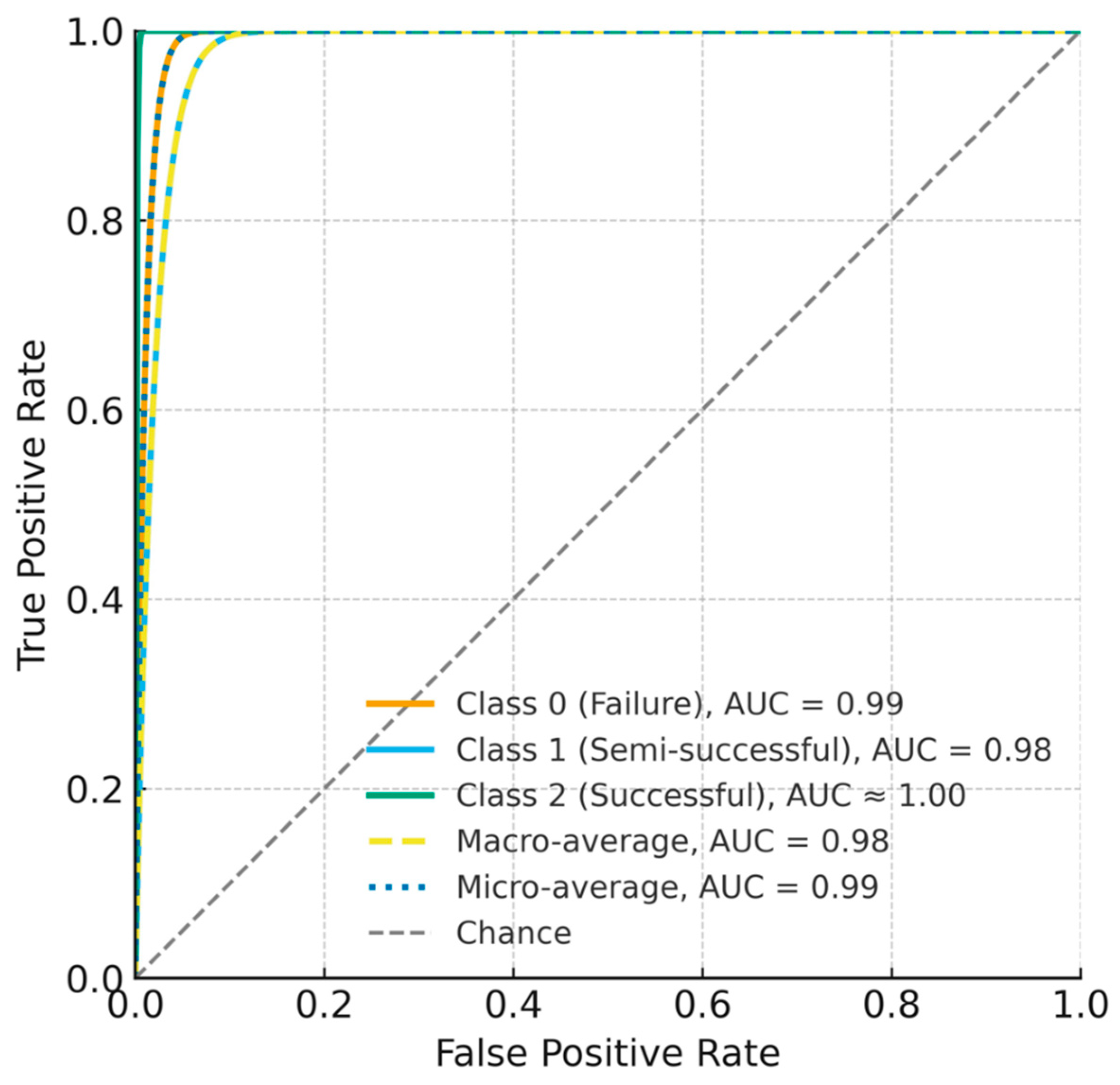

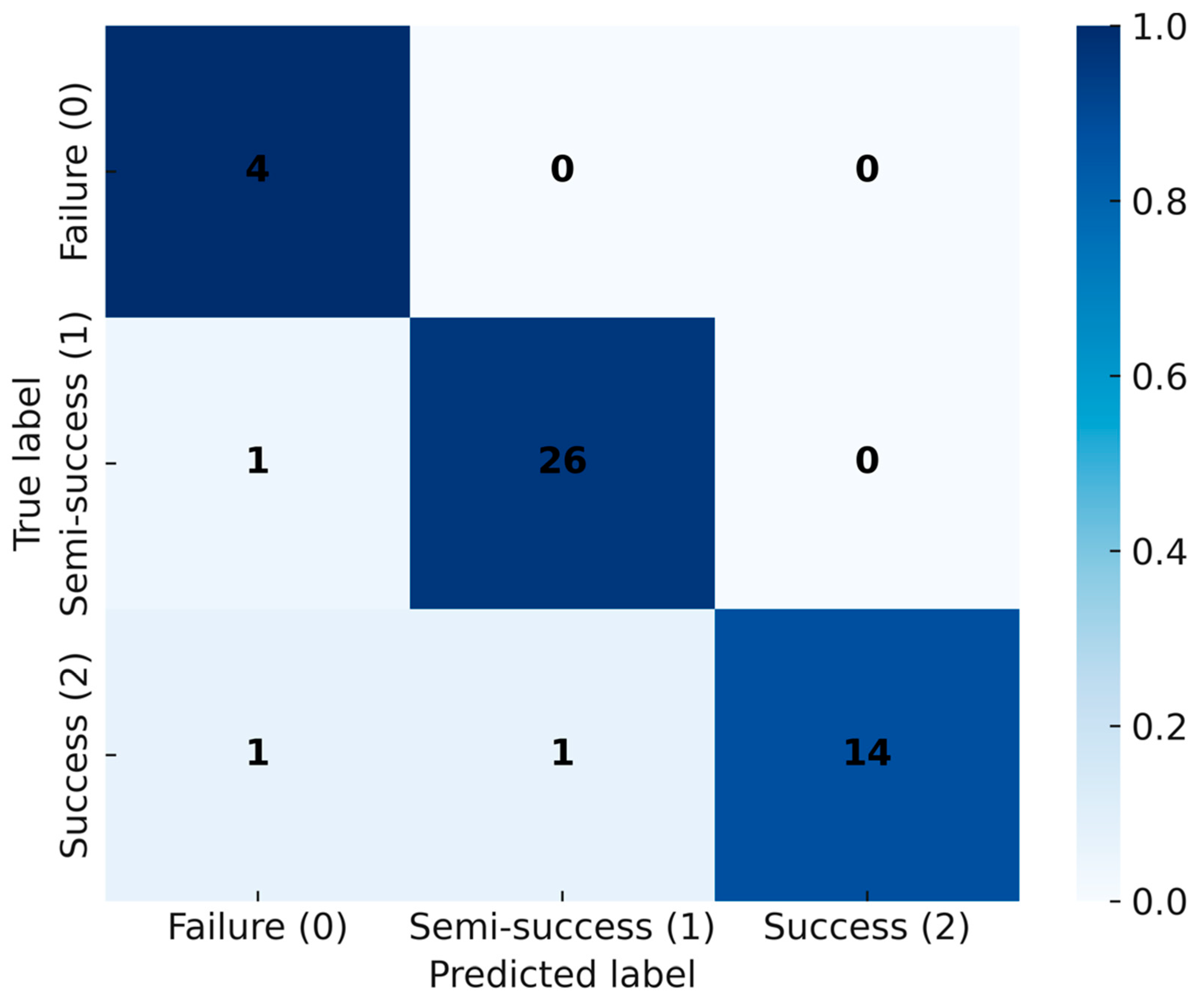

This study demonstrates that a one-dimensional CNN trained on the first 24 h of fermentation time-series (pH, temperature, time; 288 points) can accurately classify L. plantarum LP198 outcomes into three categories and outperforms LSTM, XGBoost, and LightGBM under identical data splits. On the internal test set, the CNN achieved 97.87% accuracy, compared with 93.61% (LSTM), 95.74% (XGBoost), and 93.61% (LightGBM) (

Figure 6). Discrimination was consistently high across classes, with AUC = 0.99 (failure), 0.98 (semi-successful), and ≈1.00 (successful); the macro-average AUC = 0.98 and micro-average AUC = 0.99 (

Figure 7). The confusion matrix shows only limited misclassification—primarily between adjacent biological categories—consistent with expected gradations near decision boundaries (

Figure 8). These misclassifications occurred only between adjacent categories, consistent with biological variability at borderline outcomes, and did not involve gross errors (e.g., failure classified as success). Together, these results indicate that discriminative cues emerge within the first 24 h, supporting the use of CNNs as soft sensors for early decision-making in probiotic fermentation [

8,

9,

17]. Although the total number of fermentation runs (

n = 47 effective) may appear limited, this scale aligns with prior AI-driven fermentation studies, where each experiment involves complex bioreactor operations and biological variability [

15,

16]. The model’s consistent performance across independent training–testing splits and fivefold cross-validation suggests that the CNN learned generalizable temporal patterns rather than overfitting to batch-specific noise. In addition, regularization measures such as dropout and early stopping were applied to enhance robustness. Nevertheless, broader validation with expanded datasets across strains and facilities will be pursued in future work to further strengthen generalization. Furthermore, the CFU trajectory across 24–72 h (

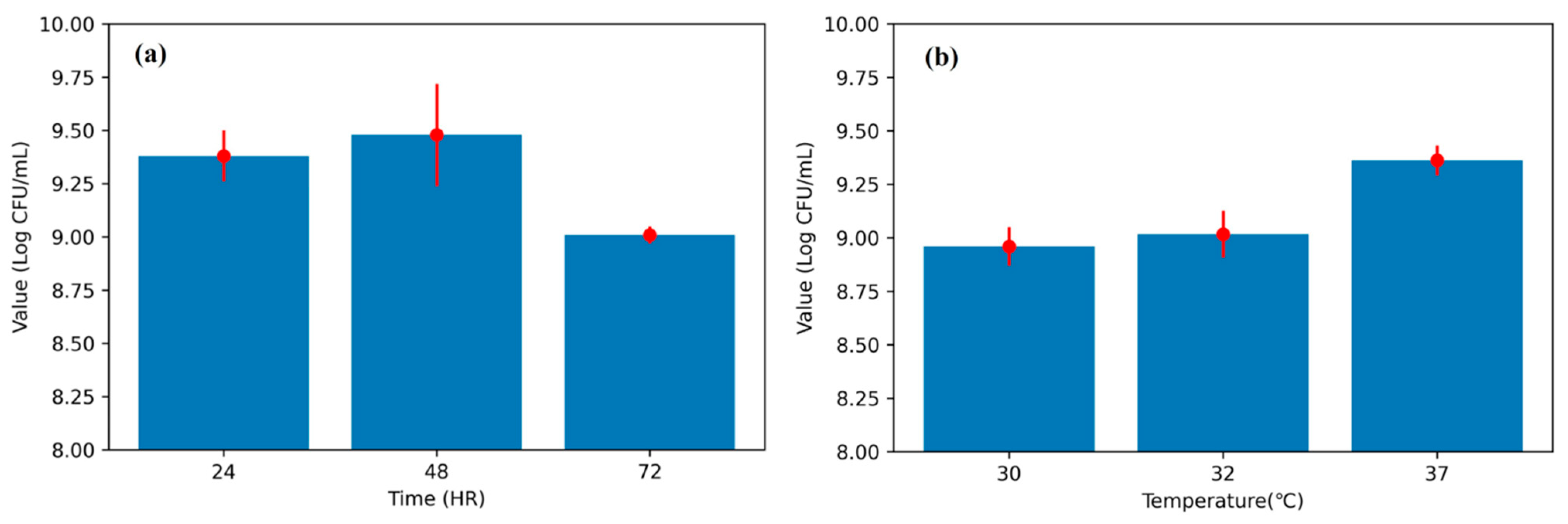

Figure 5a) corroborates the biological basis for early prediction: viability typically peaks by ~48 h and declines thereafter, indicating that discriminative cues emerge well before endpoint measurements. The observed CFU trends (

Figure 5) are consistent with known growth optima of

L. plantarum, where mid-fermentation (48 h) and moderate-to-high incubation temperatures (37 °C) yield the most favorable outcomes. This biological behavior underscores the relevance of incorporating temperature and early growth trajectories into predictive models.

Compared with previously reported ML- and DL-based fermentation studies [15,16,19], the present CNN framework offers distinctive advantages in both functionality and applicability. It enables early-stage prediction (within the first 24 h) using minimal sensor data, directly captures temporal trajectory features instead of static endpoints, and allows real-time corrective intervention based on model guidance. Moreover, its validation across four independent datasets confirms robustness and generalizability, supporting its potential use as a practical control tool for industrial-scale probiotic fermentation.

Relative to traditional modeling approaches widely used in fermentation optimization, such as ANN with RSM/GA hybrids and classical response-surface-based designs, the present framework addresses two practical limitations reported in prior work: reliance on endpoint readouts and incomplete treatment of temporal dependencies [3,14,16,19,20]. Prior ANN/RSM studies have successfully optimized media or targeted metabolite yields in lactic acid bacteria and related systems, yet they typically treat inputs as static factors and therefore do not leverage early process trajectories [14,16,19,20]. By contrast, the CNN’s convolutional filters capture localized temporal motifs in sensor streams (Figure 9), providing a mechanism-agnostic but trajectory-aware representation that aligns with recent observations of CNN efficacy on biological time-series and lactic acid bacteria processes [8,9]. In this context, the present findings extend the ANN-centric literature by showing that temporal feature learning can deliver deployable, early-stage classification with industrial relevance [3,8,9].

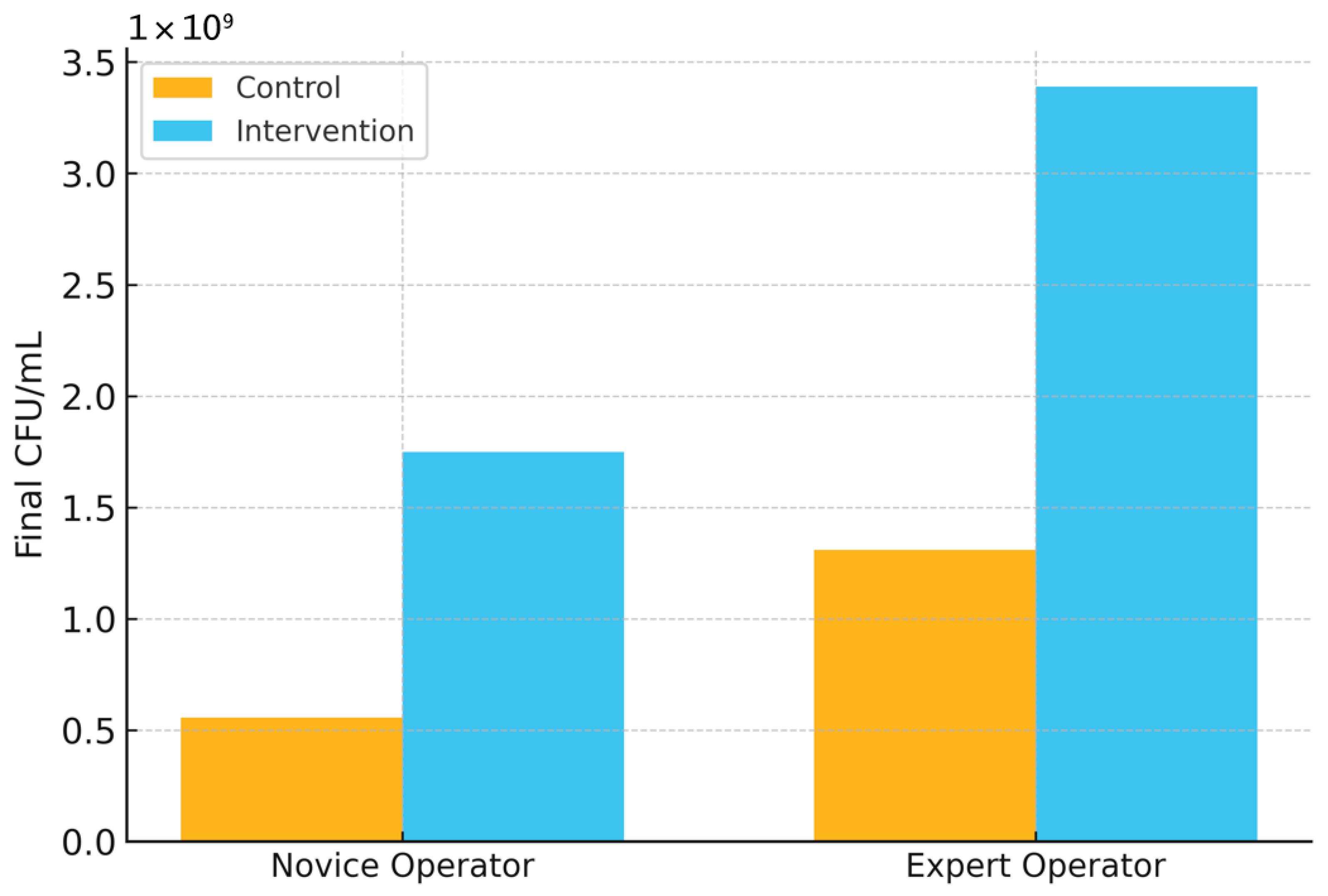

Importantly, model-guided interventions translated algorithmic gains into operational improvements. When early predictions triggered simple corrective actions (e.g., pH adjustment, nutrient supplementation), final CFU/mL increased for both novice operators (5.57 × 10⁸ → 1.75 × 10⁹) and expert operators (1.31 × 10⁹ → 3.39 × 10⁹), demonstrating that decision support can reduce operator-dependent variability and elevate yield (Figure 10). This effect, observed under routine conditions, suggests a practical route to standardizing probiotic manufacturing, complementing the optimization-oriented strategies reported previously for LAB processes [14,16,19,20]. From a quality-by-design perspective, such soft-sensing closes the loop between early diagnostics and targeted control actions, thereby lowering the risk of batch failure while improving resource efficiency [8,9].
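The size of these gains is easier to compare as fold changes. The short calculation below uses only the four CFU/mL values reported in this paragraph; the helper name `yield_gain` is illustrative.

```python
import math

# Final CFU/mL without and with model-guided intervention, as reported above.
runs = {
    "novice": (5.57e8, 1.75e9),
    "expert": (1.31e9, 3.39e9),
}


def yield_gain(control, intervention):
    """Return the fold change and the corresponding log10 increase."""
    fold = intervention / control
    return fold, math.log10(fold)


for operator, (control, treated) in runs.items():
    fold, log_gain = yield_gain(control, treated)
    print(f"{operator}: {fold:.2f}-fold ({log_gain:.2f} log10 CFU/mL)")
```

This works out to roughly a 3.1-fold increase for novice-operated runs and a 2.6-fold increase for expert-operated runs, so the relative benefit was larger for the less experienced operators.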

External validation with four independent datasets further supports generalizability, with correct outcome predictions at early checkpoints (e.g., ~40th time point) and at 24 h. While the external sets differ in strain/context, the model maintained high agreement with final CFU-based categories, indicating robustness of the learned temporal signatures rather than overfitting to internal idiosyncrasies. This observation is consistent with reports that deep models, properly regularized, can transfer across related fermentation regimes when key process trajectories are retained [8,9,17].

Several limitations warrant consideration. First, the internal dataset comprises 47 valid runs for LP198; although sufficient to demonstrate utility, broader coverage across strains, substrates, and scales is required to fully characterize generalization boundaries. Second, only three sensor variables were used; incorporating dissolved oxygen, redox, on-line spectroscopy, or metabolomics could further improve sensitivity to failure precursors [3,8]. Third, while the current CNN effectively captures local patterns, attention-based or hybrid CNN-Transformer architectures may better model long-range dependencies and cross-channel interactions, as suggested by recent deep learning advances [17]. Finally, baseline implementations (LSTM, XGBoost, LightGBM) followed standard practice [18], but expanded hyperparameter sweeps and probabilistic calibration could sharpen comparative insights, especially for borderline “semi-successful” cases (Figure 8). We also note that Figure 9 is a qualitative schematic rather than raw intermediate activations; activation-based interpretability (e.g., layer-wise relevance, integrated gradients) will be reported in follow-up work using released weights and code.

In summary, the proposed CNN enables early, accurate, and actionable prediction of probiotic fermentation outcomes using only the first 24 h of process data, outperforming strong baselines (Figure 5, Figure 6 and Figure 7) and improving yield via model-guided interventions (Figure 10). These findings build on and extend prior ANN/RSM-based optimization studies by introducing a trajectory-aware soft-sensing paradigm suited for real-time manufacturing control in L. plantarum and, potentially, other industrial microbes [3,8,9,14,16,19,20].

Practical deployment of the CNN model can be achieved by integrating it into existing fermentation control software as an early-warning and decision-support module. The system continuously receives sensor inputs (pH, temperature, and dissolved oxygen) and provides classification outputs within the first 24 h of fermentation. When suboptimal outcomes are predicted, operators can apply targeted corrective actions, such as pH correction, nutrient supplementation, or temperature adjustment, based on the system’s alerts. This workflow can also be embedded into Supervisory Control and Data Acquisition (SCADA) or Programmable Logic Controller (PLC) platforms to enable semi-automated or fully automated control, thereby improving process robustness and consistency in industrial probiotic production. Future work will scale datasets across strains and facilities, integrate richer sensing modalities, and explore attention-based architectures to further enhance early-stage reliability and deployment readiness [17,18].

5. Conclusions

This study demonstrates that a one-dimensional convolutional neural network (CNN) trained on the first 24 h of fermentation time-series (pH, temperature, time; 288 points) can accurately classify L. plantarum LP198 outcomes into successful, semi-successful, and unsuccessful categories. Under identical data splits, the CNN outperformed strong baselines, LSTM, XGBoost, and LightGBM, on the internal test set, achieving 97.87% accuracy versus 93.61%, 95.74%, and 93.61%, respectively. ROC analysis further indicated high discrimination across classes (AUC = 0.99 for failure, 0.98 for semi-successful, and ≈1.00 for successful), with macro-average AUC = 0.98 and micro-average AUC = 0.99. These results support the feasibility of early, trajectory-aware soft sensing for probiotic fermentation using routine process signals.

Importantly, early predictions translated to measurable operational gains when used to guide simple interventions (e.g., pH adjustment, nutrient supplementation). Final CFU/mL increased from 5.57 × 10⁸ (control) to 1.75 × 10⁹ (intervention) in novice-operated runs, and from 1.31 × 10⁹ to 3.39 × 10⁹ in expert-operated runs. These improvements indicate that model-guided decision support can reduce operator-dependent variability and enhance yield without altering core process hardware or adding invasive sensors.

This work has practical scope and limitations. The modeling was performed on 47 valid runs drawn from 52 experiments of a single strain and used three on-line variables; broader validation across strains, substrates, and facilities, and the inclusion of additional sensing (e.g., DO/redox, spectroscopic or metabolomic signals) are warranted. Future efforts will expand dataset scale and diversity, evaluate prospective deployments, and explore attention-based or hybrid CNN-Transformer architectures to capture longer-range dependencies while maintaining real-time applicability. Overall, the present framework provides a deployable pathway for early prediction and targeted control in industrial probiotic fermentation.