Abstract

To address the difficulty of acquiring precise dynamic models for electromechanical servo systems and the susceptibility of system state information to noise, a backstepping command filter controller based on reinforcement learning is proposed. The method achieves high-precision, low-energy control of electromechanical servo systems subject to noise interference and unmodeled disturbances. A command filter is employed to estimate the derivatives of known signals and to suppress measurement noise. Reinforcement learning is employed to estimate unknown system disturbances, including unmodeled friction and external interference. The weights of the actor and critic networks are updated online by gradient descent, eliminating the need for an offline learning phase. In addition, Lyapunov analysis is used to prove the stability of the closed-loop system. To validate the effectiveness of the proposed method, comparative experiments were conducted on an electromechanical servo experimental platform. The experimental results indicate that, compared with other mainstream controllers, the proposed algorithm achieves better tracking accuracy, response speed, and energy efficiency, and exhibits strong robustness against system uncertainties and external disturbances.

1. Introduction

In recent years, the tracking control problem of electromechanical servo systems has attracted significant attention in both theoretical research and practical applications, owing to the inherent uncertainties of these systems [1,2,3,4]. Servo systems are widely used in high-precision fields, such as autonomous driving, unmanned aerial vehicle (UAV) navigation, and robotic control. However, the complex dynamic characteristics and uncertainties of these systems pose substantial challenges for precise control. Inadequate handling of uncertainties and external disturbances can cause deviations from the desired trajectory or even system instability, compromising both performance and safety. To address these issues, various control strategies have been proposed, including adaptive robust control, sliding mode control (SMC), and disturbance rejection control [5,6,7,8]. However, these methods often rely on partial or full knowledge of the system dynamics, which limits their applicability when the dynamic parameters are entirely unknown, particularly in industrial and engineering contexts with significant practical constraints.

Model-free control strategies have attracted considerable interest as a way to overcome the reliance on dynamic models. These approaches, including iterative learning control [9], neural network control [10], reinforcement learning (RL) control [11], and optimal control [12], reduce dependence on precise system models and broaden the scope of control technologies. However, they still struggle to handle uncertainties, external disturbances, and noise in complex dynamic environments, where maintaining stable control remains difficult.

To cope with such environments, researchers have explored combining RL with classical control methods [13,14,15]. Combining the adaptability of RL with the stability of classical control techniques can help overcome the limitations of traditional methods in dynamic environments.

A previous study [16] introduced a hybrid control strategy based on the actor-critic architecture that estimates multiple PID controller gains simultaneously. This strategy does not rely on precise dynamic models and adapts to changing dynamic behaviors and operating environments, although its theoretical stability proof remains incomplete. Other studies [17,18] employed Lyapunov methods to verify the stability of RL in complex systems such as helicopters and robots, but these approaches were less effective at handling external disturbances. In practical applications, servo systems often encounter uncertain disturbances that can significantly degrade control performance or even lead to instability [19,20].

To enhance disturbance rejection, some studies have combined RL with disturbance estimation techniques. For example, refs. [21,22] proposed integrating RL with fuzzy logic systems (FLS). In [21], RL optimizes the virtual and actual control laws at each step of backstepping control, whereas an FLS approximates unknown functions and provides feedforward compensation. Similarly, ref. [22] applied an FLS to approximate unknown functions in large-scale nonlinear systems and used state observers to estimate unmeasured states. However, the performance of an FLS depends heavily on fuzzy rule design, which often requires extensive experimental tuning and complicates practical implementation. In addition, ref. [23] combined RL with sliding mode control, offering a solution distinct from traditional integral sliding mode control. This sliding mode controller uses neural networks for approximation and disturbance estimation, while an actor-critic architecture continuously learns the optimal control strategy using adaptive dynamic programming (ADP). However, the inherent discontinuity of sliding mode control may cause chattering, which degrades performance. Another study [24] integrated RL with the robust integral of the sign of the error (RISE) controller, using an actor-critic architecture to approximate unknown dynamics for feedforward compensation. Although this improved control accuracy, the high sensitivity of the sign function to error variations weakened the robustness of the system to noise. In environments with significant measurement noise, the RISE controller may interpret noise as tracking error, amplifying fluctuations in the control input. Despite these advances, further research is needed to develop more flexible and precise control strategies for handling larger uncertainties and complex environments.

This study proposes a novel backstepping command filter controller based on reinforcement learning (BCF-RL) to address these challenges. The BCF-RL controller removes the need for an accurate dynamic model and reduces sensitivity to noise in state measurements. Using an actor-critic framework, the adaptive RL control strategy estimates unknown disturbances in real time and provides feedforward compensation, significantly mitigating the impact of unmodeled dynamics and external perturbations. Traditional noise suppression methods, such as the extended Kalman filter (EKF) and low-pass filters, are effective but often suffer from high computational complexity and reduced robustness in nonlinear systems. By avoiding excessive signal smoothing, command filters achieve better noise suppression and faster response, making them a more suitable choice.

Compared with the method in [16], the BCF-RL approach provides more rigorous theoretical stability guarantees. Unlike traditional sliding mode control, it reduces chattering and improves system performance. Furthermore, compared with the RISE control strategy in [24], it is more robust to sensor noise and responds faster. A key advantage is its minimal reliance on prior system knowledge, which allows the tracking error to converge to a small neighborhood of the origin and provides robust performance against both unknown dynamics and external disturbances. These features make BCF-RL a promising solution for the precise control of complex dynamic systems with high uncertainty. The contributions of this study are as follows:

- (1) Given the sensitivity of the system velocity signal to measurement noise, a command filter is developed to suppress this noise, thereby enhancing the robustness of the controller.

- (2) A hybrid data- and model-driven control method is designed. By employing the actor-critic structure of reinforcement learning, this method estimates unknown disturbances more accurately, resulting in higher position-tracking accuracy.

- (3) The closed-loop stability of the RL-based controller and the weight convergence of the actor and critic networks are rigorously proven, ensuring the robustness and effectiveness of the control strategy.

This article is organized as follows: Section 2 introduces the problem formulation and system architecture. Section 3 details the design process of the main controller. Section 4 describes the design process of the auxiliary controller. Section 5 provides stability analysis and proof. Section 6 presents the experimental results. Finally, Section 7 concludes the article.

2. Problem Formulation and System Architecture

2.1. Description of Servo System

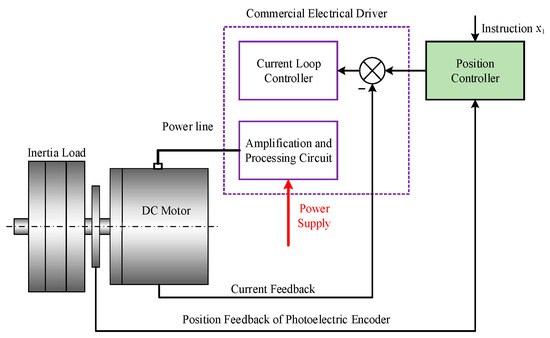

The servo system depicted in Figure 1 is a positioning system in which the inertial load is directly driven by a permanent magnet synchronous motor (PMSM). The control objective is to make the inertial load closely follow a specified smooth motion trajectory. Because the bandwidth of the motor current loop is typically much greater than that of the velocity and position loops, the current dynamics can be neglected. The dynamic characteristics of the servo system are described by (1).

Figure 1.

Electromechanical servo system structure.

In (1), the three motion variables represent the angular displacement, angular velocity, and angular acceleration of the load, respectively; the remaining parameters are the torque constant, the control signal u, and the inertial load coefficient. A lumped term accounts for other unmodeled effects, such as nonlinear friction, external disturbances, and unmodeled dynamics. The friction component is represented by the following nonlinear friction model:

where the amplitude coefficients represent the levels of the different friction characteristics and the shape coefficients characterize their profiles. The three terms of the model characterize the viscous friction torque, the Coulomb friction, and the Stribeck effect, respectively.
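Because the friction model itself is not legible in this copy, the sketch below assumes a commonly used continuously differentiable form: a linear viscous term, a tanh-smoothed Coulomb term, and a difference-of-tanh term that produces a Stribeck-like bump near zero speed. The coefficient names `a1` to `a3` and `c1` to `c3` are hypothetical placeholders for the amplitude and shape coefficients, and the paper's exact structure may differ.

```python
import math


def friction_torque(x2, a1, a2, a3, c1, c2, c3):
    """Hypothetical continuous friction model (assumed form, not the paper's exact equation).

    x2     : angular velocity
    a1..a3 : amplitude coefficients (viscous, Coulomb, Stribeck)
    c1..c3 : shape coefficients (c2 > c3 > 0 gives a Stribeck bump near zero speed)
    """
    viscous = a1 * x2                                          # linear viscous friction torque
    coulomb = a2 * math.tanh(c1 * x2)                          # smooth approximation of a2*sign(x2)
    stribeck = a3 * (math.tanh(c2 * x2) - math.tanh(c3 * x2))  # vanishes at high speed
    return viscous + coulomb + stribeck
```

The tanh terms keep the model smooth at zero velocity, which matters for a backstepping design that differentiates intermediate signals. At high speed the Stribeck term cancels and the torque approaches `a1*x2 + a2*sign(x2)`.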

To account for sensor measurement errors, the dynamic equation is modified to include an additional noise term n. Defining the states x1 and x2 as the angular displacement and angular velocity, the state equation of the system is as follows:

where the control coefficient is known, the nonlinear friction and viscous friction terms are unknown, and the remaining term represents unknown disturbances. Notably, the controller designed in this study relies only on the known control coefficient and the measurable angular displacement x1 and angular velocity x2.

2.2. Design of Control System Architecture

The design objective is to obtain a control input u such that the angular displacement x1 of the inertial load tracks the desired trajectory while all system signals remain bounded. The following assumptions are made to facilitate the controller design.

Assumptions.

- (1) The desired reference trajectory and its first derivative exist and are bounded.

- (2) The measurement noise n and the unknown interference are bounded, with known upper and lower limits.

3. Design of BCF Main Controller

3.1. Design of Differential Command Filter

In practical systems, directly differentiating real-time signals amplifies measurement noise and can cause numerical instability. Therefore, differentiating command filters are designed to mitigate these issues.

Lemma 1.

Define a command filter as specified in (4).

where the constant gain, the intermediate state of the command filter, the filter input, the filter output, and the initial filter state appear in (4). If the filter input is continuous and lies in a compact set, and its first derivative is bounded, then both the filter state and the filter output are bounded.

Proof of Lemma 1.

Define the filter error variable. According to (4), its first derivative is given by (5).

Based on this property and ref. [25], it can be concluded that (6) holds.

Defining the corresponding bound and combining (5) and (6), we obtain (7):

□
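Filter (4) is not legible in this copy, so the sketch below assumes the simplest differentiating filter consistent with the description in Lemma 1. An intermediate state z evolves as ż = k(v − z), and the output y = k(v − z) approximates the derivative of the input v with bandwidth k. It is discretized with forward Euler at the 0.5 ms control period reported in Section 6. The class and function names, the gain k = 200 rad/s, and the noise level are hypothetical. The helper compares the filter with a plain backward difference on a noisy sine.

```python
import math
import random


class DifferentiatingFilter:
    """First-order differentiating command filter (assumed form).

    Continuous time: z_dot = k * (v - z), output y = k * (v - z) = z_dot,
    so y approximates dv/dt with a bandwidth of k rad/s (forward Euler here).
    """

    def __init__(self, k, dt, z0=0.0):
        self.k = k
        self.dt = dt
        self.z = z0  # intermediate filter state

    def update(self, v):
        y = self.k * (v - self.z)  # filtered derivative estimate
        self.z += self.dt * y      # Euler step of z_dot = y
        return y


def rms_derivative_errors(k=200.0, dt=5e-4, noise_std=1e-4, steps=20000, seed=0):
    """RMS derivative error of the filter and of a backward difference for a
    noisy sin(t); the first 2000 samples are skipped as start-up transient."""
    rng = random.Random(seed)
    filt = DifferentiatingFilter(k, dt)
    prev = None
    sq_filter = sq_backward = 0.0
    count = 0
    for n in range(steps):
        t = n * dt
        meas = math.sin(t) + rng.gauss(0.0, noise_std)
        y_filter = filt.update(meas)
        if prev is not None and n >= 2000:
            y_backward = (meas - prev) / dt
            true = math.cos(t)
            sq_filter += (y_filter - true) ** 2
            sq_backward += (y_backward - true) ** 2
            count += 1
        prev = meas
    return math.sqrt(sq_filter / count), math.sqrt(sq_backward / count)
```

With these defaults, the filtered derivative has an RMS error roughly an order of magnitude smaller than the backward difference. The price is a phase lag of about 1/k seconds. Raising k shortens the lag but passes more noise.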

3.2. Design of Dummy Control Law α

The feedback inputs to the BCF main controller are the states x1 and x2, and its output is the control law u. The design of the BCF controller comprises the differentiating command filter, the dummy control law α for x2, and the actual control law u.

The tracking error e1 and the dummy control error are defined in (8).

To drive e1 to zero, its derivative must also approach zero. Differentiating e1 yields (9).

To ensure the convergence of e1, a quadratic Lyapunov function of e1 is chosen.

According to (8) and (9), the derivative of this Lyapunov function is given by (10).

Because the measurement noise n is unknown, an analytical expression for the required derivative cannot be obtained. Based on Lemma 1, the command filter (11) is constructed.

Substituting (11) into (9), the expression for n is derived as (12).

Substituting (12) into (10) yields (13):

Therefore, the dummy control law α is designed as (14), where the constant gain in (14) is the control gain of the first design step.
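For orientation, the noise-free textbook version of this first backstepping step is written out below. It is a generic derivation, not the paper's (9)–(14): it assumes ẋ1 = x2, e1 = x1 − x_d and e2 = x2 − α, and uses a hypothetical gain k_1 > 0. The paper's law (14) additionally uses the command-filter estimate to handle the noise n. The remaining cross term e1e2 is what the second step cancels.

```latex
\begin{aligned}
e_1 &= x_1 - x_d, \qquad e_2 = x_2 - \alpha, \\
\dot e_1 &= x_2 - \dot x_d = e_2 + \alpha - \dot x_d, \\
V_1 &= \tfrac{1}{2} e_1^2, \qquad \dot V_1 = e_1 e_2 + e_1(\alpha - \dot x_d), \\
\alpha &= \dot x_d - k_1 e_1 \;\Longrightarrow\; \dot V_1 = -k_1 e_1^2 + e_1 e_2, \qquad k_1 > 0.
\end{aligned}
```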

3.3. Design of Actual Control Law u

To ensure the convergence of the dummy control error defined in (8), its derivative must approach zero; differentiating it yields (15).

To ensure the convergence of the dummy control error, the Lyapunov function (16) is designed.

Its derivative is given by (17).

The unknown nonlinear term is reconstructed with a command filter as (18).

The unknown disturbance F is estimated by the RL auxiliary controller; its estimate and the corresponding estimation error are described in detail in Section 4.

Therefore, the actual control law u is designed as (19), where the constant gain in (19) is the control gain of the second design step.

Substituting (19) into (15) yields the expression for the estimation error, as shown in Equation (20).
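The sketch below simulates a generic two-step backstepping design to show how the two steps fit together. Its assumptions are not taken from the paper:

- The plant is written as ẋ1 = x2, ẋ2 = θu + F, with a constant lumped disturbance F.
- There is no measurement noise.
- α̇ is computed analytically instead of with the command filter.
- The control law has the textbook form u = (α̇ − F̂ − e1 − k2e2)/θ.

The function name, gains, and plant values are hypothetical.

```python
import math


def simulate_backstepping(use_estimate, k1=20.0, k2=20.0, theta=10.0,
                          F=0.5, dt=1e-4, t_end=3.0):
    """Euler simulation of a generic two-step backstepping loop (assumed plant form).

    Plant (assumed): x1_dot = x2, x2_dot = theta * u + F, with constant lumped F.
    Returns max |e1| over the last second of the run.
    """
    x1, x2 = 0.1, 0.0                     # initial position offset of 0.1 rad
    max_e1 = 0.0
    for n in range(int(round(t_end / dt))):
        t = n * dt
        xd, xd_dot, xd_ddot = math.sin(t), math.cos(t), -math.sin(t)
        e1 = x1 - xd                          # position tracking error
        alpha = xd_dot - k1 * e1              # dummy (virtual) control law
        e2 = x2 - alpha                       # dummy control error
        alpha_dot = xd_ddot - k1 * (x2 - xd_dot)  # exact here; filtered in the paper
        F_hat = F if use_estimate else 0.0    # ideal estimate vs. no compensation
        u = (alpha_dot - F_hat - e1 - k2 * e2) / theta
        x1 += dt * x2                         # Euler step of the assumed plant
        x2 += dt * (theta * u + F)
        if t >= t_end - 1.0:
            max_e1 = max(max_e1, abs(e1))
    return max_e1
```

With the ideal estimate, the steady-state error is limited only by discretization. Without compensation, e1 settles near F/(1 + k1k2). This illustrates why suppressing F by feedback alone requires large gains, and why a feedforward estimate of F is attractive.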

3.4. Controller Overall Architecture

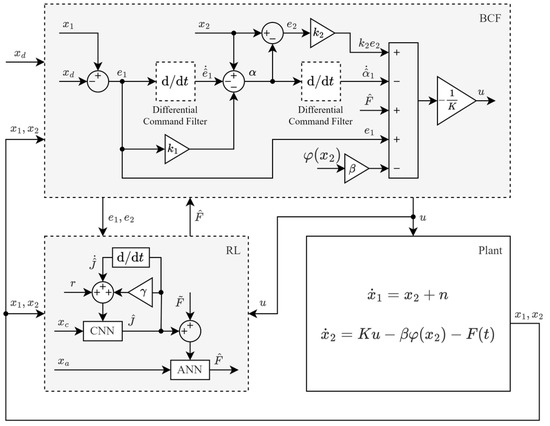

The BCF-RL controller was designed to address technical challenges in electromechanical servo systems. It consists of a BCF main controller and an RL auxiliary controller. The BCF main controller integrates a command filter into the backstepping control, enhancing the system’s response speed and significantly reducing errors caused by measurement noise. This improves the robustness and accuracy of the system. The RL auxiliary controller handles the online estimation of unknown disturbances in the system. Utilizing critic and actor neural networks, the RL auxiliary controller adapts to dynamic changes and effectively estimates the complex nonlinear disturbances. This combination enhances the adaptive capability of the system and improves the stability and reliability of the controller for practical applications. The controller structure is illustrated in Figure 2.

Figure 2.

Structure of the BCF-RL controller (ANN: actor neural network; CNN: critic neural network).

4. Design of RL Auxiliary Controller

The RL auxiliary controller, which comprises an actor neural network and a critic neural network, is an effective approach to solving optimal control problems for nonlinear systems. The core of this method lies in the actor network's ability to provide timely feedforward compensation by dynamically estimating uncertain disturbances in the nonlinear system. Concurrently, the critic network evaluates the operating cost in real time, quantifies the performance of the current control strategy, and provides feedback to the actor network to improve its subsequent decisions. The design of the RL auxiliary controller is described in this section.

4.1. Preliminaries

To achieve optimal tracking control, the instantaneous cost function is defined as (21).

where the two weighting coefficients are constants. The infinite-horizon cost function J to be minimized is given by (22).

where γ is the discount factor, which ensures that the cost function remains bounded even if the desired trajectory does not converge to zero. Rewriting (22) yields the Bellman equation (23).
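Equations (21)–(23) are not legible here, so the sketch below uses a discrete-time analogue under hypothetical assumptions. The stage cost is quadratic, q·e1² + r·u². The cost-to-go J_k = Σ γ^(i−k) r_i is evaluated backwards with the Bellman recursion J_k = r_k + γJ_{k+1}. The weights `q_e`, `r_u` and the function names are placeholders.

```python
def instantaneous_cost(e1, u, q_e=1.0, r_u=0.01):
    """Hypothetical quadratic stage cost q_e*e1^2 + r_u*u^2 (weights are placeholders)."""
    return q_e * e1 ** 2 + r_u * u ** 2


def discounted_cost_to_go(stage_costs, gamma):
    """J_k = sum_{i>=k} gamma^(i-k) * r_i over a finite sequence, computed with the
    Bellman recursion J_k = r_k + gamma * J_{k+1} and terminal value 0."""
    J = [0.0] * len(stage_costs)
    acc = 0.0
    for k in reversed(range(len(stage_costs))):
        acc = stage_costs[k] + gamma * acc
        J[k] = acc
    return J
```

For a constant stage cost c, the recursion approaches c/(1 − γ). This finite limit is the boundedness property that the discount factor provides when the tracking error does not vanish.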

4.2. Critic Neural Network

A critic neural network is used to approximate the value function of the Bellman equation in reinforcement learning. In this study, the critic network generates a scalar evaluation signal that is used to tune the actor network. Based on the universal approximation property of neural networks, the cost function J can be expressed as (24).

The critic network input, the input-to-hidden weights, the hidden-to-output weights, and the reconstruction error of the critic network appear in (24). Therefore, the approximation of J can be expressed as (25).

where the hatted weights are the estimates of the corresponding ideal weights, and the weight estimation errors are defined as the mismatches between the estimated and ideal weights.

Taking the derivative of the estimated cost function yields (26).

where the auxiliary terms are as defined in (26) and ⊙ denotes element-wise matrix multiplication (the Hadamard product). In the design of the neural network weight update laws, only the term indicated in (26) is considered.
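The sketch below shows one plausible reading of (25) and (26): a single-hidden-layer critic Ĵ = wc2ᵀ tanh(Wc1 X) and its gradient with respect to the input-to-hidden weights, which involves a Hadamard product. Several choices are assumptions: the storage layout (one row of Wc1 per hidden neuron), the five-element input, and the uniform 0.1 initial weights (borrowed from Section 6.1). The gradient can be checked against a finite difference.

```python
import math


def critic_forward(W1, w2, X):
    """J_hat = w2^T tanh(W1 X); W1 holds one row per hidden neuron (hypothetical layout)."""
    h = [math.tanh(sum(wji * xi for wji, xi in zip(row, X))) for row in W1]
    J_hat = sum(w * hj for w, hj in zip(w2, h))
    return J_hat, h


def critic_grad_W1(w2, h, X):
    """dJ_hat/dW1 = (w2 ⊙ (1 - h ⊙ h)) X^T, where ⊙ is the Hadamard product."""
    delta = [w * (1.0 - hj * hj) for w, hj in zip(w2, h)]  # element-wise products
    return [[d * xi for xi in X] for d in delta]            # outer product with X
```

Usage: `J, h = critic_forward(W1, w2, X)` followed by `critic_grad_W1(w2, h, X)` returns a matrix with the same shape as `W1`.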

The critic prediction error is defined accordingly, and the objective function Ec in (27) minimizes this approximation error.

Taking the partial derivative of Ec with respect to the corresponding critic weight estimate yields (28).

Therefore, the update law for this weight estimate is designed as (29).

where the learning-rate coefficients adjust the convergence rate of the weights.

Taking the partial derivative of Ec with respect to the remaining critic weight estimate yields (30).

The update law for this weight estimate is obtained as (31).

where the learning-rate coefficients adjust the convergence rate of the weights.
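The sketch below is a minimal gradient-descent critic update in the spirit of (27)–(31). It assumes, hypothetically, that the prediction error is a temporal-difference error ec = Ĵ_k − (r_k + γĴ_{k+1}) and that Ec = ½ec². The bracketed target is held fixed during the step. Both weight layers are updated from the same forward pass. The learning rates `lr1` and `lr2` play the role of the convergence-rate coefficients; all names are illustrative.

```python
import math


def forward(W1, w2, X):
    """Critic output J_hat = w2^T tanh(W1 X) and hidden activations."""
    h = [math.tanh(sum(w * x for w, x in zip(row, X))) for row in W1]
    return sum(w * hj for w, hj in zip(w2, h)), h


def critic_update(W1, w2, X, target, lr1, lr2):
    """One gradient-descent step on Ec = 0.5 * ec^2 with ec = J_hat - target.

    target (for example r_k + gamma * J_hat_next) is treated as a constant.
    Returns updated copies of (W1, w2).
    """
    J_hat, h = forward(W1, w2, X)
    ec = J_hat - target
    # dEc/dw2_j = ec * h_j
    w2_new = [w - lr2 * ec * hj for w, hj in zip(w2, h)]
    # dEc/dW1_ji = ec * w2_j * (1 - h_j^2) * X_i  (uses the pre-update w2)
    W1_new = [[wji - lr1 * ec * w2[j] * (1.0 - h[j] ** 2) * xi
               for wji, xi in zip(W1[j], X)] for j in range(len(W1))]
    return W1_new, w2_new
```

Holding the target fixed gives the usual semi-gradient update. Differentiating through Ĵ_{k+1} as well would give a residual-gradient variant, which is a different design choice.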

4.3. Actor Neural Network

Although the unmodeled dynamics F could be suppressed by the robust term in the actual control law designed above, doing so would require a high feedback gain. Therefore, to mitigate the adverse effects of a high feedback gain on the system, an actor network is adopted to estimate the unmodeled dynamics and provide feedforward compensation. The neural network (32) is used to approximate the function F.

The actor network input, the input-to-hidden weights, the hidden-to-output weights, and the reconstruction error of the actor network appear in (32).

The estimate of the unknown dynamics can be written as (33).

Similarly, the hatted actor weights are the estimates of the corresponding ideal weights, and the mismatches between the estimated and ideal weights are defined in (34).

The actor prediction error is defined accordingly, and the objective function (35) minimizes this approximation error.

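Taking the partial derivative of this objective function with respect to the corresponding actor weight estimate yields (36).

Two auxiliary variables are defined for this derivation, one of which is the fifth column vector of the corresponding weight matrix.

Therefore, the update law for this weight estimate is obtained as (37).

where the learning-rate coefficients adjust the convergence rate of the weights.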

Taking the partial derivative of the objective function with respect to the remaining actor weight estimate yields (38).

The update law for this weight estimate is obtained as (39).

where the learning-rate coefficients adjust the convergence rate of the weights.
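The mention of the fifth column of a weight matrix suggests that the actor gradient is propagated through the critic, as in action-dependent heuristic dynamic programming. The sketch below assumes exactly that, although the text does not confirm it:

- The actor output F̂ is the fifth critic input.
- The actor minimizes Ea = ½Ĵ².
- ∂Ĵ/∂F̂ is computed from the fifth column of the critic input weights.

For brevity, only the output-layer actor weights are updated. All names and dimensions are hypothetical.

```python
import math


def tanh_layer(W, X):
    """Hidden activations tanh(W X), with one row of W per hidden neuron."""
    return [math.tanh(sum(w * x for w, x in zip(row, X))) for row in W]


def actor_critic_step(Wa1, wa2, Wc1, wc2, Xa, Xc_state, lr):
    """One output-layer actor update that minimizes Ea = 0.5 * J_hat^2.

    Assumed structure: the actor output F_hat is appended as the fifth critic
    input, so dJ_hat/dF_hat uses the fifth column of Wc1 (index 4).
    Returns (new wa2, Ea evaluated before the step).
    """
    ha = tanh_layer(Wa1, Xa)
    F_hat = sum(w * h for w, h in zip(wa2, ha))   # actor estimate of F
    Xc = Xc_state + [F_hat]                      # critic input vector
    hc = tanh_layer(Wc1, Xc)
    J_hat = sum(w * h for w, h in zip(wc2, hc))
    # dJ_hat/dF_hat through the critic, using the fifth column of Wc1
    dJ_dF = sum(wc2[j] * (1.0 - hc[j] ** 2) * Wc1[j][4] for j in range(len(hc)))
    # dEa/dwa2_j = J_hat * dJ_dF * ha_j (chain rule through the critic)
    wa2_new = [w - lr * J_hat * dJ_dF * h for w, h in zip(wa2, ha)]
    return wa2_new, 0.5 * J_hat ** 2
```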

5. Stability Analysis

5.1. Convergence Analysis of Weight Parameters in RL Neural Networks

Lemmas 2–5 prove the weight convergence of the RL actor and critic networks.

Lemma 2.

The update law for the critic weight estimate is given by (29). If the weight update learning coefficient satisfies (40), the weight estimation error is guaranteed to be uniformly ultimately bounded.

Proof of Lemma 2.

For this critic weight estimation error, the Lyapunov function (41) is selected.

Considering (23) and (29), the first derivative of (41) is given by (42).

The last term of (42) can be expanded to obtain (43).

Combining (42) and (43) yields (44):

where λmax(·) denotes the maximum eigenvalue of the indicated matrix, and the remaining constants in (44) are the upper bounds of the corresponding bounded signals.

When the weight update coefficient satisfies (40), (45) can be obtained:

The weight estimation error therefore converges exponentially to a small neighborhood of the origin. □

Lemma 3.

The update law for the critic weight estimate is given by (31). If the weight update learning coefficient satisfies (46), the weight estimation error is guaranteed to be uniformly ultimately bounded.

Proof of Lemma 3.

For this critic weight estimation error, the Lyapunov function (47) is selected.

Considering (31), the first derivative of (47) is given by (48).

The first term of (48) can be expanded as (49):

Combining (48) and (49) yields (50):

When the weight update coefficient satisfies (46), (51) can be obtained as follows:

The weight estimation error therefore converges exponentially to a small neighborhood of the origin. □

Lemma 4.

The update law for the actor weight estimate is given by (37). If the weight update learning coefficient satisfies (52), the weight estimation error is guaranteed to be uniformly ultimately bounded.

Proof of Lemma 4.

For this actor weight estimation error, the Lyapunov function (53) is selected.

Considering (37), the first derivative of (53) is given by (54).

where the constant bound is the upper bound of the absolute value of the corresponding signal, and λmax(·) denotes the maximum eigenvalue of the indicated matrix.

When the weight update coefficient satisfies (52), (55) can be obtained.

The weight estimation error therefore converges exponentially to a small neighborhood of the origin. □

Lemma 5.

The update law for the actor weight estimate is given by (39). If the weight update learning coefficient satisfies (56), the weight estimation error is guaranteed to be uniformly ultimately bounded.

Proof of Lemma 5.

For this actor weight estimation error, the Lyapunov function (57) is selected.

Considering (39), the first derivative of (57) is given by (58).

When the weight update coefficient satisfies (56), (59) can be obtained as follows:

The weight estimation error therefore converges exponentially to a small neighborhood of the origin. □

Remark 1.

The convergence of the actor network weights ensures that the estimation error of the unknown disturbances is bounded. The convergence of the critic network weights ensures that the estimated cost function approaches its true value.

5.2. Proof of Closed-Loop Stability of the System

Theorem 1.

For system (1) under the control law (19) with the weight update laws (29), (31), (37), and (39), if the control gains satisfy (60), then all closed-loop signals of the system are bounded and the tracking error e1 converges exponentially to a small neighborhood of the origin, as expressed in (61).

Proof of Theorem 1.

Define the Lyapunov candidate function as (62).

According to (13), (14), (17) and (19), the derivative of V can be expressed as (63):

Since the selected control gains satisfy (60), (64) follows:

The tracking error e1 therefore converges exponentially to a small neighborhood of the origin. □
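A simplified version of the argument behind Theorem 1 is sketched below. It assumes, hypothetically, that (63) reduces to the generic backstepping form V̇ = −k1e1² − k2e2² + e2F̃ with |F̃| ≤ F̃_M. It ignores the command-filter and weight-error terms that the full proof handles. The argument uses Young's inequality (e2F̃ ≤ ½e2² + ½F̃²) and the comparison lemma, and it requires k2 > ½.

```latex
\begin{aligned}
V &= \tfrac{1}{2} e_1^2 + \tfrac{1}{2} e_2^2, \\
\dot V &= -k_1 e_1^2 - k_2 e_2^2 + e_2 \tilde F
  \le -k_1 e_1^2 - \left(k_2 - \tfrac{1}{2}\right) e_2^2 + \tfrac{1}{2} \tilde F_M^2
  \le -\lambda V + c, \\
\lambda &= 2 \min\!\left(k_1,\; k_2 - \tfrac{1}{2}\right), \qquad c = \tfrac{1}{2} \tilde F_M^2, \\
V(t) &\le V(0)\, e^{-\lambda t} + \frac{c}{\lambda}\left(1 - e^{-\lambda t}\right)
\;\Longrightarrow\;
\limsup_{t \to \infty} |e_1(t)| \le \sqrt{2c/\lambda} = \tilde F_M / \sqrt{\lambda}.
\end{aligned}
```

Larger gains increase λ and shrink the ultimate bound, and a better disturbance estimate shrinks F̃_M. This is the same trade-off that motivates the actor network.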

6. Experimental Verification

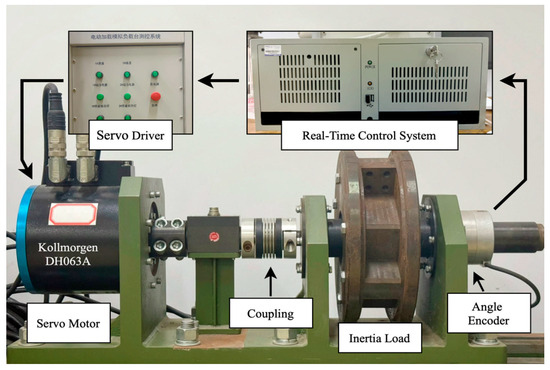

The overall assembly and control system of the experimental platform are illustrated in Figure 3, and the component list and motor specifications are detailed in Table 1. The platform comprises the following core components: a base unit; a PMSM drive system, including a Kollmorgen D063M-13-1310 PMSM, a Kollmorgen ServoStar 620 servo drive (Kollmorgen Corporation, Radford, VA, USA), a Heidenhain ERN180 rotary encoder with ±13 arcsec accuracy (Heidenhain GmbH, Traunreut, Germany), an inertia flywheel, and a coupling mechanism; a power supply module; and a measurement-control system. The measurement-control system integrates monitoring software with an industrial computer running the real-time operating system RTU, which executes control programs written in C. Hardware interfaces include an Advantech PCI-1723 16-bit D/A conversion card for control command output and a Heidenhain IK-220 16-bit acquisition card for encoder signal collection. With a control period of 0.5 ms, the system velocity is computed in real time from the high-precision position signal using a backward-difference algorithm.
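A short sketch illustrates the backward-difference velocity estimate and shows why the velocity signal is noise-sensitive. With Ts = 0.5 ms, a position error bounded by δ in each sample can change the estimate by up to 2δ/Ts. For a hypothetical δ = 1e-5 rad, this gives 2 × 1e-5 / 5e-4 = 0.04 rad/s. The function names and δ are illustrative, not measured platform values.

```python
def backward_difference(positions, ts):
    """Velocity estimates v_k = (x_k - x_{k-1}) / ts for k >= 1."""
    return [(positions[k] - positions[k - 1]) / ts for k in range(1, len(positions))]


def worst_case_velocity_error(position_error_bound, ts):
    """If every position sample is off by at most delta, the backward
    difference can be off by up to 2 * delta / ts."""
    return 2.0 * position_error_bound / ts
```

Because the error bound scales with 1/Ts, a faster control loop makes raw differentiation noisier. This is the motivation for filtering the derivative instead.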

Figure 3.

Electromechanical servo system experimental platform.

Table 1.

Experimental system components list.

6.1. Overview of Proposed and Comparative Controllers

To evaluate the effectiveness of the proposed algorithm, the following five controllers were implemented and compared under identical conditions:

C1: To tune the proposed BCF-RL controller, the parameters of the BCF main controller were first determined according to Theorem 1 to ensure fast response and stability. The RL auxiliary controller was then integrated, and its parameters were adjusted to achieve fast convergence of the neural networks. Finally, the parameters were fine-tuned to optimize the overall control performance. After this tuning, the control parameters were determined; the hidden layer of each neural network contained five neurons, and each element of the initial weights was set to 0.1. The parameters of the friction model were obtained through friction-fitting experiments. The controller gains of C2–C4 are the same as those in C1.

C2: The BCF-SNN controller estimates unknown dynamics using a single actor neural network (SNN). Unlike the proposed controller, it has no critic network to evaluate the actor network. Its control law is given in (65).

To ensure a fair comparison, the initial weight value of the BCF-SNN controller was set to be the same as that of the BCF-RL controller.

Remark 2.

The neural networks used in this study have a shallow architecture and therefore lower computational complexity than deep neural networks. They are implemented in C and executed on the real-time control system.

C3: The extended state observer (ESO) is a classic method for estimating system states and disturbances. To further compare disturbance estimation performance, the neural network in C2 was replaced with an ESO. The specific design of the ESO can be found in [19]. The ESO bandwidth was set to .
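The ESO design of [19] is not reproduced in this section. As a stand-in, the sketch uses the widely used linear ESO with bandwidth parameterization, in which all three observer poles sit at −ω0, giving gains 3ω0, 3ω0², and ω0³. The plant form ẋ1 = x2, ẋ2 = θu + d, the zero input, the constant disturbance, and all numerical values are hypothetical.

```python
def simulate_leso(omega0=100.0, theta=10.0, d=1.0, dt=5e-4, t_end=2.0):
    """Linear ESO with bandwidth parameterization (assumed design, may differ from [19]).

    Plant (assumed): x1_dot = x2, x2_dot = theta*u + d, with u = 0 and constant d.
    Observer states: z1 ~ x1, z2 ~ x2, z3 ~ d. Returns the final z3.
    """
    b1, b2, b3 = 3 * omega0, 3 * omega0 ** 2, omega0 ** 3  # all poles at -omega0
    x1 = x2 = 0.0
    z1 = z2 = z3 = 0.0
    u = 0.0
    for _ in range(int(round(t_end / dt))):
        err = x1 - z1                        # output estimation error (x1 is measured)
        z1, z2, z3 = (z1 + dt * (z2 + b1 * err),
                      z2 + dt * (z3 + theta * u + b2 * err),
                      z3 + dt * (b3 * err))
        x1, x2 = x1 + dt * x2, x2 + dt * (theta * u + d)  # Euler step of the plant
    return z3
```

A single bandwidth ω0 sets all three gains. Raising it speeds up convergence of z3 but, with noisy measurements, passes more noise into the disturbance estimate. This trade-off is consistent with the oscillatory ESO estimate observed later in Figure 5.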

C4: The BCF controller, which is the BCF-RL controller without the neural networks, uses the control law (66).

C5: The traditional PID controller follows the control law expressed in (67).

The PID control parameters were set as and . These values were determined using the typical Type-II system method for PID tuning and were validated through simulation.
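Control law (67) is not legible here, so the sketch below assumes a standard positional discrete PID on the tracking error. It uses rectangular integration and a backward-difference derivative, sampled at the 0.5 ms control period. The class name and any gains passed to it are hypothetical.

```python
class DiscretePID:
    """Positional discrete PID (assumed form): rectangular integration and a
    backward-difference derivative of the tracking error, sample time ts."""

    def __init__(self, kp, ki, kd, ts):
        self.kp, self.ki, self.kd, self.ts = kp, ki, kd, ts
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first sample

    def update(self, error):
        self.integral += error * self.ts
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.ts
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

Usage: create `DiscretePID(kp, ki, kd, 5e-4)` once and call `update(e1)` every control period. The derivative term here is the same raw backward difference that makes the measured velocity noisy.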

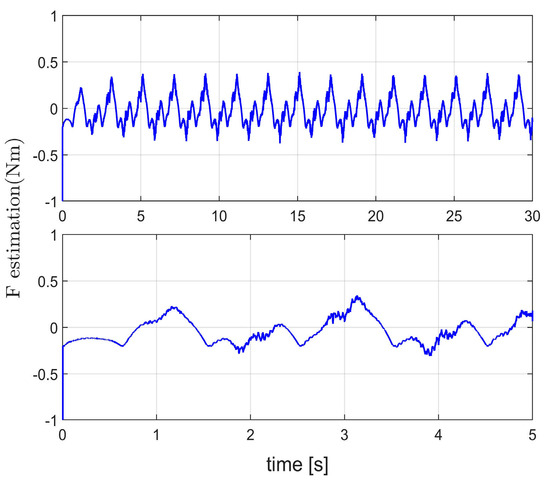

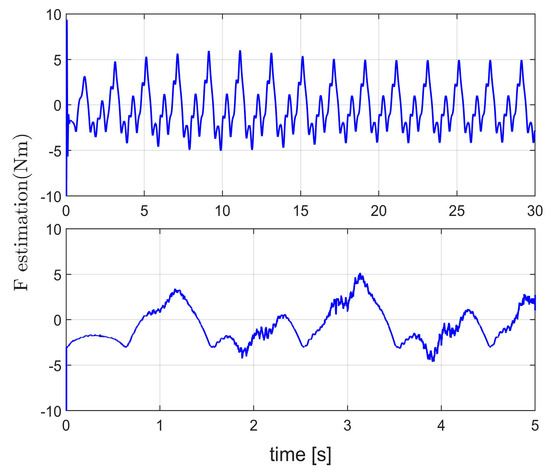

6.2. Verification of Disturbance Estimation

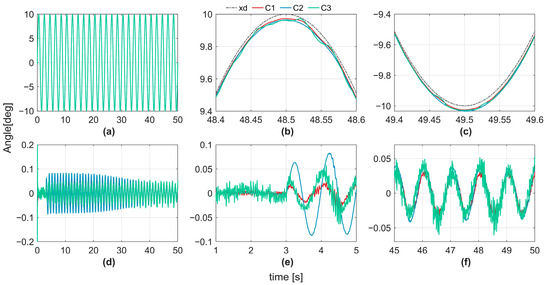

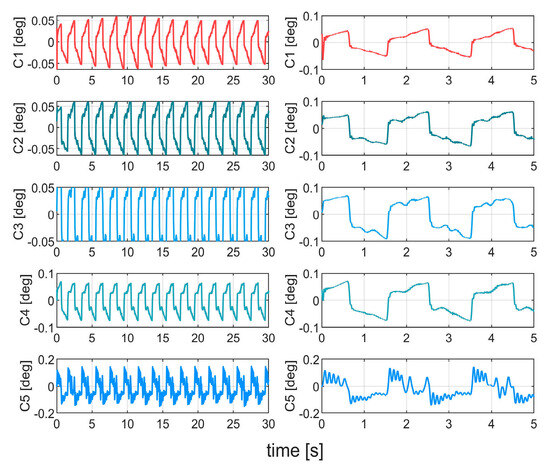

Simulations were conducted in MATLAB/Simulink (R2023b). Three controllers (C1, C2, and C3) are compared in this subsection. The comparison between C1 and C2 evaluates the actor-critic mechanism implemented in C1, whereas C3 employs a classical extended state observer (ESO) for disturbance estimation. Because the unknown dynamics cannot be measured in the real system, disturbance estimation is evaluated only in simulation. In the simulation, the desired trajectory is specified in degrees, and the unknown disturbance (in N·m) is zero during the first 3 s and is introduced at t = 3 s.

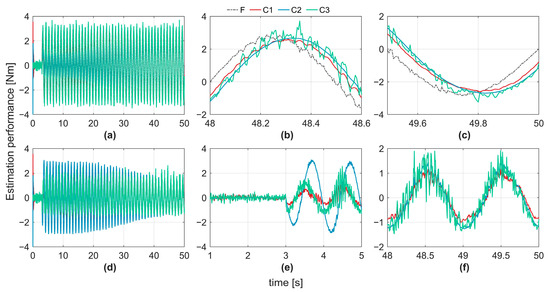

The tracking trajectories and errors of the three controllers are shown in Figure 4. Figure 4a shows the tracking curves of the three controllers. Figure 4b and Figure 4c show zoomed-in views of the last crest and trough regions in Figure 4a, respectively. Figure 4d presents the tracking error curves of the three controllers. To better observe the tracking performance immediately after the disturbance is introduced at 3 s, Figure 4e shows a zoomed-in view of Figure 4d over the 1–5 s interval. Before the disturbance occurs, C3 exhibits the largest tracking error, followed by C2, whereas C1 achieves the smallest error, although the differences are small. Figure 4f shows the zoomed-in tracking errors during the steady-state phase (45–50 s), in which C3 retains the largest maximum tracking error. Benefiting from the more accurate disturbance estimation of the neural networks, C2 and C1 achieve significantly smaller tracking errors. In particular, C1, with its actor-critic mechanism, achieves the smallest tracking error. The maximum steady-state tracking errors of C1, C2, and C3 are 0.038°, 0.043°, and 0.06°, respectively.

Figure 4.

Tracking results. (a) Tracking performance of controllers C1–C3; (b) zoomed-in view of the last rising edge in (a); (c) zoomed-in view of the last falling edge in (a); (d) tracking error of controllers C1–C3; (e) zoomed-in view of the first 5 s in (d); (f) zoomed-in view of the last 5 s in (d).

Figure 5 illustrates the estimation trajectories and errors of the unknown disturbances for the three controllers. Figure 5b and Figure 5c show zoomed-in views of the last crest and trough regions in Figure 5a, respectively. Figure 5d shows the estimation error curves of the unknown disturbances, and Figure 5e presents a zoomed-in view of Figure 5d over the 1–5 s interval. In the absence of disturbances, the estimation errors of all three controllers fluctuate around zero. After the disturbance is introduced at 3 s, C1 achieves the smallest estimation error, followed by C3 and C2. Figure 5f highlights the steady-state phase (45–50 s), in which C1 still maintains the smallest estimation error, C2 ranks second, and C3 has the largest error. Although C3 initially converges faster when estimating the unknown disturbances, its steady-state accuracy is inferior to that of C1 and C2. Additionally, the estimation curve of C3 exhibits persistent oscillations and is less smooth than those of C2 and C1, which may impose an extra computational burden on the system. The superior estimation accuracy of C1 over C2 further validates the effectiveness of the proposed actor-critic mechanism.

Figure 5.

Estimation results of unknown dynamics. (a) Estimation performance of controllers C1–C3; (b) zoomed-in view of the last rising edge in (a); (c) zoomed-in view of the last falling edge in (a); (d) estimation errors of controllers C1–C3; (e) zoomed-in view of the first 5 s in (d); (f) zoomed-in view of the last 2 s in (d).

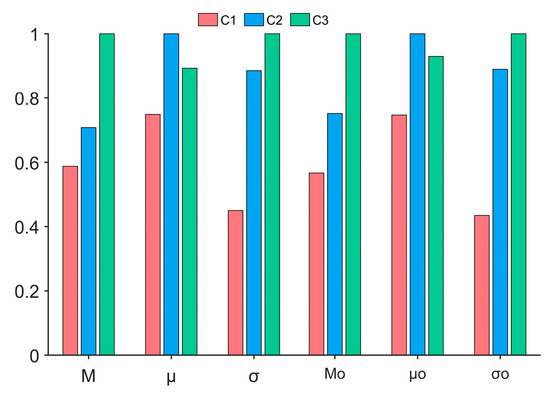

Table 2 summarizes the simulation results. The primary performance metrics are the maximum absolute tracking error (M), the average tracking error (μ), and the standard deviation of the tracking error (σ), as defined in [19]. The subscript 'o' denotes the corresponding metric for the unknown-dynamics estimation error. Figure 6 shows a bar chart of the normalized data in Table 2. Both Table 2 and Figure 6 indicate that C1 outperforms C2 and C3 on all metrics.
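The exact formulas of [19] are not repeated in this section. A common definition, assumed here, is M = max|e|, μ = the mean of |e|, and σ = the standard deviation of |e| around μ, all evaluated over the sampled errors. The function name is illustrative.

```python
import math


def tracking_metrics(errors):
    """(M, mu, sigma) under an assumed definition: max |e|, mean |e|, and the
    standard deviation of |e| around its mean, over sampled errors."""
    abs_e = [abs(e) for e in errors]
    n = len(abs_e)
    M = max(abs_e)
    mu = sum(abs_e) / n
    sigma = math.sqrt(sum((a - mu) ** 2 for a in abs_e) / n)
    return M, mu, sigma
```

Applied to the estimation error F − F̂ instead of the tracking error, the same function yields the metrics with subscript 'o'.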

Table 2.

Performance in simulation.

Figure 6.

Normalized performance metrics of C1–C3.

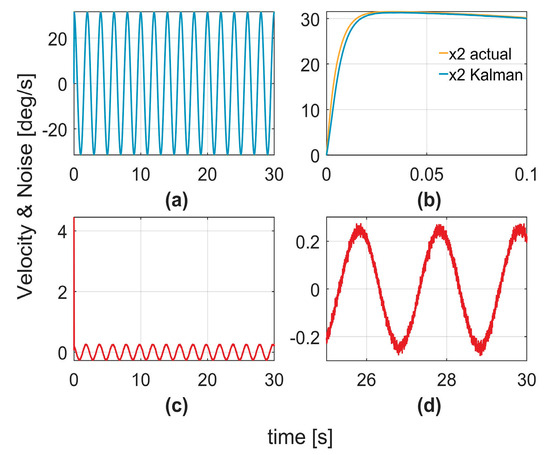

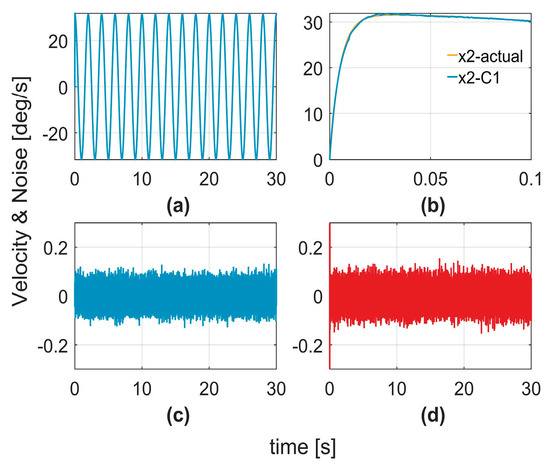

6.3. Verification of Handling System Noise

To verify the effectiveness of the controller in handling system noise, a comparative analysis with a Kalman filter was conducted. Figure 7a,b illustrates the velocity estimates generated by the Kalman filter. Figure 7c,d depicts the estimation errors, that is, the differences between the estimated and actual velocities, which correspond to the estimated noise signals. Figure 8a,b shows the velocity estimates produced by the C1 controller, and Figure 8d displays the noise signals estimated using Equation (11). Figure 8c shows the artificially introduced noise signals added to the system. Comparing Figure 7d and Figure 8d shows that the proposed method not only estimates the noise signal more accurately but also produces smoother estimation curves, significantly mitigating the impact of noise on the system. These results indicate that the proposed method outperforms the Kalman filter in terms of noise suppression and system robustness.

Figure 7.

Kalman filter noise reduction results. (a) Velocity estimation; (b) local zoom-in of (a); (c) estimation error; (d) local zoom-in of (c).

Figure 8.

C1 noise reduction results. (a) Velocity estimation; (b) local zoom-in of (a); (c) actual noise; (d) C1 noise estimation.

6.4. Verification of Tracking Performance

The tracking performance of the five controllers was evaluated on a desired trajectory specified in degrees.

6.4.1. Case 1: No Additive Disturbance

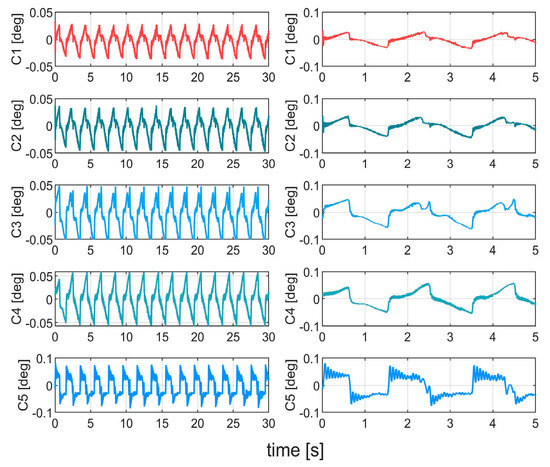

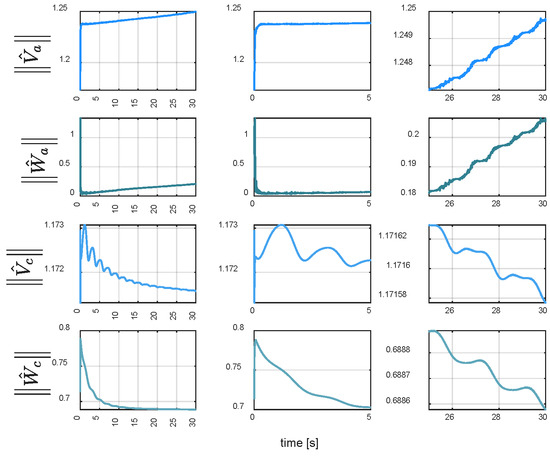

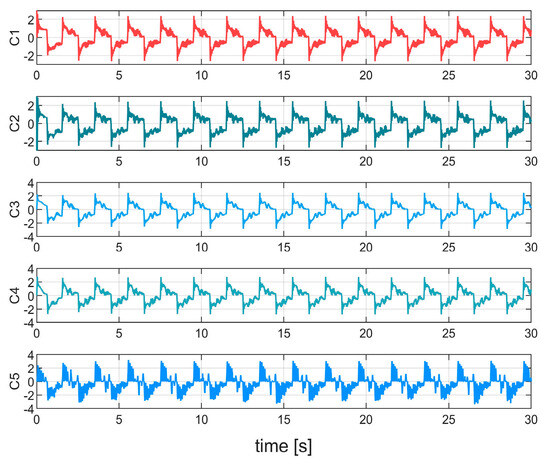

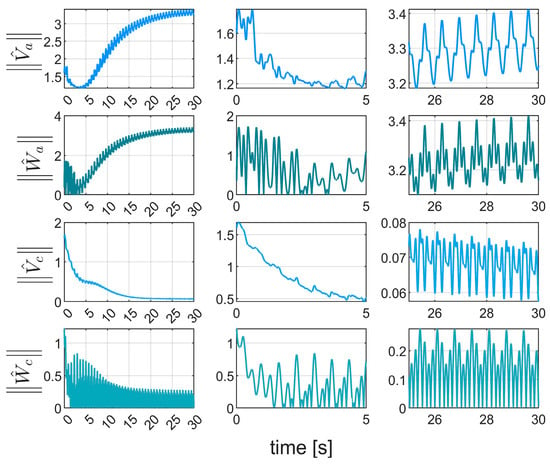

Figure 9 compares the tracking errors in Case 1. Controller C5 exhibited the largest maximum steady-state error (0.074°), followed by C4 (0.062°). With reduced sensitivity to disturbances, C3 achieved a lower maximum steady-state error (0.056°) than these two controllers. By estimating and compensating for the unknown dynamics with neural networks, C2 and C1 achieved higher precision; in particular, C1, which additionally incorporates a critic neural network, achieved the best tracking performance (maximum steady-state error: 0.041°). Figure 10 compares the controller outputs and hence their energy consumption. Figure 11 shows the estimation results, verifying the real-time implementation of the RL-augmented controller. Figure 12 shows that the actor network weights converge rapidly under disturbance-free conditions.

Figure 9.

Tracking errors in Case 1.

Figure 10.

Controller outputs in Case 1.

Figure 11.

Estimation results in Case 1.

Figure 12.

Weight norms in Case 1.

Table 3 shows that the proposed controller C1 is superior on all tracking-accuracy indicators (M: maximum steady-state error; μ: mean error; σ: standard deviation of error). The root mean square of the control input (Eu) reflects energy consumption. The comparison shows that C4 is more robust than C5; however, because C4 lacks disturbance estimation and compensation, its control precision is lower than that of C1–C3. Owing to its actor-critic structure, C1 achieves higher tracking accuracy than C2, which relies on a single neural network for disturbance estimation. Although C2 consumes slightly less energy than C1, C1 achieves a 9% lower M, the most critical performance metric.
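The energy index Eu and the relative improvements quoted in this section can be computed as below. The sketch assumes that Eu is the RMS of the uniformly sampled control input, sqrt(mean(u_k²)); the helper names `control_energy` and `relative_reduction` are hypothetical.

```python
import math
from typing import Sequence


def control_energy(u: Sequence[float]) -> float:
    """Energy index Eu, assumed here to be the RMS of the sampled control input."""
    if not u:
        raise ValueError("control sequence must not be empty")
    return math.sqrt(sum(x * x for x in u) / len(u))


def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (baseline - improved) / baseline
```

With the Case 1 values quoted above, `relative_reduction(0.074, 0.041)` is about 44.6, i.e., the maximum steady-state error of C1 is roughly 45% below that of C5.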

Table 3.

Performance of controllers under Case 1.

6.4.2. Case 2: Position–Velocity–Input Disturbance

Given the diversity and complexity of actual working conditions, a position–velocity–input disturbance is used to test the controllers; that is, the actual input of the plant is $0.5u - 0.2x_1 + 0.05x_2$. Compared with Case 1, both the structured and unstructured uncertainties change significantly in this case.
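The Case 2 disturbance can be written as a small input-mapping function. The sketch below takes the formula from the text and also expresses it as an equivalent input disturbance relative to the commanded input u, which makes visible that half of the input gain is lost and that state-dependent terms are added. The function names are hypothetical, and the units of x1 and x2 follow whatever convention the plant model uses.

```python
def case2_plant_input(u: float, x1: float, x2: float) -> float:
    """Actual plant input in Case 2: 0.5*u - 0.2*x1 + 0.05*x2.

    u: commanded control input; x1: angular displacement; x2: angular velocity.
    """
    return 0.5 * u - 0.2 * x1 + 0.05 * x2


def equivalent_input_disturbance(u: float, x1: float, x2: float) -> float:
    """Actual minus commanded input, i.e. the lumped input-side disturbance
    -0.5*u - 0.2*x1 + 0.05*x2 (halved input gain plus state-dependent terms)."""
    return case2_plant_input(u, x1, x2) - u
```

For example, with u = 1, x1 = 0.5, and x2 = 2, the plant receives 0.5 − 0.1 + 0.1 = 0.5, so the equivalent input disturbance is −0.5.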

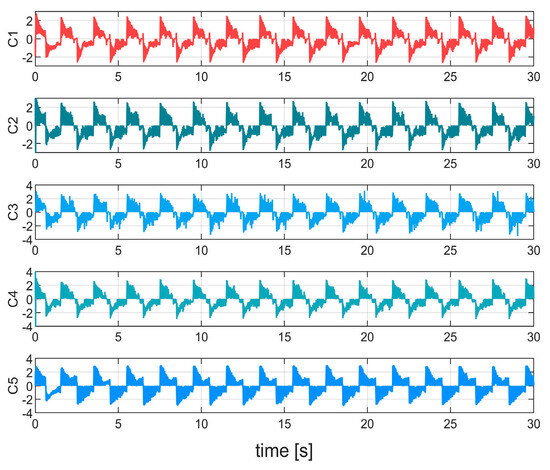

As shown in Figure 13 and Table 4, under these more complex conditions, C5 exhibits the largest steady-state tracking error (0.12°), while C4 offers only a limited improvement. C3 reduces the error by 37% and 11% relative to C5 and C4, respectively. By employing neural network-based disturbance estimation and compensation, C2 further reduces the error to 0.064°, and C1 achieves the lowest tracking error of 0.05°. The control inputs compared in Figure 14 confirm that C1 achieves the best tracking while maintaining low energy consumption. The disturbance estimates in Figure 15 and the weight norms in Figure 16 show that, despite the increased complexity, the actor-critic networks remain convergent and the RL-assisted controller operates stably under adverse conditions.

Figure 13.

Tracking errors in Case 2.

Table 4.

Performance of controllers under Case 2.

Figure 14.

Controller outputs in Case 2.

Figure 15.

Estimation results in Case 2.

Figure 16.

Weight norms in Case 2.

6.4.3. Confidence Interval and t-Test Analysis

To ensure the reliability of the experimental results, repeated experiments were conducted on the five controllers (C1–C5) under Case 2, yielding 20 trajectory tracking error samples per controller. At a 95% confidence level (α = 0.05), the confidence interval was calculated from the t-distribution as follows:

$$\bar{x}_i \pm t_{\alpha/2,\,n-1}\,\frac{s_i}{\sqrt{n}}$$

where $\bar{x}_i$ is the mean tracking error of the i-th controller, $s_i$ is the standard deviation of that controller's tracking error, and the sample size is n = 20. From the t-distribution table, with n − 1 = 19 degrees of freedom, the critical value is $t_{0.025,\,19} = 2.093$.
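The interval x̄ ± t·s/√n described above can be sketched as follows, assuming the 20 samples of a controller are available as a list and s is the sample standard deviation (n − 1 denominator). The constant 2.093 is the critical value quoted in the text and is valid only for n = 20; the function and constant names are hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple

# Two-sided 95% critical value for df = 19, as quoted in the text (valid only for n = 20).
T_CRIT_95_DF19 = 2.093


def t_confidence_interval(samples: Sequence[float],
                          t_crit: float = T_CRIT_95_DF19) -> Tuple[float, float]:
    """Return (lower, upper) of mean +/- t_crit * s / sqrt(n),
    where s is the sample standard deviation (n - 1 denominator)."""
    n = len(samples)
    if n < 2:
        raise ValueError("at least two samples are required")
    mean = statistics.fmean(samples)
    s = statistics.stdev(samples)
    half_width = t_crit * s / math.sqrt(n)
    return mean - half_width, mean + half_width
```

For other sample sizes, the critical value must be taken from a t-table or a statistics library instead of the default.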

The confidence interval analysis in Table 5 indicates that C1 has the lowest mean tracking error (0.0418 deg) and the narrowest confidence interval ([0.0329, 0.0507]), i.e., the smallest and most consistent error. In comparison, C2 has a slightly higher mean (0.0474 deg) and a marginally wider interval ([0.0375, 0.0573]); the confidence intervals of C3, C4, and C5 progressively increase in both mean and width, with C5 showing the highest mean (0.0835 deg) and the widest interval ([0.0671, 0.0999]), indicating the greatest variability. Overall, C1 outperforms C2, C3, C4, and C5 in both mean error and consistency, making it the best of the five controllers.

Table 5.

Confidence Interval Analysis of Controllers under Case 2.

To further quantify the performance advantage of C1, an independent-samples Welch's t-test (a two-sample t-test that does not assume equal variances) was used for inter-group comparison. As shown in Table 6, with C1 as the baseline, one-tailed tests (α = 0.05) were conducted against C2–C5. The null hypothesis was $H_0\colon \mu_1 = \mu_i$, i.e., no difference in mean error between C1 and the i-th controller; the alternative hypothesis was $H_1\colon \mu_1 < \mu_i$, i.e., the mean error of C1 is smaller than that of the i-th controller. The one-tailed critical t-value, based on the Welch–Satterthwaite degrees of freedom

$$\nu = \frac{\left(s_1^2/n_1 + s_i^2/n_i\right)^2}{\dfrac{\left(s_1^2/n_1\right)^2}{n_1-1} + \dfrac{\left(s_i^2/n_i\right)^2}{n_i-1}},$$

was approximately −1.686 from the t-distribution table. The t-value was calculated using the following formula:

$$t = \frac{\bar{x}_1 - \bar{x}_i}{\sqrt{s_1^2/n_1 + s_i^2/n_i}}$$

where $\bar{x}_1$ and $s_1$ are the mean and standard deviation of the tracking error of C1, and $n_1 = n_i = 20$.

Table 6.

t-Test Results for Controllers under Case 2.

The statistical results in Table 6 indicate that, at the 95% confidence level, the difference in mean error between C1 and C2 is not statistically significant (t = −0.881), so $H_0$ cannot be rejected. However, C1 shows a significant advantage over C3, C4, and C5, with t-values of −2.128, −2.916, and −4.675, respectively, all below the critical value of −1.686. The negative mean differences (−0.016, −0.0226, and −0.0417) further confirm that the tracking error of C1 is significantly lower than that of these controllers. Overall, the hypothesis tests show that C1 is statistically superior to C3–C5 in trajectory tracking and has a lower, though not significantly lower, mean error than C2.
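The t-values in Table 6 can be cross-checked against the intervals in Table 5 using summary statistics only. The sketch below assumes that each interval in Table 5 is a symmetric t-interval with n = 20 and critical value 2.093, back-calculates each standard deviation from the half-width, and evaluates Welch's t statistic and the Welch–Satterthwaite degrees of freedom. Because the interval endpoints are rounded, the recovered values are approximate; the function names are hypothetical.

```python
import math
from typing import Tuple


def sd_from_interval(lower: float, upper: float, n: int, t_crit: float = 2.093) -> float:
    """Back-calculate the sample standard deviation from a symmetric
    t-interval mean +/- t_crit * s / sqrt(n)."""
    return (upper - lower) / 2.0 * math.sqrt(n) / t_crit


def welch_t(mean1: float, sd1: float, n1: int,
            mean2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    from the summary statistics of two independent samples."""
    a = sd1 ** 2 / n1
    b = sd2 ** 2 / n2
    t = (mean1 - mean2) / math.sqrt(a + b)
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return t, df


if __name__ == "__main__":
    n = 20
    # (mean, lower, upper) in deg, taken from the Table 5 values quoted in the text.
    c1 = (0.0418, 0.0329, 0.0507)
    c2 = (0.0474, 0.0375, 0.0573)
    c5 = (0.0835, 0.0671, 0.0999)
    s1 = sd_from_interval(c1[1], c1[2], n)
    for name, (m, lo, hi) in (("C2", c2), ("C5", c5)):
        t, df = welch_t(c1[0], s1, n, m, sd_from_interval(lo, hi, n), n)
        print(f"C1 vs {name}: t = {t:.3f}, df = {df:.1f}")
```

With the C1 and C2 rows this gives t ≈ −0.88 with roughly 38 degrees of freedom, and with C1 and C5 t ≈ −4.68, in line with the reported −0.881 and −4.675.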

7. Conclusions

Considering the difficulty of establishing accurate dynamic models for electromechanical servo systems and the sensitivity of state measurements to noise, this study proposes a nonlinear backstepping control algorithm that integrates a command filter with RL. By combining the command filter and RL, the algorithm addresses measurement noise, unmodeled disturbances, and external interference in electromechanical servo systems. The weights of the RL neural networks are updated online using a gradient descent algorithm, and closed-loop stability is proven using the Lyapunov function method. Comparative experiments validated the effectiveness of the designed controller, and confidence intervals and t-tests were used to verify the statistical reliability of the results. The experimental results show that the proposed algorithm is strongly robust to system uncertainties and external disturbances and achieves high tracking accuracy while keeping energy consumption low.

Author Contributions

C.X.: conceptualization, methodology, software, investigation, formal analysis, writing—original draft. J.H.: conceptualization, funding acquisition, formal analysis. J.W.: conceptualization, supervision. W.D.: visualization, investigation. J.Y.: validation. X.Z.: resources. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant No. 52475061, in part by the Natural Science Foundation of Jiangsu Province under Grant No. BK20232038, in part by the Special Project for Frontier Leading Technology Basic Research of Jiangsu Province under Grant No. BK20232031, in part by the Natural Science Foundation of Jiangsu Province under Grant No. BK20243018, and in part by the Major Science and Technology Projects in Jiangsu Province under Grant No. BG2024008.

Data Availability Statement

The data supporting this study’s findings are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV | Unmanned aerial vehicle |
| SMC | Sliding mode control |
| RL | Reinforcement learning |
| LD | Linear dichroism |
| FLS | Fuzzy logic systems |
| ADP | Adaptive dynamic programming |
| RISE | Robust integral of the sign of the error |
| BCF-RL | Backstepping command filter controller based on reinforcement learning |
| EKF | Extended Kalman filter |
| ANN | Actor neural network |
| CNN | Critic neural network |
| SNN | Single neural network |
| PMSM | Permanent magnet synchronous motor |

Nomenclature

| Symbol | Description | Type/Format |
| --- | --- | --- |
|  | reference trajectory | Scalars |
|  | angular displacement | Scalars |
|  | angular velocity | Scalars |
|  | inertial load coefficient | Scalars |
|  | torque constant | Scalars |
|  | friction coefficient | Vectors |
|  | measurement errors | Scalars |
|  |  | Scalars |
| α | dummy control law | Scalars |
|  | estimated disturbance | Scalars |
|  | instantaneous cost function | Functions |
|  | infinite-horizon cost function | Functions |
|  | weights from the input layer to the hidden layer of the critic neural network | Matrices |
|  | weights from the hidden layer to the output layer of the critic neural network | Vectors |
|  |  | Functions |
|  |  | Functions |
|  | element-wise matrix multiplication (Hadamard product) | Operators |
|  | objective function of the critic neural network | Functions |
|  |  | Functions |
|  |  | Functions |
|  | weights from the input layer to the hidden layer of the actor neural network | Matrices |
|  | weights from the hidden layer to the output layer of the actor neural network | Vectors |
|  | objective function of the actor neural network | Functions |
|  |  | Functions |
|  |  | Functions |

References

- Wang, X.; Liu, H.; Ma, J.; Gao, Y.; Wu, Y. Compensation-based Characteristic Modeling and Tracking Control for Electromechanical Servo Systems with Backlash and Torque Disturbance. Int. J. Control Autom. Syst. 2024, 22, 1869–1882.

- Xiong, S.; Cheng, X.; Ouyang, Q.; Lv, C.; Xu, W.; Wang, Z. Disturbance compensation-based feedback linearization control for air rudder electromechanical servo systems. Asian J. Control 2025, early view.

- Yuan, M.; Zhang, X. Minimum-Time Transient Response Guaranteed Control of Servo Motor Systems with Modeling Uncertainties and High-Order Constraint. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 2156–2160.

- Mofid, O.; Alattas, K.; Mobayen, S.; Vu, M. Adaptive finite-time command-filtered backstepping sliding mode control for stabilization of a disturbed rotary-inverted-pendulum with experimental validation. J. Vib. Control 2022, 29, 107754632110640.

- Fang, Q.; Zhou, Y.; Ma, S.; Zhang, C.; Wang, Y.; Huangfu, H. Electromechanical Actuator Servo Control Technology Based on Active Disturbance Rejection Control. Electronics 2023, 12, 1934.

- Xian, Y.; Huang, K.; Zhu, Z.; Zhen, S.; Chen, Y.-H. Adaptive Robust Control for Fuzzy Mechanical Systems in Confined Spaces: Nash Game Optimization Design. IEEE Trans. Fuzzy Syst. 2024, 32, 2863–2875.

- Liu, B.; Wang, Y.; Mofid, O.; Mobayen, S.; Khooban, M. Barrier Function-Based Backstepping Fractional-Order Sliding Mode Control for Quad-Rotor Unmanned Aerial Vehicle Under External Disturbances. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 716–728.

- He, W.; Meng, T.; He, X.; Sun, C. Iterative Learning Control for a Flapping Wing Micro Aerial Vehicle Under Distributed Disturbances. IEEE Trans. Cybern. 2019, 49, 1524–1535.

- Wang, R.; Zhuang, Z.; Tao, H.; Paszke, W.; Stojanovic, V. Q-learning based fault estimation and fault tolerant iterative learning control for MIMO systems. ISA Trans. 2023, 142, 123–135.

- Liu, Z.; Peng, K.; Han, L.; Guan, S. Modeling and Control of Robotic Manipulators Based on Artificial Neural Networks: A Review. Iran. J. Sci. Technol. Trans. Mech. Eng. 2023, 47, 1307–1347.

- Ma, D.; Chen, X.; Ma, W.; Zheng, H.; Qu, F. Neural Network Model-Based Reinforcement Learning Control for AUV 3-D Path Following. IEEE Trans. Intell. Veh. 2024, 9, 893–904.

- Lu, J.; Wei, Q.; Zhou, T.; Wang, Z.; Wang, F.-Y. Event-Triggered Near-Optimal Control for Unknown Discrete-Time Nonlinear Systems Using Parallel Control. IEEE Trans. Cybern. 2023, 53, 1890–1904.

- Jin, X.; Chen, K.; Zhao, Y.; Ji, J.; Jing, P. Simulation of hydraulic transplanting robot control system based on fuzzy PID controller. Measurement 2020, 164, 108023.

- Mofid, O.; Mobayen, S. Robust fractional-order sliding mode tracker for quad-rotor UAVs: Event-triggered adaptive backstepping approach under disturbance and uncertainty. Aerosp. Sci. Technol. 2024, 146, 108916.

- Wang, S.; Na, J.; Chen, Q. Adaptive Predefined Performance Sliding Mode Control of Motor Driving Systems with Disturbances. IEEE Trans. Energy Convers. 2021, 36, 1931–1939.

- Kumar, A.; Tomar, H.; Mehla, V.; Komaragiri, R.; Kumar, M. An adaptive deep reinforcement learning approach for MIMO PID control of mobile robots. ISA Trans. 2022, 102, 280–294.

- Xian, B.; Zhang, X.; Zhang, H.; Gu, X. Robust Adaptive Control for a Small Unmanned Helicopter Using Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 7589–7597.

- Liu, R.; Nageotte, F.; Zanne, P.; de Mathelin, M.; Dresp-Langley, B. Deep Reinforcement Learning for the Control of Robotic Manipulation: A Focussed Mini-Review. Robotics 2021, 10, 22.

- Xu, C.; Hu, J. Adaptive robust control of a class of motor servo system with dead zone based on neural network and extended state observer. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2022, 236, 1724–1737.

- Ma, W.; Li, X.; Duan, L.; Dong, H. Motion control of chaotic permanent-magnet synchronous motor servo system with neural network–based disturbance observer. Adv. Mech. Eng. 2019, 11, 1–9.

- Wen, G.; Li, B.; Niu, B. Optimized Backstepping Control Using Reinforcement Learning of Observer-Critic-Actor Architecture Based on Fuzzy System for a Class of Nonlinear Strict-Feedback Systems. IEEE Trans. Fuzzy Syst. 2022, 30, 4322–4335.

- Tong, S.; Sun, K.; Sui, S. Observer-Based Adaptive Fuzzy Decentralized Optimal Control Design for Strict-Feedback Nonlinear Large-Scale Systems. IEEE Trans. Fuzzy Syst. 2018, 26, 569–584.

- Fan, Q.-Y.; Yang, G.-H. Adaptive Actor–Critic Design-Based Integral Sliding-Mode Control for Partially Unknown Nonlinear Systems with Input Disturbances. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 165–177.

- Liang, X.; Yao, Z.; Ge, Y.; Yao, J. Reinforcement learning based adaptive control for uncertain mechanical systems with asymptotic tracking. Def. Technol. 2024, 34, 19–28.

- Dong, W.; Farrell, J.A.; Polycarpou, M.M.; Djapic, V.; Sharma, M. Command Filtered Adaptive Backstepping. IEEE Trans. Control Syst. Technol. 2012, 20, 566–580.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).