Research on Viewpoints Planning for Industrial Robot-Based Three-Dimensional Sculpture Reconstruction

Abstract

1. Introduction

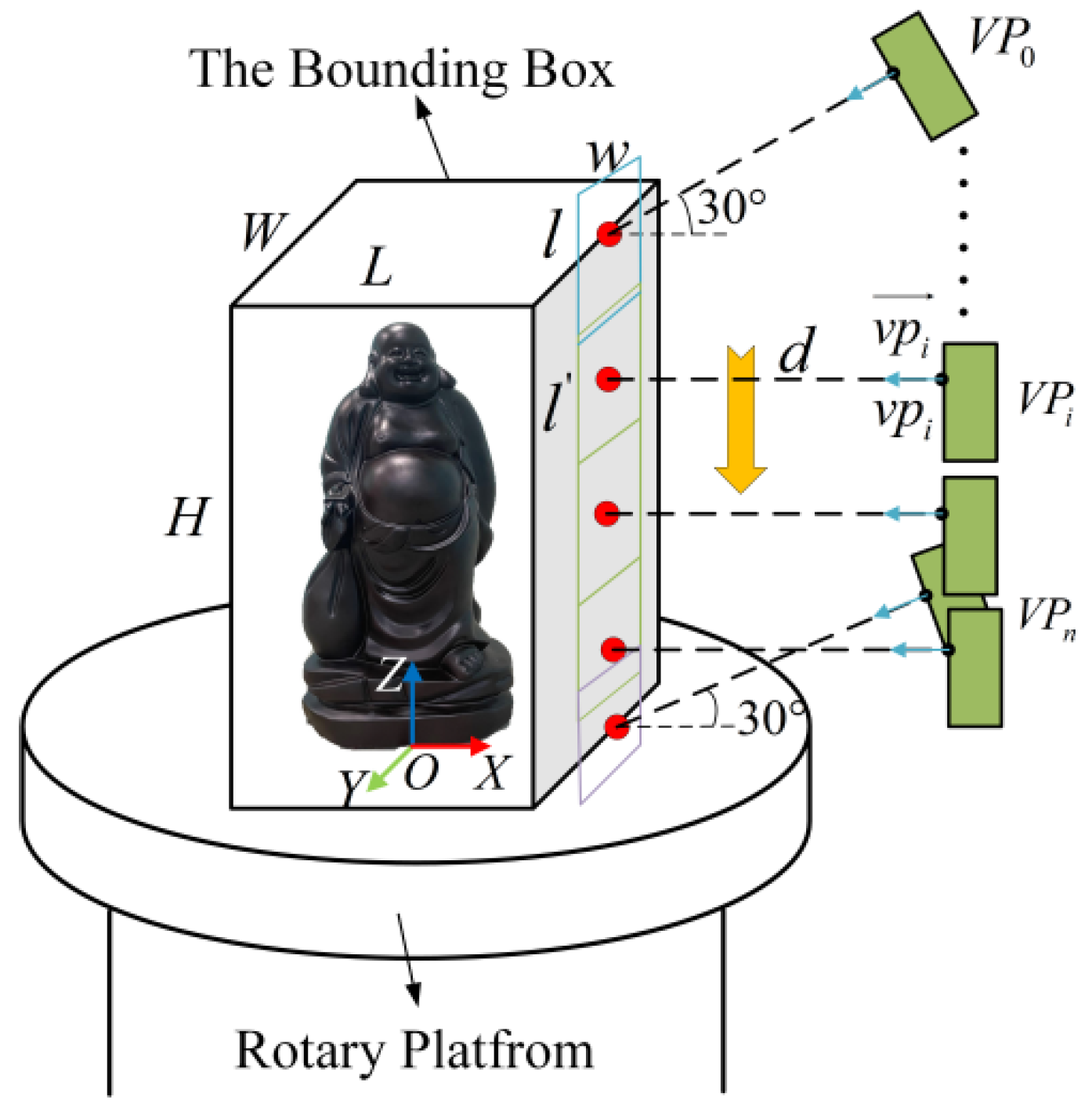

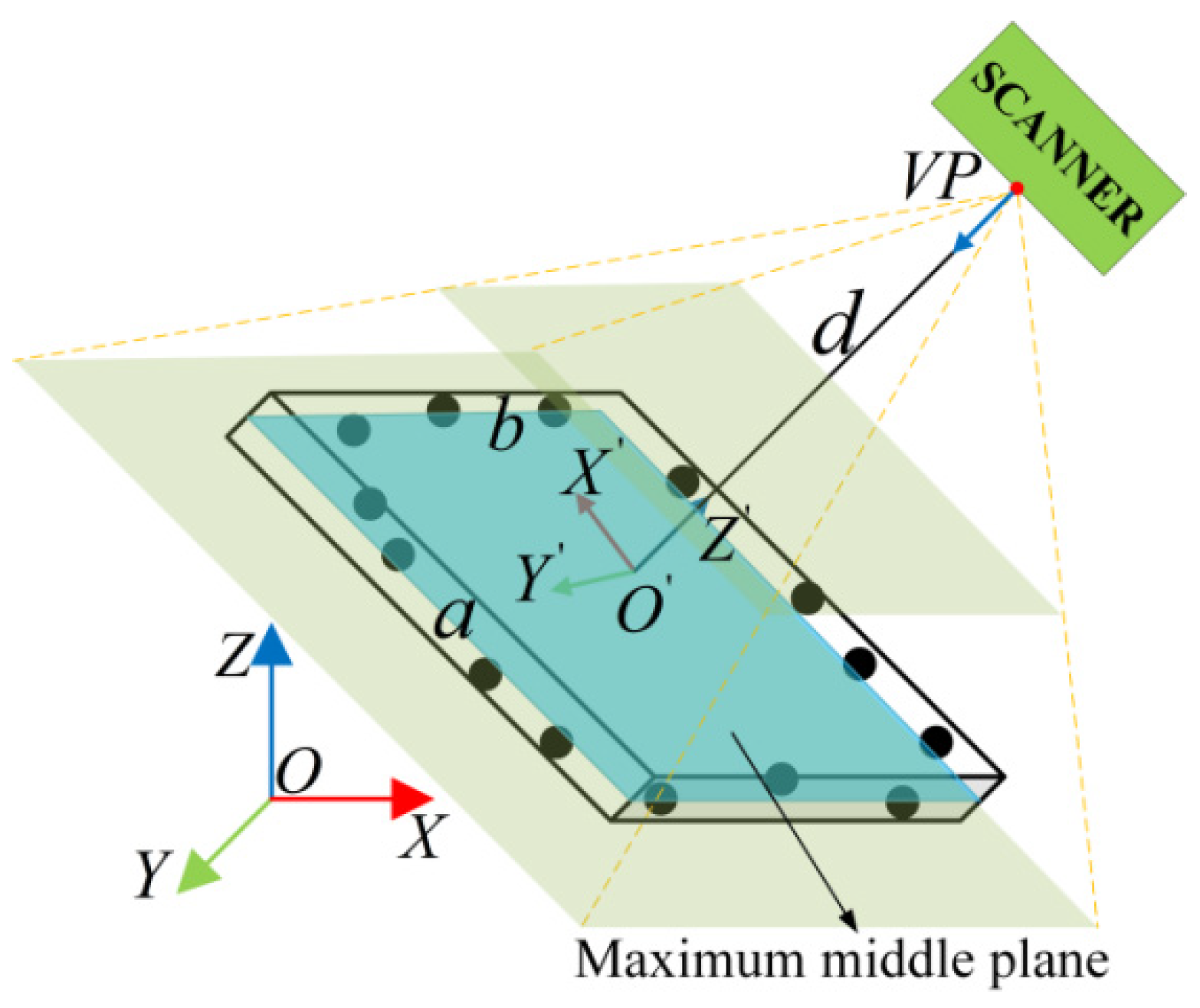

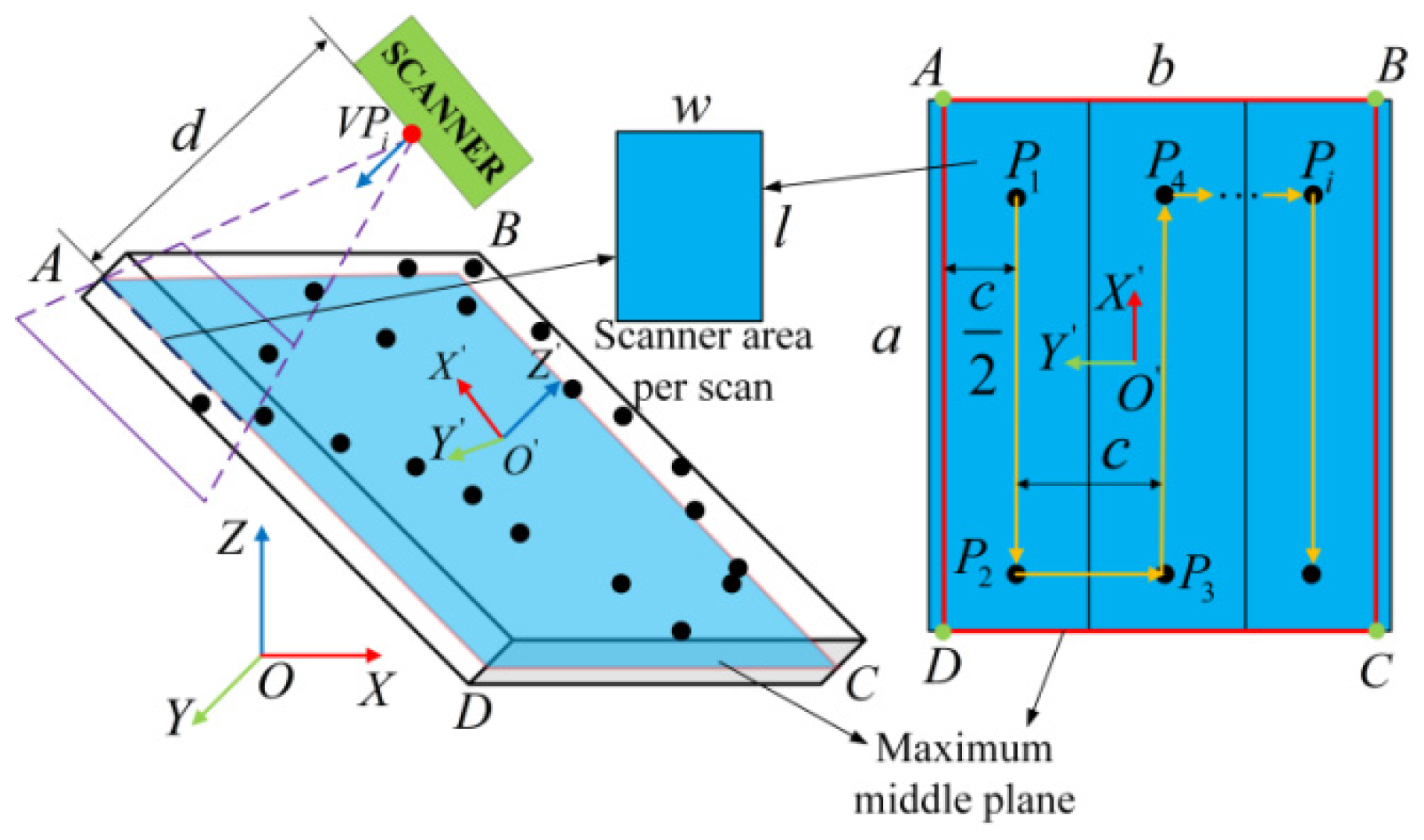

2. Global Viewpoints Planning for Global Model

3. Local Viewpoints Planning for Local Models

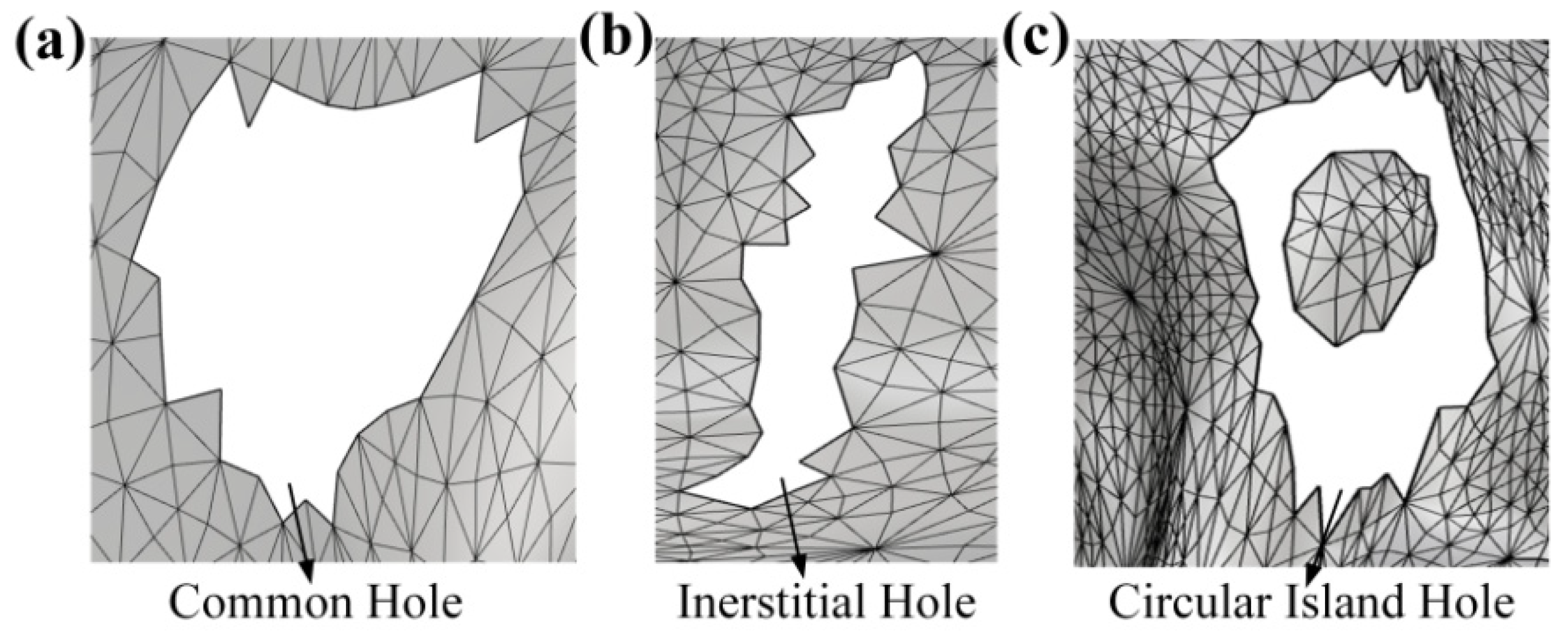

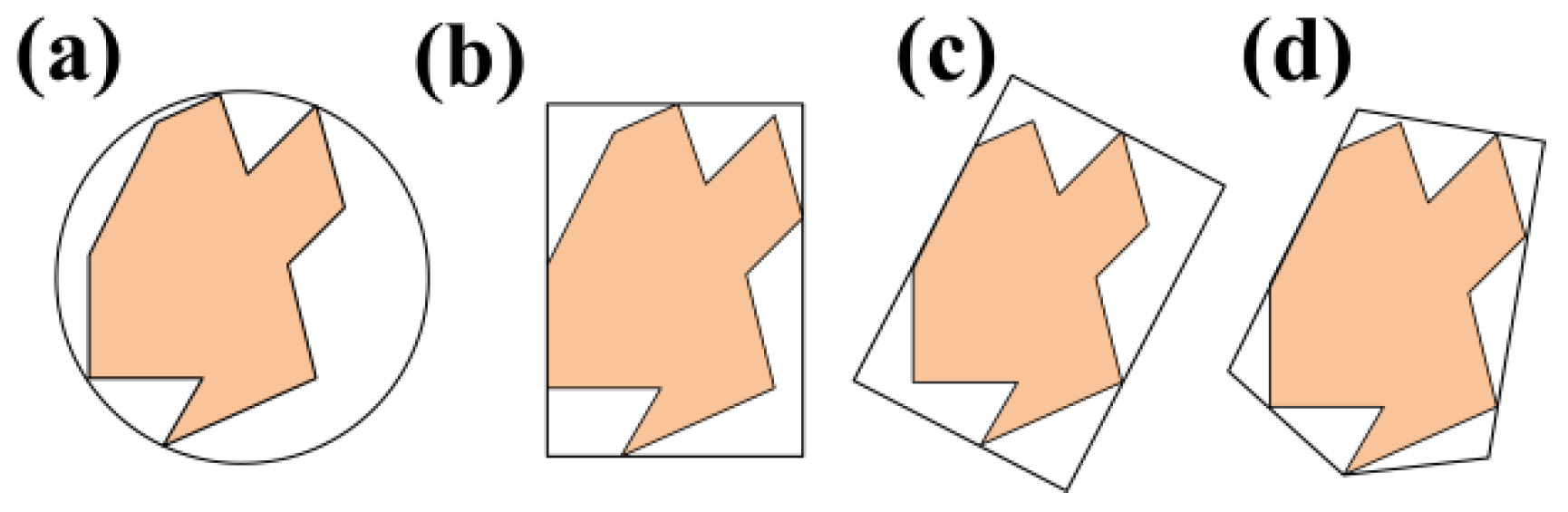

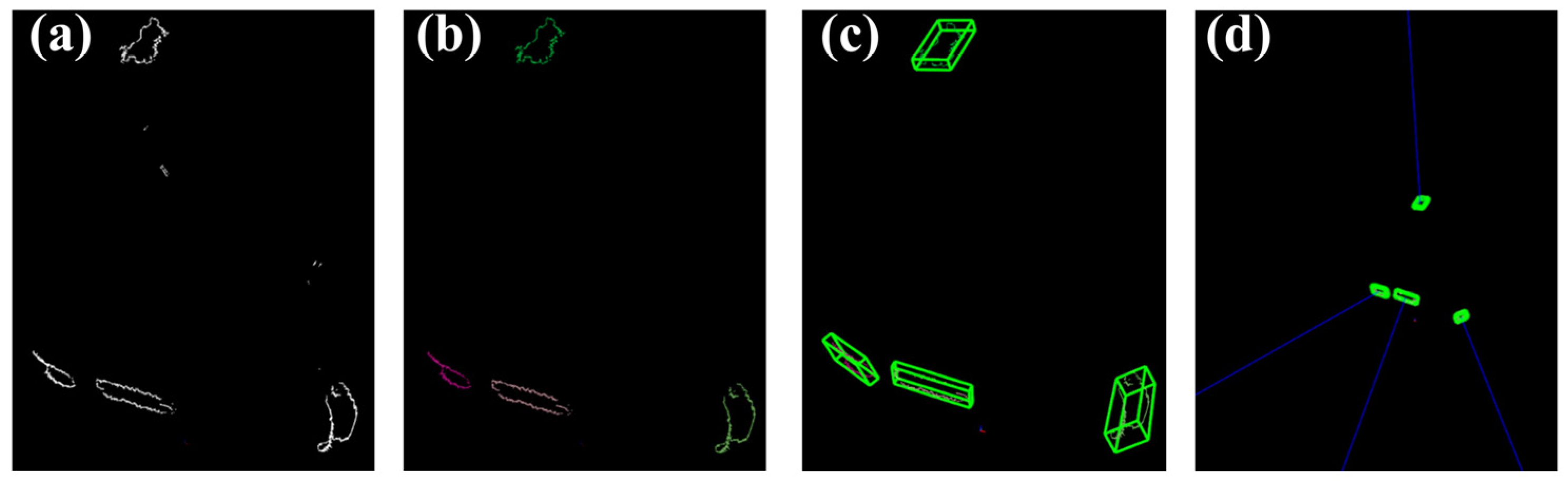

3.1. Left Holes Recognition After Global Scanning

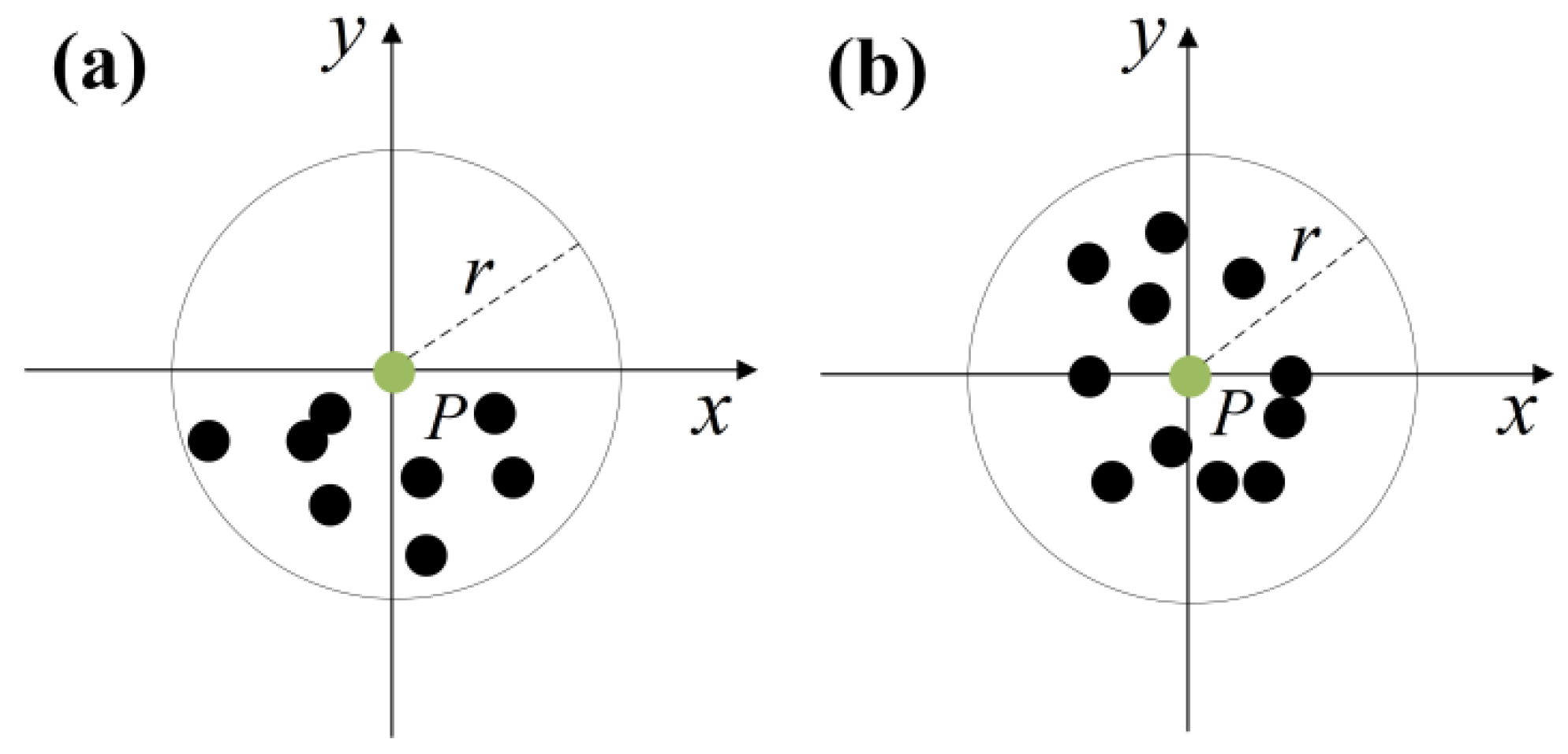

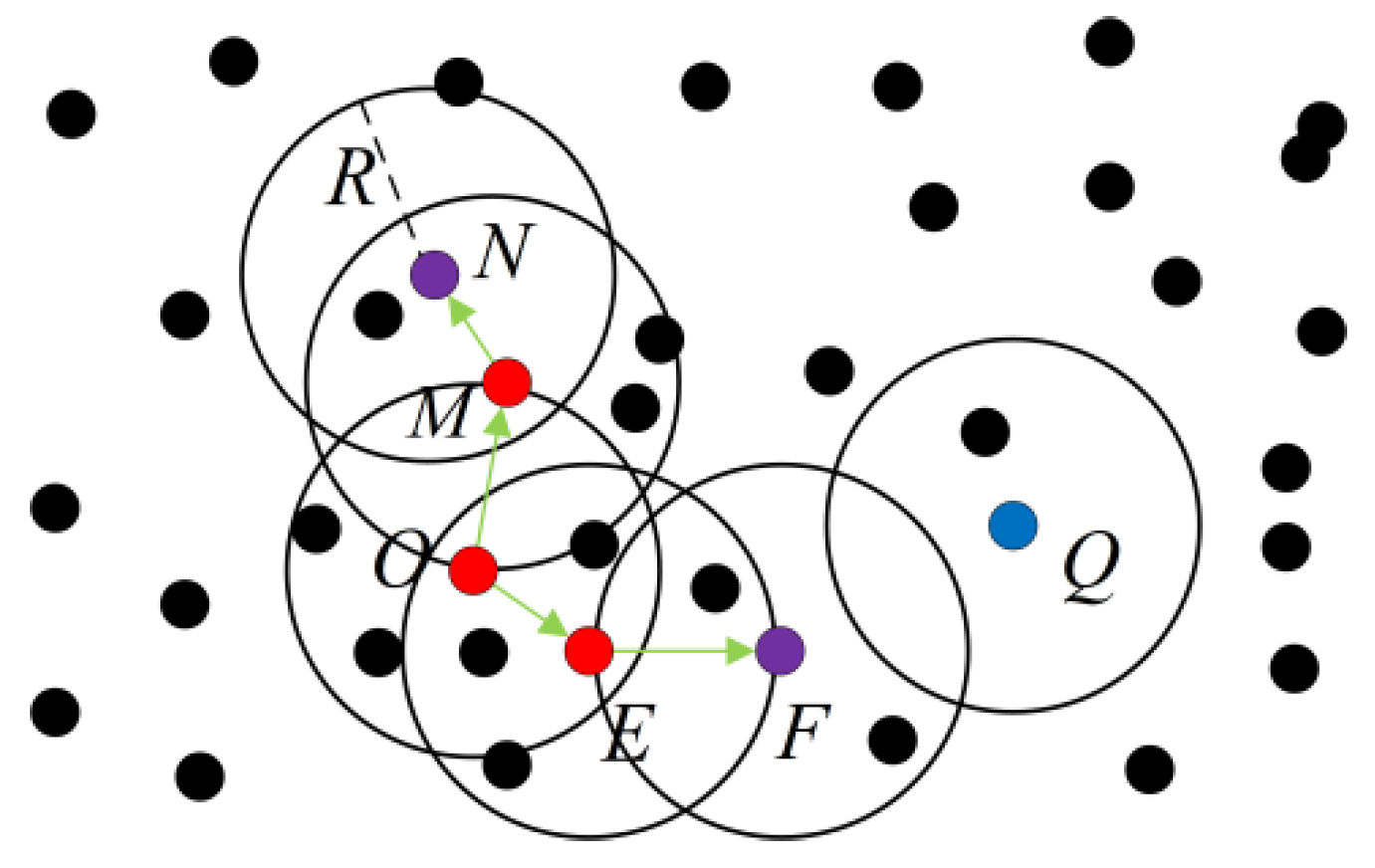

3.2. Holes Clustering

- (1) ε-neighborhood: for $x_j \in D$, the ε-neighborhood of $x_j$ is the subset of the sample set $D$ consisting of the points no farther than ε from $x_j$, i.e., $N_\varepsilon(x_j) = \{x_i \in D \mid \mathrm{dist}(x_i, x_j) \le \varepsilon\}$. The number of samples in this subset is denoted as $|N_\varepsilon(x_j)|$;

- (2) Core point: for $x_j \in D$, if the ε-neighborhood of $x_j$ contains at least MinPts samples, i.e., $|N_\varepsilon(x_j)| \ge \mathrm{MinPts}$, then $x_j$ is a core point;

- (3) Boundary point: a point that is not a core point but falls within the ε-neighborhood of a core point;

- (4) Noise point: a point that is neither a core point nor a boundary point;

- (5) Directly density-reachable: if $x_j$ lies in the ε-neighborhood of $x_i$ and $x_i$ is a core point, then $x_j$ is said to be directly density-reachable from $x_i$;

- (6) Density-reachable: $x_j$ is said to be density-reachable from $x_i$ if there exists a sequence of samples $p_1, p_2, \dots, p_n$ with $p_1 = x_i$, $p_n = x_j$, and $p_{t+1}$ directly density-reachable from $p_t$ for $t = 1, \dots, n-1$;

- (7) Density-connected: $x_j$ and $x_i$ are said to be density-connected if there exists $x_k$ such that both $x_j$ and $x_i$ are density-reachable from $x_k$.
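The definitions above are those of DBSCAN (density-based spatial clustering of applications with noise). As a rough illustration of definitions (1) to (4), the following Python sketch labels each sample as a core, boundary, or noise point. It assumes Euclidean distance as the metric and points given as coordinate tuples; the function names `eps_neighborhood` and `classify` are hypothetical and not taken from the paper.

```python
import math
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, ...]


def eps_neighborhood(D: Sequence[Point], j: int, eps: float) -> Set[int]:
    """Definition (1): indices of samples no farther than eps from D[j] (D[j] itself included)."""
    return {i for i, x in enumerate(D) if math.dist(x, D[j]) <= eps}


def classify(D: Sequence[Point], eps: float, min_pts: int) -> List[str]:
    """Label each sample 'core', 'boundary' or 'noise' following definitions (2) to (4)."""
    neighborhoods = [eps_neighborhood(D, j, eps) for j in range(len(D))]
    # Definition (2): a core point has at least min_pts samples in its eps-neighborhood.
    core = {j for j, n in enumerate(neighborhoods) if len(n) >= min_pts}
    labels = []
    for j in range(len(D)):
        if j in core:
            labels.append("core")
        elif any(j in neighborhoods[c] for c in core):  # definition (3)
            labels.append("boundary")
        else:                                           # definition (4)
            labels.append("noise")
    return labels


pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (10.0, 0.0)]
print(classify(pts, eps=1.0, min_pts=3))  # ['boundary', 'core', 'core', 'boundary', 'noise']
```

Because the distance is symmetric, checking whether a sample lies in a core point's neighborhood is equivalent to checking its distance to that core point.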

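- (1) Initialize the set of core points $\Omega = \emptyset$, the number of clusters $k = 0$, the set of unvisited samples $\Gamma = D$, and the cluster division $C = \emptyset$;

- (2) For each sample $x_j \in D$ ($j = 1, 2, \dots, m$, where $m = |D|$), find all the core points as follows:

  - (a) Find the ε-neighborhood subsample set $N_\varepsilon(x_j)$ of sample $x_j$ using the distance metric;

  - (b) If the number of samples in the subsample set satisfies $|N_\varepsilon(x_j)| \ge \mathrm{MinPts}$, add sample $x_j$ to the set of core points: $\Omega = \Omega \cup \{x_j\}$;

- (3) If the set of core points $\Omega = \emptyset$, the algorithm terminates; otherwise, proceed to step (4);

- (4) Select a random core point $o \in \Omega$, initialize the current cluster core point queue $\Omega_{\mathrm{cur}} = \{o\}$, set $k = k + 1$, initialize the current cluster sample set $C_k = \{o\}$, and update the unvisited sample set $\Gamma = \Gamma \setminus \{o\}$;

- (5) If the current cluster core point queue $\Omega_{\mathrm{cur}} = \emptyset$, the current cluster $C_k$ is complete: update the cluster division $C = \{C_1, C_2, \dots, C_k\}$, update the core point set $\Omega = \Omega \setminus C_k$, and return to step (3). Otherwise, update the core point set $\Omega = \Omega \setminus C_k$;

- (6) Remove a core point $o'$ from the current cluster core point queue $\Omega_{\mathrm{cur}}$, find its ε-neighborhood subsample set $N_\varepsilon(o')$ using the distance threshold ε, let $\Delta = N_\varepsilon(o') \cap \Gamma$, update the current cluster sample set $C_k = C_k \cup \Delta$, update the unvisited sample set $\Gamma = \Gamma \setminus \Delta$, update $\Omega_{\mathrm{cur}} = (\Omega_{\mathrm{cur}} \cup (\Delta \cap \Omega)) \setminus \{o'\}$, and return to step (5).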
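Steps (1) to (6) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes Euclidean distance and points given as coordinate tuples (for example, hole positions), and the names `dbscan`, `omega`, `gamma` and `queue` are hypothetical, chosen to mirror $\Omega$, $\Gamma$ and $\Omega_{\mathrm{cur}}$. The random choice in step (4) is seeded so that runs are reproducible. In practice, a library implementation such as scikit-learn's `sklearn.cluster.DBSCAN` (parameters `eps` and `min_samples`) could be used instead.

```python
import math
import random
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, ...]


def dbscan(D: Sequence[Point], eps: float, min_pts: int, seed: int = 0) -> List[Set[int]]:
    """Cluster the indices of D following steps (1) to (6); unclustered indices are noise."""
    rng = random.Random(seed)
    m = len(D)
    # Steps (1)-(2): eps-neighborhood of every sample (Euclidean metric assumed) and core set Omega.
    N = [{i for i in range(m) if math.dist(D[i], D[j]) <= eps} for j in range(m)]
    omega = {j for j in range(m) if len(N[j]) >= min_pts}
    gamma = set(range(m))            # Gamma: unvisited samples
    clusters: List[Set[int]] = []    # C: cluster division
    while omega:                     # step (3): stop when no core points remain
        o = rng.choice(sorted(omega))  # step (4): pick a random core point
        queue = {o}                  # Omega_cur
        cluster = {o}                # C_k
        gamma.discard(o)
        while queue:                 # step (5): expand until the core queue is empty
            omega -= cluster
            o_prime = queue.pop()    # step (6)
            delta = N[o_prime] & gamma
            cluster |= delta
            gamma -= delta
            queue |= delta & omega   # newly reached core points join the queue
        clusters.append(cluster)     # step (5): C_k is complete
        omega -= cluster
    return clusters


pts = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11), (50, 50)]
clusters = dbscan(pts, eps=1.5, min_pts=3)
noise = set(range(len(pts))) - set().union(*clusters)
print(sorted(sorted(c) for c in clusters), sorted(noise))  # [[0, 1, 2, 3], [4, 5, 6, 7]] [8]
```

A boundary point that is reachable from two clusters is assigned to whichever cluster reaches it first, which is the usual DBSCAN behavior.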

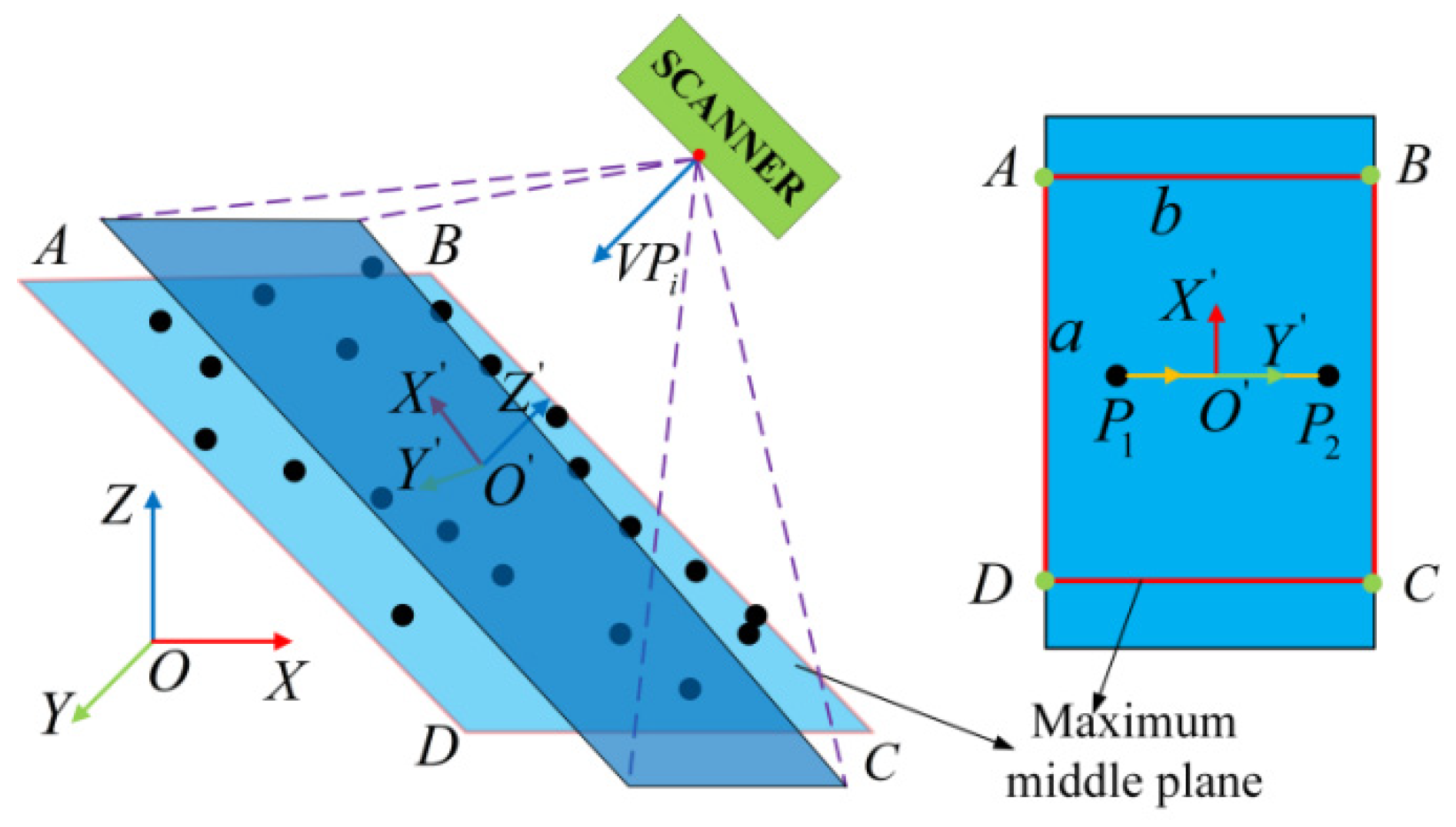

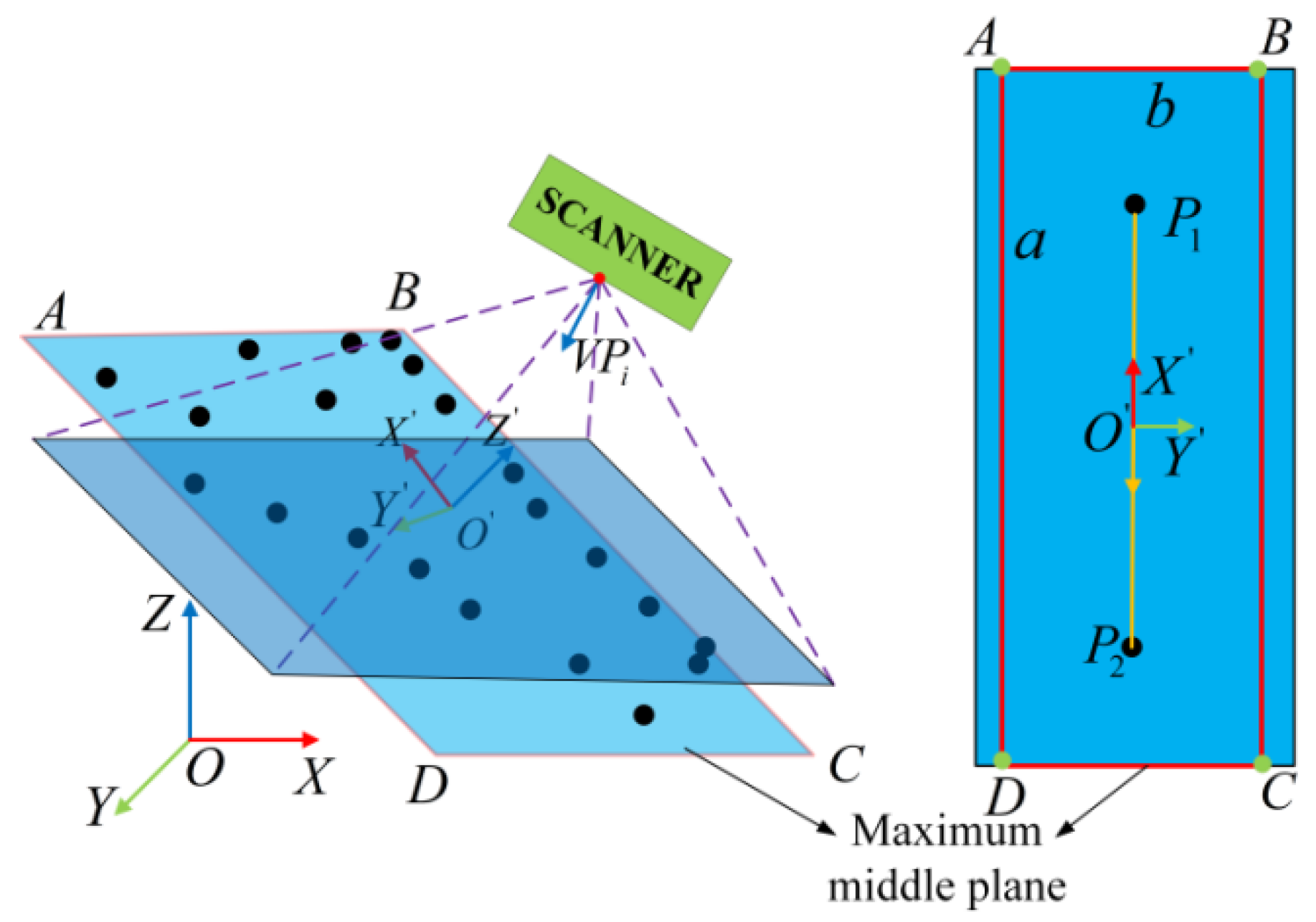

3.3. Local Viewpoints Planning

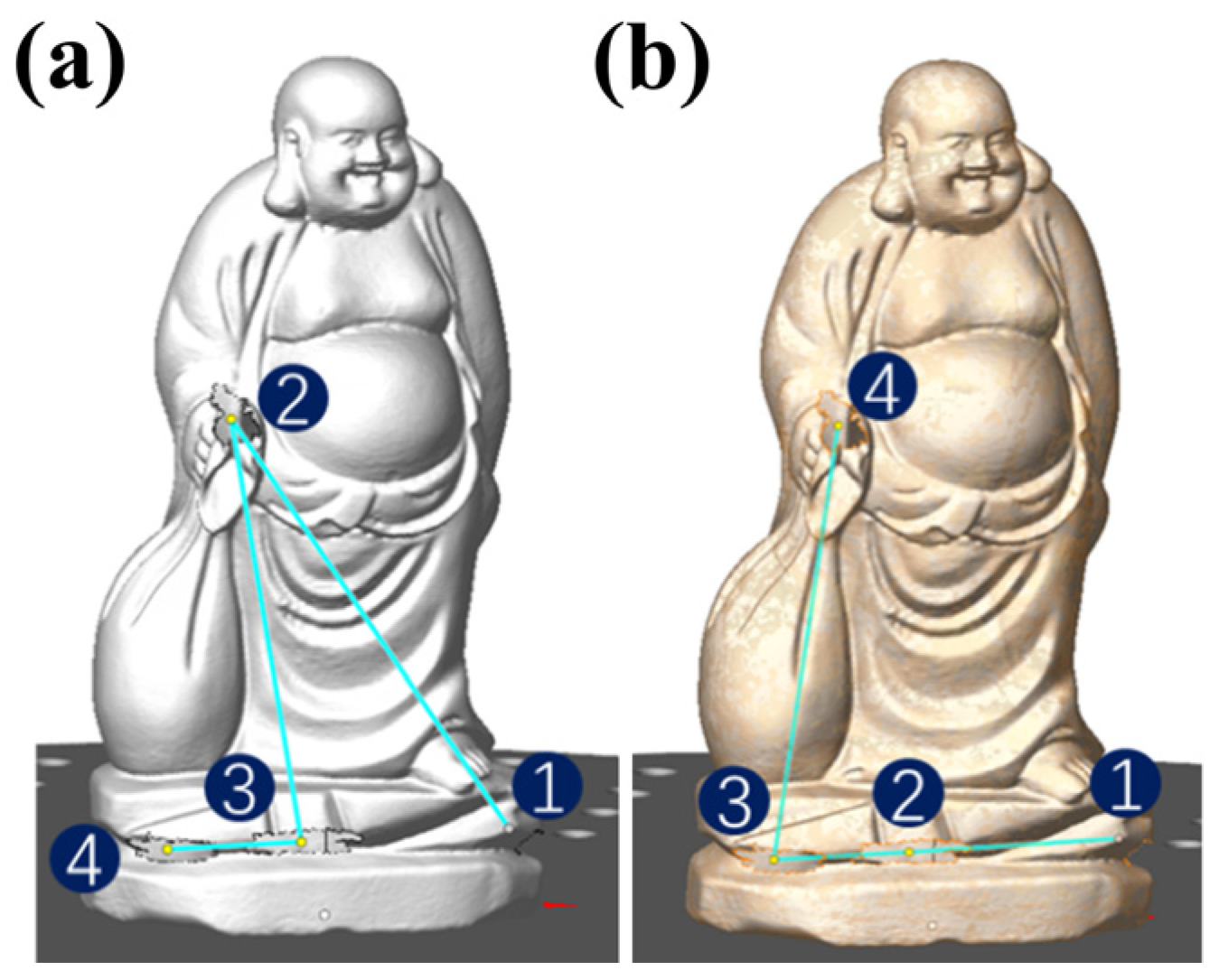

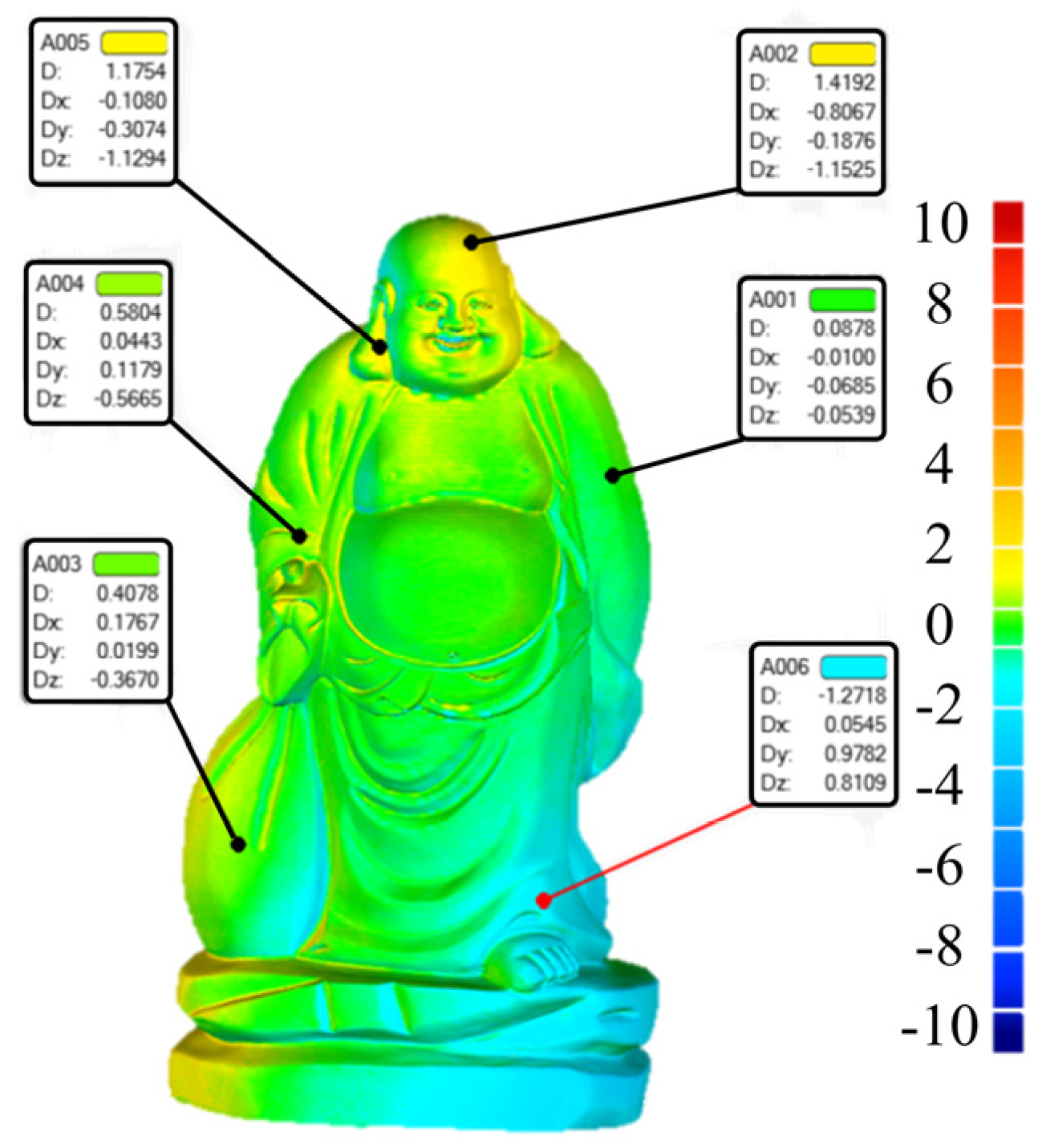

4. Experiment and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Key Performance Indicator | Value |
|---|---|
| Working distance (Zs) | 400 ≤ Zs ≤ 1000 mm |
| Close-range scanning area (l1 × w1) | 214 × 148 mm |
| Long-range scanning area (l2 × w2) | 536 × 371 mm |
| Scanning-angle range (l × w) | 30° × 21° |
| Highest 3D resolution | 0.1 mm |
| Highest 3D point accuracy | 0.05 mm |
| Maximum 3D distance accuracy | 0.03% 100 cm |
| Maximum 3D reconstruction rate | 16 fps |
| Maximum data acquisition speed | 2 × 10⁶ points/s |
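The listed scanning areas are consistent with a simple viewing-frustum model in which the scanning-angle range 30° × 21° is the full angular field of view, so that each side of the footprint at working distance Zs is 2·Zs·tan(θ/2). This relation is an assumption, not something stated in the specifications; the sketch below checks it against the two listed working distances and also evaluates it at the 700 mm scanning distance given in the process parameters. The function name `footprint_mm` is hypothetical.

```python
import math
from typing import Tuple


def footprint_mm(z_mm: float, angle_l_deg: float = 30.0, angle_w_deg: float = 21.0) -> Tuple[float, float]:
    """Footprint (l, w) at distance z_mm, assuming the angles are the full field of view."""
    l = 2.0 * z_mm * math.tan(math.radians(angle_l_deg) / 2.0)
    w = 2.0 * z_mm * math.tan(math.radians(angle_w_deg) / 2.0)
    return l, w


for z in (400, 700, 1000):  # 700 mm is the scanning distance in the process parameters
    l, w = footprint_mm(z)
    print(f"Zs = {z} mm -> {l:.0f} x {w:.0f} mm")
```

Under this assumption the footprint at Zs = 400 mm and 1000 mm rounds to the listed 214 × 148 mm and 536 × 371 mm, and at 700 mm it is roughly 375 × 259 mm.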

| Scanning-Process Parameters | Value |
|---|---|
| Scanning distance | 700 mm |
| Scanning speed | 0.1 m/s |
| Capture frame rate | 10 fps |
| Turntable speed | 10 r/min |
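The process parameters imply a fixed sampling interval between captured frames. The back-of-the-envelope sketch below assumes that frames are captured continuously at the nominal frame rate while the scanner moves at constant speed, or while the turntable rotates at constant speed; the function names are hypothetical.

```python
def frame_spacing_mm(speed_mm_per_s: float, fps: float) -> float:
    """Distance the scanner travels between two consecutive frames."""
    return speed_mm_per_s / fps


def turntable_step_deg(rpm: float, fps: float) -> float:
    """Turntable rotation between two consecutive frames, in degrees."""
    return rpm * 360.0 / 60.0 / fps


def seconds_per_revolution(rpm: float) -> float:
    """Time for one full turntable revolution."""
    return 60.0 / rpm


speed = 100.0  # 0.1 m/s expressed in mm/s
print(frame_spacing_mm(speed, 10), turntable_step_deg(10, 10), seconds_per_revolution(10))
```

With 0.1 m/s and 10 fps the scanner advances 10 mm between frames, and at 10 r/min the turntable turns 6° per frame, i.e., 60 frames and 6 s per revolution.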

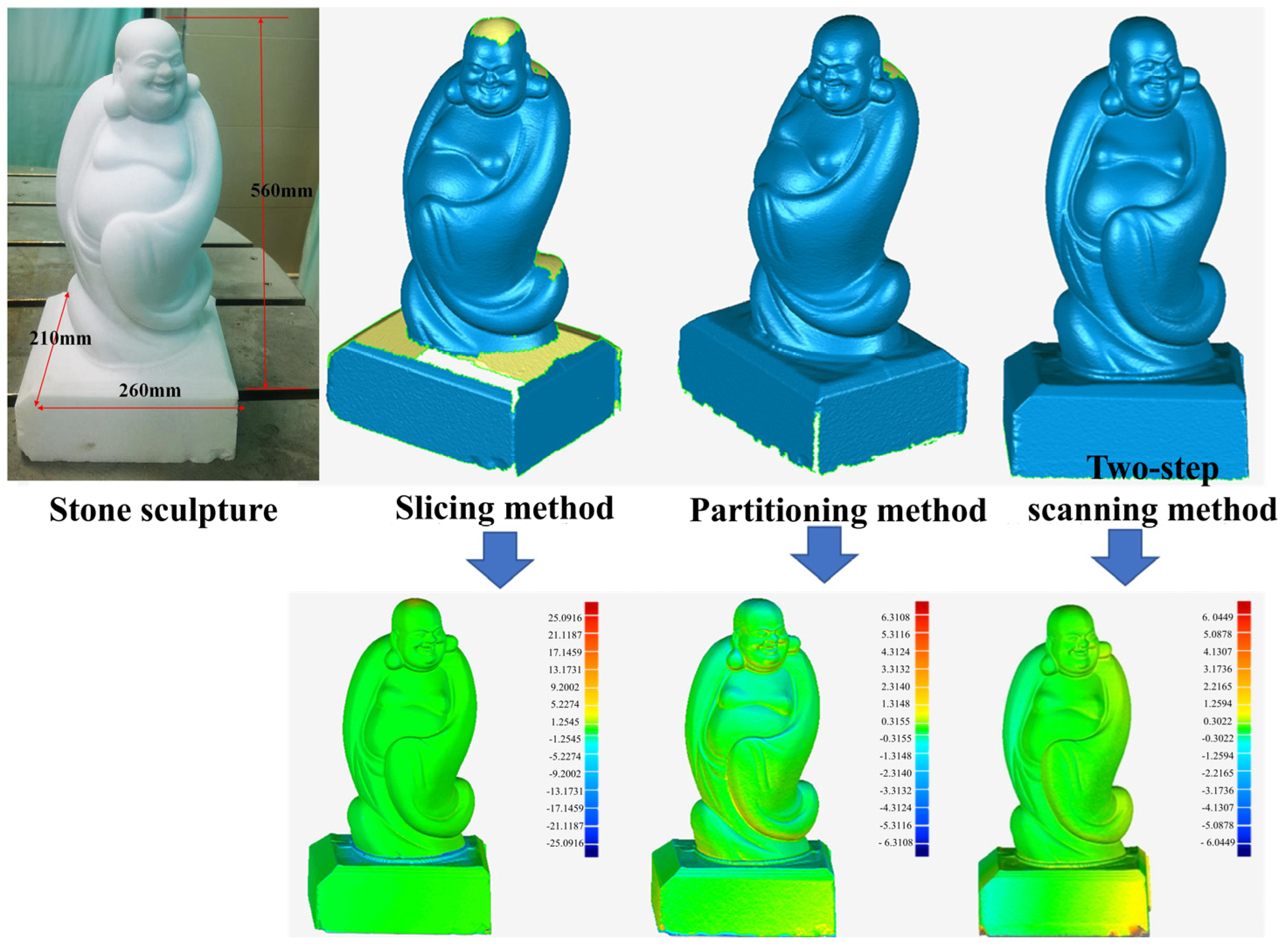

| Method | Scanning Integrity | Scanning Accuracy | Standard Deviation | Scanning Time |
|---|---|---|---|---|
| CAD-slicing method | 89.05% | 1.86 mm | 2.71 mm | 145 s |
| Surface-partitioning method | 97.08% | 0.97 mm | 1.12 mm | 120 s |
| Two-step scanning method | 100% | 0.26 mm | 0.31 mm | 152 s |
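To make the trade-off in this comparison explicit, the sketch below computes the signed relative change of the two-step method with respect to each baseline. It uses only the values in the table and assumes that smaller accuracy and standard-deviation values (in mm) are better; the names `results` and `relative_change` are hypothetical.

```python
results = {
    # method: (integrity %, accuracy mm, standard deviation mm, time s), copied from the table
    "CAD-slicing method": (89.05, 1.86, 2.71, 145),
    "Surface-partitioning method": (97.08, 0.97, 1.12, 120),
    "Two-step scanning method": (100.0, 0.26, 0.31, 152),
}


def relative_change(new: float, old: float) -> float:
    """Signed relative change of new with respect to old, in percent."""
    return (new - old) / old * 100.0


_, acc2, std2, t2 = results["Two-step scanning method"]
for name in ("CAD-slicing method", "Surface-partitioning method"):
    _, acc, std, t = results[name]
    print(f"vs {name}: accuracy {relative_change(acc2, acc):+.1f}%, "
          f"std dev {relative_change(std2, std):+.1f}%, time {relative_change(t2, t):+.1f}%")
```

By this measure, the two-step method lowers the accuracy value by about 86.0% and 73.2% and the standard deviation by about 88.6% and 72.3% relative to CAD slicing and surface partitioning, respectively, while taking about 4.8% and 26.7% longer.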

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Cui, C.; Qin, G.; Huang, H.; Yin, F. Research on Viewpoints Planning for Industrial Robot-Based Three-Dimensional Sculpture Reconstruction. Actuators 2025, 14, 139. https://doi.org/10.3390/act14030139


Chicago/Turabian Style: Zhang, Zhen, Changcai Cui, Guanglin Qin, Hui Huang, and Fangchen Yin. 2025. "Research on Viewpoints Planning for Industrial Robot-Based Three-Dimensional Sculpture Reconstruction." Actuators 14, no. 3: 139. https://doi.org/10.3390/act14030139
