Development of Tendon-Driven Continuum Robot with Visual Posture Sensing for Object Grasping

Abstract

1. Introduction

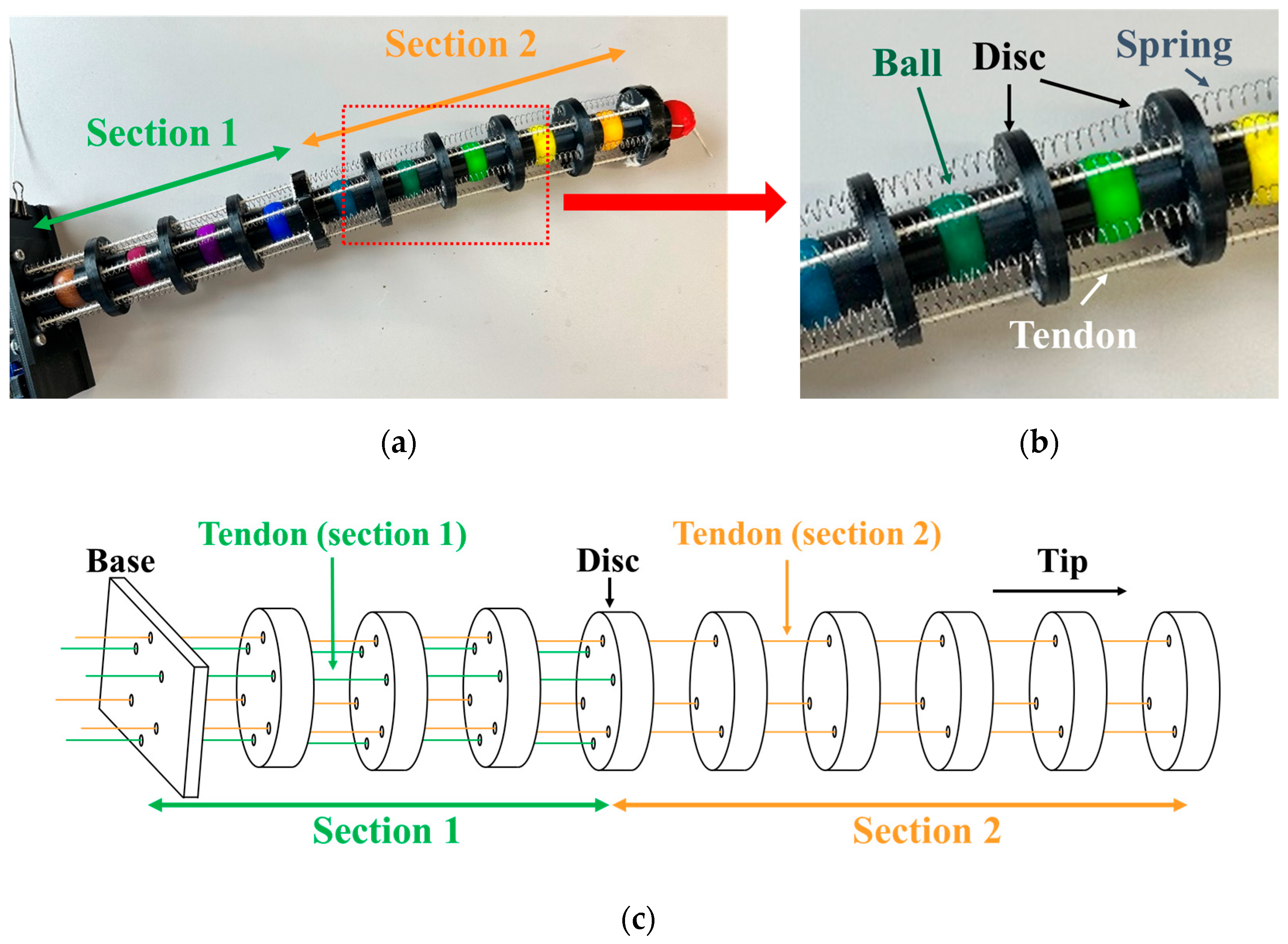

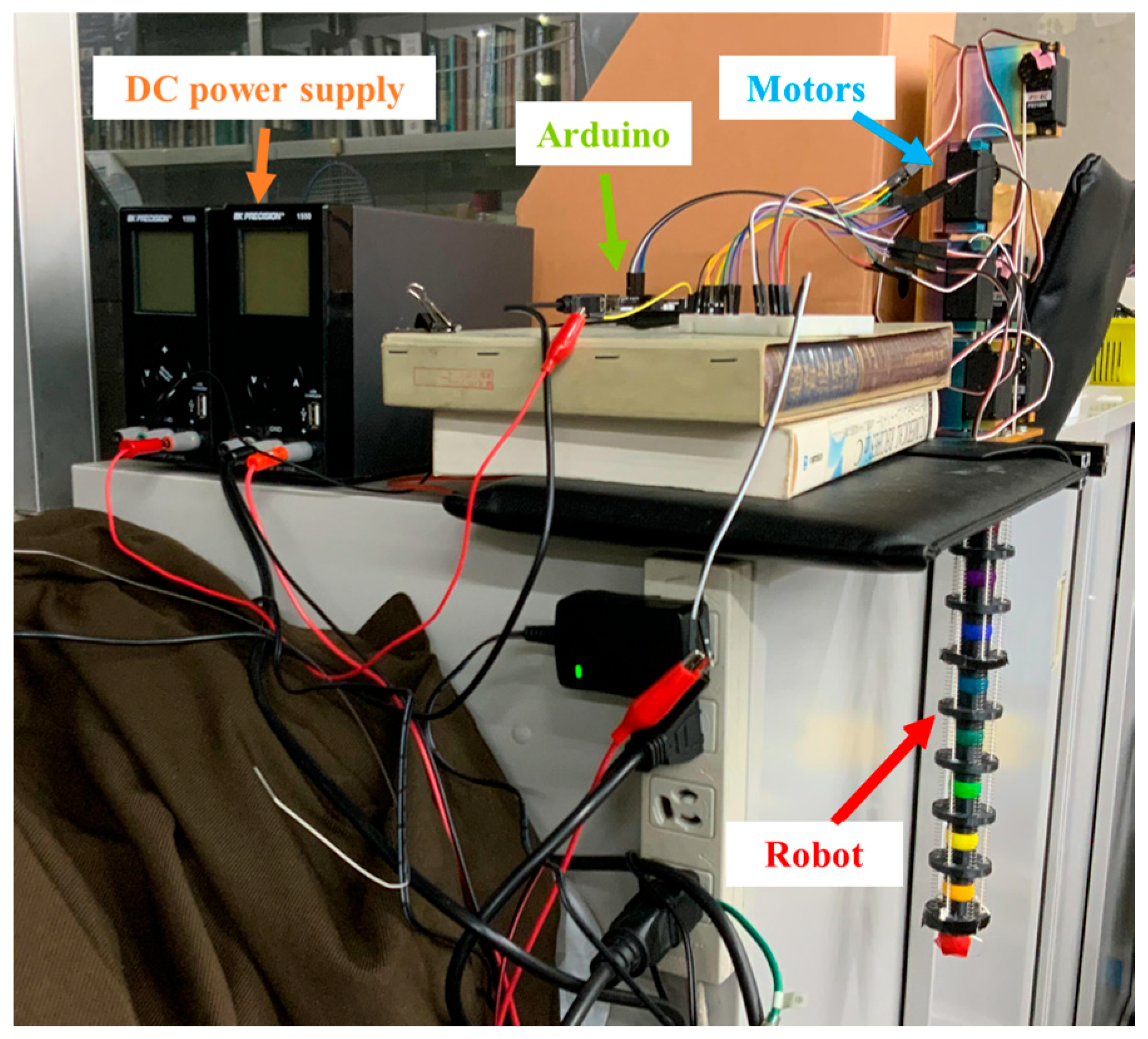

2. Design and Control of Robot

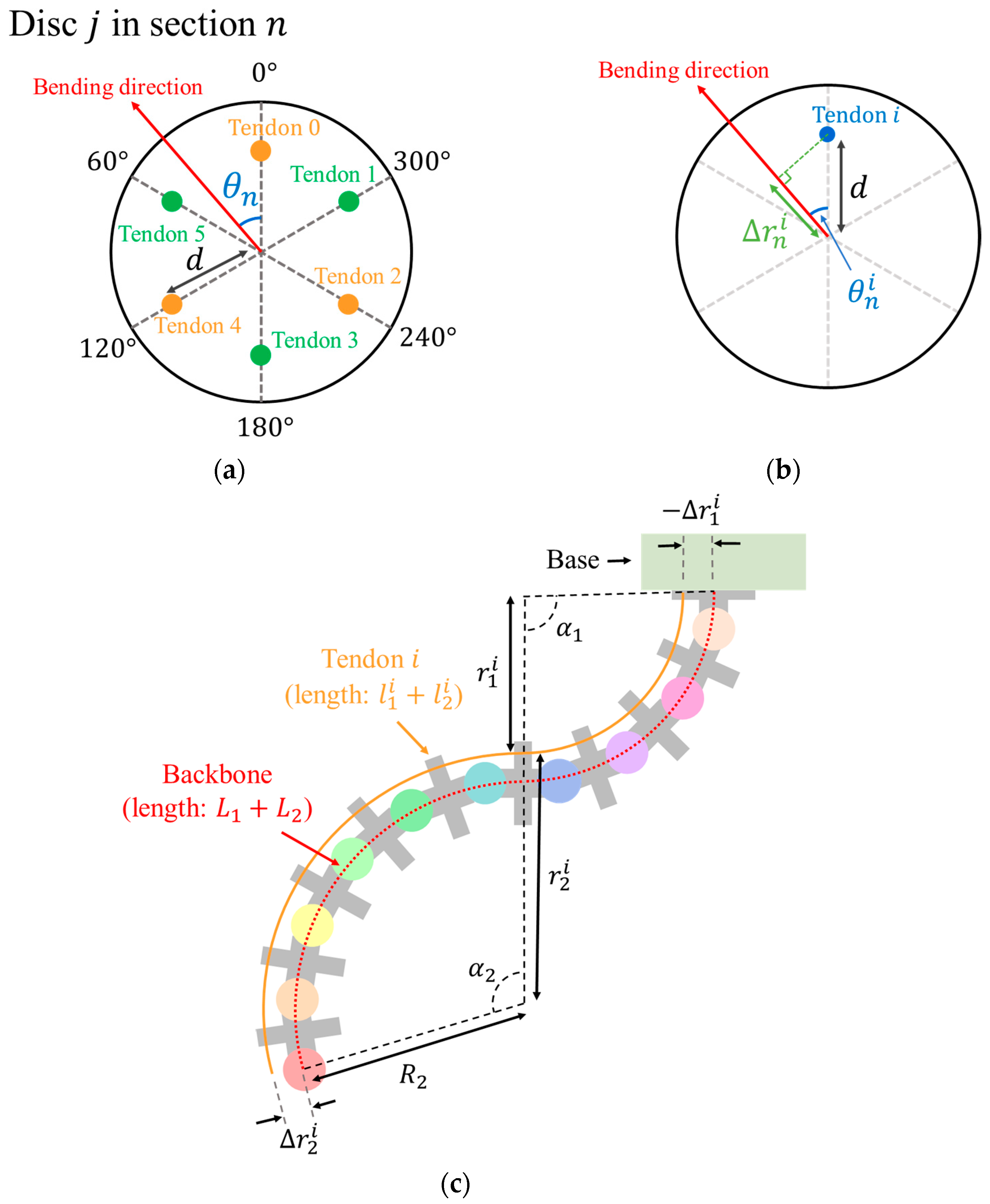

2.1. Design

2.2. Control

- Based on the bending direction, calculate the appropriate rotation speed of each motor using Equations (1) and (2).

- (Object grasping only) Rotate the motors in section 2 at the calculated rotation speed for 500 ms, and then stop them.

- Rotate the motors in section 1 at the calculated rotation speed for the bending time, and then stop them.

- Rotate the motors in section 2 at the calculated rotation speed for the bending time (reduced by 500 ms in the object grasping task), and then stop them.
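The timing of the steps above can be sketched as a small scheduling routine. This is only an illustration: the names `Step`, `plan_bending_sequence`, and `GRASP_PRELOAD_MS` are hypothetical, the motor speeds from Equations (1) and (2) are treated as already computed, and the check that the section 2 bending time is at least 500 ms in grasping is an assumption, not something the text states.

```python
from dataclasses import dataclass

# Fixed section-2 pre-bend used in the object grasping task (from the steps above).
GRASP_PRELOAD_MS = 500


@dataclass(frozen=True)
class Step:
    section: int      # 1 or 2
    duration_ms: int  # how long the section's motors run before stopping


def plan_bending_sequence(t1_ms: int, t2_ms: int, grasping: bool) -> list[Step]:
    """Return the ordered motor-run steps for one bending command.

    t1_ms and t2_ms are the bending times of sections 1 and 2.
    Motor speeds are assumed to be computed elsewhere.
    """
    if min(t1_ms, t2_ms) < 0:
        raise ValueError("bending times must be non-negative")

    steps: list[Step] = []
    remaining_t2 = t2_ms
    if grasping:
        # Assumption: the pre-bend cannot exceed the total section-2 time.
        if t2_ms < GRASP_PRELOAD_MS:
            raise ValueError("section 2 bending time is shorter than the pre-bend")
        steps.append(Step(section=2, duration_ms=GRASP_PRELOAD_MS))
        remaining_t2 = t2_ms - GRASP_PRELOAD_MS

    steps.append(Step(section=1, duration_ms=t1_ms))
    steps.append(Step(section=2, duration_ms=remaining_t2))
    return steps


if __name__ == "__main__":
    for step in plan_bending_sequence(1000, 2000, grasping=True):
        print(step)
```

In this sketch the total run time of section 2 is the same with and without grasping; only the order changes, with the first 500 ms moved ahead of section 1.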

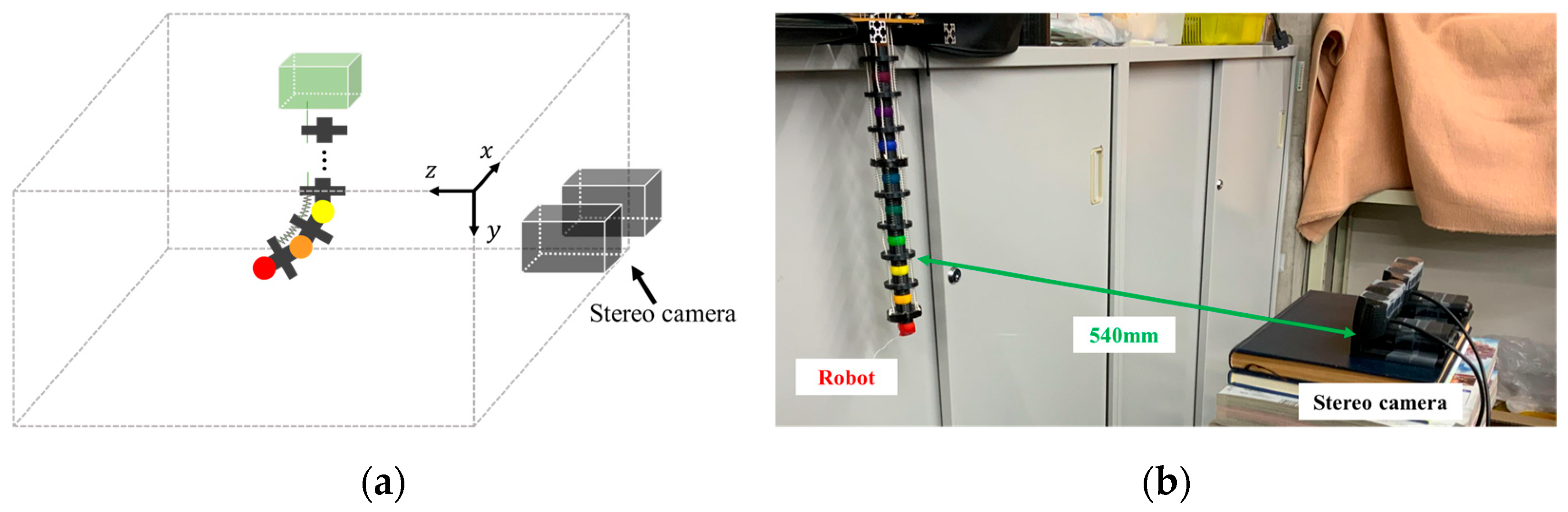

3. Construction of Visual Posture Sensing System

3.1. System Configuration

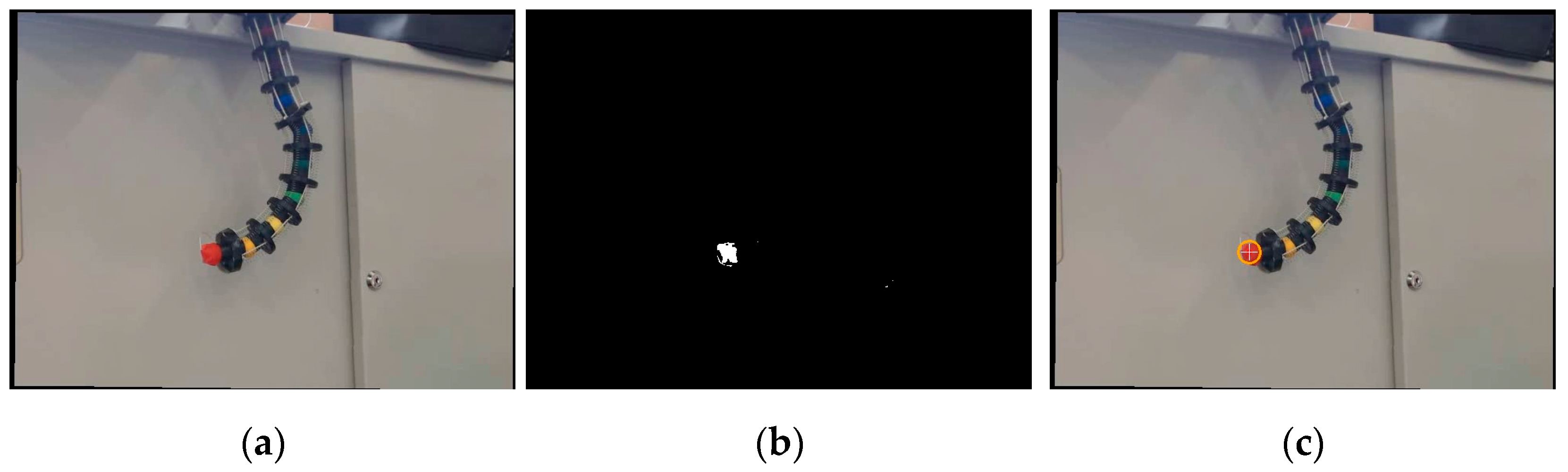

- Obtain a mask image that extracts only the target color by using a specified range of Hue values in the HSV color space.

- Apply a Hough transform to the obtained mask image to detect the center coordinates of the ball.

- Calculate the 3D position of the ball from the disparity of the corresponding points in the left and right images of the stereo camera.

- Repeat these steps for all nine colors applied to the robot’s joints to detect the posture of the robot’s whole body.
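The disparity step in the list above can be illustrated with the standard pinhole relation for a rectified stereo pair, Z = f·B/d. This is a minimal sketch under assumptions: the function name `triangulate` and all parameters (focal length in pixels, baseline in millimetres, principal point) are hypothetical, and the calibration values in the usage line are made up, since the camera parameters are not given here.

```python
def triangulate(u_left: float, v_left: float, u_right: float,
                focal_px: float, baseline_mm: float,
                cx: float, cy: float) -> tuple[float, float, float]:
    """Return (X, Y, Z) in mm in the left-camera frame.

    Assumes a rectified stereo pair, so the same ball center lies on the
    same image row in both views and differs only in its column.
    """
    disparity = u_left - u_right  # pixels
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_mm / disparity  # depth from similar triangles
    x = (u_left - cx) * z / focal_px        # back-project the column
    y = (v_left - cy) * z / focal_px        # back-project the row
    return x, y, z


if __name__ == "__main__":
    # Made-up calibration values, for illustration only.
    print(triangulate(400.0, 240.0, 330.0,
                      focal_px=700.0, baseline_mm=60.0, cx=320.0, cy=240.0))
```

Because depth is inversely proportional to disparity, a one-pixel error in a detected ball center has a larger effect on the estimated position the farther the ball is from the camera.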

3.2. Position Estimation of Each Ball

4. Experiments and Results

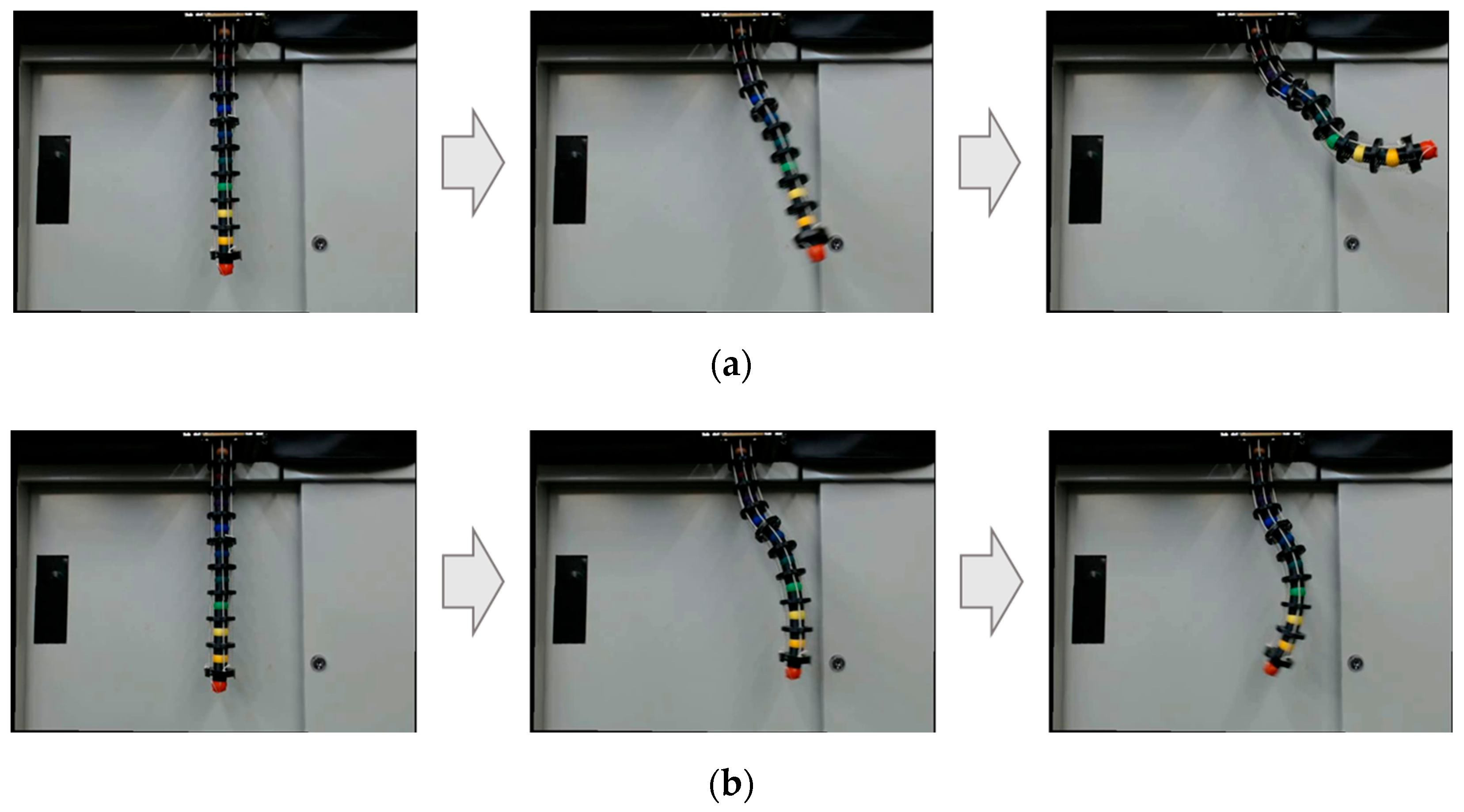

4.1. Manipulation of Continuum Robotic Body

- One-directional bending: () = (60, 1000, 60, 2000);

- S-shaped pose: () = (90, 1000, 270, 3000);

- Spatial motion: () = (60, 1000, −60, 4500).

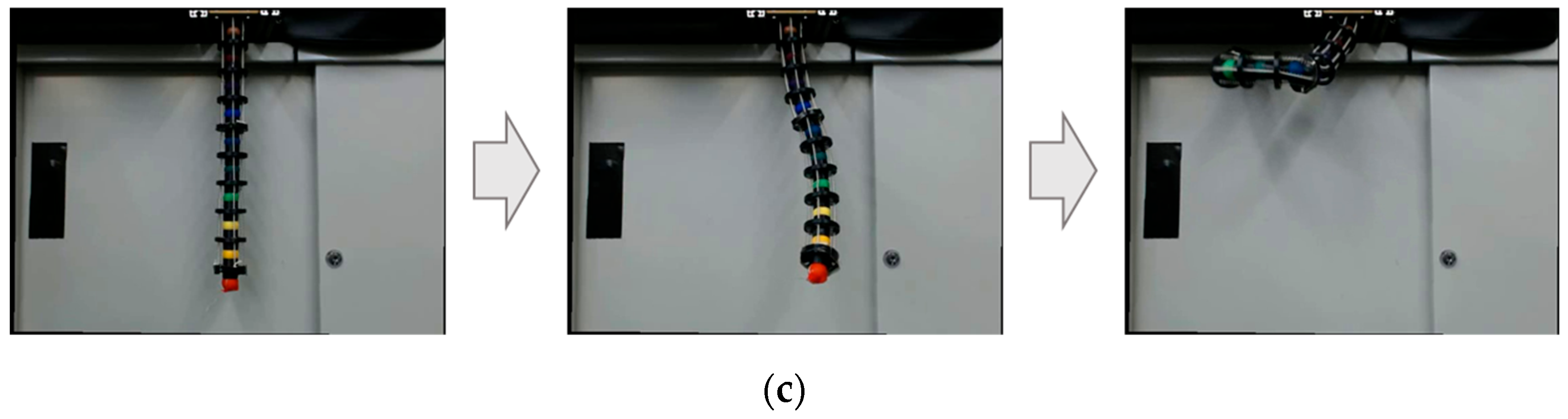

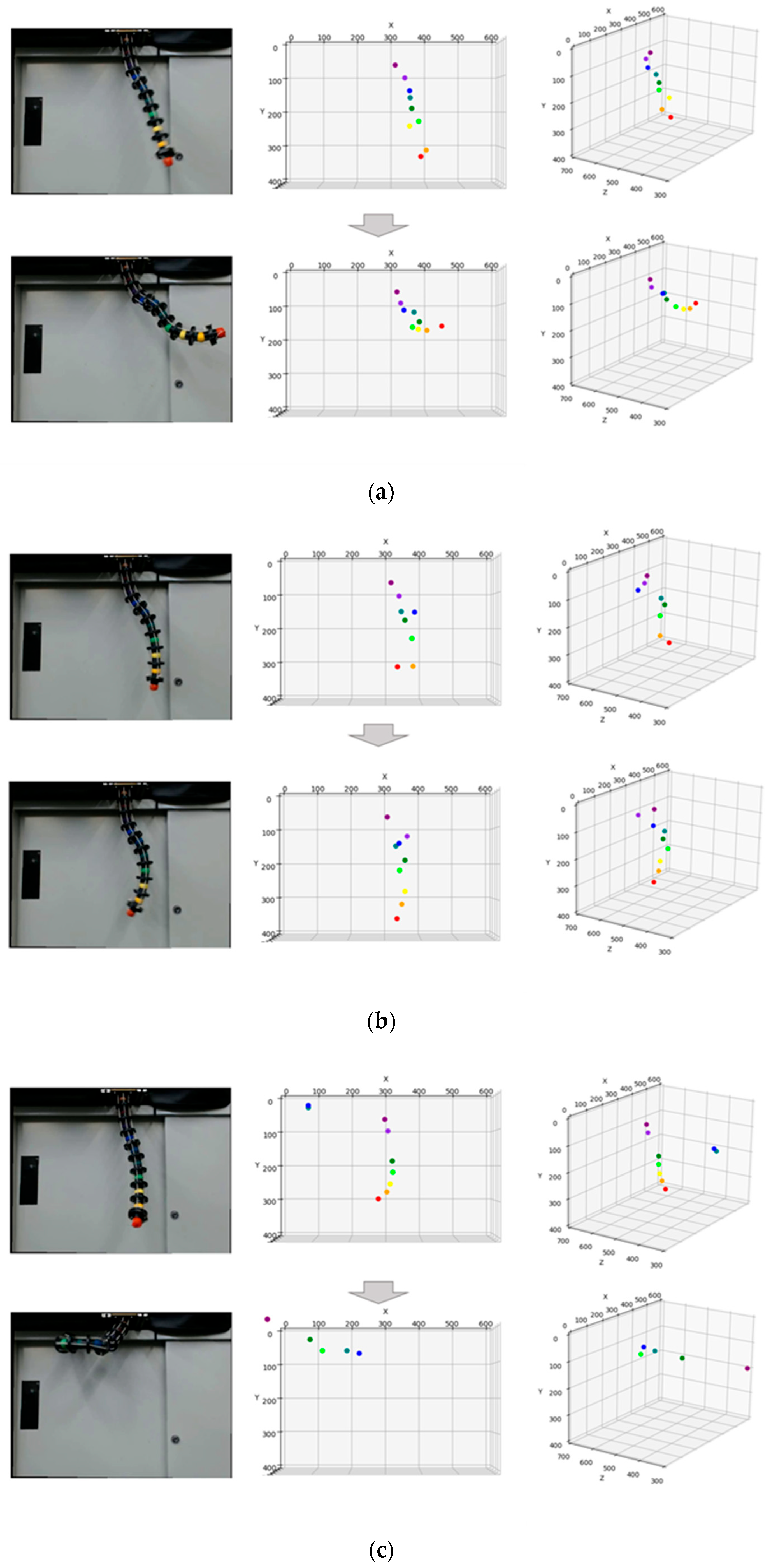

4.2. Posture Estimation

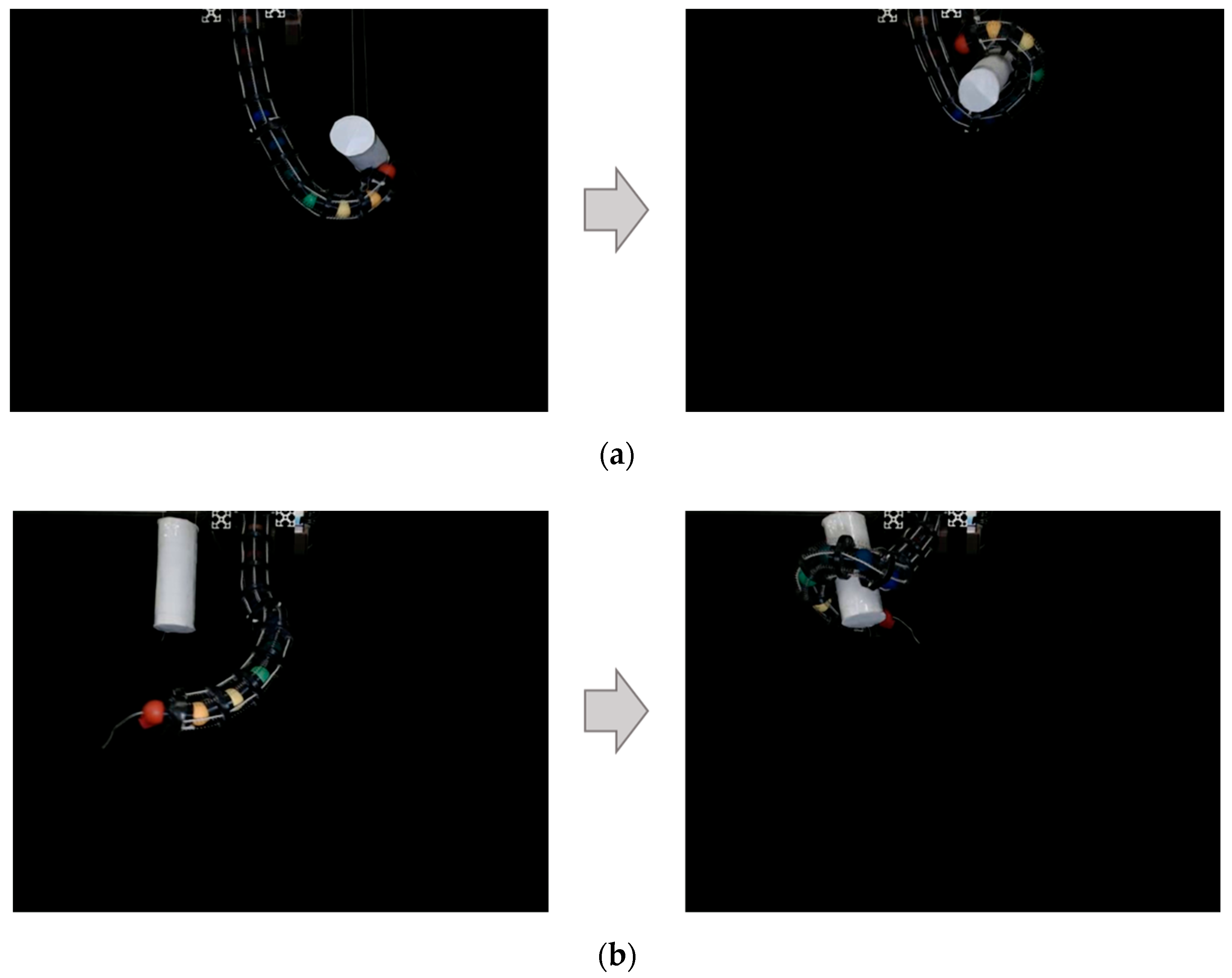

4.3. Grasping Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Color | Range of Hue |
|---|---|
| red | 1~6 |
| orange | 14~22 |
| yellow | 24~31 |
| lime | 40~79 |
| green | 80~99 |
| teal | 100~109 |
| blue | 108~120 |
| blue–violet | 125~138 |
| purple | 168~179 |
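As a rough illustration of how the hue ranges above could drive the mask step, the sketch below builds a boolean mask from a grid of hue values using only the Python standard library. It assumes an OpenCV-style 8-bit hue scale of 0 to 179 (the table's upper bound of 179 is consistent with this, but the scale is not stated here). The names `HUE_RANGES`, `hue_179`, `colors_for_hue`, and `color_mask` are hypothetical.

```python
import colorsys

# Hue ranges copied from the table above (inclusive bounds).
HUE_RANGES = {
    "red": (1, 6),
    "orange": (14, 22),
    "yellow": (24, 31),
    "lime": (40, 79),
    "green": (80, 99),
    "teal": (100, 109),
    "blue": (108, 120),
    "blue-violet": (125, 138),
    "purple": (168, 179),
}


def hue_179(r: int, g: int, b: int) -> int:
    """Convert 8-bit RGB to a hue on the assumed 0-179 scale (degrees / 2)."""
    h, _s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180.0) % 180


def colors_for_hue(hue: int) -> list[str]:
    """Return every table color whose range contains the hue."""
    return [name for name, (lo, hi) in HUE_RANGES.items() if lo <= hue <= hi]


def color_mask(hue_image: list[list[int]], color: str) -> list[list[bool]]:
    """Mark the pixels whose hue falls in the range of the given color."""
    lo, hi = HUE_RANGES[color]
    return [[lo <= h <= hi for h in row] for row in hue_image]


if __name__ == "__main__":
    print(colors_for_hue(hue_179(0, 255, 0)))  # pure green has hue 60 on this scale
    print(colors_for_hue(108))                 # falls in two ranges as listed
    print(color_mask([[3, 50], [110, 170]], "blue"))
```

As listed, the teal and blue ranges share hues 108 and 109, so a pixel with one of those values would appear in both masks in this sketch.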

Error [mm] of each ball:

| Movement | Frame | Red | Orange | Yellow | Lime | Green | Teal | Blue | Blue–Violet | Purple |
|---|---|---|---|---|---|---|---|---|---|---|
| One-directional bending | 1st | 19.15 | 41.39 | 31.81 | 25.69 | 3.77 | 6.83 | 29.20 | 13.82 | 35.69 |
| One-directional bending | 2nd | 10.18 | 3.01 | 13.88 | 11.10 | 39.97 | 24.25 | 27.34 | 6.59 | 28.80 |
| S-shaped pose | 1st | 4.78 | 63.09 | 6062.20 | 37.33 | 6.02 | 11.12 | 113.90 | 34.11 | 5.12 |
| S-shaped pose | 2nd | 7.45 | 8.41 | 12.90 | 32.09 | 13.84 | 12.34 | 35.95 | 108.73 | 22.11 |
| Spatial motion | 1st | 4.15 | 3.32 | 5.03 | 23.25 | 16.17 | 474.07 | 480.58 | 27.66 | 28.36 |
| Spatial motion | 2nd | - | - | - | 13.77 | 202.09 | 8.50 | 30.89 | - | - |
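To make the error table easier to read at a glance, the sketch below computes the number of reported values, the mean, and the median for each row. These summaries are an illustration only and are not results reported by the authors. The values are copied as printed, "-" entries are skipped, and the names `ROWS` and `summarize` are hypothetical.

```python
from statistics import mean, median

# Error values [mm] copied from the table above, in column order
# red, orange, yellow, lime, green, teal, blue, blue-violet, purple.
ROWS = {
    ("One-directional bending", "1st"): "19.15 41.39 31.81 25.69 3.77 6.83 29.20 13.82 35.69",
    ("One-directional bending", "2nd"): "10.18 3.01 13.88 11.10 39.97 24.25 27.34 6.59 28.80",
    ("S-shaped pose", "1st"): "4.78 63.09 6062.20 37.33 6.02 11.12 113.90 34.11 5.12",
    ("S-shaped pose", "2nd"): "7.45 8.41 12.90 32.09 13.84 12.34 35.95 108.73 22.11",
    ("Spatial motion", "1st"): "4.15 3.32 5.03 23.25 16.17 474.07 480.58 27.66 28.36",
    ("Spatial motion", "2nd"): "- - - 13.77 202.09 8.50 30.89 - -",
}


def summarize(cells: str) -> tuple[int, float, float]:
    """Return (number of reported values, mean, median), skipping '-' entries."""
    values = [float(c) for c in cells.split() if c != "-"]
    return len(values), mean(values), median(values)


if __name__ == "__main__":
    for (movement, frame), cells in ROWS.items():
        n, avg, med = summarize(cells)
        print(f"{movement:24s} {frame}: n={n}, mean={avg:.2f} mm, median={med:.2f} mm")
```

The median is included alongside the mean because a few entries are far larger than the rest of their row, and the mean alone would be dominated by them.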

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Onose, R.; Sawada, H. Development of Tendon-Driven Continuum Robot with Visual Posture Sensing for Object Grasping. Actuators 2025, 14, 140. https://doi.org/10.3390/act14030140