Abstract

After years of more rigid and conventional production processes, the traditional manufacturing industry has been moving toward flexible manufacturing and intelligent manufacturing in recent years. After more than half a century of development, robotics has penetrated into all aspects of human production and life, bringing significant changes to the development of human society. At the same time, the key technology of human–machine cooperative operation has become a research hotspot, and how to realize a human–machine integrated safety system has attracted attention. Human–machine integration means that humans and robots can work in the same natural space, coordinating closely and interacting naturally. To realize real human–robot integration under human–robot cooperative operation, the good judgment of intentional interaction and accidental collision and the detection of collision joints and collision points when accidental collision occurs are the key points. In this paper, we propose a method to identify the collision joints by detecting real-time current changes in each joint of the robot and solve the collision point location information of the collision joints through the principle of virtual displacement and the principle of force method using the force sensor data installed at the base of the robot as the known condition. The results show that the proposed method of identifying the collision joints using changes in joint current and then establishing a collision detection algorithm to solve the collision point location is correct and reliable.

1. Introduction

With the development of basic theories and key technologies for human–machine collaboration and human–machine integration, research focusing on the scientific issues of “human–machine–environment multimodal perception and interaction” is at a critical stage. The construction of a human–machine and machine–machine interaction system can promote the progress of robotics and theory while also meeting the needs of contemporary intelligent development [1,2,3]. Establishing collision detection models, deriving force control algorithms during robot motion, and establishing robot collision recognition and fast response mechanisms are the keys to ensuring safety during human–machine collaboration [4,5,6,7,8].

Common collision detection methods include installing optoelectronic, force-controlled, and joint torque sensors in specific parts of the robot [9,10,11,12] or detecting the relative position relationship between robot and human through vision systems, imaging systems, ranging systems, and envelope box methods [13,14,15] to ensure the safety of human–robot collaboration systems through pre-collision safety mechanisms. However, some of the systems are more complex in composition, have various types of sensors, have poor backward compatibility, have long fusion response time, and incur expensive system costs, thus making them not suitable for large-scale dissemination and use. Bharadwaja et al. [16] proposed a neural network-based path planning control system for service robots, which was compared with the traditional probabilistic roadmap (PRM) algorithm, and the robot’s obstacle distance prediction accuracy improved by about 36%. Meanwhile, the vision system can be used to model the surrounding environment in 3D and a genetic algorithm can be used to generate the optimal choice for the robot’s forward route. A. Lomakin et al. [17] proposed a rigid robot collision detection method considering collision and external force, which provides an evaluable expression that can estimate the calculation error caused by numerical error and parameter uncertainty, which was applied in research into human–robot collaboration and robot safety. T. Lindner et al. [18] proposed a Reinforcement Learning (RL)-based collision avoidance strategy, which controls the robot’s movement through trial-and-error interaction with the environment and achieves the goal of obstacle avoidance through the combination of the Deep Deterministic Policy Gradient (DDPG) algorithm and Hindsight Experience Replay (HER) algorithm. Alchan Yun et al. [19] designed a strain gauge-based cylindrical three-axis sensor to detect external force information by connecting the sensor to the robot, and it was experimentally verified that measuring force information with this structure of sensor can improve the efficiency of collision detection. In the literature [20,21], by installing color spheres on human shoulders, the head, limbs, etc., the camera IP captures data information to estimate human pose and position in performing the HRC task and uses the DLT method to estimate the 3D information of color spheres on the human body to avoid collisions through the use of image processing techniques. S. A. Gadsden et al. [22] proposed the smooth variable structure filter (SVSF), which has stronger accuracy and robustness in collision recognition and fault diagnosis for human–computer interaction systems with uncertainty in modeling and has been applied in the field of aerospace brakes.

Compared with the use of vision sensors, multi-sensor fusion, and energy drive for collision detection, the use of force sensors for collision recognition research has the advantages of direct measurement, simple algorithms, conduciveness to decoupling, and real-time response and is an important detection method in the field of human–machine integration. In this paper, we propose a collision detection algorithm based on the numerical information collected by a six-axis force/torque sensor installed at the base of a robot after static/dynamic force compensation and apply the force law principle and the virtual displacement principle to lock the location information of the collision point when the collision joint information is identified by real-time feedback of the current situation of each joint of the robot. Finally, the force compensation algorithm and collision detection algorithm are experimentally verified by carrying the experimental platform.

2. Model Building

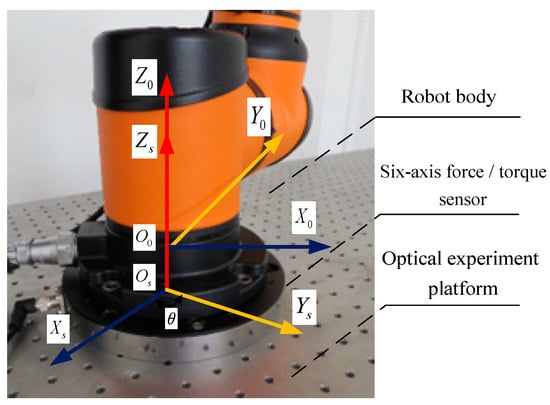

In order to complete the collision detection task, a six-axis force/torque sensor was mounted at the base of the robot, namely the KWR200X. It is a large-range multi-dimensional force sensor with a built-in high-precision embedded data acquisition system, which can measure and transmit the orthogonal force and orthogonal moment in three directions in real time. It is made of high-strength alloy steel with strong bending resistance. The assembly method is bolted to the table and the robot is bolted to the six-dimensional force cell for better monitoring of experimental data. We define the robot base coordinate system as , where the direction is opposite to the gravity direction and vertical upward. We define the six-axis force/torque sensor coordinate system as , and the six-axis force/torque sensor coordinate system can be obtained by rotating the robot base coordinate system around the axis by the angle; the attitude transformation matrix is shown in Equation (1), and the position relationship is shown in Figure 1.

Figure 1.

Relative position of the six-axis force/torque sensor’s mounting position and coordinate system to the base coordinate system.

When the robot is working normally, each linkage is a common static structure, and the forces are fixed at both ends. When the robot linkage is collided with by the external environment, if the detected external force is defined as , the original stationary structure becomes three-time super-stationary. The six-axis force/torque sensor installed at the robot base can measure the orthogonal forces and orthogonal moments in three directions: and , respectively.

Therefore, the cosine of the magnitude and direction of the combined force is

The location information of the collision point can be expressed as

in Equation (4) is the position vector of the collision point relative to the six-axis force/torque sensor coordinate system. According to the vector information about force and moment in Equation (4), it is not possible to lock the collision point position coordinates, and other methods are needed to complete the task of collision point identification.

As shown in Figure 1, the directions of the three axes in the six-axis force/torque sensor coordinate system are known, and when a collision occurs, the robot linkage coordinate system needs to be re-established based on the original six-axis force/torque sensor coordinate system. We define the robot linkage coordinate system as , and the robot linkage coordinate system can be rotated by the six-axis force/torque sensor coordinate system around by the angle first, then around the axis by the angle, and finally around by the angle, and its attitude transformation matrix is shown in Equation (5).

Here, stands for the abbreviation of and stands for the abbreviation of . The presence of the tilt angle of the collaborative robot base mounting and the zero value of the six-axis force/torque sensor will provide the difference between the force information measured by the six-axis force/torque sensor and the actual magnitude of the external force of the collision. In the literature [23], the above compensation method is verified, where the collaborative robot base mounting inclination angle can be found. The coordinates at each joint are known at the factory when the robot is shipped, and when combined with the positions of each coordinate system defined above, the angles , , and can be found. According to the Cartesian coordinate transformation rule, the magnitude of each directional component force measured by the six-axis force/torque sensor can be expressed in the linkage coordinate system as

The direction is perpendicular to axis and the plane in which the connecting rod is located, the direction is parallel to the connecting rod and points to axis , and the direction is parallel to axis and the plane in which the connecting rod is located.

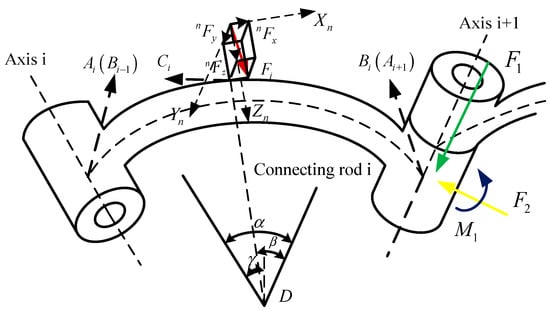

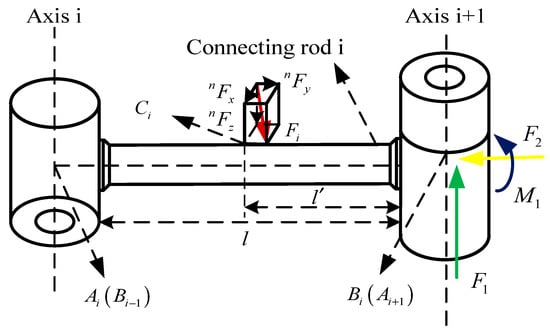

As shown in Figure 2, the robot connecting rod is subjected to the external force after the collision of the robot connecting rod . The effect of the force in the direction of is the same as the effect of the force in the direction of . When considering the force on rod , the effect on is used as an example to derive the result, and the algebraic summation of the two parts is performed.

Figure 2.

Collision force schematic of robot linkage with external force .

In the figure, point is defined as the position of the collision point where force collides with robot rod ; point is defined as the intersection point of axis and the axis extension line; is the distance between axis and axis center-of-mass positions and to point ; is the angle between the two extension lines; is the angle between a line in the plane where forces and are located and the axis angle of the extension line; and is an angle variable in the force method to solve the super-stationary structure.

3. Force Compensation Algorithm

When the robot is at rest, the six-axis force/torque sensor mounted at the base will output different values in each direction due to the gravity of the body in different positions. Therefore, in order to identify the collision point at rest, the gravity should be compensated to achieve a zero reading of the six-axis force/torque sensor mounted at the base when the robot is at rest. Therefore, when the sensor value changes irregularly, it is assumed that an accidental collision has occurred between the robot and the external environment.

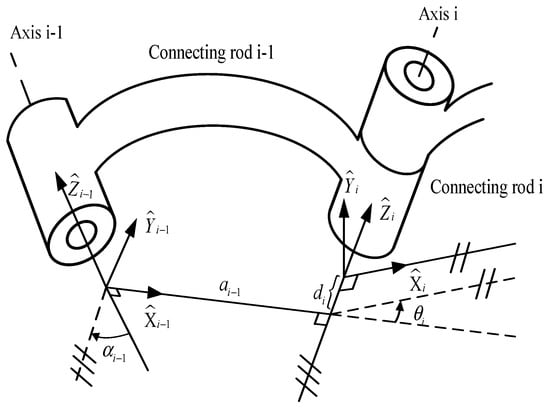

To establish the position and transformation relationship between the adjacent links of the collaborative robot using the “” parameter method, the parameters and variables of each link should be defined first. is the angle of rotation from to around the axis; is the distance from to along the axis; is the distance from to along the axis; and is the angle of rotation from to around the axis. Figure 3 shows the “” coordinate space of the two adjacent joints.

Figure 3.

“” coordinate space of two adjacent joint links.

In the robot linkage coordinate system, the coordinate system can be obtained by four flush transformations of the coordinate system ; therefore, the flush transformation matrix between two adjacent linkage coordinate systems and is

By bringing the parameters of each linkage of the robot into Equation (7), the positional transformation matrix of the specified linkage can be obtained. Here, the transformation matrix of the coordinate system with respect to the base coordinate system can be expressed as

where denotes the rotation matrix of the coordinate system with respect to the coordinate system , and denotes the position matrix of the coordinate system with respect to the coordinate system .

We define the position vector of the center of mass of any linkage with respect to the joint coordinate system as

The transformation matrix of the connecting rod coordinate system with respect to the base coordinate system can be represented by . The connecting rod center-of-mass coordinate system has the same direction as the coordinate system , and its position relationship is shown in Figure 1. If the gravity vector of a robot linkage on its center-of-mass coordinate system is

then the chi-square transformation matrix of the linkage’s center-of-mass coordinate system with respect to the base coordinate system is

where .

Then, when the robot is in the static state, its own gravity vector, when transformed from the linkage coordinate system to the base coordinate system, is expressed by as

Its moment vector, when converted from the linkage coordinate system to the base coordinate system, is expressed in as

Thus, the transformation matrices of the force and moment vectors in the force sensor coordinate system and the base coordinate system can be obtained as

where ; .

The robot’s speed and acceleration change over time during normal operation, so gravity compensation alone cannot meet the requirements. After the dynamic force compensation, the sensor reading will be zero, so that if a collision occurs, the collision point can be identified and calculated using the compensated data.

The Newton–Euler method can build a rigid robot dynamics model using only the velocity, angular velocity, and rotational inertia of each linkage of the robot. The velocity and acceleration of each linkage of the robot are obtained by first pushing outward from the robot base and then pushing inward from the outside to obtain the generalized force of interaction between two adjacent linkages.

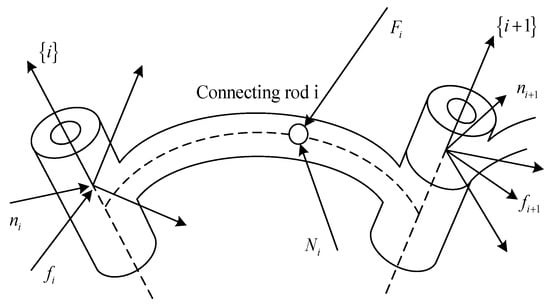

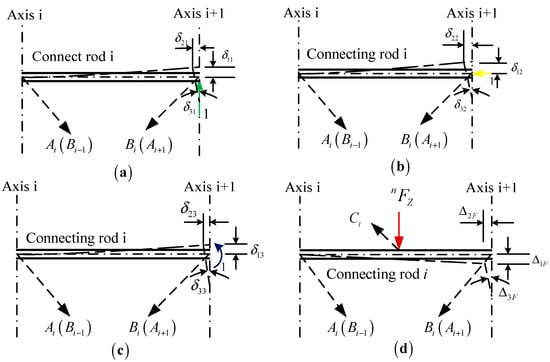

For a single operator arm linkage of the robot, the forces are shown in Figure 4.

Figure 4.

Robot linkage force diagram.

We define the mass of each operating arm of the robot as , and the position vector of the center of mass of robot joint with respect to the joint coordinate system can be expressed as . We define the displacement of robot joint i as , the velocity as , and the acceleration as . The extrapolation process according to Equation (15) is mainly to solve for the acceleration of the center of mass of each joint of its robot.

where , , and are the angular velocity, angular acceleration, linear acceleration, and center-of-mass linear acceleration of the robot linkage , respectively;

; .

Accordingly, Newton’s equation and Euler’s equation of motion can be established:

where and denote the force and moment of the robot linkage on the linkage , respectively; and denote the force and moment of the robot linkage on the linkage , respectively; and denote the position vector from the coordinate origin to the center of mass attached on robot joints and , respectively; denotes the inertia tensor of robot linkage with respect to the center of mass ; and denotes the Coe-style force term.

For the n-degree-of-freedom robot, when there is no load at its end, the extrapolated recursive formulas from Equations (15)–(17) can calculate and , which are then carried over to gravity compensation Formula (12) to (14) in the stationary state. The required gravity compensation values for the six-axis force/torque sensor at the base, namely and , can be solved for when the robot is in motion.

When the robot is in normal operation, the six-dimensional force sensor installed at the base reads the force and moment information as and . The force and moment relationship information obtained after gravity compensation is and , followed by

Thus, when the robot is in normal operation, the six-axis force/torque sensor mounted at the base of the robot reads zero after dynamic force compensation. Then, when the value of the six-axis force/torque sensor exceeds a set threshold, a collision between the robot and the external environment is considered to have occurred. Based on the six-axis force/torque sensor and the working principle of the robot itself, the spatial coordinates at the front axis of the collision linkage and the spatial coordinates at the rear axis of the collision linkage at the time of collision are

4. The Principle of Force Method to Solve the Location of the Collision Point

When the joints where the robot collides are known, the forces at robot joint axes and due to the collision are shown in Figure 5. We define the point as the location of the collision point where the force collides with the robot joint , we define as the length of the robot joint , and we define as the length of the robot axis to the cross section where the collision point is located and the variables in the force law principle.

Figure 5.

Collision force schematic of robot linkage subjected to external force .

When applying the principle of force method to solve the position of the collision point, the end of the connecting rod where the collision occurs is first released, and the original super-stationary structure becomes a basic static system. In this static system, in addition to the action of , the vertical force , the horizontal force , and the moment , which are in the same direction as axis and perpendicular to axis , act at the end of , as shown in Figure 6.

Figure 6.

Schematic diagram of robot linkage after unconstraint under the action of .

The robot linkage is a linear elastic structure, so the displacement is proportional to the force. denotes the displacement of the end along the direction of under the force , and , , and denote the displacement of the end of the robot linkage along the direction of when , , and are unit forces, respectively, with individual displacement acting on the robot linkage . Thus, the total displacement of the end of the robot linkage along the direction is

Since the end of the robot linkage is a fixed constraint, the displacement at the point along the direction should be 0, and the deformation coordination equation thus becomes

Following the same method, two other deformation coordination equations can be obtained for the displacement of the robot linkage point along the direction equal to zero and the angle of rotation in the direction equal to zero. Thus, the linear equation equation is as follows:

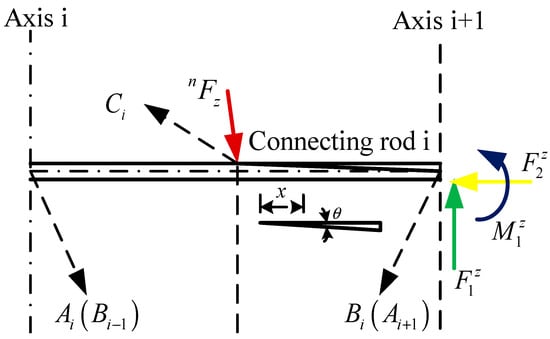

The nine coefficients and three constant terms in Equation (22) are represented as shown in Figure 7. In the figures, (a)–(d) show the displacement diagrams of the end of the robot linkage along the , , and directions when , , , and are unit forces.

Figure 7.

Diagram of the displacement along different directions when different forces are applied to the robot linkage. (a) Displacement diagram of the connecting rod along the , , and directions when the unit force is . (b) Displacement diagram of the connecting rod along the , , and directions when the unit force is . (c) Displacement diagram of the connecting rod along the , , and directions when the unit force is . (d) plot of the displacement of the connecting rod along the , , and directions when the unit force is united.

When considering the impact of the collision process on the displacement of the robot linkage, the impact of the shear force and the axial force is much smaller than the impact of the moment and can be neglected. As shown in Figure 6, when the basic static system in which the robot linkage is located only acts with the external force , the moment on the robot linkage is

If the moment of the increase in the curvature of the robot linkage is specified as positive, the moment equation when a unit force is applied at point on the robot linkage along the direction of is

When a unit force is applied at point on the robot linkage along the direction, the moment equation is

When a unit force is applied at point on the robot linkage along the direction , the moment equation is

According to the displacement reciprocity theorem, it is proved that . Therefore, it is obtained that

The system of Equation (22) is simplified to obtain the formula for the force-moment:

Among them,

When solving the effect of the robot linkage due to the action of , the method is exactly the same as the calculation and solution method for solving . When and act on the robot connecting rod at the same time when establishing the connecting rod coordinate system, the two sets of forces are in two different planes after being unconstrained. The force-moments generated under the action of are , , and , and the force-moments generated under the action of are , , and , with the two sets of force-moments algebraically summed as , , and . Therefore, when a collision occurs, the effect of the external force on the robot joint axis is shown in Figure 5. In practical applications, when the software and hardware of the robot system are installed, the program is run. First, the collision joint is locked according to the change in the output joint current. Combined with the force and moment values output by the robot during normal operation and some parameter information for the robot body, it is brought into the Equations (23)–(35) to solve the algorithm parameters. In Equations (36)–(38), because the value information of force and moment at the time of collision is known, only the only variable in the algorithm is the unknown condition, and and the collision point location can be found. At this point, the theoretical derivation of the force compensation algorithm and the principle of the force law, i.e., how the location of the collision point is solved, is completed.

5. Experimental Verification and Analysis of Results

5.1. Identification of Collision Joints for Experimental Verification

In the process of human–robot cooperative operation, the robot system information can be read by observing changes in the current of each joint of the robot and making a judgment as to whether the robot has had an accidental collision. To read the current data of each joint of the robot in real time, we can write a script program to write the robot system by making the robot communicate with the host computer through a network protocol to read the data. The main program is mainly divided into four parts: serial communication between the robot and the host computer, the assignment of values to each joint of the robot, loading real-time data for transfer to the host computer, and transmission of the data collected in the RET function to the host computer for display, of which part of the main program is shown below.

Joint_AO_1=get modbus io status(“Joint_AO_1”)

Joint_AO_2=get modbus io status(“Joint_AO_2”)

Joint_AO_3=get modbus io status(“Joint_AO_3”)

Joint_AO_4=get modbus io status(“Joint_AO_4”)

Joint_AO_5=get modbus io status(“Joint_AO_5”)

Joint_AO_6=get modbus io status(“Joint_AO_6”)

if (Joint_AO_1 > 30,000) then

Joint_current_1=Joint_AO_1-65535

end

if (Joint_AO_1 < 30,000) then

Joint_current_1=Joint_AO_1

end

if (Joint_AO_2 > 30,000) then

Joint_current_2=Joint_AO_2-65535

end

if (Joint_AO_2 < 30,000) then

Joint_current_2=Joint_AO_2

end

if (Joint_AO_3 > 30,000) then

Joint_current_3=Joint_AO_3-65535

end

if (Joint_AO_3 < 30,000) then

Joint_current_3=Joint_AO_3

end

if (Joint_AO_4 > 30,000) then

Joint_current_4=Joint_AO_4-65535

end

if (Joint_AO_4 < 30,000) then

Joint_current_4=Joint_AO_4

end

if (Joint_AO_5 > 30,000) then

Joint_current_5=Joint_AO_5-65535

end

if (Joint_AO_5 < 30,000) then

Joint_current_5=Joint_AO_5

end

if (Joint_AO_6 > 30,000) then

Joint_current_6=Joint_AO_6-65535

end

if (Joint_AO_6 < 30,000) then

Joint_current_6=Joint_AO_6

end

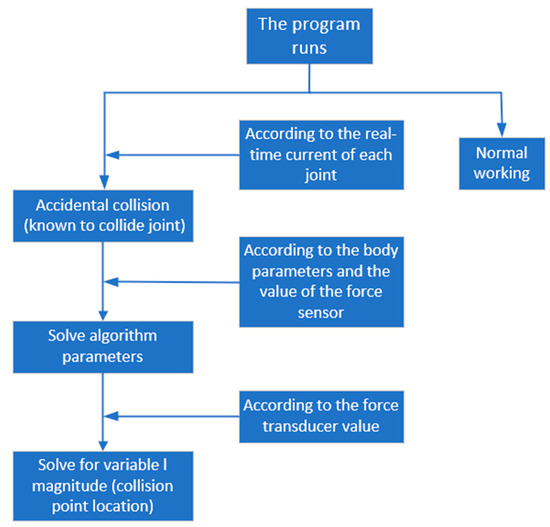

To run the program, you first need to understand the current values of each joint when the robot works normally. According to the output real-time current value of each joint, it is possible to determine whether an accidental collision has occurred. When an accidental collision occurs, the algorithm parameters are solved according to the parameter information of the robot body and the output value of the force sensor. Then, based on the value of the force sensor at the time of collision, the magnitude of variable l in the algorithm is solved. At this point, the location of the collision point can be represented. The algorithm block diagram is shown in Figure 8.

Figure 8.

Diagram of the collision detection algorithm.

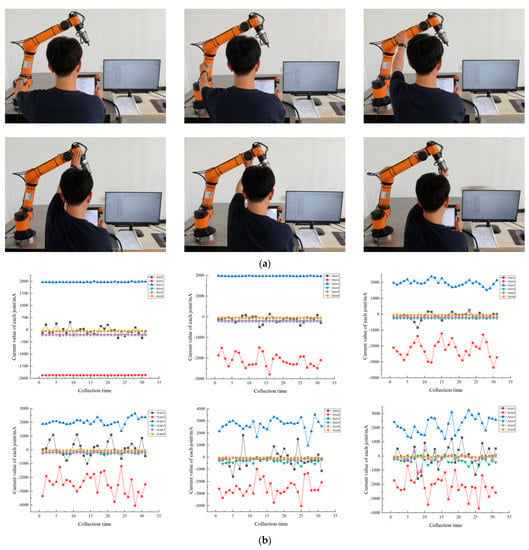

As shown in Figure 9a, volunteers simulated the collision experiment with their hands and applied external forces to robot joints 1 to 6, respectively. At the same time, the computer collected the current at each joint of the robot in six action states and output it to the computer in the form of numerical values. This current information is output in groups every 500 ms. Figure 9b shows a line chart corresponding to the output of the process of the volunteer hand simulation collision experiment in Figure 9a. The horizontal axis of this line chart is represented as a set of current information collected every 500 ms. The vertical axis of the line chart represents the real-time current situation of each joint of the robot corresponding to each set of current information.

Figure 9.

Human−robot interaction collision detection experiment. (a) Volunteer−simulated collision experiment. (b) Joint 1−Joint 6 variations in current data.

5.2. Force Method Principle for Collision Point and Error Analysis

The force method used the AUBO i5 series collaborative robot body parameters as input conditions and applied two external forces of “” and “” in various direction, as measured by a tensiometer tester. The calculated values are obtained by substituting the formula. The material of the robot linkage was the common carbon fiber “” and its elasticity model is . The robot arm cross-section is circular and its moment of inertia is . The numerical information of each parameter of the force principle is shown in Table 1.

Table 1.

Information on the value of each parameter of the force law principle.

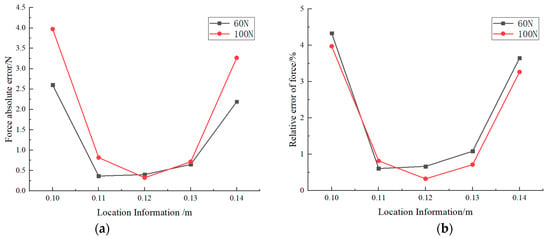

By bringing the values of the parameters in Table 1 into Equations (27)–(35), the numerical solution of the force generated by the robot linkage due to the collision action of and can be obtained. In order to verify the correctness and accuracy of the calculation procedure, an error analysis of the experimental results was performed.

The absolute error for the actual external contact force is calculated by the formula

where is the experimental value of the external force and is the calculated value of the external force.

The relative error for the actual external contact force is calculated by the formula

where is the absolute error of the external contact force and is the mode of the applied external force vector. The experimental results obtained by applying different magnitudes of external contact forces at different positions of the robot are shown in Table 2.

Table 2.

Experimental results of different collision external forces.

As shown in Figure 10, when a collision occurs, the relative error of the external force on the collision point is 4.333% of the maximum when the position of the collision point is solved by the principle of force law and the principle of virtual displacement, and the accuracy is higher the closer the position of the collision point is to the midpoint of the collision joint of the robot, thus meeting accuracy requirements. Therefore, when the force situation meets the accuracy requirement, the variable in the force method principle can be found, and the coordinates of the collision point can also be found.

Figure 10.

Experimental results. (a) Force absolute error. (b) Force relative error.

6. Conclusions

In this paper, we propose a method to establish communication between the robot and the host computer with the aim of clarifying information about joints where accidental collisions occur during robot operation by observing the real-time current changes in each joint axis of the robot. The derivation of the force compensation algorithm is completed by the data information transmitted from the six-dimensional force sensor installed at the robot base. The force law principle and the virtual displacement principle are introduced to solve the specific position information of the specific collision point in the known collision joints. Using the AUBO collaborative robot as the main body of the experiment, experimental verification was performed, experimental data were recorded, and conclusions were drawn through comparative analysis. The algorithm of locking the collision joint is based on real-time current changes in the robot body and the collision point position information being solved based on the force method principle and the virtual displacement principle, and the error of the force situation is under a reasonable range, which meets the accuracy requirement of the robot collision point identification algorithm.

Author Contributions

Conceptualization, Z.W. and B.Z.; methodology, B.Z.; software, B.Z.; validation, Z.W., B.Z. and Y.Y.; formal analysis, B.Z. and Z.W.; investigation, B.Z.; resources, Z.L.; data curation, Z.L.; writing—original draft preparation, B.Z.; writing—review and editing, B.Z. and Z.W.; visualization, Z.L.; supervision, Z.W. and Z.L.; project administration, Z.W. and Z.L.; funding acquisition, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

National Natural Science Foundation of China (No. 51505124), Science and Technology Project of Hebei Education Department (ZD2020151), and Tangshan Science and Technology Innovation Team Training Plan Project (21130208D).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Madonna, M.; Monica, L.; Anastasi, S. Evolution of cognitive demand in the human–machine interaction integrated with industry 4.0 technologies. Wit Trans. Built Environ. 2019, 189, 13–19. [Google Scholar]

- Wang, T.R. Development of the robotics. Robot 2017, 39, 385–386. [Google Scholar]

- Jing, G.G.; Wu, G.T.; Chang, B.Y. Contact collision dynamics analysis of manipulator. Trans. Chin. Soc. Agric. Mach. 2016, 47, 369–375. [Google Scholar]

- Lai, Y.N.; Ye, X.; Ding, H. Major research plans and progress on the integration. Chin. J. Mech. Eng. 2021, 57, 1–11+20. [Google Scholar]

- Wang, Z.J.; Liu, L.; Li, Z.X. Research on robot body collision point detection based on six-dimensional force sensors. J. Agric. Mach. 2021, 52, 395–401+410. [Google Scholar]

- Saenz, J.; Behrens, R.; Schulenburg, E. Methods for considering safety in design of robotics applications featuring human-robot collaboration. Int. J. Adv. Manuf. Technol. 2020, 107, 2313–2331. [Google Scholar] [CrossRef]

- Safeea, M.; Neto, P.; Bearee, R. On-line collision avoidance for collaborative robot manipulators by adjusting off-line generated paths: An industrial use case. Robot. Auton. Syst. 2019, 119, 278–288. [Google Scholar] [CrossRef]

- Yu, X.S.; Wang, Z.A.; Wu, G.X. Design of human-machine co-integration systems for different interaction tasks. J. Autom. 2022, 48, 2265–2276. [Google Scholar]

- Wang, B.L. Research on Collision Detection and Response Strategy and Supple Control Algorithm of Robotic Arm. Harbin Inst. Technol. 2019. Available online: https://kns.cnki.net/kcms2/article/abstract?v=3uoqIhG8C475KOm_zrgu4lQARvep2SAkWfZcByc-RON98J6vxPv10azsRg9B71I1c60b40mP4o4DwCMhP9xnq1d-e9Bn7Pmr&uniplatform=NZKPT (accessed on 15 July 2023).

- Zhu, Z.; Gong, Z.; Zhang, Y.; Chen, S.; Zhao, Z.; Zhou, X.; Gao, M.; Huang, S. Research on Collision Detection and Collision Reaction of Collaborative Robots. In Intelligent Robotics and Applications, Proceedings of the 14th International Conference on Intelligent Robotics and Applications, Yantai, China, 22–25 October 2021; Springer: Cham, Switzerland, 2021; Volume 13014, pp. 510–520. [Google Scholar] [CrossRef]

- Yu, W.F. A review of collision detection algorithms based on virtual reality technology. J. Civ. Aviat. 2019, 3, 85–87+96. [Google Scholar]

- Govender, N. Collision detection of convex polyhedra on the NVIDIA GPU architecture for the discrete element method. Appl. Math. Comput. 2015, 267, 810–829. [Google Scholar] [CrossRef]

- Geng, H.; Gao, L.L. Improved hybrid hierarchical wraparound box collision detection algorithm for aircraft virtual maintenance. Sci. Technol. Eng. 2018, 18, 63–68. [Google Scholar]

- Zheng, Y.; Chen, C.M.; Adiwahono, A.H. A GJK-Based Approach to Contact Force Feasibility and Distribution for Multi-Contact Robots; North-Holland Publishing Co.: Amsterdam, The Netherlands, 2011; Volume 59, pp. 194–207. [Google Scholar]

- Wang, Z.F.; Liu, C.Y.; Sui, X. Three-dimensional point cloud target segmentation and collision detection based on depth projection. Opt. Precis. Eng. 2020, 28, 1600–1608. [Google Scholar] [CrossRef]

- Bharadwaj, H.; Kumar, V. Comparative study of neural networks in path planning for catering robots. Procedia Comput. Sci. 2018, 133, 417–423. [Google Scholar] [CrossRef]

- Lomakin, A.; Deutscher, J. Reliable Algebraic Fault Detection and Identification of Robots. IEEE Trans. Autom. Sci. Eng. 2022, 19, 3821–3837. [Google Scholar] [CrossRef]

- Lindner, T.; Milecki, A. Reinforcement Learning-Based Algorithm to Avoid Obstacles by the Anthropomorphic Robotic Arm. Appl. Sci. 2022, 12, 6629. [Google Scholar] [CrossRef]

- Yun, A.; Lee, W.; Kim, S. Development of a robot arm link system embedded with a three-axis sensor with a simple structure capable of excellent external collision detection. Sensors 2022, 22, 1222. [Google Scholar] [CrossRef] [PubMed]

- Fu, J.S.; Ding, Y.B.; Liu, H.T. A vision localization method for mobile machining robots. J. Mech. Eng. 2022, 58, 25–34. [Google Scholar]

- Phong, L.D.; Choi, J.; Lee, W.; Kang, S. A novel method for estimating external force: Simulation study with a 4-DOF robot manipulator. Int. J. Precis. Eng. Manuf. 2015, 16, 755–766. [Google Scholar] [CrossRef]

- Gadsden, S.A.; Song, Y.; Habibi, S.R. Novel Model-Based Estimators for the Purposes of Fault Detection and Diagnosis. Trans. Mechatron. 2013, 18, 1237–1249. [Google Scholar] [CrossRef]

- Zhang, L.J.; Hu, R.Q.; Yi, W.M. Research on end-load force sensing of industrial robots based on six-dimensional force sensors. J. Autom. 2017, 43, 439–447. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).