Artificial Intelligence Adoption Amongst Digitally Proficient Trainee Teachers: A Structural Equation Modelling Approach

Abstract

1. Introduction

2. Literature Review

2.1. Professional Engagement and Performance Expectancy

2.2. Professional Engagement and Effort Expectancy

2.3. Professional Engagement and Social Influence

2.4. Professional Engagement and Hedonic Motivation

2.5. Professional Engagement and Price Value

2.6. Professional Engagement and Habit of Use

2.7. Digital Resources in Performance Expectation

2.8. Digital Resource Creation in Effort Expectation

2.9. Digital Resources and Social Influence

2.10. Digital Resource Creation and Hedonic Motivation

2.11. Digital Resources and Facilitating Conditions

3. The Present Study: Background and Objectives

- Structural Integration Approach: Following Tenberga and Daniela’s (2024) framework, this paradigm advocates for the formal inclusion of AI-specific domains within digital competence frameworks. This position has spurred the development of novel assessment instruments, exemplified by Chiu et al.’s (2024) validated scales for evaluating AI-related teaching competencies.

- Competency-Mediated Adoption Approach: Aligned with the present study’s orientation, this research stream investigates how pre-existing digital competence influences AI utilization patterns. Khalil and Alsenaidi’s (2024) work in this domain reveals that educators’ AI implementation strategies frequently remain constrained, while demonstrating how digital competence training cultivates both critical engagement and positive dispositions toward educational technology (Bedir Erişti and Freedman 2024).

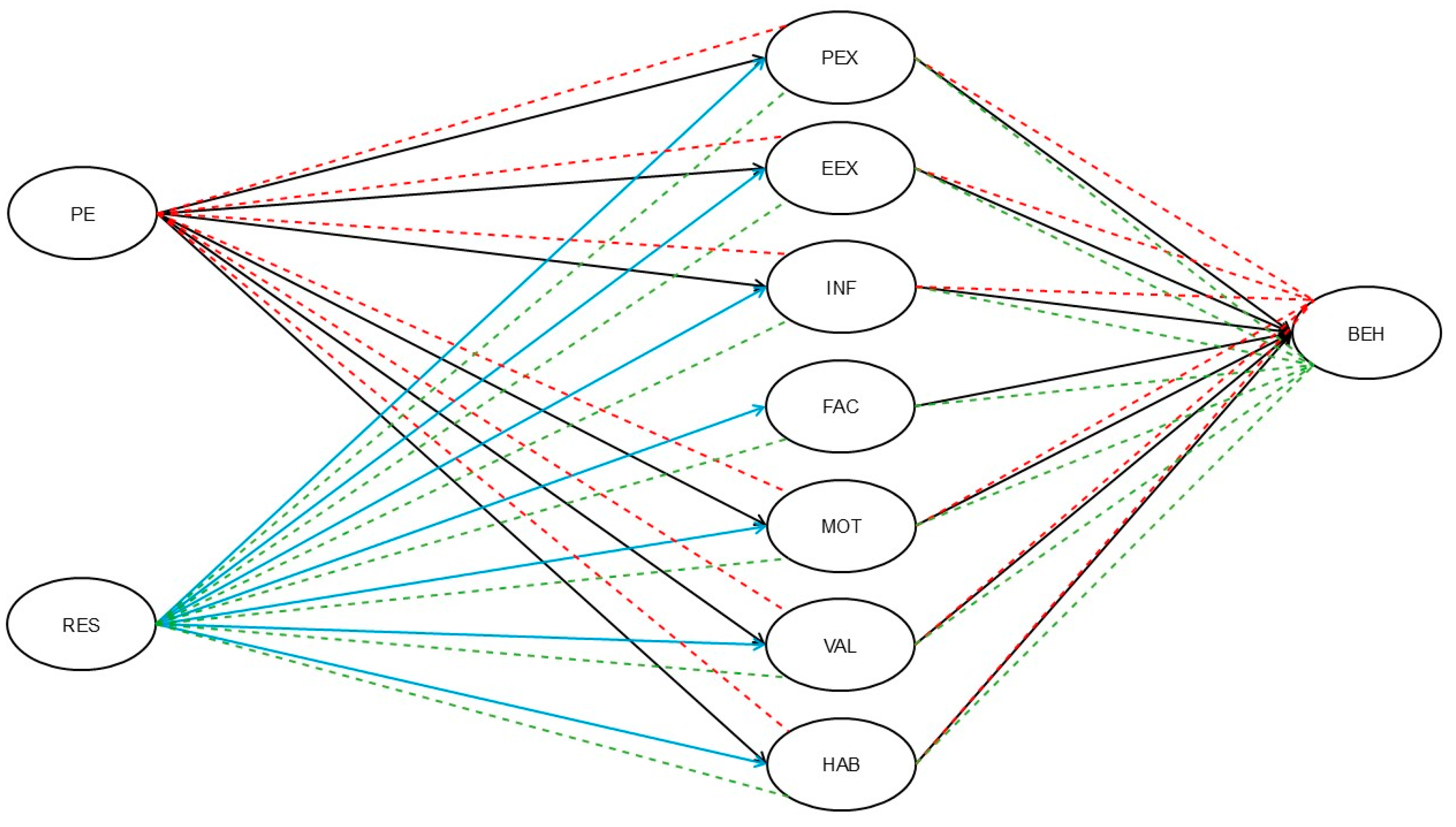

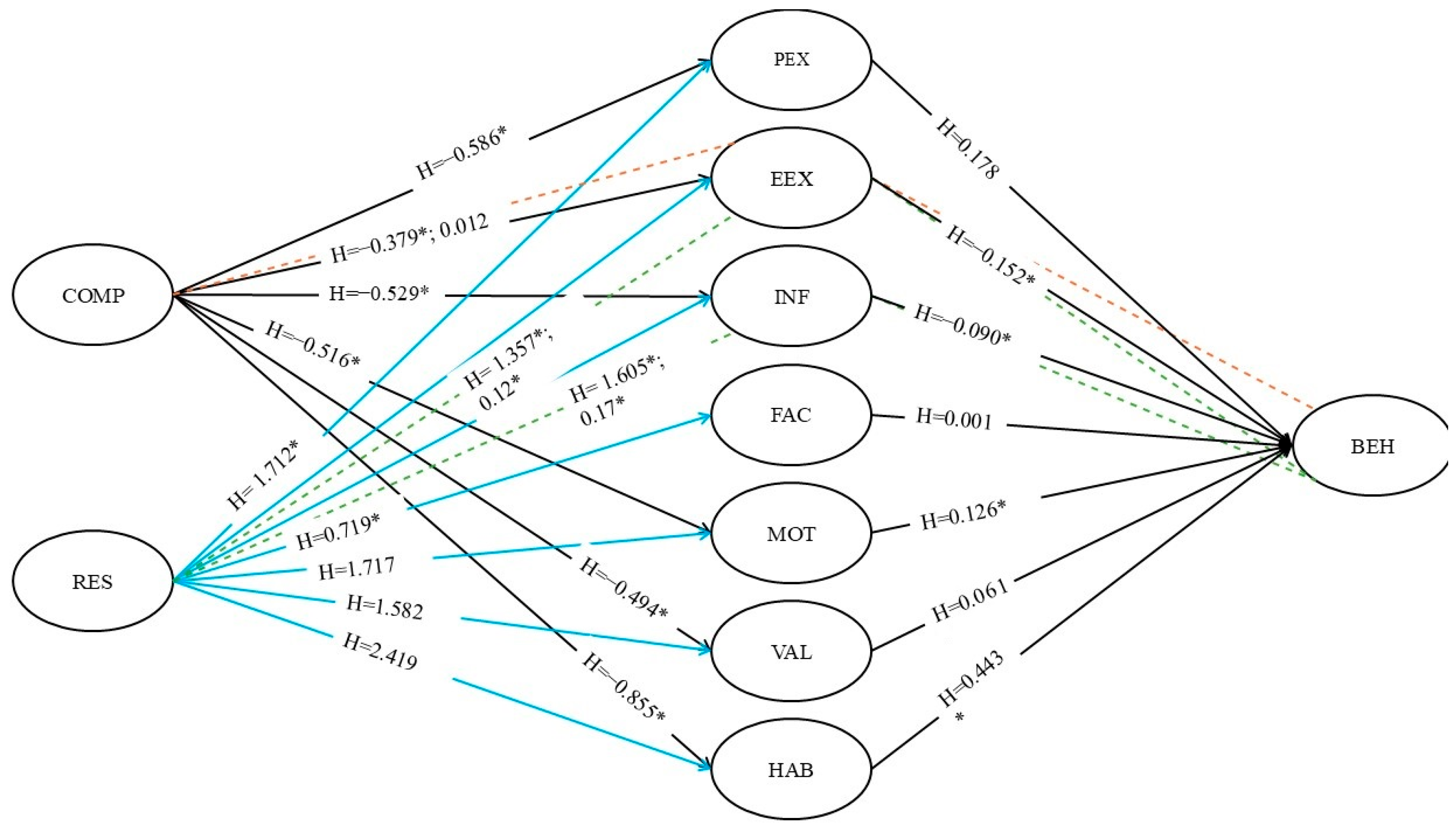

4. Objectives and Theory-Driven Hypotheses

5. Materials and Methods

5.1. Participants and Procedure

5.2. Research Instrument and Data Analysis

6. Results

6.1. Normality Assessment of Sample Distribution

6.2. Ad Hoc Validation of Research Instrument

7. Discussion

8. Conclusions

8.1. Future Research Directions

- Intervention Planning: The assessment of pre-service teachers’ prior knowledge reveals a widespread lack of familiarity with AI applications in education. This finding points to the need for a structured protocol focused on building competencies in educational AI implementation.

- Demographic Analysis: A critical research direction involves examining potential variations in AI-adoption patterns across sociodemographic groups. Such an analysis should investigate how strongly factors such as age, educational background, and technological exposure influence adoption.

8.2. Research Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- 4Docent.es. 2024. Cuaderno Docente con IA. Available online: https://4docent.es/ (accessed on 11 February 2025).

- Adelana, Owolabi Paul, Musa Adekunle Ayanwale, and Ismaila Temitayo Sanusi. 2024. Exploring Pre-Service Biology Teachers’ Intention to Teach Genetics Using an AI Intelligent Tutoring-Based System. Cogent Education 11: 2310976.

- Adell, Jordi. 1996. Internet en educación: Una gran oportunidad. Net Conexión 11: 44–47.

- Agus, Mirian, Giovanni Bonaiuti, and Arianna Marras. 2024. Psychometric validation of the Robotics Interest Questionnaire (RIQ) scale with Italian teachers. Journal of Science Education and Technology 33: 68–83.

- Alonso-Rodríguez, Ana María. 2024. Hacia un Marco Ético de la Inteligencia Artificial en la Educación. Teoría de la Educación. Revista Interuniversitaria 36: 79–98.

- Ayanwale, Musa Adekunle, Emmanuel Kwabena Frimpong, Oluwaseyi Aina Gbolade Opesemowo, and Ismaila Temitayo Sanusi. 2024. Exploring factors that support pre-service teachers’ engagement in learning artificial intelligence. Journal for STEM Education Research 8: 199–229.

- Aznar-Díaz, Isabel, Patricia Ayllón-Salas, Francisco D. Fernández-Martín, and María Ramos-Navas-Parejo. 2025. Exploring Predictors of Success in Massive Open Online Courses (MOOC). RIED-Revista Iberoamericana de Educación a Distancia 28: 239–257.

- Batanero, Carmen. 1998. Recursos para la educación estadística en Internet. Uno 15: 13–26.

- Bedir Erişti, Suzan Duygu, and Kerry Freedman. 2024. Integrating Digital Technologies and AI in Art Education: Pedagogical Competencies and the Evolution of Digital Visual Culture. Participatory Educational Research 11: 57–79.

- Bentler, Peter M., and Douglas G. Bonett. 1980. Significance tests and goodness of fit in the analysis of covariance structures. Psychological Bulletin 88: 588–606.

- Bolaño-García, Manuel, and Nicolás Duarte-Acosta. 2024. Una Revisión Sistemática del Uso de la IA en la Educación. Revista Colombiana de Cirugía 39: 51–63.

- Bollen, Kenneth A. 1989. Structural Equations with Latent Variables. Hoboken: John Wiley & Sons.

- Bozkurt, Aras. 2023. Unleashing the Potential of Generative AI, Conversational Agents and Chatbots in Educational Praxis. Open Praxis 15: 261–70.

- Bozkurt, Aras. 2024. Tell Me Your Prompts and I Will Make Them True. Open Praxis 16: 111–18.

- Brett, Jeanne M., and Fritz Drasgow. 2002. The Psychology of Work: Theoretically Based Empirical Research. Mahwah: Lawrence Erlbaum Associates.

- Caballero-García, Presentación Ángeles, Pilar Ester Mariñoso, Isabel Morales Jareño, and Emilio Cañadas Rodríguez. 2024. La IA como herramienta de creación de contenidos y personalización del proceso de enseñanza aprendizaje. In Metodologías Emergentes en la Investigación y Acción Educativa. Edited by Ana Belén Barragán, María del Mar Simón Márquez, José Jesús Gázquez Linares, Elena Martínez Casanova and Silvia Fernández Gea. Madrid: Dykinson, pp. 177–91.

- Centro de Servicios Informáticos y Redes de Comunicación. 2024. Inteligencia Artificial en la UGR: Microsoft Copilot. Universidad de Granada. Available online: https://csirc.ugr.es/informacion/noticias/microsoft-copilot (accessed on 8 February 2024).

- Cheung, Gordon W., Helena D. Cooper-Thomas, Rebecca S. Lau, and Linda C. Wang. 2024. Reporting reliability, convergent and discriminant validity with structural equation modeling: A review and best-practice recommendations. Asia Pacific Journal of Management 41: 745–83.

- Chiu, Thomas K. F., Benjamin Luke Moorhouse, Ching Sing Chai, and Murod Ismailov. 2023. Teacher Support and Student Motivation to Learn with Artificial Intelligence (AI) Based Chatbot. Interactive Learning Environments 32: 3240–56.

- Chiu, Thomas K. F., Zubair Ahmad, and Murat Çoban. 2024. Development and validation of teacher artificial intelligence (AI) competence self-efficacy (TAICS) scale. Education and Information Technologies 30: 6667–85.

- Clemente-Alcocer, Antonio A., Antonio Cabello-Cabrera, and Elizabeth Añorve-García. 2024. La IA en la Educación: Desafíos Éticos. Revista Latinoamericana de Ciencias Sociales y Humanidades 5: 464–72.

- Cochran, William Gemmell, and Eva C. Díaz. 1980. Técnicas de Muestreo. Mexico City: Compañía Editorial Continental.

- Cohen, Jacob. 1988. Statistical Power Analysis for the Behavioral Sciences, 2nd ed. New York: Routledge.

- Collado-Alonso, Rocío, Laura Picazo-Sánchez, Ana-Teresa López-Pastor, and Agustín García-Matilla. 2023. ¿Qué enseña el social media? Influencers y followers ante la educación informal en redes sociales. Revista Mediterránea de Comunicación 14: 259–70.

- Cortina, José M. 1993. What Is Coefficient Alpha? An Examination of Theory and Applications. Journal of Applied Psychology 78: 98–104.

- Cukurova, Mutlu, Xin Miao, and Richard Brooker. 2023. Adoption of Artificial Intelligence in Schools. In Artificial Intelligence in Education. AIED 2023. Edited by Ning Wang, Genaro Rebolledo-Mendez, Noboru Matsuda, Olga C. Santos and Vania Dimitrova. Cham: Springer, pp. 151–63.

- De Frutos, Nahia Delgado, Lucía Campo-Carrasco, Martín Sainz de la Maza, and José María Extabe-Urbieta. 2023. Application of artificial intelligence (AI) in education: Benefits and limitations of AI as perceived by primary, secondary, and higher education teachers. Revista Electrónica Interuniversitaria de Formación del Profesorado 27: 207–25.

- DeCarlo, Lawrence T. 1997. On the Meaning and Use of Kurtosis. Psychological Methods 2: 292–307.

- Del Moral-Pérez, M. Esther, Nerea López-Bouzas, and Jonathan Castañeda-Fernández. 2024. Transmedia skill derived from the process of converting films into educational games with augmented reality and artificial intelligence. Journal of New Approaches in Educational Research 13: 15.

- Faul, Franz, Edgar Erdfelder, Albert Buchner, and Axel-Georg Lang. 2009. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods 41: 1149–60.

- Galindo-Domínguez, Héctor, Nahia Delgado, Lucía Campo, and Martín Sainz de la Maza. 2024. Uso de ChatGpt en educación superior. Un análisis en función del género, rendimiento académico, año y grado universitario del alumnado. Red U 22: 16–30.

- Galindo-Domínguez, Héctor, Nahia Delgado, María-Victoria Urruzola, Jose-María Etxabe, and Lucía Campo. 2025. Using artificial intelligence to promote adolescents’ learning motivation. A longitudinal intervention from the self-determination theory. Journal of Computer Assisted Learning 41: e70020.

- García Cabrero, Benilde, Javier Loredo Enríquez, and Guadalupe Carranza Peña. 2008. Análisis de la práctica educativa de los docentes: Pensamiento, interacción y reflexión. Revista Electrónica de Investigación Educativa 10: 1–15.

- Gerbing, David W., and James C. Anderson. 1984. On the Meaning of Within-Factor Correlated Measurement Errors. Journal of Consumer Research 11: 572–80.

- Costa, Guilherme Gonçalves, Wilton J. D. Nascimento Júnior, Murilo Nícolas Mombelli, and Gildo Girotto Júnior. 2024. Revisiting a teaching sequence on the topic of electrolysis: A comparative study with the use of artificial intelligence. Journal of Chemical Education 101: 3255–63.

- Hair, Joseph F., G. Tomas M. Hult, Christian Ringle, and Marko Sarstedt. 2016. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM). London: SAGE.

- Hair, Joseph F., William C. Black, Barry J. Babin, and Rolph E. Anderson. 2019. Multivariate Data Analysis. Boston: Cengage.

- Hair, Joseph F., G. Tomas M. Hult, Christian Ringle, Marko Sarstedt, Nicholas P. Danks, and Siegfried Roy. 2022. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook. Cham: Springer.

- Hargreaves, Andy, and Michael Fullan. 2012. Professional Capital: Transforming Teaching in Every School. New York: Teachers College Press.

- Henseler, Jörg, Christian M. Ringle, and Marko Sarstedt. 2015. A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science 43: 115–35.

- Hermida, Rafael. 2015. The Problem of Allowing Correlated Errors in Structural Equation Modeling: Concerns and Considerations. Computational Methods in Social Sciences 3: 5–17.

- Hezam, Abdulrahman Mokbel Mahyoub, and Abdulelah Alkhateeb. 2024. Short stories and AI tools: An exploratory study. Theory and Practice in Language Studies 14: 2053–62.

- Hu, Li-tze, and Peter M. Bentler. 1999. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal 6: 1–55.

- Jefatura del Estado. 2018. Ley Orgánica 3/2018, de Protección de Datos Personales y Garantía de los Derechos Digitales. Madrid: Boletín Oficial del Estado (BOE), No. 294 (December 6), pp. 1–118. Available online: https://www.boe.es/buscar/pdf/2018/BOE-A-2018-16673-consolidado.pdf (accessed on 20 March 2025).

- Jöreskog, Karl G., and Dag Sörbom. 1993. LISREL 8: Structural Equation Modeling with the SIMPLIS Command Language. Mahwah: Lawrence Erlbaum Associates.

- Kalniņa, Daiga, Dita Nīmante, and Sanita Baranova. 2024. Artificial intelligence for higher education: Benefits and challenges for pre-service teachers. Frontiers in Education 9: 1501819.

- Karran, Alex J., Patrick Charland, J. T. Martineau, A. de Arana, A. M. Lesage, Sylvain Senecal, and Pierre-Majorique Leger. 2024. Multi-stakeholder perspective on responsible artificial intelligence and acceptability in education. arXiv, arXiv:2402.15027.

- Kenny, David A. 2011. Respecification of Latent Variable Models. Structural Equation Modeling. Available online: https://davidakenny.net/cm/respec.htm (accessed on 20 March 2025).

- Kenny, David A., and Silvia Milan. 2012. Identification: A nontechnical discussion of a technical issue. In Handbook of Structural Equation Modeling. Edited by Rick H. Hoyle. New York: The Guilford Press, pp. 145–63.

- Khalil, Hanan, and Said Alsenaidi. 2024. Teachers’ digital competencies for effective AI integration in higher education in Oman. Journal of Education and E-Learning Research 11: 698–707.

- Kim, Jae Hae. 2019. Multicollinearity and Misleading Statistical Results. Korean Journal of Anesthesiology 72: 558–69.

- Kline, Rex B. 2016. Principles and Practice of Structural Equation Modeling, 4th ed. New York: Guilford Press.

- Kocakaya, Serhat, and Fatih Kocakaya. 2014. A structural equation modeling on factors of how experienced teachers affect the students’ science and mathematics achievements. Education Research International 2014: 490371.

- Lee, Y. 2023. A study on the effectiveness analysis of liberal arts education for the improvement of artificial intelligence literacy of pre-service teachers. Journal of Computer Education Research 26: 73–89.

- Lin, Hua. 2022. Influences of Artificial Intelligence in Education on Teaching Effectiveness. International Journal of Emerging Technologies in Learning (iJET) 17: 144–56.

- Lü, Hong, Ling He, Hao Yu, Tong Pan, and Kai Fu. 2024. A Study on Teachers’ Willingness to Use Generative AI. Sustainability 16: 7216.

- MacCallum, Robert C., and Sehee Hong. 1997. Power analysis in covariance structure modeling using GFI and AGFI. Multivariate Behavioral Research 32: 193–210.

- Mardia, Kanti V. 1970. Measures of Multivariate Skewness and Kurtosis with Applications. Biometrika 57: 519–30.

- Mardia, Kanti V. 1974. Applications of Some Measures of Multivariate Skewness and Kurtosis in Testing Normality and Robustness Studies. Sankhyā: The Indian Journal of Statistics Series B 36: 115–28.

- Marsh, Herbert W., and Dennis Hocevar. 1985. Application of Confirmatory Factor Analysis to the Study of Self Concept: First and Higher Order Factor Models and Their Invariance Across Groups. Psychological Bulletin 97: 562–82.

- Martínez-Domingo, José-Antonio, José-María Romero-Rodríguez, Arturo Fuentes-Cabrera, and Inmaculada Aznar-Díaz. 2024. Los Influencers y su Papel en la Educación: Una Revisión Sistemática. Manila: Educar.

- Ministerio de Universidades. 2022. Libro de Trabajo: Academica21_EEUU. Estudiantes en las Universidades Españolas. Available online: https://public.tableau.com/views/Academica21_EEU/InfografiaEEU?%3AshowVizHome=no&%3Aembed=true (accessed on 20 March 2025).

- Montero, Isabel, and Orlando G. León. 2005. A guide for naming research studies in Psychology. International Journal of Clinical and Health Psychology 5: 115–27.

- Mora-Cantallops, Marçal, Andreia Inamorato dos Santos, Cristina Villalonga-Gómez, Juan Ramón Lacalle Remigio, Juan Camarillo Casado, José Manuel Sota Eguizabal, Juan Ramón Velasco, and Pedro Miguel Ruiz Martínez. 2022. The Digital Competence of Academics in Spain: A Study Based on the European Frameworks DigCompEdu and OpenEdu. Luxembourg: Publications Office of the European Union.

- Moreira-Zambrano, Mayra del Carmen, Yurelquis Marzo-Villalón, and Segress García-Hevia. 2025. IA en primer año de bachillerato técnico: Guía didáctica para su uso ético y eficaz. Scientific Journal MQRInvestigar 9: 1–35.

- Muijs, Daniel. 2022. Doing Quantitative Research in Education with IBM SPSS Statistics, 3rd ed. London: SAGE.

- Núñez, Raúl Prada, William Rodrigo Avendaño Castro, and Cesar Augusto Hernández Suarez. 2022. Globalización y cultura digital en entornos educativos. Revista Boletín Redipe 11: 262–72.

- Peng, Yi, Yanyu Wang, and Jie Hu. 2023. Examining ICT attitudes, use and support in blended learning settings for students’ reading performance: Approaches of artificial intelligence and multilevel model. Computers & Education 203: 104846.

- Pérez Ibáñez, J. D. 2024. Curso de Evaluación Competencial con IA. Available online: https://jose-david.com/ (accessed on 20 March 2025).

- Piette, Jacques. 2000. La educación en medios de comunicación y las nuevas tecnologías. Comunicar 14: 17–22.

- Quicaño-Arones, César, Carla León-Fernández, and Antonio Moquillaza-Vizarreta. 2019. Un modelo para medir el comportamiento en la aceptación tecnológica del servicio de Internet en hoteles peruanos basado en UTAUT2. Caso ‘Casa Andina’. 3C TIC: Cuadernos de Desarrollo Aplicados a las TIC 8: 12–35.

- Rahimi, Ali Reza, and Zahra Mosalli. 2024. The Role of 21-Century Digital Competence in Shaping Pre-Service Language Teachers’ Skills. Journal of Computers in Education 12: 165–89.

- Rosseel, Yves. 2012. lavaan: An R Package for Structural Equation Modeling. Journal of Statistical Software 48: 1–36.

- Russo, Giuseppe Maria, Patricia Amelia Tomei, Bernardo Serra, and Sylvia Mello. 2021. Differences in the use of 5- or 7-point Likert scale: An application in food safety culture. Organizational Cultures: An International Journal 21: 1–17.

- Saifi, Sajuddin, Shaista Tanveer, Mohd Arwab, Dori Lal, and Nabila Mirza. 2025. Exploring the persistence of Open AI adoption among users in Indian higher education: A fusion of TCT and TTF model. In Education and Information Technologies. Cham: Springer.

- Satorra, Albert, and Peter M. Bentler. 1994. Corrections to Test Statistics and Standard Errors in Covariance Structure Analysis. In Latent Variables Analysis: Applications for Developmental Research. Edited by Alexander von Eye and Clifford C. Clogg. Thousand Oaks: SAGE, pp. 399–419.

- Skantz-Åberg, Emma, Anna Lantz-Andersson, Monica Lundin, and Peter Williams. 2022. Teachers’ Professional Digital Competence. Cogent Education 9: 1–23.

- Suárez Rodríguez, José M., and José M. Jornet Meliá. 1994. Evaluación referida al criterio: Construcción de un test criterial de clase. In Problemas y Métodos de Investigación en Educación Personalizada. Edited by Víctor García-Hoz Rosales. Madrid: Rialp, pp. 419–43.

- Suconota-Pintado, Luis, Rubén Sánchez-Prado, Carlos Orellana-Peláez, and Walter Ávila-Aguilar. 2023. IA y Sostenibilidad. Magazine de las Ciencias 8: 12–28.

- Svoboda, Petr. 2024. Teachers’ Digital Competencies. TEM Journal 13: 2195–207.

- Tenberga, Ilze, and Linda Daniela. 2024. Artificial Intelligence Literacy Competencies for Teachers Through Self-Assessment Tools. Sustainability 16: 10386.

- Tong, Ying, and Li Zhang. 2023. Discovering the Next Decade’s Synthetic Biology Research Trends with ChatGPT. Synthetic and Systems Biotechnology 8: 220–23.

- Universidad de Huelva. 2022. Copilot. Available online: https://www.uhu.es/copilot (accessed on 1 February 2025).

- Universidad de Sevilla. 2024. Guías Copilot. Microsoft 365 Universidad de Sevilla. Available online: https://m365.us.es/es/guias/copilot (accessed on 20 March 2025).

- Usán-Supervía, Pedro, and Raquel Castellanos-Vega. 2024. Fomento de la Motivación Académica y la Competencia Digital. Revista Electrónica de Investigación Psicoeducativa 22: 419–40.

- Van den Berg, Geesje, and Elize du Plessis. 2023. ChatGPT and generative AI: Possibilities for its contribution to lesson planning, critical thinking, and openness in teacher education. Education Sciences 13: 998.

- Venkatesh, Viswanath, James Y. L. Thong, and Xin Xu. 2012. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Quarterly 36: 157–78.

- Ventura-León, José L., and Tomás Caycho-Rodríguez. 2017. El Coeficiente Omega: Un Método Alternativo para la Estimación de la Confiabilidad. Revista Latinoamericana de Ciencias Sociales, Niñez y Juventud 15: 625–27.

- Voogt, Joke. 2010. Teacher Factors Associated with Innovative Curriculum Goals and Pedagogical Practices. Journal of Computer Assisted Learning 26: 453–64.

- Wang, Ying, and Lin Sun. 2024. Research on the Influence Mechanism of the Willingness to Apply Generative AI. New Explorations in Education and Teaching 2: 54–57.

- Wu, Guangdong, Cong Liu, Xianbo Zhao, and Jian Zuo. 2017. Investigating the relationship between communication-conflict interaction and project success among construction project teams. International Journal of Project Management 35: 1466–82.

- Zawacki-Richter, Olaf, Victoria I. Marín, Melissa Bond, and Franziska Gouverneur. 2019. Systematic review of research on artificial intelligence applications in higher education—where are the educators? International Journal of Educational Technology in Higher Education 16: 39.

- Zhang, Yuhong. 2024. Research on the connotation, dilemmas, and practical path of digital and intelligent competency of university teachers in the ‘Big Data + Artificial Intelligence’ era. Journal of Computer Technology and Electronic Research 1: 1–14.

- Zhang, Chengming, Jessica Schießl, Lea Plößl, Florian Hofmann, and Michaela Gläser-Zikuda. 2023. Acceptance of artificial intelligence among pre-service teachers: A multi-group analysis. International Journal of Educational Technology in Higher Education 20: 49.

| Variable | M | SD | VIF | Tolerance | K-S-L | S-W | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|---|
| ENG1 | 4.110 | 1.657 | 1.418 | 0.705 | 0.168 *** | 0.905 *** | 0.203 | −0.853 |
| ENG2 | 3.480 | 1.344 | 1.450 | 0.690 | 0.320 *** | 0.810 *** | 1.251 | 1.223 |
| ENG3 | 3.700 | 1.601 | 1.510 | 0.662 | 0.184 *** | 0.927 *** | 0.161 | −1.066 |
| ENG4 | 2.590 | 1.449 | 1.210 | 0.826 | 0.238 *** | 0.877 *** | 0.768 | −0.167 |
| RES1 | 3.820 | 1.499 | 1.405 | 0.712 | 0.211 *** | 0.913 *** | 0.19 | −1.114 |
| RES2 | 3.930 | 1.854 | 1.400 | 0.714 | 0.155 *** | 0.923 *** | 0.068 | −1.209 |
| RES3 | 3.910 | 1.533 | 1.329 | 0.753 | 0.157 *** | 0.947 *** | 0.218 | −0.588 |
| PEX1 | 4.150 | 0.828 | 1.569 | 0.637 | 0.252 *** | 0.802 *** | −1.042 | 1.543 |
| PEX3 | 4.160 | 0.842 | 1.491 | 0.671 | 0.261 *** | 0.796 *** | −1.132 | 1.744 |
| PEX4 | 3.520 | 1.073 | 1.300 | 0.769 | 0.208 *** | 0.899 *** | −0.377 | −0.51 |
| EEX1 | 3.970 | 0.851 | 2.097 | 0.477 | 0.259 *** | 0.846 *** | −0.666 | 0.393 |
| EEX2 | 3.860 | 0.858 | 1.857 | 0.538 | 0.268 *** | 0.855 *** | −0.608 | 0.406 |
| EEX3 | 3.980 | 0.857 | 2.091 | 0.478 | 0.266 *** | 0.841 *** | −0.746 | 0.58 |
| EEX4 | 3.850 | 0.857 | 1.839 | 0.544 | 0.269 *** | 0.857 *** | −0.585 | 0.319 |
| INF1 | 3.240 | 0.968 | 2.441 | 0.410 | 0.250 *** | 0.888 *** | −0.071 | 0.002 |
| INF2 | 3.260 | 0.949 | 3.037 | 0.329 | 0.262 *** | 0.877 *** | −0.086 | 0.173 |
| INF3 | 3.170 | 0.952 | 2.350 | 0.426 | 0.263 *** | 0.875 *** | −0.104 | 0.247 |
| FAC1 | 4.020 | 0.816 | 1.634 | 0.612 | 0.288 *** | 0.824 *** | −0.791 | 0.709 |
| FAC2 | 3.770 | 0.878 | 1.569 | 0.637 | 0.262 *** | 0.869 *** | −0.464 | −0.088 |
| FAC3 | 4.070 | 0.743 | 1.359 | 0.736 | 0.287 *** | 0.810 *** | −0.728 | 1.116 |
| FAC4 | 3.90 | 0.84 | 1.255 | 0.797 | 0.311 *** | 0.828 *** | −0.827 | 0.881 |
| MOT1 | 3.80 | 0.862 | 2.391 | 0.418 | 0.220 *** | 0.861 *** | −0.324 | −0.036 |
| MOT2 | 3.820 | 0.806 | 2.100 | 0.476 | 0.247 *** | 0.855 *** | −0.288 | −0.025 |
| MOT3 | 3.750 | 0.858 | 2.585 | 0.387 | 0.227 *** | 0.865 *** | −0.311 | 0.003 |
| VAL1 | 3.370 | 0.949 | 2.154 | 0.464 | 0.240 *** | 0.888 *** | −0.118 | −0.059 |
| VAL2 | 3.420 | 0.901 | 2.942 | 0.340 | 0.262 *** | 0.871 *** | −0.062 | 0.11 |
| VAL3 | 3.420 | 0.871 | 2.648 | 0.378 | 0.269 *** | 0.865 *** | −0.029 | 0.189 |
| HAB1 | 3.280 | 1.165 | 1.710 | 0.585 | 0.194 *** | 0.907 *** | −0.325 | −0.673 |
| HAB2 | 2.420 | 1.249 | 1.730 | 0.578 | 0.203 *** | 0.877 *** | 0.515 | −0.767 |
| HAB3 | 3.110 | 1.033 | 1.417 | 0.706 | 0.218 *** | 0.905 *** | −0.222 | −0.304 |
| BEH1 | 3.870 | 0.832 | 1.642 | 0.609 | 0.284 *** | 0.839 *** | −0.744 | 1.083 |
| BEH2 | 3.010 | 1.058 | 1.732 | 0.577 | 0.192 *** | 0.915 *** | 0.022 | −0.513 |
| BEH3 | 3.440 | 0.971 | 2.269 | 0.441 | 0.226 *** | 0.887 *** | −0.487 | 0.082 |
| Multivariate | | | | | | | 111.490 *** | 1405.524 *** |
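The VIF and Tolerance columns above are two views of the same collinearity diagnostic, since tolerance is the reciprocal of the variance inflation factor. The minimal sketch below recomputes tolerance for the first four indicators from their reported VIF values. The cut-off of 5 used for the flag is an assumed rule of thumb, not a threshold stated in this excerpt.

```python
# VIF and tolerance pairs copied from the table above (first four indicators).
reported = {
    "ENG1": (1.418, 0.705),
    "ENG2": (1.450, 0.690),
    "ENG3": (1.510, 0.662),
    "ENG4": (1.210, 0.826),
}

VIF_CUTOFF = 5.0  # assumed rule-of-thumb threshold, not taken from the article


def tolerance_from_vif(vif: float) -> float:
    """Tolerance is the reciprocal of the variance inflation factor."""
    return 1.0 / vif


for name, (vif, tol) in reported.items():
    computed = tolerance_from_vif(vif)
    flag = "ok" if vif < VIF_CUTOFF else "check"
    print(f"{name}: VIF={vif:.3f} tolerance={computed:.3f} (reported {tol:.3f}) {flag}")
```

The recomputed tolerances agree with the reported ones to three decimals for these rows.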

| Latent Variable | Indicator | Control | λ | R² | AVE | α | ω | CR |
|---|---|---|---|---|---|---|---|---|
| Professional Engagement (ENG) | ENG1 | | 0.669 | 0.448 | 0.405 | 0.721 | 0.729 | 0.728 |
| | ENG2 | | 0.630 | 0.397 | | | | |
| | ENG3 | | 0.717 | 0.514 | | | | |
| | ENG4 | | 0.510 | 0.260 | | | | |
| Digital Resource Creation (RES) | RES1 | | 0.702 | 0.492 | 0.444 | 0.700 | 0.706 | 0.705 |
| | RES2 | | 0.660 | 0.436 | | | | |
| | RES3 | | 0.636 | 0.405 | | | | |
| Performance Expectancy (PEX) | PEX1 | PEX3 | 0.680 | 0.463 | 0.419 | 0.707 | 0.708 | 0.683 |
| | PEX3 | PEX1 | 0.614 | 0.377 | | | | |
| | PEX4 | | 0.646 | 0.417 | | | | |
| Effort Expectancy (EEX) | EEX1 | EEX3 | 0.738 | 0.545 | 0.598 | 0.855 | 0.855 | 0.856 |
| | EEX2 | EEX4 | 0.816 | 0.666 | | | | |
| | EEX3 | EEX1 | 0.733 | 0.538 | | | | |
| | EEX4 | EEX2 | 0.802 | 0.643 | | | | |
| Social Influence (INF) | INF1 | INF2 | 0.804 | 0.647 | 0.710 | 0.884 | 0.886 | 0.880 |
| | INF2 | INF1 | 0.892 | 0.796 | | | | |
| | INF3 | | 0.828 | 0.686 | | | | |
| Facilitating Conditions (FAC) | FAC1 | FAC2 | 0.666 | 0.443 | 0.410 | 0.742 | 0.748 | 0.733 |
| | FAC2 | FAC1 | 0.715 | 0.511 | | | | |
| | FAC3 | | 0.628 | 0.395 | | | | |
| | FAC4 | | 0.539 | 0.290 | | | | |
| Hedonic Motivation (MOT) | MOT1 | MOT2 | 0.894 | 0.799 | 0.733 | 0.871 | 0.873 | 0.892 |
| | MOT2 | MOT1 | 0.863 | 0.745 | | | | |
| | MOT3 | | 0.810 | 0.657 | | | | |
| Price Value (VAL) | VAL1 | VAL3 | 0.793 | 0.629 | 0.525 | 0.881 | 0.882 | 0.768 |
| | VAL2 | | 0.686 | 0.471 | | | | |
| | VAL3 | VAL1 | 0.690 | 0.476 | | | | |
| Habits (HAB) | HAB1 | | 0.826 | 0.683 | 0.739 | 0.770 | 0.781 | 0.895 |
| | HAB2 | HAB3 | 0.860 | 0.739 | | | | |
| | HAB3 | HAB2 | 0.892 | 0.796 | | | | |
| Behavioral Intention (BEH) | BEH1 | BEH2 | 0.773 | 0.597 | 0.627 | 0.797 | 0.815 | 0.834 |
| | BEH2 | BEH1 | 0.786 | 0.617 | | | | |
| | BEH3 | | 0.816 | 0.666 | | | | |
| Total | | | N/A | N/A | N/A | 0.906 | 0.895 | N/A |
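The AVE and CR columns above can be recovered from the standardized loadings (λ) alone. The sketch below assumes the usual formulas: AVE is the mean of the squared loadings, and composite reliability is the squared sum of loadings divided by that quantity plus the summed error variances, with each error variance taken as 1 − λ². The function names are illustrative, and the excerpt does not state which formulas the authors used.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Each error variance is taken as 1 - loading^2, which assumes standardized
    loadings and ignores any correlated errors.
    """
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error)


# Loadings copied from the table above.
eng = [0.669, 0.630, 0.717, 0.510]  # Professional Engagement
res = [0.702, 0.660, 0.636]         # Digital Resource Creation

print(round(ave(eng), 3), round(composite_reliability(eng), 3))  # 0.405 0.728
print(round(ave(res), 3), round(composite_reliability(res), 3))  # 0.444 0.705
```

Both constructs reproduce the reported AVE and CR to three decimals under these assumptions.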

| | ENG | RES | PEX | EEX | INF | FAC | MOT | HAB | VAL | BEH |
|---|---|---|---|---|---|---|---|---|---|---|
| ENG | 0.14 | | | | | | | | | |
| RES | 0.89 | 0.14 | | | | | | | | |
| PEX | 0.17 | 0.21 | 0.34 | | | | | | | |
| EEX | 0.22 | 0.22 | 0.75 | 0.29 | | | | | | |
| INF | 0.22 | 0.2 | 0.58 | 0.38 | 0.19 | | | | | |
| FAC | 0.3 | 0.29 | 0.61 | 0.76 | 0.36 | 0.25 | | | | |
| MOT | 0.25 | 0.2 | 0.7 | 0.61 | 0.43 | 0.62 | 0.259 | | | |
| VAL | 0.23 | 0.21 | 0.57 | 0.42 | 0.62 | 0.22 | 0.523 | 0.24 | | |
| HAB | 0.21 | 0.22 | 0.48 | 0.41 | 0.47 | 0.45 | 0.513 | 0.59 | 0.19 | |
| BEH | 0.165 | 0.155 | 0.735 | 0.494 | 0.558 | 0.456 | 0.669 | 0.873 | 0.552 | N/A |

| Factor | Levene’s Test | Independent Sample t Test |
|---|---|---|
| ENG | 10.532 ** | −12.933 *** |
| RES | 16.467 *** | −28.483 *** |
| TPEX | 3.552 | −2.903 ** |
| TEEX | 0.087 | −3.011 ** |
| INF | 4.532 * | −2.078 * |
| TFAC | 0.685 | −4.193 *** |
| TMOT | 2.652 | −1.805 |
| VAL | 8.420 ** | −3.025 ** |
| HAB | 5.648 * | −1.398 |
| BEH | 4.110 * | −0.871 |
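The table above pairs Levene's test with an independent-samples t test. A common reading is that a significant Levene statistic leads to the unequal-variance (Welch) form of the t test, and a non-significant one to the pooled form. The sketch below shows both computations in plain Python. The two groups are invented scores for illustration only, because the grouping variable and raw data are not part of this excerpt.

```python
from math import sqrt
from statistics import mean, variance


def t_statistic(a, b, equal_var):
    """Return (t, df) for two independent samples.

    equal_var=True gives the pooled (Student) test; equal_var=False gives
    Welch's test with Satterthwaite degrees of freedom.
    """
    na, nb = len(a), len(b)
    va, vb = variance(a), variance(b)  # sample variances (n - 1 denominator)
    diff = mean(a) - mean(b)
    if equal_var:
        pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
        se = sqrt(pooled * (1 / na + 1 / nb))
        df = na + nb - 2
    else:
        qa, qb = va / na, vb / nb
        se = sqrt(qa + qb)
        df = (qa + qb) ** 2 / (qa ** 2 / (na - 1) + qb ** 2 / (nb - 1))
    return diff / se, df


# Hypothetical Likert-type scores for two groups (not data from the study).
group_low = [2, 3, 3, 4, 2, 3]
group_high = [4, 5, 3, 5, 4, 5]

levene_significant = True  # assumed outcome of a prior Levene's test
t, df = t_statistic(group_low, group_high, equal_var=not levene_significant)
print(f"t = {t:.3f}, df = {df:.2f}")
```

With equal group sizes the two forms give the same t value and differ only in degrees of freedom. The choice matters more when group sizes and variances both differ.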

| Hypothesis | Relationship | β | SE | z | p | Status |
|---|---|---|---|---|---|---|
| H1A | ENG → PEX | −0.586 | 0.056 | −1.434 | <0.001 | Confirmed |
| H1B | ENG → EEX | −0.379 | 0.041 | −9.267 | <0.001 | Confirmed |
| H1C | ENG → INF | −0.529 | 0.053 | −9.937 | <0.001 | Confirmed |
| H1D | ENG → MOT | −0.516 | 0.053 | −9.710 | <0.001 | Confirmed |
| H1E | ENG → VAL | −0.494 | 0.051 | −9.637 | <0.001 | Confirmed |
| H1F | ENG → HAB | −0.855 | 0.082 | −1.394 | <0.001 | Confirmed |
| H2A | RES → PEX | 1.712 | 0.099 | 17.261 | <0.001 | Confirmed |
| H2B | RES → EEX | 1.357 | 0.079 | 17.238 | <0.001 | Confirmed |
| H2C | RES → INF | 1.605 | 0.096 | 16.797 | <0.001 | Confirmed |
| H2D | RES → FAC | 0.719 | 0.038 | 19.169 | <0.001 | Confirmed |
| H2E | RES → MOT | 1.717 | 0.098 | 17.572 | <0.001 | Confirmed |
| H2F | RES → VAL | 1.582 | 0.093 | 17.024 | <0.001 | Confirmed |
| H2G | RES → HAB | 2.419 | 0.141 | 17.187 | <0.001 | Confirmed |
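The hypothesis table above can be checked for internal consistency. This sketch assumes the three numeric columns before p hold a path estimate, its standard error, and a critical ratio, which is an interpretation rather than something the table states. On that reading the third value should be close to the first divided by the second. The 2% tolerance is an assumed allowance for rounding in the printed values.

```python
# Rows copied from the table above: (first, second, third numeric column).
rows = {
    "H1A": (-0.586, 0.056, -1.434),
    "H1B": (-0.379, 0.041, -9.267),
    "H1C": (-0.529, 0.053, -9.937),
    "H2A": (1.712, 0.099, 17.261),
    "H2D": (0.719, 0.038, 19.169),
}

REL_TOL = 0.02  # assumed allowance for rounding of the printed values


def critical_ratio(estimate, std_error):
    """Estimate divided by its standard error."""
    return estimate / std_error


def consistent(estimate, std_error, reported):
    """True when the reported value is within REL_TOL of estimate / std_error."""
    ratio = critical_ratio(estimate, std_error)
    return abs(ratio - reported) <= REL_TOL * abs(ratio)


for label, (est, se, reported) in rows.items():
    ratio = critical_ratio(est, se)
    verdict = "matches" if consistent(est, se, reported) else "differs"
    print(f"{label}: estimate/SE = {ratio:.3f}, reported = {reported:.3f} ({verdict})")
```

A row that fails the check is only a prompt to compare it against the published table. It is not evidence of an error in the analysis.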

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Morales-Cevallos, M.B.; Alonso-García, S.; Martínez-Menéndez, A.; Victoria-Maldonado, J.J. Artificial Intelligence Adoption Amongst Digitally Proficient Trainee Teachers: A Structural Equation Modelling Approach. Soc. Sci. 2025, 14, 355. https://doi.org/10.3390/socsci14060355
