Climate Change Disinformation on Social Media: A Meta-Synthesis on Epistemic Welfare in the Post-Truth Era

Abstract

1. Introduction

The study pursues three objectives:

- To define and critically examine the concept of epistemic welfare in relation to climate change disinformation in the post-truth era.

- To identify and analyze the strategies used to disseminate climate change disinformation across global social media ecosystems.

- To evaluate the implications of disinformation for public understanding, policy development, and the integrity of global scientific consensus.

2. Conceptual Discourse

2.1. Epistemic Welfare and the Threat of Disinformation in the Digital Age

2.2. Post-Truth and the Crisis of Epistemic Welfare in a Disinformation Age

3. Theoretical Framework

4. Methodology

4.1. Research Design

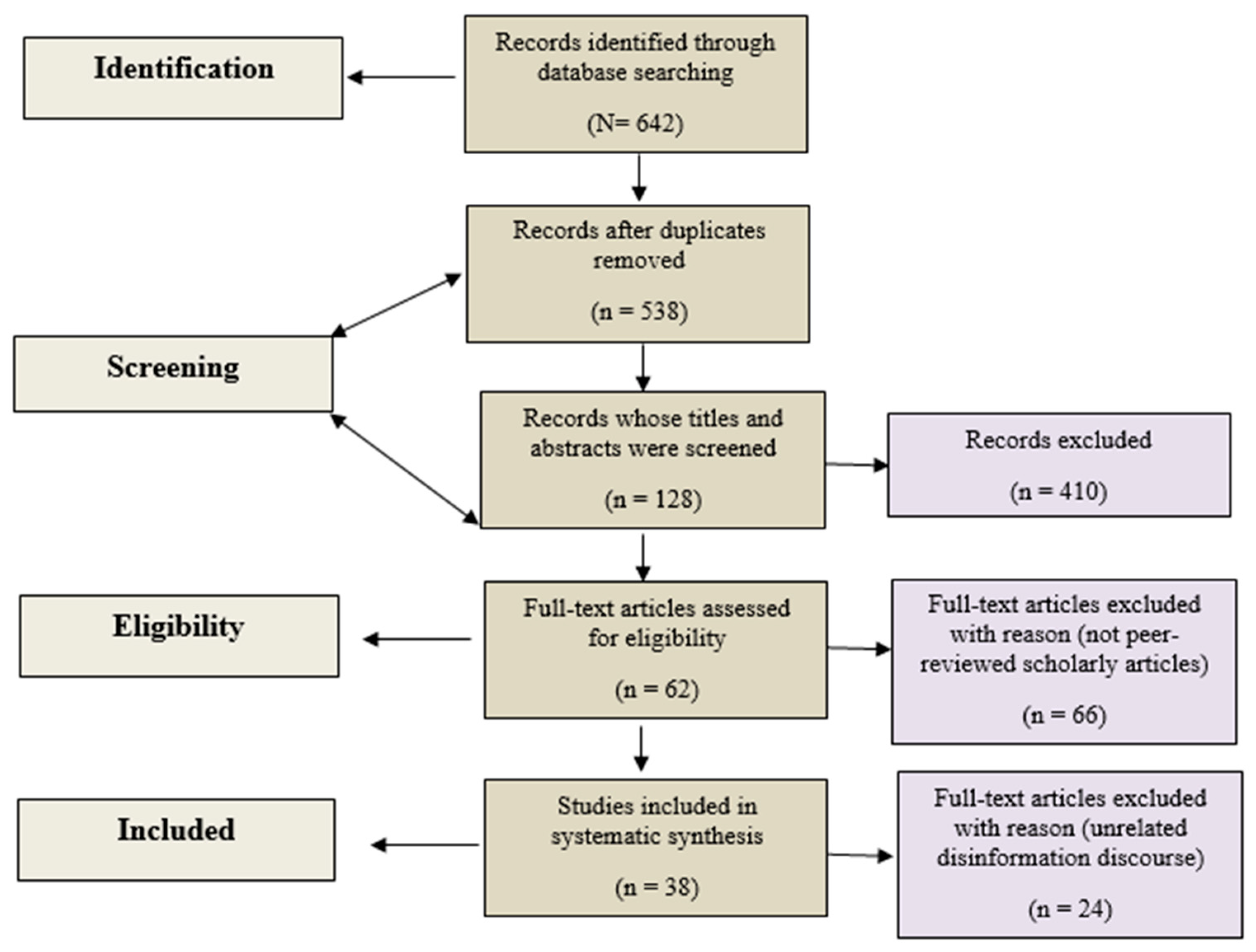

4.2. Inclusion and Exclusion Criteria

4.3. Data Extraction and Coding

4.4. Synthesis and Interpretation

5. Results and Discussion

5.1. Results

5.2. Visual Synthesis of Dominant Themes

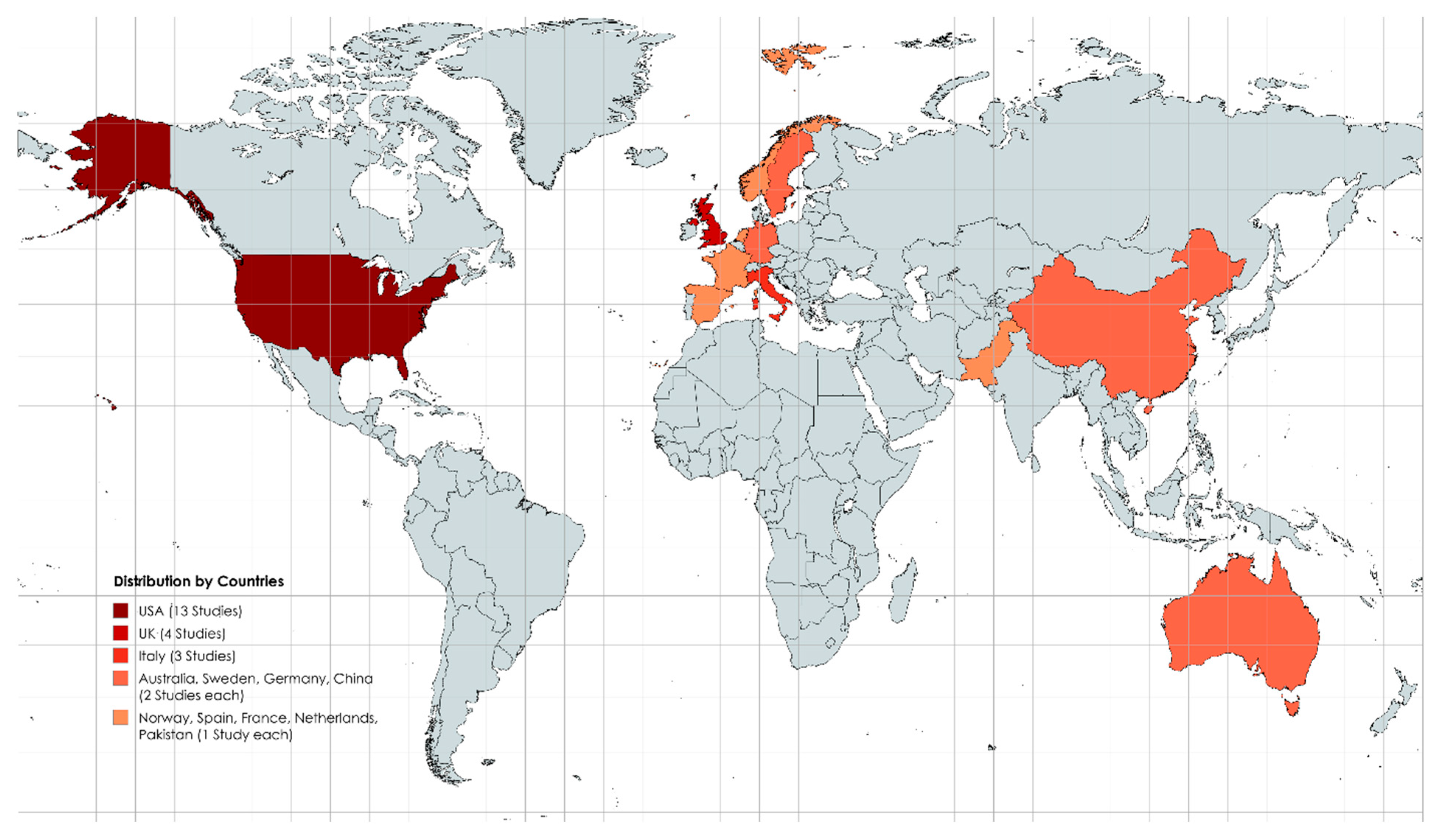

5.3. Spatial Mappings

5.4. Discussion of Findings

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Allgaier, Joachim. 2019. Science and Environmental Communication on YouTube: Strategically Distorted Communications in Online Videos on Climate Change and Climate Engineering. Frontiers in Communication 4: 36.

- Al-Rawi, Ahmed, Derrick O’Keefe, Oumar Kane, and Aimé-Jules Bizimana. 2021. Twitter’s fake news discourses around climate change and global warming. Frontiers in Communication 6: 729818.

- Bassolas, Aleix, Joan Massachs, Emanuele Cozzo, and Julian Vicens. 2024. A cross-platform analysis of polarization and echo chambers in climate change discussions. arXiv:2410.21187.

- Benegal, Salil D., and Lyle A. Scruggs. 2018. Correcting misinformation about climate change: The impact of partisanship in an experimental setting. Climatic Change 148: 61–80.

- Bugden, Dylan. 2022. Denial and distrust: Explaining the partisan climate gap. Climatic Change 170: 34.

- Bush, Martin J. 2020. Denial and Deception. In Climate Change and Renewable Energy. Cham: Palgrave Macmillan.

- Center for Countering Digital Hate. 2021. The Disinformation Dozen: Why Platforms Must Act on Twelve Leading Online Anti-Vaxxers. Available online: https://www.counterhate.com/disinformationdozen (accessed on 8 April 2025).

- Chu, Jianxun, Yuqi Zhu, and Jiaojiao Ji. 2023. Characterizing the semantic features of climate change misinformation on Chinese social media. Public Understanding of Science 32: 845–59.

- Coeckelbergh, Mark. 2023. Democracy, epistemic agency, and AI: Political epistemology in times of artificial intelligence. AI and Ethics 3: 1341–50.

- Corsi, Giulio. 2023. Evaluating Twitter’s Algorithmic Amplification of Low-Credibility Content: An Observational Study. arXiv:2305.06125.

- Cosentino, Gabriele. 2020. Social Media and the Post-Truth World Order. London and Cham: Palgrave Pivot.

- Dahlgren, Peter. 2018. Media, Knowledge and Trust: The Deepening Epistemic Crisis of Democracy. Javnost - The Public 25: 20–27.

- Daume, Stephan. 2024. Online misinformation during extreme weather emergencies: Short-term information hazard or long-term influence on climate change perceptions? Environmental Research Communications 6: 022001.

- Deryugina, Tatyana, and Olga Shurchkov. 2016. The effect of information provision on public consensus about climate change. PLoS ONE 11: e0151469.

- Dunlap, Riley, and Robert Brulle. 2020. Sources and amplifiers of climate change denial. In Research Handbook on Communicating Climate Change. Cheltenham: Edward Elgar Publishing, pp. 49–61.

- Ejaz, Waqas, Muhammad Ittefaq, and Muhammad Arif. 2024. Understanding Influences, Misinformation, and Fact-Checking Concerning Climate-Change Journalism in Pakistan. In Journalism and Reporting Synergistic Effects of Climate Change. London: Routledge, pp. 168–88.

- Falkenberg, Max, Alessandro Galeazzi, Maddalena Torricelli, Niccolò Di Marco, Francesca Larosa, Madalina Sas, Amin Mekacher, Warren Pearce, Fabiana Zollo, Walter Quattrociocchi, and Andrea Baronchelli. 2021. Growing polarisation around climate change on social media. arXiv:2112.12137.

- Fischer, Frank. 2019. Knowledge politics and post-truth in climate denial: On the social construction of alternative facts. Critical Policy Studies 13: 133–52.

- Fischer, Frank. 2020. Post-truth politics and climate denial: Further reflections. Critical Policy Studies 14: 124–30.

- Forsyth, Tim. 2012. Politicizing environmental science does not mean denying climate science nor endorsing it without question. Global Environmental Politics 12: 18–23.

- Foucault, Michel. 1977. Discipline and Punish: The Birth of the Prison. Translated by A. Sheridan. New York: Pantheon Books.

- Fricker, Miranda. 2017. Evolving concepts of epistemic injustice. In Routledge Handbook of Epistemic Injustice. Routledge Handbooks in Philosophy. Edited by Ian James Kidd, José Medina and Gaile Pohlhaus, Jr. London: Routledge, pp. 53–60. ISBN 9781138828254.

- Goldman, Alvin. 1987. Foundations of social epistemics. Synthese 73: 109–44.

- Gundersen, Torbjørn, Donya Alinejad, Teresa Yolande Branch, Bobby Duffy, Kirstie Hewlett, Cathrine Holst, and Susan Owens. 2022. A new dark age? Truth, trust, and environmental science. Annual Review of Environment and Resources 47: 5–29.

- Habermas, Jürgen. 1984. The Theory of Communicative Action, Volume 1: Reason and the Rationalization of Society. Translated by T. McCarthy. Boston: Beacon Press.

- Heffernan, Alison. 2024. Countering Climate Disinformation in Africa. Available online: https://www.cigionline.org/static/documents/DPH-paper-Heffernan_fxkEQFh.pdf (accessed on 2 March 2025).

- Huang, Yan, and Weirui Wang. 2025. Message Strategies for Misinformation Correction: Current Research, Challenges, and Opportunities. In Communication and Misinformation: Crisis Events in the Age of Social Media. Hoboken: Wiley, pp. 145–62.

- Hyzen, Aaron, Hilde Van den Bulck, Michelle Kulig, Manuel Puppis, and Steve Paulussen. 2023. Epistemic welfare and the digital public sphere: Creating conditions for epistemic agency and out of the epistemic crisis. Algorithms and Epistemic Welfare: A New Framework to Understand Governance of Recommender Systems. Paper presented at the ECREA Communication Philosophy Workshop, Barcelona, Spain, October 26–27.

- Jacques, Peter. 2012. A general theory of climate denial. Global Environmental Politics 12: 9–17.

- Kaun, Anne, Stine Lomborg, Christian Pentzold, Doris Allhutter, and Karolina Sztandar-Sztanderska. 2023. Crosscurrents: Welfare. Media, Culture & Society 45: 877–83.

- Lewandowsky, Stephan. 2021. Climate change disinformation and how to combat it. Annual Review of Public Health 42: 1–21.

- Lewandowsky, Stephan, John Cook, Nicolas Fay, and Gilles E. Gignac. 2019. Science by social media: Attitudes towards climate change are mediated by perceived social consensus. Memory & Cognition 47: 1445–56.

- Loru, Edoardo, Alessandro Galeazzi, Anita Bonetti, Emanuele Sangiorgio, Niccolò Di Marco, Matteo Cinelli, Andrea Baronchelli, and Walter Quattrociocchi. 2024. Who Sets the Agenda on Social Media? Ideology and Polarization in Online Debates. arXiv:2412.05176.

- López, Antonio. 2022. Gaslighting: Fake Climate News and Big Carbon’s Network of Denial. In The Palgrave Handbook of Media Misinformation. Cham: Springer International Publishing, pp. 159–77.

- Neuberger, Christoph, Anne Bartsch, Romy Fröhlich, Thomas Hanitzsch, Carsten Reinemann, and Johanna Schindler. 2023. The digital transformation of knowledge order: A model for the analysis of the epistemic crisis. Annals of the International Communication Association 47: 180–201.

- Nye, Elizabeth, Gerardo Melendez-Torres, and Chris Bonell. 2016. Origins, methods and advances in qualitative meta-synthesis. Review of Education 4: 57–79.

- Nyhan, Brendan, Ethan Porter, and Thomas J. Wood. 2022. Time and skeptical opinion content erode the effects of science coverage on climate beliefs and attitudes. Proceedings of the National Academy of Sciences 119: e2122069119.

- Oreskes, Naomi, and Erik M. Conway. 2010. Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming. London: Bloomsbury Press.

- Page, Matthew J., Joanne E. McKenzie, Patrick M. Bossuyt, Isabelle Boutron, Tammy C. Hoffmann, Cynthia D. Mulrow, and Larissa Shamseer. 2021. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 372: n71.

- Popescu-Sarry, Diana. 2023. Post-truth is misplaced distrust in testimony, not indifference to facts: Implications for deliberative remedies. Political Studies 72: 1453–70.

- Porter, Amanda J., and Iina Hellsten. 2014. Investigating participatory dynamics through social media using a multideterminant “frame” approach: The case of Climategate on YouTube. Journal of Computer-Mediated Communication 19: 1024–41.

- Porter, Ethan, Thomas J. Wood, and Babak Bahador. 2019. Can presidential misinformation on climate change be corrected? Evidence from Internet and phone experiments. Research & Politics 6: 2053168019864784.

- Proctor, Robert N., and Londa Schiebinger, eds. 2008. Agnotology: The Making and Unmaking of Ignorance. Redwood City: Stanford University Press.

- Santamaría, Sara García, Paolo Cossarini, Eva Campos-Domínguez, and Dolors Palau-Sampio. 2024. Unraveling the Dynamics of Climate Disinformation. Understanding the Role of Vested Interests, Political Actors, and Technological Amplification. Observatorio (OBS) 18.

- Schäfer, Mike. 2012. Online communication on climate change and climate politics: A literature review. Wiley Interdisciplinary Reviews: Climate Change 3: 527–43.

- Schmid-Petri, Hannah. 2017. Politicization of science: How climate change skeptics use experts and scientific evidence in their online communication. Climatic Change 145: 523–37.

- Schuldt, Jonathon, Sungjong Roh, and Norbert Schwarz. 2015. Questionnaire design effects in climate change surveys: Implications for the partisan divide. The Annals of the American Academy of Political and Social Science 658: 67–85.

- Simon, Gregory. 2022. Disingenuous natures and post-truth politics: Five knowledge modalities of concern in environmental governance. Geoforum 132: 162–70.

- Skirbekk, Gunnar. 2020. Epistemic Challenges in a Modern World: From “Fake News” and “Post Truth” to Underlying Epistemic Challenges in Science-Based Risk-Societies. Münster: LIT Verlag Münster.

- Skoglund, Annika, and Johannes Stripple. 2019. From climate skeptic to climate cynic. Critical Policy Studies 13: 345–65.

- Stewart, Heather, Emily Cichocki, and Carolyn McLeod. 2022. A perfect storm for epistemic injustice: Algorithmic targeting and sorting on social media. Feminist Philosophy Quarterly 8: 1–29.

- Treen, Kathie, Hywel Williams, and Saffron O’Neill. 2020. Online misinformation about climate change. Wiley Interdisciplinary Reviews: Climate Change 11: e665.

- Tyagi, Aman, Joshua Uyheng, and Kathleen Carley. 2020. Affective Polarization in Online Climate Change Discourse on Twitter. arXiv:2008.13051.

- Van den Bulck, Hilde, Aaron Hyzen, Michelle Kulig, Steve Paulussen, and Manuel Puppis. 2024. How to Understand Trust in News Recommender Systems. Available online: https://www.algepi.com/how-to-understand-trust-in-news-recommender-systems/ (accessed on 6 March 2025).

- Van der Linden, Sander, Anthony Leiserowitz, Seth Rosenthal, and Edward Maibach. 2017. Inoculating the Public against Misinformation about Climate Change. Global Challenges 1: 1600008.

- van Dijck, José. 2021. Governing trust in European platform societies: Introduction to the special issue. European Journal of Communication 36: 323–33.

- Walsh, Denis, and Soo Downe. 2005. Meta-synthesis method for qualitative research: A literature review. Journal of Advanced Nursing 50: 204–11.

- Wardle, Claire, and Hossein Derakhshan. 2017. Information Disorder: Toward an Interdisciplinary Framework for Research and Policymaking. Available online: https://rm.coe.int/information-disorder-report-november-2017/1680764666 (accessed on 2 March 2025).

- Wentz, Jessica, and Benjamin Franta. 2022. Liability for public deception: Linking fossil fuel disinformation to climate damages. Environmental Law Reporter 52: 10995. [Google Scholar]

- Williams, Hywel, James McMurray, Tim Kurz, and Hugo Lambert. 2015. Network analysis reveals open forums and echo chambers in social media discussions of climate change. Global Environmental Change 32: 126–38.

| Author(s) | SN | Study Title | Research Findings | Theoretical Framework | Geographical Context | Year | Journal/Source |

|---|---|---|---|---|---|---|---|

| Kathie Treen, Hywel Williams, Saffron O’Neill | 1 | Online Misinformation about Climate Change | The study finds that climate change disinformation and misinformation are driven by a network of actors financing and amplifying it, thrive in polarized online environments, and spread due to cognitive biases and social norms. | Networked Misinformation and Social Epistemology | United Kingdom | 2020 | Wiley Interdisciplinary Reviews: Climate Change |

| Stephen Lewandowsky | 2 | Climate change disinformation and how to combat it | The study finds that climate disinformation and misinformation flourish in politically charged environments but can be countered through cognitive-based strategies, emphasizing scientific consensus, culturally aligned communication, and pre-emptive inoculation against false claims. | Cognitive Science, Political Ideology | Australia | 2021 | Annual review of public health, 42 |

| Stephan Daume | 3 | Online misinformation during extreme weather emergencies: short-term information hazard or long-term influence on climate change perceptions? | The study finds that misinformation and disinformation during extreme weather events spread across multiple domains and scales, influencing both immediate crisis responses and long-term climate change perceptions, necessitating structured and comparative research for effective countermeasures. | Misinformation Theory, Crisis Communication Theory, Media Ecology Theory | Sweden | 2024 | Environmental Research Communications 6 |

| Gregory Simon | 4 | Disingenuous natures and post-truth politics: Five knowledge modalities of concern in environmental governance | The study finds that post-truth politics and ‘disingenuous natures’ distort environmental knowledge and decision-making, necessitating a clearer theoretical framework to analyze how misinformation and ideological constructs shape human–environment interactions. | Post-Truth Theory, Social Construction of Knowledge, Epistemic Injustice | United States of America | 2022 | Geoforum, 132 |

| Torbjørn Gundersen, Donya Alinejad, Teresa Yolande Branch, Bobby Duffy, Kirstie Hewlett, Cathrine Holst, Susan Owens | 5 | A new dark age? Truth, trust, and environmental science. | The study finds that trust in environmental science is neither universally declining nor wholly stable, but rather fluctuates based on social, political, and media influences, highlighting both skepticism and continued confidence in scientific knowledge. | Trust Theory, Democratic Theory | Norway | 2022 | Annual Review of Environment and Resources, 47 |

| Santamaría Garcia, Sara, Paolo Cossarini, Eva Campos-Domínguez, Dolors Palau-Sampio | 6 | Unraveling the Dynamics of Climate Disinformation. Understanding the Role of Vested Interests, Political Actors, and Technological Amplification | The study finds that climate disinformation is shaped by the intersection of political actors, vested interests, and technological factors, with algorithms and far-right political parties playing a significant role in amplifying false narratives, showing the urgent need for improved climate communication strategies. | Disinformation Theory | Latin America | 2024 | Observatorio (OBS (2024)) |

| Hefferman Andrew | 7 | Countering Climate Disinformation in Africa | The study finds that climate disinformation in Africa significantly hampers both the support for climate mitigation policies and the effectiveness of adaptation measures, necessitating targeted policies that include fact-checking, education, and community-driven solutions to combat the crisis. | Disinformation Theory, Climate Justice and Information Warfare | Africa | 2024 | Center for International Governance Innovation—Working Paper (2024) |

| Stephen Lewandowsky, John Cook, Nicolas Fay, Gilles Gignac | 8 | Science by social media: Attitudes towards climate change are mediated by perceived social consensus | The study finds that public attitudes toward climate change are significantly shaped by perceived social consensus. Social media platforms influence this perception by showcasing agreement or disagreement with scientific views, ultimately impacting belief formation and resistance to misinformation and disinformation. This highlights how social validation processes online mediate the acceptance of climate science. | Cognitive Psychology, Social Consensus Theory | Australia | 2019 | Memory and Cognition, 47(8) |

| Aman Tyagi, Joshua Uyheng, Kathleen Carley | 9 | Affective Polarization in Online Climate Change Discourse on Twitter | The study finds that online discussions around climate change on Twitter are deeply affected by affective polarization, where users increasingly express strong emotional alignments with ideological groups. This emotional intensity fosters division, reduces cross-ideological engagement, and contributes to hostile communication environments that hinder constructive discourse on climate policy. | Computational Social Science, Affective Polarization Theory | United States of America | 2020 | arXiv preprint arXiv:2008.13051 |

| Giulio Corsi | 10 | Evaluating Twitter’s Algorithmic Amplification of Low-Credibility Content: An Observational Study | The study finds that Twitter’s algorithm tends to amplify content from low-credibility sources, especially on controversial issues like climate change. This algorithmic behavior results in greater visibility for misleading narratives and decreases the prominence of verified scientific information, posing risks to public understanding and trust in climate science. | Algorithmic Bias, Information Ecology Theory | Italy | 2023 | arXiv preprint arXiv:2305.06125 |

| Aleix Bassolas, Joan Massachs, Emanuele Cozzo, Julian Vicens | 11 | A cross-platform analysis of polarization and echo chambers in climate change discussions | The study finds that social media discussions on climate change are often confined to ideologically homogeneous groups across multiple platforms, forming distinct echo chambers. These environments reinforce users’ beliefs while filtering out opposing viewpoints, intensifying polarization, and limiting opportunities for consensus or dialog. | Network Theory, Echo Chamber Hypothesis | Spain | 2024 | arXiv preprint arXiv:2410.21187 |

| Edoardo Loru, Alessandro Galeazzi, Anita Bonetti, Emanuele Sangiorgio, Niccolò Di Marco, Matteo Cinelli, Andrea Baronchelli, Walter Quattrociocchi | 12 | Who Sets the Agenda on Social Media? Ideology and Polarization in Online Debates | The study finds that ideologically motivated actors are highly influential in setting the agenda of climate-related debates on social media. Through selective posting and algorithmic engagement, these actors steer public discourse toward polarizing themes, shaping users’ exposure to climate content, and reinforcing political divides. | Agenda-Setting Theory, Political Ideology Framework | Italy | 2024 | arXiv preprint arXiv:2412.05176 |

| Max Falkenberg, Alessandro Galeazzi, Maddalena Torricelli, Niccolo Di Marco, Francesca Larosa, Madalina Sas, Amin Mekacher, Warren Pearce, Fabiana Zollo, Walter Quattrociocchi, Andrea Baronchelli | 13 | Growing polarization around climate change on social media | The study finds a growing polarization in climate change discourse on social media, with a noticeable shift toward ideologically driven groupings that rarely interact. This trend is exacerbated by platform algorithms and selective sharing practices, creating fragmented information environments that challenge the communication of scientific consensus. | Polarization Theory, Computational Social Science | Italy, United Kingdom, France | 2021 | arXiv preprint arXiv:2112.12137 |

| Joachim Allgaier | 14 | Science and Environmental Communication on YouTube: Strategically Distorted Communications in Online Videos on Climate Change and Climate Engineering | The study finds that YouTube contains a significant number of videos presenting distorted or conspiratorial narratives around climate change and geoengineering. These videos often exploit the platform’s recommendation system to increase visibility, thereby shaping public perceptions and undermining trust in legitimate environmental science. | Science Communication Theory, Strategic Communication | Germany | 2019 | Frontiers in Communication, 4 |

| Hywel Williams, James McMurray, Tim Kurz, Hugo Lambert. | 15 | “Network analysis reveals open forums and echo chambers in social media discussions of climate change.” (2015): 126–138 | The study finds that climate change discourse on social media reflects a mix of open forums and tightly knit echo chambers. While some users engage across ideological lines, others remain insulated within reinforcing networks that perpetuate confirmation bias, impeding broader dialog and increasing the vulnerability to disinformation. | Network Analysis, Echo Chamber Theory | United Kingdom | 2015 | Global environmental change 32 |

| Amanda Porter, Lina Hellsten. | 16 | Investigating participatory dynamics through social media using a multideterminant “frame” approach: The case of Climategate on YouTube | The study finds that user-generated content on YouTube played a central role in shaping the narrative around Climategate, with diverse interpretations emerging due to varying frames. By analyzing these frames, the study shows how participatory online spaces can reframe scientific controversies and influence public trust in science. | Framing Theory, Participatory Media Theory | Netherlands | 2014 | Journal of Computer-Mediated Communication, 19 (4) |

| Yan Huang, Weirui Wang | 17 | Message Strategies for Misinformation Correction: Current Research, Challenges, and Opportunities | The study finds that correcting climate misinformation and disinformation requires tailored message strategies, especially during crises. It highlights challenges such as cognitive resistance and echo chambers, and proposes a need for more adaptive, evidence-based correction models in high-stakes communication environments. | Crisis Communication Theory, Message Framing | China | 2025 | Communication and Misinformation: Crisis Events in the Age of Social Media |

| Sander van der Linden, Anthony Leiserowitz, Seth Rosenthal, Edward Maibach | 18 | Inoculating the Public against Misinformation about Climate Change | The study finds that applying inoculation theory—preemptively exposing people to misinformation with a refutation—can significantly reduce the effectiveness of climate change denial. This psychological resistance helps build resilience in public understanding, especially when misinformation and disinformation challenge scientific consensus. | Inoculation Theory, Science Communication | United States of America | 2017 | Global Challenges, 1 |

| Salil Benegal, Lyle Scruggs | 19 | Correcting misinformation about climate change: The impact of partisanship in an experimental setting | The study finds that correcting misinformation and disinformation about climate change is highly dependent on partisanship. While factual corrections are effective among moderates, strong partisans tend to reject corrective messages, showing how political identity shapes receptivity to scientific information. | Political Psychology, Motivated Reasoning | United States of America | 2018 | Climatic change 148 (1) |

| Tatyana Deryugina, Olga Shurchkov | 20 | The effect of information provision on public consensus about climate change | The study finds that providing factual, scientifically grounded information increases public belief in the existence and risks of climate change. However, the effect is more pronounced among individuals with less pre-existing knowledge, showing a gap in how new information is processed. | Information Deficit Model, Behavioral Economics | United States of America | 2016 | PloS one 11 (4) |

| Ethan Porter, Thomas J. Wood, Babak Bahador | 21 | Can presidential misinformation on climate change be corrected? Evidence from Internet and phone experiments | The study finds that correcting misinformation and disinformation from high-profile figures, such as U.S. presidents, is difficult but not impossible. While corrections can initially improve factual understanding, partisan alignment often moderates long-term acceptance, particularly in politically polarized contexts. | Political Communication Theory, Elite Cue Theory | United States of America | 2019 | Research and Politics 6 (3) |

| Brendan Nyhan, Ethan Porter, Thomas Wood | 22 | Time and skeptical opinion content erode the effects of science coverage on climate beliefs and attitudes | The study finds that the effects of accurate science coverage on climate change beliefs diminish over time and can be eroded by exposure to skeptical media content. This suggests a time-decay pattern in attitude change and highlights the importance of sustained scientific messaging. | Media Effects Theory, Cognitive Psychology | United States of America | 2022 | Proceedings of the National Academy of Sciences 119 (26) |

| Dylan Bugden | 23 | Denial and distrust: explaining the partisan climate gap | The study finds that partisan differences in climate change beliefs are largely driven by underlying distrust in science and institutions. Conservatives, in particular, express higher levels of skepticism, shaped by identity and ideological beliefs, reinforcing the partisan climate gap. | Cultural Cognition Theory, Political Ideology Framework | United States of America | 2022 | Climatic Change 170 (3) |

| Jonathon Schuldt, Sungjong Roh, Norbert Schwarz | 24 | Questionnaire design effects in climate change surveys: Implications for the partisan divide | The study finds that how climate survey questions are worded can significantly influence the responses, especially among politically divided groups. Subtle shifts in question design either mitigate or exaggerate the partisan divide, underlining the importance of methodological precision in climate polling. | Survey Methodology, Framing Effects | United States of America | 2015 | The ANNALS of the American Academy of Political and Social Science, 658(1) |

| Riley Dunlap, Robert Brulle | 25 | Sources and amplifiers of climate change denial | This study identifies the major institutional sources of climate denial, including fossil fuel industries, conservative think tanks, and media outlets, which collectively fund and disseminate misleading information to create doubt about climate science. The findings show how strategic misinformation campaigns are amplified through elite networks to maintain the status quo and resist climate action. | Sociological Institutionalism, Political Economy of Climate Denial | United States of America | 2020 | Research handbook on communicating climate change |

| Waqas Ejaz, Muhammad Ittefaq | 26 | Understanding influences, misinformation, and fact-checking concerning climate change journalism in Pakistan | The study explores how climate journalism in Pakistan is affected by political influences, misinformation, disinformation, and insufficient fact-checking mechanisms. It finds that journalists face structural challenges, including limited resources and governmental pressure, which hinder balanced coverage. Misinformation and disinformation spread rapidly, often unchecked, due to a weak verification culture and sensationalism in the media. | Media Gatekeeping Theory, Framing in Crisis Communication | Pakistan | 2024 | Journalism and Reporting Synergistic Effects of Climate Change, pp. 168–88 |

| Mike Schäfer | 27 | Online communication on climate change and climate politics: a literature review | This literature review reveals that online climate communication has diversified over time, shifting from top-down institutional messaging to participatory digital platforms. While this has increased public engagement, it has also fragmented discourse and allowed misinformation to spread more easily. The study emphasizes the dual potential of online media to inform or mislead audiences. | Digital Media Ecology, Public Sphere Theory | Global/Europe-centric | 2012 | Wiley Interdisciplinary Reviews: Climate Change, 3 |

| Hannah Schmid-Petri | 28 | Politicization of science: How climate change skeptics use experts and scientific evidence in their online communication | The study demonstrates that climate skeptics strategically use scientific language and selectively cite experts to legitimize their denialist narratives online. Rather than outright rejecting science, they engage in scientific mimicry to cast doubt, create confusion, and politicize scientific discourse to serve ideological agendas. | Framing Theory, Science Communication Strategy | Germany | 2017 | Climatic Change, 145 |

| Jianxun Chu, Yuqi Zhu, Jiaojiao Ji | 29 | Characterizing the semantic features of climate change misinformation on Chinese social media | The study characterizes climate change misinformation and disinformation on Chinese social media, showing that such content often uses vague semantics, exaggerated claims, and conspiracy rhetoric. Misinformation and disinformation tend to exploit cultural references and emotional appeals rather than scientific facts, reducing the public’s ability to distinguish credible information. | Discourse Analysis, Semantic Network Theory | China | 2023 | Public Understanding of Science, 32 |

| Frank Fischer | 30 | Post-truth politics and climate denial: Further reflections | Fischer argues that post-truth politics, driven by populism and distrust in elites, reinforce climate denial by privileging emotional narratives over scientific evidence. The research finds that climate denial becomes a performative political act aimed at resisting environmental regulation rather than an epistemological disagreement with science. | Post-Truth Theory, Critical Policy Analysis | United States and Europe | 2020 | Critical policy studies, 14 |

| Frank Fischer | 31 | Knowledge politics and post-truth in climate denial: On the social construction of alternative facts | This study explores how “alternative facts” are socially constructed in climate denial narratives. It shows how power and ideology shape knowledge production, allowing fringe views to masquerade as legitimate science. The paper calls for reclaiming the epistemic authority of science in democratic debate. | Constructivist Policy Analysis, Knowledge Politics | Western Democracies | 2019 | Critical Policy Studies, 13 |

| Peter Jacques | 32 | A general theory of climate denial | Jacques presents a general theory that climate denial is not a lack of knowledge but a structured, ideological resistance to perceived threats to economic and political power. Denial is organized and institutionalized, often functioning through networks of influence that challenge environmental governance. | Ideology Critique, Environmental Political Theory | United States of America | 2012 | Global Environmental Politics, 12 |

| Annika Skoglund, Johannes Stripple | 33 | From climate skeptic to climate cynic | The study introduces a conceptual shift from climate skepticism to climate cynicism, where actors no longer question the science but dismiss climate action as futile or corrupt. This cynical position fosters disengagement, suggesting that apathy, rather than denial, is the new challenge in climate communication. | Critical Discourse Analysis, Political Cynicism Theory | Sweden and Europe | 2019 | Critical Policy Studies, 13 |

| Tim Forsyth | 34 | Politicizing environmental science does not mean denying climate science nor endorsing it without question | Forsyth argues that politicizing environmental science should not be equated with denying climate change or blindly endorsing scientific consensus. Instead, he emphasizes that environmental science is inherently political because it involves value judgments about risk, justice, and policy outcomes. The article critiques technocratic approaches to climate policy and calls for more reflexivity in how science is used in environmental governance, suggesting that science should be interpreted in context and not treated as politically neutral. | Science and Technology Studies (STS), Political Ecology | Global South, Southeast Asia | 2012 | Global Environmental Politics, 12 |

| Antonio López | 35 | Gaslighting: fake climate news and Big Carbon’s network of denial | López investigates how gaslighting, a psychological tactic of manipulation, is employed by Big Carbon networks to disseminate fake climate news. The research illustrates that these corporations use media platforms, PR firms, and think tanks to create cognitive dissonance, making the public question their understanding of climate reality. These disinformation efforts are not accidental but strategically designed to delay climate action by manufacturing doubt and reframing environmental responsibility. | Critical Media Theory, Psychological Manipulation in Communication | Western industrialized nations | 2022 | The Palgrave Handbook of Media Misinformation |

| Martin Bush | 36 | Denial and Deception | Bush explores the deliberate strategies of denial and deception employed by fossil fuel interests to mislead the public and policymakers about climate change. The study traces how these tactics evolved from outright denial to more subtle forms, such as greenwashing and promoting natural gas as a ‘bridge fuel’. Bush highlights how such narratives are reinforced by corporate lobbying, media manipulation, and selective funding of scientific research to obscure the urgency of climate action. | Environmental Communication, Political Economy of Energy | North America and Europe | 2020 | Climate Change and Renewable Energy, Palgrave Macmillan |

| Jessica Wentz, Benjamin Franta | 37 | Liability for public deception: linking fossil fuel disinformation to climate damages | This legal study connects fossil fuel disinformation to tangible climate damages by outlining how corporate deception campaigns may be grounds for legal liability. The authors provide historical evidence of intentional public deception by major oil companies and argue that courts can hold these actors accountable under tort and fraud law. The findings represent a critical bridge between climate communication and environmental justice, positioning disinformation as a legally actionable form of harm. | Legal Theory, Environmental Law, Accountability Frameworks | United States of America | 2022 | Environmental Law Reporter, 52 |

| Ahmed Al-Rawi, Derrick O’Keefe, Oumar Kane, Aimé-Jules Bizimana | 38 | Twitter’s fake news discourses around climate change and global warming | This study analyzes Twitter discourse to map how fake news about climate change is circulated and framed. The authors find that misinformation and disinformation often intersect with conspiracy theories, anti-science rhetoric, and political ideology. Bots and coordinated campaigns play a significant role in amplifying false narratives. The paper emphasizes the importance of platform accountability and digital literacy to combat the algorithmic spread of denialism. | Computational Propaganda, Network Analysis, Disinformation Studies | Western digital ecosystem with a global online user base | 2021 | Frontiers in Communication, 6 |

| Scale | Label | Representative Keywords |
|---|---|---|
| Size 5 | Core Themes | Disinformation, Post-truth, Climate Change, Epistemic Harm |
| Size 4 | Major Themes | Misinformation, Epistemic Erosion, Social Media, Public Opinion, Digital Platforms |
| Size 3 | Sub-Themes | Fossil Fuel Industry, Ideological Polarization, Fake News, Knowledge Crisis, Truth Decay, Algorithmic Amplification, Skepticism, Strategic Ignorance, Emotional Appeal |
| Size 2 | Contextual Descriptors | Information Disorder, Fact-Checking, Media Literacy, Science Denial, Tech Platforms, Meta-Synthesis, Cognitive Bias, Confirmation Bias, Political Agendas, Echo Chambers |
| Size 1 | Methodological/Fringe Terms | Qualitative Synthesis, Epistemic Fragmentation, Digital Literacy, Narrative Frames, Thematic Coding, Empirical Studies, Analytical Review, PRISMA, Knowledge Authority, Public Perception, Knowledge Gaps, Trust Deficit, Policy Delay, Thematic Saturation, Online Discourse, Academic Institutions, Open Access, Data Integrity |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Essien, E.O. Climate Change Disinformation on Social Media: A Meta-Synthesis on Epistemic Welfare in the Post-Truth Era. Soc. Sci. 2025, 14, 304. https://doi.org/10.3390/socsci14050304
