Social Biases in AI-Generated Creative Texts: A Mixed-Methods Approach in the Spanish Context

Abstract

1. Introduction

2. Materials and Methods

- Story generation. Initially, the AI generated stories without demographic specifications. These were later refined by requesting additional details to ensure consistency and depth in the analysis. ChatGPT-4 was tasked with creating 100 fictional stories about successful Spanish individuals, each approximately 150 words in length. To ensure a comprehensive analysis of biases, the AI was explicitly instructed to provide demographic details for each protagonist, including the selected demographic variables. These details were verified for consistency and accuracy.

- b.

- Bias identification: After the stories were generated, the demographic data extracted from the narratives were systematically analyzed to identify patterns and potential biases. The selected biases for analysis were informed by societal stereotypes and their relevance to evaluating AI fairness. The analysis categories included the following:

- ○

- ID: Unique identifier for each record.

- ○

- Age: Age ranges represented among protagonists.

- ○

- Gender: Representation of male, female, and non-binary characters.

- ○

- Sexual Orientation: Inclusion of diverse sexual orientations.

- ○

- Ethnicity: Ethnic diversity within the narratives.

- ○

- Religion: Religious affiliations or lack thereof.

- ○

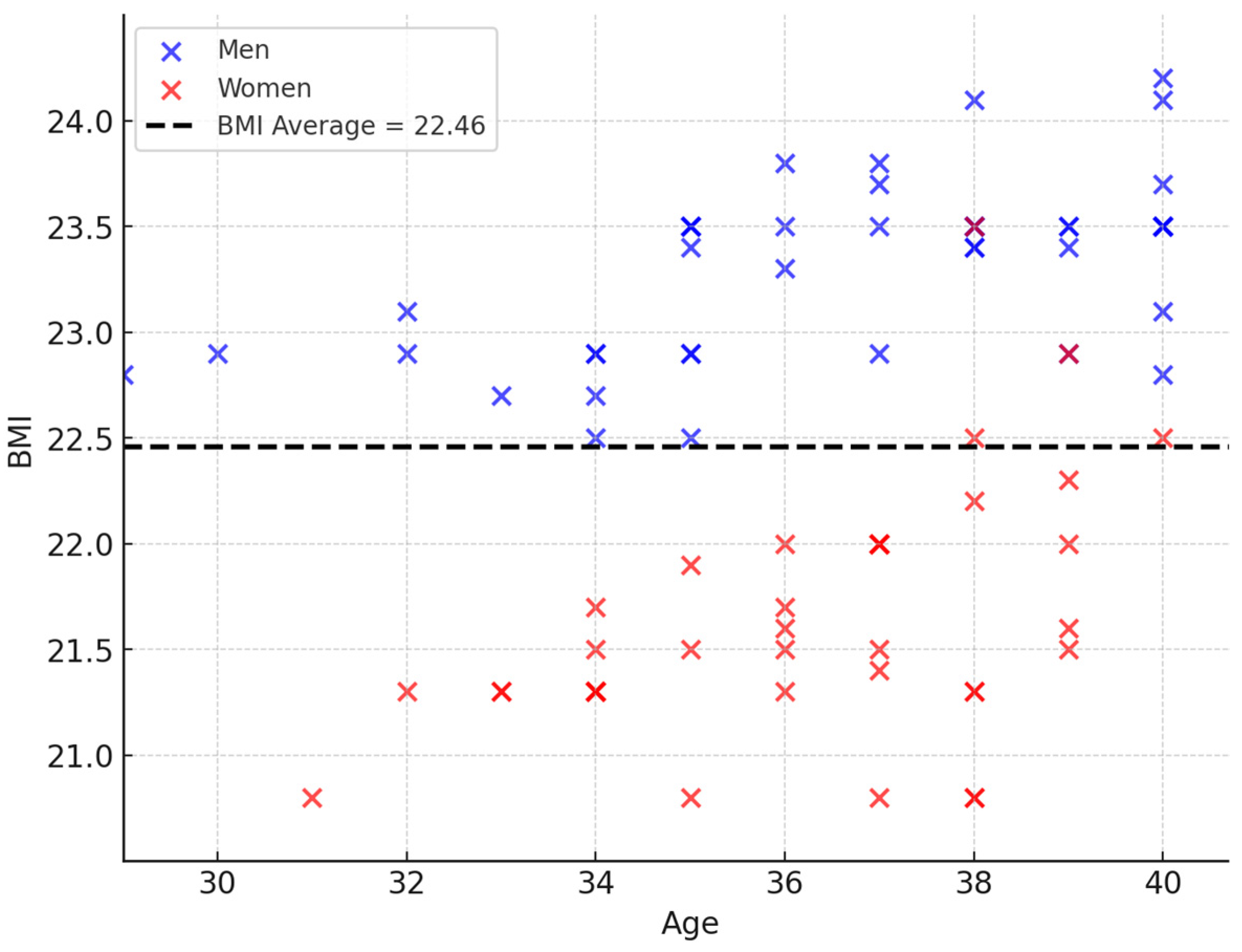

- Physical Appearance: Variables such as height, weight, and BMI.

- ○

- Socio-economic status: Economic class indicators.

- Positive Social Representation: Narratives about success often reflect desirable societal traits, making them valuable for studying how algorithms construct such representations.

- Diversity and Complexity: Fictional stories allow the inclusion of diverse demographic profiles, enabling a detailed examination of biases in AI outputs.

- Comparability: Spain’s well-documented demographic data provides a robust framework for comparing AI-generated content with real-world statistics.

- Age: is relevant to counteract stereotypes that associate youth with innovation and old age with obsolescence. Previous studies have identified patterns of age discrimination in the media (Castro-Manzano 2022).

- Gender: gender stereotypes are deeply embedded in societal structures and media representations, shaping expectations about roles, professions, and abilities. Historically, success and leadership have been disproportionately associated with men, leading to an overrepresentation of male figures in narratives about achievement and influence (Eagly and Karau 2002). AI systems trained on biased datasets tend to replicate and reinforce these patterns, perpetuating historical inequalities and limiting the visibility of women and gender minorities in positions of success and authority.

- Sexual orientation: fair representation of LGBTQ+ people is necessary to combat persistent stereotypes and promote the normalization and acceptance of sexual diversity (Ortiz de Zárate 2023).

- Ethnicity and religion: these are categories that are often subject to stereotyping and misunderstanding, which can lead to discrimination and exclusion. Delgado and Stefancic (2012) stress the importance of diverse ethnic representation to combat systemic racism, while Diana L. Eck (2001) highlights the need to reflect religious plurality to foster intercultural understanding.

- Physical description: the analysis of weight, height, or body mass index (BMI) is relevant to address prevailing body stereotypes in society, which may perpetuate unrealistic beauty standards and contribute to self-esteem issues, as discussed by Fikkan and Rothblum (2012). Among these, body mass index (BMI) serves as a critical variable in the domain of physical appearance, reflecting how AI systems may replicate societal biases regarding weight and body image. These biases often originate from poorly balanced datasets or algorithmic designs that fail to account for diversity in body types, leading to discriminatory outcomes. By analyzing the sources of these biases, their perpetuation, and current mitigation strategies, this study highlights the need for inclusive AI systems that address stereotypes linked to BMI and physical appearance.

- Socio-economic status: class stereotypes can influence perceptions of people’s ability and worth, and the representation of different socio-economic statuses is crucial to challenge these ideas, as argued by Clayton et al. (2009).

3. Results

3.1. Age Distribution

3.2. Gender Distribution

3.3. Sexual Orientation

3.4. Ethnicity

3.5. Physical Appearance

3.6. Religion

3.7. Socio-Economic Status

4. Discussion

5. Conclusions

- Diversity in training data: AI developers must ensure that datasets are sufficiently diverse to represent the breadth of human experiences, including variations in ethnicity, gender, sexual orientation, age, socio-economic status, and geography. A lack of diversity in training data leads to homogeneity in AI-generated narratives, excluding groups based on ethnicity, non-normative body types, or non-heterosexual orientations. This exclusion can result in systemic disadvantages for marginalized communities, particularly in automated decision-making processes. To address this, developers should establish diversity standards, including quotas for ethnic, gender, and socio-economic representation in training data, periodically reviewed by independent oversight committees.

- Audits and transparency: Regular audits of AI systems are essential to identify and mitigate biases before they cause harm. Transparency in algorithms and their decision-making processes is equally critical for evaluating their fairness and accountability. Standardized auditing tools, such as bias detection algorithms, can analyze outputs for skewed representations. For instance, IBM’s AI Fairness 360 toolkit can assess demographic balance in generated narratives. Additionally, developers should publish detailed documentation of training datasets and the steps taken to minimize bias during model development, providing public-facing summaries to ensure transparency.

- Involvement of diversity and inclusion experts: Ensuring inclusivity in AI systems requires the active involvement of experts in gender equality, racial and ethnic diversity, sexual orientation, social justice, and related areas. These consultants should guide the design and deployment of models to address gaps in representation and propose actionable solutions. Establishing advisory boards with representatives from historically marginalized groups can further enhance inclusivity by reviewing AI-generated outputs and offering culturally sensitive feedback.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Barocas, Solon, and Andrew D. Selbst. 2016. Big data’s disparate impact. California Law Review 104: 671–732. [Google Scholar] [CrossRef]

- Binns, Reuben. 2018. Fairness in machine learning: Lessons from political philosophy. Proceedings of Machine Learning Research 81: 149–59. Available online: https://proceedings.mlr.press/v81/binns18a.html (accessed on 7 November 2024).

- Buolamwini, Joy, and Timnit Gebru. 2018. Gender shades: Intersectional accuracy disparities in commercial gender classification. Proceedings of Machine Learning Research 81: 1–15. Available online: http://proceedings.mlr.press/v81/buolamwini18a.html (accessed on 7 November 2024).

- Caliskan, Aylin, Joanna J. Bryson, and Arvind Narayanan. 2017. Semantics derived automatically from language corpora contain human-like biases. Science 356: 183–86. [Google Scholar] [CrossRef] [PubMed]

- Carvalho, André Carlos Ponce de Leon Ferreira. 2021. Artificial intelligence: Risks, benefits, and responsible use. Advanced Studies 35: 21–34. [Google Scholar] [CrossRef]

- Castro-Manzano, José Martín. 2022. Ageism and artificial intelligence. Research in Computing Science 151: 201–10. Available online: https://www.researchgate.net/profile/J-Castro-Manzano/publication/368786470_Edadismo_e_inteligencia_artificial/links/63f96b360cf1030a564bb7ec/Edadismo-e-inteligencia-artificial.pdf (accessed on 4 March 2025).

- Centre for Sociological Research (CSR). 2023. Post-Pandemic Social and Affective Relations Survey 2023 (III) (Study No. 3400). Available online: https://www.cis.es/documents/d/cis/es3400mar-pdf (accessed on 25 November 2024).

- Centre for Sociological Research (CSR). 2024. January 2024 Barometer. Available online: https://www.cis.es/documents/d/cis/es3435mar_a (accessed on 25 November 2024).

- Clayton, John, Gill Crozier, and Diane Reay. 2009. Home and away: Risk, familiarity and the multiple geographies of the higher education experience. International Studies in Sociology of Education 19: 157–74. [Google Scholar] [CrossRef]

- Criado-Pérez, Caroline. 2019. Invisible Women: Data Bias in a World Designed for Men. New York: Abrams Press. [Google Scholar]

- Delgado, Richard, and Jean Stefancic. 2012. Critical Race Theory: An Introduction. New York: New York University Press. Available online: https://nyupress.org/9780814721353/critical-race-theory/ (accessed on 27 November 2024).

- Eagly, Alice H., and Steven J. Karau. 2002. Role congruity theory of prejudice toward female leaders. Psychological Review 109: 573–98. [Google Scholar] [CrossRef] [PubMed]

- Eck, Diana L. 2001. A New Religious America. New York: HarperCollins. [Google Scholar]

- Faceli, Katti, Ana Carolina Lorena, João Gama, Tiago Agostinho de Almeida, and André Carlos Ponce de Leon Ferreira de Carvalho. 2021. Artificial Intelligence: A Machine Learning Approach, 2nd ed. Rio de Janeiro: LTC. Available online: https://repositorio.usp.br/item/003128493 (accessed on 2 October 2024).

- Fikkan, Janna L., and Esther D. Rothblum. 2012. Is fat a feminist issue? Exploring the gendered nature of weight bias. Sex Roles 66: 575–92. [Google Scholar] [CrossRef]

- Floridi, Luciano, Josh Cowls, Monica Beltrametti, Raja Chatila, Patrice Chazerand, Virginia Dignum, Christoph Luetge, Robert Madelin, Ugo Pagallo, Francesca Rossi, and et al. 2018. AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines 28: 689–707. [Google Scholar] [CrossRef] [PubMed]

- Gawronski, Bertram, Alison Ledgerwood, and Paul Eastwick. 2020. Implicit Bias and Antidiscrimination Policy. Policy Insights from the Behavioral and Brain Sciences 7: 99–106. [Google Scholar] [CrossRef]

- Gil de Zúñiga, Homero, Manuel Goyanes, and Timilehin Durotoye. 2023. Scholarly definition of artificial intelligence (AI): Advancing AI as a conceptual framework in communication research. Political Communication 41: 317–34. [Google Scholar] [CrossRef]

- Gillespie, Tarleton. 2014. The relevance of algorithms. In Media Technologies: Essays on Communication, Materiality, and Society. Edited by Tarleton Gillespie, Pablo J. Boczkowski and Kirsten A. Foot. Cambridge: MIT Press, pp. 167–94. [Google Scholar] [CrossRef]

- Grgic-Hlaca, Nina, Elissa M. Redmiles, Krishna P. Gummadi, and Adrian Weller. 2018. Human perceptions of fairness in algorithmic decision making: A case study of criminal risk prediction. Paper presented at World Wide Web Conference (WWW ’18), Lyon, France, April 23–27; pp. 903–12. [Google Scholar] [CrossRef]

- Hamilton, David L., and Steven J. Sherman. 1994. Stereotypes. In Handbook of Social Cognition. Edited by Robert S. Wyer, Jr. and Thomas K. Srull. Mahwah: Lawrence Erlbaum Associates, pp. 1–68. Available online: https://psycnet.apa.org/record/1994-97663-000 (accessed on 7 December 2024).

- Massey, Douglas S., and Nancy A. Denton. 1993. Segregation and the making of the underclass. In The Urban Sociology Reader, 2nd ed. Edited by Jan Lin and Christopher Mele. London: Routledge, pp. 192–201. [Google Scholar] [CrossRef]

- National Statistics Institute (NSI). 2022. Body Mass Index by Body Mass, Age and Period. Available online: https://www.ine.es/jaxi/Tabla.htm?path=/t00/ICV/Graficos/dim3/&file=331G2.px (accessed on 25 November 2024).

- National Statistics Institute (NSI). 2024. Continuous Population Survey. Available online: https://www.ine.es/dyngs/Prensa/es/ECP3T24.htm (accessed on 25 November 2024).

- Nazir, Anam, and Ze Wang. 2023. A comprehensive survey of ChatGPT: Advancements, applications, prospects, and challenges. Meta-Radiology 1: 100022. [Google Scholar] [CrossRef] [PubMed]

- Obermeyer, Ziad, Brian Powers, Christine Vogeli, and Sendhil Mullainathan. 2019. Dissecting racial bias in an algorithm used to manage the health of populations. Science 366: 447–53. [Google Scholar] [CrossRef] [PubMed]

- O’Neil, Cathy. 2016. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown Publishing. Available online: https://books.google.es/books?id=NgEwCwAAQBAJ&pg=PA1&hl=es&source=gbs_toc_r&cad=2#v=onepage&q&f=false (accessed on 7 November 2024).

- Ortiz de Zárate, Lucía. 2023. Gender biases in artificial intelligence. Revista de Occidente 502: 5–20. Available online: https://ortegaygasset.edu/wp-content/uploads/2023/03/RevistadeOccidente_Marzo2023_L.Ortiz_de_Zarate.pdf (accessed on 8 December 2024).

- Raji, Inioluwa Deborah, Andrew Smart, Rebecca N. White, Margaret Mitchell, Timnit Gebru, Ben Hutchinson, Jamila Smith-Loud, Daniel Theron, and Parker Barnes. 2020. Closing the AI accountability gap: Defining an end-to-end framework for internal algorithmic auditing. Paper presented at Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, January 27–30; pp. 33–44. [Google Scholar] [CrossRef]

| Examples of Stories | Character Variables Through AI |

|---|---|

| The innovative musician Raúl Sánchez, born in Granada, began playing the guitar at the age of five. His natural talent and passion for music led him to study at the Granada Conservatory of Music. He formed his own band, fusing flamenco with jazz and rock, creating a unique sound that captivated audiences. His band gained international recognition and performed at major music festivals around the world. Raúl also composed soundtracks for films and collaborated with other renowned artists. His music continues to inspire new generations of musicians. | Raul Sanchez Gender: Male. Age: 33 years old. Sexual orientation: Heterosexual. Ethnicity: Hispanic. Religion: Non-practicing Catholic. Physical appearance: 1.78 m, 72 kg, BMI 22.7. Black hair, dark eyes, dark skin. Socio-economic status: Medium-high. |

| Malaga-born fashion designer Ana Morales has always had an eye for fashion. She studied fashion design in London and returned to Spain to launch her own clothing label. Her designs, which combined elegance and modernity, quickly gained popularity. Ana was invited to present her collections at Madrid and Paris Fashion Week. Her creations appeared in international fashion magazines and dressed celebrities on the red carpet. Ana became one of the most influential designers of her generation, setting trends in the fashion industry. | Ana Morales Gender: Female. Age: 31. Sexual orientation: Heterosexual. Ethnicity: Hispanic. Religion: Agnostic. Physical appearance: 1.70 m, 60 kg, BMI 20.8. Long brown hair, brown eyes, fair skin. Socio-economic status: High. |

| The elite athlete Diego Pérez, born in Zaragoza, has always excelled in athletics. From a young age, he trained with dedication and effort, which enabled him to win numerous national competitions. His big breakthrough came when he represented Spain at the Olympic Games, where he won a gold medal in the 400 m. His victory not only made him a national hero, but also inspired him to create a foundation to support young athletes. Diego continues to compete internationally and is an example of discipline and perseverance in sport. | Diego Pérez Gender: Male. Age: 29 years old. Sexual Orientation: Heterosexual. Ethnicity: Hispanic. Religion: Non-practicing Catholic. Physical appearance: 1.85 m, 78 kg, BMI 22.8. Athletic build, short black hair, brown eyes. Socio-economic status: High. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gabino-Campos, M.; Baile, J.I.; Padilla-Martínez, A. Social Biases in AI-Generated Creative Texts: A Mixed-Methods Approach in the Spanish Context. Soc. Sci. 2025, 14, 170. https://doi.org/10.3390/socsci14030170

Gabino-Campos M, Baile JI, Padilla-Martínez A. Social Biases in AI-Generated Creative Texts: A Mixed-Methods Approach in the Spanish Context. Social Sciences. 2025; 14(3):170. https://doi.org/10.3390/socsci14030170

Chicago/Turabian StyleGabino-Campos, María, José I. Baile, and Aura Padilla-Martínez. 2025. "Social Biases in AI-Generated Creative Texts: A Mixed-Methods Approach in the Spanish Context" Social Sciences 14, no. 3: 170. https://doi.org/10.3390/socsci14030170

APA StyleGabino-Campos, M., Baile, J. I., & Padilla-Martínez, A. (2025). Social Biases in AI-Generated Creative Texts: A Mixed-Methods Approach in the Spanish Context. Social Sciences, 14(3), 170. https://doi.org/10.3390/socsci14030170