Abstract

The research explores how artificial intelligence-based chatbots transform psychological and legal assistance in situations of gender-based violence, evaluating their effect on women’s digital empowerment. A cross-sectional design with a mixed approach was used, combining a 25-item survey of 1000 women, analysed quantitatively using multiple correspondence analysis and clustering techniques, with semi-structured interviews. The findings show that 64% considered chatbots useful for accessing information, although only 27% used them to report incidents, owing to structural and digital barriers. Participants from rural areas faced severe connectivity limitations and expressed distrust of artificial intelligence, while those who interacted frequently demonstrated greater autonomy, decision-making capacity, and confidence in seeking support. Qualitative analysis showed that users valued confidentiality and anonymity as essential elements for sharing experiences of violence that they did not reveal in face-to-face settings. They also highlighted that immediate interaction with chatbots created a perception of constant support, reducing isolation and motivating users to seek formal help. The conclusion is that designing gender-focused chatbots and integrating them into care systems is an innovative and effective way to expand access to justice and psychological care.

1. Introduction

Gender-based violence remains one of the most persistent global problems, affecting one in three women in their lifetime (World Health Organization 2023). Even with improved regulation and technology, many victims still face systematic obstacles to psychological and legal assistance, such as lack of access to justice, discriminatory handling of their claims, or public services that fail to act within the required timeframe, all of which undermine the protection and redress of their violated rights. According to a report by UN Women (2023), 40% of victims of gender-based violence do not seek formal help, mainly due to fear of revictimization, mistrust of institutions, and ignorance of their rights (Costa 2018; Jomaa et al. 2024).

This problem affects women who live in communities with insufficient services and resources, as well as those who, even though they benefit from digital connectivity and have a certain degree of education, do not find sufficiently robust tools to get effective and safe help (Xia et al. 2023).

In response, a new alternative has emerged: chatbots with artificial intelligence, which provide immediate and anonymous assistance to women who have experienced gender-based violence (UNESCO 2023).

Chatbots are automated conversational systems based on natural language processing, designed to provide initial counselling, channel complaints, and offer emotional support without the direct intervention of a human operator (Bonamigo et al. 2022).

Digital empowerment allows women not only to access information but also to become active protagonists in their own support process. On a psychological level, digital tools such as chatbots provide a safe and confidential space that helps reduce fear of revictimization and builds confidence to seek help (UN Women 2023; Xia et al. 2023). From a legal standpoint, real-time access to regulatory resources, reporting channels, and protection mechanisms is an effective way to overcome the slowness or inaccessibility of in-person services (Costa 2018; Locke and Hodgdon 2024). In this way, digital empowerment is not limited to the use of technology but transforms women’s relationship with the support system, promoting both emotional care and access to justice (Jomaa et al. 2024; ITU 2023).

To access these chatbots, the user connects to the virtual platform from any mobile device with an internet connection. A chat window then opens automatically, and the user enters her query. This simple mode of communication has supported adoption at all levels of society, with users able to access support resources from any environment (Manasi et al. 2022; Locke and Hodgdon 2024).

Three chatbots that provide this legal and psychological assistance illustrate the reach of these tools. In Latin America, Violeta has already helped more than 260,000 users, guiding them on platforms they already have access to, such as WhatsApp and Messenger (Kelley et al. 2022). Sofia, which operates in Mexico and Colombia, has registered more than 40,000 interactions and is distinguished by its AI-based personalisation capabilities. In the UK and Australia, BrightSky has been integrated with government agencies and emergency services to give direct access to official protection resources and reporting channels (World Health Organization 2023). Despite the increasing implementation of AI-based chatbots in support services, there is little empirical evidence on their actual effectiveness as empowerment tools for women experiencing gender-based violence, particularly in Latin American contexts.

Despite their reach, the deployment of chatbots to assist victims of gender-based violence is limited in ways that diminish their effectiveness (Avella et al. 2023). According to the International Telecommunication Union (ITU 2023), 17% fewer women than men have access to the internet, which restricts the use of these tools in communities with less digital development (Hajibabaei et al. 2022). Connectivity, however, is not the only factor that conditions the use of chatbots. Even among women with internet access and more education, only 27% reported using chatbots to report cases of violence, owing to a lack of knowledge about their use and application, which is one of the main systematic barriers that persist in the use of these technologies (Kovacova et al. 2019; Jomaa et al. 2024).

Analysis of these tools has identified specific design and use deficiencies: their effectiveness in psychological and legal counselling is undermined by the lack of personalisation of responses and limited reliance on artificial intelligence (Leong and Sung 2024). Furthermore, research in the area of artificial intelligence has revealed that chatbots reproduce algorithmic biases, producing generic or inappropriate responses to specific cases of gender-based violence (Guevara-Gómez 2023). These deficiencies, in both the psychological and legal fields, affect women in extremely vulnerable circumstances as well as women who have better access to information and resources and need accurate and reliable solutions (ITU 2023). Therefore, the main objective of the study is to evaluate the influence of chatbots on the empowerment of women victims of gender-based violence through psychological and legal assistance. The specific objectives are: (i) to examine the accessibility and trustworthiness of chatbots designed to assist women in situations of gender-based violence; (ii) to identify the technical and social challenges in implementing chatbots for psychological and legal assistance; (iii) to analyse the effectiveness of chatbots in reducing barriers to reporting and accessing support services; and (iv) to explore improvement strategies for the optimisation of chatbots with a gender perspective.

The findings of this research provide empirical evidence on the functionality of chatbots in assisting women victims of gender-based violence, allowing for their integration into regulatory frameworks and institutional support strategies to be strengthened. In addition, relevant information is presented to optimise their design and application, guaranteeing their accessibility, security and effectiveness with the sole purpose of counteracting this problem. This article provides a mixed methods analysis of chatbot use among affected women, identifying the digital, psychological and institutional factors that determine their perceived usefulness and empowerment outcomes. Despite these advances, little empirical research has been conducted on the actual impact of AI-based chatbots on women’s empowerment in Latin American contexts, especially from a mixed-methods perspective (Locke and Hodgdon 2024). This study addresses that gap by integrating quantitative and qualitative evidence.

Contextualising Artificial Intelligence, Chatbots and Gender-Based Violence

In recent years, artificial intelligence (AI) has evolved from a futuristic technology to a resource applied in key sectors such as health, education and security (Parry et al. 2024). In particular, its implementation in sensitive social contexts has raised expectations about its ability to offer innovative solutions to structural problems, such as gender-based violence. The anonymity offered by these platforms and their 24/7 availability represent determining factors for many women who face barriers such as fear of the aggressor, social stigma or institutional distrust. Moreover, these digital solutions reduce waiting times and decentralise access to resources, which is especially relevant in territories where face-to-face services are limited or non-existent (Nouraldeen 2023). However, although their potential has been recognised, limitations ranging from the technical to the ethical have also been highlighted. On the one hand, the gender digital divide continues to disproportionately affect women in rural or less affluent areas. Lack of access to mobile devices, stable internet connection or basic digital skills restricts the effective use of these systems (Al-Ayed and Al-Tit 2024; Møgelvang et al. 2024). Added to this is the algorithmic bias present in artificial intelligence models trained with unrepresentative data, which generates standardized responses that do not consider the cultural, emotional, or linguistic dimensions of each user (Isaksson 2024; Lütz 2022). Ahn et al. (2022) demonstrated that automated recommendations conditioned by gender stereotypes distort users’ decision making. Chauhan and Kaur (2022) pointed out that algorithmic discrimination constitutes a direct threat to the protection of human rights in digital environments. In Latin America, Avella et al. (2023) showed that predictive artificial intelligence reproduces structural inequalities and compromises the safety of women who access these systems in search of guidance. 
Algorithmic bias is not merely a technical limitation but a factor that intensifies social inequalities and erodes trust in technologies that should guarantee protection and support for vulnerable populations. Furthermore, the presence of biases in models limits the ability of chatbots to provide reliable care in psychological support and legal advice processes, reducing their effectiveness in addressing gender-based violence. When systems lack inclusive design, automated responses tend to reproduce discriminatory patterns that discourage victims from seeking help and weaken the ability of artificial intelligence to establish itself as a tool for social justice.

On the other hand, the absence of clear regulatory frameworks on the use of artificial intelligence in the social sphere poses risks in terms of personal data protection, institutional accountability and transparency of automated processes. Authors such as Ahn et al. (2022) and Guevara-Gómez (2023) warn that, without specific regulations, the implementation of these technologies could perpetuate inequalities or violate fundamental rights, especially in highly vulnerable contexts.

At the international level, some pilot experiences in countries such as Spain, Canada and South Korea have shown that the integration of AI in victim services can be effective if it is articulated with solid institutional networks and permanent monitoring mechanisms (Joseph et al. 2024; Marinucci et al. 2023). However, in regions such as Latin America, these initiatives face regulatory obstacles, lack of budget and institutional resistance that limit their scalability and sustainability.

In this context, it is necessary to develop research that examines not only the technical effectiveness of chatbots but also their real impact on women’s empowerment, their capacity to build trust and their alignment with gender justice principles. This study therefore aims to critically analyse the use, benefits and limitations of chatbots as a support mechanism in situations of gender-based violence, integrating recent evidence and considering the social, normative and technological factors that condition their implementation. Given the complexity of the phenomenon and the challenges associated with the implementation of artificial intelligence-based solutions, it is essential to understand how these tools are being used by women in different contexts. Only through a critical analysis, which considers structural, technological and cultural conditions, is it possible to assess the true scope of chatbots as support devices in situations of gender-based violence.

The need for this study arises from the lack of empirical evidence on the real effect of AI-based chatbots on the empowerment of women victims of gender-based violence in Latin American contexts. While some research has explored their use as guidance tools (Locke and Hodgdon 2024; Jomaa et al. 2024), most do not incorporate a mixed methodological approach or examine structural barriers, such as technological mistrust or the digital divide. This lack of critical analysis of their actual usefulness and effective integration into institutional support systems highlights a significant gap in the literature. In this context, the present study offers evidence based on a mixed approach that allows us to assess how digital accessibility, trust in technology, and frequency of chatbot use relate to user empowerment. The results aim to guide improvements in the design and implementation of these tools within public policies and institutions that address gender-based violence.

2. Literature Review

2.1. Chatbots and Their Application to Gender-Based Violence

Artificial intelligence (AI) has redefined the way assistance is provided to women victims of gender-based violence, especially through the use of chatbots. These tools allow immediate, confidential and constant access to critical information, overcoming time and geographic barriers, as evidenced in countries such as South Korea, Canada and the UK (Locke and Hodgdon 2024; Jomaa et al. 2024). Systems such as Violeta, Sofia and BrightSky have been used to inform victims of their rights, refer them to reporting mechanisms and direct them to legal and psychological support networks (Nouraldeen 2023).

Kim and Kwon (2024) revealed that female users valued the anonymity offered by chatbots as a key factor in initiating interaction with these tools.

Similarly, Schiller and McMahon (2019) documented that, in contexts such as the US and Germany, users identified these platforms as a safer space to express their experiences without fear of social judgement. These data show that, beyond the technological aspect, chatbots are perceived as initial emotional safeguarding tools (Bonamigo et al. 2022).

2.2. Technological Limitations and Social Barriers

Despite technological advances, the application of chatbots in sensitive contexts faces considerable limitations. Most of these systems have been trained with standardised databases that do not take into account the cultural, emotional and legal realities of each user, which reduces the relevance of the answers provided (Isaksson 2024; Lütz 2022). This deficit has been reported in studies in indigenous communities in Brazil and in rural regions of Africa, where it was found that automated responses lacked connection to women’s life contexts (Brown 2022; Huang et al. 2021).

This is compounded by the persistent digital gender divide. Research in Egypt, Nigeria and Peru highlights that 45% of a sample of 200 women do not have adequate devices or a stable internet connection, preventing them from interacting with these systems (Al-Ayed and Al-Tit 2024; Møgelvang et al. 2024). Furthermore, Dorta-González et al. (2024) noted that 39% of the 150 female users analysed abandoned chatbot use due to difficulties in understanding instructions, directly associated with low levels of digital literacy. These figures reveal that, although the tool exists, its use is still limited by structural and educational factors.

2.3. Ethical Dilemmas and Lack of International Regulation

The use of AI to address gender-based violence poses significant ethical and legal challenges. Although some countries, such as Spain and South Korea, have promoted partial regulations, there is no global framework to regulate data privacy or the obligations of automated platforms in the event of errors or inappropriate responses (Ahn et al. 2022; Hajibabaei et al. 2022). Recent studies indicate that more than 50% of users—out of 320 respondents—are unclear about who accesses their information and how it is used, leading to mistrust in the use of these tools (Sutko 2020).

In Brazil and Colombia, it has been shown that the algorithms used in artificial intelligence systems reproduce gender biases that reinforce stereotypes or generate erroneous responses, compromising the safety of victims (Guevara-Gómez 2023; Avella et al. 2023). Guevara-Gómez (2023) examined national artificial intelligence strategies in Latin America and showed that, lacking a gender perspective, the systems process data under parameters presented as neutral, which renders historical inequalities invisible and perpetuates discrimination against women. This lack of perspective allowed algorithms to normalize cultural and linguistic patterns that perpetuate asymmetrical power relations, coinciding with the findings of Chauhan and Kaur (2022), who identified that programming artificial intelligence without a gender perspective favors the reproduction of structural biases.

Avella et al. (2023) analyzed the application of predictive artificial intelligence in legal and protection services in Colombia and Brazil and documented that the systems issued responses that reduced the perception of risk in reported situations. The algorithms, based on statistical correlations, minimized the severity of episodes of violence, producing messages that did not reflect the real urgency of each case. This result coincides with the findings of Ahn et al. (2022), who identified that algorithmic recommendations conditioned by stereotypes limit users’ capacity for action, and with the study by Locke and Hodgdon (2024), who observed that generative systems reproduce discriminatory images and patterns that reinforce exclusion.

Complementarily, Marinucci et al. (2023) showed that implicit biases in artificial intelligence not only reinforce gender stereotypes but also directly affect the safety of those who interact with these tools. Borges and Filó (2021) demonstrated that this situation occurs even on global platforms such as Amazon, where the algorithmic architecture maintains discriminatory practices under the guise of technological neutrality. UNESCO (2023) and the International Telecommunication Union (ITU 2023) have documented that these types of limitations are observed in multiple regions, which explains why, in countries such as Brazil and Colombia, digital systems applied to gender-based violence response do not always guarantee safe environments for users. This evidence reinforces the need for rigorous ethical oversight and international guidelines to audit the behaviour and consequences of automated intervention in highly vulnerable contexts (Yim 2024; Young et al. 2023).

2.4. Proposals for Improvement in System Design and Integration

Several authors have proposed solutions that could improve the effectiveness of chatbots in the care of victims of gender-based violence. These include the design of adaptive algorithms capable of contextualising responses according to the profile and cultural environment of each user (Noriega 2020; Lee et al. 2024). This improvement would allow for more empathetic care, adjusted to the reality of each person, and not based on impersonal patterns.

In Spain and Canada, pilot experiences have been developed where chatbots are integrated into institutional support networks, facilitating the direct referral of cases to specialised centres according to the type of violence reported (Marinucci et al. 2023; Joseph et al. 2024). Work is also underway to create filters and sensors that identify gender bias in real time, adjusting the content of the responses generated to avoid reproducing stereotypes (Manasi et al. 2022). These proposals offer a clear path towards the development of more reliable, inclusive and ethically accountable systems capable of playing a relevant role in the protection of women’s rights.

Overall, the current literature recognises the value of chatbots as support tools in cases of gender-based violence but also shows profound limitations in terms of their personalisation, access, regulation and gender focus. Despite specific experiences in some countries, there is still little empirical evidence that analyses their real impact on the empowerment of users from a comprehensive approach. This reinforces the need for research such as this one, which combines quantitative and qualitative analyses to understand how these technologies operate in different social contexts and what factors influence their effectiveness.

2.5. Degree of Exploration of the Topic and Positioning of the Study

Various studies have documented the application of chatbots in responding to cases of gender-based violence, especially as initial guidance tools. Examples include Violeta in Latin America (Nouraldeen 2023), Sofía in Colombia and Mexico (Kelley et al. 2022) and BrightSky in the United Kingdom and Australia (World Health Organization 2023), which have been analysed in terms of their design, number of interactions and dissemination channels. However, most of this research is limited to functional descriptions or preliminary explorations, without delving into the specific effects on women’s digital empowerment or considering how these tools influence their access to psychological or legal support. Furthermore, there are few studies that integrate quantitative and qualitative approaches to capture both usage patterns and users’ subjective perceptions, especially in regions with high digital inequality such as Latin America. In this context, the present study stands out as a novel contribution by applying a mixed approach that segments user profiles according to their level of access, confidence and frequency of use while incorporating testimonials that provide insight into how women perceive and experience these technologies as mechanisms of support and autonomy.

2.6. Research Hypotheses

Based on the general objective and the specific objectives of the study, the following hypotheses are proposed:

General hypothesis:

H0: The use of artificial intelligence-based chatbots does not significantly influence the empowerment of women victims of gender-based violence in terms of psychological and legal assistance.

H1: The use of artificial intelligence-based chatbots significantly influences the empowerment of women victims of gender-based violence in terms of psychological and legal assistance.

Specific hypotheses:

HE1: The greater the digital accessibility and trust in artificial intelligence, the more likely women are to use chatbots for psychological and legal support.

HE2: Women in rural areas have significantly less access to and trust in chatbots than women in urban areas.

HE3: Chatbots with a higher level of personalisation are perceived as more effective in supporting victims of gender-based violence.

HE4: Women who use chatbots more frequently develop higher levels of digital empowerment in seeking psychological and legal support in the face of gender-based violence.

3. Materials and Methods

3.1. Strategy and Design

This study adopted a mixed-methods approach, combining qualitative and quantitative strategies to analyse the impact of artificial intelligence chatbots on the empowerment of women victims of gender-based violence, with particular emphasis on psychological and legal support (Møgelvang et al. 2024; Chauhan and Kaur 2022). The combination of quantitative and qualitative methods allowed for a comprehensive analysis of both the measurable frequency and types of chatbot use and the subjective experiences of empowerment, trust and institutional perception among women victims of gender-based violence. The research was correlational-exploratory in nature, which allowed the identification of associations between digital accessibility and users’ autonomy in accessing chatbot platforms, providing a comparative analysis across differing technological contexts (Parry et al. 2024; Borges and Filó 2021).

Although the introduction and literature review refer to the Violeta, Sofia and BrightSky chatbots as successful international models, this study did not limit the analysis to these platforms. Participants were included based on their use or knowledge of any AI-based chatbot offering psychological and legal assistance in cases of gender-based violence. In the Peruvian context, various platforms were identified that participants used to obtain automated guidance in situations of gender-based violence. These include the virtual chat service of Line 100 of the Ministry of Women and Vulnerable Populations (MIMP), the Facebook bot of the Aurora Programme, and the automated assistant of the Judiciary linked to applications such as SISMED. Users also reported using Amauta Pro, a locally developed artificial intelligence-based tool that offers general legal advice. These platforms formed the basis for selecting participants who had effectively interacted with virtual assistants with informational or referral capabilities, focused on psychological or legal support (Nwafor 2024).

A non-experimental cross-sectional design was used, whereby data were collected at a single point in time without manipulating the variables, in accordance with the methodological criteria suggested by Piva (2024). The target population consisted of Peruvian women over the age of 18 who had used virtual assistance platforms in response to situations of gender-based violence in the last two years. Based on institutional reports and preliminary estimates, an accessible population of approximately 3800 women was considered. Stratified probability sampling was applied, taking into account geographical distribution (urban/rural) and level of access to technological infrastructure, a key dimension highlighted in previous studies (Dorta-González et al. 2024).

The sample size of 1000 participants was defined taking into account the need to ensure results with an adequate level of reliability and validity for multivariate statistical analyses. This figure allows for a reduced sampling error, in addition to exceeding the sizes used in previous research on digital technologies and gender-based violence in the region, which usually range between 300 and 600 cases. Furthermore, reaching this number made it possible to complement the qualitative phase, ensuring theoretical saturation in the categories analyzed and reinforcing the robustness of the findings.
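As a rough check on the sampling error implied by these figures, the sketch below computes the margin of error for a proportion under simple random sampling with a finite population correction, using the values stated above (n = 1000, accessible population of approximately 3800). The 95% confidence level (z = 1.96), the worst-case proportion p = 0.5, and the function name are assumptions made for illustration; they are not parameters reported by the study, and the formula does not account for the stratified and purposive elements of the actual design.

```python
import math

def margin_of_error(n, population, p=0.5, z=1.96):
    """Margin of error for a proportion under simple random sampling,
    with a finite population correction (FPC)."""
    standard_error = math.sqrt(p * (1 - p) / n)
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc

# Figures from the text: 1000 respondents, accessible population of about 3800.
# p = 0.5 and z = 1.96 are illustrative assumptions, not reported values.
moe = margin_of_error(n=1000, population=3800)
print(f"Approximate margin of error: {moe:.2%}")
```

Under these assumptions the margin of error is roughly 2.7 percentage points, which is consistent with the description of a reduced sampling error.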

3.2. Participants

The final sample consisted of 1000 Peruvian women over the age of 18, selected through a purposive sampling process aimed at identifying actual users of automated support platforms in cases of gender-based violence. To this end, a digital call for participants was disseminated through social media, women’s support groups, institutional websites of the MIMP, and NGOs that promote access to rights. The first part of the questionnaire included a mandatory filter that allowed only those who declared that they had used platforms such as the MIMP’s Línea 100 virtual chat, the Aurora Programme’s Facebook bot, the SISMED automated assistant of the Judiciary, or the Amauta Pro tool at least once in the last two years to continue. The strategy combined stratified sampling and intentional criteria within each stratum. Proportional quotas were established for urban and rural areas, as well as for levels of technological connectivity, which ensured diversity and representativeness of the contexts in which chatbots operate. The purposive nature of the sampling allowed us to focus on women who had actually used these tools, ensuring the relevance and significance of the information collected. This methodological combination was considered the most appropriate, given that pure random sampling would have been unfeasible due to the difficulty of identifying users in a formal population setting.

This eligibility condition was validated by a closed question and an open verification question that asked respondents to briefly describe their experience of using the platform.

Before the final application, a pilot test was conducted with 35 women who had previously interacted with chatbot-based platforms, with the aim of evaluating the clarity, comprehensibility, and response time of the items. Based on their observations, adjustments were made to the wording of certain questions, improving their accuracy and relevance. The final questionnaire was structured into five thematic blocks: (i) digital accessibility and trust in technology (items 1–5), (ii) use and frequency of chatbots (items 6–10), (iii) perception of psychological support (items 11–15), (iv) perception of legal support (items 16–20), and (v) digital empowerment (items 21–25). Content validity was ensured by a panel of three specialists in gender studies, psychology, and law, who verified the consistency and relevance of the items with respect to the study objectives. Finally, the internal reliability of the instrument was assessed using McDonald’s Omega coefficient (ω), which reached a value of 0.89, reflecting high consistency and confirming the methodological robustness of the questionnaire used (Rodriguez-Saavedra et al. 2025a).

3.3. Instruments

For the qualitative approach, a semi-structured interview guide was used, consisting of 7 categories, 14 subcategories, 35 key indicators, and 14 questions. Table 1 summarizes the categories, subcategories, qualitative indicators, and guiding questions used during data collection.

Table 1. Categories, subcategories, indicators, and semi-structured questions.

The qualitative analysis followed a thematic approach. Two team members conducted an initial double reading to align coding criteria and refine the code book; subsequently, the entire corpus was coded in Atlas.ti, with code auditing to resolve discrepancies. Saturation was achieved when no new codes emerged in the final cases. All identifiers were anonymized, and the audio, transcripts, and matrices were stored on encrypted servers with restricted access.

The quantitative component was based on a structured questionnaire composed of 25 items divided into three dimensions: digital accessibility, trust in technology, and self-perceived empowerment. The instrument was validated through expert judgment (5 reviewers) and yielded an acceptable internal consistency score (Cronbach’s alpha = 0.84). Digital empowerment was operationalised through questionnaires assessing women’s autonomy in decision making, confidence in AI-guided assistance and the frequency with which they sought independent legal or psychological help through chatbot platforms. The records and transcripts were stored on encrypted, password-protected servers and were accessible only to the research team. This safeguarding was done in accordance with institutional regulations on the ethical handling of sensitive information, ensuring the protection of the identity and privacy of all participants.
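The internal consistency coefficient mentioned above (Cronbach’s alpha) is computed from an item-score matrix as k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores, where k is the number of items. The following is a minimal sketch in Python, offered for illustration only: the function name and the toy responses are hypothetical and are neither the study’s data nor the authors’ code.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])                       # number of items
    items = list(zip(*rows))               # transpose: one tuple of scores per item
    item_variances = sum(variance(col) for col in items)
    total_variance = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - item_variances / total_variance)

# Hypothetical 5-point Likert responses (4 respondents x 3 items), not study data.
toy = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 1],
]
alpha = cronbach_alpha(toy)
print(round(alpha, 3))
```

Sample variances (n − 1 denominator) are used throughout; the ratio is unchanged if population variances are used consistently for both the items and the totals.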

3.4. Ethics and Procedure

All ethical principles were respected. Before participating, the women answered a question to confirm that they met the criteria: being over 18 years of age and having used a chatbot for legal or psychological purposes in cases of gender-based violence. They then signed a digital consent form guaranteeing their anonymity, confidentiality, and the right to withdraw from the study without consequences. The interviews and surveys were conducted virtually and securely. The data was protected with passwords, and only the research team had access, following institutional ethical standards for handling sensitive information (Rodríguez-Saavedra et al. 2024).

3.5. Analysis

The quantitative analysis was carried out by three researchers specializing in statistical methods, who processed the data in RStudio (R version 4.3.2) using Multiple Correspondence Analysis (MCA) and k-means clustering to classify users according to their level of empowerment, frequency of use, and perception of technological limitations. This procedure included the calculation of coefficients of determination (R²), significance levels (p-values), and the contribution of individual variables to the multivariate structure (Kovacova et al. 2019). MCA was selected for its ability to analyze categorical data and reduce dimensionality in complex sets, while k-means clustering allowed for the formation of meaningful groups based on response similarities, facilitating the identification of behavioral and perceptual profiles. This strategy is consistent with methodologies applied in studies on digital tools and support systems for gender-based violence (Isaksson 2024; Guevara-Gómez 2023), which strengthened the interpretive validity of the results.
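The clustering step can be sketched as follows. This is a minimal Python version of the standard k-means procedure (Lloyd's algorithm) applied to two-dimensional coordinates of the kind an MCA produces. The study itself was run in R, and the coordinates, starting centroids, and function name here are hypothetical; the source does not report how the algorithm was initialized.

```python
from math import dist


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm on tuples of equal length, with given starting centroids."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(len(centroids)), key=lambda j: dist(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: each centroid moves to the mean of its assigned points.
        for j in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


# Hypothetical respondent coordinates on MCA dimensions 1 and 2.
coords = [(-1.0, -0.9), (-0.8, -1.1), (0.0, 0.1), (0.1, -0.1), (1.0, 0.9), (1.1, 1.0)]
labels, centers = kmeans(coords, centroids=[coords[0], coords[2], coords[4]])
print(labels)
```

Because k-means depends on its starting centroids, analyses of this kind are usually repeated from several random starts and the solution with the lowest within-cluster variance is retained.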

The qualitative analysis was conducted by two researchers with experience in gender studies and social sciences, who used Atlas.ti to code and categorize the participants’ testimonies. Through open and axial coding, narrative patterns related to trust in technology, connectivity difficulties, emotional limitations in seeking help, and the perception of support received through chatbots were identified (Rodriguez-Saavedra et al. 2025b). The narratives revealed how users in rural areas associated technology with mistrust and barriers to access, while urban participants highlighted the usefulness of chatbots as complementary support in legal and psychological decision making. Analysis of the interviews also revealed generational differences, with younger women expressing greater familiarity and digital autonomy, while older women showed reluctance due to previous experiences of technological exclusion.

Methodological integration was developed through a triangulation strategy, in which qualitative findings were compared with the profiles obtained in the statistical analysis. Cross-validation allowed for the examination of similarities and differences between the two levels of information (Marinucci et al. 2023), which added robustness to the study and allowed the patterns identified to be supported by both statistical results and participant testimonies.

3.6. Integration

A mixed triangulation strategy was adopted to ensure coherence, consistency, and depth in the interpretation of the results. This strategy was selected for its ability to cross-reference findings from different methods, reinforcing analytical robustness. Emerging themes from the qualitative analysis such as socio-technological barriers, emotional trust, and perceived accessibility were compared with the profiles generated in the quantitative analysis. This integration allowed for the validation of similarities between the two approaches and the explanation of differences, ensuring that interpretations were supported by both data sources (Rodríguez-Saavedra et al. 2024). The strategy contributed to a more robust analysis of the relationship between technological access and empowerment outcomes among women users of artificial intelligence chatbots, generating findings that would not have been possible with a single approach.

4. Results

The qualitative and quantitative findings are presented below, highlighting technological barriers, trust in AI and the segmentation of users according to their level of empowerment and digital access.

4.1. Qualitative Analysis

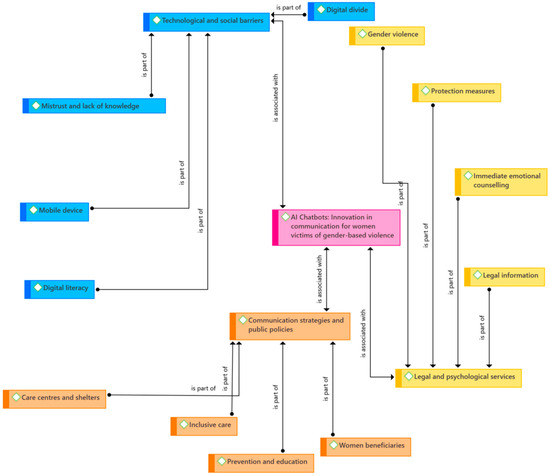

The qualitative analysis conducted with Atlas.ti allowed us to map relationships between access conditions and trust, technological and social barriers, and the chatbot’s articulation with legal and psychological services. Figure 1 summarizes this relational map and aligns with the objectives set out in the study, as it organizes the path from barriers to entry to support and referral routes. In this way, it establishes the framework for interpreting the findings and guiding the analytical decisions presented in the results.

Figure 1.

Integration of AI chatbots with legal, psychological, and institutional support services for women.

Figure 1 shows that the digital divide and technological ignorance limit access to chatbots, affecting the autonomy of women victims of gender-based violence. In addition, both the women who were familiar with these platforms and those who were not stated that greater knowledge of how the platforms work and of the assistance they offer would make them more accessible. These results correspond to the first specific objective, as they demonstrate how accessibility and trust in artificial intelligence influence the initial use of chatbots by women affected by gender-based violence.

Table 2, entitled “Matrix of categories, subcategories, and indicators,” was used to operationalize accessibility and trust in chatbots in the qualitative analysis. The topics are organized into barriers and facilitators, and observable descriptors were defined to enable consistent coding. The matrix is also aligned with the specific objectives of the study: (i) to examine accessibility and trust in chatbots; (ii) to identify the technical and social challenges of their implementation for psychological and legal assistance. The “Coverage” column reports the percentage of participants, out of a thousand interviews, who mentioned each topic at least once; the same case was counted in several categories when it mentioned more than one topic.

Table 2.

Coverage, subcodes, and microquotes by category.

The coverage, calculated on the basis of 1000 semi-structured interviews, shows that access and connectivity (41.2%), privacy and security (37.2%), and flow of interaction (29.5%) are the main barriers and account for most dropouts and interruptions. In contrast, personalization and empathy (33.8%) together with guidance and effectiveness (32.1%) act as facilitators when there is continuity of the case, respectful treatment, and clear pathways. Likewise, legal referral and follow-up (27.0%) operate as a bridge to transform guidance into action with traceable human support. For their part, contextual barriers (24.8%) reveal fragmented trajectories due to time and space constraints and digital skills gaps, which justify specific and flexible support. Consequently, empowerment is activated when stable and secure connection, understandable and recoverable steps, and access to traceable human referral converge, allowing information to be converted into action without increasing risk and reducing abandonment attributable to procedural confusion.
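A coverage percentage of this kind can be sketched in a few lines of Python, assuming each interview is represented as the set of category codes assigned to it. The interview data and category labels below are hypothetical, and the function name is illustrative.

```python
def coverage(interviews, categories):
    """Percentage of interviews that mention each category at least once."""
    n = len(interviews)
    if n == 0:
        raise ValueError("at least one interview is required")
    return {
        c: 100.0 * sum(1 for codes in interviews if c in codes) / n
        for c in categories
    }


# Hypothetical coded interviews: each set holds the categories found in one case.
coded = [
    {"access", "privacy"},
    {"access"},
    {"privacy", "flow"},
    set(),  # an interview with none of the listed categories
]
print(coverage(coded, ["access", "privacy", "flow"]))
```

Because one interview can fall under several categories, the percentages are not mutually exclusive and need not sum to 100.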

Table 3 organizes the participants’ accounts and frequent textual keywords by category, linking them to the types of psychological and legal support. In line with specific objectives (iii), to analyze the effectiveness of chatbots in reducing barriers to reporting and accessing services, and (iv), to explore improvement strategies to optimize their design with a gender focus, the table translates narrative patterns into operational implications, specifies actionable responses for each situation, and guides decision making on accompaniment and referral. In this way, it focuses the reading on the factors that enable or interrupt empowerment and offers direct criteria for the functional and institutional adjustment of chatbots.

Table 3.

Narrative summary by category and types of psychological and legal support.

Based on these tables, representative excerpts are presented with their interpretive analysis, aligned with the specific objectives, in order to explain how each category affects use, continuity, and the search for help.

Access and connectivity.

Quote: “It cut out, so I came back later.”

Analysis: The evidence indicates that continuity of use depends on signal stability and available time. Consistent with specific objective i on accessibility and trust, the pattern describes how connectivity conditions the initiation and resumption of interaction; therefore, the analysis explains the episodes of abandonment and intermittent returns observed in the testimonies. Likewise, having re-entry routes and asynchronous reminders reduces loss of thread and promotes continuity.

Interaction flow.

Quote: “I didn’t know what to do next.”

Analysis: The expression reveals procedural burden and disorientation in the sequence of steps. In line with specific objective i, the finding shows that the usability of the flow determines the ability to continue without loss of context; thus, it clarifies why insufficient guidance is linked to delays in decision making. In addition, the presence of visible cues and back options reduces cognitive load and improves navigation.

Privacy and security.

Quote: “I use it when he’s not around and delete the chat quickly.”

Analysis: Covert use and deletion of traces indicate active risk management on the device. Consistent with specific objective ii on technical and social challenges, the pattern shows that the perception of vulnerability limits interaction time and defines safe windows of use; as a result, moments and places of low exposure are prioritized. Likewise, discreet protection mechanisms expand the margin of use without increasing the perceived risk.

Personalization and empathy.

Quote: “He remembered my case and used my name.”

Analysis: Case memory and validating treatment strengthen trust and adherence. Consistent with specific objective iii on effectiveness and barrier reduction, the pattern explains return and continuity when there is recognition of context and respectful language; thus, interaction is sustained over time. Hence, recognition of background and a non-revictimizing tone act as triggers for adherence.

Guidance and effectiveness.

Quote: “I don’t understand the requirements; I need exact directions.”

Analysis: The precision of routes and requirements reduces uncertainty and enables timely decisions. In line with specific objective iii, the pattern shows that structuring viable steps facilitates the transition from consultation to the execution of concrete actions, thus accelerating the conversion of information into action. At the same time, verification points and short lists limit ambiguities and speed up procedures.

Legal referral and follow-up.

Quote: “I want them to call me to confirm how to file the complaint.”

Analysis: The demand for traceable human contact transforms guidance into formal action. In line with specific objectives iii and iv relating to effectiveness and improvements in design and integration, the pattern highlights the importance of support in sustaining the process; consequently, the transition from initial guidance to concrete steps is enabled and sustained over time. In this vein, the existence of an identifiable reference point reduces uncertainty and sustains continuity.

Contextual barriers.

Quote: “I can only use it at certain times and I don’t have a private place.”

Analysis: Restrictions on time, space, and digital skills fragment the usage trajectory. Consistent with specific objective ii, the pattern shows that contextual conditions determine the pace and depth of interaction; moreover, they force critical steps to be deferred until conditions are safe. In addition, digital skill gaps introduce micro-interruptions that require flexible support and simple alternatives.

Within the framework of the article’s overall message, Table 2 and Table 3 serve complementary functions: Table 2 establishes the analytical architecture and relative magnitude of each topic, identifying where barriers are concentrated and which factors act as facilitators; Table 3 translates these patterns into operational decisions for psychological and legal support, specifying which interventions are relevant in each situation. Together, they outline the mechanism by which accessibility, trust, and human-centered coordination shape the digital empowerment of participants and support the design and integration priorities that are developed in the discussion and consolidated in the conclusions.

4.2. Quantitative Analysis

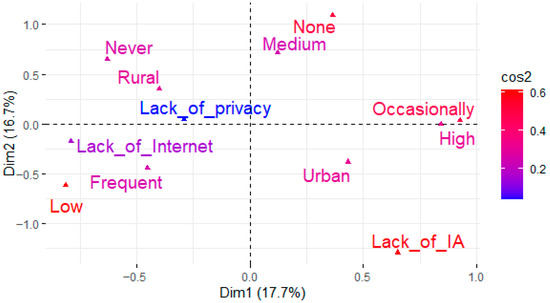

The statistical analysis showed significant differences in the adoption of chatbots according to the profile of the users. Using Multiple Correspondence Analysis (MCA) and clustering in R, differentiated groups were identified according to their level of empowerment, frequency of use and perception of digital accessibility.

Figure 2 confirms that lack of access to artificial intelligence (AI) and low connectivity are the main barriers to the use of chatbots to assist women victims of gender-based violence. Dimension 1 (17.7%) shows that lack of access to AI and low frequency of use influence adoption, while Dimension 2 (16.7%) highlights that lack of privacy and limited internet access affect trust in these tools. Urban users show greater interaction, while rural users show reduced use, which reaffirms the relationship between technological accessibility and digital empowerment.

Figure 2.

Multiple Correspondence Analysis (MCA).

Table 4 shows that Dimensions 1 and 2 together explain 34.35% of the total variance, making them the most representative. Dimension 1 (17.65%) is associated with technological barriers and lack of access to AI, while Dimension 2 (16.69%) reflects privacy and internet access. In addition, the survey results revealed that 68% of respondents who described chatbot responses as ‘personalised and relevant to their specific situation’ considered them ‘very effective’ for psychological or legal help. In contrast, only 21% of those who described the chatbot interaction as ‘generic’ or ‘impersonal’ rated it as effective. These figures empirically support hypothesis 3, according to which the degree of personalisation of chatbot responses directly influences their perceived effectiveness. Participants highlighted that adaptive and tailored communication contributed to greater trust and autonomy. The progressive reduction in variance in the following dimensions confirms that these factors are key in the use of chatbots for the empowerment of women victims of gender-based violence.

Table 4.

Eigenvalues, percentage of variance, and cumulative percentage of variance.
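The three columns of a table like this are linked by simple arithmetic: each dimension's percentage is its eigenvalue divided by the sum of all eigenvalues, and the cumulative column is the running total. The Python sketch below illustrates this with hypothetical eigenvalues; it assumes the full set of eigenvalues is supplied, since a truncated list would inflate every percentage.

```python
def variance_table(eigenvalues):
    """Return (dimension, eigenvalue, % of variance, cumulative %) for each dimension."""
    total = sum(eigenvalues)
    if total <= 0:
        raise ValueError("eigenvalues must sum to a positive number")
    rows = []
    cumulative = 0.0
    for dim, ev in enumerate(eigenvalues, start=1):
        pct = 100.0 * ev / total
        cumulative += pct
        rows.append((dim, ev, pct, cumulative))
    return rows


# Hypothetical eigenvalues, not the values reported in the study.
for dim, ev, pct, cum in variance_table([0.30, 0.25, 0.20, 0.15, 0.10]):
    print(f"Dim {dim}: eigenvalue={ev:.2f}  variance={pct:.1f}%  cumulative={cum:.1f}%")
```

In MCA the variance is typically spread over many dimensions, so modest percentages on the first two axes are common and do not by themselves indicate a weak solution.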

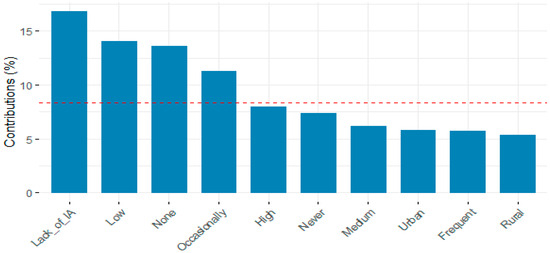

Figure 3 confirms the contribution of variables to the MCA map; Dimension 1 accounts for 17.7% of the variance and Dimension 2 for 16.7%. Lack_of_AI is the variable with the highest representation and contribution in dimensions 1 and 2, followed by indicators of low or unstable connectivity such as Lack_of_Internet and low access. In contrast, frequency of use and urban and rural geographical context contribute less in this regard. Thus, the map shows that technological barriers weigh more heavily than frequency or residence in the observed configuration.

Figure 3.

Contribution of dimension 1–2 variables.

Figure 3 shows that the variables that exceed the reference line concentrate the greatest explanatory power of the map, led by lack of AI and followed by low access, absence of access, and occasional use, thus functioning as discriminating axes associated with technological barriers and weak appropriation. Below the threshold are high frequency of use and urban and rural contexts with marginal contributions, indicating that the variability of the factorial space is organized more by technological deficiencies and limited levels of adoption than by place of residence or intensity of use; thus, the reading prioritizes interventions on digital literacy and connectivity.
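The logic of reading contributions against a reference line can be sketched in Python. The sketch assumes that the line marks the contribution expected if every category contributed equally (100 divided by the number of categories), which is a common convention in MCA contribution plots; the source does not state how its line was defined. The contribution values are hypothetical, and the category labels other than Lack_of_AI and Lack_of_Internet are illustrative.

```python
def flag_contributors(contributions):
    """Split categories by whether they exceed the uniform-contribution benchmark.

    contributions: dict mapping category label -> percentage contribution.
    Returns the benchmark and the categories above it, largest first.
    """
    if not contributions:
        raise ValueError("at least one category is required")
    threshold = 100.0 / len(contributions)  # assumed meaning of the reference line
    above = sorted(
        (c for c, v in contributions.items() if v > threshold),
        key=lambda c: -contributions[c],
    )
    return threshold, above


# Hypothetical contributions (in %), chosen only to illustrate the reading.
example = {
    "Lack_of_AI": 30.0,
    "Lack_of_Internet": 22.0,
    "Low_access": 18.0,
    "Occasional_use": 15.0,
    "High_frequency": 7.0,
    "Urban": 4.0,
    "Rural": 4.0,
}
threshold, above = flag_contributors(example)
print(round(threshold, 2), above)
```

Categories above the benchmark shape the axes more than an average category would, which is why they are treated as the discriminating variables of the map.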

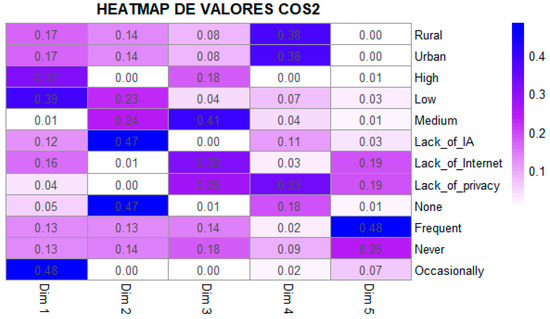

Figure 4 shows that women in urban and rural areas have a decisive influence on the first dimension, demonstrating that place of residence directly influences the use of chatbots. Difficulties such as lack of access to the internet and artificial intelligence are closely linked to the second and third dimensions, showing that these technological barriers significantly limit access to psychological and legal assistance. Likewise, those who use chatbots more frequently are strongly represented in the fifth dimension, confirming that trust in these systems is strengthened with consistent use, thus facilitating their role in counselling and support for victims of gender-based violence. These findings are aligned with the second specific objective, as they highlight key technical and social barriers such as lack of connectivity, digital illiteracy, and limited algorithmic performance that hinder the implementation of chatbots in legal and psychological support contexts.

Figure 4.

Cos2 matrix in the multiple correspondence analysis.
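For readers unfamiliar with the cos2 measure, the Python sketch below shows how it is obtained: for each category, the squared coordinate on a dimension is divided by the sum of its squared coordinates over all dimensions, giving the share of that category's position captured by each axis. The coordinates are hypothetical, and the calculation assumes that coordinates on every dimension of the solution are supplied.

```python
def cos2(coordinates):
    """Quality of representation of one category on each dimension.

    coordinates: the category's coordinates on all dimensions of the solution.
    """
    total = sum(x * x for x in coordinates)
    if total == 0:
        raise ValueError("a category at the origin has no defined cos2")
    return [x * x / total for x in coordinates]


# Hypothetical coordinates of one category on five dimensions.
example_cos2 = cos2([0.9, -0.3, 0.2, 0.1, 0.05])
print([round(v, 3) for v in example_cos2])
```

The values for a category sum to 1 across dimensions, so a high cos2 on one dimension means the category is well described by that axis and poorly by the others.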

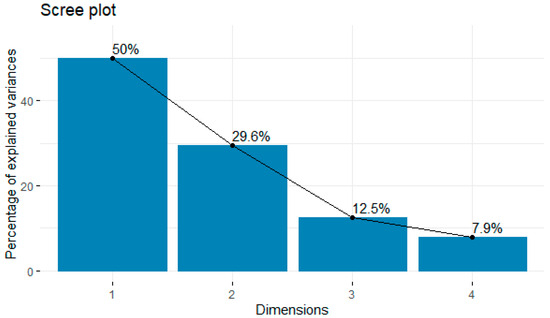

Figure 5 shows that the first two dimensions explain 79.6% of the total variance, indicating that the main factors influencing the use of chatbots for psychological and legal assistance are represented in these dimensions. The first dimension is the most significant, capturing 50% of the variance, confirming that technological barriers and digital access have a determining impact on the adoption of these systems by women victims of gender-based violence.

Figure 5.

Inertia Analysis (Explained Variance).

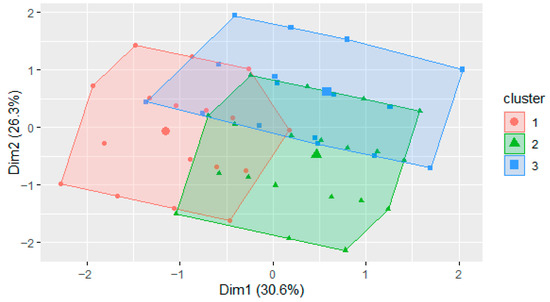

Figure 6 shows that Cluster 1 (red) groups 182 users (18.21%), characterised by low access and limited use of chatbots, evidencing that technological barriers and lack of trust in AI restrict their adoption. Cluster 2 (green) represents 385 users (38.47%) with moderate access and occasional use, indicating a partial adoption stage. Finally, Cluster 3 (blue) concentrates 433 users (43.32%) with high access and frequent use of chatbots, confirming that familiarity with the technology facilitates digital empowerment and effective assistance. These results confirm hypothesis 4, which proposes that women who use chatbots more frequently develop higher levels of digital empowerment. The profile of users in Cluster 3 shows that constant and repeated interaction with chatbots is associated with greater confidence, autonomy in decision making and a stronger perception of the effectiveness of the support. This finding reinforces the idea that frequency of use is not only a behavioural variable, but also a critical factor in strengthening women’s digital agency when faced with gender-based violence. These findings respond to the third and fourth specific objectives: although a significant portion of users have frequent access to chatbots, barriers persist in using them to report incidents or access formal support services. They also offer relevant data to inform improvements in chatbot design and integration.

Figure 6.

Cluster plot showing the segmentation of users by access to and use of chatbots.

As summarised in Table 5, the four specific hypotheses were confirmed by consistent quantitative and qualitative tests. This structured synthesis reinforces the internal coherence of the study and highlights the central role of digital access, personalisation, trust and frequency of use in determining the empowering potential of chatbots in contexts of gender-based violence.

Table 5.

Summary of hypotheses, variables, tests, and results.

5. Discussion

In terms of accessibility and trust in chatbots, multiple correspondence analysis (MCA) showed that dimension 1 explained 17.7% of the variance, identifying that lack of access to artificial intelligence and low frequency of use are the main factors limiting the adoption of these tools. Dimension 2 explained 16.7% of the variance, demonstrating that privacy and internet access directly affect trust in chatbots. This pattern was reflected not only in the quantitative data but also in the testimonials, where one participant stated, “I don’t trust the chatbot to protect my information,” while another noted, “When the connection fails, the tool leaves me hanging and I feel exposed.” A third testimony added: “The system seems to observe us, but not protect us.” These perceptions show that mistrust transcends the technical and connects with emotional vulnerability. The findings coincide with Dorta-González et al. (2024), who recognize the gender digital divide as an obstacle to equitable access to artificial intelligence technologies. Brown (2022) highlights that structural limitations reinforce inequalities, while Jang et al. (2022) insist that inclusive design is key to responding to heterogeneous populations. Similarly, the ITU (2023) points out that technological limitations erode trust, which connects with the findings of Guevara-Gómez (2023), who emphasizes that informational autonomy is only achieved when digital infrastructure is linked to institutional guarantees. Thus, the effectiveness of chatbots in empowering women depends directly on digital access and the level of trust placed in technology, making this aspect not only a technical challenge but also an ethical and social one.

Regarding the technical and social challenges in psychological and legal assistance, MCA and inertia analysis showed that the absence of artificial intelligence and low accessibility explained 50% of the variance, confirming that these are the most significant barriers. In the interviews, one participant noted, “The chatbot doesn’t understand what I need, I feel like it responds coldly,” while another said, “I requested legal help and it gave me incomplete information, which increased my fear.” Another testimony added: “Talking to a machine that doesn’t understand my urgency made me feel invisible.” These statements reflect how technological deficiency adds to the perception of dehumanization in support processes. The findings are in line with Hajibabaei et al. (2023), who show that algorithmic biases reduce the effectiveness of these platforms, and with Borges and Filó (2021), who highlight the need for equity and impartiality in the design of artificial intelligence. Isaksson (2024) identified that the absence of regulation increases mistrust, while UN Women (2023) warns that compliance with security standards is an indispensable condition for these tools to be used by women at risk. In this scenario, regulatory gaps and algorithmic biases not only erode the legitimacy of the systems but also limit their ability to establish themselves as reliable support mechanisms, which requires strengthening both the technical dimension and social sensitivity.

Regarding the effectiveness of chatbots in reporting and support services, cluster analysis showed that 43.32% of users (Cluster 3) reported high access and frequency of use, 38.47% (Cluster 2) reported moderate access, and 18.21% (Cluster 1) reported limited use. At a descriptive level, 64% of participants considered that these tools improve access to information on legal resources and psychological support, although only 27% actually used them to report situations of violence. The interviews provided revealing insights: “It helps to guide me, but when I wanted to report something, they told me to go to the police station. I felt abandoned,” said one user. Another commented: “It’s useful for quick information, but not for something so serious.” A third testimony added: “The chatbot listened to me, but in the end it didn’t solve my need for immediate protection.” These experiences reflect the tension between the informational potential of chatbots and the lack of effective integration with institutional structures. As Guevara-Gómez (2023) warns, these tools are accessible, but their performance depends directly on the institutional framework that supports them. Avella et al. (2023) emphasize the need to adjust them to human rights protocols, while Costa (2018) highlights the importance of AI in removing barriers in emergency services.

The World Bank (2023) highlights its role in public policies that seek to expand reporting channels, noting that personalized responses increase users’ willingness to share sensitive information, reinforcing the importance of tailoring systems to the specific needs of victims.

Regarding the goal of optimizing gender-focused chatbots, MCA results showed that lack of personalization and low utility explained 50% of the variance, while trust in AI and data security explained 29.6%. The qualitative testimonials were compelling: “The chatbot repeats the same thing to everyone; it doesn’t understand what we’re going through,” said one user. Another pointed out: “If it doesn’t protect my data, I can’t use it again.” A third added: “What we need is empathy, not automatic responses.” These perceptions confirm that personalization and perceived security are essential conditions for digital empowerment. The literature supports this premise: Jang et al. (2022) emphasize that chatbots must be designed according to personalization criteria, Borges and Filó (2021) argue that algorithmic transparency is key to reducing bias, and Guevara-Gómez (2023) warns that they must be incorporated with caution into national AI strategies, prioritizing security and cultural sensitivity. Along the same lines, Isaksson (2024) emphasizes the need for regulatory frameworks that guarantee empathetic responses adapted to contexts of gender-based violence. From a broader perspective, authors such as Xia et al. (2023) and Sutko (2020) reinforce that digital empowerment is achieved when access is accompanied by autonomy in decision making. Thus, the relationship between frequent use of chatbots and the perception of autonomy validates that these tools not only provide information but also strengthen the capacity for agency and decision making in women facing violence.

Consequently, the findings of this study transcend the descriptive and contribute theoretical, political, and ethical implications. From a theoretical perspective, they confirm that digital empowerment cannot be understood as a merely instrumental process but rather as the intersection between technological infrastructure, institutional trust, and inclusive design (Piva 2024). Politically, they show that the expansion of chatbots must be accompanied by clear regulatory frameworks, coordination with justice services, and gender-sensitive public policies. And ethically, they highlight the urgency of designing systems that guarantee confidentiality, empathy, and cultural sensitivity, avoiding the reproduction of patterns of discrimination. Therefore, chatbots represent an opportunity to transform access to justice and psychological support, provided that their implementation is critical, contextualized, and aligned with principles of equity and human rights.

From an ethical perspective, the findings highlight that the use of chatbots in contexts of gender-based violence requires strict guarantees of confidentiality, protection of sensitive data, and the absence of discrimination. The testimonies showed that the perception of vulnerability is amplified when users fear that their personal information may be misused or exposed. Therefore, ethical responsibility is not limited to technical security, but also involves ensuring empathetic responses, avoiding revictimization, and promoting cultural sensitivity in design. These elements are essential to strengthen women’s trust in artificial intelligence systems and to ensure that technological innovation does not reproduce patterns of inequality but rather contributes effectively to the protection of fundamental rights.

Future Research and Recommendations

This study confirms the need to expand research on the use of artificial intelligence-based support systems targeting women victims of gender-based violence. Future research should develop longitudinal studies that evaluate the sustained impact of the use of chatbots on psychological recovery, legal empowerment, and social reintegration. In addition, it is essential to analyze the variation in the effectiveness of chatbots by age, cultural context, and level of digital literacy.

Comparative analyses between automated chatbots and human intervention mechanisms are also required in order to identify the specific contributions of each approach to the empowerment of users. Any future line of research should incorporate survivor-centered methodologies, ensuring that the design of chatbots responds directly to their actual experiences and needs.

Developers must integrate emotional recognition capabilities, multiple languages and advanced data protection systems to ensure the trust, adoption and permanence of these tools. Consequently, state institutions are called upon to incorporate these systems within their comprehensive care frameworks, ensuring their legitimacy, equitable access and linkage with justice and social protection mechanisms.

6. Conclusions

This study provides conclusive evidence that AI-based chatbots serve not only as informational tools but as digital empowerment mechanisms for women experiencing gender-based violence. Through a robust mixed methods approach, the research confirms that, when digital solutions are accessible, personalized, and institutionally recognized, they foster measurable increases in autonomy, self-efficacy, and engagement with legal and psychological resources. Consistent with the qualitative findings, empowerment is activated when stable and secure connections, understandable and recoverable steps, and traceable human referral to psychological and legal support converge, reducing dropout and turning guidance into action.

Confirmation of all four hypotheses demonstrates a clear pattern: women with greater access to technology and higher levels of trust in AI are more likely to adopt these systems, especially when chatbot responses are perceived as empathetic and tailored to their needs. Personalized interaction not only increases user satisfaction but transforms passive recipients of help into active agents of their own protection. In contrast, rural women and those excluded from reliable digital infrastructures continue to face structural barriers that perpetuate cycles of silence and dependency. Likewise, clarity of routes, definition of requirements, and continuity of the case emerge as critical enablers of timely decisions, while contextual barriers of time, space, and digital skills set the pace of use and require flexible support without increasing risk.

These findings underscore the urgency of integrating chatbot technologies into public policy and official support frameworks. Chatbots must be more than private sector initiatives or experimental tools; they must be institutionalized, regulated, and designed through a gender-sensitive and culturally adaptive lens. Training programs, cross-sector collaboration, and ethical oversight mechanisms are not optional but essential to ensure that AI-based support systems uphold dignity, equity, and fairness. Effective coordination with psychological and legal services, guaranteed confidentiality, and traceability of support are essential operational conditions for the benefits to be sustained in practice.

This study asserts that AI-based chatbots, when anchored in inclusive design and institutional legitimacy, are transformative technologies: not only informing, but empowering; not only assisting, but restoring agency; not only guiding, but liberating. In contexts of gender-based violence, their strategic integration is no longer a technical option but a moral imperative. The implementation process requires ensuring accessibility, personalization with non-revictimizing treatment, usability with recoverable steps, and a human bridge to accompany the reporting and follow-up, conditions that align technology with tangible results in terms of protection and access to justice.

Author Contributions

Conceptualization, M.O.R.S. and E.A.D.P.; methodology, E.A.D.P. and A.E.D.S.; software, R.E.G.L. and J.A.E.P.; validation, M.O.R.S., E.A.D.P., and R.E.G.L.; formal analysis, E.A.D.P.; investigation, P.G.L.T., O.A.A., M.E.A.C., W.Q.N., R.A.P.G., and M.E.H.Z.; resources, M.E.H.Z. and R.W.A.C.; data curation, J.A.E.P. and P.G.L.T.; writing (original draft preparation), M.O.R.S.; writing (review and editing), E.A.D.P., A.E.D.S., and R.E.G.L.; visualization, R.E.G.L. and J.A.E.P.; supervision, M.O.R.S. and R.W.A.C.; project administration, M.O.R.S.; funding acquisition, M.O.R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the corresponding author.

Data Availability Statement

The data supporting the results are available upon request from the corresponding author. An anonymized dataset, along with the variable dictionary and R scripts, will be provided to anyone who requests it for academic purposes and agrees to a confidentiality agreement, subject to applicable ethical requirements.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Ahn, Jungyong, Jungwon Kim, and Yongjun Sung. 2022. The effect of gender stereotypes on artificial intelligence recommendations. Journal of Business Research 141: 50–59.

- Al-Ayed, Sura I., and Ahmad Adnan Al-Tit. 2024. Measuring gender disparities in the intentions of startups to adopt artificial intelligence technology: A comprehensive multigroup comparative analysis. Uncertain Supply Chain Management 12: 1567–76.

- Avella, Marcela del Pilar, Jesús Eduardo Sanabria Moyano, and Andrea Catalina Peña Piñeros. 2023. International standards for the protection of women against gender-based violence applied to predictive artificial intelligence | Los estándares internacionales de protección de la violencia basada en género de las mujeres aplicados a la inteligencia arti. Justicia (Barranquilla) 28: 43–56.

- Bonamigo, Victoria Grassi, Fernanda Broering Gomes Torres, Rafaela Gessner Lourenço, and Marcia Regina Cubas. 2022. Violencia física, sexual y psicológica según el análisis conceptual evolutivo de Rodgers. Cogitare Enfermagem 27: e86883.

- Borges, Gustavo Silveira, and Maurício da Cunha Savino Filó. 2021. Artificial intelligence, gender, and human rights: The case of Amazon | Inteligência artificial, gênero e direitos humanos: O caso Amazon. Law of Justice Journal 35: 218–45.

- Brown, Laila M. 2022. Gendered Artificial Intelligence in Libraries: Opportunities to Deconstruct Sexism and Gender Binarism. Journal of Library Administration 62: 19–30.

- Chauhan, Prashant, and Gagandeep Kaur. 2022. Gender Bias and Artificial Intelligence: A Challenge within the Periphery of Human Rights. Hasanuddin Law Review 8: 46–59.

- Costa, Pedro. 2018. Conversing with personal digital assistants: On gender and artificial intelligence. Journal of Science and Technology of the Arts 10: 59–72.

- Dorta-González, Pablo, Alexis Jorge López-Puig, María Isabel Dorta-González, and Sara M. González-Betancor. 2024. Generative artificial intelligence usage by researchers at work: Effects of gender, career stage, type of workplace, and perceived barriers. Telematics and Informatics 94: 102187.

- Guevara-Gómez, Ariana. 2023. National Artificial Intelligence Strategies: A Gender Perspective Approach. Revista de Gestion Publica 12: 129–54.

- Hajibabaei, Anahita, Andrea Schiffauerova, and Ashkan Ebadi. 2022. Gender-specific patterns in the artificial intelligence scientific ecosystem. Journal of Informetrics 16: 101275.

- Hajibabaei, Anahita, Andrea Schiffauerova, and Ashkan Ebadi. 2023. Women and key positions in scientific collaboration networks: Analyzing central scientists’ profiles in the artificial intelligence ecosystem through a gender lens. Scientometrics 128: 1219–40.

- Huang, Kuo-Liang, Sheng-Feng Duan, and Xi Lyu. 2021. Affective Voice Interaction and Artificial Intelligence: A Research Study on the Acoustic Features of Gender and the Emotional States of the PAD Model. Frontiers in Psychology 12: 664925.

- International Telecommunication Union. 2023. Gender Digital Divide and Access to Emerging Technologies. Available online: https://www.itu.int (accessed on 16 October 2025).

- Isaksson, Anna. 2024. Mitigation measures for addressing gender bias in artificial intelligence within healthcare settings: A critical area of sociological inquiry. AI and Society 40: 3009–18.

- Jang, Yeonju, Seongyune Choi, and Hyeoncheol Kim. 2022. Development and validation of an instrument to measure undergraduate students’ attitudes toward the ethics of artificial intelligence (AT-EAI) and analysis of its difference by gender and experience of AI education. Education and Information Technologies 27: 11635–67.

- Jomaa, Nayef, Rais Attamimi, and Musallam Al Mahri. 2024. Utilising Artificial Intelligence (AI) in Vocabulary Learning by EFL Omani Students: The Effect of Age, Gender, and Level of Study. Forum for Linguistic Studies 6: 171–86.

- Joseph, Ofem Usani, Iyam Mary Arikpo, Sylvia Victor Ovat, Chidirim Esther Nworgwugwu, Paulina Mbua Anake, Maryrose Ify Udeh, and Bernard Diwa Otu. 2024. Artificial Intelligence (AI) in academic research. A multi-group analysis of students’ awareness and perceptions using gender and programme type. Journal of Applied Learning and Teaching 7: 76–92.

- Kelley, Stephanie, Anton Ovchinnikov, David R. Hardoon, and Adrienne Heinrich. 2022. Antidiscrimination Laws, Artificial Intelligence, and Gender Bias: A Case Study in Nonmortgage Fintech Lending. Manufacturing and Service Operations Management 24: 3039–59.

- Kim, Keunjae, and Kyungbin Kwon. 2024. Designing an Inclusive Artificial Intelligence (AI) Curriculum for Elementary Students to Address Gender Differences With Collaborative and Tangible Approaches. Journal of Educational Computing Research 62: 1837–64.

- Kovacova, Maria, Jana Kliestikova, Marian Grupac, Iulia Grecu, and Gheorghe Grecu. 2019. Automating gender roles at work: How digital disruption and artificial intelligence alter industry structures and sex-based divisions of labor. Journal of Research in Gender Studies 9: 153–59.

- Lee, Min-Sun, Gi-Eun Lee, San Ho Lee, and Jang-Han Lee. 2024. Emotional responses of Korean and Chinese women to Hangul phonemes to the gender of an artificial intelligence voice. Frontiers in Psychology 15: 1357975.

- Leong, Kelvin, and Anna Sung. 2024. Gender stereotypes in artificial intelligence within the accounting profession using large language models. Humanities and Social Sciences Communications 11: 1141.

- Locke, Larry Galvin, and Grace Hodgdon. 2024. Gender bias in visual generative artificial intelligence systems and the socialization of AI. AI and Society 40: 2229–36.

- Lütz, Fabian. 2022. Gender equality and artificial intelligence in Europe. Addressing direct and indirect impacts of algorithms on gender-based discrimination. ERA Forum 23: 33–52.

- Manasi, Ardra, Subadra Panchanadeswaran, Emily Sours, and Seung Ju Lee. 2022. Mirroring the bias: Gender and artificial intelligence. Gender, Technology and Development 26: 295–305.

- Marinucci, Ludovica, Claudia Mazzuca, and Aldo Gangemi. 2023. Exposing implicit biases and stereotypes in human and artificial intelligence: State of the art and challenges with a focus on gender. AI and Society 38: 747–61.

- Møgelvang, Anja, Camilla Bjelland, Simone Grassini, and Kristine Ludvigsen. 2024. Gender Differences in the Use of Generative Artificial Intelligence Chatbots in Higher Education: Characteristics and Consequences. Education Sciences 14: 1363.

- Noriega, Maria Noelle. 2020. The application of artificial intelligence in police interrogations: An analysis addressing the proposed effect AI has on racial and gender bias, cooperation, and false confessions. Futures 117: 102510.

- Nouraldeen, Rasha Mohammad. 2023. The impact of technology readiness and use perceptions on students’ adoption of artificial intelligence: The moderating role of gender. Development and Learning in Organizations 37: 7–10.

- Nwafor, Ifeoma E. 2024. Gender Mainstreaming into African Artificial Intelligence Policies: Egypt, Rwanda and Mauritius as Case Studies. Law, Technology and Humans 6: 53–68.

- Parry, Aqib Javaid, Sana Altaf, and Akhtar Habib Shah. 2024. Gender Dynamics in Artificial Intelligence: Problematising Femininity in the Film Alita: Battle Angel. 3L: Language, Linguistics, Literature 30: 16–32.

- Piva, Laura. 2024. Vulnerability in the age of artificial intelligence: Addressing gender bias in healthcare. BioLaw Journal 2024: 169–78.

- Rodriguez-Saavedra, Miluska Odely, Luis Barrera Benavides, Ivan Galindo, Luis Campos Ascuña, Antonio Morales Gonzales, Jian Mamani Lopez, and Maria Alegre Chalco. 2025a. The Role of Law in Protecting Minors from Stress Caused by Social Media. Studies in Media and Communication 13: 373–85.

- Rodriguez-Saavedra, Miluska Odely, Luis Gonzalo Barrera Benavides, Ivan Cuentas Galindo, Lui Miguel Campos Ascuña, Antonio Victor Morales Gonzales, Jiang Wagner Mamani Lopez, and Ruben Washintong Arguedas-Catasi. 2025b. Augmented Reality as an Educational Tool: Transforming Teaching in the Digital Age. Information 16: 372.

- Rodríguez-Saavedra, Miluska Odely, Felipe Gustavo Chávez-Quiroz, Wilian Quispe-Nina, Rocio Natividad Caira-Mamani, Wilfredo Alexander Medina-Esquivel, Edwing Gonzalo Tapia-Meza, and Raúl Romero-Carazas. 2024. Foreign Direct Investment (FDI) and Economic Development Impacted by Peru’s Public Policies. Journal of Ecohumanism 3: 317–32.

- Schiller, Amy, and John McMahon. 2019. Alexa, alert me when the revolution comes: Gender, affect, and labor in the age of home-based artificial intelligence. New Political Science 41: 173–91.

- Sutko, Daniel M. 2020. Theorizing femininity in artificial intelligence: A framework for undoing technology’s gender troubles. Cultural Studies 34: 567–92.

- UNESCO. 2023. Ethical Considerations in Artificial Intelligence and Gender Bias Mitigation. Available online: https://www.unesco.org (accessed on 16 October 2025).

- UN Women. 2023. Gender-Based Violence in Times of Crisis: Analysis and Response Strategies. Available online: https://www.unwomen.org (accessed on 16 October 2025).

- World Bank. 2023. Gender Gaps in Access to Digital Services: A Global Analysis. Available online: https://www.worldbank.org (accessed on 16 October 2025).

- World Health Organization. 2023. Report on Gender-Based Violence and Its Impact on Public Health. Available online: https://www.who.int (accessed on 16 October 2025).

- Xia, Qi, Thomas Kia Fiu Chiu, and Ching Sing Chai. 2023. The moderating effects of gender and need satisfaction on self-regulated learning through Artificial Intelligence (AI). Education and Information Technologies 28: 8691–713.

- Yim, Iris Heung Yue. 2024. Artificial intelligence literacy in primary education: An arts-based approach to overcoming age and gender barriers. Computers and Education: Artificial Intelligence 7: 100321.

- Young, Erin, Judy Wajcman, and Laila Sprejer. 2023. Mind the gender gap: Inequalities in the emergent professions of artificial intelligence (AI) and data science. New Technology, Work and Employment 38: 391–414.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).