University Students’ Conceptualisation of AI Literacy: Theory and Empirical Evidence

Abstract

1. Introduction

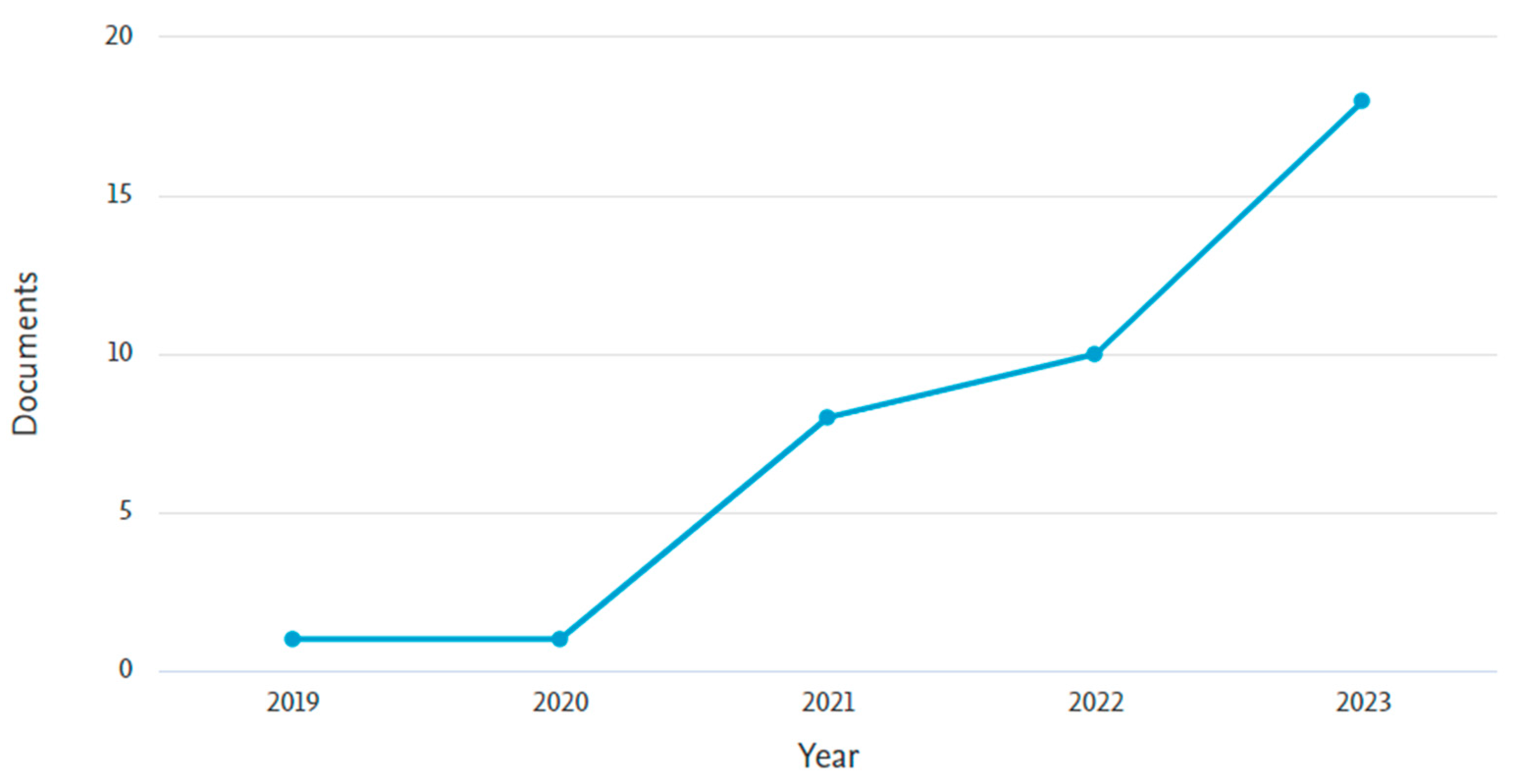

AI Literacy

TITLE-ABS-KEY (“AI Literacy”) AND (LIMIT-TO (EXACTKEYWORD, “Ai Literacy”) OR LIMIT-TO (EXACTKEYWORD, “Artificial Intelligence Literacy”) OR LIMIT-TO (EXACTKEYWORD, “AI Literacy”)) AND (LIMIT-TO (DOCTYPE, “ar”)).
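As an illustrative aside, a search string of this kind can be assembled programmatically, which makes it easier to keep the parentheses balanced. The sketch below is only an assumption about how one might do this; the helper name `build_scopus_query` is hypothetical, and the field codes are copied from the query above rather than checked against any API.

```python
def build_scopus_query(term, keywords, doctype):
    """Assemble a Scopus-style advanced-search string with balanced parentheses.

    Hypothetical helper for illustration; it only builds text and does not
    contact any search service.
    """
    # One LIMIT-TO clause per exact keyword, joined with OR.
    keyword_filter = " OR ".join(
        f'LIMIT-TO (EXACTKEYWORD, "{kw}")' for kw in keywords
    )
    return (
        f'TITLE-ABS-KEY ("{term}") '
        f"AND ({keyword_filter}) "
        f'AND (LIMIT-TO (DOCTYPE, "{doctype}"))'
    )


query = build_scopus_query(
    "AI Literacy",
    ["Ai Literacy", "Artificial Intelligence Literacy", "AI Literacy"],
    "ar",  # document type code for articles, as used in the query above
)
print(query)
```

Building the keyword filter as a single parenthesised group keeps the OR clauses separate from the document-type restriction.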

- What tools are most often used to investigate AI literacy empirically?

- Which target groups are the studies addressing?

- Are empirical or theoretical studies predominant?

- How do different studies define AI literacy?

2. Methodology

2.1. Research Sample

2.2. Research Tool

- Describe what you think artificial intelligence means.

- Describe what AI can now be used for.

- Describe how you think AI works.

- Describe why artificial intelligence is used. You can relate your answer to specific areas or answer in general terms.

- Describe which AI tools you use (if there are more than one, choose one).

- Describe any ethical issues or challenges you may encounter when working with it.

- Describe what a person should be able to do to say that they are literate to work with AI.

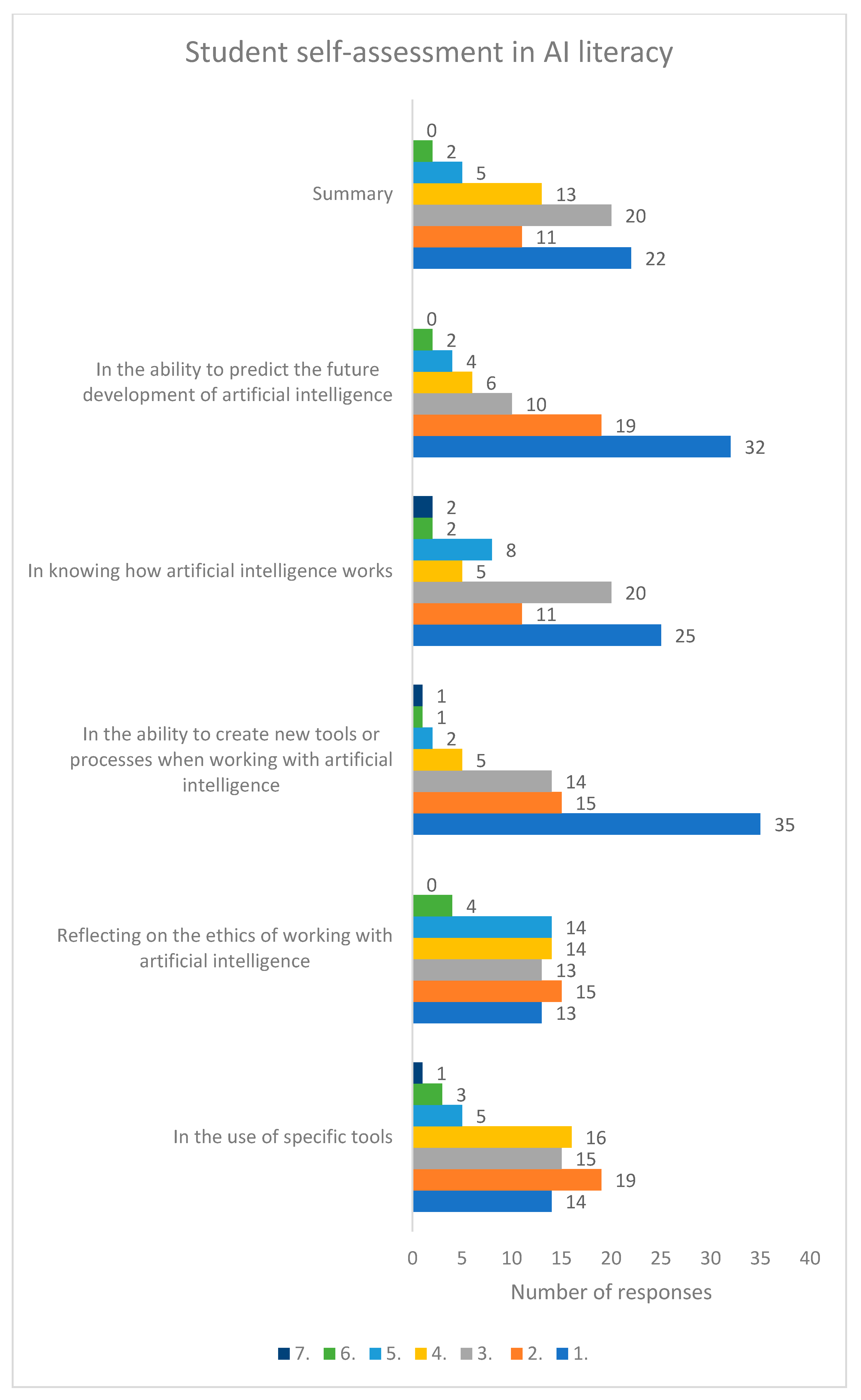

- In the use of specific tools;

- In reflecting on the ethics of working with artificial intelligence;

- In the ability to create new tools or processes when working with artificial intelligence;

- In the knowledge of how artificial intelligence works;

- In the ability to predict the future development of artificial intelligence;

- Overall.

2.3. Data Collection and Processing

2.4. Research Limits and Ethics

3. Results

3.1. What Do You Think Artificial Intelligence Means?

AI is a neural network that can produce adequate (most likely) output based on the patterns found from the learning data (learned neurons). More generally, I see AI as an effort to mimic human thinking/perception of the world technologically.

Artificial intelligence is a general umbrella term for a fairly broad field. Within AI, we are trying to make machines perform activities with the same quality as humans. As a sub-category, we can see, for example, machine learning, neural networks, deep learning and so on. A subset that has been talked about a lot lately is generative AI, which can generate content. This can be based on so-called transformers for text or diffusion for images.

A form of machine learning, an ever-expanding database.

Artificial intelligence (AI) is a phenomenon with a problematic definition; for me, the critical dimension of AI is learning and observing patterns, based on which it generates, sorts, combines, and personalises (…) digital content.

It is a program or technology capable of learning on its own and solving problems on its own.

A tool or technology that can learn and adapt to the situation. It can process large amounts of data in a short time. It encourages creativity and helps users to develop their thinking and formulations.

This is the ability of the program to mimic human thinking.

Model of man and his thinking.

AI is an attempt by humans to mimic human thinking in areas where only the human brain can. So, it is an attempt to create our artificial brain.

It is a “layer” that is capable of searching, sorting, analysing (perhaps with limitations), rearranging, and creating “new” forms of existing multimedia data. While it is inherently more efficient and capable than a human, it is also limited by how humans use it.

A device that could handle repetitive and programmable activities better than a human so that people can use creative thinking.

A tool or technology that can learn and adapt to the situation. It can process large amounts of data in a short time. It encourages creativity and helps users to develop their thinking and formulations.

Technology is a progressively evolving field of computer science and a discovery for humanity that will eventually be the same technological leap as the Internet once was for society. Furthermore, more and more people will use it as a tool for everyday life.

3.2. What AI Can Now Be Used For

Artificial intelligence is mainly used to make everyday life easier—it helps to organise the household, control electronic appliances or cars, perform routine tasks in the work process, collect large amounts of data and create statistics from them.

It’s the same thing we use humans for. To create virtual works—texts, audiovisual production, art; to communicate information—data, numbers, concepts; to control processes and machines—vehicles, weapons, production lines, accounting; to manipulate others—fake content, mass emails.

There are two levels to this. One is accessible to people with an internet connection. Here, the use appears to be in text creation, search, image creation and other enhancements. Then there is a more technical specialist plane that we still need insight into (complete robots—so more physical).

The better question is what it cannot be used for:

When studying and completing assignments, it is beneficial in formulating theoretical descriptive passages, summarising longer texts, creating tables from numbers embedded in the text, coding, programming, etc. However, it is necessary to check everything constantly—the error rate is relatively high.

One of the biggest buzzwords is content generation (images using datasets and tools like DALL-E, text using ChatGPT). Still, it is also helpful in creating translations or personalising content on social media.

Prediction and forecasting (car collisions, traffic, …); combination and counting (chess, knowledge competitions); translation; image recognition; …

3.3. How Do You Think AI Works?

In a simplified form, a bunch of “if/else” conditions (if “something”, then “something”, otherwise “something”). More accurately and correctly, an artificial neural network is used.

The programmers create algorithms for how it will work and insert a data package as the basis. Based on that, the AI acts or can evolve.

It is programmed so that when we ask it to find specific books on a topic, it will search several libraries and write down certain books. It is easier because sometimes you need to think of particular titles, so it is up to you to see if the book has sufficiently broken down the problem.

It uses information from all sources in the world.

The Turing test determines whether a machine can have the same mindset as a human. It is a database of systems, data, and imputations applied to other systems, websites, and applications. It is a running program. It runs on algorithms that it learns from working with data.

It consists of two processes (probably). A learning process that works based on gradient descent. The neurons’ weights are used to compute their output function and then input to the other layers, which are adjusted based on each neuron’s inputs (learning data). Based on the desired output, the neurons change their weights based on gradient descent to minimise the deviation between the function produced by the neural network to the desired position (learning data that has the desired outputs beforehand). Somehow, once the neural network learns in this way, the data we want to know the AI’s response to is transferred to the input layer of the neural network, and the neural network selects the output that most closely approximates some pattern (excites specific neurons in each layer, whose values influence the output selection).
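The learning process this respondent describes can be illustrated with a deliberately small sketch: a single linear "neuron" whose weight and bias are adjusted by gradient descent to minimise the squared deviation from the desired outputs. The data, learning rate, and function name are assumptions made up for the illustration; real neural networks have many layers and neurons.

```python
# Toy learning data: inputs x with desired outputs y = 2x + 1
# (invented for this illustration, not taken from the study).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def train(data, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the deviation.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Learning phase, then inference on an input not seen during training.
w, b = train(data)
prediction = w * 4.0 + b
print(round(w, 3), round(b, 3), round(prediction, 3))
```

The two phases in the quote map onto the two steps at the end: `train` adjusts the weights from examples with known outputs, and the final line feeds a new input through the learned function.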

It is an artificial neural network with several layers trained with test data. According to the selected test data, it then chooses the most likely option when making decisions. The data entry process can be repeated indefinitely, with a different result.

It depends on the type of AI. It can be either an encoder-decoder-based algorithm (transformers) or diffusion. However, it is about taking a large amount of data to train the model. This training can be either spontaneous or supervised. Based on this, a model will be created that can be used by the intelligence.

AI is often thought of as a black box, and so am I. Still, I have a very rough understanding of the mechanics of machine learning, with its emphasis on observing patterns in a dataset and then making decisions based on those patterns.

Search for common themes to a question and calculate the probability of the following word.
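The idea of calculating the probability of the following word can be sketched with a simple count-based (bigram) model. This is a strong simplification chosen for illustration: the corpus and the function name `next_word_probabilities` are hypothetical, and current language models estimate such probabilities with neural networks rather than raw counts.

```python
from collections import Counter, defaultdict


def next_word_probabilities(text):
    """Estimate P(next word | current word) from adjacent word pairs."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Count how often each word is followed by each other word.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    # Normalise the counts for each word into probabilities.
    return {
        word: {nxt: c / sum(followers.values()) for nxt, c in followers.items()}
        for word, followers in counts.items()
    }


corpus = "the cat sat on the mat the cat ran"  # made-up example text
probs = next_word_probabilities(corpus)
most_likely = max(probs["the"], key=probs["the"].get)
print(probs["the"], most_likely)
```

In this toy corpus, "the" is followed by "cat" twice and "mat" once, so "cat" is selected as the most likely next word.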

Some AI models analyse massive datasets and use probability to predict and optimise. = The more data and training available, the more reliable and efficient the AI.

3.4. What Ethical Issues or Challenges You May Encounter When Working with AI

Issues of ownership, authorship and plagiarism. Where is the line between helping and inspiring and copying?

For example, AI uses texts, images and pictures from the Internet, i.e., they are subject to copyright and create texts and images from them. Also interesting is the issue of writing books in AI—the author of such a book is the human assigned the task, even though the AI draws on books already registered and the assignor made almost no effort in writing.

It does not quote, which means it takes an author’s work from a website and passes it off as its own.

In particular, the problem of authorship and plagiarism, using AI for school assignments, etc.

Racial and discriminatory, and also, who decides to restrict them? Other ethical issues could relate to, e.g., digital reanimation of deceased people, exploitation in music, cinema or images, e.g., fake pornography, fake cover songs (on YouTube Johnny Cash—Barbie Girl)

Discrimination, diversity. The challenge would be with the available data that AI (ChatGPT) will supply and paint only some of the pictures of some hot topics. Inability to assign emotional/moral ratings for given arguments. So, in pure quantification of ideas, people will say, “Look, this argument has so many pros and cons. Yours has fewer pros, so ours is better, and we’re right.”

Discrimination based on race, sex, age, workability and experience or financial situation in recruitment.

Overall, using artificial intelligence for something that one then passes off as one’s own seems slightly unethical to me, but at the same time, in this day and age, one cannot avoid artificial intelligence. At the same time, it can be a problem; for example, if AI is programmed to follow prejudices, it is not objective and can create unpleasant societal situations.

Lots of potential for abuse, plus loss of control and overall poor understanding of how AI models work. If AI systems are so complex in their computations that it is not easy for humanity to check how they arrived at their results, this may bring increasing distrust from users and creators. Society will be reluctant to entrust some tasks to computers that need to be sufficiently tested and secured with additional security measures.

Overall, using artificial intelligence for something that one then passes off as one’s own seems slightly unethical to me, but at the same time, in this day and age, one cannot avoid artificial intelligence. At the same time, it can be a problem; for example, if AI is programmed to follow prejudices, it is not objective and can create unpleasant societal situations.

It is difficult to determine the source from which it gets its information. If I make a decision based on data from it, I have to answer for it. An artificial intelligence does not have to answer for anything.

Lots of potential for abuse, plus loss of control and overall poor understanding of how AI models work. If AI systems are so complex in their computations that it is not easy for humanity to check how they arrived at their results, this may bring increasing distrust from users and creators. Society will be reluctant to entrust some tasks to computers that need to be sufficiently tested and secured with additional security measures.

Especially competition for job consultant positions (many positions can be eliminated as AI can answer all questions).

Offers/gives information to the wrong people (e.g., ChatGPT is already capable of putting together a plan to overthrow a government if you ask indirectly) and lacks empathy—it will offer a radical and statistically best solution to a problem. Still, the question remains whether it is the right one.

It is challenging to set any limits on what we should allow AI to control.

It reflects our society. If the data is racist in some way, for example, the AI will act that way. It is the same with gender issues or a Westernised worldview, for example.

Flawed information, propaganda by its authors, and biased information.

No sources are given; it makes stuff up when it does not know. However, you mean something more general, so if an analysis of the music of a particular composer is used in the creation of music, is it a work of authorship? And whose? Also, what data does it have available? What about social media data?

3.5. What a Person Should Know to Be Considered AI Literate

To be able to critically distinguish the work of artificial intelligence, to know its limits, and to make decisions according to one’s intuition.

Quote: do not take the AI’s work as perfect; rewrite, proofread, and be careful what they produce.

Be critical, be analytical.

It opens up space for virtue and ethics in general. The children already mentioned did not have to have God knows what knowledge to use AI. However, they needed to be taught why AI should not be used for such a purpose.

Such a person knows approximately how it works, what is the monetary model behind them, who manages them and what their goal is to understand how they can help in a given area (I assume that the person will want to use it for assistance in some specific area, more if necessary), and then how to use it properly (for chatbot it is for example prompting, which is a whole science in itself and companies offer six digits of dollars a year for knowing the right “prompting”), to know where it has weaknesses and where it is often mistaken, and how to potentially correct/mitigate these mistakes.

A literate person is familiar with examples of use cases and knows what can be created with AI and its limits. Furthermore, they acquire essential digital competencies (they know how to use a computer).

He should be able to use the tools associated with artificial intelligence and know how it works and how to “control” it.

Create the correct assignment to get the answer he wanted exactly. Sometimes, working with prompts is also challenging and improving it will significantly multiply the use of AI.

Humans should use AI tools to be significantly more efficient with them.

He should be able to generate what he needs with it quickly. Text/image, etc, with minimum errors and the need for further editing. He should also realise that it is still a machine, a detailed tool and search engine and not literally “intelligence or thinking”.

He should be able to generate a professional text including citations, e.g., a final project report or a photograph.

He should be able to use various AI tools for his work and private life; he should be able to explain the basic principles of AI to someone else who does not have this knowledge. He should not be afraid to use these tools and should spread awareness. He should be able to recognise when an AI tool has been used.

To work with a computer, to know the applications and their functions. However, one can de facto learn something, as many programs work on their own.

He should have computer experience, speak English and know all the risks.

To know what principle AI works on. Moreover, I see it as a helper and a tool.

For the first option, literacy is knowing the APIs for creating AI, a basic understanding of how AI works, where it can be used, and how to prepare data so that AI can learn from it.

Understands how AI works technically; knows how to use different AI tools effectively—has hands-on experience with AI; is aware of the ethical and social aspects of working with AI.

4. Analysis and Discussion

Student Perspective on AI Literacy

5. Conclusions

- AI literacy as a competence for everyday life;

- AI literacy as a prerequisite for future success in the labour market;

- AI literacy as part of the competence structure;

- AI literacy as a composite structure;

- AI literacy as a form of technical knowledge and skills.

- Ethics is primarily a social phenomenon; the individual’s behaviour impacts the whole, and if ethical reflection is to be meaningful, it must focus on issues of societal phenomena such as discrimination, public good, and sustainable development. Ethics pursuing the interests of the individual is egoistic, based on neoliberal discourse and unacceptable to students. At the same time, it is evident that it is associated with an emphasis on the isolated entity and its behaviour rather than the whole system.

- AI literacy should be understood as a manifestation of complexity, in which, on the one hand, it is necessary to consider the close connection between technology and ethics, which cannot be easily separated from each other; on the other hand, it is a complex phenomenon associated with many sub-components that are interrelated. The fundamental problem is the rigidity of concepts (control, responsibility, authorship) that rely on overly entity-centric, single-object mental constructs that fail to conceptualise complex phenomena such as the relationship between AI and society.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Vuorikari Rina, Riina, Stefano Kluzer, and Yves Punie. 2022. DigComp 2.2, The Digital Competence Framework for Citizens: With New Examples of Knowledge, Skills and Attitudes. No. JRC128415. Brussels: Joint Research Centre. [Google Scholar]

- Wang, Fei-Yue, Jun Jason Zhang, Xinhu Zheng, Xiao Wang, Yong Yuan, Xiaoxiao Dai, Jie Zhang, and Liuqing Yang. 2016. Where Does AlphaGo Go: From Church-Turing Thesis to AlphaGo Thesis and Beyond. IEEE/CAA Journal of Automatica Sinica 3: 113–20. [Google Scholar] [CrossRef]

- Wang, Weiyu, and Keng Siau. 2019. Artificial Intelligence, Machine Learning, Automation, Robotics, Future of Work and Future of Humanity: A Review and Research Agenda. Journal of Database Management 30: 61–79. [Google Scholar] [CrossRef]

- Wienrich, Carolin, and Astrid Carolus. 2021. Development of an Instrument to Measure Conceptualizations and Competencies About Conversational Agents on the Example of Smart Speakers. Frontiers in Computer Science 3: 685277. [Google Scholar] [CrossRef]

- Wiljer, David, and Zaki Hakim. 2019. Developing an Artificial Intelligence–Enabled Health Care Practice: Rewiring Health Care Professions for Better Care. Journal of Medical Imaging and Radiation Sciences 50: S8–S14. [Google Scholar] [CrossRef] [PubMed]

- Wilkinson, Carroll Wetzel, and Courtney Bruch, eds. 2012. Transforming Information Literacy Programs: Intersecting Frontiers of Self, Library Culture, and Campus Community. ACRL Publications in Librarianship 64. Chicago: Association of College and Research Libraries, A Division of the American Library Association. [Google Scholar]

- Williams, Randi, Safinah Ali, Nisha Devasia, Daniella DiPaola, Jenna Hong, Stephen P. Kaputsos, Brian Jordan, and Cynthia Breazeal. 2023. AI + Ethics Curricula for Middle School Youth: Lessons Learned from Three Project-Based Curricula. International Journal of Artificial Intelligence in Education 33: 325–83. [Google Scholar] [CrossRef] [PubMed]

- Yang, Weipeng. 2022. Artificial Intelligence Education for Young Children: Why, What, and How in Curriculum Design and Implementation. Computers and Education: Artificial Intelligence 3: 100061. [Google Scholar] [CrossRef]

- Yi, Yumi. 2021. Establishing the Concept of AI Literacy: Focusing on Competence and Purpose. JAHR 12: 353–68. [Google Scholar] [CrossRef]

- Zhai, Xiaoming. 2022. ChatGPT User Experience: Implications for Education. SSRN Electronic Journal. [Google Scholar] [CrossRef]

| Category | Studies | Description |
|---|---|---|
| AI literacy as a competence for everyday life | (Dai et al. 2020; Su and Zhong 2022; Leichtmann et al. 2023; Laupichler et al. 2022; Kaspersen et al. 2022; Fyfe 2023; Yang 2022) | Studies see this literacy as a prerequisite for successful everyday life: literacy in the true sense of the word. Its absence is a significant handicap to understanding the world in which we live. |
| AI literacy as a prerequisite for future success in the labour market | (Cetindamar et al. 2022; Eguchi et al. 2021; Williams et al. 2023; Henry et al. 2021) | Studies link AI literacy to future employment or to competitive advantage. Skills related to working with AI need to be developed through concrete activities, applications and examples with a view to practical application. |
| AI literacy as part of the competence structure | (Ng et al. 2023b; Long et al. 2021; Wiljer and Hakim 2019; Carolus et al. 2023; Wienrich and Carolus 2021) | Studies understand AI literacy as part of a broader competence field from which it emerges or in which it is constituted. It is not isolated; it cannot be developed on its own but always stands in a specific structural arrangement with other skills, knowledge, and attitudes. |
| AI literacy as a composite structure | (Ng et al. 2021b, 2022, 2023a; Kong 2014; Southworth et al. 2023; Chen and Lin 2023) | These studies rely heavily on the work of Ng et al., who define AI literacy as a set of four components: (1) knowledge and understanding of AI; (2) use and application of AI; (3) creation and evaluation of AI tools; and (4) AI and ethics. Some use another, analogous composite structure. |
| AI literacy as a form of technical knowledge and skills | (Yi 2021; Chen and Lin 2023; Lin et al. 2021; Mertala et al. 2022; Adams et al. 2023) | AI literacy is primarily (though not exclusively) associated with using or understanding the technical means to implement AI in different ways to solve tasks. Thus, sufficient technical and computer science education comes first, and AI literacy extends it. |

| Level | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Overall | 22 | 11 | 20 | 13 | 5 | 2 | 0 |
| Average | 23.8 | 15.8 | 14.4 | 9.2 | 6.6 | 2.4 | 0.8 |
| Difference | 1.8 | 4.8 | −5.6 | −3.8 | 1.6 | 0.4 | 0.8 |
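
The Difference row appears to be the Average row minus the Overall row at each level. The short Python sketch below checks that reading against the tabulated values. The variable and function names are illustrative only, and the subtraction order is an assumption inferred from the numbers, not a procedure stated in the text.

```python
# Values copied from the table above (levels 1 to 7).
overall = [22, 11, 20, 13, 5, 2, 0]
average = [23.8, 15.8, 14.4, 9.2, 6.6, 2.4, 0.8]


def difference_row(average_counts, overall_counts):
    """Return average minus overall for each level, rounded to one decimal.

    Assumption: Difference = Average - Overall (inferred from the table).
    """
    return [round(a - o, 1) for a, o in zip(average_counts, overall_counts)]


differences = difference_row(average, overall)
print(differences)  # [1.8, 4.8, -5.6, -3.8, 1.6, 0.4, 0.8]
```

Under this assumption, every computed value matches the Difference row as published.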

| Question | Subtopics |
|---|---|
| What do you think artificial intelligence means? | |
| What AI can now be used for | |
| How do you think AI works? | |
| What ethical issues or challenges you may encounter when working with it | |
| What a person should know to be considered AI literate | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Černý, M. University Students’ Conceptualisation of AI Literacy: Theory and Empirical Evidence. Soc. Sci. 2024, 13, 129. https://doi.org/10.3390/socsci13030129
