Abstract

The application of deep learning in crack detection has become a research hotspot in Structural Health Monitoring (SHM). However, the potential of detection models is often limited due to the lack of large-scale training data, and this issue is particularly prominent in the crack detection of ancient wooden buildings in China. To address this challenge, “CrackModel”, an innovative dataset construction model, is proposed in this paper. This model is capable of extracting and storing crack information from hundreds of images of wooden structures with cracks and synthesizing the data with images of intact structures to generate high-fidelity data for training detection algorithms. To evaluate the effectiveness of synthetic data, systematic experiments were conducted using YOLO-based detection models on both synthetic images and real data. The results demonstrate that synthetic images can effectively simulate real data, providing potential data support for subsequent crack detection tasks. Additionally, these findings validate the efficacy of CrackModel in generating synthetic data. CrackModel, supported by limited baseline data, is capable of constructing crack datasets across various scenarios and simulating future damage, showcasing its broad application potential in the field of structural engineering.

1. Introduction

Structural Health Monitoring (SHM) plays a key role in ensuring the safety of infrastructures such as roads, bridges, and tunnels. By facilitating the early detection of minor damage, including cracks, corrosion, and deformation, SHM effectively prevents structural failures and prolongs the lifespan of these infrastructures [1]. Cracks serve as early indicators of structural degradation, making their monitoring and repair crucial for mitigating safety risks and avoiding economic losses [2,3,4]. In the field of SHM, the preservation of ancient buildings, which embody historical and cultural significance, is vital for cultural heritage conservation and historical research. A substantial proportion of the existing ancient buildings in China are constructed from wood. Over time, these wooden structures are prone to cracking due to the impacts of natural environments, climatic variations, and the biological properties of the wood. Such cracks not only compromise the aesthetic appeal of the buildings but may also undermine their structural stability and safety. Consequently, the detection of cracks in the wooden structures of ancient buildings is critically important for the preservation of cultural heritage and the maintenance of these architectural treasures.

In recent years, significant advancements have been made in the application of deep learning for crack detection in ancient wooden structures [5]. Notably, a crack detection approach based on You Only Look Once version 5 (YOLOv5) was proposed by us in 2021, addressing the limitations of traditional methods and enhancing both efficiency and accuracy [6]. Building on this foundation, our subsequent research in 2022 employed advanced models such as YOLO, Single Shot MultiBox Detector (SSD), and a Faster Region-based Convolutional Neural Network (R-CNN), which facilitated a more comprehensive exploration of deep learning applications in crack detection [7,8]. In 2023, innovative techniques were introduced for crack recognition and three-dimensional (3D) reconstruction using depth cameras, resulting in high-precision reconstructions with notable improvements in efficiency and cost [9]. These contributions not only enrich the theoretical framework of crack detection but also offer more effective and economical solutions for the preservation and maintenance of ancient buildings.

Despite these advancements, challenges remain in applying computer vision to crack detection and preventive protection. The primary issue is that the performance of deep learning models is significantly influenced by the quality and scale of the training datasets. Currently, data collection is heavily dependent on manual photography. This process is time-consuming and costly. It is frequently impacted by factors such as personnel expertise, weather conditions, and environmental influences, resulting in difficulties in obtaining high-quality and diverse data. In the field of crack detection in ancient wooden structures, other deep learning-based studies also struggle with data scarcity. Many ancient buildings are challenging to access or photograph due to their historical preservation requirements and cultural significance, which further limits the collection of crack data. Consequently, acquiring high-quality, diverse, and large-scale crack image data has become a critical factor in the detection of cracks in ancient wooden structures.

To address the challenge of data scarcity, methods for constructing a specialized dataset have been actively explored. This approach is inspired by the synthetic facial data developed by Microsoft’s MR & AI Lab in 2021 [10], which employed a parameterized facial model combined with diverse facial features (including texture, hair, and clothing) to generate facial images, effectively addressing the challenges associated with collecting real facial data. Therefore, a model for constructing a crack dataset for wooden structures, referred to as CrackModel, has been developed. This model extracts information from a limited number of crack images and simulates crack damage on images of intact wooden structures, facilitating the creation of a comprehensive dataset of crack images.

The development of CrackModel aims to overcome the limitations of traditional manual data collection, enabling the generation of crack data for various infrastructure scenarios, such as roads, bridges, and walls. Moreover, this model demonstrates significant advantages in simulating future damage. It can rapidly generate a substantial volume of damage data without requiring actual damage, while effectively capturing a range of rare or difficult-to-observe damage scenarios. This capability significantly enhances the diversity and comprehensiveness of the dataset. Consequently, this method not only expands the dataset’s scale but also provides robust data support for further research on and application of crack detection technologies.

CrackModel significantly enhances data diversity and comprehensiveness by synthesizing a large amount of future damage data. It can easily generate various rare or hard-to-observe damage scenarios without actual structural damage, providing extensive data support for studying potential damage in new buildings. This not only addresses the lack of damage data in the field of new construction but also helps engineers and researchers take preventive measures in the early stages of a project, leading to a better understanding of structural performance and potential risks.

This paper is organized into five sections. The first section serves as an introduction, establishing the context and significance of the research. This is followed by the second section, which offers a comprehensive review of existing data augmentation methods. Subsequently, the third section details the structural design of CrackModel, including its framework, implementation techniques, and the interactions among its components. The fourth section provides a validation of the synthetic dataset generated by CrackModel for crack detection, illustrated through an experimental case study conducted at the Bawang Academy of Shenyang Jianzhu University. Finally, the fifth section summarizes the research findings and outlines potential directions for future model optimization and research.

2. Related Work

Deep learning has achieved substantial progress in the field of crack detection, significantly enhancing both the efficiency and accuracy of detection methods. Currently, two primary approaches enhance crack detection performance through deep learning: algorithm optimization and the acquisition of high-quality, large-scale datasets. However, while research on algorithm optimization is relatively extensive, there is little research on the construction of large-scale and high-quality datasets. To address the issue of data scarcity in industrial applications, the crack detection performance of YOLOv5 has been successfully improved through model optimization, even with a mere 80 images of limited samples [11]. Furthermore, high-precision damage detection has been achieved under conditions of scarce labeled samples by enhancing the few-shot learning methodology of Prototypical Networks (ProtoNet) [12], effectively tackling the challenge of insufficient labeled data in automated bridge visual inspections. Although model optimization has substantially improved the detection accuracy of few-sample image data, data scarcity continues to be a critical limiting factor in the advancement of deep learning. Consequently, a method is proposed to construct large-scale and high-quality image datasets, ensuring that the quality of the generated image data aligns closely with that of real-world data.

Currently, the construction of datasets primarily relies on manual image collection, a resource-intensive process that poses challenges in achieving efficient, economical, and comprehensive data coverage. Therefore, the use of synthetic images to augment datasets presents an effective solution. Synthetic image datasets are now increasingly favored, particularly in fields that necessitate large amounts of labeled data for training and optimizing models, such as medical image analysis, autonomous driving technology, and facial recognition systems. For instance, the application of denoising diffusion probabilistic models to generate chest X-ray images has effectively addressed challenges related to data scarcity and class imbalance in the field of medical imaging [13]. Additionally, the Synchronous Anisotropic Diffusion Equation (S-ADE) has been employed to fuse multimodal information from Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) images [14], resulting in synthetic images that integrate both imaging modalities, thereby providing more comprehensive medical image data. In the field of autonomous driving, diverse synthetic images of driving scenarios have been generated, reducing the cost of acquiring high-quality training data and enhancing the performance and reliability of autonomous driving technologies in various scenarios [15]. Moreover, the Range Image Fusion (RI-Fusion) technique [16] has been utilized to convert Light Detection and Ranging (LiDAR) point clouds into compact range views and fuse them with camera images. It effectively addresses the issues of LiDAR data sparsity and false detection, thereby significantly improving the accuracy of 3D (three-dimensional) object detection. To tackle the data scarcity issue in facial recognition, synthetic facial images with identity mixing and domain mixing characteristics have been created [17]. This deals with challenges such as label noise, privacy protection, and the lack of detailed annotations in real facial data collection.

Image data synthesis techniques can be categorized into traditional synthesis methods and deep learning-driven methods. Traditional image synthesis techniques encompass methods such as image stitching, fusion, reconstruction, and repair, which achieve intuitive editing and optimization of image content through pixel-level adjustments. In image fusion, wavelet transform fusion [18] generates high-quality images through multi-scale decomposition and frequency band fusion. Its variant, wavelet transform [19], extends the capabilities of frequency and spatial processing, while non-downsampling wavelet transform [20] overcomes the downsampling issues of traditional wavelet transforms, enhancing detail preservation and image quality. In image stitching technology, the Random sample Consensus (RANSAC) variant [21] RANSAC++ combines nonlinear and global optimization, enhancing the algorithm’s robustness in complex scenarios. Additionally, the Laplacian pyramid [22], a multi-resolution processing technique, is widely applied in various image processing tasks. In recent years, the rise of deep learning has further promoted the development of image synthesis. Generative Adversarial Networks (GANs) [23] utilize adversarial training to generate high-quality images, with variants such as conditional GANs (cGANs) and self-attention GANs (SAGANs) further enhancing control over the generation process and improving detail presentation. Besides GANs, Variational Autoencoders (VAEs) [24] and diffusion models [25] have also performed excellently in image synthesis, capable of generating diverse and high-fidelity images. Furthermore, in the synthesis of 3D image data, T. Kikuchi and his team have employed image translation techniques and procedural modeling to generate synthetic street scene images for training building facade instance segmentation. This approach addresses the high costs associated with traditional manual dataset generation while improving data generation efficiency and facilitating the automatic creation of annotated data [26]. The Microsoft team [10] has synthesized facial data through 3D modeling and rendering, bridging the domain gap between real and synthetic data in field-based facial recognition.

Although numerous methods have been employed for generating synthetic image data, their usability and cost-effectiveness based on experimental requirements remain critical factors in practical applications. Based on the specific techniques of traditional and deep learning methods described earlier, it is clear that each of these methods has its own advantages and limitations in the field of image synthesis. Traditional synthesis techniques are favored for their lower computational complexity, rapid processing speed, and controllability of results. However, these methods may be limited by the quality of input images and preprocessing steps, often requiring manual intervention and adjustments. In contrast, deep learning methods without the need for manual feature extraction significantly enhance the naturalness and diversity of synthetic images through their self-learning capabilities. Nonetheless, these methods depend on substantial computational resources and high-quality training data. Moreover, post-processing is imperative to ensure quality due to the uncertainty and randomness of the generated images. While 3D modeling and rendering technologies demonstrate specialization and precision in terms of specific applications, they also face high computational resource demands and technical barriers, making the production process time-consuming.

To address these challenges, an image synthesis model integrating traditional image processing with deep learning techniques has been developed to construct a cost-effective crack dataset. This model leverages limited foundational data to synthesize large-scale data efficiently and economically, demonstrating broad application potential across various structural engineering domains, such as roads and bridges. Furthermore, the crack feature data preserved during the processing of this model facilitates the continuous generation of data and its extension into other structural fields.

3. Model Introduction

CrackModel is designed to construct datasets specifically for damage structures such as cracks, as illustrated in Figure 1. This model consists of four key components: crack extraction, crack storage, crack synthesis, and effect verification. The datasets generated at each stage of the model are saved and maintained independently.

Figure 1.

Structure of CrackModel. The model acquires the original dataset through diversified data collection strategies. Synthetic images are ultimately produced after three stages: crack extraction, information storage, and crack synthesis. A detection model is employed to rigorously validate the effectiveness of the synthetic images.

3.1. Crack Extraction Process

3.1.1. Data Collection

In the initial phase of constructing CrackModel, data collection serves not only as the starting point of the entire modeling process but also as a critical step to ensure the quality and functionality of the model, providing the necessary foundational data for the entire process.

To ensure data quality and collection efficiency, it is essential to select appropriate image acquisition equipment. This typically involves advanced technologies such as high-resolution cameras, drones, and video monitoring systems to capture crack features across various scenarios.

Cracks, with a wide variety of types, are common defects in engineering structures, including but not limited to pavement cracks, bridge cracks, and building cracks. Since the formation of cracks is influenced by their specific environments, it is necessary to conduct a thorough analysis of the characteristics of different types of cracks during the data collection process to ensure that the collected data comprehensively reflect the diversity and complexity of cracks.

In the data collection of road cracks, challenges such as dynamic interference and cost are prevalent. Road cracks are characterized by complex and diverse forms, which are affected by multiple factors, including traffic flow and climatic changes. The frequent movement of vehicles and pedestrians increases the difficulty of crack detection. As a result, the equipment should be specialized, and costs will be higher. For the data collection of bridge cracks, it is crucial to consider dynamic traffic loads and the unique characteristics of bridge structures. Traffic loads have a direct impact on the formation and development of bridge cracks. In particular, the vibrations and repetitive stresses caused by vehicles may accelerate crack development. Moreover, detecting bridge cracks involves monitoring not only the bridge deck but also the substructure, adding to the complexity and difficulty of the collection process. In the data collection of building cracks, the characteristics of cracks can vary significantly due to differences in building materials. Modern constructions, owing to advancements in construction techniques, typically demonstrate high structural integrity, resulting in a relatively small number of cracks. Most of the cracks are fine ones that are difficult to detect using image recognition technology, which constrains the efficiency and scope of data collection. In contrast, ancient wooden structures, due to their natural properties and sensitivity to environmental changes, are more prone to cracking over long-term use or under the influence of environmental factors. Although image recognition may be affected by lighting and environmental conditions, wooden structure cracks with diverse forms and direct reflection of environmental changes are valuable sample resources for related research.

In summary, the data collection for different types of cracks is associated with specific challenges and requirements. In comparison, wooden structures are easier to be sampled due to their static nature and the prominence of cracks. This characteristic renders them a preferable choice for data collection. The data collection of cracks in structures such as roads and bridges is associated with higher costs and difficulties, requiring selection based on practical needs and resource investment. In practical applications, data collection strategies should be optimized based on different crack types and environmental factors, which can alleviate the collection burden for subsequent work and provide a high-quality data foundation for experimental research.

3.1.2. Crack Detection and Extraction

The objective of crack extraction is to accurately identify cracks in images and separate them from the background, thereby obtaining images or information solely related to the crack regions. Subsequently, the extracted data can be integrated into other images without structural damage to simulate and predict structural deterioration and degradation.

In the process of crack extraction, analyzing the differences between cracks on various materials (as shown in Figure 2) and their backgrounds is crucial for the development and adaptation of targeted and efficient crack extraction algorithms. For instance, metal cracks are characterized by their elongated shape, sharp edges, and high contrast. Moreover, they may appear either linear or convoluted. Concrete cracks, on the other hand, can be straight, curved, or network-like, including surface micro-cracks, shrinkage cracks, and structural cracks, which exhibit variability in width and depth as well as low contrast with the background. Cracks in wooden structures are often influenced by the wood grain and growth ring orientation. These cracks typically present as longitudinal splits or warping, with irregular edges and potential wood grain fissures that result in blurred edges. Algorithms need to be optimized to account for the texture and contrast variations across different materials to ensure accurate extraction of crack features.

Figure 2.

Cracks on different materials: (a) cracks in metallic materials; (b) cracks in concrete materials; (c) cracks in wooden structures.

After obtaining the crack images, the extraction process can be implemented through various methods, including traditional image processing techniques and deep learning-based approaches. Traditional image processing techniques focus on using edge detection algorithms [27], such as the Sobel operator [28] and Canny edge operator [29], which precisely locate crack edges by identifying regions with significant grayscale variations. Subsequently, image segmentation techniques, such as thresholding [30], region growing [31], and clustering algorithms [32], are employed to effectively separate the crack regions from the background. In recent years, deep learning techniques, particularly Convolutional Neural Networks (CNNs) [33], have achieved substantial progress in the field of image segmentation. This technology enables the precise identification and segmentation of crack regions directly from complex crack images through automated feature extraction and pattern recognition. Notably, network architectures specifically designed for image segmentation, such as U-Net [34] and SegNet [35], have become mainstream choices for handling such tasks. For example, in U-Net, the encoder effectively captures the contextual features of the crack images through convolutional and pooling layers, while the decoder progressively restores the details of the crack regions through upsampling and feature fusion, thereby achieving direct mapping from the input to the accurately segmented output. The advantages of these deep learning methods lie in their efficiency in automated feature extraction and high precision in crack recognition and segmentation.

During the crack extraction phase, through comprehensive analysis of the characteristics of cracks in different materials, appropriate extraction methods can be selected to ensure the precise extraction of crack regions, thereby providing reliable data support for subsequent analysis and applications.

3.2. Crack Storage

Once a substantial number of images containing cracks has been identified and the cracks have been recognized using algorithms, various methods can be employed for storing the crack information. Common storage methods include pixel-level storage and background removal storage.

The first method is pixel-level storage. This approach involves extracting crack information from each image on a pixel-by-pixel basis and saving the RGB values for each pixel. Specifically, a new data file that records the position of each pixel along with its corresponding RGB value can be created. The advantage of this method lies in the accurate preservation of all details about the cracks, including color, shape, and position. Since the crack information is saved on a pixel-by-pixel basis, this method is particularly suitable for applications that require high precision in analysis and processing. However, this method also has some drawbacks. Firstly, it generates a large amount of data, which requires more storage space and computational resources for saving and processing. Secondly, the data format is relatively complex, which can hinder direct visualization and rapid processing.

The second method involves converting other background portions to transparent colors, retaining only the image content of the cracks, and saving it in PNG or TIFF format. In practice, image processing software or programming languages can be utilized to preserve the detected crack areas while the other parts are filled with white. The advantage of this method is that the generated image files are more visual, making it easier to view and analyze directly. Additionally, PNG and TIFF formats support transparent backgrounds and lossless compression, which helps maintain the integrity of the crack information. Another benefit of this method is that the resulting files are relatively small, facilitating storage and transmission. However, it also has certain limitations. By removing the background, the spatial relationship between the cracks and the original image may be disrupted, potentially affecting applications that require the overall image context. Furthermore, the image processing steps may introduce some errors, especially in complex backgrounds, which could lead to the loss or distortion of crack information.

In summary, pixel-level storage and background removal storage both have their advantages and disadvantages. Pixel-level storage is suitable for scenarios that require high precision and detailed analysis, despite its larger data volume and complex processing. In contrast, background removal storage is more visual and easier to view. Though the spatial relationship between cracks and the background may be disrupted, it is suitable for applications that require quick processing and analysis. It is necessary to choose an appropriate method based on specific needs to better preserve and utilize crack information.

3.3. Image Synthesis

In the process of constructing a high-quality synthetic dataset, the integration of cracks with background images is a crucial step. This process involves multiple rules and techniques to ensure the authenticity and diversity of the synthetic images.

Firstly, to enhance the diversity of the synthetic images, random sampling [36] is performed from the crack dataset extracted in the previous phase. This dataset includes pixel-level annotated crack information or images of crack regions with the background removed. Algorithms such as simple random sampling, stratified sampling, or cluster sampling can be employed to achieve uniform sampling within large datasets, ensuring the diversity and representativeness of the samples, thereby providing rich crack features for the synthetic images. Next, when embedding the cracks into the background, a pseudo-random number generator [37] is utilized to determine the position of the cracks within the background image, increasing the diversity of the images and enhancing the model’s generalization capability.

Moreover, it is equally important to ensure the authenticity of the synthetic images. In addition to a reasonable simulation of the natural distribution of cracks, this requires avoiding overlaps between cracks and controlling the number of cracks with an appropriate range. Typically, spatial distribution algorithms, such as the quadtree data structures [38], are utilized to efficiently manage and query the spatial locations of the cracks, ensuring that there is adequate spacing between them and optimizing the distribution of cracks. Moreover, the number of cracks should be adjusted based on the size of the image and the specific application scenario. For instance, in applications such as road or building detection, the number of cracks can be flexibly adjusted according to actual needs.

Based on the previously mentioned rules, image synthesis can further be conducted using traditional image techniques or deep learning methods to ensure seamless integration of images. Subsequently, post-processing steps such as rotation, scaling, and color adjustment are applied to enhance the visual effects and improve the diversity of the images.

The synthetic image data are created by embedding randomly selected crack samples into background images according to predetermined rules, resulting in realistic and diverse crack images. This method effectively expands the scale and diversity of the dataset. For example, by utilizing 500 crack samples and adjusting the number and position of the cracks in various combinations, up to 10,000 crack images against different backgrounds can be generated. This synthetic strategy significantly enriches the dataset, providing a solid foundation for enhancing the generalization ability and accuracy of model training.

3.4. Effect Verification

Effect verification is a critical step to ensure the quality of the dataset.

The success of the synthetic images primarily depends on the following conditions and characteristics. First, the images should closely resemble the visual quality of real-world images. Second, the crack features in the synthetic images should be similar to those of actual cracks. Finally, the synthetic images should maintain distribution characteristics similar to those of the original dataset.

To verify the achievement of these standards, systematic evaluation experiments need to be conducted. Various methods should be employed in these experiments to comprehensively analyze the effectiveness of the synthetic images. For example, benchmark tests can be used to compare the synthetic results with existing data to assess the specific impact of the synthetic images on model performance. Additionally, cross-dataset validation can be employed to evaluate the model’s generalization ability. Ultimately, these experiments will be assessed using performance indicators such as accuracy, recall rate, F1 score, Intersection over Union (IoU), and mean Average Precision (mAP).

Furthermore, selecting an appropriate object detection model is crucial for the smooth execution of the evaluation experiments. Models such as Faster R-CNN [39] and YOLO [40] are particularly exemplary. The YOLO model in particular is favored for its rapid and accurate detection capabilities. This model achieves efficient detection, recognition, and localization of objects in images through a single Convolutional Neural Network architecture.

During the effect verification phase of the synthetic images, a comprehensive assessment of the synthesis method can be conducted through diverse experimental designs and model evaluations, combined with the analysis of various performance metrics. Effective verification ensures the fidelity of the synthetic images and the accuracy of the crack features while confirming the diversity and balance of the dataset, thereby enhancing the robustness and generalization ability of deep learning models. Additionally, based on feedback from key indicators, the synthesis methods can be adjusted to make the statistical characteristics of the dataset closer to those of real data, providing a scientific basis for further optimization of the synthesis algorithms.

4. Model Validation

4.1. Dataset Acquisition and Preparation

4.1.1. Data Acquisition

In the field of crack maintenance and detection for ancient wooden structures, extensive research and practical experience has been leveraged with professional personnel and tools capable of capturing crack images from multiple angles. This has provided a wealth of primary data for the research. Currently, a crack dataset has been successfully constructed, named DataSource (https://github.com/memymine-meng/CrackImages.git, accessed on 24 March 2025), which contains 594 images of cracks in ancient wooden structures. Among these, a dataset of 474 crack images has been publicly released. The data were collected from the Bawang Academy, a building that was originally part of the Bawang Temple established in 1415 and has a history of over 600 years. As a result of prolonged exposure to environmental factors and insufficient professional personnel and high-end equipment, the wooden structure has developed numerous cracks, which are distributed across columns, beams, doors, and windows.

4.1.2. Data Preprocessing

Due to the uncontrollability of the quality of manually-captured images, alongside the inherent characteristics of cracks, the images needed to be screened. As a result, those images that did not meet quality standards due to inaccurate focusing, inadequate lighting conditions, or other visual interferences had to be excluded. Subsequently, a series of preprocessing operations were performed on the filtered images, including image sharpening, noise reduction, and contrast enhancement, to improve their quality and usability. Finally, to increase the diversity of the dataset and enhance the model’s generalization capabilities, data augmentation techniques were employed, which included rotation, scaling, cropping of images, and potential color transformations, to simulate various shooting conditions and perspectives.

4.2. Crack Extraction Experiment

In the experiment for crack region extraction, an end-to-end deep learning architecture—specifically the U-Net model—was employed to achieve precise segmentation of crack regions, and these regions were isolated from the original images using image processing techniques (see Algorithm 1).

| Algorithm 1: Crack Extraction Algorithm. |

| Input: Original image dataset I Output: Crack regions dataset K 1 model = UNet(in_channels = 3, out_channels = 1) 2 model.load_state_dict(torch.load(’unet_model.pth’)) 3 with torch.no_grad(): 4 output = model(input) // Use the model for prediction 5 binary_mask = (output > 0.5) //Obtain binary mask 6 save(binary_mask, “S”) 7 for each image in S: 8 inverted_image = invert(image) 9 transparent_image(inverted_image) // Create a four-channel transparent image 10 transparent_image[:, :, 0:3] = inverted_image 11 transparent_image[:, :, 3] = 255 12 save(transparent_image, “O”) 13 Endfor 14 for each image in I: 15 original_image = I 16 transparent_image = O 17 if I ≠ ∅, O ≠ ∅ 18 output_image = create_output_image(original_image, transparent_image) 19 for c in range(3): 20 output_image[:, :, c] = original_image[:, :, c] 21 if transparent_image[:, :, 3] > 0 else 0 22 output_image[:, :, 3] = transparent_image[:, :, 3] 23 save(output_image, “K”, format = “png”) 24 Endfor |

To ensure a sufficient supply of crack segmentation masks for future use, the U-Net model was utilized for automatic segmentation of crack images. Initially, 100 crack images were manually annotated with precision, clearly defining the crack regions to create a high-quality training dataset. These annotated data were then used to train the U-Net model. During the training process, the model’s performance was optimized by adjusting hyperparameters and applying data augmentation techniques, enabling the model to learn and differentiate features between crack and non-crack regions. Upon completion of the training, the U-Net model was employed to generate segmentation masks for 500 crack images, with some of the segmentation results displayed in Figure 3.

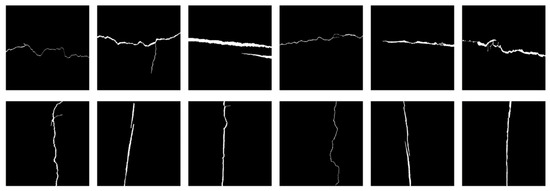

Figure 3.

Segmentation masks of crack images.

Subsequently, image processing techniques were applied to further refine the segmentation masks generated by U-Net. By performing pixel-by-pixel channel processing on the segmentation masks and the original images, the crack regions were visually separated as distinct entities to ensure clarity and completeness of the crack features. The results of this process are shown in Figure 4. The extracted crack regions were saved to the crack dataset Crack (https://github.com/memymine-meng/CrackImages.git, accessed on 24 March 2025). Crack serves as a multi-dimensional data resource, providing abundant resources for crack classification, feature analysis, and training of machine learning models. Additionally, it can support future crack research in civil engineering, materials science, and other related fields.

Figure 4.

Images of extracted crack regions.

4.3. Crack Synthesis Experiment

In this section, based on fuzzy processing, a crack synthesis algorithm is proposed (see Algorithm 2). The algorithm is designed to seamlessly embed crack regions into the background images of undamaged wooden structure.

| Algorithm 2: Crack Synthesis Algorithm |

| Input: Crack region dataset K, Background image L Output: Synthesized data P 1 Function BlendCrackWithBackground(K, L, P, feather_radius): 2 if K ≠ ∅, L ≠ ∅ 3 If L is portrait and crack_image is landscape: 4 crack_image = rotate(crack_image, 90) 5 If L is landscape and crack_image is portrait: 6 crack_image = rotate(crack_image, 90) 7 x_offset = Randomly select x position for placing K 8 y_offset = Randomly select y position for placing K // Extract the Alpha channel of the crack image and apply feathering 9 alpha_channel = Extract Alpha channel from crack_image 10 blurred_alpha = Apply Gaussian Blur to Alpha channel with radius feather_radius 11 For each color channel (R, G, B): 12 output_image[:,:, c] = blend_channel(crack_image[:, :, c], background_image[:, :, c], blurred_alpha) 13 Update the color channel in the output_image 14 Save output_image to P 15 EndFunction |

The core of the crack synthesis algorithm is the seamless integration of cracks with the background through Gaussian blur [41] and weighted averaging techniques [42]. The first step involves feathering the crack regions from the crack library Crack. By repeatedly applying Gaussian blur, the sharpness of the crack edges is gradually reduced, and the degree of blurriness of the Alpha channel is increased. Specifically, the kernel size for Gaussian blur is defined as feather_radius * 2 + 1, where feather_radius determines the radius of the feathering effect. The choice of this radius is crucial for achieving a satisfactory feathering outcome, as it ensures a smoother transition between the crack edges and the background, effectively reducing unnatural seams in the synthesized image. In the second step, a weighted averaging method is employed to blend the crack image with the background image. By calculating the weighted average of the pixel values from both the crack region and the background image, a natural transition is ensured in terms of color and brightness between the crack region and the background image. The weighted averaging fusion takes into consideration not only the pixel values of the crack region but also those of the background image, thereby achieving a visually seamless integration of the cracks with the background. The background images used in this experiment are a limited number of undamaged wooden structure images artificially collected under natural lighting conditions, which enhances the fidelity of the synthesized images.

By the use of the above method, a total of 5500 images were synthesized by integrating the crack regions from real images into the background images, which are stored in the synthesized dataset Synthesized_Image (https://github.com/memymine-meng/CrackImages.git, accessed on 24 March 2025), as shown in Figure 5. These synthesized images will be utilized for subsequent training of detection models.

Figure 5.

Synthesized images.

4.4. Validation with YOLO Model

In this study, the YOLO v8 object detection model for evaluation was employed to validate the effectiveness of the synthesized crack images generated by CrackModel. To ensure the reliability and comprehensiveness of the experimental results, two experimental methods were implemented with a total of 594 real images and 5500 synthesized images.

The first method is cross-dataset evaluation, whereby the model was trained on synthesized images and tested on real images to assess the model’s generalization performance on unseen real data. This approach effectively avoids overfitting to specific features of the training data, compared to traditional internal dataset evaluations. To ensure fairness in the experiment, the real dataset was divided into training, validation, and testing sets in an 8:1:1 ratio, and 594 synthesized images were randomly selected for partitioning in the same proportion. The experimental results are detailed in Table 1.

Table 1.

Results of cross-dataset testing.

The experimental results indicate that the model trained on synthetic data outperforms the baseline model (trained on real images) in terms of mAP@0.5, with the F1-score of the baseline model being 0.827 and the F1-score of the synthetic data-trained model being 0.783, showing a small difference between the two. Although synthetic images slightly underperform in precision and recall, which may lead to a small number of false positives and missed detections, the results still demonstrate that synthetic data can achieve performance similar to real data in training and show potential in crack detection tasks. In scenarios with insufficient data, synthetic data can serve as an effective supplement. To further validate that synthetic data meets practical application needs, we conducted a second experiment.

In the second experiment, we compared the training performance of real data and mixed data (containing both real and synthetic images). The experimental results are detailed in Table 2.

Table 2.

Comparison of real and mixed data in YOLOv8 experiment results.

The experimental results in Table 2 show that the mixed-data model outperforms the model trained solely on real images across multiple metrics, including precision, recall, and mAP. This indicates that synthetic data provide additional valuable information, especially in data-scarce situations, where the mixed-data strategy significantly enhances model performance. By combining the advantages of real and synthetic images, the mixed-data approach enriches feature information during training, improving the model’s adaptability to diverse data characteristics, thereby enhancing its applicability and robustness in real-world applications.

Although synthetic data have approached real data in some experimental metrics, there is still a gap in visual authenticity. The limitations of synthetic data are mainly manifested in their inability to fully capture the complexity and diversity of the real world, such as (1) the difficulty in simulating lighting changes: real images are often in environments with strong side lighting, scattered light, or complex shadows, while synthetic data are usually based on uniform lighting, which limits their ability to simulate real-world lighting changes; (2) the complete fusion of background and crack data: the edges of real wood surface cracks may show phenomena such as fiber breakage, indentation, and accumulation of tiny debris, while current synthetic methods based on texture blending and transparency adjustment may not be sufficient to fully present these details; (3) although it is possible to synthesize crack images, generating new crack images still requires a large number of background images to place the extracted cracks. Therefore, another challenge of the synthetic data method is that it still requires the collection of a large number of background images.

In summary, synthesized image data can be effective alternatives to real data, particularly when data are scarce. Therefore, synthesized image data can serve as an efficient and cost-effective alternative resource. The synthesized dataset, with its cost-effectiveness and diversity, provides a rich sample for model training, which is crucial in scenarios where collecting real data is challenging or costly. Furthermore, the controllability of synthesized images presents additional advantages for model training, allowing for customized data generation based on specific crack features. It significantly enhances the model’s precision in recognizing details. This study not only confirms the effectiveness of the synthesized dataset but also validates the efficiency of CrackModel in constructing crack datasets.

5. Conclusions

With the in-depth application of computer vision technology in the field of SHM, this study has developed an image synthesis model, CrackModel, which combines deep learning with traditional image processing techniques to generate high-quality training data for crack detection. Systematic experiments with CrackModel were conducted with crack images collected from Bawang Academy, a wooden structure with a long history. The results indicate that the model exhibits strong effectiveness and applicability in detecting cracks in wooden structures. It has extensive potential for cross-domain applications, and can provide effective support for crack research in other types of building structures, which also offers insights for potential directions in future research.

Despite the promising potential of synthesized images as substitutes for real data in the experiments, several challenges remain to be addressed in future research. The dataset construction process is to be further optimized, and algorithms could be enhanced for more precise crack extraction. Additionally, efforts could be made to improve the fidelity of synthesized images, particularly in handling the complex shapes of cracks and the variable characteristics of backgrounds. The latest research findings and technological advancements in the field will be reviewed and incorporated into CrackModel in a timely manner, ensuring the model’s sophistication and practicality. Furthermore, to further validate the generalizability of CrackModel, future experiments will be expanded to a broader range of scenarios, including different types of wood, wooden structures from different periods, and diverse styles of wooden architecture (such as ancient pagodas, bridges, and traditional wooden houses). By testing CrackModel in these varied scenarios, we will be able to comprehensively evaluate its effectiveness, thereby providing solid support for its widespread application in the field of Structural Health Monitoring.

Author Contributions

Conceptualization, J.M. and G.L.; methodology, Y.M. and G.L.; validation, Y.M. and X.G.; investigation, J.M. and X.G.; resources, J.M. and W.Y.; data curation, J.M. and W.Y.; writing—original draft, Y.M.; writing—review and editing, J.M., W.Y. and G.L.; visualization, Y.M.; supervision, J.M. and G.L.; project administration, Y.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Basic Scientific Research Project (General Program) of Higher Education Institutions of the Liaoning Provincial Department of Education (LJKZ0595).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in GitHub at https://github.com/memymine-meng/CrackImages.git (accessed on 24 March 2025). Due to privacy concerns, access to certain parts of the dataset is restricted and will be provided under appropriate data-sharing agreements.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ghaffari, A.; Shahbazi, Y.; Mokhtari Kashavar, M.; Fotouhi, M.; Pedrammehr, S. Advanced Predictive Structural Health Monitoring in High-Rise Buildings Using Recurrent Neural Networks. Buildings 2024, 14, 3261. [Google Scholar] [CrossRef]

- Liu, L.; Shen, B.; Huang, S.; Liu, R.; Liao, W.; Wang, B.; Diao, S. Binocular Video-Based Automatic Pixel-Level Crack Detection and Quantification Using Deep Convolutional Neural Networks for Concrete Structures. Buildings 2025, 15, 258. [Google Scholar] [CrossRef]

- Sohaib, M.; Arif, M.; Kim, J.-M. Evaluating YOLO Models for Efficient Crack Detection in Concrete Structures Using Transfer Learning. Buildings 2024, 14, 3928. [Google Scholar] [CrossRef]

- Rajesh, S.; Jinesh Babu, K.S.; Chengathir Selvi, M.; Chellapandian, M. Automated Surface Crack Identification of Reinforced Concrete Members Using an Improved YOLOv4-Tiny-Based Crack Detection Model. Buildings 2024, 14, 3402. [Google Scholar] [CrossRef]

- Zhou, J.; Ning, J.; Xiang, Z.; Yin, P. ICDW-YOLO: An Efficient Timber Construction Crack Detection Algorithm. Sensors 2024, 24, 4333. [Google Scholar] [CrossRef]

- Ma, J.; Yan, W.; Liu, G. Research on crack detection method of wooden ancient building based on YOLO v5. J. Shenyang Jianzhu Univ. Nat. Sci. 2021, 37, 927–934. [Google Scholar] [CrossRef]

- Ma, J.; Yan, W.; Liu, G. Complex texture contour feature extraction method of cracks in timber structures of ancient architecture based on deep learning. J. Shenyang Jianzhu Univ. Nat. Sci. 2022, 38, 896–903. [Google Scholar]

- Ma, J.; Yan, W.; Liu, G.; Xing, S.; Niu, S.; Wei, T. Complex texture contour feature extraction of cracks in timber structures of ancient architecture based on YOLO algorithm. Adv. Civ. Eng. 2022, 2022, 7879302. [Google Scholar] [CrossRef]

- Yan, W.; Shi, R.; Wang, J.; Liu, G.; Ma, J. Joint multi-resolution 3D reconstruction method for crack identification in timber structures of ancient architecture based on depth cameras. J. Shenyang Jianzhu Univ. Nat. Sci. 2023, 39, 518–524. [Google Scholar]

- Wood, E.; Baltrušaitis, T.; Hewitt, C.; Dziadzio, S.; Cashman, T.J.; Shotton, J. Fake it till you make it: Face analysis in the wild using synthetic data alone. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3681–3691. [Google Scholar] [CrossRef]

- Hu, N.; Yang, J.; Jin, X.; Fan, X. Few-shot crack detection based on image processing and improved YOLOv5. J. Civ. Struct. Health Monit. 2023, 13, 165–180. [Google Scholar] [CrossRef]

- Gao, Y.; Li, H.; Fu, W. Few-shot learning for image-based bridge damage detection. Eng. Appl. Artif. Intell. 2023, 126, 107078. [Google Scholar] [CrossRef]

- Paproki, A.; Salvado, O.; Fookes, C. Synthetic Data for Deep Learning in Computer Vision & Medical Imaging: A Means to Reduce Data Bias. ACM Comput. Surv. 2024, 56, 1–37. [Google Scholar] [CrossRef]

- Zhu, R.; Li, X.; Zhang, X.; Ma, M. MRI and CT medical image fusion based on synchronized-anisotropic diffusion model. IEEE Access 2020, 8, 91336–91350. [Google Scholar] [CrossRef]

- Song, Z.; He, Z.; Li, X.; Ma, Q.; Ming, R.; Mao, Z.; Pei, H.; Peng, L.; Hu, J.; Yao, D.; et al. Synthetic datasets for autonomous driving: A survey. IEEE Trans. Intell. Veh. 2023, 9, 1847–1864. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, L.; Zhang, G.; Lan, T.; Zhang, H.; Zhao, L.; Li, J.; Zhu, L.; Liu, H. RI-Fusion: 3D object detection using enhanced point features with range-image fusion for autonomous driving. IEEE Trans. Instrum. Meas. 2022, 72, 5004213. [Google Scholar] [CrossRef]

- Qiu, H.; Yu, B.; Gong, D.; Li, Z.; Liu, W.; Tao, D. Synface: Face recognition with synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10880–10890. [Google Scholar] [CrossRef]

- Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693. [Google Scholar] [CrossRef]

- Cocchi, M.; Seeber, R.; Ulrici, A. WPTER: Wavelet packet transform for efficient pattern recognition of signals. Chemom. Intell. Lab. Syst. 2001, 57, 97–119. [Google Scholar] [CrossRef]

- Guorong, G.; Luping, X.; Dongzhu, F. Multi-focus image fusion based on non-subsampled shearlet transform. IET Image Process. 2013, 7, 633–639. [Google Scholar] [CrossRef]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Fast and accurate image super-resolution with deep laplacian pyramid networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2599–2613. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680. [Google Scholar]

- Kingma, D.P. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114. [Google Scholar] [CrossRef]

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851. [Google Scholar]

- Kikuchi, T.; Fukuda, T.; Yabuki, N. Development of a synthetic dataset generation method for deep learning of real urban landscapes using a 3D model of a non-existing realistic city. Adv. Eng. Inform. 2023, 58, 102154. [Google Scholar] [CrossRef]

- Jing, J.; Liu, S.; Wang, G.; Zhang, W.; Sun, C. Recent advances on image edge detection: A comprehensive review. Neurocomputing 2022, 503, 259–271. [Google Scholar] [CrossRef]

- Xiao, H.; Xiao, S.; Ma, G.; Li, C. Image Sobel edge extraction algorithm accelerated by OpenCL. J. Supercomput. 2022, 78, 16236–16265. [Google Scholar] [CrossRef]

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698. [Google Scholar] [CrossRef]

- Sahoo, P.K.; Soltani, S.; Wong, A.K.C. A survey of thresholding techniques. Comput. Vis. Graph. Image Process. 1988, 41, 233–260. [Google Scholar] [CrossRef]

- Hojjatoleslami, S.A.; Kittler, J. Region growing: A new approach. IEEE Trans. Image Process. 1998, 7, 1079–1084. [Google Scholar] [CrossRef]

- Ezugwu, A.E.; Ikotun, A.M.; Oyelade, O.O.; Abualigah, L.; Agushaka, J.O.; Eke, C.I.; Akinyelu, A.A. A comprehensive survey of clustering algorithms: State-of-the-art machine learning applications, taxonomy, challenges, and future research prospects. Eng. Appl. Artif. Intell. 2022, 110, 104743. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Olken, F.; Rotem, D. Random sampling from databases: A survey. Stat. Comput. 1995, 5, 25–42. [Google Scholar] [CrossRef]

- Bhattacharjee, K.; Das, S. A search for good pseudo-random number generators: Survey and empirical studies. Comput. Sci. Rev. 2022, 45, 100471. [Google Scholar] [CrossRef]

- Samet, H. The quadtree and related hierarchical data structures. ACM Comput. Surv. 1984, 16, 187–260. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677. [Google Scholar] [CrossRef]

- Deng, G.; Cahill, L.W. An adaptive Gaussian filter for noise reduction and edge detection. In Proceedings of the 1993 IEEE Conference Record Nuclear Science Symposium and Medical Imaging Conference, San Francisco, CA, USA, 31 October–6 November 1993; pp. 1615–1619. [Google Scholar] [CrossRef]

- Mosig, J. The weighted averages algorithm revisited. IEEE Trans. Antennas Propag. 2012, 60, 2011–2018. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).