1. Introduction

Ultra-high-performance concrete (UHPC) emerged as a significant and innovative construction material in the mid-1990s [

1,

2]. Ever-increasing research on UHPC has led to its widespread application globally, particularly in the construction of bridges, infrastructures, and other critical structures [

3,

4,

5]. Compared with conventional concrete, UHPC exhibits ultra-high compressive strength (commonly >120 MPa) and post-cracking strength (typically >5 MPa) and remarkable durability. These outstanding properties are primarily attributed to a low water-to-binder ratio (usually <0.2), a high fineness of supplementary cementitious materials, a discontinuous pore structure, and a high volume fraction of high-strength steel fibers [

6,

7,

8]. Numerous studies have shown that it is crucial to understand the mechanical responses of UHPC under different loading conditions for further investigation into its structural performance [

9], especially for UHPC with superior bending and tensile properties [

10,

11]. A comprehensive literature review reveals that extensive research has been conducted on the flexural behaviors of reinforced UHPC beams. This research has investigated a multitude of variables, including specimen size [

12,

13], compressive strength of UHPC [

14,

15,

16], reinforcement ratios of steel rebars [

6,

12,

17,

18,

19], and the shapes and volume fractions of steel fibers [

6,

15,

16,

20]. The findings of these studies have considerably advanced both the design optimization and structural application of UHPC beams [

6,

12,

15,

16,

17,

18,

19,

20,

21].

However, existing research regarding the flexural performance of reinforced UHPC elements is frequently based on a limited number of specimens considering a narrow range of parameter variables. Consequently, it is time-consuming and labor-intensive, and the conclusions obtained may be overestimated or insufficient to comprehensively describe the exact influence of these parameters. Furthermore, design codes and structural standards for UHPC beams remain relatively limited [

22,

23], despite some analytical methods and finite element models having been presented on the basis of some simplification assumptions with certain limitations. Therefore, additional experimental and analytical investigations are required to develop an efficient and energy-saving method to predict the flexural properties of UHPC-based structural elements [

13,

24,

25,

26].

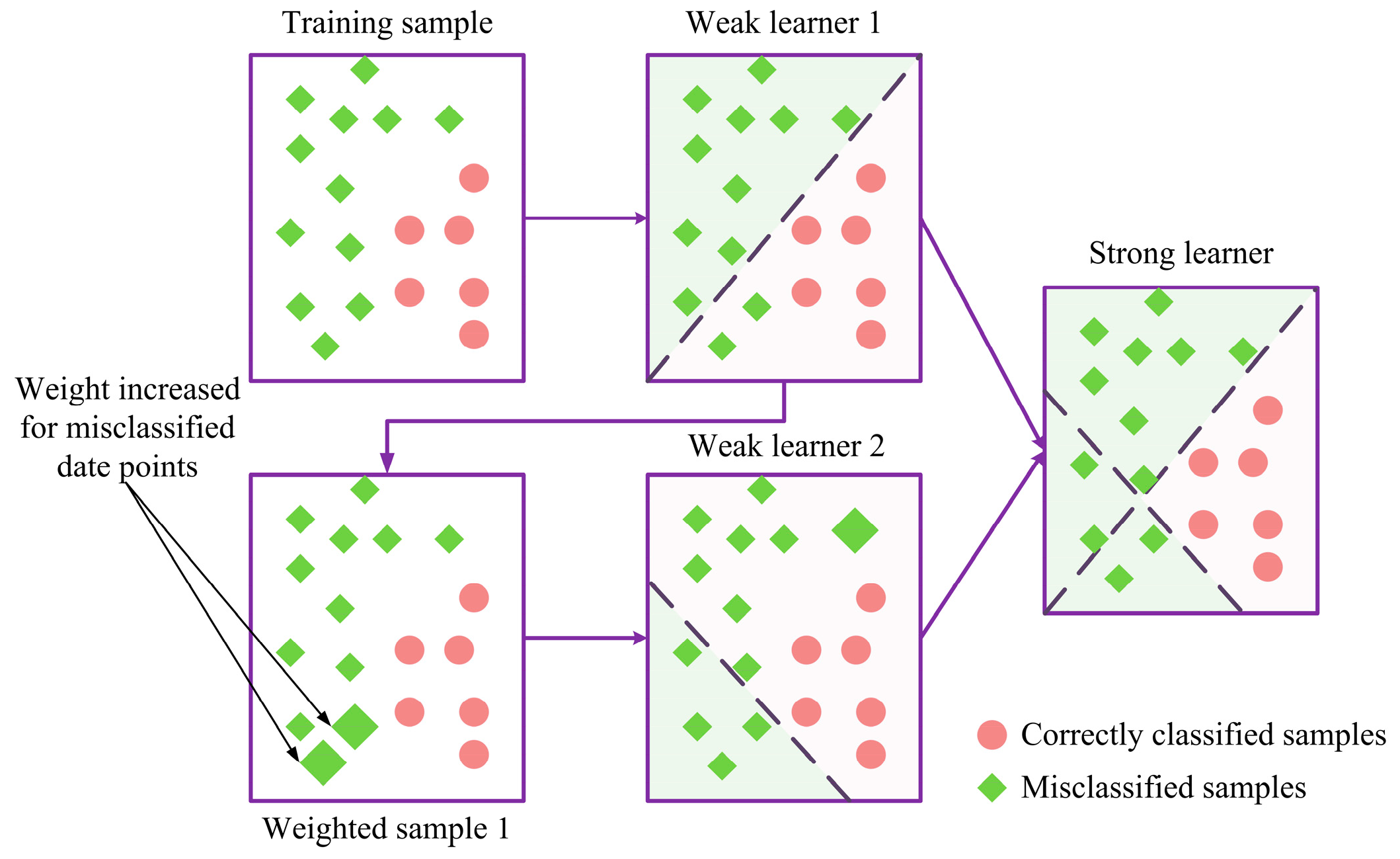

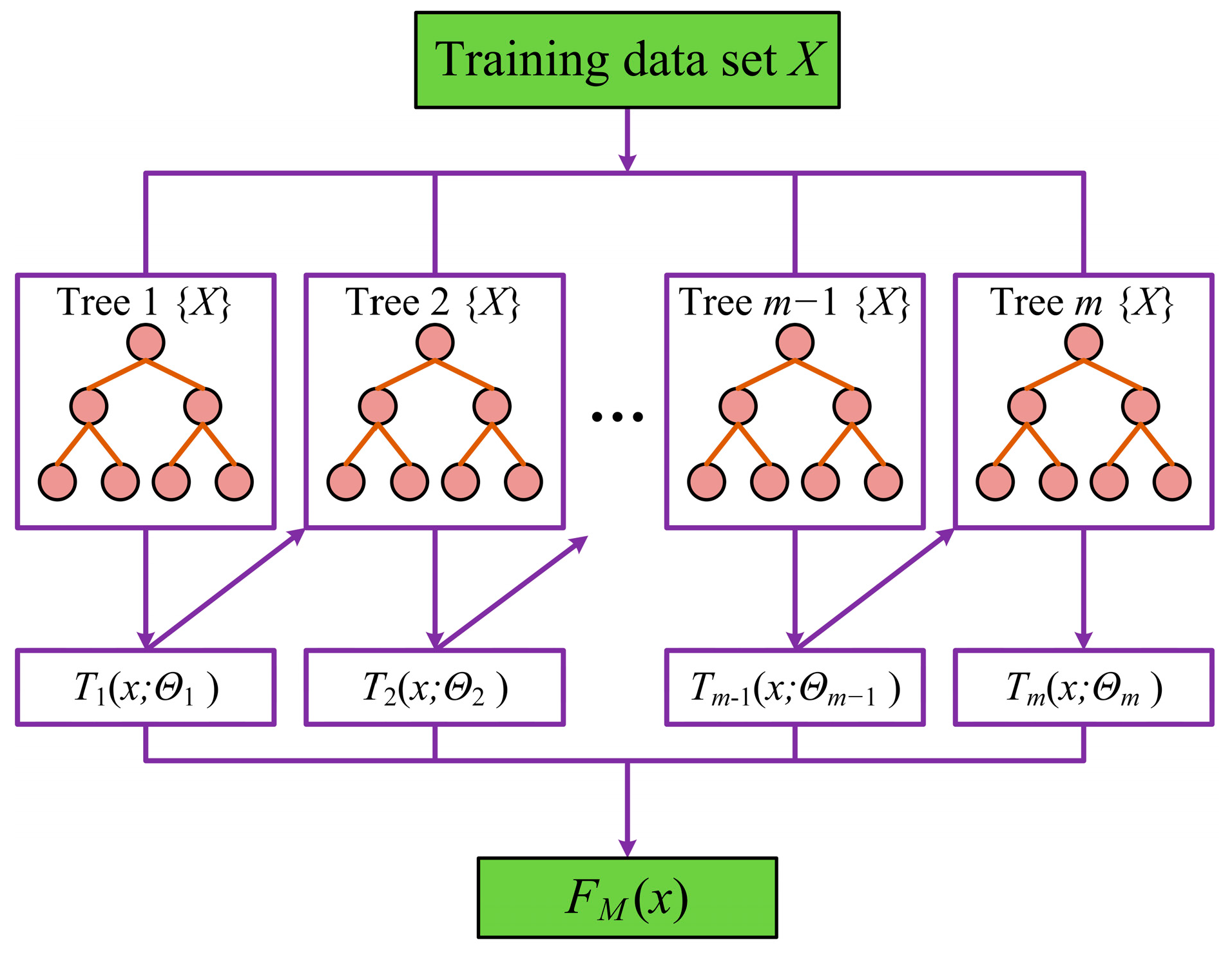

In recent years, machine learning (ML) has emerged as a powerful and versatile tool with a wide range of applications within the field of civil engineering, particularly in the context of predicting the performance of advanced building materials such as UHPC. The application of ML provides an effective and robust platform for predicting the structural response of UHPC-based elements, thereby significantly reducing the time and effort required for experimentation and modeling [

27]. Numerous studies have demonstrated that ML methods have been employed to predict basic properties of UHPC such as compressive strength, flexural strength, workability, and shrinkage performance, as well as to forecast the interface bonding strength and thus develop interpretable models that optimize UHPC mix designs [

28,

29,

30,

31]. Moreover, ML techniques have been utilized to determine the structural performance of reinforced concrete or UHPC beams [

30,

31,

32,

33]. For instance, a gradient boosting regression tree (GBRT) was used by Fu and Feng [

29] to forecast the residual shear strength of corroded reinforced concrete beams at different service periods. Feng et al. [

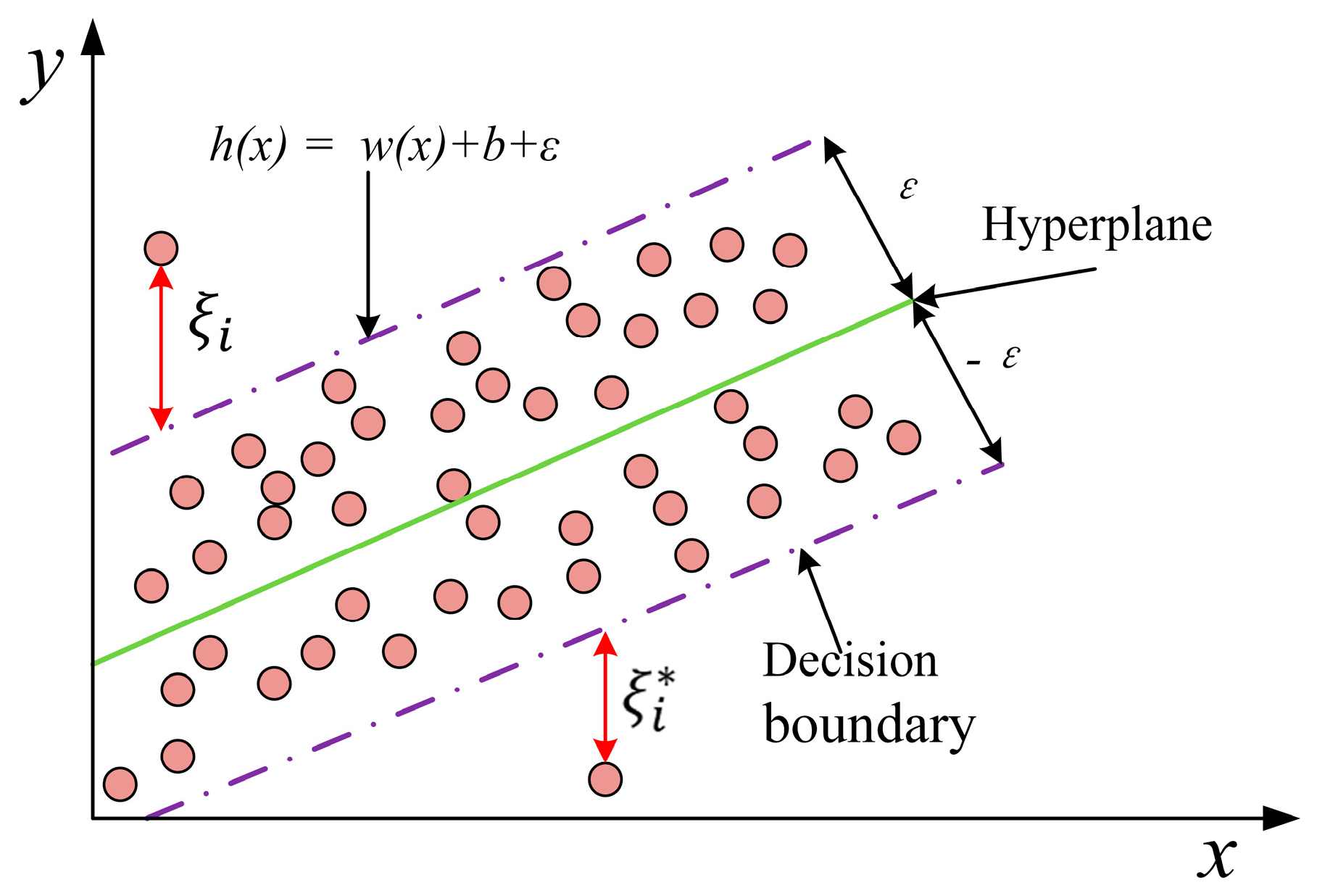

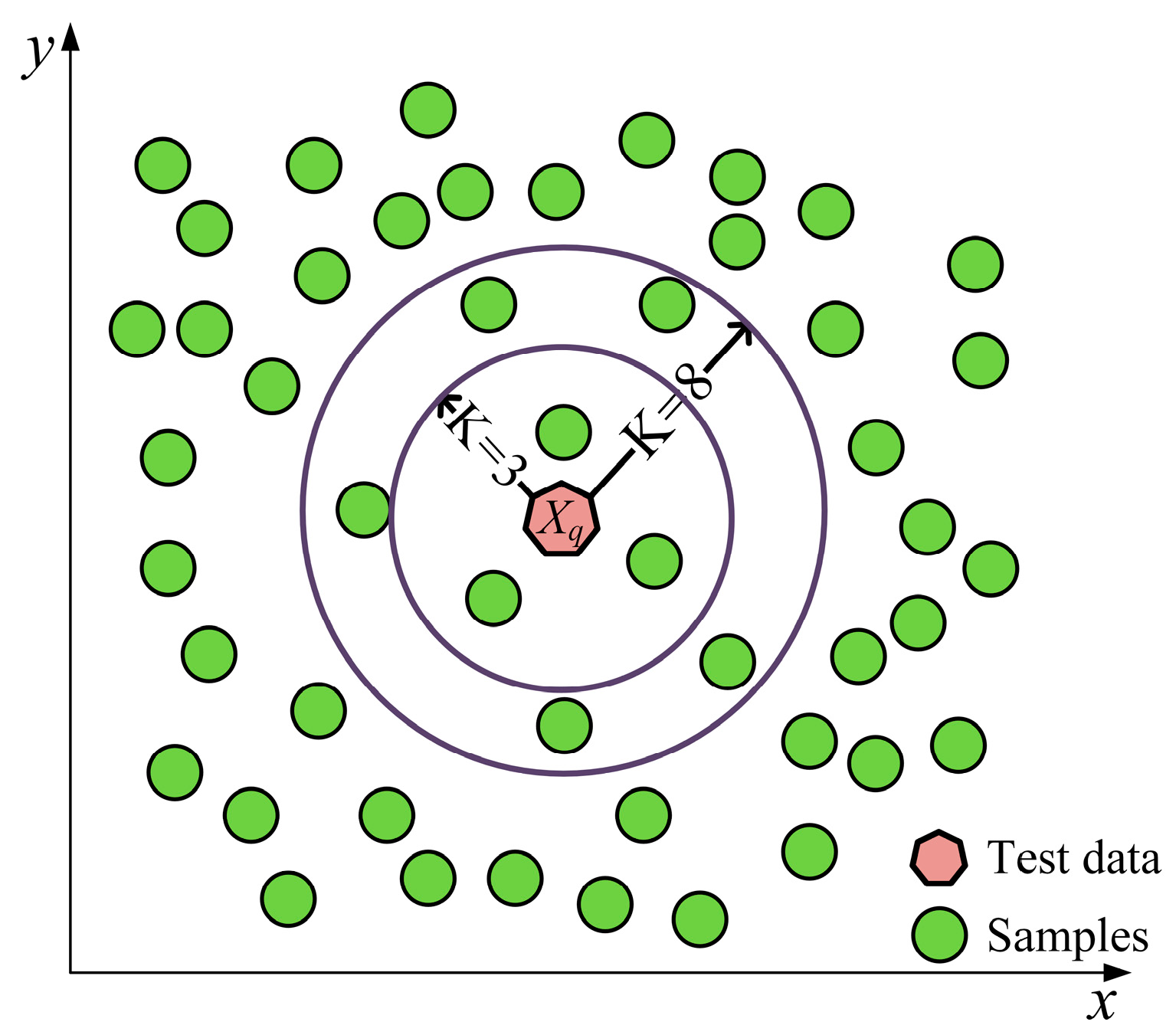

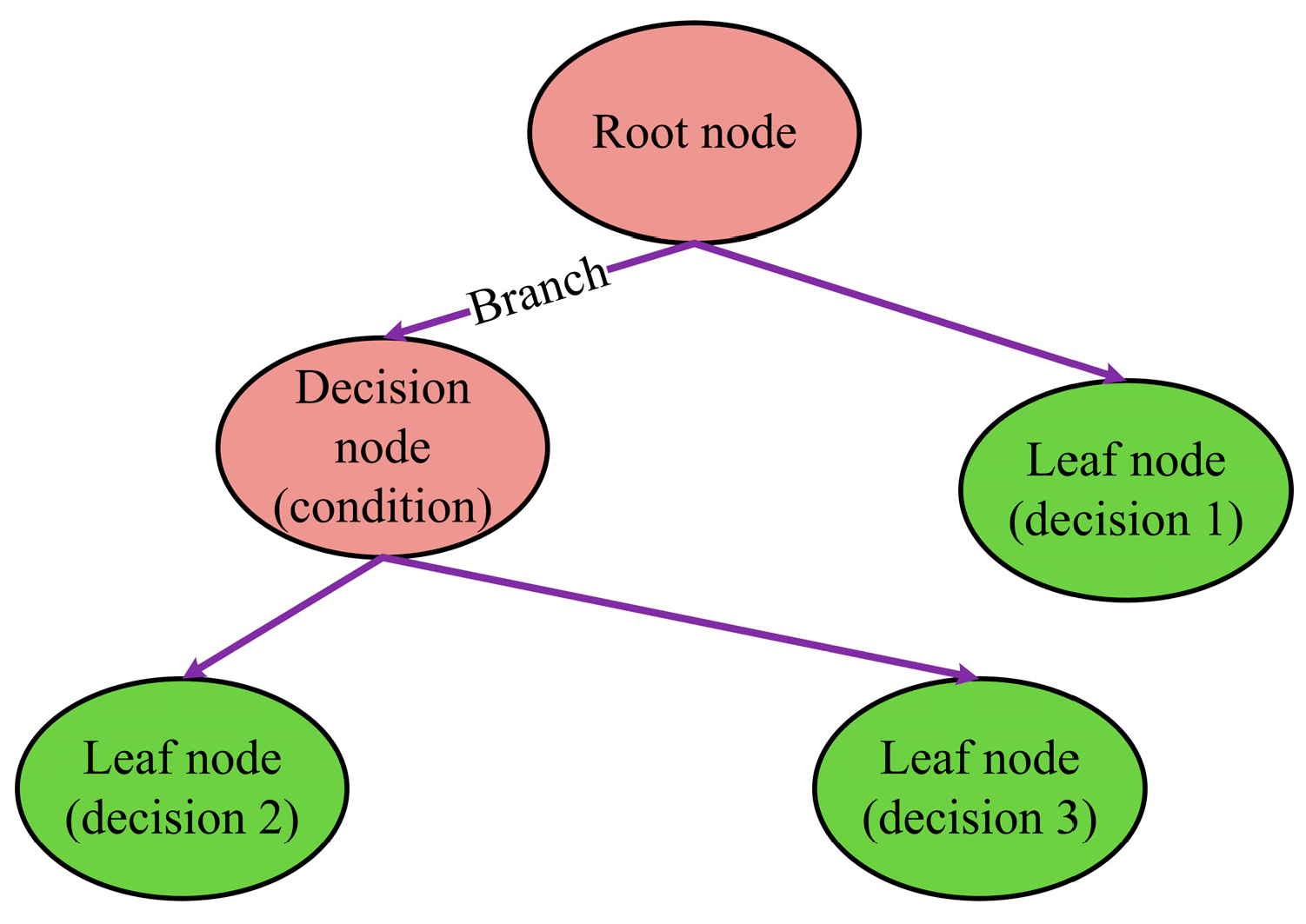

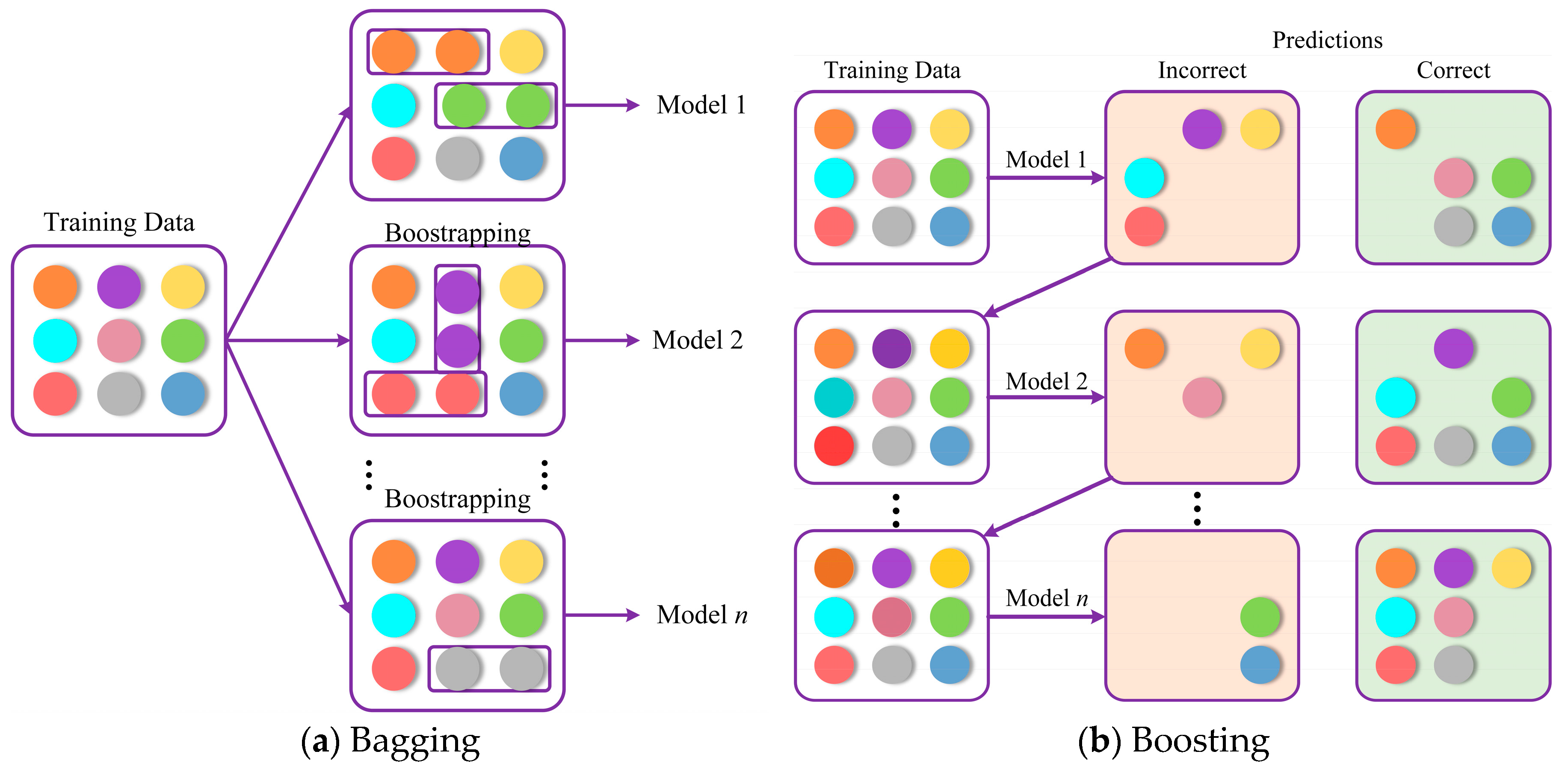

32] found that the ensemble ML models outperformed traditional mechanics-based models in terms of improved prediction accuracy and reduced bias. Similarly, a variety of ML algorithms, including support vector machines (SVMs), artificial neural networks (ANN), and ensemble learning (EL) methods, have also been used to identify failure modes and predict the shear capacity of UHPC beams under combined bending and shear forces, achieving a high prediction accuracy [

31,

32,

33].

Despite the application of ML methods in the prediction of the shear performance of UHPC beams, there is a growing interest in employing ML technologies to accurately and efficiently predict the flexural behavior of reinforced UHPC beams. However, this remains an emerging area of research, with few published studies exploring its application. Solhmirzaei et al. [

33] used support vector regression (SVR) and genetic programming to predict the flexural capacity of UHPC beams with varying cross-sectional dimensions and material properties. Ergen and Katlav [

34] explored the potential of deep learning (DL) models for predicting the flexural capacity of UHPC beams with and without steel fibers. Nevertheless, the effectiveness of ML models is largely contingent upon the quality of the database employed, which is frequently challenged by the selection of input variables. It is, therefore, important to expand and optimize the database of reinforced UHPC beam specimens. The employment of excessive input parameters is impractical for real-world design applications, while the inclusion of interrelated input parameters unnecessarily inflates the input features without adding a unique or distinct value to the ML model. Further, the versatility of ML algorithms has been shown to result in notable discrepancies in both the accuracy and efficiency of the performance predictions of reinforced UHPC specimens [

24,

28,

30,

31,

35]. A comprehensive assessment and comparison of the accuracy and efficiency of various ML models for predicting the flexural ultimate capacity of reinforced UHPC beams is a crucial gap in the current research. Moreover, in the context of machine learning, the division of the original database into training and testing sets represents a fundamental stage in the data processing. The extent to which the training set is divided affects the performance of the ML model in terms of both the accuracy of training and its capacity to generalize to new data. The optimal division ratio of training set to testing set depends on the subset size and characteristics of the database. It is necessary to analyze the model performance on varying subsets of data to ensure an efficient evaluation of both the model and the data quality. In addition to statistical evaluations of ML techniques, an adequate discussion is required regarding the physical and structural principles governing reinforced UHPC beams. For practical engineering applications, the comparison of EL models with physical principles alongside statistical model evaluations is essential. Previous research has shown that the categorical gradient boosting (CatBoost) model has excellent predictive stability and generalization ability [

36]. To evaluate the accuracy and reliability of EL methods, the CatBoost method is exemplified and compared with existing empirical methods and design standards [

25,

26,

37,

38,

39]. Additionally, it could be reasonably argued that the differences between various ML algorithms can significantly affect the reliability of parameter analysis in model interpretation. Therefore, it would appear prudent to undertake further research into a comparison of different ML models. SHAP (Shapley additive explanations) offers a promising method to clarify the contributions of features to predictions and has been widely used for model interpretation [

22,

30,

36,

40]. It can be argued that the discrepancies among various ML algorithms may have a substantial impact on the reliability of parameter analysis in model interpretation. In the context of predicting the flexural ultimate capacity of reinforced UHPC beams, the application of SHAP analysis to different ML models is still an unexplored research gap. Consequently, it is advisable to undertake further research to compare different ML models. An in-depth analysis using the SHAP method should be carried out by taking account into the impact of key design parameters on performance prediction to provide valuable insights for structural design purposes. Therefore, the prediction of flexural ultimate capacity for reinforced UHPC beams using ensemble learning and SHAP methods is promising.

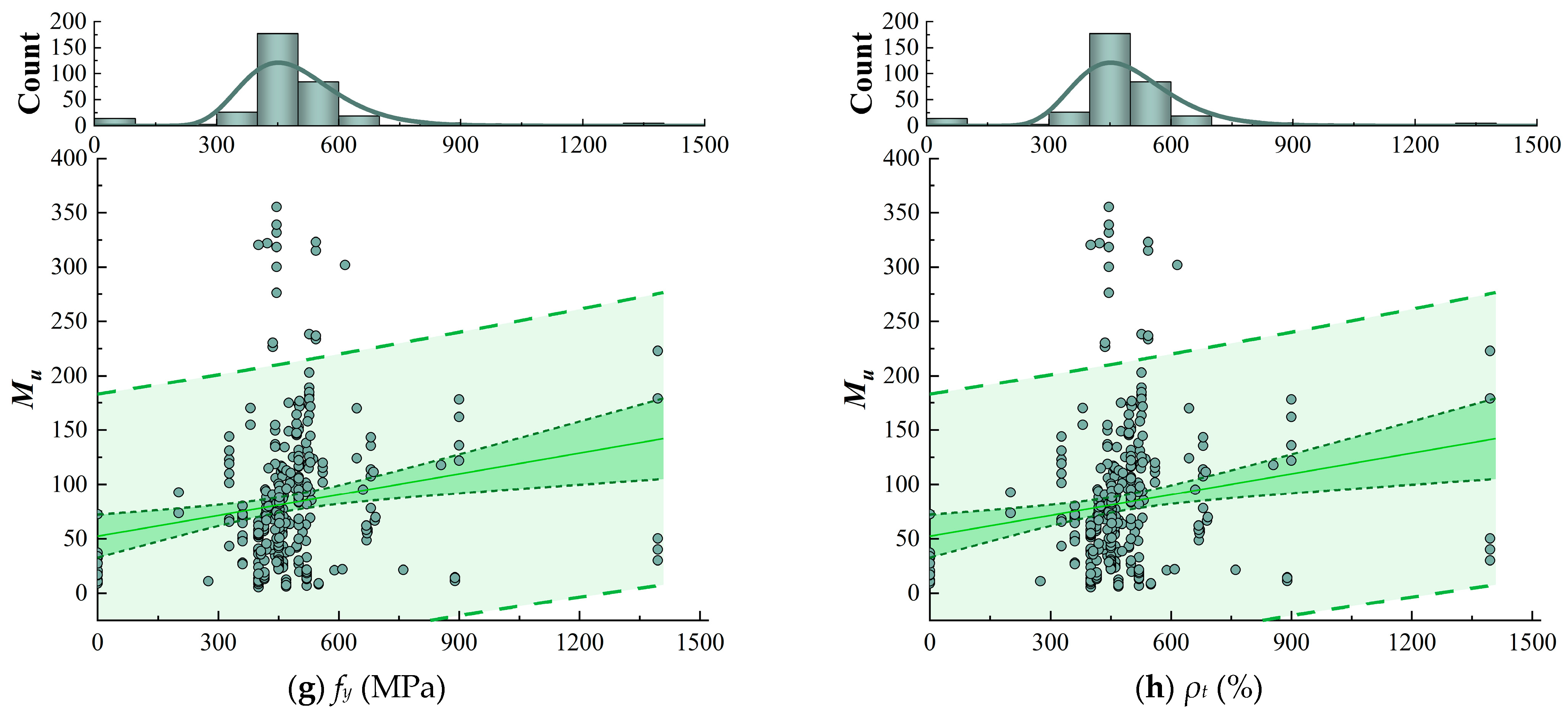

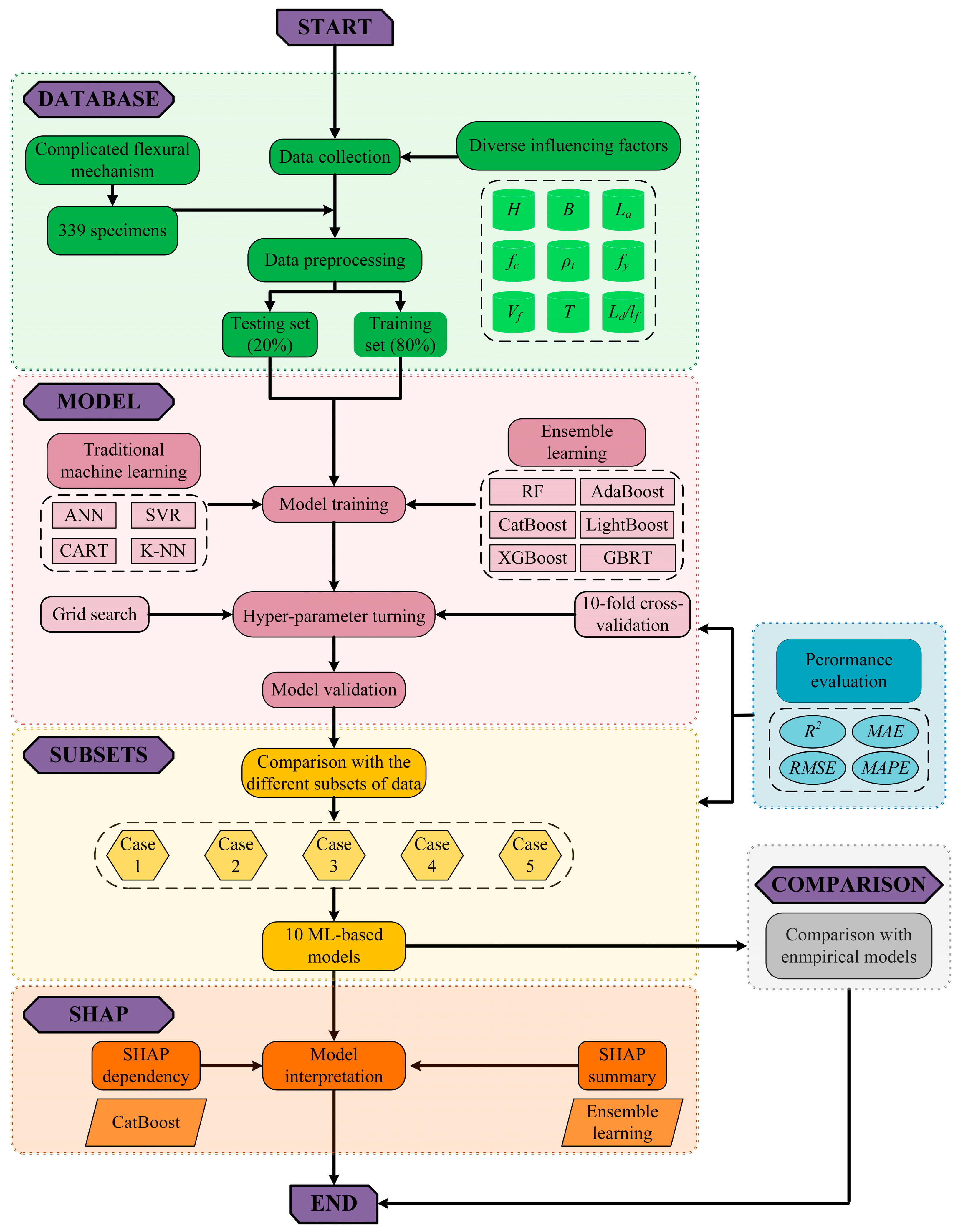

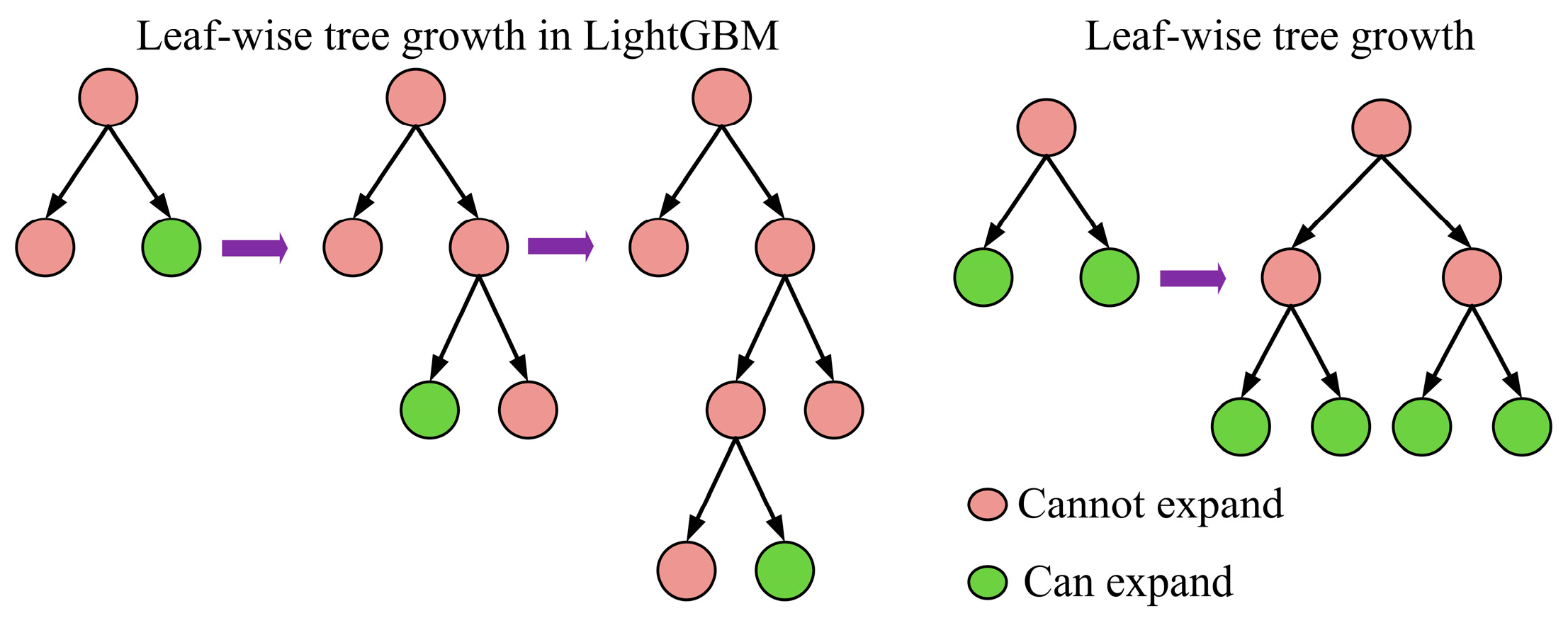

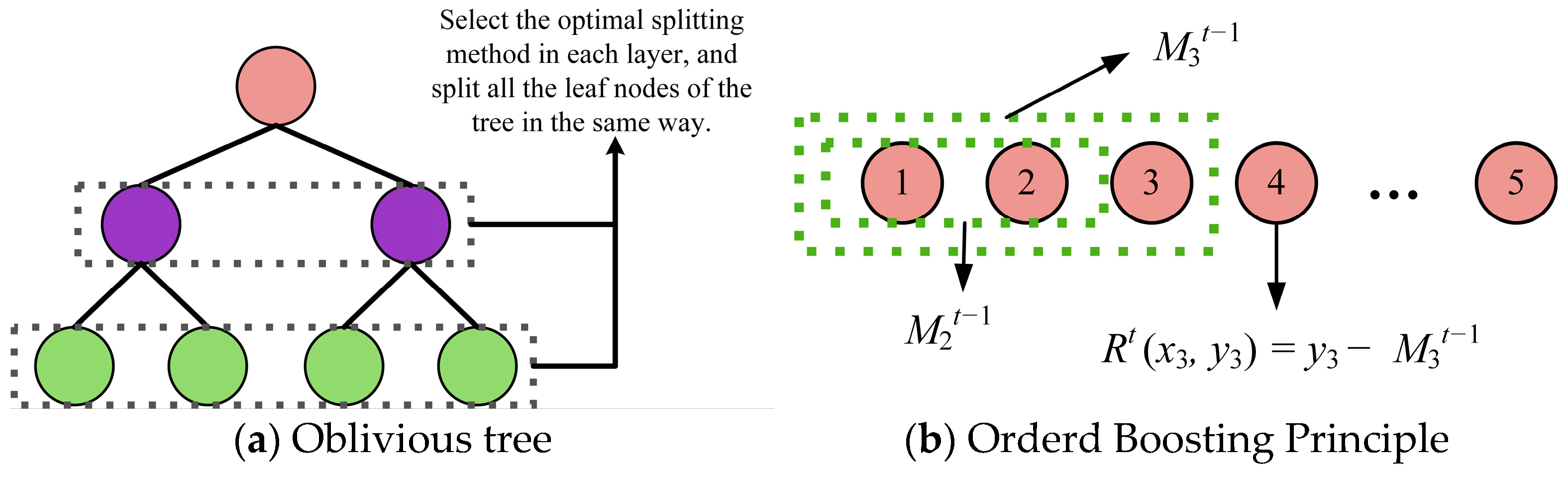

The objective of this study is to address the aforementioned limitations by expanding the available database and optimizing ML algorithms, thereby achieving greater accuracy and efficiency in predicting the flexural performance of reinforced UHPC beams and providing more reliable and efficient design recommendations for future applications. To be more specific, a comprehensive database containing data from 339 tests of reinforced UHPC beams with various design parameters is initially established. To balance model accuracy and practical implementation, a reliable and efficient approach involving nine input parameters is considered in this study. Furthermore, several ML algorithms are presented to develop optimized models for precisely predicting the flexural ultimate capacity (Mu) of reinforced UHPC specimens derived from the established database. Traditional models, including ANN, SVR, and k-nearest neighbors (K-NN), are first applied to make predictions. Additionally, ensemble learning models, such as classification and regression trees (CART), random forest (RF), adaptive boosting (AdaBoost), and gradient boosting regression trees (GBRT), are utilized for further optimization. To enhance prediction accuracy, advanced models like light gradient boosting machine (LightGBM), CatBoost, and extreme gradient boosting (XGBoost) are also employed. The performance of the ML models used is then evaluated using four statistical indicators to comprehensively assess and compare their prediction accuracies and capabilities for the flexural ultimate capacity of reinforced UHPC specimens. Subsequently, the sensitivity of ML models to varying data subsets is analyzed to ensure a highly efficient evaluation of the ML models used and the established database. Moreover, the CatBoost model is exemplified to compare the predictions with several existing empirical formulas alongside statistical evaluations for practical engineering applications. Finally, the SHAP method is employed to interpret multiple EL models, thereby substantiating their reliability and determining the extent of influence exerted by each feature on the prediction results of the flexural capacity of reinforced UHPC beams.

2. Acquisition of the Database

The establishment of a database represents a fundamental stage in the initial process of machine learning, which involves the collection, organization, and cleansing of data for model training. In the present study, by conducting a comprehensive review of the published literature, an ultimate capacity database of reinforced UHPC specimens under bending loads is developed and summarized by integrating test results from diverse experimental studies (see

Table A1). The database comprises the results of measurements of 339 UHPC-related specimens with varying design parameters sourced from 56 different experimental investigations [

12,

13,

18,

19,

20,

21,

41,

42,

43,

44,

45,

46]. As previously mentioned, flexural behaviors of reinforced UHPC beams are highly dependent on the specimen geometry, the material properties of UHPC, the shape and volume fraction of steel fibers, and the amount and strength of steel rebars. In the database shown in

Table A1, the height (

H) and width (

B) of a given cross-section and the length of the shear span (

La) are considered to represent the geometrical size of the specimen. Additionally, the cylinder compressive strength of UHPC material (

fc) and mechanic characteristics of blended fibers including the shape, length (

Lf), diameter (

df), and volume fraction (

Vf) are included. Furthermore, the yielding strength (

fy) and reinforcement ratio (

ρt) of steel rebars are also presented. Thus a total of nine performance-sensitive parameters are incorporated as input variables into the established database, while the ultimate capacity of bending moment (

Mu) is selected as the output variable.

Table 1 provides detailed information on the statistical characteristic values of the parameters involved.

The presence of longitudinal tensile reinforcement in plain concrete beams has been proven to enhance the load-carrying capacity and stiffness of the structure. Accordingly, more than 95 percent of the flexural specimens in the database are equipped with longitudinal tensile reinforcement. Furthermore, the incorporation of steel fibers into the UHPC matrix also improves its tensile strength and toughness. Consequently, 94.6% of the UHPC specimens selected in the database are blended with steel fibers, and the effects of various fiber characteristic parameters on their structural performance are explored. The inclusion of versatile steel fibers is particularly advantageous for enhancing the flexural capacity of UHPC structures. Specifically, the distribution percent of steel fiber shape of UHPC specimens included in the database is as follows: 79.5% straight fibers, 2.5% hooked-end fibers, 2.8% corrugated fibers, and 9.7% hybrid fibers. It should be noted that “T” represents steel fibers with different shapes. T is encoded as numbers to make it easier for models to process the data. Using numbers instead of words helps with calculations and analysis. Each number represents a different shape of fiber: 1 denotes straight fibers, 2 denotes corrugated fibers, 3 denotes hooked-end fibers, 4 denotes hybrid fibers, and 0 denotes specimens without steel fibers. In addition, 5.4% of the specimens in the database lack steel fibers, thus providing a basis for comparison in regard to the sensitivity of steel fibers. Overall, the database comprises a substantial number of experimental parameters, which may enhance the adaptability of machine learning models for training and evaluation.

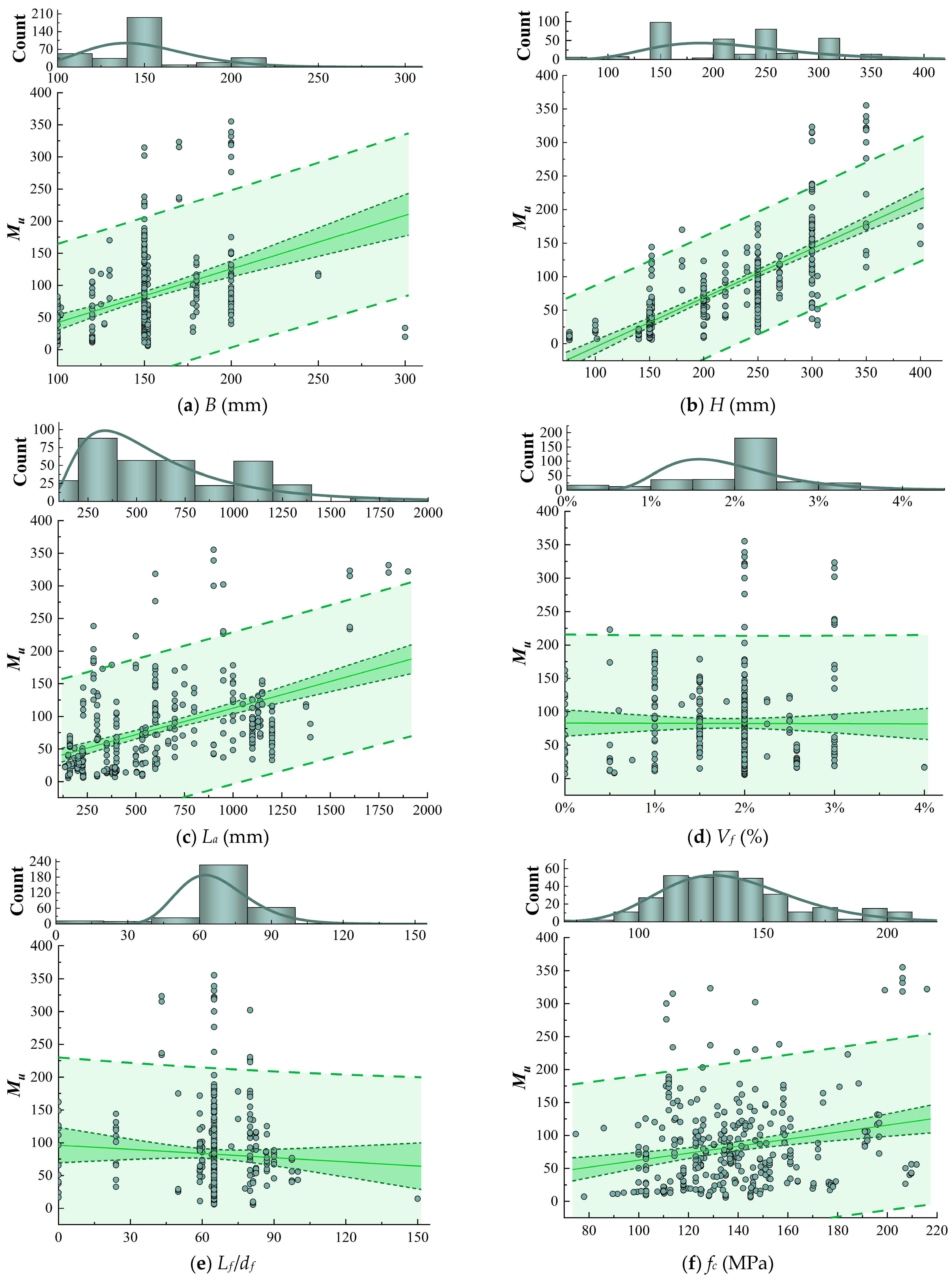

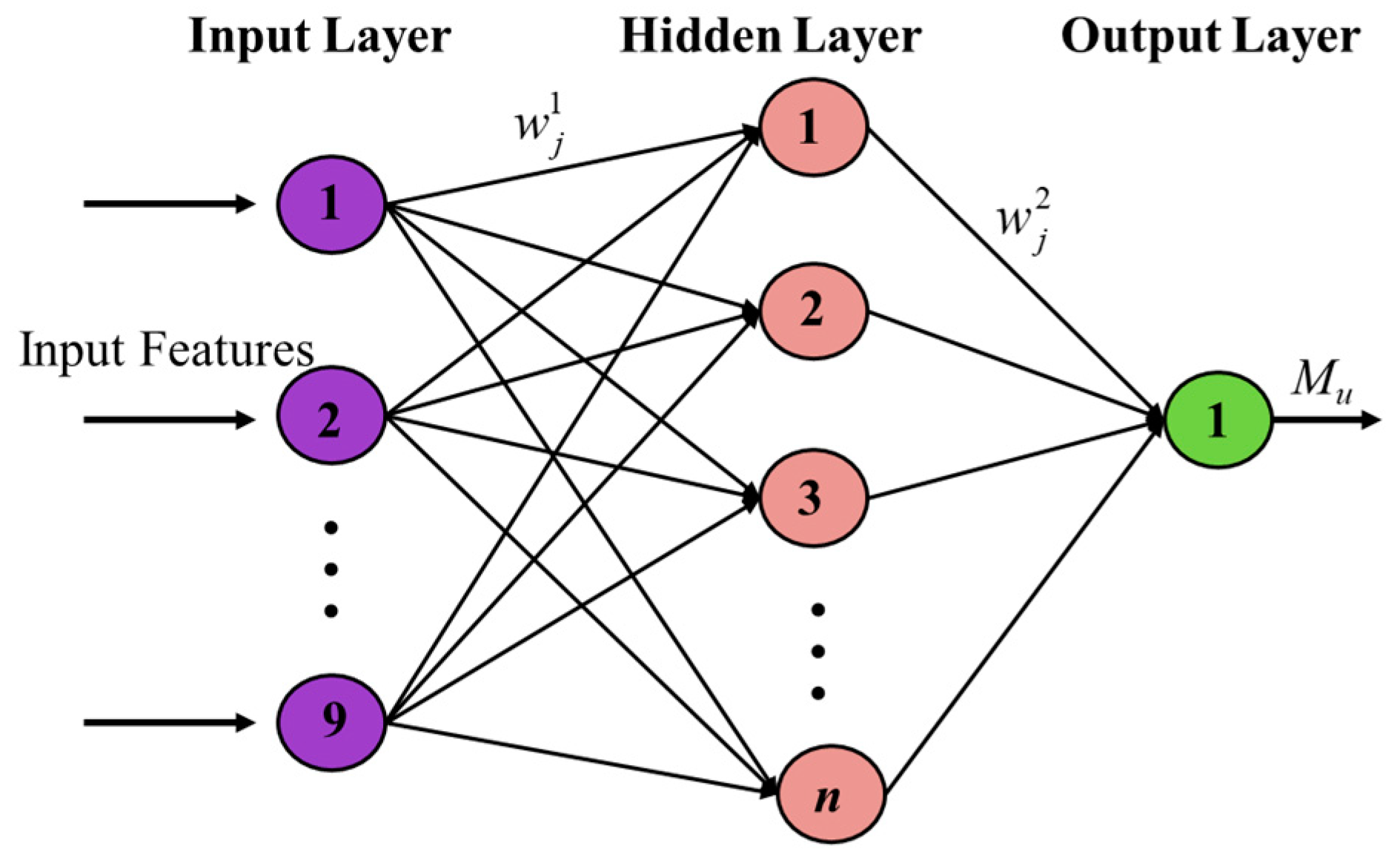

Figure 1 illustrates the frequency histograms of individual parameters, as well as the dependence between different input variables and the target output variable of the ultimate bending moment

Mu. Note that the dark green shadings in the

Figure 1 represent the data ranges with 95% confidence intervals, while the lighter green areas signify the data ranges with 95% prediction accuracies. It is evident that the estimation of the flexural capacity

Mu for UHPC specimens is a highly intricate and challenging process. As shown in

Figure 1, an increase in the value of the flexural capacity

Mu is observed with growing values of

H,

B,

La,

fc,

fy, and

ρt. This trend is consistent with the fundamental principles of structural design and material properties. The regression curves for the parameters

H and

B in

Figure 1 display larger slopes, indicating that these parameters exert a more pronounced influence on the flexural load-carrying capacity

Mu. In contrast, the linear slopes of the regression curves for the volume fraction

Vf and aspect ratio

Lf/

df of steel fibers are close to zero, making it challenging to assess their impact on

Mu. The relatively similar shapes of steel fibers employed in the bending tests may be responsible for the phenomenon, and additional research is required to confirm this hypothesis.

The application of simple linear regression is inadequate for clarifying the inherently complex relationship between the ultimate bending moment Mu and an individual input variable. As a result, finite element analysis methods and nonlinear numerical modeling have emerged as significant developments in prediction tools for structural evaluation, providing optimization solutions to an ever-increasing number of complicated structures. The purpose of this study is to estimate the flexural load-carrying capacity Mu of reinforced UHPC specimens in the aforementioned database using several ML-based algorithms, including both traditional ML models and EL models. These methods are capable of accommodating a range of complexities, which are user-friendly to employ, and thus facilitate highly nonlinear modeling. This methodology of ML will enable the design of UHPC-based structures with reduced environmental impact and enhanced sustainability, as well as an improved accuracy and efficiency of performance prediction.

4. Results and Discussions

4.1. Model Performance: A Comparison Across Diverse ML Algorithms

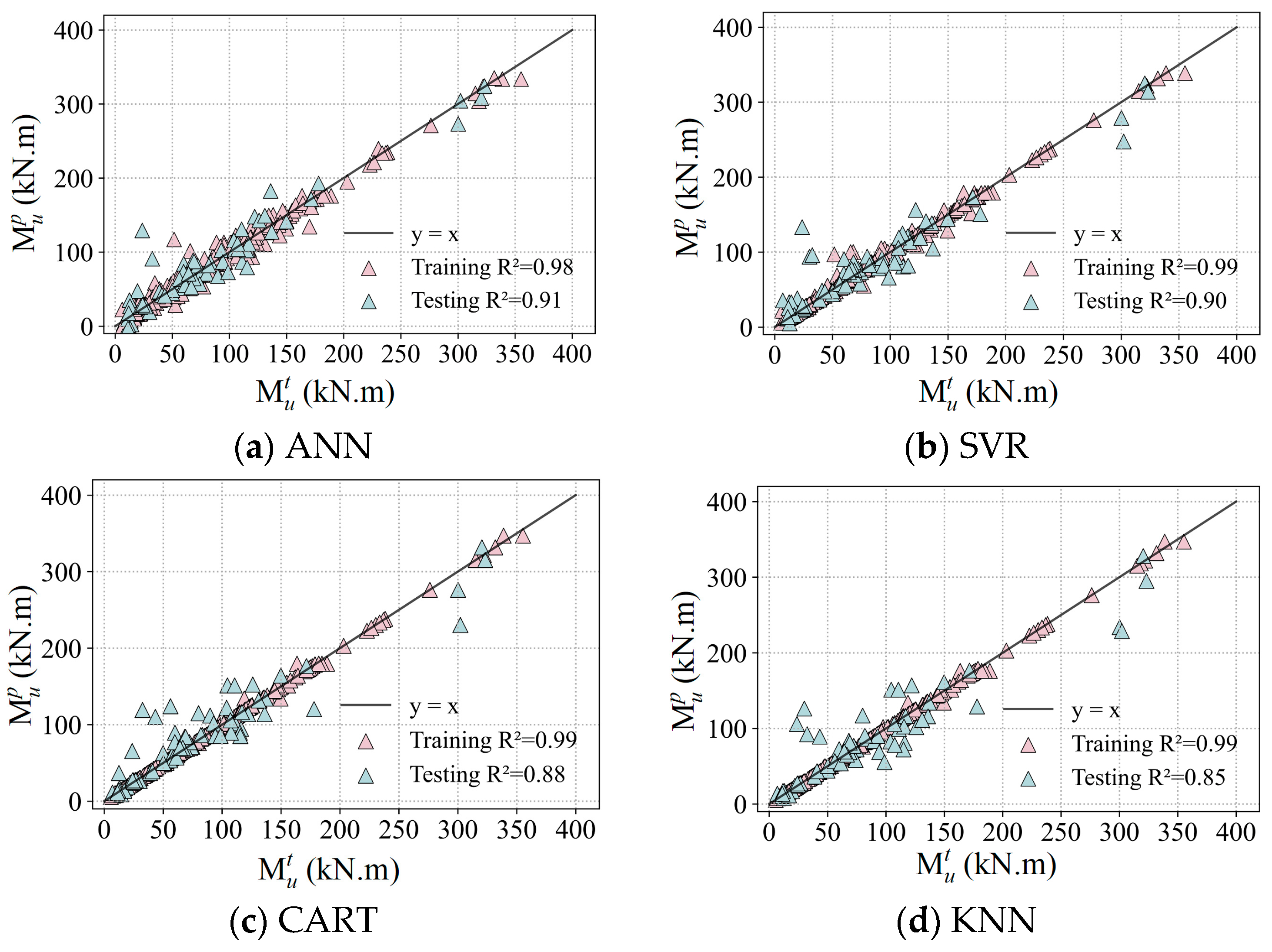

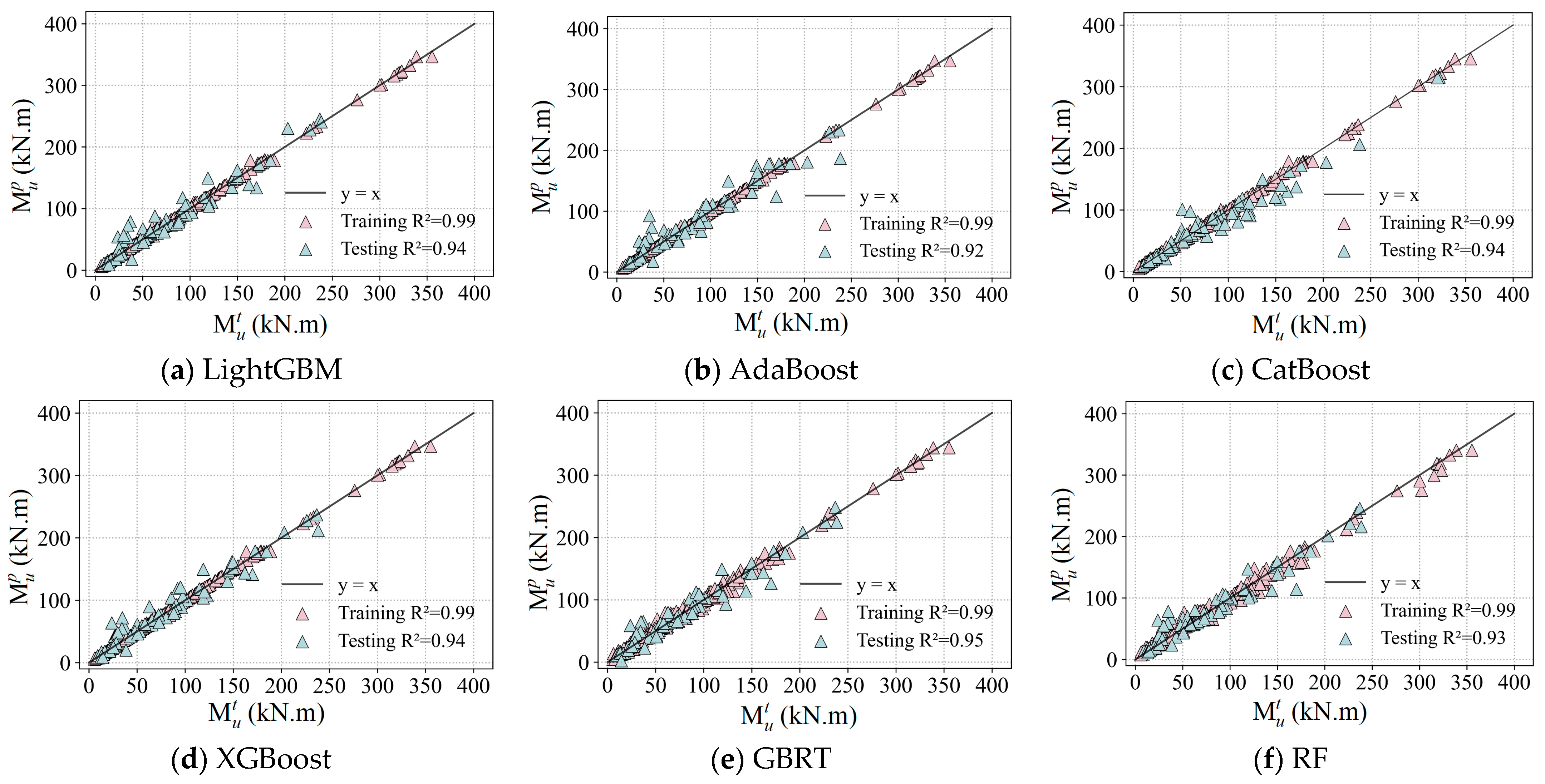

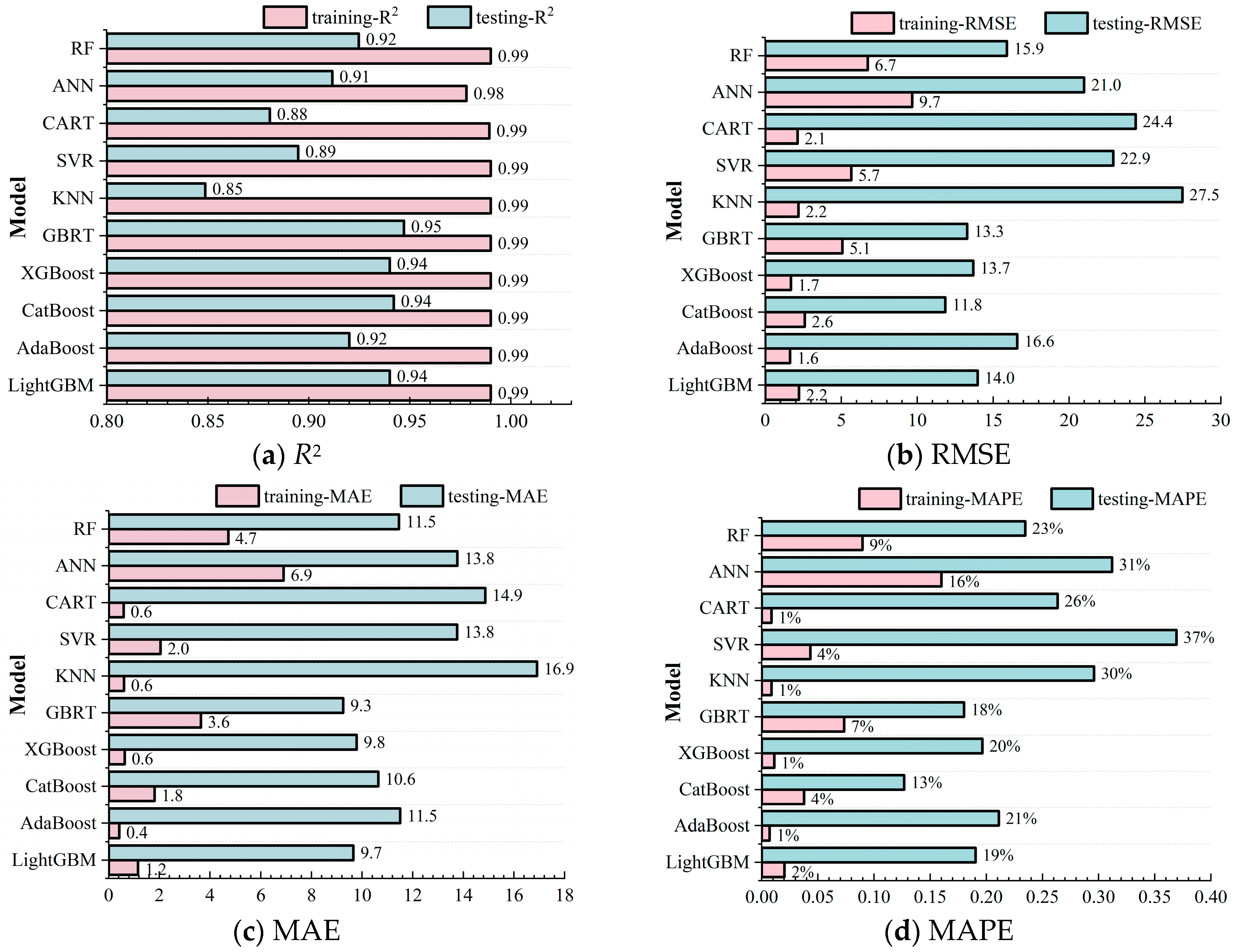

Ten different algorithms are employed to develop machine learning models based on the established database to predict the ultimate bending moment

Mu of reinforced UHPC beams. The dataset was divided into 80% for the training set and 20% for the testing set.

Figure 13 and

Figure 14 compare the predicted bending ultimate moments

Mup from traditional ML models and ensemble learning models, respectively, with the corresponding tested results

Mut from the established database. The relationships between the predicted ultimate moments and the measured values follow a linear fit with a slope of 1.0. Detailed results of this comprehensive evaluation for the bending moment capacity of reinforced UHPC specimens using various ML-based models are presented in

Figure 15.

On the training set, the coefficient of determination, R2, for all ML models except ANN is greater than 0.99, highlighting their excellent fitting abilities. The ANN model still shows a commendable performance, although it has a slightly lower R2 value of 0.98. In terms of RMSE, the KNN, AdaBoost, CatBoost, and XGBoost models have relatively lower values compared to other ML models, indicating the minimized discrepancy between their predicted values and tested results, with an average error margin of approximately 2.0. This underlines their exceptional model accuracy in predicting the flexural performance of reinforced UHPC beams. In contrast, higher RMSE values of 9.7 and 6.7 were recorded for the ANN and GBRT models, respectively. Despite these higher values, the model accuracy is still within an acceptable range.

Further analysis of the MAE reveals that the KNN, AdaBoost, CatBoost, LightGBM, and XGBoost models all maintain values below 2, indicating a negligible average deviation between the predicted and measured values, and thus a high degree of prediction accuracy. Moreover, the evaluation of MAPE clearly shows that the KNN, CART, AdaBoost, and XGBoost models retain values below 1%, confirming their accurate prediction capabilities. Although the ANN model has a MAPE of 16%, indicating a reduced predictive accuracy, possibly affected by its network structure and hyperparameter settings, it nevertheless meets the fundamental predictive benchmarks.

Considering the testing set, the coefficients of determination, R2, for the LightGBM, CatBoost, XGBoost, and GBRT models are all larger than 0.94, demonstrating their exceptional prediction potentials. This outstanding performance is primarily due to the inherent advantages of ensemble learning, which includes the reduction in the bias and variance in predictions by combining multiple models, thereby enhancing their ability to generalize to new datasets. Conversely, the KNN model gives the lowest R2 value of 0.85 on the testing set. Its performance limitations may be related to its decision mechanism, which relies on nearest-neighbor voting or averaging. This may fail in the presence of high-dimensional data or uneven data distributions, where the concept of “nearest neighbor” may be somewhat indeterminate.

The KNN, ANN, SVR, CART, and AdaBoost models present relatively high values when analyzing the three evaluation indicators of RMSE, MAE, and MAPE, indicating a decrease in prediction accuracy on the testing set. The increased sensitivity of these models to the distribution of data features and the presence of noise may be responsible for this trend. In stark contrast, the GBRT and CatBoost models outperform on all three of these indicators, further underscoring the superior effectiveness of ensemble learning models in improving the accuracy of predictions. Specifically, GBRT and CatBoost derive their superiority from the construction of multiple decision trees and the synthesis of the prediction insights of each tree to reduce potential errors inherent in singular models.

To summarize, the excellent performance of ensemble learning models such as LightGBM, CatBoost, XGBoost, and GBRT on the testing set is fundamentally related to the strategy of ensemble learning with model aggregation. Those approaches effectively reduce the bias and variance while improving the generalization ability of the models. Conversely, while the KNN model presents admirable results on the training set, its modest performance on the testing set highlights the importance of considering data characteristics and the compatibility of the logic of model decisions with the given problem during model selection. Overall, the ten ML-based models evaluated are capable of accurately predicting the ultimate bending moment values, Mu, of reinforced UHPC beams, confirming the profound potential of machine learning models to address challenging structural demands.

4.2. Data Subset Analysis for Model Performance and Stability

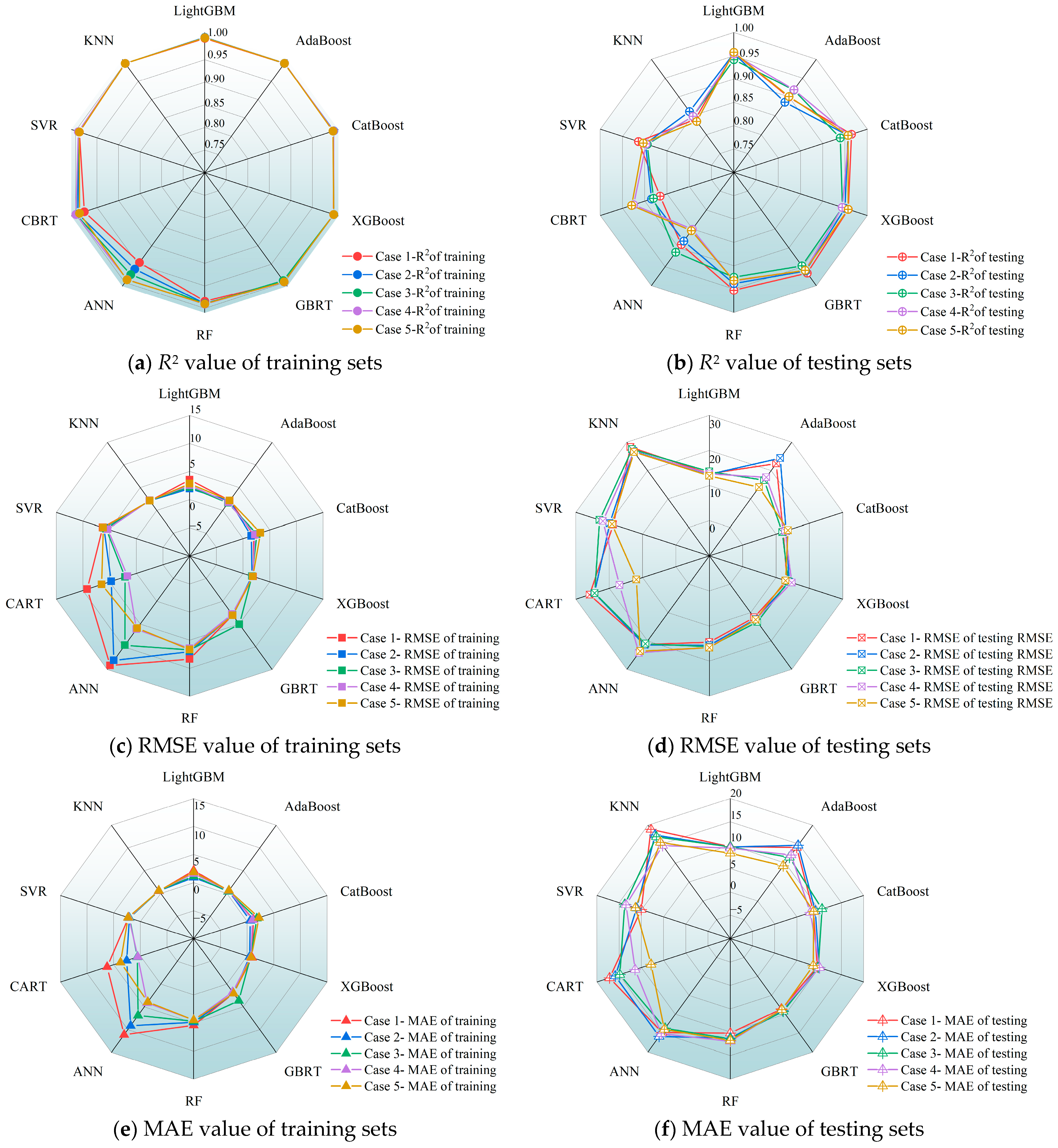

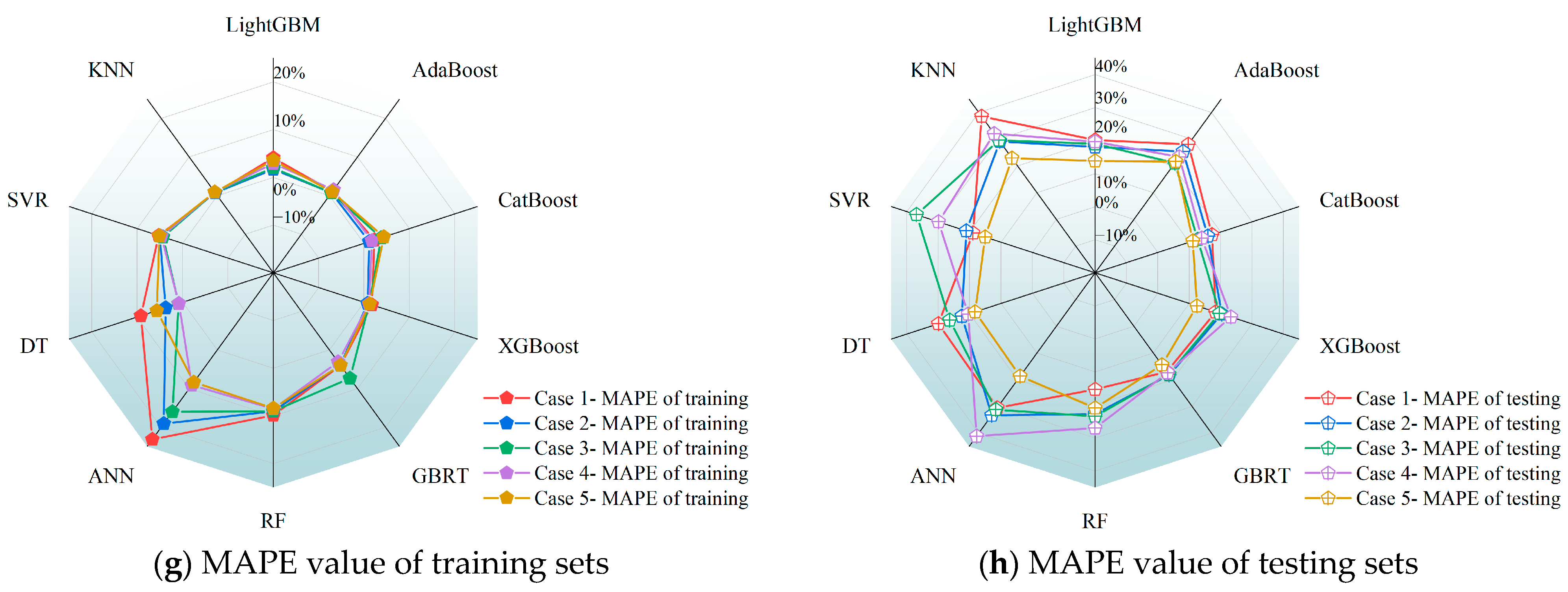

To systematically evaluate the qualities of the ML-based models and database employed, as well as to explore model stability, a methodical approach is taken by dividing the database previously established into subsets of varying sizes. This strategy makes it possible to examine model performance across a spectrum of dataset sizes, thereby providing insightful perspectives on how model performance varies with different dataset sizes. Based on findings from previous research and empirical evidence, five different cases of data subsets, as shown in

Figure 16, have been identified for in-depth analysis.

Figure 17 presents a comparative analysis of model performances with various cases of data subsets. An examination of the coefficients of determination,

R2, for all models, reveals that, across different cases of data subsets, the

R2 values associated with the training set are predominantly greater than 0.98, while the

R2 values of the testing set are generally larger than 0.90. These results underscore the overall robust performance of ML models. Nevertheless, the ensemble learning models exhibit relatively superior performance compared to their traditional ML model counterparts. Specifically, the CatBoost model achieves the highest

R2 value of 0.97 on the testing set for Case 1 and reaches a maximum

R2 value of 0.96 for the testing set for Case 2. For the third to fifth cases of data subsets, the

R2 value of the testing sets peak at 0.94, 0.96, and 0.96 with the ensemble models of GBRT, CatBoost, and GBRT, respectively.

This analysis highlights the superior performances of ensemble learning models over traditional ML models in most cases and explains the variance in model effectiveness when dealing with data subsets of different divisions. The sustained high R2 values of ensemble learning models across a variety of data subset configurations can be attributed to their elaborate structures and algorithms, which are able to capture data correlations and patterns in a more effective way. As a result, the accuracy of predictions is improved. Furthermore, integrated models enhance the prediction accuracy by combining several weak learners or regressors. This strategy is especially beneficial when dealing with large and diverse data sets. Conversely, due to their relatively simple algorithmic structures, traditional ML models may be unable to fully represent the intricacies of data relationships, which impacts to some extent their overall performance.

To conduct a thorough evaluation of model performance, three statistical performance indicators of ML models, RMSE, MAE, and MAPE, are discussed here. When evaluating the training set, a majority of ML models show exceptional and consistent proficiency across these performance indicators. Nonetheless, the KNN models demonstrate suboptimal performance under various cases of data subsets, especially for Case 1. This can be attributed to the fact that the KNN models encounter a deficit in training sample size within the data subset division for Case 1. This leads to an overfitting of training data with details and noise, thereby decreasing their generalization abilities. Moreover, the MAE for most of the models is around 10, suggesting a mean absolute deviation of approximately 10 units between model predictions and measured results.

Having analyzed the model performance with statistical indicators, it becomes evident that CatBoost and GBRT models significantly outperform the traditional ML models. The KNN, AdaBoost, SVR, and ANN models display inferior performance in various data subset arrangements. For instance, in the Case 4 data subset, the ANN model registers a dramatically high MAPE of 41%, suggesting an insufficient prediction accuracy. This may be due to the model not being trained on a sufficiently diverse or large dataset, which may have resulted in inadequate generalization to unseen data. However, in the Case 5 data subset, the MAPE values decrease to approximately 18%, revealing a reduction in the average percentage deviation between the prediction values and measured results to about 18%. The significant variation in MAPE values highlights the pronounced differences in the adaptability of diverse models to specific data subsets. It therefore emphasizes the need to consider the sensitivity and adaptability of a model to varying data subsets during the model selection and optimization process.

An in-depth evaluation of the ensemble learning models reveals that the second case is found to be the most effective and efficient database division strategy across all of the data subset cases. To be more specific, 75% of the database is allocated to the training set, while the remaining 25% of the data serves as the testing set. In contrast, the optimal data subset configuration for traditional ML models is identified in Case 3, where the data distribution percentages of the training set and testing set are 80% and 20%, respectively. The findings underscore the considerable influence of data division ratios on model effectiveness. Further investigation reveals that among ensemble learning algorithms, the CatBoost and GBRT models present a remarkable consistency with a varying data subset configuration. In terms of traditional ML models, the CART model stands out for its stability and robustness. Notably, the CatBoost model is distinguished by its superior division strategy of data subsets, considering both model efficiency and stability of data acquisition.

The insights gained from this analysis not only reveal the subtle differences in how each model will perform under different data subset distributions but also provide critical guidance for future model selection and optimization efforts. The foregoing analysis highlights the critical importance of proper data acquisition in improving model performance. Specifically, in the context of ensemble learning models, the selection of an appropriate data subset configuration is of paramount importance for the realization of peak performance. Furthermore, the model stability plays a crucial role in determining how well it performs. Therefore, the effects of data configuration and model stability should be properly considered during the model selection and optimization phases to ensure optimal model functionality in real-world applications.

4.3. Comparison with Existing Empirical Equations

Given the increasing utilization of UHPC-based materials in civil engineering, a multitude of standards and guidelines have emerged worldwide to facilitate the design of UHPC structures [

25,

26,

37,

38]. The prevailing standards in the field, the French standard NF P 18-710 [

37] and Swiss recommendation SIA 2052 [

38], provide guidelines for the design of UHPC-based structures. However, these standards face limitations in terms of their practical application and accuracy precision. The French standard emphasizes strain-based failure criteria, requiring iterative calculations without explicitly defined formulas, whereas the Swiss recommendation simplifies compressive stress distribution and applies a reduction factor to tensile contributions. The existing empirical formulas are presented in

Table 5, with symbol definitions available in the referenced literature. Similarly, the US design guides of ACI 544.4R-18 [

26] and FHWA HIF-13-032 [

25], which are based on the equilibrium and strain compatibility, fail to fully capture the nonlinear behavior of UHPC elements. The calculation model proposed by Li et al. [

39] is derived from experiments and incorporates UHPC’s tensile contribution with an assumption of uniform stress distribution, thereby reducing its applicability under varying reinforcement ratios. For reference, the key formulas for these empirical methods are presented in

Table 5. Despite the prevalence of existing empirical or code-based methods, numerous studies reveal that the empirical formulas provided for estimating the flexural capacity of reinforced UHPC beams frequently exhibit excessive conservatism, resulting in significant discrepancies between predicted values and experimental observations [

12,

13]. This study aims to demonstrate the superior predictability of the CatBoost model by comparing its model performance with several widely recognized models based on empirical formulas.

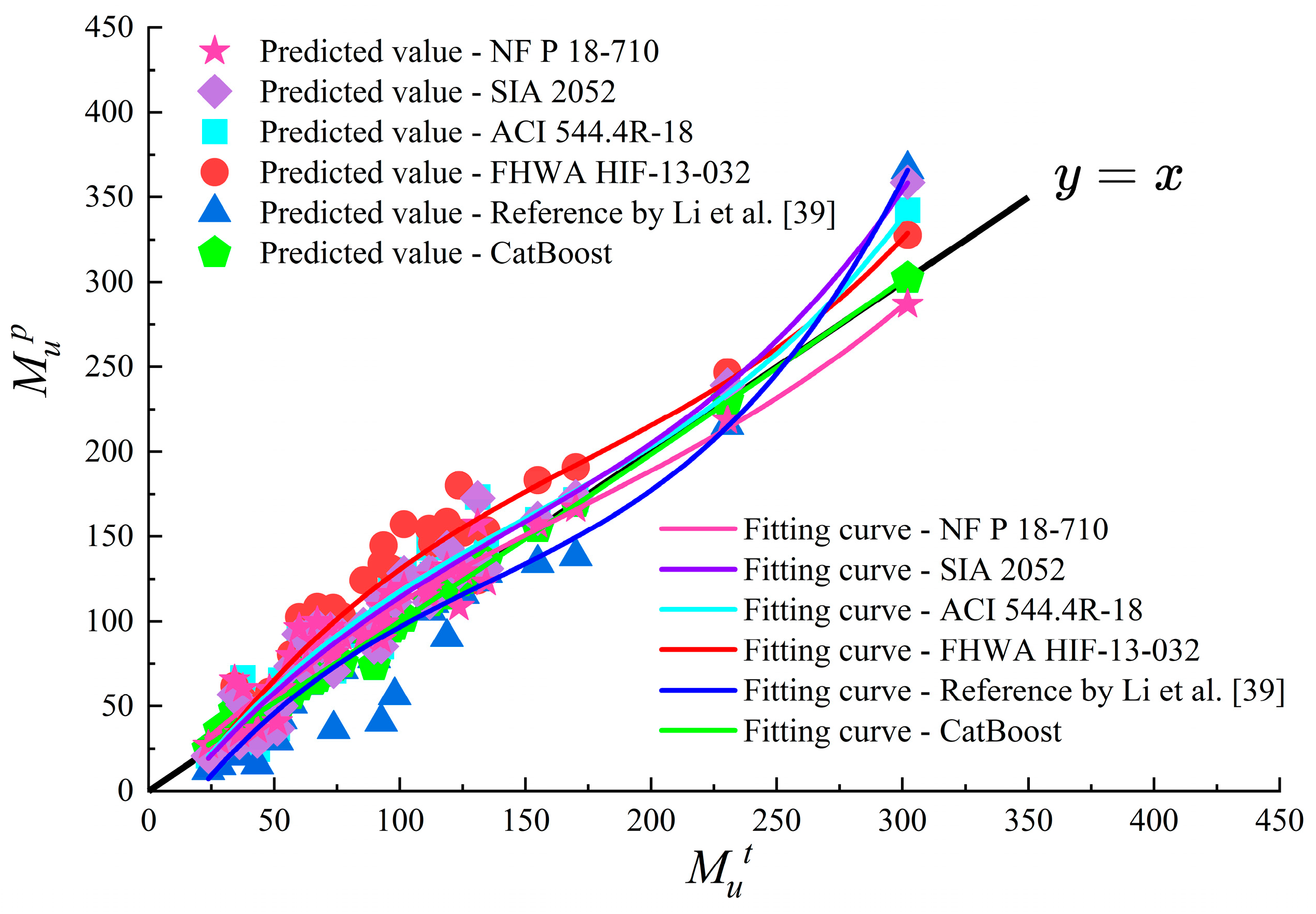

As shown in

Table 6, the comparison results reveal that the CatBoost model significantly outperforms the five representative empirical formulas in predicting the flexural capacity of UHPC beams. Empirical design models such as NF P 18-710 and SIA 2052 provide standardized approaches to the design of UHPC beams; however, they frequently rely on simplified assumptions about material behaviors, such as strain distributions or reduction factors, leading to conservative or inconsistent predictions. For instance, an examination of the calculation method proposed by Li et al. [

39] reveals an average predicted-to-measured flexural capacity ratio of 0.916, thus indicating a tendency to underestimate flexural capacity in practical applications. Conversely, the CatBoost model achieves a mean predicted-to-measured flexural capacity ratio of 1.022, the closest to 1, thereby signifying a higher degree of agreement with actual values.

In terms of quantitative performance, the CatBoost model demonstrates superior performance, attaining an

R2 value of 0.993. This indicates its superior predictive accuracy and fitting capability compared to the existing empirical methods. For example, the recommendation SIA 2052 and the method presented by Li et al. [

39] exhibit

R2 values of 0.925 and 0.851, respectively; while the FHWA method exhibits a significantly lower

R2 value of 0.711. Furthermore, the CatBoost model demonstrates the lowest RMSE value of 4.396, MAE value of 2.055, and MAPE value of 3.704%, exhibiting a substantial improvement in performance compared to empirical methods such as the ACI 544 and FHWA models. These models exhibit significantly higher RMSE values of 19.929 and 28.923, respectively. These findings underscore the efficacy of the CatBoost model in minimizing prediction errors and ensuring consistent accuracy across diverse datasets.

As illustrated in

Figure 18, the predicted data points with the CatBoost model are closely distributed around the baseline

, suggesting that the model demonstrates reliable and robust performance. The polynomial fitting curve (green) of the CatBoost model exhibits a strong alignment with the observed values and provides a reliable representation of the underlying data.

Despite its widespread use as an empirical approach, the FHWA method demonstrates significant deviations from observed values, exhibiting an RMSE of 28.923. This outcome indicates its inadequacy in accurately capturing the mechanical behavior of reinforced UHPC beams. The distribution of predictions with the FHWA method is scattered, indicating challenges in generalization that may stem from overly simplified assumptions regarding material behaviors. A comparable situation arises with the ACI 544 model, a frequently utilized model, which simplifies the tensile contribution of steel fiber reinforcement. Consequently, this leads to a substantial underestimation or overestimation of the flexural capacity of reinforced UHPC beams. The deviation of the fitting curve (cyan) from the measured data is particularly evident in this model, indicating a failure to accurately represent the data. While the NFP 18-710 model exhibits certain advancements, it is hindered by computational expense and practical limitations, rendering it unfavorable for engineering applications.

The reference model proposed by Li et al. [

39] also demonstrates deviations, with data points scattering away from the ideal baseline. This suggests the presence of inconsistencies in prediction accuracy. While this model incorporates a great number of refined parameters compared to purely empirical formulas, its limitations further highlight the need for more data-driven methodologies. The SIA 2052 model, likewise, fails to achieve the same level of precision as CatBoost, thereby reinforcing the assertion that machine learning approaches offer superior predictive capabilities.

A primary benefit of the CatBoost model is its capacity to detect underlying complex nonlinear correlations between input parameters and flexural performance, a task that conventional empirical models frequently encounter challenges in accomplishing. Moreover, by capitalizing on an extensive and refined dataset, CatBoost enhances generalization, reducing the risk of overfitting while preserving predictive precision across a range of configurations for reinforced UHPC beams. The strong correlation between its predictions and the observed values indicates a high degree of suitability for structural performance predictions, particularly for applications reliant on ML techniques, such as EL models.

Overall, the CatBoost model demonstrates superior performance in terms of predictive accuracy in comparison to empirical methods. Its enhanced applicability and adaptability are particularly notable, as it is capable of incorporating complex feature interactions and producing highly reliable results, which makes it an invaluable tool for practical engineering applications. The employment of data-driven methodologies by the CatBoost model presents a promising alternative to existing empirical methods, thereby paving the way for enhanced accuracy and efficiency in the field of UHPC structural design.

5. Model Interpretation

Advanced machine learning models, such as deep learning, are often considered “black boxes” because of the complexity and nonlinear nature of the models involved, which make it difficult to interpret their decision-making processes. The lack of transparency can have a negative impact on confidence in model predictions.

While techniques such as local interpretable model-agnostic explanations (LIME) and interpretive decision trees provide a degree of interpretability, they are limited. The Shapley additive explanations (SHAP) method has the potential to address these challenges by clarifying the contribution of features to model predictions. SHAP has been widely adopted for model interpretation since it enhances transparency and credibility through consistency, local interpretability, and model independence [

35,

36,

40,

62]. The explanatory model of SHAP, g(a′), is defined as

where

ϕ0 is the baseline value of the model, usually the average of all sample predictions;

ϕz is the SHAP value of the feature

z, which indicates the contribution of the feature

z to the prediction; and

a′

z is a binary indicator for the feature

z, indicating whether feature

z is in the explanatory model.

5.1. Analysis of Feature Importance Using SHAP

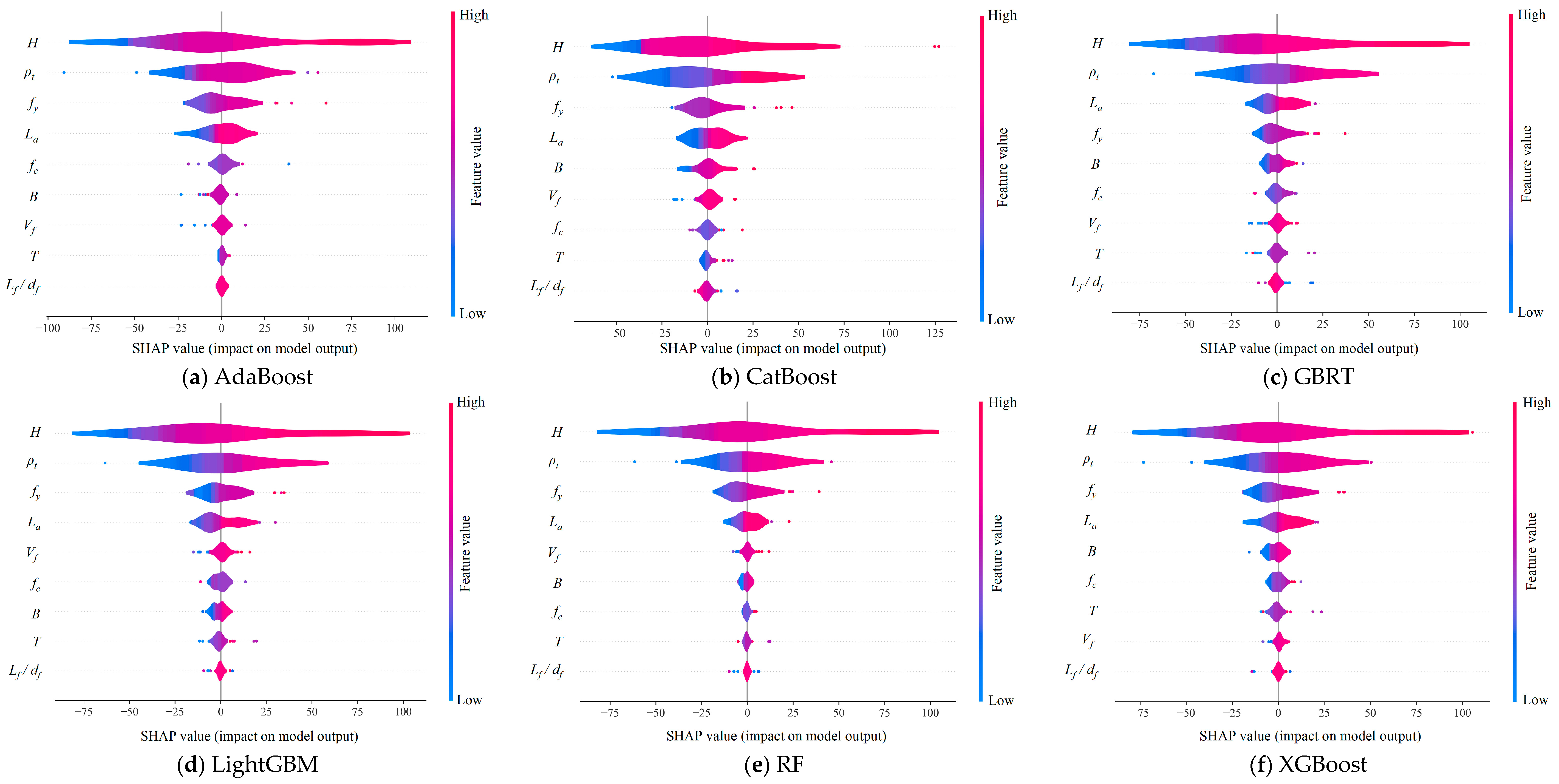

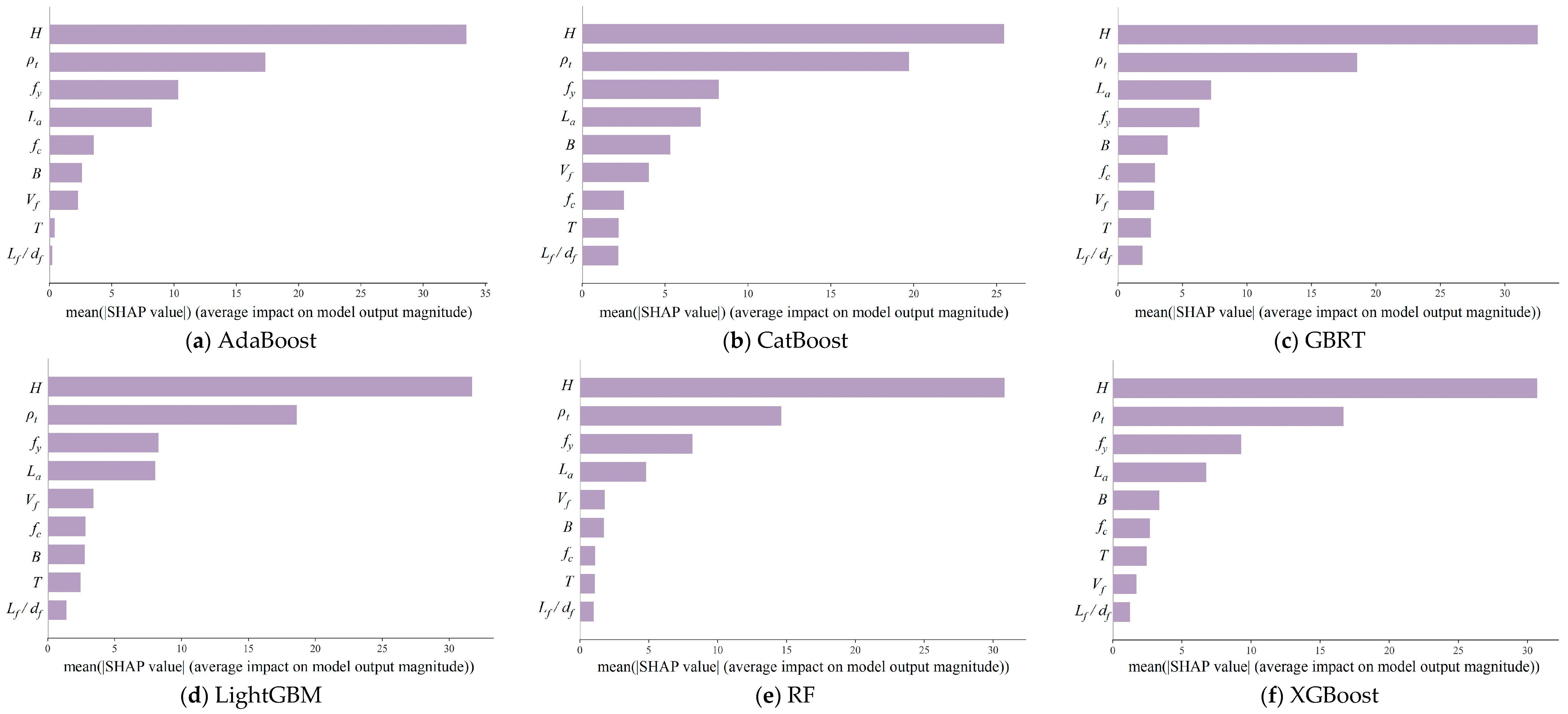

In exploratory analysis of ML models, reliance on the SHAP interpretation for a single model alone may not adequately capture the delicate effects of features on predictions. This limitation arises from the varying dependencies and interactions that different models have with the same set of characteristic parameters. To gain a deeper and more accurate understanding of feature significance and its influence on predictions, it is essential to perform a SHAP analysis with multiple models.

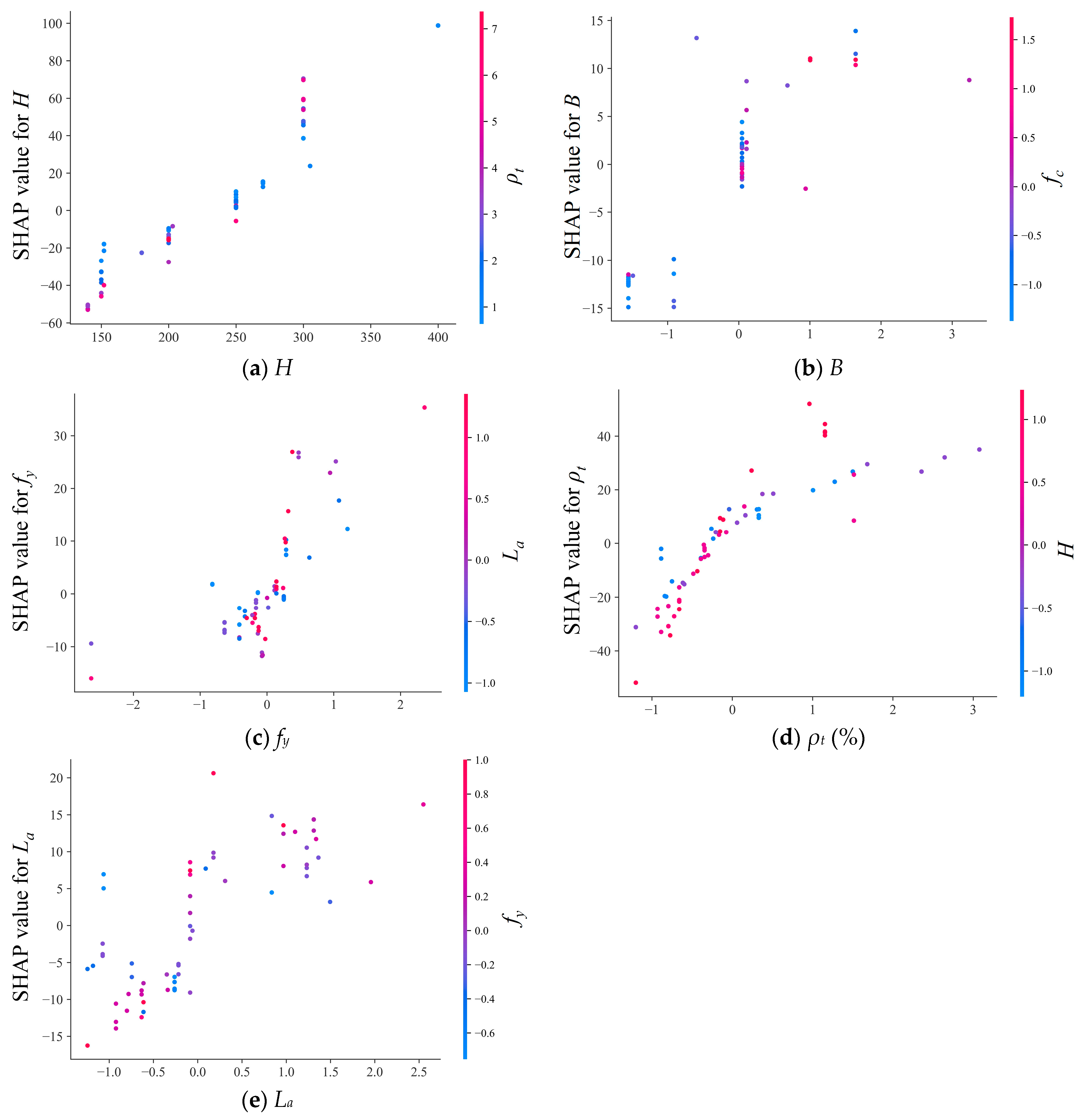

Taking advantage of the global interpretability and powerful visualization capabilities offered by SHAP, a global feature importance analysis across six ensemble learning models is conducted. To illustrate the impact and importance of each feature on model output,

Figure 19 presents the SHAP values for individual ensemble learning models. In this figure, the horizontal axis displays the SHAP values, which indicates the extent to which each feature affects the prediction accuracy of the model. Meanwhile, the vertical axis enumerates the features in order of importance, with a color gradient from blue to red representing the progression from lower to higher feature values. It is evident that there are marked discrepancies in how features rank in importance and the direction in which they affect different models.

From

Figure 19, the SHAP analysis for five models highlights the reinforcement ratio of longitudinal rebars,

ρt, the yielding strength of reinforcement,

fy, and the beam height,

H, as the most important features, each of which contributes positively to the prediction results. In contrast, the GBRT model emphasizes the beam height,

H, reinforcement ratio,

ρt, and the length of shear span,

La, as critical, demonstrating the inherent variability in feature prioritization between different models. These observations highlight the critical role of longitudinal reinforcement ratio

ρt in predicting the ultimate bending moment

Mu of reinforced UHPC beams, consistent with its recognized importance in the enhancement of steel rebars for reinforced concrete beams. Despite the limited tensile strength of concrete-based materials, the longitudinal reinforcements in UHPC beams overcome the limitation by providing essential tensile strength to negative bending moments. The steel reinforcement in UHPC beams effectively carries the tensile load during bending, while the lower section of the beam is longitudinally under tension.

Moreover, several features such as the beam height, H, and the beam width, B, attributes representative of the cross-sectional properties of UHPC beams, are highlighted. This emphasizes the critical role of cross-sectional characteristics in determining the flexural performance of UHPC beams. Feature B is highly significant in the CatBoost, XGBoost, and GBRT models, but it is of much less importance in the LightGBM model, where its influence is ranked remarkably lower. This discrepancy suggests that feature importance ratings vary due to the unique mechanisms that each model utilizes to process features. For the six ensemble learning models, the yielding strength of reinforcement, fy, and the length of shear span, La, show more consistency in both their importance and direction of impact. The SHAP values for these features are more concentrated, which is an indication of a more uniform influence on the prediction results. It is noteworthy that the yielding strength of reinforcement, fy, is recognized in the classical formulations, while the influence of shear span length, La, is absent in the design specifications. This discrepancy indicates that traditional empirical formulas may not fully capture the complexity associated with the prediction of the flexural capacity prediction Mu for reinforced UHPC beams.

A comparison of the results presented in

Figure 19 reveals several notable conclusions. The reinforcement ratio of longitudinal rebars (

ρt), the yielding strength of reinforcement (

fy), and beam height (

H) are consistently identified as the most influential features. The consistent importance of

ρt and

fy confirms their critical role in enhancing the flexural capacity of reinforced UHPC beams, particularly in resisting negative bending moments. However, variations in feature rankings across models reveal differences in how algorithms process structural parameters. For instance, GBRT attributes greater importance to shear span length (

La), a feature frequently disregarded in conventional empirical formulas but recognized by machine learning models for its substantial influence. Conversely, beam width (

B) is deemed crucial in CatBoost and XGBoost but is ranked less significant in LightGBM. This outcome underscores model-specific variations in feature utilization. The uniform SHAP impact of

fy validates established engineering principles, while the significant influence of

La suggests that traditional formulas may inadequately predict flexural behavior. These findings reinforce the advantages of data-driven models over empirical approaches, as ML models effectively identify nonlinear interactions and recognize parameters that have been overlooked. Consequently, the findings highlight the efficacy of EL models in delivering enhanced predictive reliability and interpretability. This underscores the potential for data-driven techniques in structural engineering to enhance conventional design methodologies.

Figure 20 presents the SHAP bar plots for the six ensemble learning models, illustrating the average impact magnitude of each feature on the predictions of those models. The CatBoost model features a relatively more uniform distribution of SHAP values across all features, suggesting a balanced consideration in the decision-making process without excessive dependence on specific features. This equilibrium potentially contributes to the superior performance and stability of the model, explaining its consistent performance across various data subsets among the ten ML models analyzed. In contrast, the SHAP bar plots for other ensemble learning models reveal that some features have significantly higher SHAP values, indicating a stronger reliance on particular features, such as in the AdaBoost, RF, and XGBoost models. This dependency might result in fluctuating model performance across different data subsets.

While an interpretation of SHAP values for a single model may not fully delve into the importance and impact of features on predictions, the analysis of SHAP interpretations across multiple models provides a more comprehensive and accurate understanding of feature importance and interactions. This approach facilitates model selection, optimization, and interpretation analysis with a solid theoretical foundation and practical insights.

The purpose of key feature interpretation is to clarify the explanation of how the importance of features varies across different models and how SHAP analysis can provide deeper insights into model behavior and feature impact, thereby supporting more informed decision-making in predictive modeling.

5.2. Key Feature Interpretation

Improving the transparency and interpretability of ML models is essential for the understanding of their decision processes. To assess the influence of features in the CatBoost model, visualization techniques are applied. A uniform distribution of SHAP values across features suggests a balanced influence, which leads to a focused analysis of the top five most influential features. This approach highlights the key features that drive the predictions of the model [

62]. Feature normalization, achieved by subtracting the mean and dividing by the standard deviation, is used to ensure uniform scaling, stabilize the model, and accelerate convergence.

where

X is the raw data,

μ is the mean,

σ is the standard deviation, and

is the normalized data. The objective of this normalization process is to neutralize the scaling differences among various features, thereby enhancing the stability of the model during its training phase and allowing for faster convergence of the algorithm.

The normalized SHAP values of the CatBoost model are represented in

Figure 21. The analysis of normalized SHAP value reveals a prevailing trend that increases in the eigenvalues of

H,

ρt,

B, and

La are associated with increases in the normalized SHAP values. This pattern suggests that increased eigenvalues of these features substantially increase flexural ultimate capacity

Mu, as reflected in the greater SHAP values. This notable positive correlation, particularly evident for parameters of

H,

ρt,

B, and

La, highlights their considerable influence on

Mu. Nevertheless, the feature

fy shows a clear nonlinear relationship with flexural ultimate capacity

Mu. This is attributed to the ability of the CatBoost model to capture the intricate interactions and nonlinear dynamics between the features. In the context of reinforced concrete beams, the steel reinforcement and the surrounding concrete work together to resist bending moments. The nonlinear influence of

fy is partly due to the complex stress–strain behavior of concrete, in particular its tendency to crack in tension and its ultimate compressive strength limit. An observed increase in the variability of normalized SHAP values for the yielding strength of reinforcement,

fy, deviating from zero, especially towards positive values, suggests a pronounced influence of reinforcement strength

fy on the flexural ultimate capacity

Mu in these regions. Deviating from a straightforward linear relationship, the contribution of

fy in increasing the flexural ultimate capacity

Mu is further evaluated by the distribution and depth of steel reinforcements within the concrete cross-section. As such, the feature of yielding strength of reinforcement,

fy, especially in certain regions, deserves a more in-depth analysis.

An interesting observation from

Figure 21d is the prevalence of more red dots at higher values of

ρt, which suggests a simultaneous increase in

H values in these areas, potentially amplifying their influence on the flexural ultimate capacity

Mu. Thus, when evaluating the effect of

ρt on the flexural ultimate capacity

Mu, it is crucial to consider the interactions with other features. The increases in the values of

La and

H are significantly beneficial to the flexural ultimate capacity

Mu, while the effect of

fy is nonlinear and more pronounced in certain regions. Furthermore, the interaction of

H with high values of

ρt deserves special attention. These findings allow for a deeper understanding of how each feature contributes to

Mu, thus laying a foundation for more accurate model optimization and feature engineering strategies.

6. Conclusions

A comprehensive review of existing literature is conducted to initially establish a database of flexural ultimate capacity for reinforced UHPC comprising the results of measurements of 339 UHPC-based specimens with varying design parameters from 56 different experimental investigations. Ten ML algorithms, namely ANN, SVR, K-NN, CART, RF, AdaBoost, GBRT, LightGBM, CatBoost, and XGBoost, are then employed to develop optimized models for predicting the flexural ultimate capacity Mu of reinforced UHPC specimens. Four statistical indicators of model performance are utilized to evaluate the prediction results of ML-based models presented. Moreover, the performances of ML-based models with different data subset sizes are analyzed to thoroughly assess the qualities of the models and database employed. A comparison analysis is conducted between the calculated values from the several existing empirical formulas and the predicted values of the CatBoost model. The SHAP method is finally used to interpret the ML models, validating their reliability and examining the effect of each feature on the prediction results. The following conclusions can be drawn.

For model training, CART, RF, XGBoost, CatBoost, AdaBoost, GBRT, and LightGBM show superior model performances, characterized by higher R2 values and lower values of RMSE, MAE, and MAPE compared to other ML-based models. The top three models for predicting the flexural ultimate capacity of reinforced UHPC beams are ranked in the order of GBRT > CatBoost > LightGBM. On the testing set, the GBRT model demonstrates the best prediction results with an R2 of 0.95, RMSE of 13.3, MAE of 9.3, and MAPE of 18%. Conversely, the ANN model is found to perform the least effectively. Overall, the developed ML models accurately predict the flexural ultimate capacity Mu of reinforced UHPC beams after optimization, with ensemble learning models typically providing a higher level of accuracy than traditional individual ML models.

An in-depth database subset analysis reveals that the most efficient data subset configuration for ensemble learning models is 75% for the training set and the remaining 25% for the testing set. For traditional ML-based models, the optimal data subset configuration for the data distribution percentages of the training set and testing set are 80% and 20%, respectively. This underscores the significant impact of the database division ratios of training-to-testing sets on the effectiveness of performance prediction for the ML models. Among the ensemble learning models, CatBoost and GBRT show remarkable consistencies with a varying data subset configuration, while the CatBoost model presents a distinguished performance prediction using a superior division strategy of data subsets. These insights highlight the importance of proper data acquisition in improving model performance prediction, providing crucial guidance for future model selection and optimization. The effects of database subset distribution on the performance prediction and model stability should be properly considered during the model selection and optimization process to ensure optimal model functionality in real-world applications.

The CatBoost model demonstrates superior performance in terms of predictive accuracy, as evidenced by its highest R2 value of 0.993, lowest RMSE value of 4.396, lowest MAE value of 2.055, and lowest MAPE value of 3.704%. This substantial improvement in performance prediction of the flexural capacity for reinforced UHPC beams is particularly notable when compared to existing empirical methods. Notably, the model’s enhanced applicability and adaptability enable it to handle complex feature interactions, leading to highly reliable results. This renders the EL model a potentially invaluable tool for practical engineering applications. The employment of data-driven methodologies by the CatBoost model presents a promising alternative to existing empirical methods, thereby paving the way for enhanced accuracy and efficiency in the field of UHPC structural design.

A SHAP-based feature importance analysis indicates that ρt, La, and H are the most critical features for the determination of the flexural ultimate capacity Mu of reinforced UHPC beams. This finding is consistent across ML models including RF, XGBoost, CatBoost, GBRT, AdaBoost, and LightGBM. In contrast, the aspect ratio of fiber length-to-diameter (Lf/df) is the least important characteristic. The CatBoost model displays a more uniform distribution of SHAP values for all parameters, suggesting a balanced decision-making process and contributing to its superior and stable model performance. Several ensemble learning models such as AdaBoost, RF, and XGBoost show higher SHAP values for certain features, indicating a greater dependence on those features and potentially leading to more variable performance for different subsets of the database.

The analysis of normalized SHAP values reveals a prevailing trend that increases in the eigenvalues of B, ρt, La, and H are associated with an increase in normalized SHAP values. This notable positive correlation highlights their substantial influence on predicting the flexural ultimate capacity Mu of reinforced UHPC beams. Nevertheless, the yield strength of longitudinal reinforcement, fy, shows a clear nonlinear relationship with ultimate capacity Mu. These findings allow for a deeper understanding of how each feature contributes to the prediction of the flexural ultimate capacity Mu of reinforced UHPC beams, thus laying a foundation for more accurate model optimization and feature engineering strategies.

The present study introduces several EL models (AdaBoost, CatBoost, GBRT, LightGBM, and XGBoost) for the prediction of the flexural capacity of reinforced UHPC beams, with a view to providing a more accurate and efficient tool for practical engineering applications. The SHAP method is employed to evaluate the impact of critical factors such as the longitudinal reinforcement ratio, yield strength, beam height, and shear span length, on the ultimate bending moment. The findings of this study could prove instrumental in empowering structural engineers to swiftly evaluate flexural capacity, optimize structural designs, minimize material consumption, enhance structural safety and efficiency, and thus foster sustainable growth for UHPC applications. In the future, there is a potential for the development of the present method into a graphical user-friendly interface (GUI), with subsequent integration with current design codes and engineering software (such as MIDAS, ABAQUS, and SAP2000). This would create an intelligent platform for structural design and evaluation, and thus further the adoption and standardization of UHPC technology.