1. Introduction

The building sector is a major contributor to global energy consumption and carbon emissions, which makes energy conservation and carbon reduction in buildings a key issue for sustainable development [1]. At the same time, the sector is shifting from being merely energy-intensive to becoming smart and responsive [2,3]. This paradigm shift is fueled by advancements in data processing, storage, and transmission, which now enable the design and deployment of sophisticated control systems [4]. Among these, predictive control stands out by optimizing Heating, Ventilating and Air-conditioning (HVAC) system operation based on forecasts of future conditions. It is this critical dependence on accurate and anticipatory energy demand forecasts that elevates building load prediction from a supportive tool to a cornerstone technology for improving energy system performance and achieving significant energy efficiency [5].

Broadly, building load prediction methods fall into two categories: physics-based modeling and data-driven modeling [6]. Physics-based approaches incorporate detailed building envelope and system parameters to simulate building loads. Although these methods can represent physical processes with high fidelity, they require extensive, labor-intensive data collection, and inaccuracies in input parameters can lead to significant discrepancies between simulated and actual performance. Data-driven approaches avoid such dependencies by learning the mapping between operational and environmental variables and load values directly from historical data, using algorithms such as Artificial Neural Networks (ANN), Extreme Learning Machines (ELM), Regression Trees (RT), and Support Vector Machines (SVM). Representative work includes Hu et al. [7], who applied ANN to predict the thermal load of a zonally divided office building in Tianjin, enabling accurate room-level temperature estimation, and Guo et al. [8], who compared Back Propagation Neural Network (BPNN), SVM, and ELM for short-term load forecasting, showing superior performance for ELM when incorporating thermal response time as input.

Compared to shallow architectures, deep learning models capture more complex, non-linear relationships between features, enabling end-to-end learning without manual feature engineering [9]. Long Short-Term Memory (LSTM) networks, owing to their ability to handle long-sequence data, are especially valuable in load forecasting. Wang et al. [10] used LSTM to estimate internal heat gains in U.S. office buildings, identifying miscellaneous electrical loads as the most influential variable, and later compared shallow learning with deep learning, concluding that LSTM excels in short-term forecasting, whereas Extreme Gradient Boosting (XGBoost) performs better for long-term tasks.

Recent efforts increasingly combine complementary neural network architectures into hybrid frameworks. An et al. [11] employed a hybrid modeling framework that incorporated data processing and model optimization procedures with BP and Elman networks, resulting in accurate short-term residential load predictions. Li et al. [12] integrated Convolutional Neural Networks (CNN) with Gated Recurrent Units (GRU), adopting transfer learning to address small dataset scenarios. Bui et al. [13] proposed an electromagnetism-inspired Firefly Algorithm–ANN hybrid for thermal load prediction, assisting energy-efficient building design.

Alongside these developments, a wave of newer studies adopts more sophisticated feature engineering, decomposition techniques, and metaheuristic optimization. Feng et al. [14] demonstrated that CNN-based spatial feature extraction, BiLSTM temporal modeling, and multi-head self-attention, optimized via an improved Rime algorithm, substantially enhanced cooling load accuracy in public buildings. Kong et al. [15] addressed non-stationary loads from cascaded phase-change material buildings using SSA–VMD–PCA to preprocess data, thereby reducing prediction error by nearly 60%. Guo et al. [16] improved Artificial Rabbit Optimization with adaptive crossover to fine-tune LSTM hyperparameters, yielding performance gains in dynamically varying heat load scenarios. Song et al. [17] integrated gated multi-head temporal convolution with improved BiLSTM to robustly capture multi-scale temporal patterns, lowering prediction error over diverse forecasting horizons. Lu et al. [18] further showed how attention mechanisms coupled with time representation learning and XGBoost correction can improve both accuracy and robustness under varying occupancy schedules.

Other contributions emphasize interpretability. Zhang et al. [19] combined clustering decision trees with adaptive multiple linear regression, matching black-box model accuracy while revealing that historical cooling loads and outdoor temperature are the most critical predictors. Salami et al. [20] leveraged Bayesian metaheuristic optimization in explainable tree-based models, achieving high alignment between design parameters and load outcomes. Additionally, Fouladfar et al. [21] developed adaptive ANN models retrained daily for residential thermal load prediction, enhancing flexibility and responsiveness. However, intelligent optimization algorithms often suffer from limitations such as slow convergence, premature convergence to local optima, and poor balance between global and local search, all of which affect prediction accuracy. Therefore, integrating more advanced optimization algorithms with neural networks is essential to improve model accuracy and training speed. In the context of building energy consumption, load forecasting is generally classified into short-term and long-term horizons. Short-term load forecasting, typically spanning from minutes to several days, is particularly valuable for supporting real-time control of HVAC systems.

Accurate prediction of complex, dynamic building cooling loads remains a critical challenge in building energy efficiency. Although data-driven models have advanced considerably, they still struggle with the stochastic, highly nonlinear, and non-smooth nature of cooling load data, leading to inadequate accuracy and robustness. To address these limitations, this study develops a high-accuracy hybrid model tailored to non-stationary and complex load characteristics. The proposed model provides more reliable support for intelligent control, real-time monitoring, and efficient management of HVAC systems. The shortcomings of existing research, along with the key contributions and innovations of this work, are summarized below:

(1) Limitations of Existing Research:

(a) Inadequate handling of non-stationary and complex cooling load data: Conventional single neural network models often struggle with the stochastic fluctuations, nonlinear variations, and non-stationary characteristics of building cooling load data, leading to compromised prediction accuracy and limited adaptability to complex real-world operational conditions.

(b) Room for improvement in data decomposition methods: Existing modal decomposition techniques may suffer from incomplete decomposition or insufficient processing of high-frequency components. As a result, data preprocessing may fail to adequately reduce data complexity, thereby constraining further improvements in prediction model performance.

(c) Suboptimal efficiency and accuracy in model parameter optimization: Parameter configuration in traditional neural networks often relies on empirical or trial-and-error approaches, which are inefficient and unlikely to yield optimal parameter sets. Meanwhile, intelligent optimization algorithms may exhibit slow convergence or a tendency to become trapped in local optima during the parameter search process.

(d) Lack of comprehensive validation of model robustness and generalizability: Many existing studies focus predominantly on accuracy metrics while paying insufficient attention to validating model robustness and generalization capability under varying operational and data conditions. This undermines the reliability of such models in practical engineering applications.

(2) Contributions and Innovations:

(a) Developed an innovative ICEEMDAN-Kmeans-VMD secondary decomposition framework that sequentially applies ICEEMDAN for initial decomposition, sample entropy-based K-means clustering for component aggregation, and VMD for refining high-frequency components. This multi-stage approach overcomes limitations of single decomposition methods, achieving more thorough data denoising and providing higher-quality inputs for prediction.

(b) Constructed a CPO-CNN-BiLSTM-Attention hybrid model that effectively combines CNN’s local feature extraction, BiLSTM’s long-term dependency capture, and Attention’s focus on critical temporal information. This integration enhances the model’s capability to learn complex cooling load patterns.

(c) Introduced the Crested Porcupine Optimizer for automated hyperparameter tuning, addressing the challenge of manual parameter adjustment in deep hybrid models. CPO demonstrates faster convergence, superior optimization results, and higher efficiency compared to traditional methods, significantly improving model performance.

(d) Established comprehensive validation through case studies using real building data with multiple metrics and benchmark comparisons. Extensive analysis across decomposition effectiveness, feature engineering impact, optimization performance, and model robustness confirms the superiority of the proposed approach in accuracy, robustness, and generalization capability.

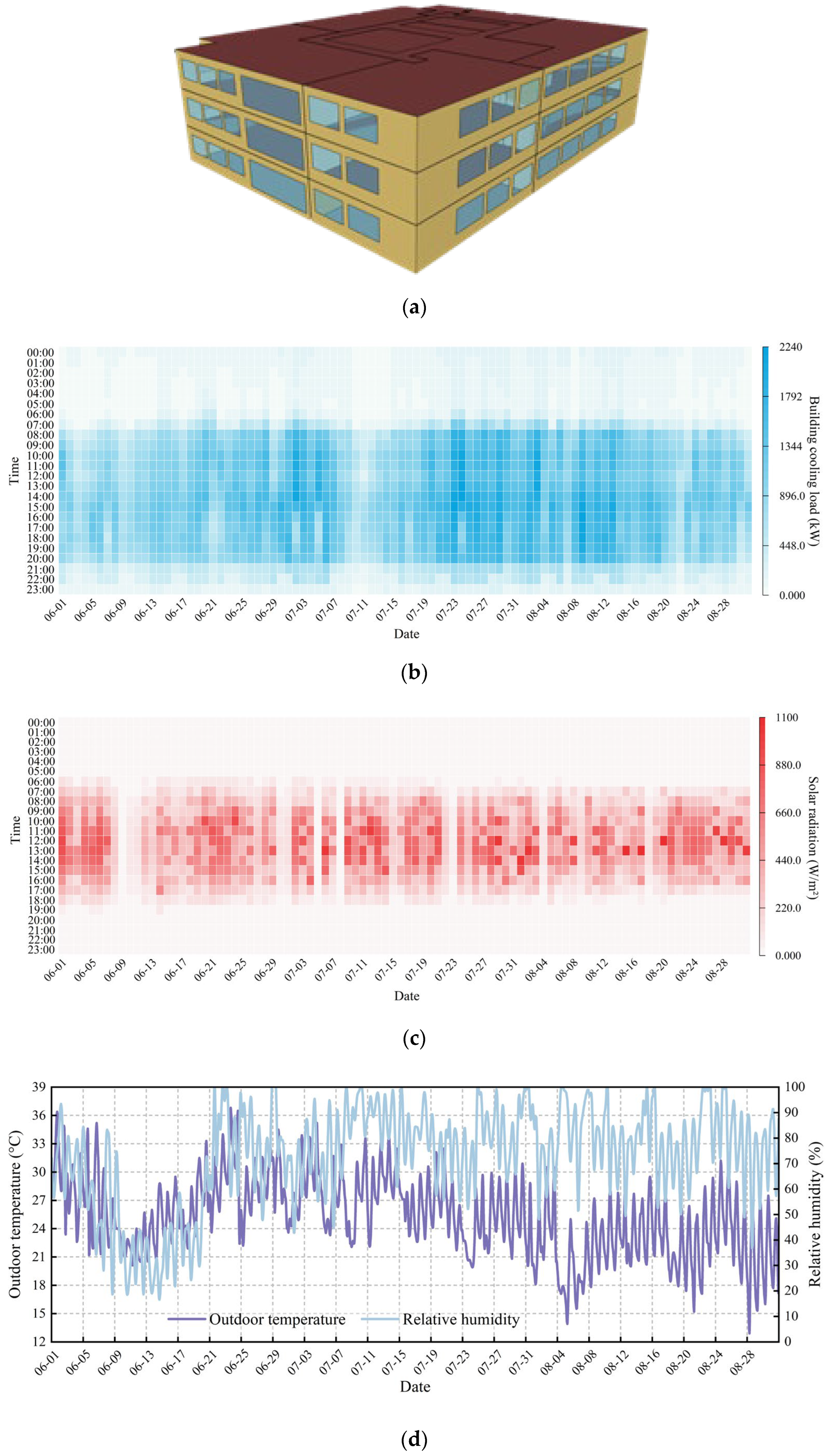

4. Results and Discussion

4.1. Data Decomposition Results

The ICEEMDAN method was employed to process the load profile of the case-study building. The decomposition was performed with a white noise amplitude of 0.2, 50 realizations of noise addition, and 100 iterations, yielding multiple IMF components. As shown in Figure 9, the IMFs exhibit a distinct stratification in both frequency and amplitude. From IMF 1 to IMF 10, the oscillation frequency gradually decreases: IMF 1 to IMF 3 represent high-frequency oscillations, characterized by dense waveforms and large amplitude fluctuations. IMF 4 to IMF 6 show a marked reduction in frequency, with the waveforms transitioning from dense oscillations to relatively smooth fluctuations and a narrower amplitude range. IMF 7 to IMF 9 exhibit even lower-frequency, trend-like oscillations with further extended oscillation periods. Finally, IMF 10 reflects a slowly varying trend component with a relatively stable amplitude range and no apparent oscillations. This hierarchical decomposition demonstrates that the ICEEMDAN algorithm effectively separates the original cooling load signal into components with distinct frequency and amplitude characteristics, thereby laying the groundwork for precise prediction of each individual component in subsequent analysis.

To limit the model complexity caused by the large number of ICEEMDAN-generated IMFs, sample entropy analysis with K-means clustering was implemented. This grouped the components into three frequency-based Co-IMFs (high, medium, low), reducing the number of components from 10 to 3, as shown in Figure 10, and significantly cutting training costs.
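The grouping step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the study: the sample entropy settings (`m=2`, tolerance `0.2 * std`) are common defaults assumed here, and the helper names `sample_entropy`, `kmeans_1d`, and `aggregate` are hypothetical.

```python
import math
import random


def sample_entropy(series, m=2, r_factor=0.2):
    """SampEn(m, r) with tolerance r = r_factor * std(series) (assumed defaults)."""
    n = len(series)
    mean = sum(series) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in series) / n)
    r = r_factor * std

    def matches(length):
        # Both template lengths use the same n - m start points.
        templates = [series[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                dist = max(abs(a - b) for a, b in zip(templates[i], templates[j]))
                if dist <= r:
                    count += 1
        return count

    b, a = matches(m), matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # no matching templates: treat as maximally irregular
    return -math.log(a / b)


def kmeans_1d(values, k=3, iters=100):
    """Lloyd's algorithm on scalars; centres start at evenly spaced quantiles."""
    ordered = sorted(values)
    centres = [ordered[(2 * c + 1) * len(ordered) // (2 * k)] for c in range(k)]

    def assign(cs):
        return [min(range(k), key=lambda c: abs(v - cs[c])) for v in values]

    for _ in range(iters):
        labels = assign(centres)
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            updated.append(sum(members) / len(members) if members else centres[c])
        if updated == centres:
            break
        centres = updated
    return assign(centres), centres


def aggregate(imfs, labels, k=3):
    """Sum the IMFs that share a cluster label into one Co-IMF per cluster."""
    length = len(imfs[0])
    return [
        [sum(imf[t] for imf, lab in zip(imfs, labels) if lab == c) for t in range(length)]
        for c in range(k)
    ]
```

In use, one would compute `sample_entropy` for each IMF, pass the resulting list to `kmeans_1d`, and feed the labels to `aggregate`. Because the Co-IMFs are plain sums, adding them back together recovers the sum of the original IMFs.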

The high-frequency time series obtained from K-means clustering undergoes secondary modal decomposition via VMD to reduce complexity. As shown in Figure 11, the high-frequency Co-IMF component is decomposed into three VMD-IMFs. These are combined with the medium- and low-frequency components from the initial ICEEMDAN decomposition to form a new IMF set. Based on the classification criteria, VMD-IMF1 to VMD-IMF3 are categorized as high-frequency components, VMD-IMF4 as medium-frequency, and VMD-IMF5 as low-frequency. From the perspective of HVAC process dynamics, each frequency band corresponds to distinct physical phenomena influencing cooling load variations [30]. High-frequency components are primarily driven by rapid changes in outdoor meteorological conditions, such as solar radiation spikes and wind disturbances, as well as instantaneous internal gains from occupant activities or equipment cycling. Medium-frequency components reflect daily operational cycles dictated by the building schedule and moderate thermal inertia of the envelope. Low-frequency components capture long-term trends arising from substantial envelope thermal inertia, indoor air heat storage, and seasonal climate variations. Subsequent to decomposition, each VMD-IMF is combined with the existing feature variables to construct new component-specific sample datasets, which are later used for feature selection and prediction testing.

4.2. Prediction Results

After undergoing secondary modal decomposition and feature selection, the processed cooling load dataset was partitioned into training (80%) and testing (20%) subsets. Simulations were conducted in MATLAB R2023a to implement and evaluate four models: CPO-CNN, CPO-BiLSTM, CPO-CNN-BiLSTM, and CPO-CNN-BiLSTM-Attention. The prediction performance of the CPO-CNN-BiLSTM-Attention model was thoroughly validated and analyzed. Through extensive testing and adjustment, an optimal parameter set was determined for the case study, as detailed in Table 3. The results in Figure 12 indicate that the CPO-CNN-BiLSTM-Attention model produces forecasts that align closely with the actual load profiles, demonstrating strong predictive accuracy.

Table 4 provides a comparative overview of the evaluation results for the five predictive models. The CPO-CNN-BiLSTM-Attention approach attained a MAPE of 3.2462% (96.7538% accuracy), meeting practical requirements. This configuration consistently surpasses all alternatives in the assessed criteria, yielding MAE, MAPE, and CV-RMSE values of 31.0252, 3.2462%, and 6.7212%, respectively, which represent reductions of 7.47–40.39, 2.07–6.82%, and 1.43–7.72% over the other models. Its R² value of 0.9929 also exceeds the others by 0.0033–0.0256. The attention mechanism contributes a 2.07% accuracy gain, while the CPO-optimized CNN and BiLSTM models show marked improvement over their base versions, confirming CPO’s effectiveness in parameter tuning.
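For readers who want to reproduce the four reported metrics, the following Python sketch computes MAE, MAPE, CV-RMSE, and R² from paired actual and predicted loads. It uses the conventional definitions; this section does not spell out the formulas used in the study, so treat them as assumptions. The function name `evaluate` is hypothetical.

```python
import math


def evaluate(actual, predicted):
    """Return MAE, MAPE (%), CV-RMSE (%), and R² using conventional definitions."""
    n = len(actual)
    mean_actual = sum(actual) / n
    errors = [a - p for a, p in zip(actual, predicted)]

    mae = sum(abs(e) for e in errors) / n
    # MAPE assumes no actual value is zero.
    mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    cv_rmse = 100.0 * rmse / mean_actual  # RMSE normalised by the mean actual load

    ss_res = sum(e * e for e in errors)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1.0 - ss_res / ss_tot

    return {"MAE": mae, "MAPE": mape, "CV-RMSE": cv_rmse, "R2": r2}


if __name__ == "__main__":
    # Toy values for illustration only; they are not data from the study.
    print(evaluate([100, 200, 300, 400], [110, 190, 310, 390]))
```

Under this convention, the "accuracy" quoted alongside MAPE corresponds to 100% minus the MAPE.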

4.3. Prediction Performance Analysis

4.3.1. Performance of Modal Decomposition

Figure 13 compares the predictive performance of secondary modal decomposition, primary modal decomposition, and non-decomposition methods using MAPE and CV-RMSE as evaluation metrics. Experimental results demonstrate the significant advantages of the proposed secondary modal decomposition method in both prediction accuracy and stability. Specifically, its MAPE (3.246%) and CV-RMSE (6.721%) are reduced by 6.542% and 6.81%, respectively, compared to primary decomposition, and by 10.879% and 10.751% compared to the non-decomposition approach.

To address the limitations of traditional single-stage decomposition—particularly its inadequate handling of high-frequency components leading to suboptimal prediction accuracy—this study constructs a hierarchical optimization framework of “decomposition-clustering-re-decomposition”. The process begins with initial noise reduction via ICEEMDAN decomposition, generating multi-frequency IMF components. Based on sample entropy dynamic clustering (high-frequency components: entropy >1.5; low-frequency components: entropy <0.8), high-frequency subsequences are selected. These high-frequency components then undergo secondary decomposition using VMD, where adaptive bandwidth adjustment restricts mode mixing error below 0.8%, effectively reducing high-frequency complexity. Experimental evidence confirms that this method, through its dual-stage decomposition and dynamic clustering mechanism, effectively mitigates input uncertainty while enhancing high-frequency processing capability, thereby optimizing computational efficiency.

To quantitatively evaluate the contribution of modal decomposition and its smoothing effect to prediction performance, three experimental schemes were designed using the CNN model as the baseline. Scheme 1: a CNN model without any decomposition; Scheme 2: a CPO–CNN–BiLSTM–Attention model without decomposition; Scheme 3: a CNN model incorporating dual-stage modal decomposition. The results are presented in Table 5. Compared with the baseline CNN model, the deep model without decomposition reduced the MAPE by approximately 7.1% and the CV-RMSE by about 20.0%. In contrast, introducing dual-stage modal decomposition into the simple CNN framework reduced the MAPE by approximately 36.6% and the CV-RMSE by around 55.8%. These findings indicate that dual-stage modal decomposition plays a critical role in reducing the high-frequency complexity of the input signal and enhancing its predictability. Moreover, the improvement is not solely attributed to the smoothing effect; the decomposition also provides more stable and informative input features to the predictive model, thereby further improving forecasting accuracy.

4.3.2. Impact of Feature Engineering and Attention Mechanism on Model Prediction Accuracy

Feature engineering plays a crucial role in improving model prediction accuracy. To analyze its specific impact on load forecasting, a comparative study was designed with two model groups. The experimental group used input features processed by feature engineering: fine-grained time types (e.g., hour, period), day type (weekday/weekend/holiday), outdoor temperature, outdoor humidity, cooling load at the previous timestep, and solar radiation at the previous timestep. The control group used raw features without such processing. As shown in Figure 14, the engineered features were filtered and transformed to reduce redundancy and noise. In contrast, the raw feature set is more comprehensive, including current solar radiation, wind direction, and wind speed in addition to the same temporal and environmental variables, but it may suffer from multicollinearity and scale variation, potentially impairing model training and prediction.
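As an illustration of the engineered feature set listed above, the sketch below builds one feature row per timestep from chronological hourly records. The record keys (`time`, `temp`, `humidity`, `solar`, `load`), the integer encoding of day type, and the function names are assumptions made for this example and are not taken from the study.

```python
from datetime import datetime, timedelta


def day_type(ts, holidays=frozenset()):
    """Encode day type as 0 = weekday, 1 = weekend, 2 = holiday (assumed encoding)."""
    if ts.date() in holidays:
        return 2
    return 1 if ts.weekday() >= 5 else 0


def build_features(records, holidays=frozenset()):
    """Pair each record with its predecessor to form lagged load and solar features.

    `records` is a chronological list of dicts with the hypothetical keys
    time, temp, humidity, solar, and load.
    """
    rows = []
    for prev, cur in zip(records, records[1:]):
        rows.append({
            "hour": cur["time"].hour,
            "day_type": day_type(cur["time"], holidays),
            "temp": cur["temp"],
            "humidity": cur["humidity"],
            "load_prev": prev["load"],    # cooling load at the previous timestep
            "solar_prev": prev["solar"],  # solar radiation at the previous timestep
            "target": cur["load"],
        })
    return rows


if __name__ == "__main__":
    start = datetime(2024, 6, 7, 22)  # arbitrary example date (a Friday evening)
    demo = [
        {"time": start + timedelta(hours=i), "temp": 28.0 + i, "humidity": 60.0,
         "solar": 0.0, "load": 500.0 + 10 * i}
        for i in range(3)
    ]
    for row in build_features(demo):
        print(row)
```

The first record is dropped because it has no predecessor to supply the lagged values.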

Meanwhile, the attention mechanism in the CNN-BiLSTM-Attention model also plays a vital role by adaptively highlighting the importance of different timesteps in the sequential data. When handling long and complex time series, it learns varying weights for each step, amplifying relevant information while suppressing less useful inputs. This helps the model capture truly salient temporal patterns and further improves predictive accuracy. Further analysis of the attention weight distribution reveals that several timesteps corresponding to peaks in solar radiation received notably higher weights. This indicates that the model successfully identified the strong coupling between solar gains and building cooling demand [31]. Solar radiation increases the heat load on the building envelope, directly affecting indoor thermal conditions and substantially raising cooling requirements during clear-sky periods. In this study, the close alignment between high-weight timesteps and solar radiation peaks further confirms that solar radiation is a critical physical driver of building load variations.
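The idea of per-timestep weighting can be made concrete with a small sketch. The exact attention formulation used in the model is not specified in this section, so the example below assumes simple dot-product attention with a single query vector; `attention_pool` and its arguments are hypothetical names.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Dot-product attention over timesteps (an assumed, simplified form).

    hidden_states: one feature vector per timestep, e.g. BiLSTM outputs.
    query: a vector of the same dimension that scores each timestep.
    Returns the per-timestep weights and the weighted-sum context vector.
    """
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(query)
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Toy hidden states; the third timestep aligns best with the query.
    weights, context = attention_pool([[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]], [1.0, 1.0])
    print(weights, context)
```

Inspecting `weights` against an input variable such as solar radiation is one way to carry out the kind of weight-distribution analysis described above.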

As summarized in Figure 14, the contributions of feature engineering and the attention mechanism to prediction accuracy were quantified. Models trained with feature-engineered inputs showed significant improvement over those using raw features, with accuracy gains ranging from 7.17% to 10.74% and averaging 9.29%. This substantially exceeded the gains from the attention mechanism, which ranged from 2.06% to 6.81% with feature engineering and 2.33% to 3.67% without. These results demonstrate that for building cooling load forecasting, improving input quality through feature engineering (removing redundancy, reducing noise, and constructing informative features) is essential. It substantially enhances the model’s ability to capture dynamic load patterns and leads to markedly more accurate predictions.

4.3.3. Performance of Different Optimization Algorithms

To evaluate the optimization capability of the Crested Porcupine Optimizer (CPO) algorithm on three key parameters of the CNN and BiLSTM models (L2 regularization coefficient, initial learning rate, and number of hidden units), convergence iteration count, error metrics, and runtime were used as performance metrics. For benchmarking, conventional optimization approaches including Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Bayesian Optimization (BO) were implemented under identical experimental conditions. Specifically, the search space for the L2 regularization coefficient was set to [1 × 10⁻⁵, 1 × 10⁻²], the initial learning rate to [1 × 10⁻⁴, 1 × 10⁻²], and the number of hidden units to [32, 256]. The maximum number of iterations was fixed at 100, the population size was kept constant across algorithms, and the same random seed was applied for initialization to ensure reproducibility and fairness of comparison. As summarized in Table 6, CPO achieved superior optimization results with the shortest runtime and faster convergence speed compared to GA, PSO, and BO, demonstrating its effectiveness in tuning parameters for both CNN and BiLSTM. This approach significantly enhances model accuracy and accelerates the training process.
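The search space and the fixed-seed protocol above can be expressed compactly in code. The sketch below is not CPO: it substitutes plain random search as a stand-in so that the example stays short, and it samples the two continuous parameters on a log scale, which is an assumption not stated in the text. `SEARCH_SPACE`, `sample_candidate`, and `random_search` are hypothetical names, and `objective` stands for whatever validation error the model training would return.

```python
import math
import random

# Bounds as stated in the text.
SEARCH_SPACE = {
    "l2": (1e-5, 1e-2),
    "lr": (1e-4, 1e-2),
    "hidden_units": (32, 256),
}


def sample_candidate(rng):
    """Draw one hyperparameter set; log-uniform sampling is an assumption."""
    def log_uniform(lo, hi):
        value = 10 ** rng.uniform(math.log10(lo), math.log10(hi))
        return min(max(value, lo), hi)  # guard against floating-point overshoot

    return {
        "l2": log_uniform(*SEARCH_SPACE["l2"]),
        "lr": log_uniform(*SEARCH_SPACE["lr"]),
        "hidden_units": rng.randint(*SEARCH_SPACE["hidden_units"]),
    }


def random_search(objective, iterations=100, seed=42):
    """Random-search stand-in for a metaheuristic; returns best set and convergence curve."""
    rng = random.Random(seed)  # fixed seed for reproducible comparisons
    best, best_score, history = None, float("inf"), []
    for _ in range(iterations):
        candidate = sample_candidate(rng)
        score = objective(candidate)
        if score < best_score:
            best, best_score = candidate, score
        history.append(best_score)  # best-so-far error after each iteration
    return best, best_score, history
```

The `history` list is the kind of convergence curve from which an iteration count to convergence can be read; swapping `random_search` for another optimizer with the same interface would keep the comparison conditions identical.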

4.3.4. Robustness of the Models

The robustness assessment, illustrated in Figure 15, displays box plots of relative errors across six prediction approaches. Among these, the CPO-CNN-BiLSTM-Attention configuration produces the most compact error spread, characterized by the smallest interquartile distance and minimal presence of outliers. This outcome implies that the model consistently confines prediction deviations within a limited range, underscoring its strong resilience against variability.
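The two quantities read off the box plots, interquartile distance and outliers, can be computed directly from a list of relative errors. The following sketch assumes the common 1.5 × IQR whisker rule for flagging outliers; the rule used for the figure is not stated, and `box_stats` is a hypothetical helper name.

```python
import statistics


def box_stats(relative_errors):
    """Quartiles, interquartile range, and 1.5*IQR outliers of a sample."""
    q1, median, q3 = statistics.quantiles(relative_errors, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [e for e in relative_errors if e < lower or e > upper]
    return {"q1": q1, "median": median, "q3": q3, "iqr": iqr, "outliers": outliers}


if __name__ == "__main__":
    # Toy numbers for illustration only; they are not results from the study.
    print(box_stats([1, 2, 3, 4, 5, 6, 7, 8, 9, 100]))
```

A model with a smaller `iqr` and a shorter `outliers` list than its competitors shows the compact error spread described above.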

To assess the stability of the proposed method under different data-splitting conditions, multiple random splits of the dataset were performed within the original framework of 80% training and 20% testing sets. A total of six independent experiments were conducted. As shown in Table 7, the R² values remained consistently high, ranging from 0.9911 to 0.9929, with an average of 0.9920, indicating strong fitting capability across different splits. The MAE and MAPE metrics were kept within low ranges of 30.6647–34.4826 and 4.2462–5.3559%, with averages of 32.9378 and 4.7431%, respectively. The average CV-RMSE was 7.1494%, with fluctuations across runs not exceeding 0.83%. These results demonstrate that the proposed modal decomposition and deep learning-based short-term building load forecasting method maintains stable and high predictive performance under varying random data splits, exhibiting strong generalization ability and robustness of conclusions.
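A repeated-split experiment of this kind follows a simple pattern: draw a seeded random 80/20 partition, train and score the model, then summarize the scores across runs. The sketch below shows only the splitting and summarizing parts; the seeds and the helper names `random_split` and `summarize` are assumptions, and model training is left out.

```python
import random
import statistics


def random_split(n_samples, train_ratio=0.8, seed=0):
    """Return (train_indices, test_indices) for one seeded random split."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    cut = round(n_samples * train_ratio)
    return indices[:cut], indices[cut:]


def summarize(scores):
    """Mean, range, and spread of one metric across repeated runs."""
    return {
        "mean": statistics.mean(scores),
        "min": min(scores),
        "max": max(scores),
        "spread": max(scores) - min(scores),
    }


if __name__ == "__main__":
    # Six independent splits, one per (hypothetical) seed.
    for seed in range(6):
        train_idx, test_idx = random_split(1000, seed=seed)
        print(seed, len(train_idx), len(test_idx))
```

Each metric column of a table such as Table 7 would correspond to one call to `summarize` on the six per-run values.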

4.3.5. Configuration of Hyperparameters

The hyperparameters for the CNN and BiLSTM components in the CPO-CNN-BiLSTM-Attention model were optimized following a predefined structure [32]. A sequential tuning approach was applied to four key parameters: convolutional filters (16, 32, 64, 128), kernel number (2, 4, 8, 16), pooling window size (2, 4, 8, 16), and dropout rate (0.05, 0.1, 0.2, 0.3). Initial tests identified a kernel number of 16 and a dropout rate of 0.1 as optimal, and these values were subsequently fixed. The model was trained and evaluated on each IMF component, with results visualized in Figure 16. This systematic optimization established the final parameter set, enhancing the model’s cooling load prediction capability.
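Sequential tuning of this kind can be sketched as a one-parameter-at-a-time sweep: each parameter is varied over its candidate values while the others are held at their current best, and the winner is fixed before moving on. The candidate values below come from the text; the sweep order, the starting configuration, and the names `GRID`, `START`, and `sequential_tune` are assumptions, and `evaluate` stands for a user-supplied function returning a validation error.

```python
# Candidate values as listed in the text.
GRID = {
    "filters": (16, 32, 64, 128),
    "kernel_number": (2, 4, 8, 16),
    "pool_size": (2, 4, 8, 16),
    "dropout": (0.05, 0.1, 0.2, 0.3),
}

# Hypothetical starting point: the first candidate of every parameter.
START = {name: choices[0] for name, choices in GRID.items()}


def sequential_tune(grid, evaluate, start):
    """Tune one parameter at a time, fixing each at its best value before the next."""
    best = dict(start)
    for name, choices in grid.items():
        scored = [(evaluate({**best, name: choice}), choice) for choice in choices]
        best[name] = min(scored, key=lambda pair: pair[0])[1]  # lowest error wins
    return best
```

This needs 16 evaluations for the grid above, versus 256 for an exhaustive search, at the cost of possibly missing interactions between parameters.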

To verify the rationality of parameter selection and the stability of decomposition results, a sensitivity analysis was conducted on three key parameters in the decomposition framework: the white noise amplitude in ICEEMDAN, the number of clusters in K-means, and the preset number of modes in VMD. The relative prediction error of the proposed model was used as the evaluation metric, and the results are presented in Figure 17. In the ICEEMDAN stage, the white noise amplitude was tested at 0.1, 0.2, and 0.3. As shown in Figure 17a, an amplitude of 0.2 yielded a lower median prediction error and reduced dispersion, effectively suppressing mode mixing while preserving the dominant characteristics of the original signal. For K-means clustering, the cluster number K was set to 2, 3, and 4. As illustrated in Figure 17b, K = 3 provided a stable error range and a lower maximum relative error, while reasonably distinguishing high-, medium-, and low-frequency IMF groups, thereby facilitating targeted secondary decomposition and modeling for each frequency band. For the secondary VMD decomposition applied to the high-frequency IMF sequence, the preset number of modes K was varied between 3, 4, and 5. As shown in Figure 17c, K = 3 achieved lower errors and stable decomposition performance, effectively separating high-frequency components without excessively increasing computational complexity. Based on comparative analysis, the optimal parameter configuration in this study was determined as follows: the white noise standard deviation coefficient in ICEEMDAN was set to 0.2; the cluster number in K-means was fixed at K = 3, corresponding to high-, medium-, and low-frequency IMF groups; and the number of modes in the secondary VMD decomposition of high-frequency IMF sequences was set to K = 3, resulting in three VMD-IMF components.

4.3.6. Generalization Analysis of the Model

To evaluate the generalization capability of the proposed prediction model, the dataset was split into three non-overlapping subsets corresponding to 1–30 June, 1–31 July, and 1–31 August. Each subset was divided into training and testing sets at a ratio of 8:2, and simulations were conducted using the proposed hybrid prediction framework. As shown in Table 8, consistently high predictive performance was achieved across all periods. Overall, the model shows strong and stable generalization ability under diverse monthly and climatic conditions.
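The monthly partitioning can be illustrated with a short sketch. It assumes hourly timestamps and a chronological 8:2 cut within each month; the text does not say whether the split was chronological or random, so that choice, like the function name `monthly_splits` and the example year, is an assumption.

```python
from datetime import datetime, timedelta


def monthly_splits(timestamps, months=(6, 7, 8), train_ratio=0.8):
    """Map each month to (train_indices, test_indices), cut chronologically."""
    splits = {}
    for month in months:
        indices = [i for i, ts in enumerate(timestamps) if ts.month == month]
        cut = round(len(indices) * train_ratio)
        splits[month] = (indices[:cut], indices[cut:])
    return splits


if __name__ == "__main__":
    # Hourly timestamps for one summer; the year is an arbitrary example.
    start = datetime(2024, 6, 1)
    hours = [start + timedelta(hours=h) for h in range(92 * 24)]
    for month, (train_idx, test_idx) in monthly_splits(hours).items():
        print(month, len(train_idx), len(test_idx))
```

Because the three index sets never overlap, a model trained on one month's training portion is never evaluated on samples from another month.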

4.4. Limitations and Future Work

Although the proposed hybrid prediction framework demonstrated strong performance on simulated datasets, its practical deployment in real-world scenarios still faces several limitations and challenges. To advance the engineering implementation and broaden the applicability of the method, future studies will focus on aspects including data acquisition, model optimization, adaptation to multiple prediction horizons, robustness under complex scenarios, and deep integration with intelligent control systems. The key directions are outlined as follows:

(1) At present, model validation has been conducted primarily on simulated data, without leveraging real measured data from buildings. As a result, the model may not fully capture the complexities of real-world scenarios, such as random noise, load fluctuations driven by occupant behavior, and control deficiencies of HVAC systems. Future work will prioritize real-data-driven optimization by collecting hourly load profiles, meteorological parameters, occupant behavior patterns, and HVAC operation records from representative buildings, thereby constructing a multi-scenario real energy consumption dataset. Model input processing and training strategies will be tailored to the statistical properties of measured data, and a fault diagnosis module will be incorporated to improve robustness and practicality in engineering applications. Computational efficiency will also be systematically evaluated across different hardware platforms, accompanied by the development of lightweight or pruned versions of the model and exploration of simplified decomposition strategies within acceptable accuracy trade-offs. Finally, deployment tests will be conducted in actual building management systems to assess scalability, latency, and operational stability in real-world environments.

(2) Incorporating behavioral and psychological correction factors could further enhance the dynamic responsiveness and realism of the model [33,34]. Future studies will integrate building behavior monitoring data, such as variations in occupant density and equipment usage triggered by meetings, holidays, or unexpected events, along with psychological state indicators obtained through surveys or sensors, and investigate their integration into deep learning-based prediction models.

(3) Performance evaluation under varying temporal resolutions is a prerequisite for effective integration of the model with control strategies. In addition to the default one-hour prediction horizon, this study also assessed a one-day time step, showing that the proposed hybrid prediction framework maintained high accuracy for long-horizon forecasts (R² = 0.9857, MAE = 472.45, MAPE = 2.63%, CV-RMSE = 3.51%). Future research will systematically investigate performance across minute-, hour-, and day-level forecasts under diverse building load scenarios and climate zones. This will enable comprehensive assessment of model robustness and adaptability, thereby ensuring its capability to meet heterogeneous operational and planning needs in real-world applications.

(4) Complex or unexpected scenarios in building load forecasting can challenge the adaptability of prediction models. For instance, abrupt changes in occupant behavior—such as unscheduled meetings, gatherings, or extended absences—can lead to non-periodic load spikes that are difficult to capture in real time; equipment faults or control system failures may disrupt established load patterns; extreme weather events or other external disturbances could introduce unseen data distributions, undermining prediction reliability. Future work will address these challenges by incorporating real-time monitoring data, developing anomaly detection modules, and adopting online learning methods capable of rapidly adapting to new operational conditions, thereby enhancing adaptability and robustness in dynamic, non-stationary environments.

(5) The proposed hybrid prediction model is currently evaluated in an offline setting, and its forecasts have not yet been directly integrated into active HVAC control strategies. Future research will focus on deep integration of the prediction model with intelligent control systems, using real-time forecasts as inputs for load balancing, indoor temperature–humidity optimization, and peak shaving operations. Prediction-driven control experiments will be designed to quantitatively assess the actual benefits in terms of energy savings, peak load reduction, and occupant comfort improvement. In addition, applicability across various building types and climate conditions will be explored to ensure multi-scenario robustness and scalability of the approach.

5. Conclusions

This study proposes a novel ICEEMDAN-Kmeans-VMD-CPO-CNN-BiLSTM-Attention model for predicting building cooling loads. The model demonstrates outstanding predictive accuracy, along with excellent robustness and generalization capabilities. The main conclusions are as follows:

(1) The proposed secondary modal decomposition method significantly outperforms both primary modal decomposition and non-decomposition methods in terms of predictive accuracy and stability. Its MAPE (3.2462%) and CV-RMSE (6.7212%) are reduced by 6.542% and 6.81%, respectively, compared to primary modal decomposition, and by 10.879% and 10.751% compared to the non-decomposition approach. The proposed dual-stage decomposition and dynamic clustering strategy effectively smooths and reduces the uncertainty in the model inputs.

(2) The CPO-CNN-BiLSTM-Attention model achieved excellent predictive results for building load data across different periods, with R², MAE, MAPE, and CV-RMSE reaching 0.9929, 31.0252, 3.2462%, and 6.7212%, respectively. This indicates that the proposed model can fully leverage the strengths of both the CNN and the BiLSTM.

(3) Feature engineering contributed more significantly to improving predictive accuracy than the attention mechanism. Feature engineering yielded an average accuracy improvement of 9.29%, while the attention mechanism provided a maximum improvement of 6.81%. Nevertheless, both components enhanced the model’s predictive performance.

(4) Both the CPO-CNN and CPO-BiLSTM models outperformed their unoptimized, single neural network counterparts across performance metrics. Their box plots of relative errors showed narrower interquartile ranges and fewer outliers, indicating that the CPO algorithm provides a reliable method for optimizing parameters in both CNN and BiLSTM models. In comparison with classical approaches such as GA, PSO, and BO, the CPO framework achieved higher optimization efficiency, reduced computational overhead, and quicker convergence, demonstrating its suitability for fine-tuning deep learning architectures of this type.

Future work will proceed along two primary pathways: validating the model’s generalizability across a wider range of buildings and multi-energy loads (cooling, heating, electricity, and gas), and exploring advanced data-driven structures for enhanced prediction accuracy. These efforts are aimed at transforming high-fidelity load prediction into a practical tool for optimizing building energy systems, thereby directly contributing to energy efficiency improvement and carbon neutrality.