Image Completion Network Considering Global and Local Information

Abstract

1. Introduction

2. Related Work

3. Methodology

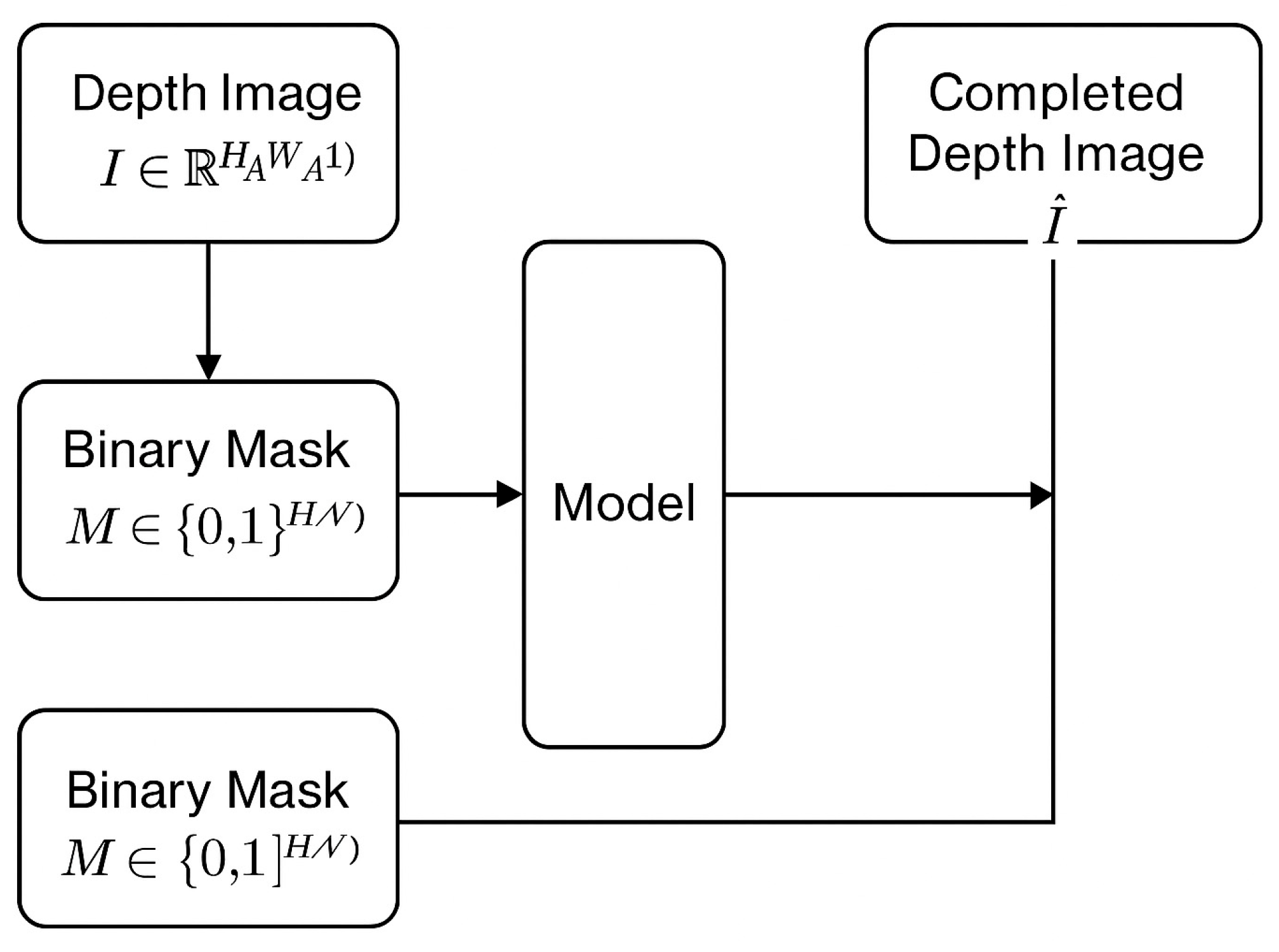

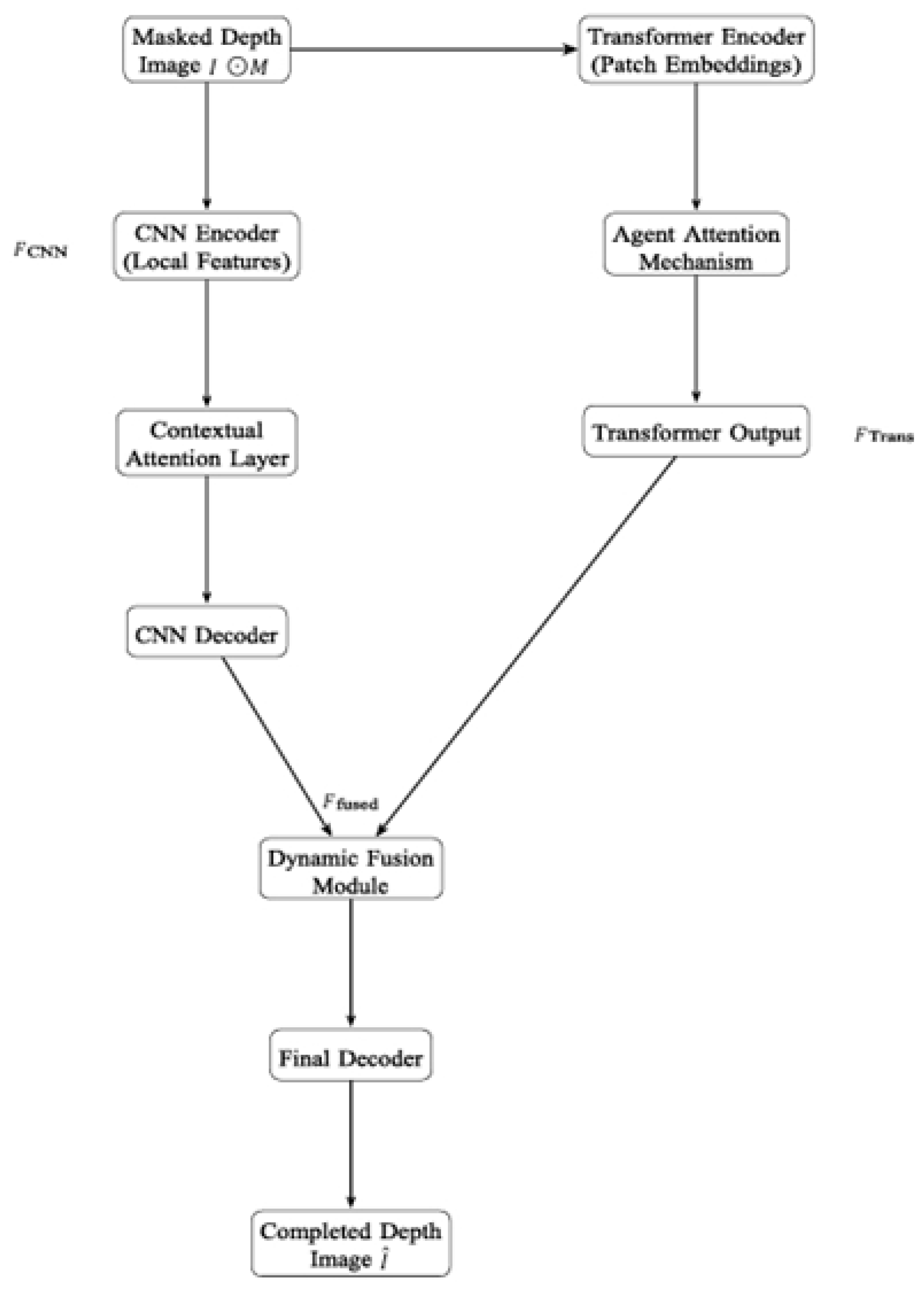

3.1. Method Overview

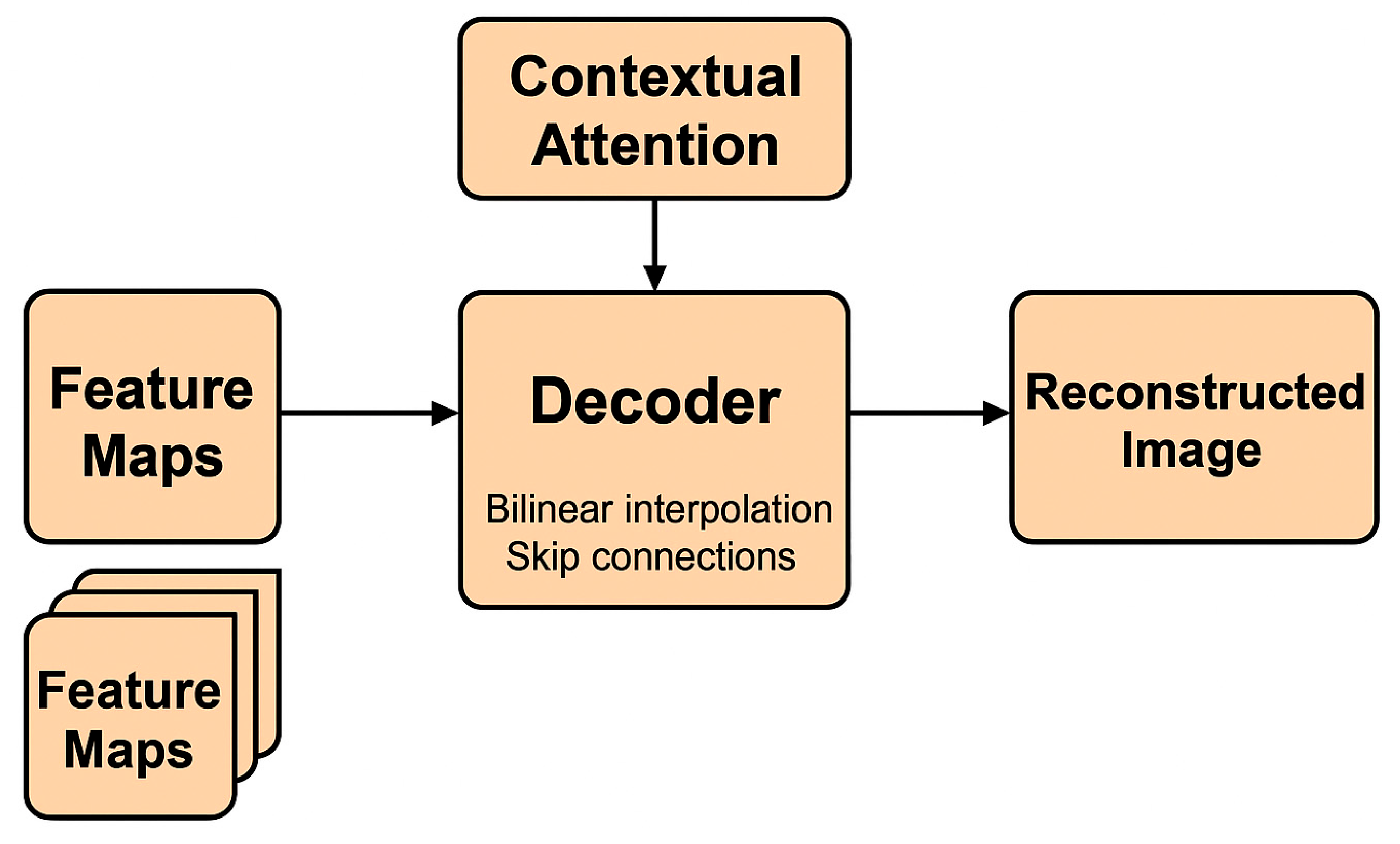

3.2. Local Information Modeling Network (CNN-Based)

3.3. Global Information Modeling Network

3.4. Loss Functions for Network Training
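Sections 3.4.1 to 3.4.4 name four loss terms: pixel-level reconstruction, perceptual, adversarial, and structural consistency. The symbols for these terms did not survive in this copy of the text. One common way to combine such terms is a weighted sum, shown here only as a sketch. The subscripts and the weights $\lambda$ are hypothetical placeholders, not the authors' notation or values.

```latex
\mathcal{L}_{\text{total}}
  = \lambda_{\text{rec}}\,\mathcal{L}_{\text{rec}}
  + \lambda_{\text{perc}}\,\mathcal{L}_{\text{perc}}
  + \lambda_{\text{adv}}\,\mathcal{L}_{\text{adv}}
  + \lambda_{\text{struct}}\,\mathcal{L}_{\text{struct}}
```

Under this assumption, each weight sets how strongly the corresponding term influences training relative to the others.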

3.4.1. Pixel-Level Reconstruction Loss

3.4.2. Perceptual Loss

3.4.3. Adversarial Loss

3.4.4. Structural Consistency Loss

4. Experiments and Analysis

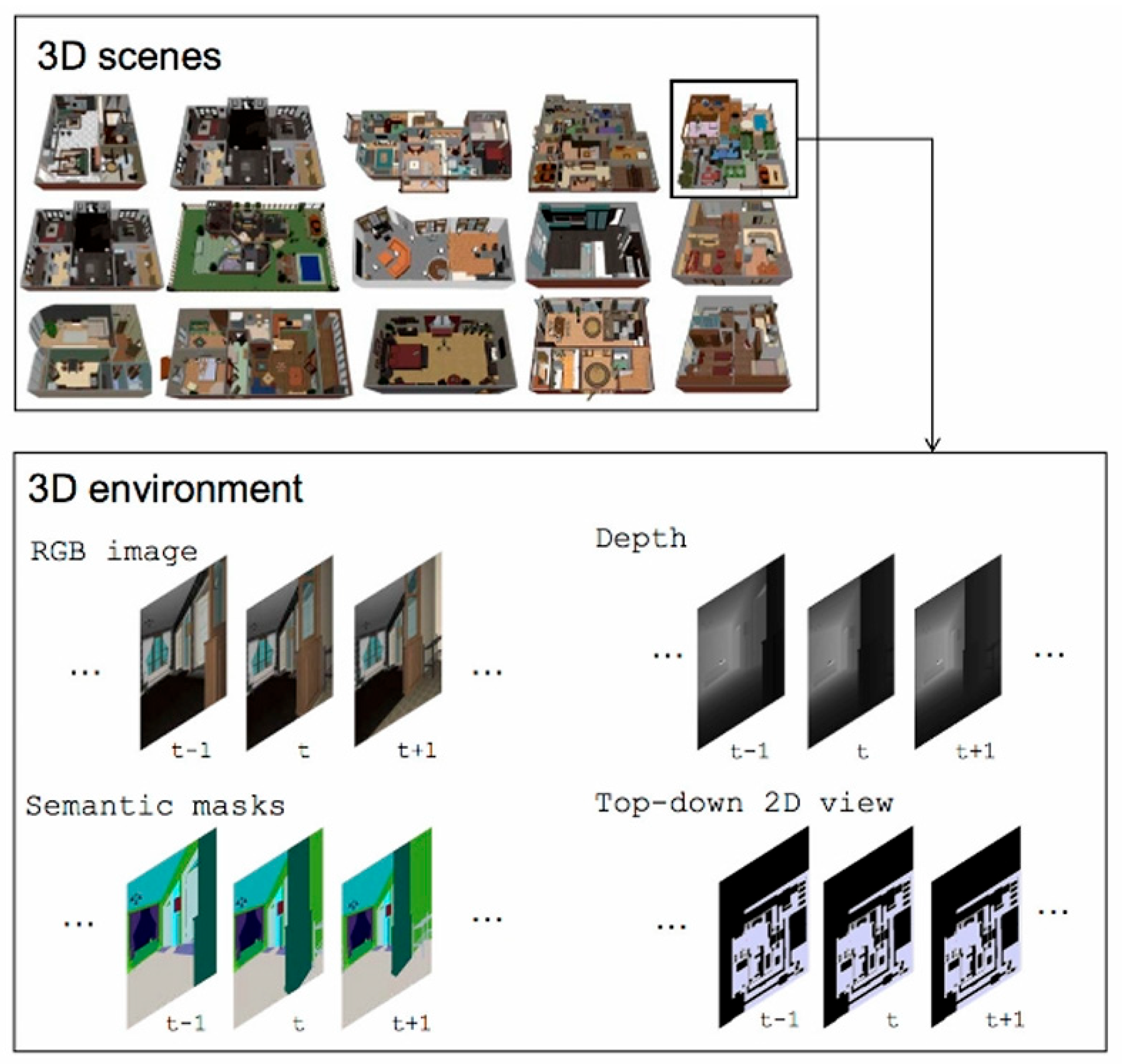

4.1. Dataset Description

4.2. Evaluation Metrics and Experimental Platform

4.2.1. Evaluation Metrics
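The result tables in this article report NMSE (%), PSNR (dB), SSIM, and LPIPS. As a minimal sketch of the two simplest metrics, the code below uses the common textbook definitions and assumes pixel values scaled to [0, 1] and images passed as flattened lists. The function names are hypothetical, and the exact definitions used in the article may differ.

```python
import math


def mse(reference, estimate):
    """Mean squared error between two equally long pixel sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def psnr_db(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    err = mse(reference, estimate)
    if err == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / err)


def nmse_percent(reference, estimate):
    """Squared error normalized by reference energy, in percent; lower is better."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must be of equal length")
    energy = sum(r * r for r in reference)
    if energy == 0.0:
        raise ValueError("reference has zero energy")
    squared_error = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return 100.0 * squared_error / energy


# Toy example: a flat white patch and a slightly darker reconstruction.
reference = [1.0] * 16
estimate = [0.9] * 16
print(round(psnr_db(reference, estimate), 3))
print(round(nmse_percent(reference, estimate), 3))
```

SSIM and LPIPS need local window statistics and a pretrained network respectively, so they are left out of this sketch.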

4.2.2. Experimental Platform

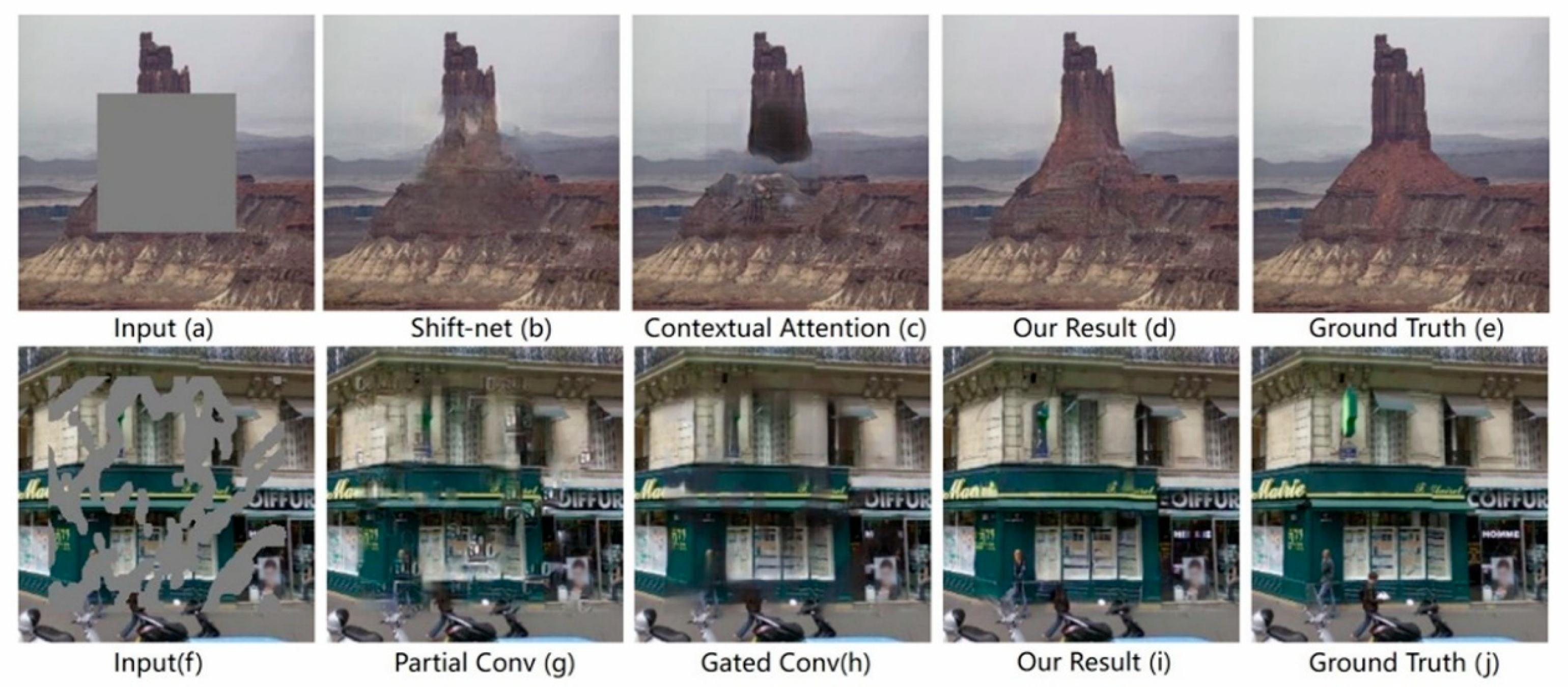

4.3. Comparison with Baseline Methods

4.3.1. Baseline Model Selection

4.3.2. Experimental Results and Analysis

4.4. Ablation Study of Modules

4.4.1. Experimental Design

4.4.2. Experimental Results and Analysis

4.5. Hyperparameter Analysis Experiments

4.5.1. Experimental Design

4.5.2. Experimental Results and Analysis

5. Applications, Discussion, and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Method | NMSE (%) ↓ | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|---|
| Edge-Connect | 3.49 | 30.28 | 0.939 | 0.0496 |
| LBAM | 2.75 | 31.44 | 0.949 | 0.0384 |
| PIC_Net | 7.36 | 23.61 | 0.850 | 0.1242 |
| BAT | 3.27 | 28.51 | 0.945 | 0.00335 |
| Proposed Model | 1.45 | 36.5595 | 0.98725 | 0.00794 |
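To make the comparison in the table above easier to check, the following sketch copies the reported values into a dictionary, picks the best method per metric, and computes the PSNR margin of the proposed model over the strongest baseline. The helper names are hypothetical and the numbers are taken as transcribed, so any transcription error in the table carries over.

```python
# Values copied from the baseline comparison table (as transcribed).
results = {
    "Edge-Connect": {"NMSE": 3.49, "PSNR": 30.28, "SSIM": 0.939, "LPIPS": 0.0496},
    "LBAM": {"NMSE": 2.75, "PSNR": 31.44, "SSIM": 0.949, "LPIPS": 0.0384},
    "PIC_Net": {"NMSE": 7.36, "PSNR": 23.61, "SSIM": 0.850, "LPIPS": 0.1242},
    "BAT": {"NMSE": 3.27, "PSNR": 28.51, "SSIM": 0.945, "LPIPS": 0.00335},
    "Proposed Model": {"NMSE": 1.45, "PSNR": 36.5595, "SSIM": 0.98725, "LPIPS": 0.00794},
}

# Arrow direction from the table header: True means higher is better.
HIGHER_IS_BETTER = {"NMSE": False, "PSNR": True, "SSIM": True, "LPIPS": False}


def best_method(metric):
    """Return the method name with the best value for the given metric."""
    pick = max if HIGHER_IS_BETTER[metric] else min
    return pick(results, key=lambda name: results[name][metric])


def psnr_gain_over_best_baseline(proposed="Proposed Model"):
    """PSNR difference (dB) between the proposed model and the best baseline."""
    best_baseline = max(
        scores["PSNR"] for name, scores in results.items() if name != proposed
    )
    return results[proposed]["PSNR"] - best_baseline


for metric in HIGHER_IS_BETTER:
    print(metric, "->", best_method(metric))
print("PSNR gain (dB):", round(psnr_gain_over_best_baseline(), 4))
```

With the values as transcribed, the proposed model leads on NMSE, PSNR, and SSIM, while the lowest LPIPS entry belongs to BAT.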

| Models | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| M1 | 36.557 | 0.98626 | 0.00796 |
| M2 | 36.555 | 0.98644 | 0.00799 |
| M3 | 36.4974 | 0.98624 | 0.00797 |
| M4 | 36.5595 | 0.98725 | 0.00794 |

| Transformer Layers | Batch Size | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ | Time (h) |
|---|---|---|---|---|---|
| 2 | 16 | 35.650 | 0.920 | 0.01284 | - |
| 4 | 16 | 36.557 | 0.98626 | 0.00796 | 28.3938 |
| 6 | 16 | 36.100 | 0.98325 | 0.01720 | - |
| 2 | 32 | - | - | - | - |
| 4 | 32 | 36.557 | 0.98626 | 0.00796 | - |
| 6 | 32 | - | - | - | - |
| 2 | 64 | - | - | - | - |
| 4 | 64 | - | - | - | - |
| 6 | 64 | - | - | - | - |

| Experimental Setting | PSNR (dB) ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| Transformer Layers = 2 | 35.650 | 0.92000 | 0.01284 |
| Transformer Layers = 4 | 36.557 | 0.98626 | 0.00796 |
| Transformer Layers = 6 | 36.100 | 0.98325 | 0.01720 |
| Learning Rate = 1 × 10⁻³ | 34.882 | 0.91136 | 0.01357 |
| Learning Rate = 1 × 10⁻⁴ | 36.557 | 0.98626 | 0.00796 |
| Learning Rate = 1 × 10⁻⁵ | 35.213 | 0.96142 | 0.01051 |
| Batch Size = 16 | 36.674 | 0.98648 | 0.00793 |
| Batch Size = 32 | 36.557 | 0.98626 | 0.00796 |
| Batch Size = 64 | 35.983 | 0.98497 | 0.01067 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Chen, K.; Penn, A. Image Completion Network Considering Global and Local Information. Buildings 2025, 15, 3746. https://doi.org/10.3390/buildings15203746
