Modelling Project Control System Effectiveness in Saudi Arabian Construction Project Delivery

Abstract

1. Introduction

- RQ1: How do organisational, human, and technological PCS determinants influence operational control determinants in construction projects?

- RQ2: How do operational control determinants influence project performance in construction projects?

- RQ3: To what extent do operational control determinants mediate the relationship between organisational, human, and technological PCS determinants and project performance?

- RQ4: Which areas should be prioritised to enhance PCS effectiveness in Saudi construction project delivery?

2. Literature Review

2.1. Overview of Project Control Systems in Construction

2.2. Review of the Existing Empirical Framework and Models of PCS

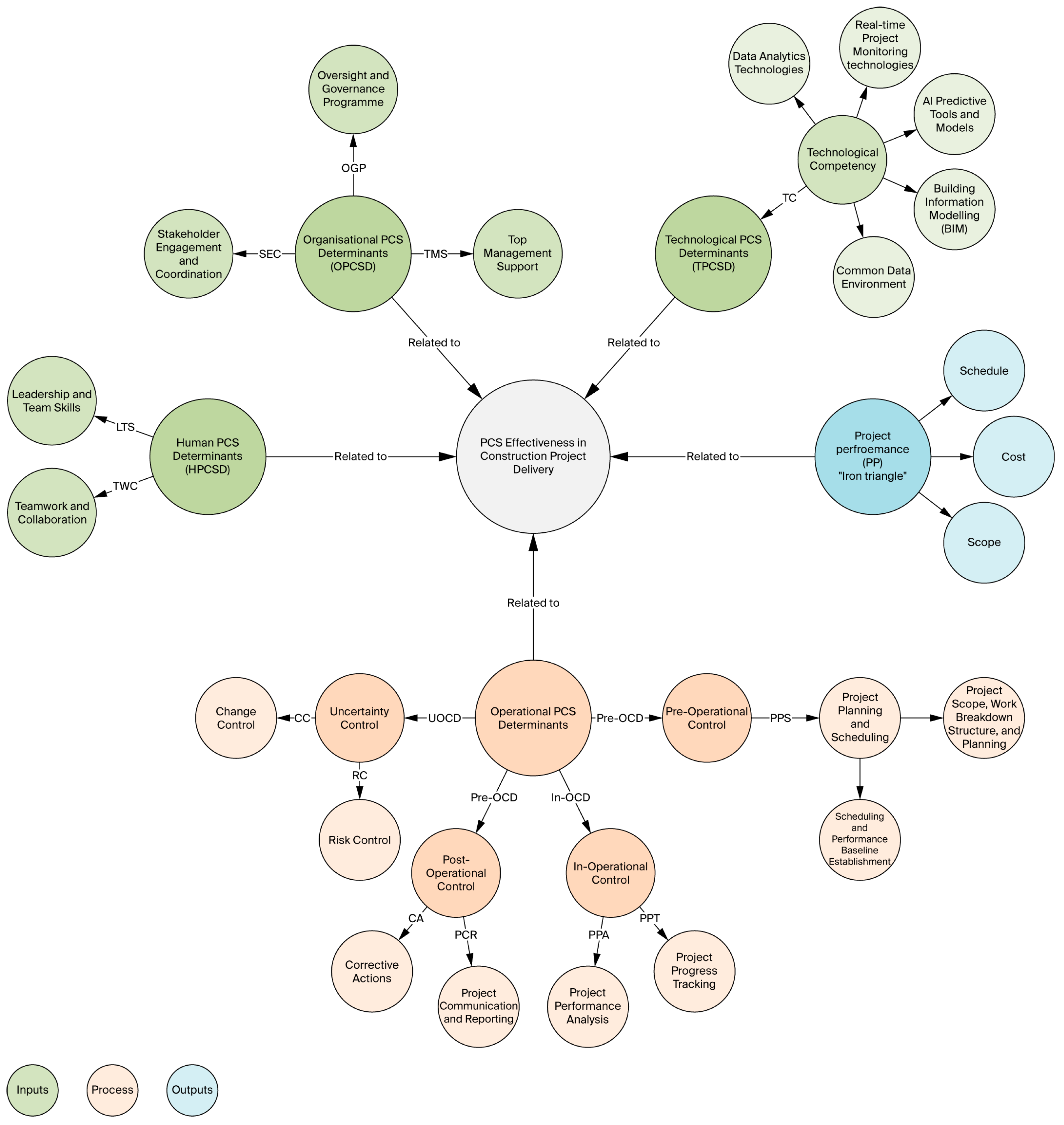

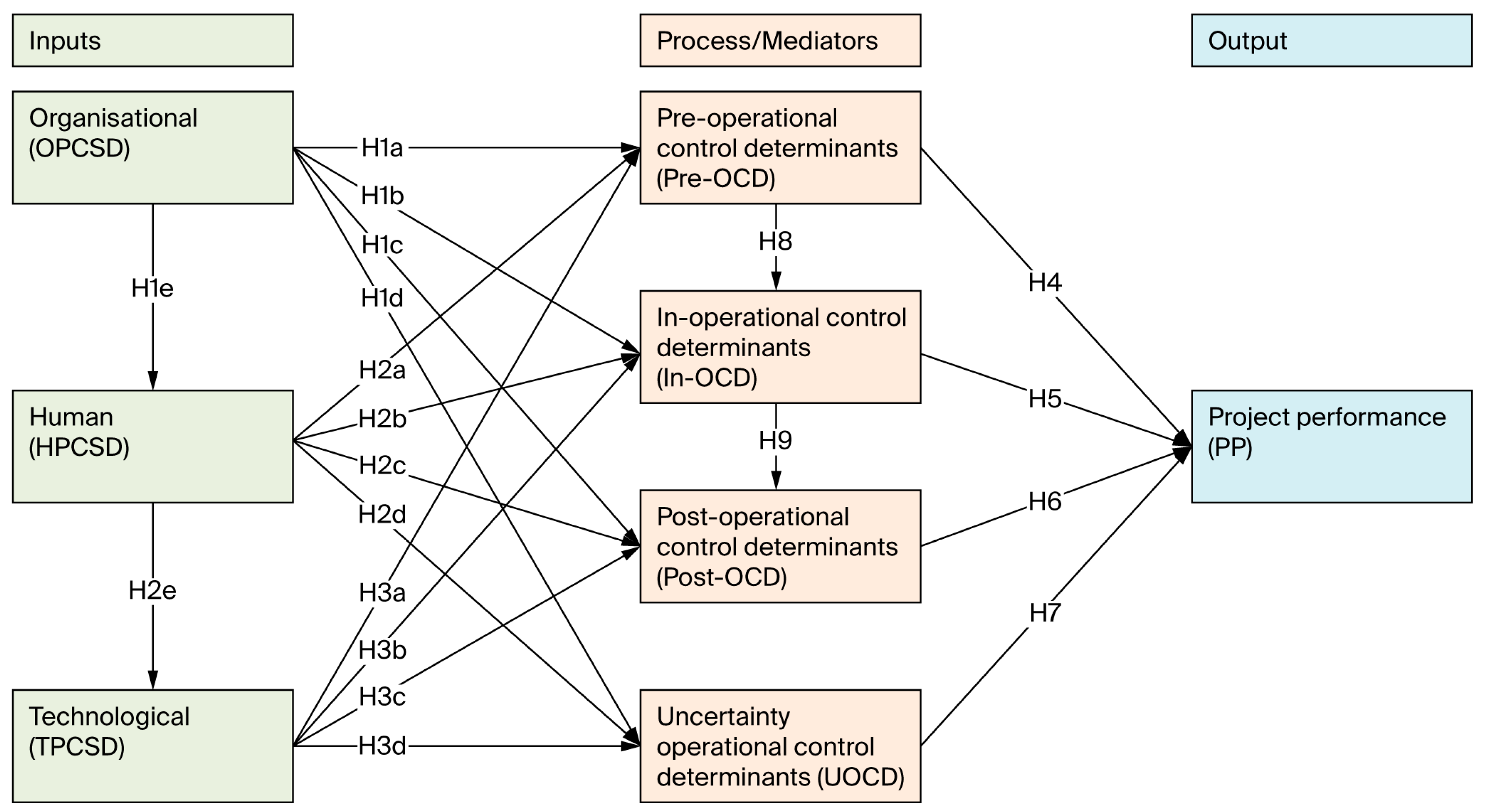

3. Conceptual Model and Hypotheses

3.1. Conceptual Model Overview

- Input determinants: Organisational (OPCSD), human (HPCSD), and technological (TPCSD) factors that collectively shape the environment for PCS implementation.

- Process constructs: Operational control mechanisms structured into four interrelated subcomponents, namely pre-operational (Pre-OCD), in-operational (In-OCD), post-operational (Post-OCD), and uncertainty-related control (UOCD), reflecting key functional stages of the project control cycle.

- Output construct: Project performance (PP), measured through adherence to time, cost, and scope targets, representing the direct outcome of effective control processes.
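The three groups of constructs can be written as a directed path model. The sketch below is illustrative only. It assumes the structural paths are exactly the direct relationships listed in the first table of Appendix C (H1–H9) and stores them as a source-to-targets mapping. It then uses Python's standard `graphlib` module for two things: to check that the model is recursive (free of feedback loops), as PLS-SEM requires, and to derive one input-to-output ordering of the constructs. The names `PATHS`, `predecessors` and `ipo_order` are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothesised direct paths (first table of Appendix C), written as source -> targets.
PATHS: dict[str, list[str]] = {
    "OPCSD": ["PP", "Pre-OCD", "In-OCD", "Post-OCD", "UOCD", "HPCSD"],  # H1, H1a-H1e
    "HPCSD": ["PP", "Pre-OCD", "In-OCD", "Post-OCD", "UOCD", "TPCSD"],  # H2, H2a-H2e
    "TPCSD": ["PP", "Pre-OCD", "In-OCD", "Post-OCD", "UOCD"],           # H3, H3a-H3d
    "Pre-OCD": ["PP", "In-OCD"],                                        # H4, H8
    "In-OCD": ["PP", "Post-OCD"],                                       # H5, H9
    "Post-OCD": ["PP"],                                                 # H6
    "UOCD": ["PP"],                                                     # H7
}


def predecessors(paths: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert source -> targets into the node -> predecessors form graphlib expects."""
    preds: dict[str, set[str]] = {}
    for source, targets in paths.items():
        preds.setdefault(source, set())
        for target in targets:
            preds.setdefault(target, set()).add(source)
    return preds


def ipo_order(paths: dict[str, list[str]]) -> list[str]:
    """Return one valid input -> process -> output ordering of the constructs.

    graphlib raises CycleError (a ValueError subclass) if the model is non-recursive.
    """
    return list(TopologicalSorter(predecessors(paths)).static_order())


if __name__ == "__main__":
    n_paths = sum(len(targets) for targets in PATHS.values())
    print(f"{n_paths} structural paths")
    print(" -> ".join(ipo_order(PATHS)))
```

Adding a hypothetical feedback path such as `PP -> OPCSD` would make `ipo_order` raise `graphlib.CycleError`. This offers a quick way to catch a mis-specified model before estimation.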

3.2. Hypothesis Development

3.2.1. H1: Positive Influence of Organisational PCS Determinants on Operational and Human Determinants

3.2.2. H2: Positive Influence of Human PCS Determinants on Operational and Technological Determinants

3.2.3. H3: Positive Influence of Technological PCS Determinants on Operational Control Determinants

3.2.4. H4–H7: Positive Influence of Operational Control Determinants on Project Performance

3.2.5. H8–H9: Sequential Nature of Operational Control

4. Methodology

4.1. Research Design

4.2. Instrument Development

4.3. Sample and Data Collection

4.4. Data Analysis Procedure

5. Results

5.1. Respondent and Project Profile

5.2. Measurement Model Evaluation

5.3. Structural Model Evaluation

5.3.1. Model Fit Assessment

5.3.2. Collinearity Diagnostics

5.3.3. Path Coefficients: Significance and Relevance

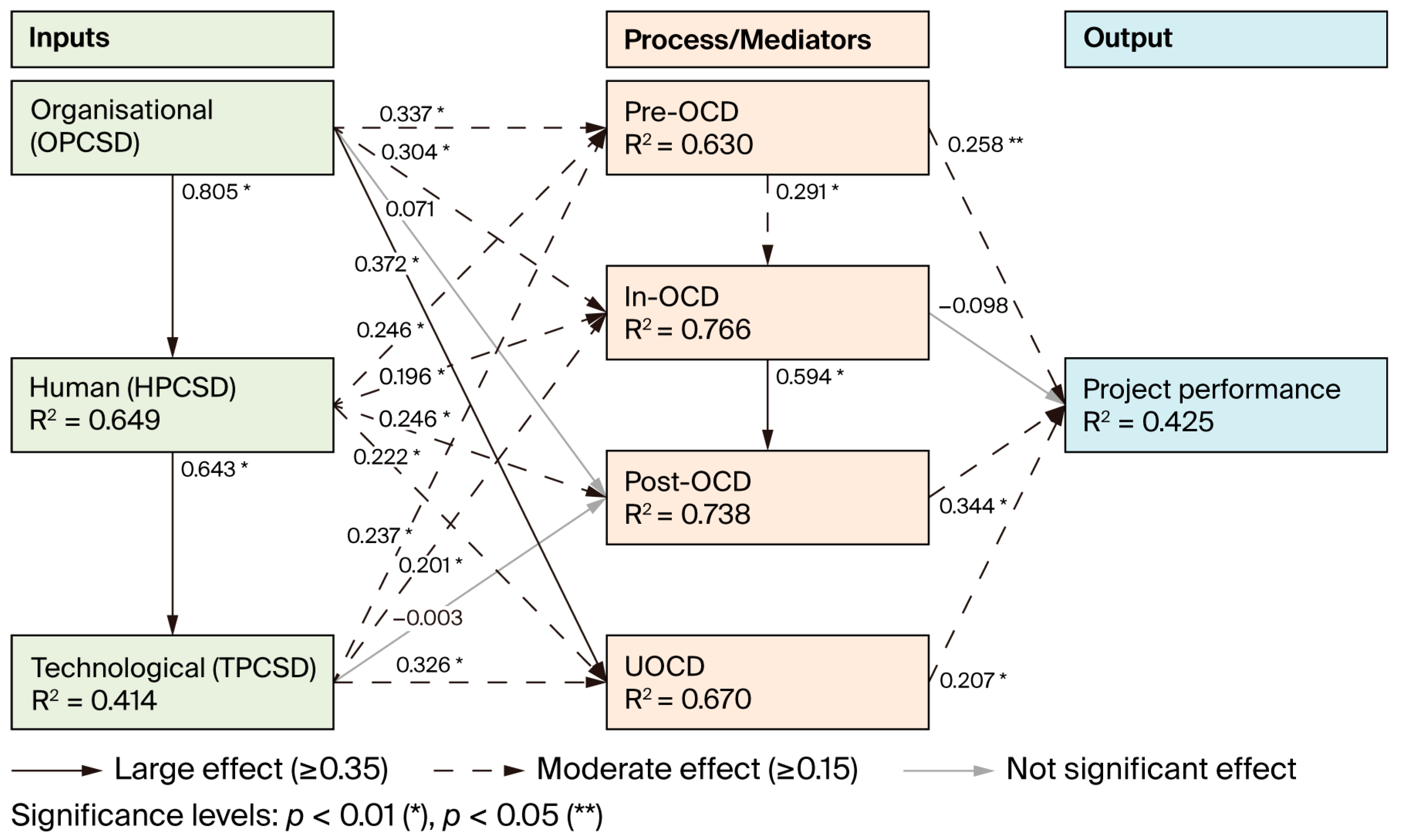

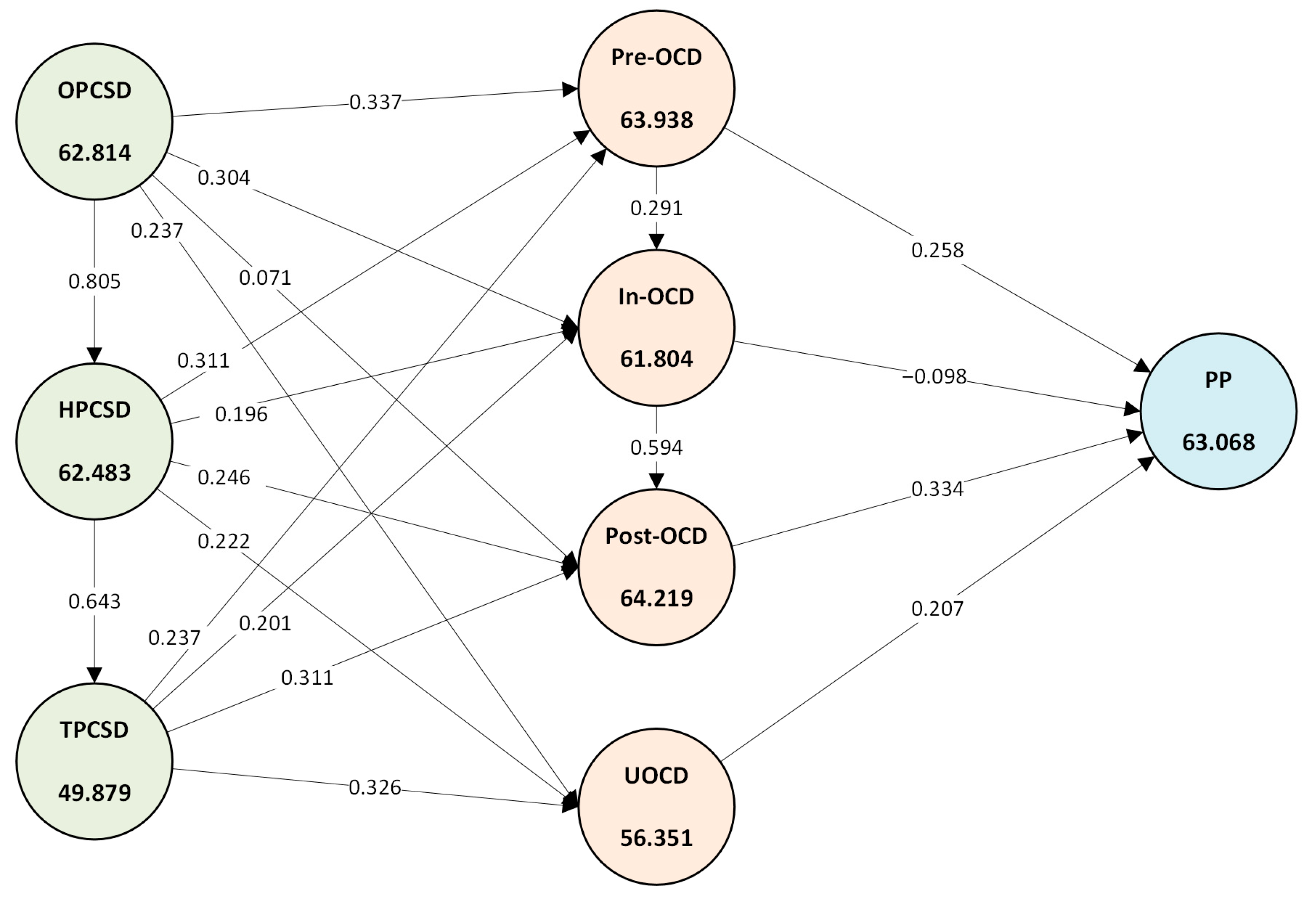

5.3.4. Coefficient of Determination (R2)

5.3.5. Predictive Relevance (Q2)

5.3.6. Effect Size (f2)

5.4. Mediation Analysis

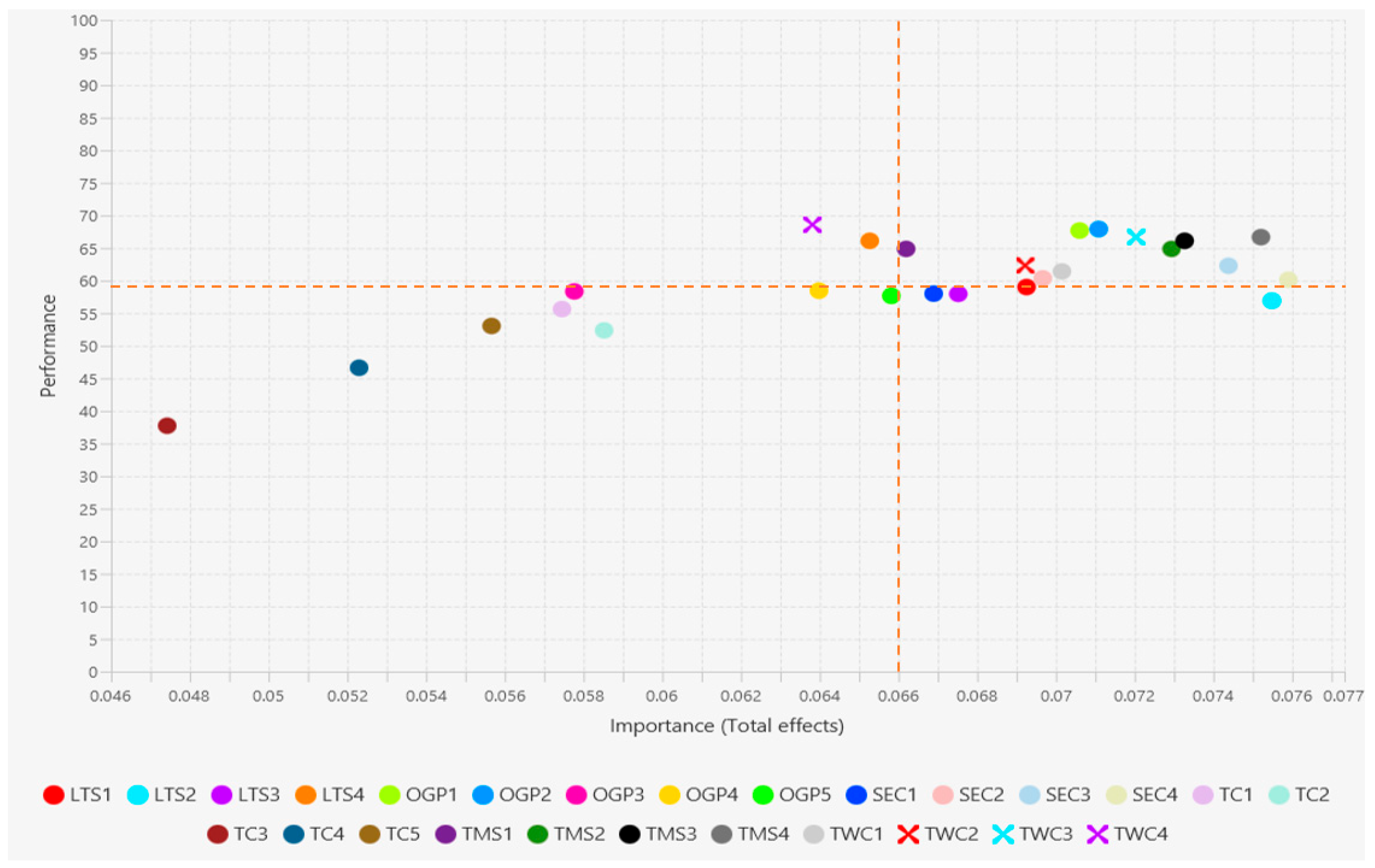

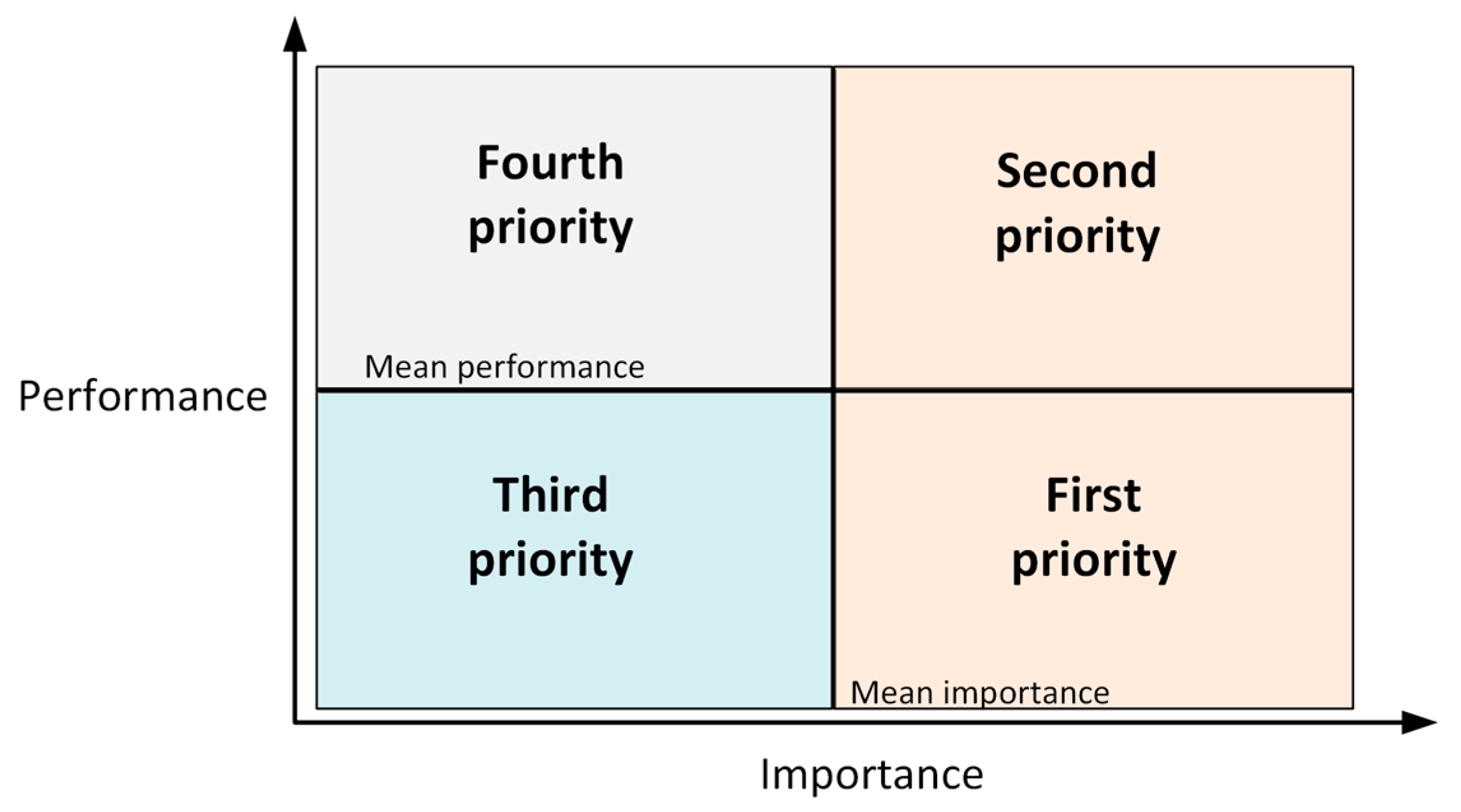

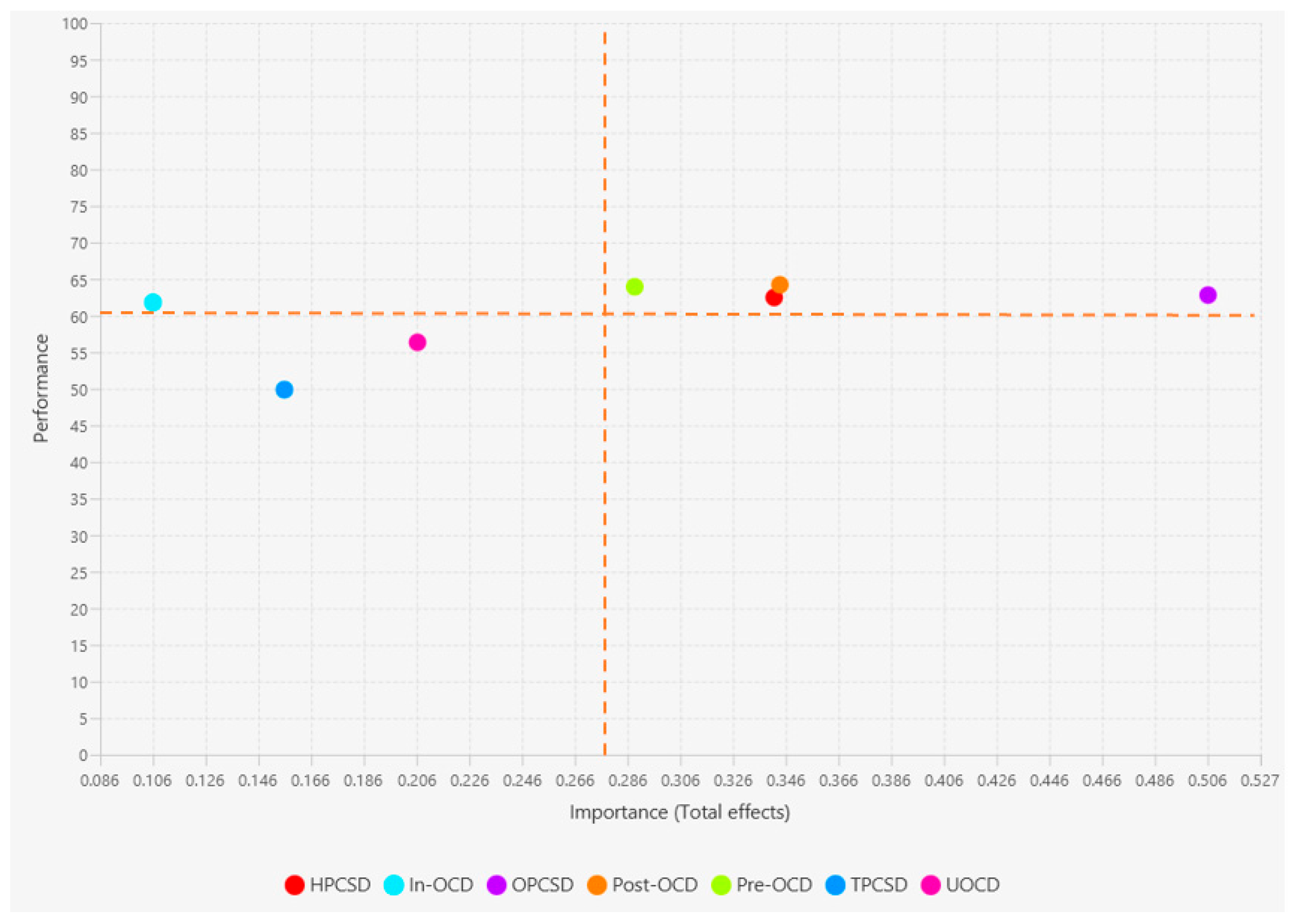

5.5. IPMA Results and Strategic Priorities

5.5.1. Construct-Level IPMA Results

5.5.2. Formulation of the Performance Model

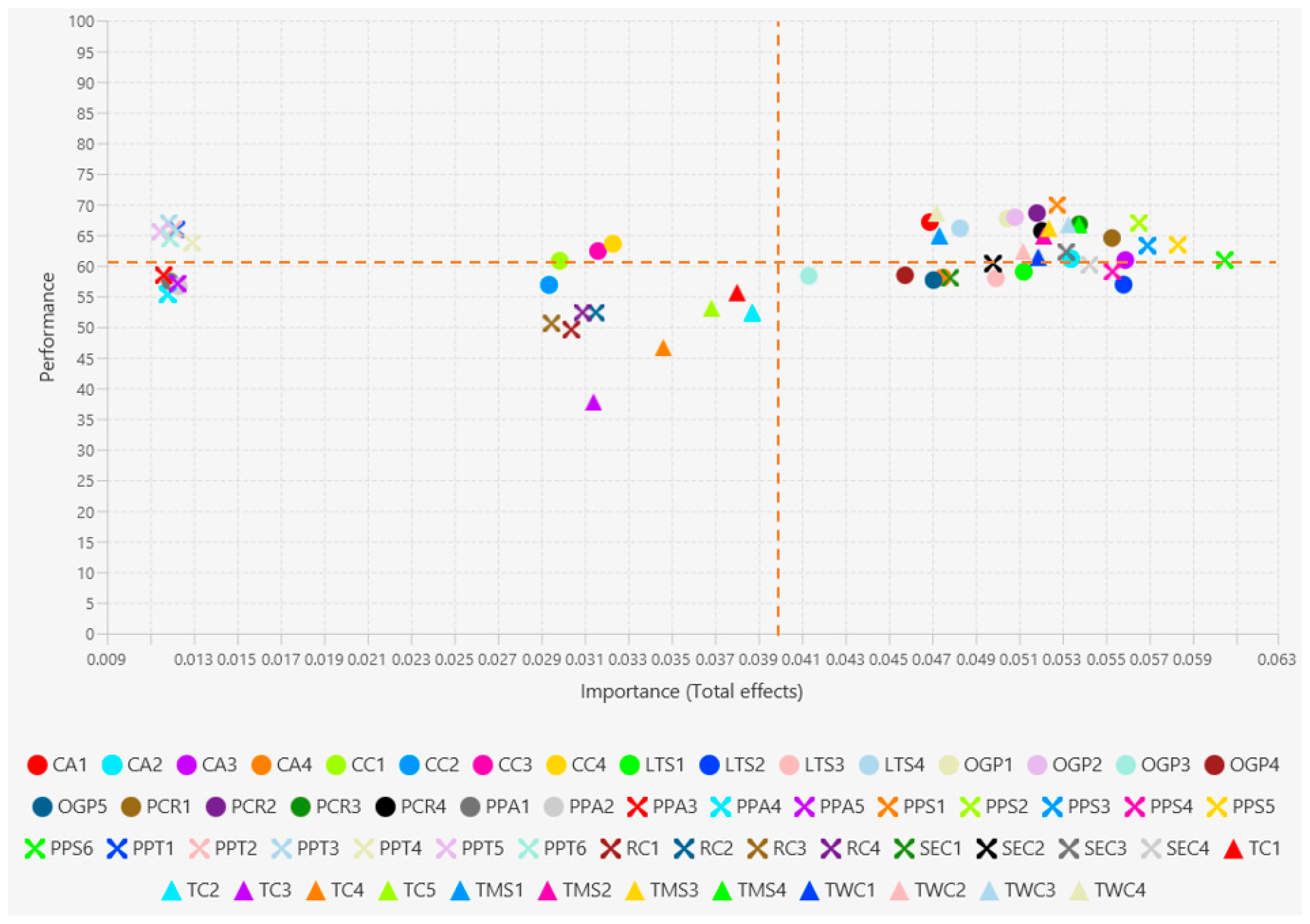

5.5.3. Indicator-Level IPMA Results

- LTS1, 2, and 3: Team members’ knowledge and level of expertise, their skills, and the manager’s technical and managerial capability.

- PPS4: Accuracy of project estimation.

- SEC1, 2, and 4: Assessment of stakeholder engagement, stakeholders’ understanding of their roles, and consistency and integration of control systems across stakeholders.

- OGP3, 4, and 5: PMO involvement, audit and compliance frequency, and the application of knowledge management for continuous improvement.

- CA4: Application of schedule compression techniques.
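The indicators listed above are the ones that the first table in Appendix D places in the first priority area. Across the Appendix D tables, the quadrant labels are consistent with a simple rule. Each indicator's importance (its total effect on the target construct) and performance (its 0–100 rescaled score) are compared with the column means given in each table's Mean row. The sketch below encodes that inferred rule. Two points are assumptions: treating a value equal to the mean as "high", and the quadrant descriptions in the comments, which give the usual IPMA reading rather than wording from the study.

```python
from typing import NamedTuple


class IpmaPoint(NamedTuple):
    indicator: str
    importance: float   # total effect on the target construct
    performance: float  # rescaled score, 0-100


def priority_quadrant(p: IpmaPoint, mean_importance: float, mean_performance: float) -> str:
    """Quadrant rule inferred from the Appendix D labels (ties count as 'high')."""
    high_importance = p.importance >= mean_importance
    high_performance = p.performance >= mean_performance
    if high_importance and not high_performance:
        return "First priority area"   # matters a lot, performs poorly
    if high_importance:
        return "Second priority area"  # matters a lot, already performs well
    if not high_performance:
        return "Third priority area"   # matters less, performs poorly
    return "Fourth priority area"      # matters less, performs well


# Sample rows and the Mean row of the first Appendix D table.
MEAN_IMPORTANCE, MEAN_PERFORMANCE = 0.040, 60.678
SAMPLE = [
    IpmaPoint("LTS2", 0.056, 56.907),
    IpmaPoint("PPS4", 0.055, 59.009),
    IpmaPoint("CA4", 0.047, 58.108),
    IpmaPoint("OGP3", 0.041, 58.333),
    IpmaPoint("PPS6", 0.060, 60.923),
    IpmaPoint("TC3", 0.031, 37.725),
    IpmaPoint("PPT5", 0.011, 65.541),
]

if __name__ == "__main__":
    for point in SAMPLE:
        print(point.indicator, priority_quadrant(point, MEAN_IMPORTANCE, MEAN_PERFORMANCE))
```

Because the thresholds are the means of each table, the same indicator can fall into different quadrants in different Appendix D tables.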

6. Discussion

6.1. Discussion of Key Findings

6.2. Theoretical Contributions

6.3. Practical Implications

7. Limitations and Future Research Recommendations

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PCS | Project Control System |
| PCSDs | Project Control System Determinants |
| IPO | Input–Process–Output |
| PLS-SEM | Partial Least Squares Structural Equation Modelling |
| IPMA | Importance–Performance Map Analysis |
| PP | Project Performance |
| OPCSD | Organisational Project Control System Determinant |
| HPCSD | Human Project Control System Determinant |
| TPCSD | Technological Project Control System Determinant |
| Pre-OCD | Pre-Operational Control Determinants |
| In-OCD | In-Operational Control Determinants |
| Post-OCD | Post-Operational Control Determinants |
| UOCD | Uncertainty Operational Control Determinants |
| TMS | Top Management Support |
| OGP | Oversight and Governance Programmes |
| PMO | Project Management Office |
| SEC | Stakeholder Engagement Coordination |
| TWC | Teamwork and Collaboration |
| LTS | Leadership and Team Skills |
| TC | Technological Competency |
| PPS | Project Planning and Scheduling |
| CC | Change Control |
| RC | Risk Control |
| PPT | Project Progress Tracking |
| PPA | Project Performance Analysis |
| PCR | Project Communication and Reporting |
| CA | Corrective Actions |

Appendix A. Pilot Study Respondents

| Variable | Category | Number | Percentage (%) | Cumulative Percentage (%) |
|---|---|---|---|---|
| Years of experience | 11–15 years | 3 | 8.8 | 8.8 |
| | 16–20 years | 8 | 23.5 | 32.4 |
| | 20 and above | 23 | 67.6 | 100.0 |
| Respondent’s position | Project manager level | 31 | 91.2 | 91.2 |
| | Contract manager | 3 | 8.8 | 100.0 |
| Project type | Commercial | 1 | 2.9 | 2.9 |
| | Industrial | 1 | 2.9 | 5.9 |
| | Infrastructure | 9 | 26.5 | 32.4 |
| | Residential | 19 | 55.9 | 88.2 |
| | Services | 4 | 11.8 | 100.0 |
| Project sector | Private | 11 | 32.4 | 32.4 |
| | Public | 23 | 67.6 | 100.0 |
| Organisation role | Client | 10 | 29.4 | 29.4 |
| | Consultant | 18 | 52.9 | 82.4 |
| | Contractor | 6 | 17.6 | 100.0 |

Appendix B. Descriptive Statistics

| Indicators | N | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|---|
| Schedule performance | 222 | 1 | 5 | 3.29 | 1.188 |
| Cost performance | 222 | 1 | 5 | 3.53 | 1.053 |
| Scope performance | 222 | 1 | 5 | 3.73 | 1.006 |
| TMS1 | 222 | 1 | 5 | 3.59 | 1.054 |
| TMS2 | 222 | 1 | 5 | 3.59 | 1.084 |
| TMS3 | 222 | 1 | 5 | 3.64 | 1.057 |
| TMS4 | 222 | 1 | 5 | 3.67 | 1.096 |
| OGP1 | 222 | 1 | 5 | 3.71 | 0.970 |
| OGP2 | 222 | 1 | 5 | 3.72 | 0.935 |
| OGP3 | 222 | 1 | 5 | 3.33 | 1.104 |
| OGP4 | 222 | 1 | 5 | 3.34 | 1.088 |
| OGP5 | 222 | 1 | 5 | 3.31 | 1.116 |
| SEC1 | 222 | 1 | 5 | 3.32 | 1.047 |
| SEC2 | 222 | 1 | 5 | 3.41 | 1.072 |
| SEC3 | 222 | 1 | 5 | 3.49 | 1.071 |
| SEC4 | 222 | 1 | 5 | 3.41 | 1.015 |
| TWC1 | 222 | 1 | 5 | 3.46 | 1.202 |
| TWC2 | 222 | 1 | 5 | 3.49 | 1.174 |
| TWC3 | 222 | 1 | 5 | 3.67 | 0.978 |
| TWC4 | 222 | 1 | 5 | 3.74 | 1.060 |
| LTS1 | 222 | 1 | 5 | 3.36 | 1.118 |
| LTS2 | 222 | 1 | 5 | 3.28 | 1.148 |
| LTS3 | 222 | 1 | 5 | 3.32 | 1.152 |
| LTS4 | 222 | 1 | 5 | 3.64 | 0.968 |
| TC1 | 222 | 1 | 5 | 3.23 | 1.094 |
| TC2 | 222 | 1 | 5 | 3.09 | 1.167 |
| TC3 | 222 | 1 | 5 | 2.51 | 1.297 |
| TC4 | 222 | 1 | 5 | 2.86 | 1.244 |
| TC5 | 222 | 1 | 5 | 3.12 | 1.137 |
| PPS1 | 222 | 1 | 5 | 3.80 | 0.965 |
| PPS2 | 222 | 1 | 5 | 3.68 | 0.923 |
| PPS3 | 222 | 1 | 5 | 3.53 | 0.935 |
| PPS4 | 222 | 1 | 5 | 3.36 | 1.053 |
| PPS5 | 222 | 1 | 5 | 3.54 | 0.935 |
| PPS6 | 222 | 1 | 5 | 3.44 | 0.995 |
| CC1 | 222 | 1 | 5 | 3.43 | 1.021 |
| CC2 | 222 | 1 | 5 | 3.27 | 1.200 |
| CC3 | 222 | 1 | 5 | 3.50 | 1.088 |
| CC4 | 222 | 1 | 5 | 3.54 | 1.079 |
| RC1 | 222 | 1 | 5 | 2.98 | 1.173 |
| RC2 | 222 | 1 | 5 | 3.09 | 1.167 |
| RC3 | 222 | 1 | 5 | 3.02 | 1.220 |
| RC4 | 222 | 1 | 5 | 3.09 | 1.107 |
| PPT1 | 222 | 1 | 5 | 3.64 | 1.037 |
| PPT2 | 222 | 1 | 5 | 3.64 | 1.005 |
| PPT3 | 222 | 1 | 5 | 3.68 | 0.952 |
| PPT4 | 222 | 1 | 5 | 3.55 | 0.930 |
| PPT5 | 222 | 1 | 5 | 3.62 | 0.971 |
| PPT6 | 222 | 1 | 5 | 3.58 | 0.957 |
| PPA1 | 222 | 1 | 5 | 3.30 | 1.077 |
| PPA2 | 222 | 1 | 5 | 3.27 | 1.079 |
| PPA3 | 222 | 1 | 5 | 3.34 | 1.096 |
| PPA4 | 222 | 1 | 5 | 3.21 | 1.116 |
| PPA5 | 222 | 1 | 5 | 3.28 | 1.053 |
| PCR1 | 222 | 1 | 5 | 3.58 | 0.984 |
| PCR2 | 222 | 1 | 5 | 3.74 | 0.980 |
| PCR3 | 222 | 1 | 5 | 3.67 | 1.005 |
| PCR4 | 222 | 1 | 5 | 3.63 | 1.016 |
| CA1 | 222 | 1 | 5 | 3.68 | 0.988 |
| CA2 | 222 | 1 | 5 | 3.45 | 1.078 |
| CA3 | 222 | 1 | 5 | 3.44 | 1.043 |
| CA4 | 222 | 1 | 5 | 3.32 | 1.086 |
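The IPMA performance scores in Appendix D appear to be these indicator means linearly rescaled from the 1–5 response scale to 0–100, that is (mean − 1)/(5 − 1) × 100. The sketch below assumes that rescaling and checks it against a few indicators. It reproduces the reported scores to within the rounding error that two-decimal means allow (±0.005 × 25 = ±0.125 points). The names `rescale_to_100` and `CHECKS` are hypothetical.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # five-point scale; every indicator above spans 1 to 5


def rescale_to_100(mean: float, lo: float = SCALE_MIN, hi: float = SCALE_MAX) -> float:
    """Linearly map a scale mean onto 0-100, as used for IPMA performance scores."""
    return (mean - lo) / (hi - lo) * 100


# (Appendix B mean, Appendix D performance) for a few indicators.
CHECKS = {
    "LTS2": (3.28, 56.907),
    "SEC4": (3.41, 60.135),
    "TC3": (2.51, 37.725),
    "PPS1": (3.80, 69.932),
}

# Means carry two decimals (+/-0.005); rescaling multiplies that error by 100 / 4 = 25.
TOLERANCE = 0.005 * 100 / (SCALE_MAX - SCALE_MIN)

if __name__ == "__main__":
    for name, (mean, reported) in CHECKS.items():
        score = rescale_to_100(mean)
        print(f"{name}: rescaled {score:.3f}, reported {reported:.3f}, gap {abs(score - reported):.3f}")
```

Because the rescaling is linear, rescaling the mean gives the same result as averaging rescaled responses. The small gaps therefore reflect only the rounding of the tabulated means.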

Appendix C. Mediation Analysis

| H | Relationships | Path Coefficient (B) | T Statistics (\|O/STDEV\|) | p Values | Significance (p < 0.05) |
|---|---|---|---|---|---|
| H1 | OPCSD → PP | 0.185 | 1.526 | 0.064 | Insignificant |
| H1a | OPCSD → Pre-OCD | 0.337 | 4.288 | 0.000 | Significant |
| H1b | OPCSD → In-OCD | 0.304 | 4.675 | 0.000 | Significant |
| H1c | OPCSD → Post-OCD | 0.071 | 0.883 | 0.189 | Insignificant |
| H1d | OPCSD → UOCD | 0.371 | 4.556 | 0.000 | Significant |
| H1e | OPCSD → HPCSD | 0.805 | 31.152 | 0.000 | Significant |
| H2 | HPCSD → PP | 0.062 | 0.491 | 0.312 | Insignificant |
| H2a | HPCSD → Pre-OCD | 0.312 | 3.712 | 0.000 | Significant |
| H2b | HPCSD → In-OCD | 0.196 | 2.694 | 0.004 | Significant |
| H2c | HPCSD → Post-OCD | 0.246 | 3.049 | 0.001 | Significant |
| H2d | HPCSD → UOCD | 0.223 | 2.856 | 0.002 | Significant |
| H2e | HPCSD → TPCSD | 0.643 | 13.863 | 0.000 | Significant |
| H3 | TPCSD → PP | 0.036 | 0.266 | 0.395 | Insignificant |
| H3a | TPCSD → Pre-OCD | 0.237 | 3.816 | 0.000 | Significant |
| H3b | TPCSD → In-OCD | 0.201 | 3.215 | 0.001 | Significant |
| H3c | TPCSD → Post-OCD | −0.003 | 0.069 | 0.473 | Insignificant |
| H3d | TPCSD → UOCD | 0.326 | 4.441 | 0.000 | Significant |
| H4 | Pre-OCD → PP | 0.202 | 1.61 | 0.054 | Insignificant |
| H5 | In-OCD → PP | −0.191 | 1.328 | 0.092 | Insignificant |
| H6 | Post-OCD → PP | 0.299 | 2.732 | 0.003 | Significant |
| H7 | UOCD → PP | 0.15 | 1.304 | 0.096 | Insignificant |
| H8 | Pre-OCD → In-OCD | 0.291 | 4.112 | 0.000 | Significant |
| H9 | In-OCD → Post-OCD | 0.594 | 8.565 | 0.000 | Significant |
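The t statistics and p values in this table are consistent with one-tailed tests of the directional hypotheses. For each t statistic, the upper-tail standard-normal probability matches the reported p value to within rounding; that normal distribution is used here as an assumed large-sample stand-in for the bootstrap t distribution. Two-tailed values would be roughly double. The sketch below checks a few rows and applies the table's p < 0.05 criterion. With p = 0.096, H7 falls short of it. The names `one_tailed_p` and `ROWS` are hypothetical.

```python
import math

ALPHA = 0.05  # significance criterion used in the table (p < 0.05)


def one_tailed_p(t_stat: float) -> float:
    """Upper-tail p value for a bootstrap t statistic, using a standard-normal approximation."""
    return 0.5 * math.erfc(abs(t_stat) / math.sqrt(2))


# (hypothesis, t statistic, reported p value) taken from the table above.
ROWS = [
    ("H1", 1.526, 0.064),
    ("H2b", 2.694, 0.004),
    ("H4", 1.61, 0.054),
    ("H6", 2.732, 0.003),
    ("H7", 1.304, 0.096),
]

if __name__ == "__main__":
    for hypothesis, t_stat, reported in ROWS:
        p = one_tailed_p(t_stat)
        verdict = "Significant" if p < ALPHA else "Insignificant"
        print(f"{hypothesis}: p = {p:.4f} (reported {reported:.3f}) -> {verdict}")
```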

| H | Relationships | Path Coefficient (B) | p Values | Significance (p < 0.05) | Mediation Type |
|---|---|---|---|---|---|
| H10a | OPCSD → Pre-OCD → PP | 0.087 | 0.028 | Significant | Full mediation |
| H10b | HPCSD → Pre-OCD → PP | 0.080 | 0.043 | Significant | Full mediation |
| H10c | TPCSD → Pre-OCD → PP | 0.061 | 0.021 | Significant | Full mediation |
| H11a | OPCSD → In-OCD → PP | −0.030 | 0.231 | Insignificant | Not supported |
| H11b | HPCSD → In-OCD → PP | −0.019 | 0.244 | Insignificant | Not supported |
| H11c | TPCSD → In-OCD → PP | −0.020 | 0.239 | Insignificant | Not supported |
| H12a | OPCSD → Post-OCD → PP | 0.024 | 0.203 | Insignificant | Not supported |
| H12b | HPCSD → Post-OCD → PP | 0.085 | 0.028 | Significant | Full mediation |
| H12c | TPCSD → Post-OCD → PP | −0.001 | 0.473 | Insignificant | Not supported |
| H14a | OPCSD → UOCD → PP | 0.077 | 0.028 | Significant | Full mediation |
| H14b | HPCSD → UOCD → PP | 0.046 | 0.018 | Significant | Full mediation |
| H14c | TPCSD → UOCD → PP | 0.067 | 0.027 | Significant | Full mediation |
| H15a | In-OCD → Post-OCD → PP | 0.205 | 0.001 | Significant | Full mediation |
| H15b | Pre-OCD → In-OCD → PP | −0.029 | 0.243 | Insignificant | Not supported |
| H15c | Pre-OCD → In-OCD → Post-OCD → PP | 0.059 | 0.005 | Significant | Full mediation |
| H16a | OPCSD → Pre-OCD → In-OCD → PP | −0.01 | 0.257 | Insignificant | Not supported |
| H16b | HPCSD → Pre-OCD → In-OCD → PP | −0.009 | 0.256 | Insignificant | Not supported |
| H16c | TPCSD → Pre-OCD → In-OCD → PP | −0.007 | 0.248 | Insignificant | Not supported |
| H17a | OPCSD → In-OCD → Post-OCD → PP | 0.062 | 0.004 | Significant | Full mediation |
| H17b | HPCSD → In-OCD → Post-OCD → PP | 0.04 | 0.027 | Significant | Full mediation |
| H17c | TPCSD → In-OCD → Post-OCD → PP | 0.041 | 0.015 | Significant | Full mediation |
| H18a | OPCSD → Pre-OCD → In-OCD → Post-OCD → PP | 0.020 | 0.017 | Significant | Full mediation |
| H18b | HPCSD → Pre-OCD → In-OCD → Post-OCD → PP | 0.019 | 0.021 | Significant | Full mediation |
| H18c | TPCSD → Pre-OCD → In-OCD → Post-OCD → PP | 0.014 | 0.016 | Significant | Full mediation |
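The Mediation Type column matches a common decision rule. A significant indirect effect (the product of the path coefficients along the chain) combined with an insignificant direct effect indicates full mediation. A significant indirect effect alongside a significant direct effect indicates partial mediation. An insignificant indirect effect means the mediation hypothesis is not supported. The sketch below encodes that rule and assumes that the relevant direct-effect p values are those of the paths to PP in the first table of this appendix (H1–H5). For the sample rows, it reproduces the reported labels. Partial mediation never arises here, because none of those direct paths is significant at p < 0.05. The names `mediation_type`, `DIRECT_P` and `ROWS` are hypothetical.

```python
ALPHA = 0.05

# Direct-path p values to PP from the first table of this appendix (H1-H5).
DIRECT_P = {
    "OPCSD": 0.064,    # H1
    "HPCSD": 0.312,    # H2
    "TPCSD": 0.395,    # H3
    "Pre-OCD": 0.054,  # H4
    "In-OCD": 0.092,   # H5
}


def mediation_type(indirect_p: float, direct_p: float, alpha: float = ALPHA) -> str:
    """Label a mediation hypothesis from the significance of its indirect and direct effects."""
    if indirect_p >= alpha:
        return "Not supported"      # no significant indirect effect
    if direct_p >= alpha:
        return "Full mediation"     # only the indirect effect is significant
    return "Partial mediation"      # both effects are significant


# (hypothesis, source construct, indirect-effect p value, reported label)
ROWS = [
    ("H10a", "OPCSD", 0.028, "Full mediation"),
    ("H11a", "OPCSD", 0.231, "Not supported"),
    ("H12b", "HPCSD", 0.028, "Full mediation"),
    ("H15a", "In-OCD", 0.001, "Full mediation"),
    ("H15c", "Pre-OCD", 0.005, "Full mediation"),
    ("H18c", "TPCSD", 0.016, "Full mediation"),
]

if __name__ == "__main__":
    for hypothesis, source, indirect_p, reported in ROWS:
        label = mediation_type(indirect_p, DIRECT_P[source])
        print(f"{hypothesis}: {label} (reported: {reported})")
```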

Appendix D. Importance–Performance Values

| Indicators | Importance | Performance | Improvement Priority Quadrants |
|---|---|---|---|
| PPS6 | 0.060 | 60.923 | Second priority area |
| PPS5 | 0.058 | 63.401 | Second priority area |
| PPS3 | 0.057 | 63.288 | Second priority area |
| PPS2 | 0.056 | 67.005 | Second priority area |
| CA3 | 0.056 | 60.923 | Second priority area |
| LTS2 | 0.056 | 56.907 | First priority area |
| PPS4 | 0.055 | 59.009 | First priority area |
| PCR1 | 0.055 | 64.527 | Second priority area |
| SEC4 | 0.054 | 60.135 | First priority area |
| PCR3 | 0.054 | 66.779 | Second priority area |
| TMS4 | 0.054 | 66.667 | Second priority area |
| CA2 | 0.053 | 61.149 | Second priority area |
| TWC3 | 0.053 | 66.667 | Second priority area |
| SEC3 | 0.053 | 62.275 | Second priority area |
| PPS1 | 0.053 | 69.932 | Second priority area |
| TMS3 | 0.052 | 66.104 | Second priority area |
| TMS2 | 0.052 | 64.865 | Second priority area |
| PCR4 | 0.052 | 65.653 | Second priority area |
| TWC1 | 0.052 | 61.411 | Second priority area |
| PCR2 | 0.052 | 68.581 | Second priority area |
| LTS1 | 0.051 | 59.009 | First priority area |
| TWC2 | 0.051 | 62.312 | Second priority area |
| OGP2 | 0.051 | 67.905 | Second priority area |
| OGP1 | 0.050 | 67.680 | Second priority area |
| LTS3 | 0.050 | 57.958 | First priority area |
| SEC2 | 0.050 | 60.360 | First priority area |
| LTS4 | 0.048 | 66.104 | Second priority area |
| SEC1 | 0.048 | 57.995 | First priority area |
| CA4 | 0.047 | 58.108 | First priority area |
| TMS1 | 0.047 | 64.865 | Second priority area |
| TWC4 | 0.047 | 68.581 | Second priority area |
| OGP5 | 0.047 | 57.658 | First priority area |
| CA1 | 0.047 | 67.117 | Second priority area |
| OGP4 | 0.046 | 58.446 | First priority area |
| OGP3 | 0.041 | 58.333 | First priority area |
| TC2 | 0.039 | 52.365 | Third priority area |
| TC1 | 0.038 | 55.631 | Third priority area |
| TC5 | 0.037 | 53.041 | Third priority area |
| TC4 | 0.034 | 46.622 | Third priority area |
| CC4 | 0.032 | 63.572 | Fourth priority area |
| CC3 | 0.031 | 62.387 | Fourth priority area |
| RC2 | 0.031 | 52.365 | Third priority area |
| TC3 | 0.031 | 37.725 | Third priority area |
| RC4 | 0.031 | 52.365 | Third priority area |
| RC1 | 0.030 | 49.550 | Third priority area |
| CC1 | 0.030 | 60.811 | Fourth priority area |
| RC3 | 0.029 | 50.563 | Third priority area |
| CC2 | 0.029 | 56.869 | Third priority area |
| PPT4 | 0.013 | 63.739 | Fourth priority area |
| PPA2 | 0.012 | 56.644 | Third priority area |
| PPA5 | 0.012 | 57.095 | Third priority area |
| PPT1 | 0.012 | 65.878 | Fourth priority area |
| PPT2 | 0.012 | 65.991 | Fourth priority area |
| PPA1 | 0.012 | 57.432 | Third priority area |
| PPT6 | 0.012 | 64.414 | Fourth priority area |
| PPT3 | 0.012 | 67.005 | Fourth priority area |
| PPA4 | 0.012 | 55.293 | Third priority area |
| PPA3 | 0.011 | 58.446 | Third priority area |
| PPT5 | 0.011 | 65.541 | Fourth priority area |
| Mean | 0.040 | 60.678 | |

| Indicators | Importance | Performance | Improvement Priority Quadrants |
|---|---|---|---|
| SEC4 | 0.087 | 60.135 | Second priority area |
| LTS2 | 0.075 | 56.907 | First priority area |
| TMS4 | 0.075 | 66.667 | Second priority area |
| SEC3 | 0.074 | 62.275 | Second priority area |
| TMS3 | 0.073 | 66.104 | Second priority area |
| TMS2 | 0.073 | 64.865 | Second priority area |
| TWC3 | 0.072 | 66.667 | Second priority area |
| OGP2 | 0.071 | 67.905 | Second priority area |
| OGP1 | 0.071 | 67.680 | Second priority area |
| TWC1 | 0.070 | 61.411 | Second priority area |
| SEC2 | 0.070 | 60.360 | Second priority area |
| LTS1 | 0.069 | 59.009 | First priority area |
| TWC2 | 0.069 | 62.312 | Second priority area |
| LTS3 | 0.067 | 57.958 | First priority area |
| SEC1 | 0.067 | 57.995 | First priority area |
| TMS1 | 0.066 | 64.865 | Second priority area |
| OGP5 | 0.066 | 57.658 | First priority area |
| LTS4 | 0.065 | 66.104 | Fourth priority area |
| OGP4 | 0.064 | 58.446 | Third priority area |
| TWC4 | 0.064 | 68.581 | Fourth priority area |
| TC2 | 0.058 | 52.365 | Third priority area |
| OGP3 | 0.058 | 58.333 | Third priority area |
| TC1 | 0.057 | 55.631 | Third priority area |
| TC5 | 0.056 | 53.041 | Third priority area |
| TC4 | 0.052 | 46.622 | Third priority area |
| TC3 | 0.047 | 37.725 | Third priority area |
| Mean | 0.066 | 59.908 | |

| Indicators | Importance | Performance | Improvement Priority Quadrants |
|---|---|---|---|
| SEC4 | 0.082 | 60.135 | First priority area |
| TMS4 | 0.082 | 66.667 | Second priority area |
| SEC3 | 0.081 | 62.275 | Second priority area |
| TMS3 | 0.080 | 66.104 | Second priority area |
| TMS2 | 0.079 | 64.865 | Second priority area |
| OGP2 | 0.077 | 67.905 | Second priority area |
| OGP1 | 0.077 | 67.680 | Second priority area |
| SEC2 | 0.076 | 60.360 | First priority area |
| LTS2 | 0.075 | 56.907 | First priority area |
| SEC1 | 0.073 | 57.995 | First priority area |
| TMS1 | 0.072 | 64.865 | Second priority area |
| OGP5 | 0.071 | 57.658 | First priority area |
| TWC3 | 0.071 | 66.667 | Second priority area |
| TWC1 | 0.069 | 61.411 | Second priority area |
| OGP4 | 0.069 | 58.446 | First priority area |
| LTS1 | 0.069 | 59.009 | First priority area |
| TWC2 | 0.069 | 62.312 | Second priority area |
| LTS3 | 0.067 | 57.958 | Third priority area |
| TC2 | 0.067 | 52.365 | Third priority area |
| TC1 | 0.065 | 55.631 | Third priority area |
| LTS4 | 0.065 | 66.104 | Fourth priority area |
| TC5 | 0.063 | 53.041 | Third priority area |
| TWC4 | 0.063 | 68.581 | Fourth priority area |
| OGP3 | 0.063 | 58.333 | Third priority area |
| PPS6 | 0.061 | 60.923 | Fourth priority area |
| TC4 | 0.059 | 46.622 | Third priority area |
| PPS5 | 0.058 | 63.401 | Fourth priority area |
| PPS3 | 0.057 | 63.288 | Fourth priority area |
| PPS2 | 0.057 | 67.005 | Fourth priority area |
| PPS4 | 0.055 | 59.009 | Third priority area |
| TC3 | 0.054 | 37.725 | Third priority area |
| PPS1 | 0.053 | 69.932 | Fourth priority area |
| Mean | 0.068 | 60.662 | |

| Indicators | Importance | Performance | Improvement Priority Quadrants |
|---|---|---|---|
| LTS2 | 0.084 | 56.907 | First priority area |
| TWC3 | 0.080 | 66.667 | Second priority area |
| TWC1 | 0.078 | 61.411 | Second priority area |
| SEC4 | 0.077 | 60.135 | First priority area |
| LTS1 | 0.077 | 59.009 | First priority area |
| TWC2 | 0.077 | 62.312 | Second priority area |
| TMS4 | 0.077 | 66.667 | Second priority area |
| SEC3 | 0.076 | 62.275 | Second priority area |
| LTS3 | 0.075 | 57.958 | First priority area |
| TMS3 | 0.075 | 66.104 | Second priority area |
| TMS2 | 0.074 | 64.865 | Second priority area |
| LTS4 | 0.073 | 66.104 | Second priority area |
| OGP2 | 0.073 | 67.905 | Second priority area |
| OGP1 | 0.072 | 67.680 | Second priority area |
| SEC2 | 0.071 | 60.360 | First priority area |
| TWC4 | 0.071 | 68.581 | Second priority area |
| PPT4 | 0.071 | 63.739 | Second priority area |
| SEC1 | 0.068 | 57.995 | First priority area |
| PPA2 | 0.068 | 56.644 | First priority area |
| TMS1 | 0.068 | 64.865 | Second priority area |
| PPA5 | 0.067 | 57.095 | First priority area |
| OGP5 | 0.067 | 57.658 | First priority area |
| PPT1 | 0.067 | 65.878 | Second priority area |
| PPT2 | 0.066 | 65.991 | Second priority area |
| PPA1 | 0.065 | 57.432 | First priority area |
| PPT6 | 0.065 | 64.414 | Second priority area |
| OGP4 | 0.065 | 58.446 | First priority area |
| PPT3 | 0.065 | 67.005 | Second priority area |
| PPA4 | 0.065 | 55.293 | First priority area |
| PPA3 | 0.064 | 58.446 | First priority area |
| PPT5 | 0.063 | 65.541 | Second priority area |
| OGP3 | 0.059 | 58.333 | Third priority area |
| TC2 | 0.039 | 52.365 | Third priority area |
| TC1 | 0.038 | 55.631 | Third priority area |
| TC5 | 0.037 | 53.041 | Third priority area |
| PPS6 | 0.036 | 60.923 | Fourth priority area |
| PPS5 | 0.035 | 63.401 | Fourth priority area |
| TC4 | 0.035 | 46.622 | Third priority area |
| PPS3 | 0.034 | 63.288 | Fourth priority area |
| PPS2 | 0.034 | 67.005 | Fourth priority area |
| PPS4 | 0.033 | 59.009 | Third priority area |
| PPS1 | 0.031 | 69.932 | Fourth priority area |
| TC3 | 0.031 | 37.725 | Third priority area |
| Mean | 0.062 | 60.899 | |

| Indicators | Importance | Performance | Improvement Priority Quadrants |
|---|---|---|---|
| TC2 | 0.080 | 52.365 | First priority area |
| TC1 | 0.079 | 55.631 | First priority area |
| SEC4 | 0.077 | 60.135 | Second priority area |
| TC5 | 0.076 | 53.041 | First priority area |
| TMS4 | 0.076 | 66.667 | Second priority area |
| SEC3 | 0.075 | 62.275 | Second priority area |
| TMS3 | 0.074 | 66.104 | Second priority area |
| TMS2 | 0.074 | 64.865 | Second priority area |
| OGP2 | 0.072 | 67.905 | Second priority area |
| TC4 | 0.072 | 46.622 | First priority area |
| OGP1 | 0.071 | 67.680 | Second priority area |
| SEC2 | 0.070 | 60.360 | Second priority area |
| LTS2 | 0.070 | 56.907 | First priority area |
| SEC1 | 0.068 | 57.995 | Third priority area |
| TWC3 | 0.067 | 66.667 | Fourth priority area |
| TMS1 | 0.067 | 64.865 | Fourth priority area |
| OGP5 | 0.067 | 57.658 | Third priority area |
| TWC1 | 0.065 | 61.411 | Fourth priority area |
| TC3 | 0.065 | 37.725 | Third priority area |
| OGP4 | 0.065 | 58.446 | Third priority area |
| LTS1 | 0.064 | 59.009 | Third priority area |
| TWC2 | 0.064 | 62.312 | Fourth priority area |
| LTS3 | 0.063 | 57.958 | Third priority area |
| LTS4 | 0.061 | 66.104 | Fourth priority area |
| TWC4 | 0.059 | 68.581 | Fourth priority area |
| OGP3 | 0.058 | 58.333 | Third priority area |
| Mean | 0.069 | 59.908 | |

References

- Assaf, S.A.; Al-Hejji, S. Causes of Delay in Large Construction Projects. Int. J. Proj. Manag. 2006, 24, 349–357. [Google Scholar] [CrossRef]

- Olawale, Y.A. Challenges to Prevent in Practice for Effective Cost and Time Control of Construction Projects. J. Constr. Eng. Proj. 2020, 10, 16–32. [Google Scholar]

- Flyvbjerg, B.; Holm, M.K.S.; Buhl, S.L. How Common and How Large Are Cost Overruns in Transport Infrastructure Projects? Transp. Rev. 2003, 23, 71–88. [Google Scholar] [CrossRef]

- KPMG International. KPMG International Cooperative 2015 Global Construction Project Owner’s Survey; KPMG International Cooperative: Zug, Switzerland, 2015. [Google Scholar]

- Orgut, R.E.; Batouli, M.; Zhu, J.; Mostafavi, A.; Jaselskis, E.J. Critical Factors for Improving Reliability of Project Control Metrics throughout Project Life Cycle. J. Manag. Eng. 2020, 36, 04019033. [Google Scholar] [CrossRef]

- CII (Construction Industry Institute). Performance Assessment; CII: Austin, TX, USA, 2012. [Google Scholar]

- Alotaibi, R.; Sohail, M.; Edum-Fotwe, F.T.; Soetanto, R. Determining Project Control System Effectiveness in Construction Project Delivery. Eng. Constr. Archit. Manag. 2025; ahead of print. [Google Scholar] [CrossRef]

- Jawad, S.; Ledwith, A.; Khan, R. Project Control System (PCS) Implementation in Engineering and Construction Projects: An Empirical Study in Saudi’s Petroleum and Chemical Industry. Eng. Constr. Archit. Manag. 2022, 31, 181–207. [Google Scholar] [CrossRef]

- Baars, W.; Harmsen, H.; Kramer, R.; Sesink, L.; Van Zundert, J. Project Management Handbook DANS—Data Archiving and Networked Services The Hague—2006; DANS: The Hague, The Netherlands, 2006. [Google Scholar]

- Datta, A. Integrated Project Controls Can Help Avoid Major Disappointments on Mega-Projects. Available online: https://www.turnerandtownsend.com/en/perspectives/integrated-project-controls-can-help-avoid-major-disappointments-on-mega-projects/ (accessed on 16 July 2023).

- Maqsoom, A.; Hamad, M.; Ashraf, H.; Thaheem, M.J.; Umer, M. Managerial Control Mechanisms and Their Influence on Project Performance: An Investigation of the Moderating Role of Complexity Risk. Eng. Constr. Archit. Manag. 2020, 27, 2451–2475. [Google Scholar] [CrossRef]

- Yean, F.; Ling, Y.; Ang, W.T. Using Control Systems to Improve Construction Project Outcomes. Eng. Constr. Archit. Manag. 2013, 20, 576–588. [Google Scholar] [CrossRef]

- Alsulami, B.T. Risk Management Planning for Project Control through Risk Analysis: A Petroleum Pipeline-Laying Project. Buildings 2025, 15, 87. [Google Scholar] [CrossRef]

- Alsuliman, J.A. Causes of Delay in Saudi Public Construction Projects. Alex. Eng. J. 2019, 58, 801–808. [Google Scholar] [CrossRef]

- Alshammari, A.; Ghazali, F.E.M. A Comprehensive Review of the Factors and Strategies to Mitigate Construction Projects Delays in Saudi Arabia. Open Constr. Build. Technol. J. 2024, 18, e18748368318470. [Google Scholar] [CrossRef]

- Aldossari, K.M. Cost and Schedule Performance in Higher Education Construction Projects in Saudi Arabia. Adv. Civ. Eng. 2025, 2025, 7448384. [Google Scholar] [CrossRef]

- Willems, L.L.; Vanhoucke, M. Classification of Articles and Journals on Project Control and Earned Value Management. Int. J. Proj. Manag. 2015, 33, 1610–1634. [Google Scholar] [CrossRef]

- Hazir, Ö. A Review of Analytical Models, Approaches and Decision Support Tools in Project Monitoring and Control. Int. J. Proj. Manag. 2015, 33, 808–815. [Google Scholar] [CrossRef]

- Müller, R.; Lecoeuvre, L. Operationalizing Governance Categories of Projects. Int. J. Proj. Manag. 2014, 32, 1346–1357. [Google Scholar] [CrossRef]

- Zwikael, O.; Smyrk, J. Project Governance: Balancing Control and Trust in Dealing with Risk. Int. J. Proj. Manag. 2015, 33, 852–862. [Google Scholar] [CrossRef]

- McGrath, J.E. Groups: Interaction and Performance; Prentice-Hall: Hoboken, NJ, USA, 1984; p. 287. [Google Scholar]

- Hackman, J.R.; Morris, C.G. Group Tasks, Group Interaction Process, and Group Performance Effectiveness: A Review and Proposed Integration. Adv. Exp. Soc. Psychol. 1975, 8, 45–99. [Google Scholar] [CrossRef]

- Ilgen, D.R.; Hollenbeck, J.R.; Johnson, M.; Jundt, D. Teams in Organizations: From Input-Process-Output Models to IMOI Models. Annu. Rev. Psychol. 2005, 56, 517–543. [Google Scholar] [CrossRef]

- Mathieu, J.; Maynard, T.M.; Rapp, T.; Gilson, L. Team Effectiveness 1997–2007: A Review of Recent Advancements and a Glimpse into the Future. J. Manag. 2008, 34, 410–476. [Google Scholar] [CrossRef]

- Wang, D.; Jia, J.; Jiang, S.; Liu, T.; Ma, G. How Team Voice Contributes to Construction Project Performance: The Mediating Role of Project Learning and Project Reflexivity. Buildings 2023, 13, 1599. [Google Scholar] [CrossRef]

- Kwofie, T.E.; Alhassan, A.; Botchway, E.; Afranie, I. Factors Contributing towards the Effectiveness of Construction Project Teams. Int. J. Constr. Manag. 2015, 15, 170–178. [Google Scholar] [CrossRef]

- Loganathan, S.; Forsythe, P. Unravelling the Influence of Teamwork on Trade Crew Productivity: A Review and a Proposed Framework. Constr. Manag. Econ. 2020, 38, 1040–1060. [Google Scholar] [CrossRef]

- Mubarak, S. Construction Project Scheduling and Control; Wiley: Hoboken, NJ, USA, 2015. [Google Scholar]

- Olawale, Y. Project Control Methods and Best Practices: Achieving Project Success; Business Expert Press: Hampton, NJ, USA, 2022. [Google Scholar]

- Kerzner, H. Project Management: A Systems Approach to Planning, Scheduling, and Controlling, 12th ed.; Wiley: Hoboken, NJ, USA, 2017. [Google Scholar]

- Urgiles, P.; Sebastian, M.A.; Claver, J. Proposal and Application of a Methodology to Improve the Control and Monitoring of Complex Hydroelectric Power Station Construction Projects. Appl. Sci. 2020, 10, 7913. [Google Scholar] [CrossRef]

- Le, A.T.H.; Sutrisna, M. Project Cost Control System and Enabling-Factors Model: PLS-SEM Approach and Importance-Performance Map Analysis. Eng. Constr. Archit. Manag. 2023, 31, 2513–2535. [Google Scholar] [CrossRef]

- Al-khalil, M.I.; Al-ghafly, M.A. Delay in Public Utility Projects in Saudi Arabia. Int. J. Proj. Manag. 1999, 17, 101–106. [Google Scholar] [CrossRef]

- Alofi, A.; Kashiwagi, J.; Kashiwagi, D. The Perception of the Government and Private Sectors on the Procurement System Delivery Method in Saudi Arabia. Procedia Eng. 2016, 145, 1394–1401. [Google Scholar] [CrossRef][Green Version]

- Mahamid, I. Schedule Delay in Saudi Arabia Road Construction Projects: Size, Estimate, Determinants and Effects. Int. J. Archit. Eng. Constr. 2017, 6, 51–58. [Google Scholar] [CrossRef]

- Park, J.E. Schedule Delays of Major Projects: What Should We Do about It? Transp. Rev. 2021, 41, 814–832. [Google Scholar] [CrossRef]

- Huo, T.; Ren, H.; Cai, W.; Shen, G.Q.; Liu, B.; Zhu, M.; Wu, H. Measurement and Dependence Analysis of Cost Overruns in Megatransport Infrastructure Projects: Case Study in Hong Kong. J. Constr. Eng. Manag. 2018, 144, 05018001. [Google Scholar] [CrossRef]

- Shehu, Z.; Endut, I.R.; Akintoye, A.; Holt, G.D. Cost Overrun in the Malaysian Construction Industry Projects: A Deeper Insight. Int. J. Proj. Manag. 2014, 32, 1471–1480. [Google Scholar] [CrossRef]

- Amoatey, C.T.; Ankrah, A.N.O. Exploring Critical Road Project Delay Factors in Ghana. J. Facil. Manag. 2017, 15, 110–127. [Google Scholar] [CrossRef]

- Jawad, S.; Ledwith, A.; Panahifar, F. Enablers and Barriers to the Successful Implementation of Project Control Systems in the Petroleum and Chemical Industry. Int. J. Eng. Bus. Manag. 2018, 10, 1847979017751834. [Google Scholar] [CrossRef]

- Olawale, Y.; Sun, M. Construction Project Control in the UK: Current Practice, Existing Problems and Recommendations for Future Improvement. Int. J. Proj. Manag. 2015, 33, 623–637. [Google Scholar] [CrossRef]

- Sandbhor, S.; Choudhary, S.; Arora, A.; Katoch, P. Identification of Factors Leading to Construction Project Success Using Principal Component Analysis. Int. J. Appl. Eng. Res. 2014, 9, 4169–4180. [Google Scholar]

- Jawad, S.; Ledwith, A. A Measurement Model of Project Control Systems Success for Engineering and Construction Projects Case Study: Contractor Companies in Saudi’s Petroleum and Chemical Industry. Eng. Constr. Archit. Manag. 2021, 29, 1218–1240. [Google Scholar] [CrossRef]

- Waqar, A.; Othman, I.B.; Mansoor, M.S. Building Information Modeling (BIM) Implementation and Construction Project Success in Malaysian Construction Industry: Mediating Role of Project Control. Eng. Constr. Archit. Manag. 2024; ahead of print. [Google Scholar] [CrossRef]

- Dallasega, P.; Marengo, E.; Revolti, A. Strengths and Shortcomings of Methodologies for Production Planning and Control of Construction Projects: A Systematic Literature Review and Future Perspectives. Prod. Plan. Control 2021, 32, 257–282. [Google Scholar] [CrossRef]

- Jaskula, K.; Kifokeris, D.; Papadonikolaki, E.; Rovas, D. Common Data Environments in Construction: State-of-the-Art and Challenges for Practical Implementation. Constr. Innov. 2024. [Google Scholar] [CrossRef]

- Pellerin, R.; Perrier, N. A Review of Methods, Techniques and Tools for Project Planning and Control. Int. J. Prod. Res. 2019, 57, 2160–2178. [Google Scholar] [CrossRef]

- Olawale, Y.; Sun, M. PCIM: Project Control and Inhibiting-Factors Management Model. J. Manag. Eng. 2013, 29, 60–70. [Google Scholar] [CrossRef]

- Joslin, R.; Müller, R. The Relationship between Project Governance and Project Success. Int. J. Proj. Manag. 2016, 34, 613–626. [Google Scholar] [CrossRef]

- Too, E.G.; Weaver, P. The Management of Project Management: A Conceptual Framework for Project Governance. Int. J. Proj. Manag. 2014, 32, 1382–1394. [Google Scholar] [CrossRef]

- Samset, K.; Volden, G.H. Front-End Definition of Projects: Ten Paradoxes and Some Reflections Regarding Project Management and Project Governance. Int. J. Proj. Manag. 2016, 34, 297–313. [Google Scholar] [CrossRef]

- Baumol, W.J.; Stewart, M. On the Behavioral Theory of the Firm. Corp. Econ. 1971, 118–143. [Google Scholar]

- Cyert, R.M.; March, J.G. A Behavioral Theory of the Firm 1963. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1496208 (accessed on 1 January 2024).

- Todeva, E. Behavioural Theory of the Firm. Int. Encycl. Organ. Stud. Sage 2007, 1, 113–118. [Google Scholar]

- BSI. Organisation and Digitisation of Information About Buildings and Civil Engineering Works, Including Building Information Modelling (BIM) Part 1: Concepts and Principles; BSI: London, UK, 2018. [Google Scholar]

- Dwivedi, Y.K.; Wade, M.R.; Schneberger, S.L. Information Systems Theory: Volume 2; Springer: Berlin/Heidelberg, Germany, 2012; Volume 28, p. 461. [Google Scholar] [CrossRef]

- Ahmad, I.B.; Omar, S.S.; Ali, M.; Ali, S. A Conceptual Study of TOE and Organisational Performance. Proc. Eur. Conf. Innov. Entrep. ECIE 2023, 2, 962–968. [Google Scholar] [CrossRef]

- Tornatzky, L.G.; Fleischer, M. The Processes of Technological Innovation; D. C. Heath and Company: Lexington, MA, USA, 1990; Volume 298. [Google Scholar]

- Barney, J. Firm Resources and Sustained Competitive Advantage. J. Manag. 1991, 17, 99–120. [Google Scholar] [CrossRef]

- Wang, J.; Yuan, Z.; He, Z.; Zhou, F.; Wu, Z. Critical Factors Affecting Team Work Efficiency in BIM-Based Collaborative Design: An Empirical Study in China. Buildings 2021, 11, 486. [Google Scholar] [CrossRef]

- Alnaser, A.A.; Al-Gahtani, K.S.; Alsanabani, N.M. Building Information Modeling Impact on Cost Overrun Risk Factors and Interrelationships. Appl. Sci. 2024, 14, 10711. [Google Scholar] [CrossRef]

- Murguia, D.; Vasquez, C.; Demian, P.; Soetanto, R. BIM Adoption among Contractors: A Longitudinal Study in Peru. J. Constr. Eng. Manag. 2023, 149, 04022140. [Google Scholar] [CrossRef]

- Ayman, H.M.; Mahfouz, S.Y.; Alhady, A. Integrated EDM and 4D BIM-Based Decision Support System for Construction Projects Control. Buildings 2022, 12, 315. [Google Scholar] [CrossRef]

- Lin, J.J.; Golparvar-Fard, M. Visual and Virtual Production Management System for Proactive Project Controls. J. Constr. Eng. Manag. 2021, 147, 04021058. [Google Scholar] [CrossRef]

- Gledson, B.J.; Greenwood, D.J. Surveying the Extent and Use of 4D BIM in the UK. J. Inf. Technol. Constr. 2016, 21, 57–71. [Google Scholar]

- Hegazy, T.; Menesi, W. Enhancing the Critical Path Segments Scheduling Technique for Project Control. Can. J. Civ. Eng. 2012, 39, 968–977. [Google Scholar] [CrossRef]

- Roofigari-Esfahan, N.; Paez, A.; Razavi, S.N. Location-Aware Scheduling and Control of Linear Projects: Introducing Space-Time Float Prisms. J. Constr. Eng. Manag. 2015, 141, 06014008. [Google Scholar] [CrossRef]

- Duarte-Vidal, L.; Herrera, R.F.; Atencio, E.; Muñoz-La Rivera, F. Interoperability of Digital Tools for the Monitoring and Control of Construction Projects. Appl. Sci. 2021, 11, 10370. [Google Scholar] [CrossRef]

- Wang, X.; Yung, P.; Luo, H.; Truijens, M. An Innovative Method for Project Control in LNG Project through 5D CAD: A Case Study. Autom. Constr. 2014, 45, 126–135. [Google Scholar] [CrossRef]

- Morris, P.; Pinto, J. The Wiley Guide to Project Control; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2010. [Google Scholar]

- Pollack, J.; Helm, J.; Adler, D. What Is the Iron Triangle, and How Has It Changed? Int. J. Manag. Proj. Bus. 2018, 11, 527–547. [Google Scholar] [CrossRef]

- Project Management Institute. The Standard for Project Management and a Guide to the Project Management Body of Knowledge (PMBOK Guide); Project Management Institute: Newtown Square, PA, USA, 2021; ISBN 9781628256642. [Google Scholar]

- Meredith, J.R.; Mantel, S.J. Project Management: A Managerial Approach, 8th ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012. [Google Scholar]

- Turner, J.R.; Müller, R. On the Nature of the Project as a Temporary Organization. Int. J. Proj. Manag. 2003, 21, 1–8. [Google Scholar] [CrossRef]

- Winch, G.M. Managing Construction Projects: An Information Processing Approach, 2nd ed.; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2010. [Google Scholar]

- Babbie, E. The Practice of Social Research, 4th ed.; Cengage Learning: Boston, MA, USA, 2016. [Google Scholar]

- Bell, E.; Bryman, A.; Harley, B. Business Research Methods, 4th ed.; Oxford University Press: Oxford, UK, 2019. [Google Scholar]

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to Use and How to Report the Results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24. [Google Scholar] [CrossRef]

- Batra, S. Exploring the Application of PLS-SEM in Construction Management Research: A Bibliometric and Meta-Analysis Approach. Eng. Constr. Archit. Manag. 2023, 32, 2697–2727. [Google Scholar] [CrossRef]

- Baratta, A. The Triple Constraint, a Triple Illusion; PMI: Newtown Square, PA, USA, 2006. [Google Scholar]

- Del Pico, W.J. Project Control: Integrating Cost and Schedule in Construction; RSMeans: Greenville, SC, USA, 2013. [Google Scholar]

- Al-Tmeemy, S.M.H.M.; Abdul-Rahman, H.; Harun, Z. Future Criteria for Success of Building Projects in Malaysia. Int. J. Proj. Manag. 2011, 29, 337–348. [Google Scholar] [CrossRef]

- Amoah, A.; Berbegal-Mirabent, J.; Marimon, F. Making the Management of a Project Successful: Case of Construction Projects in Developing Countries. J. Constr. Eng. Manag. 2021, 147, 04021166. [Google Scholar] [CrossRef]

- Idogawa, J.; Bizarrias, F.S.; Câmara, R. Critical Success Factors for Change Management in Business Process Management. Bus. Process Manag. J. 2023, 29, 2009–2033. [Google Scholar] [CrossRef]

- Malik, M.O.; Khan, N. Analysis of ERP Implementation to Develop a Strategy for Its Success in Developing Countries. Prod. Plan. Control 2021, 32, 1020–1035. [Google Scholar] [CrossRef]

- Iyer, K.C.; Jha, K.N. Critical Factors Affecting Schedule Performance: Evidence from Indian Construction Projects. J. Constr. Eng. Manag. 2006, 132, 871–881. [Google Scholar] [CrossRef]

- Back, W.E.; Grau, D.; Mejia-Aguilar, G. Effectiveness Evaluation of Contract Incentives on Project Performance. Int. J. Constr. Educ. Res. 2013, 9, 288–306. [Google Scholar] [CrossRef]

- Jagtap, M.; Kamble, S. Evaluating the Modus Operandi of Construction Supply Chains Using Organization Control Theory. Int. J. Constr. Supply Chain Manag. 2015, 5, 16–33. [Google Scholar] [CrossRef]

- Durmic, N. Factors Influencing Project Success: A Qualitative Research. TEM J. 2020, 9, 1011–1020. [Google Scholar] [CrossRef]

- Sakka, O.; Barki, H.; Côté, L. Relationship between the Interactive Use of Control Systems and the Project Performance: The Moderating Effect of Uncertainty and Equivocality. Int. J. Proj. Manag. 2016, 34, 508–522. [Google Scholar] [CrossRef]

- Hsu, J.S.C.; Shih, S.P.; Li, Y. The Mediating Effects of In-Role and Extra-Role Behaviors on the Relationship between Control and Software-Project Performance. Int. J. Proj. Manag. 2017, 35, 1524–1536. [Google Scholar] [CrossRef]

- Al Khoori, A.A.A.G.; Abdul Hamid, M.S.R. Topology of Project Management Office in United Arab Emirates Project-Based Organizations. Int. J. Sustain. Constr. Eng. Technol. 2022, 13, 8–20. [Google Scholar] [CrossRef]

- Chen, C.C.; Liu, J.Y.C.; Chen, H.G. Discriminative Effect of User Influence and User Responsibility on Information System Development Processes and Project Management. Inf. Softw. Technol. 2011, 53, 149–158. [Google Scholar] [CrossRef]

- Wiener, M.; Mähring, M.; Remus, U.; Saunders, C. Control Configuration and Control Enactment in Information Systems Projects: Review and Expanded Theoretical Framework. MIS Q. 2016, 40, 741–774. [Google Scholar] [CrossRef]

- Martens, A.; Vanhoucke, M. The Impact of Applying Effort to Reduce Activity Variability on the Project Time and Cost Performance. Eur. J. Oper. Res. 2019, 277, 442–453. [Google Scholar] [CrossRef]

- Molenaar, K.R.; Javernick-Will, A.; Bastias, A.G.; Wardwell, M.A.; Saller, K. Construction Project Peer Reviews as an Early Indicator of Project Success. J. Manag. Eng. 2013, 29, 327–333. [Google Scholar] [CrossRef]

- Gustafson, G. Why Project Peer Review? J. Manag. Eng. 1990, 6, 350–354. [Google Scholar] [CrossRef]

- Yujing, W.; Yongkui, L.; Peidong, G. Modelling Construction Project Management Based on System Dynamics. Metall. Min. Ind. 2015, 9, 1056–1061. [Google Scholar]

- Trappey, A.J.C.; Chiang, T.A.; Ke, S. Developing an Intelligent Workflow Management System to Manage Project Processes with Dynamic Resource Control. J. Chinese Inst. Ind. Eng. 2006, 23, 484–493. [Google Scholar] [CrossRef]

- Li, Y.Y.; Chen, P.-H.; Chew, D.A.S.; Teo, C.C.; Ding, R.G. Critical Project Management Factors of AEC Firms for Delivering Green Building Projects in Singapore. J. Constr. Eng. Manag. 2011, 137, 1153–1163. [Google Scholar] [CrossRef]

- Kivilä, J.; Martinsuo, M.; Vuorinen, L. Sustainable Project Management through Project Control in Infrastructure Projects. Int. J. Proj. Manag. 2017, 35, 1167–1183. [Google Scholar] [CrossRef]

- Ghavamifar, K.; Touran, A. Owner’s Risks versus Control in Transit Projects. J. Manag. Eng. 2009, 25, 230–233. [Google Scholar] [CrossRef]

- Almunifi, A.A.; Almutairi, S. Lessons Learned Framework for Efficient Delivery of Construction Projects in Saudi Arabia. Constr. Econ. Build. 2021, 21, 115–141. [Google Scholar] [CrossRef]

- Mähring, M.; Wiener, M.; Remus, U. Getting the Control across: Control Transmission in Information Systems Offshoring Projects. Inf. Syst. J. 2018, 28, 708–728. [Google Scholar] [CrossRef]

- Abu-Hijleh, S.F.; Ibbs, C.W. Systematic Automated Management Exception Reporting. J. Constr. Eng. Manag. 1993, 119, 87–104. [Google Scholar] [CrossRef]

- Franz, B.; Leicht, R.; Molenaar, K.; Messner, J. Impact of Team Integration and Group Cohesion on Project Delivery Performance. J. Constr. Eng. Manag. 2017, 143, 04016088. [Google Scholar] [CrossRef]

- Alkhalifah, S.J.; Tuffaha, F.M.; Al Hadidi, L.A.; Ghaithan, A. Factors Influencing Change Orders in Oil and Gas Construction Projects in Saudi Arabia. Built Environ. Proj. Asset Manag. 2023, 13, 430–452. [Google Scholar] [CrossRef]

- Zhu, L.; Cheung, S.O.; Gao, X.; Li, Q.; Liu, G. Success DNA of a Record-Breaking Megaproject. J. Constr. Eng. Manag. 2020, 146, 05020009. [Google Scholar] [CrossRef]

- Andary, E.G.; Abi Shdid, C.; Chowdhury, A.; Ahmad, I. Integrated Project Delivery Implementation Framework for Water and Wastewater Treatment Plant Projects. Eng. Constr. Archit. Manag. 2019, 27, 609–633. [Google Scholar] [CrossRef]

- Cheng, M.-Y.; Peng, H.-S.; Wu, Y.-W.; Chen, T.-L. Estimate at Completion for Construction Projects Using Evolutionary Support Vector Machine Inference Model. Autom. Constr. 2010, 19, 619–629. [Google Scholar] [CrossRef]

- Rezakhani, P. Hybrid Fuzzy-Bayesian Decision Support Tool for Dynamic Project Scheduling and Control under Uncertainty. Int. J. Constr. Manag. 2022, 22, 2864–2876. [Google Scholar] [CrossRef]

- Ezzeddine, A.; Shehab, L.; Lucko, G.; Hamzeh, F. Forecasting Construction Project Performance with Momentum Using Singularity Functions in LPS. J. Constr. Eng. Manag. 2022, 148, 04022063. [Google Scholar] [CrossRef]

- Xiao, L.; Bie, L.; Bai, X. Controlling the Schedule Risk in Green Building Projects: Buffer Management Framework with Activity Dependence. J. Clean. Prod. 2021, 278, 123852. [Google Scholar] [CrossRef]

- Lee, N.; Rojas, E.M. Visual Representations for Monitoring Project Performance: Developing Novel Prototypes for Improved Communication. J. Constr. Eng. Manag. 2013, 139, 994–1005. [Google Scholar] [CrossRef]

- Aliverdi, R.; Moslemi Naeni, L.; Salehipour, A. Monitoring Project Duration and Cost in a Construction Project by Applying Statistical Quality Control Charts. Int. J. Proj. Manag. 2013, 31, 411–423. [Google Scholar] [CrossRef]

- Raymond, L.; Bergeron, F. Project Management Information Systems: An Empirical Study of Their Impact on Project Managers and Project Success. Int. J. Proj. Manag. 2008, 26, 213–220. [Google Scholar] [CrossRef]

- Sakka, O.; Barki, H.; Côté, L. Interactive and Diagnostic Uses of Management Control Systems in IS Projects: Antecedents and Their Impact on Performance. Inf. Manag. 2013, 50, 265–274. [Google Scholar] [CrossRef]

- Lei, Z.; Hu, Y.; Hua, J.; Marton, B.; Goldberg, P.; Marton, N. An Earned-Value-Analysis (EVA)-Based Project Control Framework in Large-Scale Scaffolding Projects Using Linear Regression Modeling. J. Inf. Technol. Constr. 2022, 27, 630–641. [Google Scholar] [CrossRef]

- Bruni, M.E.; Beraldi, P.; Guerriero, F.; Pinto, E. A Scheduling Methodology for Dealing with Uncertainty in Construction Projects. Eng. Comput. 2011, 28, 1064–1078. [Google Scholar] [CrossRef]

- Mitchell, V.L. Knowledge Integration and Information Technology Project Performance. MIS Q. 2006, 30, 919. [Google Scholar] [CrossRef]

- Isaac, S.; Navon, R. Can Project Monitoring and Control Be Fully Automated? Constr. Manag. Econ. 2014, 32, 495–505. [Google Scholar] [CrossRef]

- Association for Project Management. APM Body of Knowledge, 7th ed.; Association for Project Management: Princes Risborough, UK, 2019; ISBN 9781903494820. [Google Scholar]

- Iroroakpo Idoro, G. Influence of the Monitoring and Control Strategies of Indigenous and Expatriate Nigerian Contractors on Project Outcome. J. Constr. Dev. Ctries. 2012, 17, 49–67. [Google Scholar]

- Annamalaisami, C.D.; Kuppuswamy, A. Managing Cost Risks: Toward a Taxonomy of Cost Overrun Factors in Building Construction Projects. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A Civ. Eng. 2021, 7, 04021021. [Google Scholar] [CrossRef]

- Carr, R.I. Cost, Schedule, and Time Variances and Integration. J. Constr. Eng. Manag. 1993, 119, 245–265. [Google Scholar] [CrossRef]

- Nguyen, T.Q.; Yeoh, J.K.-W.; Angelia, N. Predicting Percent Plan Complete through Time Series Analysis. J. Constr. Eng. Manag. 2023, 149, 04023038. [Google Scholar] [CrossRef]

- Song, J.; Martens, A.; Vanhoucke, M. The Impact of a Limited Budget on the Corrective Action Taking Process. Eur. J. Oper. Res. 2020, 286, 1070–1086. [Google Scholar] [CrossRef]

- Votto, R.; Lee Ho, L.; Berssaneti, F. Multivariate Control Charts Using Earned Value and Earned Duration Management Observations to Monitor Project Performance. Comput. Ind. Eng. 2020, 148, 106691. [Google Scholar] [CrossRef]

- Abdul-Rahman, H.; Wang, C.; Binti Muhammad, N. Project Performance Monitoring Methods Used in Malaysia and Perspectives of Introducing EVA as a Standard Approach. J. Civ. Eng. Manag. 2011, 17, 445–455. [Google Scholar] [CrossRef]

- Hanna, A.S. Using the Earned Value Management System to Improve Electrical Project Control. J. Constr. Eng. Manag. 2012, 138, 449–457. [Google Scholar] [CrossRef]

- Salehipour, A.; Naeni, L.M.; Khanbabaei, R.; Javaheri, A. Lessons Learned from Applying the Individuals Control Charts to Monitoring Autocorrelated Project Performance Data. J. Constr. Eng. Manag. 2016, 142, 04015105. [Google Scholar] [CrossRef]

- Colin, J.; Vanhoucke, M. A Comparison of the Performance of Various Project Control Methods Using Earned Value Management Systems. Expert Syst. Appl. 2015, 42, 3159–3175. [Google Scholar] [CrossRef]

- Martens, A.; Vanhoucke, M. A Buffer Control Method for Top-down Project Control. Eur. J. Oper. Res. 2017, 262, 274–286. [Google Scholar] [CrossRef]

- Assaad, R.; El-Adaway, I.H.; Abotaleb, I.S. Predicting Project Performance in the Construction Industry. J. Constr. Eng. Manag. 2020, 146, 04020030. [Google Scholar] [CrossRef]

- Lipke, W. Schedule Adherence and Rework. PM World Today 2011, 13, 1–14. [Google Scholar]

- Hu, X.; Cui, N.; Demeulemeester, E. Effective Expediting to Improve Project Due Date and Cost Performance through Buffer Management. Int. J. Prod. Res. 2015, 53, 1460–1471. [Google Scholar] [CrossRef]

- Marques, G.; Gourc, D.; Lauras, M. Multi-Criteria Performance Analysis for Decision Making in Project Management. Int. J. Proj. Manag. 2011, 29, 1057–1069. [Google Scholar] [CrossRef]

- Li, M.; Niu, D.; Ji, Z.; Cui, X.; Sun, L. Forecast Research on Multidimensional Influencing Factors of Global Offshore Wind Power Investment Based on Random Forest and Elastic Net. Sustainability 2021, 13, 2262. [Google Scholar] [CrossRef]

- Alkhattabi, L.; Alkhard, A.; Gouda, A. Effects of Change Orders on the Budget of the Public Sector Construction Projects in the Kingdom of Saudi Arabia. Results Eng. 2023, 20, 101628. [Google Scholar] [CrossRef]

- Yap, J.B.H.; Skitmore, M.; Gray, J.; Shavarebi, K. Systemic View to Understanding Design Change Causation and Exploitation of Communications and Knowledge. Proj. Manag. J. 2019, 50, 288–305. [Google Scholar] [CrossRef]

- Naji, K.K.; Gunduz, M.; Naser, A.F. The Effect of Change-Order Management Factors on Construction Project Success: A Structural Equation Modeling Approach. J. Constr. Eng. Manag. 2022, 148, 04022085. [Google Scholar] [CrossRef]

- Khalafallah, A.; Shalaby, Y. Change Orders: Automating Comparative Data Analysis and Controlling Impacts in Public Projects. J. Constr. Eng. Manag. 2019, 145, 04019064. [Google Scholar] [CrossRef]

- Motaleb, O.; Kishk, M. Controlling the Risk of Construction Delay in the Middle East: State-of-the-Art Review. In Proceedings of COBRA 2011: RICS Construction and Property Conference, Manchester, UK, 12–13 September 2011; pp. 1351–1363. [Google Scholar]

- Obondi, K.C. The Utilization of Project Risk Monitoring and Control Practices and Their Relationship with Project Success in Construction Projects. J. Proj. Manag. 2022, 7, 35–52. [Google Scholar] [CrossRef]

- Dey, P.; Tabucanon, M.T. Planning for Project Control through Risk Analysis: A Petroleum Pipeline-Laying Project. Int. J. Proj. Manag. 1994, 12, 23–33. [Google Scholar] [CrossRef]

- Blumberg, B.; Cooper, D.; Schindler, P. Business Research Methods, 4th ed.; McGraw-Hill Education: Maidenhead, UK, 2014; ISBN 9780077157487. [Google Scholar]

- Greener, S.L. Business Research Methods; BookBoon: London, UK, 2008. [Google Scholar]

- Harlacher, J. An Educator’s Guide to Questionnaire Development; REL 2016-108; Regional Educational Laboratory Central: Tallahassee, FL, USA, 2016. [Google Scholar]

- Creswell, J.W.; Creswell, J.D. Research Design: Qualitative, Quantitative & Mixed Methods Approaches, 5th ed.; SAGE: Newcastle upon Tyne, UK, 2018. [Google Scholar]

- Hair, J.F.; Ringle, C.M.; Sarstedt, M.; Hult, G.T.M. Partial Least Squares Structural Equation Modeling; Springer Nature: London, UK, 2021; ISBN 9783319574134. [Google Scholar]

- MacKenzie, S.B.; Podsakoff, P.M.; Podsakoff, N.P. Construct Measurement and Validation Procedures in MIS and Behavioral Research: Integrating New and Existing Techniques. MIS Q. 2011, 35, 293–334. [Google Scholar] [CrossRef]

- Wilson, M. Constructing Measures: An Item Response Modeling Approach, 2nd ed.; Routledge: London, UK, 2023; pp. 1–364. [Google Scholar]

- Edmondson, D.R. Likert Scales: A History. In Proceedings of the CHARM 2005: Conference on Historical Analysis & Research in Marketing, Long Beach, CA, USA, 28 April–1 May 2005; pp. 127–133. [Google Scholar]

- Salant, P.; Dillman, D. How to Conduct Your Own Survey; John Wiley & Sons: Hoboken, NJ, USA, 1994. [Google Scholar]

- Hair, J.; Hollingsworth, C.L.; Randolph, A.B.; Chong, A.Y.L. An Updated and Expanded Assessment of PLS-SEM in Information Systems Research. Ind. Manag. Data Syst. 2017, 117, 442–458. [Google Scholar] [CrossRef]

- Kock, N.; Hadaya, P. Minimum Sample Size Estimation in PLS-SEM: The Inverse Square Root and Gamma-Exponential Methods. Inf. Syst. J. 2018, 28, 227–261. [Google Scholar] [CrossRef]

- Armstrong, J.S.; Overton, T.S. Estimating Nonresponse Bias in Mail Surveys. J. Mark. Res. 1977, 14, 396. [Google Scholar] [CrossRef]

- Hair, J.F.; Babin, B.J.; Anderson, R.E.; Black, W.C. Multivariate Data Analysis, 8th ed.; Cengage Learning EMEA: Hampshire, UK, 2018. [Google Scholar]

- Ringle, C.M.; Sarstedt, M. Gain More Insight from Your PLS-SEM Results the Importance-Performance Map Analysis. Ind. Manag. Data Syst. 2016, 116, 1865–1886. [Google Scholar] [CrossRef]

- Gefen, D.; Straub, D. A Practical Guide to Factorial Validity Using PLS-Graph: Tutorial and Annotated Example. Commun. Assoc. Inf. Syst. 2005, 21, 16. [Google Scholar] [CrossRef]

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135. [Google Scholar] [CrossRef]

- Henseler, J.; Dijkstra, T.K.; Sarstedt, M.; Ringle, C.M.; Diamantopoulos, A.; Straub, D.W.; Ketchen, D.J.; Hair, J.F.; Hult, G.T.M.; Calantone, R.J. Common Beliefs and Reality About PLS: Comments on Rönkkö and Evermann (2013). Organ. Res. Methods 2014, 17, 182–209. [Google Scholar] [CrossRef]

- Hair, J.F.; Sarstedt, M. Factors versus Composites: Guidelines for Choosing the Right Structural Equation Modeling Method. Proj. Manag. J. 2019, 50, 619–624. [Google Scholar] [CrossRef]

- Streukens, S.; Leroi-Werelds, S. Bootstrapping and PLS-SEM: A Step-by-Step Guide to Get More out of Your Bootstrap Results. Eur. Manag. J. 2016, 34, 618–632. [Google Scholar] [CrossRef]

- Akter, S.; D’Ambra, J.; Ray, P. Trustworthiness in MHealth Information Services: An Assessment of a Hierarchical Model with Mediating and Moderating Effects Using Partial Least Squares (PLS). J. Am. Soc. Inf. Sci. Technol. 2011, 62, 100–116. [Google Scholar] [CrossRef]

- Cohen, J. The Analysis of Variance and Covariance. In Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988; pp. 273–403. [Google Scholar]

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences. In Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 2013. [Google Scholar] [CrossRef]

- Sarstedt, M.; Cheah, J.H. Partial Least Squares Structural Equation Modeling Using SmartPLS: A Software Review. J. Mark. Anal. 2019, 7, 196–202. [Google Scholar] [CrossRef]

- Dang, C.N.; Le-Hoai, L. Critical Success Factors for Implementation Process of Design-Build Projects in Vietnam. J. Eng. Des. Technol. 2016, 14, 17–32. [Google Scholar] [CrossRef]

- Mitra, S.; Wee Kwan Tan, A. Lessons Learned from Large Construction Project in Saudi Arabia. Benchmarking Int. J. 2012, 19, 308–324. [Google Scholar] [CrossRef]

- Love, P.E.D.; Zhou, J.; Matthews, J. Project Controls for Electrical, Instrumentation and Control Systems: Enabling Role of Digital System Information Modelling. Autom. Constr. 2019, 103, 202–212. [Google Scholar] [CrossRef]

- Vanhoucke, M. Tolerance Limits for Project Control: An Overview of Different Approaches. Comput. Ind. Eng. 2019, 127, 467–479. [Google Scholar] [CrossRef]

- Ong, C.H.; Bahar, T. Factors Influencing Project Management Effectiveness in the Malaysian Local Councils. Int. J. Manag. Proj. Bus. 2019, 12, 1146–1164. [Google Scholar] [CrossRef]

- Zhang, J.; Yuan, J.; Mahmoudi, A.; Ji, W.; Fang, Q. A Data-Driven Framework for Conceptual Cost Estimation of Infrastructure Projects Using XGBoost and Bayesian Optimization. J. Asian Archit. Build. Eng. 2025, 24, 751–774. [Google Scholar] [CrossRef]

- Olawale, Y.A.; Sun, M. Cost and Time Control of Construction Projects: Inhibiting Factors and Mitigating Measures in Practice. Constr. Manag. Econ. 2010, 28, 509–526. [Google Scholar] [CrossRef]

- Amer, F.; Jung, Y.; Golparvar-Fard, M. Transformer Machine Learning Language Model for Auto-Alignment of Long-Term and Short-Term Plans in Construction. Autom. Constr. 2021, 132, 103929. [Google Scholar] [CrossRef]

- Pal, A.; Lin, J.J.; Hsieh, S.H.; Golparvar-Fard, M. Automated Vision-Based Construction Progress Monitoring in Built Environment through Digital Twin. Dev. Built Environ. 2023, 16, 100247. [Google Scholar] [CrossRef]

- Sánchez-Rodríguez, A.; Soilán, M.; Hussain, O.A.I.; Moehler, R.C.; Walsh, S.D.C.; Ahiaga-Dagbui, D.D. Minimizing Cost Overrun in Rail Projects through 5D-BIM: A Systematic Literature Review. Infrastructures 2023, 8, 93. [Google Scholar] [CrossRef]

- Chen, Q.; Hall, D.M.; Adey, B.T.; Haas, C.T. Identifying Enablers for Coordination across Construction Supply Chain Processes: A Systematic Literature Review. Eng. Constr. Archit. Manag. 2020, 28, 1083–1113. [Google Scholar] [CrossRef]

- Chou, J.-S.; Irawan, N.; Pham, A.-D. Project Management Knowledge of Construction Professionals: Cross-Country Study of Effects on Project Success. J. Constr. Eng. Manag. 2013, 139, 04013015. [Google Scholar] [CrossRef]

- Jahangoshai Rezaee, M.; Yousefi, S.; Chakrabortty, R.K. Analysing Causal Relationships between Delay Factors in Construction Projects: A Case Study of Iran. Int. J. Manag. Proj. Bus. 2021, 14, 412–444. [Google Scholar] [CrossRef]

- Aldossari, K.M. Client Project Managers’ Knowledge and Skill Competencies for Managing Public Construction Projects. Results Eng. 2024, 24, 103546. [Google Scholar] [CrossRef]

- Alshihri, S.; Al-Gahtani, K.; Almohsen, A. Risk Factors That Lead to Time and Cost Overruns of Building Projects in Saudi Arabia. Buildings 2022, 12, 902. [Google Scholar] [CrossRef]

- Bajjou, M.S.; Chafi, A. Empirical Study of Schedule Delay in Moroccan Construction Projects. Int. J. Constr. Manag. 2020, 20, 783–800. [Google Scholar] [CrossRef]

- Alotaibi, A.; Edum-Fotwe, F.; Price, A.D.F. Critical Barriers to Social Responsibility Implementation within Mega-Construction Projects: The Case of the Kingdom of Saudi Arabia. Sustainability 2019, 11, 1755. [Google Scholar] [CrossRef]

- Alotaibi, S.; Martinez-Vazquez, P.; Baniotopoulos, C. Mega-Projects in Construction: Barriers in the Implementation of Circular Economy Concepts in the Kingdom of Saudi Arabia. Buildings 2024, 14, 1298. [Google Scholar] [CrossRef]

- Othayman, M.B.; Meshari, A.; Mulyata, J.; Debrah, Y. The Challenges Confronting the Delivery of Training and Development Programs in Saudi Arabia: A Critical Review of Research. Am. J. Ind. Bus. Manag. 2020, 10, 1611–1639. [Google Scholar] [CrossRef]

- Mosly, I. Construction Cost-Influencing Factors: Insights from a Survey of Engineers in Saudi Arabia. Buildings 2024, 14, 3399. [Google Scholar] [CrossRef]

- Alragabah, Y.A.; Ahmed, M. Impact Assessment of Critical Success Factors (CSFs) in Public Construction Projects of Saudi Arabia. Front. Eng. Built Environ. 2024, 4, 184–195. [Google Scholar] [CrossRef]

- Mahamid, I. Micro and Macro Level of Dispute Causes in Residential Building Projects: Studies of Saudi Arabia. J. King Saud Univ.—Eng. Sci. 2016, 28, 12–20. [Google Scholar] [CrossRef]

- Alotaibi, N.O.; Sutrisna, M.; Chong, H.-Y. Guidelines of Using Project Management Tools and Techniques to Mitigate Factors Causing Delays in Public Construction Projects in Kingdom of Saudi Arabia. J. Eng. Proj. Prod. Manag. 2016, 6, 90. [Google Scholar] [CrossRef]

- Alahmadi, N.; Alghaseb, M. Challenging Tendering-Phase Factors in Public Construction Projects—A Delphi Study in Saudi Arabia. Buildings 2022, 12, 924. [Google Scholar] [CrossRef]

- Sanni-Anibire, M.O.; Mohamad Zin, R.; Olatunji, S.O. Causes of Delay in the Global Construction Industry: A Meta Analytical Review. Int. J. Constr. Manag. 2022, 22, 1395–1407. [Google Scholar] [CrossRef]

- Alsugair, A.M. Cost Deviation Model of Construction Projects in Saudi Arabia Using PLS-SEM. Sustainability 2022, 14, 16391. [Google Scholar] [CrossRef]

- Yap, J.B.H.; Lim, B.L.; Skitmore, M.; Gray, J. Criticality of Project Knowledge and Experience in the Delivery of Construction Projects. J. Eng. Des. Technol. 2022, 20, 800–822. [Google Scholar] [CrossRef]

- Mahmoodzadeh, A.; Nejati, H.R.; Mohammadi, M. Optimized Machine Learning Modelling for Predicting the Construction Cost and Duration of Tunnelling Projects. Autom. Constr. 2022, 139, 104305. [Google Scholar] [CrossRef]

- Alghaseb, M.; Alali, H. Exploring Project Management Office Models for Public Construction Projects in Hail, Saudi Arabia. Sustainability 2024, 16, 8177. [Google Scholar] [CrossRef]

- Alghuried, A. Assessing the Critical Success Factors for the Sustainable Construction Project Management of Saudi Arabia. J. Asian Archit. Build. Eng. 2025, 24, 1–19. [Google Scholar] [CrossRef]

- Ahmad, S.; Aftab, F.; Eltayeb, T.; Siddiqui, K. Identifying Critical Success Factors for Construction Projects in Saudi Arabia. E3S Web Conf. 2023, 371, 02047. [Google Scholar] [CrossRef]

- Chen, G.X.; Shan, M.; Chan, A.P.C.; Liu, X.; Zhao, Y.Q. Investigating the Causes of Delay in Grain Bin Construction Projects: The Case of China. Int. J. Constr. Manag. 2019, 19, 1–14. [Google Scholar] [CrossRef]

- Derakhshanalavijeh, R.; Teixeira, J.M.C. Cost Overrun in Construction Projects in Developing Countries, Gas-Oil Industry of Iran as a Case Study. J. Civ. Eng. Manag. 2017, 23, 125–136. [Google Scholar] [CrossRef]

- Mashali, A.; Elbeltagi, E.; Motawa, I.; Elshikh, M. Stakeholder Management Challenges in Mega Construction Projects: Critical Success Factors. J. Eng. Des. Technol. 2023, 21, 358–375. [Google Scholar] [CrossRef]

- Ingle, P.V.; Mahesh, G. Construction Project Performance Areas for Indian Construction Projects. Int. J. Constr. Manag. 2022, 22, 1443–1454. [Google Scholar] [CrossRef]

- Alenazi, E.; Adamu, Z.; Al-Otaibi, A. Exploring the Nature and Impact of Client-Related Delays on Contemporary Saudi Construction Projects. Buildings 2022, 12, 880. [Google Scholar] [CrossRef]

- Allahaim, F.S.; Liu, L. Causes of Cost Overruns on Infrastructure Projects in Saudi Arabia. Int. J. Collab. Enterp. 2015, 5, 32–57. [Google Scholar] [CrossRef]

- Mahamid, I. Relationship between Delay and Productivity in Construction Projects. Int. J. Adv. Appl. Sci. 2022, 9, 160–166. [Google Scholar] [CrossRef]

- Caldas, C.; Gupta, A. Critical Factors Impacting the Performance of Mega-Projects. Eng. Constr. Archit. Manag. 2017, 20, 920–934. [Google Scholar] [CrossRef]

- Scott-Young, C.M.; Georgy, M.; Grisinger, A. Shared Leadership in Project Teams: An Integrative Multi-Level Conceptual Model and Research Agenda. Int. J. Proj. Manag. 2019, 37, 565–581. [Google Scholar] [CrossRef]

- Yunpeng, G.; Zaman, U. Exploring Mega-Construction Project Success in China’s Vaunted Belt and Road Initiative: The Role of Paternalistic Leadership, Team Members’ Voice and Team Resilience. Eng. Constr. Archit. Manag. 2024, 31, 3801–3825. [Google Scholar] [CrossRef]

- Al-Gahtani, K.; Alsugair, A.; Alsanabani, N.; Alabduljabbar, A.; Almutairi, B. Forecasting Delay-Time Model for Saudi Construction Projects Using DEMATEL–SD Technique. Int. J. Constr. Manag. 2024, 24, 1225–1239. [Google Scholar] [CrossRef]

- Abougamil, R.A.; Thorpe, D.; Heravi, A. An Investigation of BIM Advantages in Analysing Claims Procedures Related to the Extension of Time and Money in the KSA Construction Industry. Buildings 2024, 14, 426. [Google Scholar] [CrossRef]

- Igwe, U.S.; Mohamed, S.F.; Dzahir, M.A.M.; Yusof, Z.M.; Khiyon, N.A. Towards a Framework of Automated Resource Model for Post Contract Cost Control of Construction Projects. Int. J. Constr. Manag. 2022, 22, 3088–3097. [Google Scholar] [CrossRef]

- Li, Y.; Liu, C. Applications of Multirotor Drone Technologies in Construction Management. Int. J. Constr. Manag. 2019, 19, 401–412. [Google Scholar] [CrossRef]

- Omar, T.; Nehdi, M.L. Data Acquisition Technologies for Construction Progress Tracking. Autom. Constr. 2016, 70, 143–155. [Google Scholar] [CrossRef]

- Felemban, H.; Sohail, M.; Ruikar, K. Exploring the Readiness of Organisations to Adopt Artificial Intelligence. Buildings 2024, 14, 2460. [Google Scholar] [CrossRef]

| Hypothesis | Relationship | Abbreviations |
|---|---|---|
| H1a | Organisational PCS determinants → Pre-operational control determinants | OPCSD → Pre-OCD |
| H1b | Organisational PCS determinants → In-operational control determinants | OPCSD → In-OCD |
| H1c | Organisational PCS determinants → Post-operational control determinants | OPCSD → Post-OCD |
| H1d | Organisational PCS determinants → Uncertainty control determinants | OPCSD → UOCD |
| H1e | Organisational PCS determinants → Human PCS determinants | OPCSD → HPCSD |
| H2a | Human PCS determinants → Pre-operational control determinants | HPCSD → Pre-OCD |
| H2b | Human PCS determinants → In-operational control determinants | HPCSD → In-OCD |
| H2c | Human PCS determinants → Post-operational control determinants | HPCSD → Post-OCD |
| H2d | Human PCS determinants → Uncertainty control determinants | HPCSD → UOCD |
| H2e | Human PCS determinants → Technological PCS determinants | HPCSD → TPCSD |
| H3a | Technological PCS determinants → Pre-operational control determinants | TPCSD → Pre-OCD |
| H3b | Technological PCS determinants → In-operational control determinants | TPCSD → In-OCD |
| H3c | Technological PCS determinants → Post-operational control determinants | TPCSD → Post-OCD |
| H3d | Technological PCS determinants → Uncertainty control determinants | TPCSD → UOCD |
| H4 | Pre-operational control determinants → Project performance | Pre-OCD → PP |
| H5 | In-operational control determinants → Project performance | In-OCD → PP |
| H6 | Post-operational control determinants → Project performance | Post-OCD → PP |
| H7 | Uncertainty control determinants → Project performance | UOCD → PP |
| H8 | Pre-operational control determinants → In-operational control determinants | Pre-OCD → In-OCD |
| H9 | In-operational control determinants → Post-operational control determinants | In-OCD → Post-OCD |
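RQ3 asks whether the operational control determinants mediate the effects of the organisational, human, and technological determinants on project performance. In the hypotheses table above, no input determinant has a direct path to PP, so any hypothesised influence on PP must pass through at least one intermediate construct. The short Python sketch below encodes H1a–H9 as an adjacency list and enumerates every directed path that ends at PP. The names `MODEL` and `paths_to` are illustrative, not part of the study. The sketch only identifies candidate indirect paths; in PLS-SEM the size of a specific indirect effect would be the product of the estimated path coefficients along the path, which this code does not estimate.

```python
from typing import Dict, List

# Hypothesised structural paths (H1a–H9) as an adjacency list.
MODEL: Dict[str, List[str]] = {
    "OPCSD": ["Pre-OCD", "In-OCD", "Post-OCD", "UOCD", "HPCSD"],  # H1a–H1e
    "HPCSD": ["Pre-OCD", "In-OCD", "Post-OCD", "UOCD", "TPCSD"],  # H2a–H2e
    "TPCSD": ["Pre-OCD", "In-OCD", "Post-OCD", "UOCD"],           # H3a–H3d
    "Pre-OCD": ["PP", "In-OCD"],                                   # H4, H8
    "In-OCD": ["PP", "Post-OCD"],                                  # H5, H9
    "Post-OCD": ["PP"],                                            # H6
    "UOCD": ["PP"],                                                # H7
    "PP": [],
}


def paths_to(source: str, target: str = "PP",
             graph: Dict[str, List[str]] = MODEL) -> List[List[str]]:
    """Depth-first enumeration of every directed path from source to target.

    The hypothesised model is acyclic, so no visited set is needed.
    """
    if source == target:
        return [[target]]
    result: List[List[str]] = []
    for nxt in graph[source]:
        for tail in paths_to(nxt, target, graph):
            result.append([source] + tail)
    return result


if __name__ == "__main__":
    for start in ("OPCSD", "HPCSD", "TPCSD"):
        print(f"{start}: {len(paths_to(start))} indirect paths to PP")
    # The longest chain passes through every stage of the control cycle.
    print(" -> ".join(max(paths_to("OPCSD"), key=len)))
```

Under these hypotheses, OPCSD reaches PP through 21 distinct paths, HPCSD through 14, and TPCSD through 7. The longest chain, OPCSD → HPCSD → TPCSD → Pre-OCD → In-OCD → Post-OCD → PP, runs through the sequential control stages posited by H8 and H9.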

| Construct | Code/Item | Items for Construct | Sources |
|---|---|---|---|
| Project performance (PP) | PP1 | The project adhered to its predetermined schedule | [8,30,70,71,80,81,82,83] |
| | PP2 | The project adhered to its predetermined budget | |
| | PP3 | The project adhered to its predetermined scope | |
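Because the PP items (PP1–PP3) act as reflective indicators, their convergent validity can be checked through the average variance extracted (AVE), the mean of the squared standardised loadings. The Python sketch below recomputes AVE from the loadings reported in the measurement-model table for PP, HPCSD, and TPCSD. The helper names are hypothetical, and the 0.70 loading and 0.50 AVE cut-offs are commonly cited rules of thumb assumed here, not thresholds stated in this section. Because the published loadings are rounded to three decimals, the recomputed AVEs can differ from the tabulated ones in the third decimal.

```python
from typing import Dict, Sequence

# Standardised outer loadings as reported in the measurement-model table.
LOADINGS: Dict[str, Sequence[float]] = {
    "PP": [0.847, 0.870, 0.829],
    "HPCSD": [0.847, 0.857, 0.856, 0.790, 0.868, 0.881, 0.830, 0.780],
    "TPCSD": [0.883, 0.904, 0.851, 0.859, 0.874],
}


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of the squared standardised loadings."""
    return sum(x * x for x in loadings) / len(loadings)


def convergent_validity(loadings: Sequence[float],
                        loading_min: float = 0.70,
                        ave_min: float = 0.50) -> bool:
    """True when every loading and the AVE clear the assumed rule-of-thumb cut-offs."""
    return all(x >= loading_min for x in loadings) and ave(loadings) >= ave_min


if __name__ == "__main__":
    for construct, items in LOADINGS.items():
        print(f"{construct}: AVE = {ave(items):.3f}, "
              f"convergent validity = {convergent_validity(items)}")
```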

| Construct | PCSD | Code/Item | Items for Construct | Sources |
|---|---|---|---|---|
| Organisational PCS determinant (OPCSD) | Top management support (TMS) | TMS1 | The sufficiency of resource allocations provided by top management (TM) | [40,42,48,84,85,86,87,88,89] |
| | | TMS2 | Promptness of top management in decision-making and changes | |
| | | TMS3 | Top management fostering trust and a successful project culture | |
| | | TMS4 | Top management’s understanding of and commitment to PC principles | |
| | Oversight and governance programme (OGP) | OGP1 | Clarity of the project’s organisational structure | [8,30,40,42,90,91,92,93,94,95,96,97,98] |
| | | OGP2 | Clarity of established project control procedures | |
| | | OGP3 | Engagement of PMO/independent peer reviews | |
| | | OGP4 | Audit and evaluation frequency for project control processes | |
| | | OGP5 | Application of knowledge and lessons learned for process improvement | |
| | Stakeholder engagement and coordination (SEC) | SEC1 | Application of stakeholder engagement assessment | [5,42,48,88,90,99,100,101,102,103,104,105,106,107,108] |
| | | SEC2 | Stakeholders’ understanding of their roles in PC engagement | |
| | | SEC3 | Interaction level among stakeholders | |
| | | SEC4 | Consistency and integration of PC systems across stakeholders | |
| Human PCS determinant (HPCSD) | Teamwork and collaboration (TWC) | TWC1 | Team members’ commitment to their roles, responsibilities, and alignment with project objectives | [5,8,30,40,41,42,83,90,91,92,93,99,100,106,109] |
| | | TWC2 | Team collaboration in information sharing, task coordination, decision-making, and open communication | |
| | | TWC3 | Trust levels and conflict management between team members | |
| | | TWC4 | Transparency and integrity in information reporting and sharing | |
| | Leadership and team skills (LTS) | LTS1 | Team members’ knowledge and expertise level in their respective areas | [5,8,30,40,41,42,83,89,91,94,107] |
| | | LTS2 | Team members’ skills, including technical proficiency, critical thinking, and task independence | |
| | | LTS3 | Manager’s technical and managerial capability | |
| | | LTS4 | Ability of leaders to guide, motivate, and manage team members | |
| Technological PCS determinant (TPCSD) | Technological competency (TC) | TC1 | Extent to which advanced technologies such as data analytics are integrated into project control processes | [30,65,69,100,108,109,110,111,112,113,114,115,116,117,118,119,120,121] |
| | | TC2 | Use of automated and sophisticated devices for real-time project monitoring | |
| | | TC3 | Utilisation of advanced prediction and forecasting tools, such as artificial intelligence (AI) and machine learning | |
| | | TC4 | The use of Building Information Modelling (BIM) technology and infographic visualisation tools | |
| | | TC5 | The adaptation of the common data environment for information management within the project | |

| Construct | PCSD | Code/Item | Items for Construct | Sources |
|---|---|---|---|---|
| Pre-operational control determinant (Pre-OCD) | Project planning and scheduling (PPS) | PPS1 | Clarity of the project scope | [5,30,40,42,43,48,72,107,122] |
| | | PPS2 | Accuracy of the Work Breakdown Structure (WBS) in capturing the project scope | |
| | | PPS3 | Comprehensiveness and detail of resource allocation for project activities | |
| | | PPS4 | Efficacy of estimated project duration, costs, and resources in meeting project goals | |
| | | PPS5 | Project milestones and scheduled activities in terms of clarity, relevance, accuracy, and realism | |
| | | PPS6 | The alignment between the established performance measurement metrics and the project scope and objectives | |
| In-operational control determinant (In-OCD) | Project progress tracking (PPT) | PPT1 | Frequency of physical inspections and reviews to track project progress on site | [5,30,40,41,43,82,105,122,123,124] |
| | | PPT2 | Extent of tracking budget expenditures and payments | |
| | | PPT3 | Frequency of tracking the project schedule activities and milestone completions | |
| | | PPT4 | Regular monitoring of project deliverables against requirements, specifications, and quality benchmarks | |
| | | PPT5 | Extent of tracking resource allocation, usage, and productivity throughout the project | |
| | | PPT6 | Efficacy of project tracking in updating project progress documentation and logs | |
| | Project performance analysis (PPA) | PPA1 | Comparing actual project trends to planned ones using techniques such as Earned Value Analysis (EVA) and assessing variance measures (cost variance, CV, and schedule variance, SV) | [2,5,30,40,43,48,72,108,111,112,113,115,118,122,123,125,126,127,128,129,130,131,132,133,134,135,136,137] |
| | | PPA2 | Forecasting project completion time and total cost | |
| | | PPA3 | Reviewing critical and near-critical path activities | |
| | | PPA4 | Conducting financial analysis and benefit–cost evaluation | |
| | | PPA5 | Accuracy of performance measurement and KPIs for detecting project deviations | |
| Post-operational control determinant (Post-OCD) | Project communication and reporting (PCR) | PCSR1 | Clarity of the communication plan for exchanging data and distributing information | [5,29,30,40,41,42,65,72,92,99,100,105,107,108,109,114,122,128,131,137] |
| | | PCSR2 | Frequency of updating project performance reports | |
| | | PCSR3 | The accessibility, clarity, and accuracy of project reports | |
| | | PCSR4 | Availability of real-time data for decision-making | |
| | Corrective actions (CAs) | CA1 | Frequency of conducting review meetings for project performance and corrective actions | [5,30,40,41,43,48,95,97,113,122,126,127,132,134,136,138] |
| | | CA2 | Responsiveness in resolving critical issues, such as scope creep, budget overruns, and schedule deviations | |
| | | CA3 | Frequency of applying resource optimisation in correcting the project pathway | |
| | | CA4 | Frequency of employing fast-tracking and schedule-crashing techniques | |
| Uncertainty operational control determinant (UOCD) | Change control (CC) | CC1 | The adherence to established change control procedures and policies | [5,28,29,30,43,72,107,122,139,140,141,142] |
| | | CC2 | Timeliness of change approvals or rejections, ensuring they align with project needs | |
| | | CC3 | The tracking and monitoring of change requests from submission to resolution | |
| | | CC4 | Efficacy of the change review and implementation process | |
| | Risk control (RC) | RC1 | Frequency of identifying potential risks and their root causes | [20,30,40,72,81,88,102,113,122,138,143,144,145] |
| | | RC2 | Adherence to risk monitoring and documentation, including risk registers and action plans | |
| | | RC3 | Allocation level of contingency and reserve funds for risk response | |
| | | RC4 | Efficacy of risk mitigation strategies in reducing or eliminating identified risks | |
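Items PPA1 and PPA2 refer to earned value variance measures and to forecasting completion cost and time. The Python sketch below applies the standard earned value relations (CV = EV − AC, SV = EV − PV, CPI = EV/AC, SPI = EV/PV, and EAC = BAC/CPI under the assumption that current cost efficiency persists). The class name `EarnedValueSnapshot` and the status-date figures are hypothetical. The duration forecast (planned duration divided by SPI) is a deliberately naive choice: because EV converges to PV at completion, SPI tends towards 1 even on late projects, which is why earned schedule methods are often preferred for time forecasting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EarnedValueSnapshot:
    """Earned value inputs at one status date (currency units are arbitrary)."""
    bac: float  # budget at completion
    pv: float   # planned value: budgeted cost of work scheduled
    ev: float   # earned value: budgeted cost of work performed
    ac: float   # actual cost of work performed

    @property
    def cv(self) -> float:
        """Cost variance; negative means over budget."""
        return self.ev - self.ac

    @property
    def sv(self) -> float:
        """Schedule variance; negative means behind schedule."""
        return self.ev - self.pv

    @property
    def cpi(self) -> float:
        """Cost performance index."""
        return self.ev / self.ac

    @property
    def spi(self) -> float:
        """Schedule performance index."""
        return self.ev / self.pv

    def eac(self) -> float:
        """Estimate at completion, assuming the current CPI persists."""
        return self.bac / self.cpi

    def forecast_duration(self, planned_duration: float) -> float:
        """Naive duration forecast: planned duration divided by SPI."""
        return planned_duration / self.spi


if __name__ == "__main__":
    # Hypothetical figures (SAR M) for a project planned to last 24 months.
    s = EarnedValueSnapshot(bac=1000.0, pv=500.0, ev=400.0, ac=500.0)
    print(f"CV={s.cv}, SV={s.sv}, CPI={s.cpi:.2f}, SPI={s.spi:.2f}")
    print(f"EAC={s.eac():.1f}, forecast duration={s.forecast_duration(24):.1f} months")
```

With these hypothetical inputs the project is both over budget and behind schedule (CV = SV = −100), and the cost forecast rises from a budget of 1000 to an estimate at completion of 1250.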

| Variable | Category | Number | Percentage (%) | Cumulative Percentage (%) |
|---|---|---|---|---|
| Years of experience | 11–15 years | 38 | 17.1 | 17.1 |
| | 16–20 years | 47 | 21.2 | 38.3 |
| | 20 and above | 76 | 34.2 | 72.5 |
| | 6–10 years | 32 | 14.4 | 86.9 |
| | Less than 6 years | 29 | 13.1 | 100.0 |
| Respondent’s position | Construction manager level | 26 | 11.7 | 11.7 |
| | Director and executive level | 8 | 3.6 | 15.3 |
| | Project manager level | 146 | 65.8 | 81.1 |
| | Contract manager | 2 | 0.9 | 82.0 |
| | Other | 36 | 16.2 | 98.2 |
| | Project controls manager | 4 | 1.8 | 100.0 |
| Project type | Commercial | 29 | 13.1 | 13.1 |
| | Industrial | 17 | 7.7 | 20.7 |
| | Infrastructure | 62 | 27.9 | 48.6 |
| | Residential | 68 | 30.6 | 79.3 |
| | Services | 46 | 20.7 | 100.0 |
| Project sector | Other | 2 | 0.9 | 0.9 |
| | Private | 103 | 46.4 | 47.3 |
| | Public | 117 | 52.7 | 100.0 |
| Organisation role | Client | 75 | 33.8 | 33.8 |
| | Consultant | 64 | 28.8 | 62.6 |
| | Contractor | 72 | 32.4 | 95.0 |
| | Sub-Contractor | 11 | 5.0 | 100.0 |
| Project delivery method | Design-Bid-Build (DBB) | 78 | 35.1 | 35.1 |
| | Design-Build (DB) | 39 | 17.6 | 52.7 |
| | Construction Manager at Risk (CMAR) | 8 | 3.6 | 56.3 |
| | Integrated Project Delivery (IPD) | 94 | 42.3 | 98.6 |
| | Other | 3 | 1.4 | 100.0 |
| Duration of project | 1 to 12 months | 46 | 20.7 | 20.7 |
| | 13 to 18 months | 42 | 18.9 | 39.6 |
| | 19 to 24 months | 46 | 20.7 | 60.4 |
| | 25 to 36 months | 68 | 30.6 | 91.0 |
| | 37 months and above | 20 | 9.0 | 100.0 |
| Project budget | SAR 100–499 M | 59 | 26.6 | 26.6 |
| | SAR 30–99.99 M | 36 | 16.2 | 42.8 |
| | SAR 500 M+ | 38 | 17.1 | 59.9 |
| | SAR 6–29.99 M | 43 | 19.4 | 79.3 |
| | <SAR 6 M | 46 | 20.7 | 100.0 |
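Each variable's counts in the respondent profile sum to 222, and every percentage is a count divided by that total. For years of experience, the categories are not listed in ascending order ("6–10 years" follows "20 and above"), so the cumulative column accumulates in the printed row order rather than by experience level. The Python sketch below, built around a hypothetical helper `frequency_table`, recomputes the percentage and cumulative-percentage columns from the raw counts as a quick consistency check.

```python
from typing import List, Tuple

Row = Tuple[str, int, float, float]


def frequency_table(counts: List[Tuple[str, int]]) -> List[Row]:
    """Return (category, n, %, cumulative %) rows, accumulating in the given order."""
    total = sum(n for _, n in counts)
    rows: List[Row] = []
    running = 0
    for category, n in counts:
        running += n
        rows.append((category, n,
                     round(100 * n / total, 1),
                     round(100 * running / total, 1)))
    return rows


# Years-of-experience counts, in the row order used in the table.
EXPERIENCE = [
    ("11–15 years", 38),
    ("16–20 years", 47),
    ("20 and above", 76),
    ("6–10 years", 32),
    ("Less than 6 years", 29),
]

if __name__ == "__main__":
    print("N =", sum(n for _, n in EXPERIENCE))
    for row in frequency_table(EXPERIENCE):
        print(row)
```

Reordering `EXPERIENCE` by ascending experience and rerunning the function would give the cumulative distribution in its natural order, without changing the individual percentages.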

| Construct | Code/Item | Factor Loading | Composite Reliability | Cronbach’s Alpha | AVE |
|---|---|---|---|---|---|
| Project performance (PP) | PP1 | 0.847 | 0.806 | 0.808 | 0.721 |
| | PP2 | 0.870 | | | |
| | PP3 | 0.829 | | | |
| Organisational PCS determinants (OPCSD) | TMS1 | 0.723 | 0.948 | 0.950 | 0.618 |
| | TMS2 | 0.806 | | | |
| | TMS3 | 0.832 | | | |
| | TMS4 | 0.852 | | | |
| | OGP1 | 0.745 | | | |
| | OGP2 | 0.757 | | | |
| | OGP3 | 0.710 | | | |
| | OGP4 | 0.722 | | | |
| | OGP5 | 0.759 | | | |
| | SEC1 | 0.764 | | | |
| | SEC2 | 0.818 | | | |
| | SEC3 | 0.854 | | | |
| | SEC4 | 0.852 | | | |
| Human PCS determinants (HPCSD) | TWC1 | 0.847 | 0.940 | 0.941 | 0.705 |
| | TWC2 | 0.857 | | | |
| | TWC3 | 0.856 | | | |
| | TWC4 | 0.790 | | | |
| | LTS1 | 0.868 | | | |
| | LTS2 | 0.881 | | | |
| | LTS3 | 0.830 | | | |
| | LTS4 | 0.780 | | | |
| Technological PCS determinants (TPCSD) | TC1 | 0.883 | 0.923 | 0.927 | 0.764 |
| | TC2 | 0.904 | | | |
| | TC3 | 0.851 | | | |
| | TC4 | 0.859 | | | |
| | TC5 | 0.874 | | | |
| Pre-operational control determinants (Pre-OCD) | PPS1 | 0.824 | 0.925 | 0.926 | 0.727 |
| | PPS2 | 0.859 | | | |
| | PPS3 | 0.851 | | | |
| | PPS4 | 0.817 | | | |
| | PPS5 | 0.879 | | | |
| | PPS6 | 0.883 | | | |
| In-operational control determinants (In-OCD) | PPT1 | 0.825 | 0.949 | 0.949 | 0.670 |
| | PPT2 | 0.819 | | | |
| | PPT3 | 0.821 | | | |
| | PPT4 | 0.844 | | | |
| | PPT5 | 0.806 | | | |
| | PPT6 | 0.797 | | | |
| | PPA1 | 0.806 | | | |
| | PPA2 | 0.819 | | | |
| | PPA3 | 0.833 | | | |
| | PPA4 | 0.809 | | | |
| | PPA5 | 0.825 | | | |
| Post-operational control determinants (Post-OCD) | PCSR1 | 0.845 | 0.935 | 0.936 | 0.687 |
| | PCSR2 | 0.853 | | | |
| | PCSR3 | 0.862 | | | |
| | PCSR4 | 0.842 | | | |
| | CA1 | 0.806 | | | |
| | CA2 | 0.821 | | | |
| | CA3 | 0.836 | | | |