Large Language Models for Construction Risk Classification: A Comparative Study

Abstract

1. Introduction

2. Literature Review

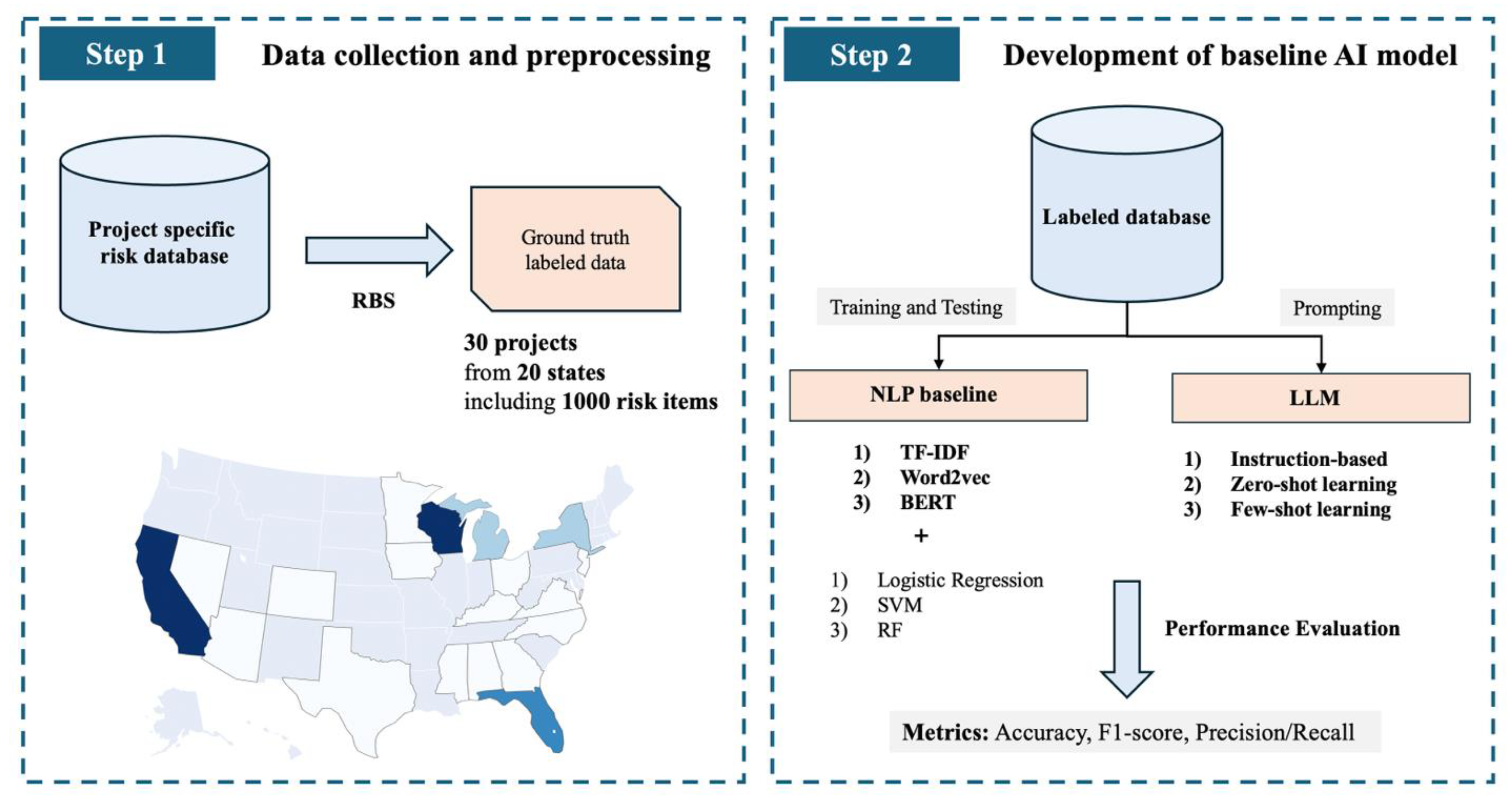

3. Research Methodology

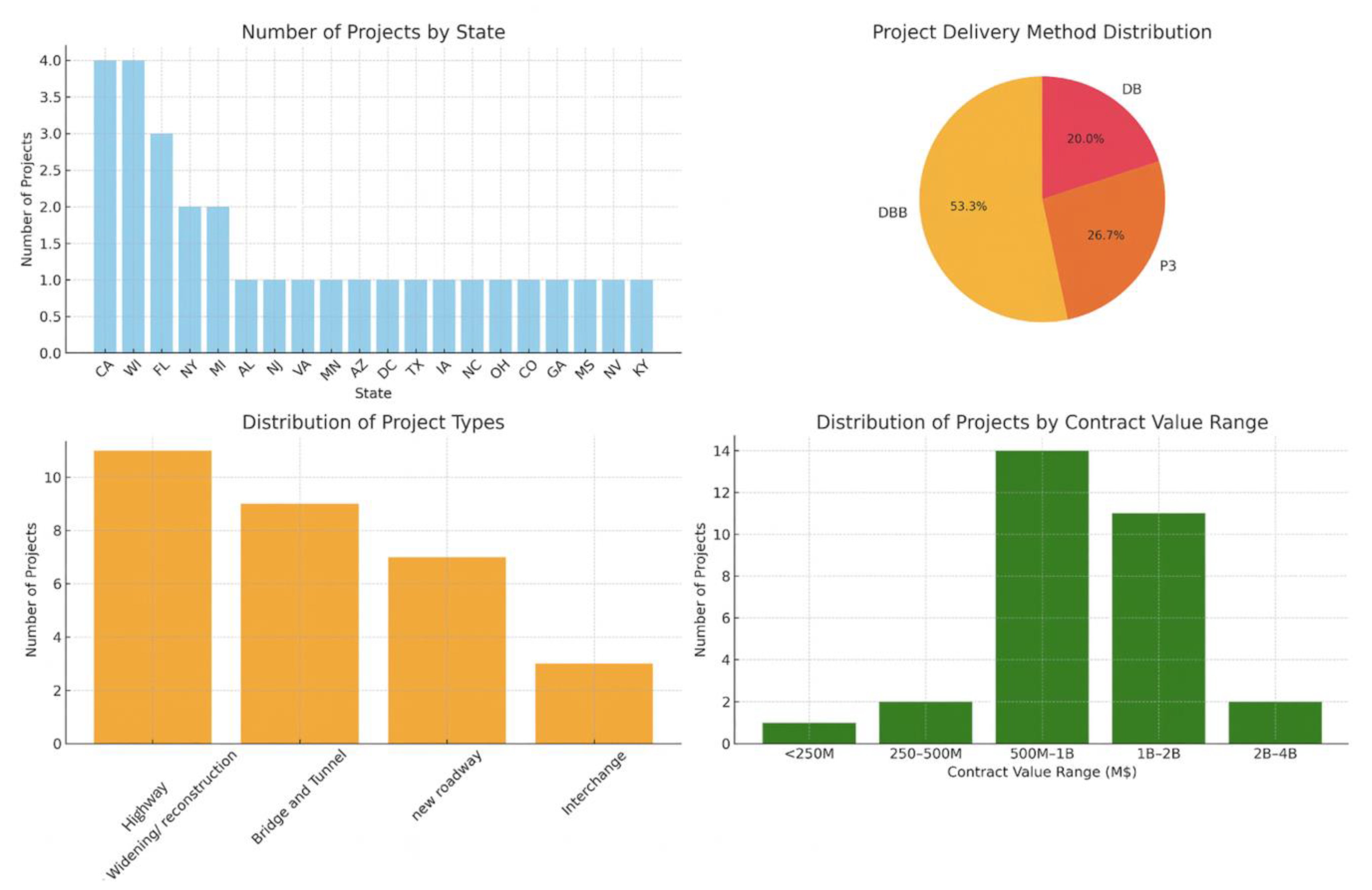

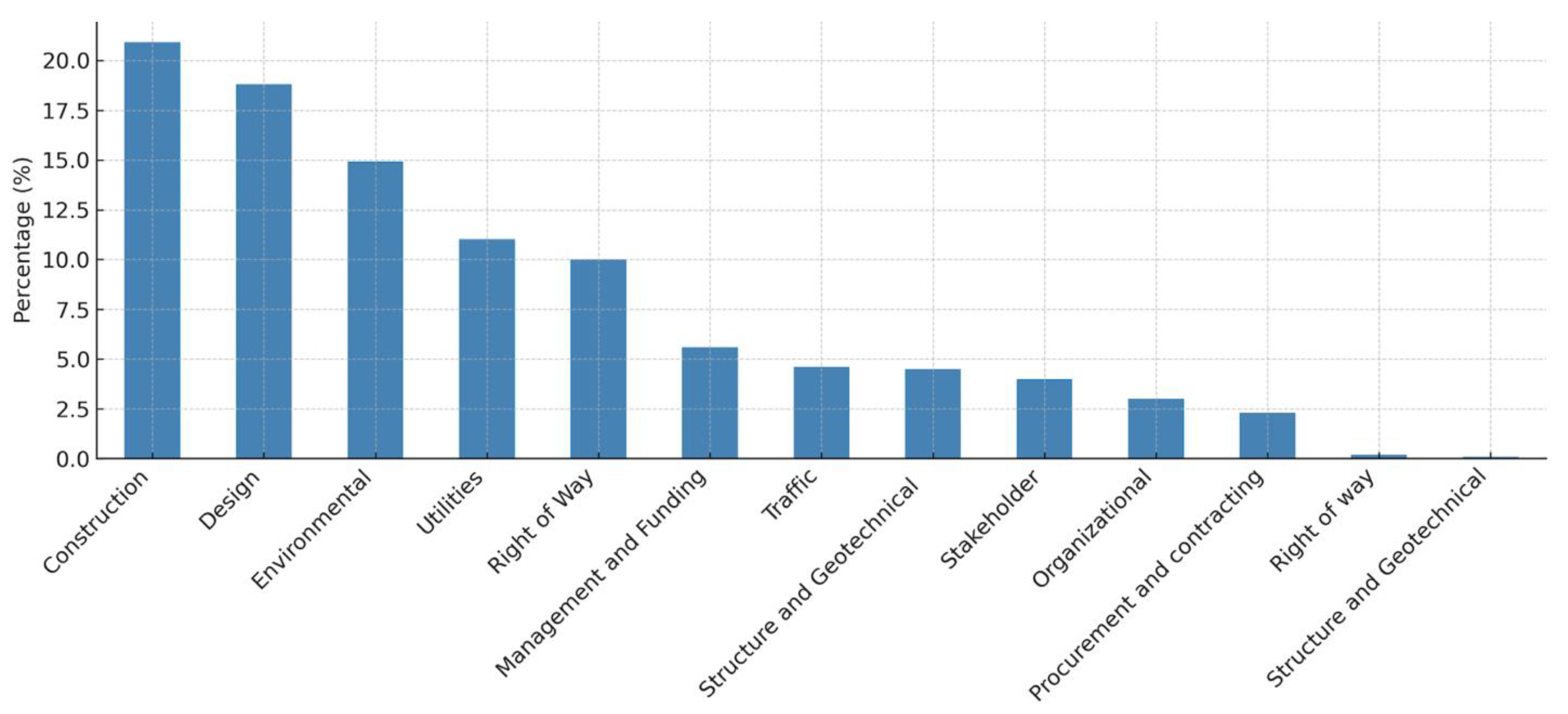

3.1. Data Collection and Preprocessing

3.2. Benchmarking NLP and LLM Approaches for Risk Classification

$$\text{TF-IDF}(t, d) = \frac{f_{t,d}}{N} \times \log\frac{k}{n_t}$$

where

- $f_{t,d}$: Number of occurrences of the term t in the document.

- $N$: Total number of terms in the document.

- $k$: Total number of documents.

- $n_t$: Number of documents containing the term t.
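
The definitions above describe the standard TF-IDF weighting: the term's count divided by N, multiplied by the logarithm of k over the number of documents containing the term. The following is a minimal sketch of that reading in plain Python, not the study's implementation. The function name `tf_idf`, the whitespace tokenizer, and the toy risk statements are illustrative assumptions.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = (occurrences of the term in the document) / N
    idf = log(k / number of documents containing the term)
    """
    # Assumption: lowercase whitespace tokenization, no stop-word removal.
    tokenized = [doc.lower().split() for doc in documents]
    k = len(tokenized)
    # Count, for each term, how many documents contain it at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        n_terms = len(tokens)  # N: total number of terms in this document
        weights.append({
            term: (count / n_terms) * math.log(k / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Toy risk statements (illustrative, not taken from the study's dataset).
risks = [
    "rock excavation delay",
    "utility relocation delay",
    "design change",
]
scores = tf_idf(risks)
print(scores[0])
```

In this toy corpus "delay" appears in two of the three statements, so it receives a lower weight than "rock", which appears in only one. With this unsmoothed form, a term present in every document gets a weight of zero; library implementations often apply smoothing to avoid that.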

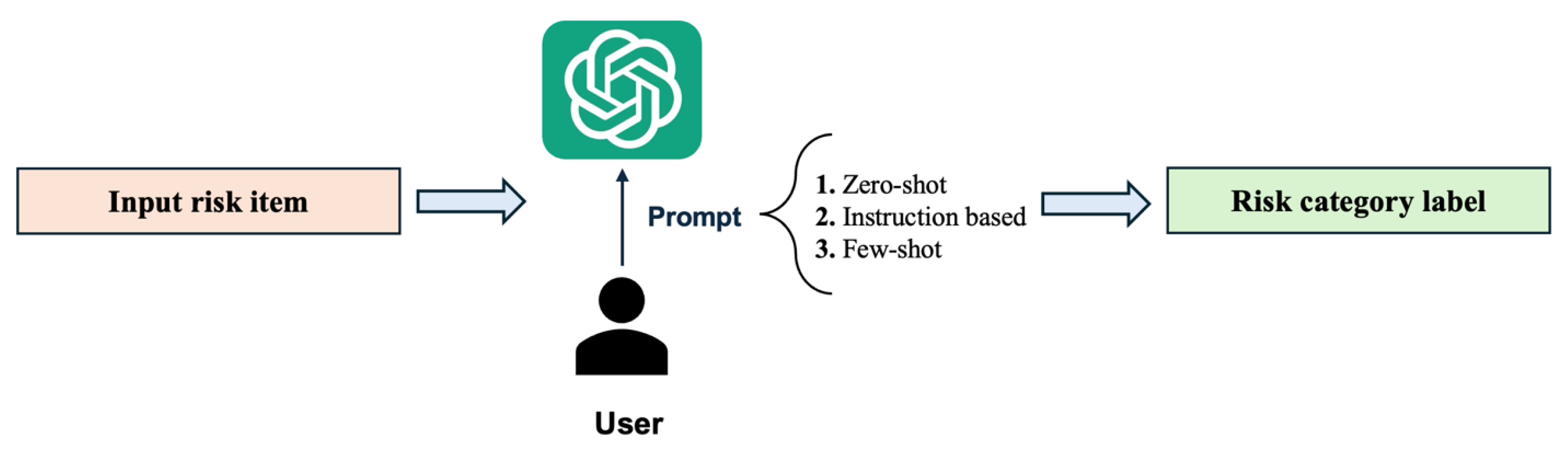

3.3. LLMs: Prompt Engineering Approaches

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Taroun, A. Towards a better modelling and assessment of construction risk: Insights from a literature review. Int. J. Proj. Manag. 2014, 32, 101–115.

2. Lafhaj, Z.; Rebai, S.; AlBalkhy, W.; Hamdi, O.; Mossman, A.; Alves Da Costa, A. Complexity in construction projects: A literature review. Buildings 2024, 14, 680.

3. Osei-Kyei, R.; Jin, X.; Nnaji, C.; Akomea-Frimpong, I.; Wuni, I.Y. Review of risk management studies in public-private partnerships: A scientometric analysis. Int. J. Constr. Manag. 2023, 23, 2419–2430.

4. Siraj, N.B.; Fayek, A.R. Risk identification and common risks in construction: Literature review and content analysis. J. Constr. Eng. Manag. 2019, 145, 03119004.

5. Al-Mhdawi, M.K.S.; O’Connor, A.; Qazi, A.; Rahimian, F.; Dacre, N. Review of studies on risk factors in critical infrastructure projects from 2011 to 2023. Smart Sustain. Built Environ. 2025, 14, 342–376.

6. Arijeloye, B.T.; Ramabodu, M.S.; Chikafalimani, S.H.P. Application of Fuzzy Risk Allocation Decision Model for Improving the Nigerian Public–Private Partnership Mass Housing Project Procurement. Buildings 2025, 15, 2866.

7. Alba-Rodríguez, M.D.; Lucas-Ruiz, V.; Marrero, M. Systematic Methodology for Estimating the Social Dimension of Construction Projects—Assessing Health and Safety Risks Based on Project Budget Analysis. Buildings 2025, 15, 2313.

8. El-Sayegh, S.M.; Manjikian, S.; Ibrahim, A.; Abouelyousr, A.; Jabbour, R. Risk identification and assessment in sustainable construction projects in the UAE. Int. J. Constr. Manag. 2021, 21, 327–336.

9. Erfani, A.; Ma, Z.; Cui, Q.; Baecher, G.B. Ex post project risk assessment: Method and empirical study. J. Constr. Eng. Manag. 2023, 149, 04022174.

10. Dicks, E.P.; Molenaar, K.R. Causes of Incomplete Risk Identification in Major Transportation Engineering and Construction Projects. Transp. Res. Rec. 2024, 2679, 619–628.

11. Bepari, M.; Narkhede, B.E.; Raut, R.D. A comparative study of project risk management with risk breakdown structure (RBS): A case of commercial construction in India. Int. J. Constr. Manag. 2024, 24, 673–682.

12. Serpell, A.; Ferrada, X.; Rubio, L.; Arauzo, S. Evaluating risk management practices in construction organizations. Procedia-Soc. Behav. Sci. 2015, 194, 201–210.

13. Yousri, E.; Sayed, A.E.B.; Farag, M.A.; Abdelalim, A.M. Risk identification of building construction projects in Egypt. Buildings 2023, 13, 1084.

14. Bahamid, R.A.; Doh, S.I.; Khoiry, M.A.; Kassem, M.A.; Al-Sharafi, M.A. The current risk management practices and knowledge in the construction industry. Buildings 2022, 12, 1016.

15. Tavakolan, M.; Mohammadi, A. Risk management workshop application: A case study of Ahwaz Urban Railway project. Int. J. Constr. Manag. 2018, 18, 260–274.

16. Goh, C.S.; Abdul-Rahman, H.; Abdul Samad, Z. Applying risk management workshop for a public construction project: Case study. J. Constr. Eng. Manag. 2013, 139, 572–580.

17. Erfani, A.; Cui, Q. Predictive risk modeling for major transportation projects using historical data. Autom. Constr. 2022, 139, 104301.

18. Gao, N.; Touran, A.; Wang, Q.; Beauchamp, N. Construction risk identification using a multi-sentence context-aware method. Autom. Constr. 2024, 164, 105466.

19. Diao, C.; Liang, R.; Sharma, D.; Cui, Q. Litigation risk detection using Twitter data. J. Leg. Aff. Disput. Resolut. Eng. Constr. 2020, 12, 04519047.

20. Pham, H.T.; Han, S. Natural language processing with multitask classification for semantic prediction of risk-handling actions in construction contracts. J. Comput. Civ. Eng. 2023, 37, 04023027.

21. Wong, S.; Zheng, C.; Su, X.; Tang, Y. Construction contract risk identification based on knowledge-augmented language models. Comput. Ind. 2024, 157, 104082.

22. Kazemi, M.H.; Alvanchi, A. Application of NLP-based models in automated detection of risky contract statements written in complex script system. Expert Syst. Appl. 2025, 259, 125296.

23. Kim, J.; Kwon, B.; Lee, J.; Mun, D. Inherent risks identification in a contract document through automated rule generation. Autom. Constr. 2025, 172, 106044.

24. Jallan, Y.; Ashuri, B. Text mining of the securities and exchange commission financial filings of publicly traded construction firms using deep learning to identify and assess risk. J. Constr. Eng. Manag. 2020, 146, 04020137.

25. Erfani, A.; Cui, Q. Natural language processing application in construction domain: An integrative review and algorithms comparison. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2021, Orlando, FL, USA, 12–14 September 2021; Available online: https://ascelibrary.org/doi/10.1061/9780784483893.004 (accessed on 11 September 2025).

26. Mohamed, M.A.H.; Al-Mhdawi, M.K.S.; Ojiako, U.; Dacre, N.; Qazi, A.; Rahimian, F. Generative AI in construction risk management: A bibliometric analysis of the associated benefits and risks. Urban. Sustain. Soc. 2025, 2, 196–228.

27. Project Management Institute (PMI). The Project Management Body of Knowledge (PMBOK Guide), 5th ed.; Project Management Institute: Newtown Square, PA, USA, 2013.

28. Zhao, X. Construction risk management research: Intellectual structure and emerging themes. Int. J. Constr. Manag. 2024, 24, 540–550.

29. Al-Mhdawi, M.K.S.; Brito, M.; Onggo, B.S.; Qazi, A.; O’Connor, A.; Namian, M. Construction risk management in Iraq during the COVID-19 pandemic: Challenges to implementation and efficacy of practices. J. Constr. Eng. Manag. 2023, 149, 04023086.

30. Su, G.; Khallaf, R. Research on the influence of risk on construction project performance: A systematic review. Sustainability 2022, 14, 6412.

31. Hanna, A.S.; Thomas, G.; Swanson, J.R. Construction risk identification and allocation: Cooperative approach. J. Constr. Eng. Manag. 2013, 139, 1098–1107.

32. Dicks, E.P.; Molenaar, K.R. Analysis of Washington State Department of Transportation risks. Transp. Res. Rec. 2023, 2677, 1690–1700.

33. Erfani, A.; Cui, Q.; Baecher, G.; Kwak, Y.H. Data-driven approach to risk identification for major transportation projects: A common risk breakdown structure. IEEE Trans. Eng. Manag. 2023, 71, 6830–6841.

34. Duijm, N.J. Recommendations on the use and design of risk matrices. Saf. Sci. 2015, 76, 21–31.

35. Pan, Y.; Zhang, L. Roles of artificial intelligence in construction engineering and management: A critical review and future trends. Autom. Constr. 2021, 122, 103517.

36. Abioye, S.O.; Oyedele, L.O.; Akanbi, L.; Ajayi, A.; Delgado, J.M.D.; Bilal, M.; Ahmed, A. Artificial intelligence in the construction industry: A review of present status, opportunities and future challenges. J. Build. Eng. 2021, 44, 103299.

37. Erfani, A.; Shayesteh, N.; Adnan, T. Data-augmented explainable AI for pavement roughness prediction. Autom. Constr. 2025, 176, 106307.

38. Tian, K.; Zhu, Z.; Mbachu, J.; Moorhead, M.; Ghanbaripour, A. Artificial intelligence in construction risk management: A decade of developments, challenges, and integration pathways. J. Risk Res. 2025, 1–33.

39. Chung, S.; Kim, J.; Baik, J.; Chi, S.; Kim, D.Y. Identifying issues in international construction projects from news text using pre-trained models and clustering. Autom. Constr. 2024, 168, 105875.

40. Erfani, A.; Cui, Q.; Cavanaugh, I. An empirical analysis of risk similarity among major transportation projects using natural language processing. J. Constr. Eng. Manag. 2021, 147, 04021175.

41. Zhang, F. A hybrid structured deep neural network with Word2Vec for construction accident causes classification. Int. J. Constr. Manag. 2022, 22, 1120–1140.

42. Ye, Y.X.; Shan, M.; Gao, X.; Li, Q.; Zhang, H. Examining causes of disputes in subcontracting litigation cases using text mining and natural language processing techniques. Int. J. Constr. Manag. 2024, 24, 1617–1629.

43. Erfani, A.; Mansouri, A. Applications of Multimodal Large Language Models in Construction Industry. 2025. Available online: https://ssrn.com/abstract=5278215 (accessed on 11 September 2025).

44. Martin, H.; James, J.; Chadee, A. Exploring Large Language Model AI tools in construction project risk assessment: ChatGPT limitations in risk identification, mitigation strategies, and user experience. J. Constr. Eng. Manag. 2025, 151, 04025119.

45. Chen, G.; Alsharef, A.; Ovid, A.; Albert, A.; Jaselskis, E. Meet2Mitigate: An LLM-powered framework for real-time issue identification and mitigation from construction meeting discourse. Adv. Eng. Inform. 2025, 64, 103068.

46. Jeon, K.; Lee, G. Hybrid large language model approach for prompt and sensitive defect management: A comparative analysis of hybrid, non-hybrid, and GraphRAG approaches. Adv. Eng. Inform. 2025, 64, 103076.

47. Yao, D.; de Soto, B.G. Enhancing cyber risk identification in the construction industry using language models. Autom. Constr. 2024, 165, 105565.

48. Dikmen, I.; Eken, G.; Erol, H.; Birgonul, M.T. Automated construction contract analysis for risk and responsibility assessment using natural language processing and machine learning. Comput. Ind. 2025, 166, 104251.

49. Prieto, S.A.; Mengiste, E.T.; García de Soto, B. Investigating the use of ChatGPT for the scheduling of construction projects. Buildings 2023, 13, 857.

50. Tsai, W.L.; Le, P.L.; Ho, W.F.; Chi, N.W.; Lin, J.J.; Tang, S.; Hsieh, S.H. Construction safety inspection with contrastive language-image pre-training (CLIP) image captioning and attention. Autom. Constr. 2025, 169, 105863.

51. Gao, Y.; Gan, Y.; Chen, Y.; Chen, Y. Application of large language models to intelligently analyze long construction contract texts. Constr. Manag. Econ. 2025, 43, 226–242.

52. Liu, C.Y.; Chou, J.S. Automated legal consulting in construction procurement using metaheuristically optimized large language models. Autom. Constr. 2025, 170, 105891.

53. He, C.; He, W.; Liu, M.; Leng, S.; Wei, S. Enriched construction regulation inquiry responses: A hybrid search approach for large language models. J. Manag. Eng. 2025, 41, 04025001.

54. Qin, S.; Guan, H.; Liao, W.; Gu, Y.; Zheng, Z.; Xue, H.; Lu, X. Intelligent design and optimization system for shear wall structures based on large language models and generative artificial intelligence. J. Build. Eng. 2024, 95, 109996.

55. Sonkor, M.S.; García de Soto, B. Using ChatGPT in construction projects: Unveiling its cybersecurity risks through a bibliometric analysis. Int. J. Constr. Manag. 2025, 25, 741–749.

56. Nyqvist, R.; Peltokorpi, A.; Seppänen, O. Can ChatGPT exceed humans in construction project risk management? Eng. Constr. Archit. Manag. 2024, 31, 223–243.

57. Aladağ, H. Assessing the accuracy of ChatGPT use for risk management in construction projects. Sustainability 2023, 15, 16071.

58. Isah, M.A.; Kim, B.S. Question-answering system powered by knowledge graph and generative pretrained transformer to support risk identification in tunnel projects. J. Constr. Eng. Manag. 2025, 151, 04024193.

59. Johnson, S.J.; Murty, M.R.; Navakanth, I. A detailed review on word embedding techniques with emphasis on Word2Vec. Multimed. Tools Appl. 2024, 83, 37979–38007.

60. Zhang, K.; Erfani, A.; Beydoun, O.; Cui, Q. Procurement benchmarks for major transportation projects. Transp. Res. Rec. 2022, 2676, 363–376.

61. You, Z.; Wu, C. A framework for data-driven informatization of the construction company. Adv. Eng. Inform. 2019, 39, 269–277.

62. AlTalhoni, A.; Alwashah, Z.; Liu, H.; Abudayyeh, O.; Kwigizile, V.; Kirkpatrick, K. Data-driven identification of key pricing factors in highway construction cost estimation during economic volatility. Int. J. Constr. Manag. 2025, 1–16.

63. Abu-Mahfouz, E.; Al-Dahidi, S.; Gharaibeh, E.; Alahmer, A. A novel feature engineering-based hybrid approach for precise construction cost estimation using fuzzy-AHP and artificial neural networks. Int. J. Constr. Manag. 2025, 1–11.

64. Paik, Y.; Chung, F.; Ashuri, B. Preliminary cost estimation of pavement maintenance projects through machine learning: Emphasis on trees algorithms. J. Manag. Eng. 2025, 41, 04025027.

65. ElAlem, M.A.; Mahdi, I.M.; Mohamadien, H.A.; Hosny, S. Forecasting scope creep in Egyptian construction projects: An evaluation using artificial neural network (ANN) and random forest models. Int. J. Constr. Manag. 2025, 1–20.

66. Adnan, T.; Erfani, A.; Cui, Q. Paving equity: Unveiling socioeconomic patterns in pavement conditions using data mining. J. Manag. Eng. 2025, 41, 04025041.

67. Zeberga, M.S.; Haaskjold, H.; Hussein, B.; Lædre, O.; Wondimu, P.A. Artificial intelligence–driven contractual conflict management in the AEC industry: Mapping benefits, practice, readiness, and ethical implementation strategies. J. Manag. Eng. 2025, 41, 04025016.

68. Gondia, A.; Siam, A.; El-Dakhakhni, W.; Nassar, A.H. Machine learning algorithms for construction projects delay risk prediction. J. Constr. Eng. Manag. 2020, 146, 04019085.

69. Moussa, A.; Ezzeldin, M.; El-Dakhakhni, W. Machine learning and optimization strategies for infrastructure projects risk management. Constr. Manag. Econ. 2025, 43, 557–582.

70. Shamshiri, A.; Ryu, K.R.; Park, J.Y. Text mining and natural language processing in construction. Autom. Constr. 2024, 158, 105200.

71. Wu, C.; Li, X.; Guo, Y.; Wang, J.; Ren, Z.; Wang, M.; Yang, Z. Natural language processing for smart construction: Current status and future directions. Autom. Constr. 2022, 134, 104059.

72. Ding, Y.; Ma, J.; Luo, X. Applications of natural language processing in construction. Autom. Constr. 2022, 136, 104169.

73. Erfani, A.; Hickey, P.J.; Cui, Q. Likeability versus competence dilemma: Text mining approach using LinkedIn data. J. Manag. Eng. 2023, 39, 04023013.

74. Cheng, M.Y.; Kusoemo, D.; Gosno, R.A. Text mining-based construction site accident classification using hybrid supervised machine learning. Autom. Constr. 2020, 118, 103265.

75. Patil, R.; Boit, S.; Gudivada, V.; Nandigam, J. A survey of text representation and embedding techniques in NLP. IEEE Access 2023, 11, 36120–36146.

76. Gardazi, N.M.; Daud, A.; Malik, M.K.; Bukhari, A.; Alsahfi, T.; Alshemaimri, B. BERT applications in natural language processing: A review. Artif. Intell. Rev. 2025, 58, 166.

77. Li, L.; Erfani, A.; Wang, Y.; Cui, Q. Anatomy into the battle of supporting or opposing reopening amid the COVID-19 pandemic on Twitter: A temporal and spatial analysis. PLoS ONE 2021, 16, e0254359.

78. Mohammadi, P.; Rashidi, A.; Malekzadeh, M.; Tiwari, S. Evaluating various machine learning algorithms for automated inspection of culverts. Eng. Anal. Bound. Elem. 2023, 148, 366–375.

79. Mansouri, A.; Erfani, A. Machine learning prediction of urban heat island severity in the Midwestern United States. Sustainability 2025, 17, 6193.

80. Vujović, Ž. Classification model evaluation metrics. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 599–606.

81. Zheng, J.; Fischer, M. Dynamic prompt-based virtual assistant framework for BIM information search. Autom. Constr. 2023, 155, 105067.

82. Yong, G.; Jeon, K.; Gil, D.; Lee, G. Prompt engineering for zero-shot and few-shot defect detection and classification using a visual-language pretrained model. Comput.-Aided Civ. Infrastruct. Eng. 2023, 38, 1536–1554.

83. Sun, Y.; Gu, Z.; Yang, S.B. Probing vision and language models for construction waste material recognition. Autom. Constr. 2024, 166, 105629.

84. Uhm, M.; Kim, J.; Ahn, S.; Jeong, H.; Kim, H. Effectiveness of retrieval augmented generation-based large language models for generating construction safety information. Autom. Constr. 2025, 170, 105926.

85. Jiang, G.; Ma, Z.; Zhang, L.; Chen, J. Prompt engineering to inform large language model in automated building energy modeling. Energy 2025, 316, 134548.

86. Erfani, A.; Frias-Martinez, V. A fairness assessment of mobility-based COVID-19 case prediction models. PLoS ONE 2023, 18, e0292090.

| Reference | Data Source | Methodology | Key Findings |
|---|---|---|---|
| Dikmen et al. (2025) [48] | Contracts | Word2Vec, GloVe (NLP: embedding techniques); BERT (NLP: Transformers) | Detects risk exposures in contract clauses |
| Gao et al. (2024) [18] | News articles | BERT (NLP: Transformers) | Identifies and extracts risk-related sentences from news articles |
| Erfani and Cui (2022) [17] | Risk registers | Word2Vec (NLP: embedding technique) | Predictive models can offer an initial step by capturing more than 50% of risks |
| Diao et al. (2020) [19] | Social media | Bayesian network | Estimates litigation risk using Twitter data |
| Jallan and Ashuri (2020) [24] | Financial reports | fastText (NLP: embedding technique) | Identifies 18 categories of risk using 10-K filings |

| Project-Specific Risk Item | Assigned RBS Level 1 |
|---|---|
| Changes to structural element design required | Design |
| Rock excavation in I-285/SR400 Interchange | Structure and geotechnical |
| Opportunity to use existing I-15 pavement for traffic detours because of the shifting of I-15 to the east | Traffic |
| Additional Right of Way may need to be acquired in east section | Right of Way |
| Section 4(f) resources affected National Environmental Policy Act Review | Environmental |

| Approach | Prompt |
|---|---|
| Zero-shot | You are a risk management expert. Given the name of a construction project risk, assign it to the most appropriate high-level category from the list below. Return only one category name from this list: construction, design, environmental, utilities, right of way, management and funding, traffic, stakeholder, organizational, and procurement and contracting. |
| Instruction | You are a construction risk classification expert. Your task is to assign the following unstructured risk statement to the most appropriate high-level risk category, based on the definitions below: - Construction: Issues related to construction access, safety, materials, subcontractor performance, buried objects, construction methods, or weather-related impacts. - Design: Problems involving design changes, delays, incompleteness, exceptions, or esthetic concerns. - Environmental: Risks related to permitting, NEPA processes, endangered species, hazardous materials, water/air quality, or archeological constraints. - Utilities: Issues with utility coordination, conflicts, requirements, relocation, or funding gaps. - Right of Way: Challenges acquiring or relocating right of way, railroad access, or right-of-way planning. - Management and Funding: Delays in decisions, scope changes, cash flow problems, economic conditions, labor disruptions, or force majeure. - Traffic: Risks involving traffic growth, tolling, mobility impacts, land use, or pedestrian/bicycle access. - Stakeholder: Public opposition, stakeholder changes, late requests, or communication breakdowns with external parties. - Organizational: Internal changes in leadership, policy, resources, or organizational priorities. - Procurement and contracting: Delays or disputes related to procurement methods, contract terms, or change orders. - Structure and geotechnical: Issues involving excavation, soil/geotech conditions, structural vibration, or foundation design. Classify the following risk statement into the most appropriate category from the list above. Return only the category name. |
| Few-shot | You are a risk management expert. Given the name of a construction project risk, assign it to the most appropriate high-level category. Use the following examples to guide your reasoning. Examples: Risk Name: “developer schedule exposes owner to risk of unsubstantiated schedule delays” Category: Construction Risk Name: “cost savings opportunity for redecking bridges” Category: Design Risk Name: “additional sound walls required due to new development” Category: Environmental Risk Name: “damage to unknown utilities during construction” Category: Utilities Risk Name: “delay in right of way document internal approval process” Category: Right Of Way Risk Name: “labor strike” Category: Management And Funding Risk Name: “coordination issues between DB and tolling contractors” Category: Traffic Risk Name: “timely railroad collaboration (railroad undertaking own construction scope)” Category: Stakeholder Risk Name: “ongoing involvement of owner staff” Category: Organizational Risk Name: “management experience with alternative procurement delivery” Category: Procurement And Contracting Risk Name: “rock excavation” Category: Structure And Geotechnical Now, classify the following risk. |
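
The prompts in the table above differ mainly in what is placed before the risk statement: nothing beyond the task (zero-shot), category definitions (instruction), or labeled examples (few-shot). The following is a minimal sketch of how the zero-shot and few-shot variants might be assembled programmatically. It is not the authors' code. The `role`/`content` message layout is an assumed chat-style convention, and the helper names `build_messages` and `normalize_label` are hypothetical. No model is called here. The three examples are taken from the few-shot prompt in the table.

```python
# Category names as they appear in the instruction and few-shot prompts.
CATEGORIES = [
    "Construction", "Design", "Environmental", "Utilities", "Right of Way",
    "Management and Funding", "Traffic", "Stakeholder", "Organizational",
    "Procurement and Contracting", "Structure and Geotechnical",
]

SYSTEM = "You are a risk management expert."

# A subset of the labeled examples from the few-shot prompt.
FEW_SHOT_EXAMPLES = [
    ("damage to unknown utilities during construction", "Utilities"),
    ("labor strike", "Management And Funding"),
    ("rock excavation", "Structure And Geotechnical"),
]


def build_messages(risk_name, examples=()):
    """Build a chat-style message list; with no examples this is zero-shot."""
    lines = [
        "Given the name of a construction project risk, assign it to the "
        "most appropriate high-level category. Return only the category name."
    ]
    if examples:
        lines.append("Examples:")
        for name, category in examples:
            lines.append(f'Risk Name: "{name}" Category: {category}')
        lines.append("Now, classify the following risk.")
    lines.append(f'Risk Name: "{risk_name}"')
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": "\n".join(lines)},
    ]


def normalize_label(raw, categories=CATEGORIES):
    """Map a model reply onto a known category, or None if nothing matches."""
    cleaned = raw.strip().strip(".").lower()
    for category in categories:
        if cleaned == category.lower():
            return category
    return None


messages = build_messages("Rock excavation in I-285/SR400 Interchange",
                          FEW_SHOT_EXAMPLES)
print(messages[1]["content"])
```

Normalizing the reply before scoring is a design choice worth noting: the few-shot examples capitalize labels differently ("Right Of Way") from the instruction prompt ("Right of Way"), so a case-insensitive match avoids counting a correct answer as an error.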

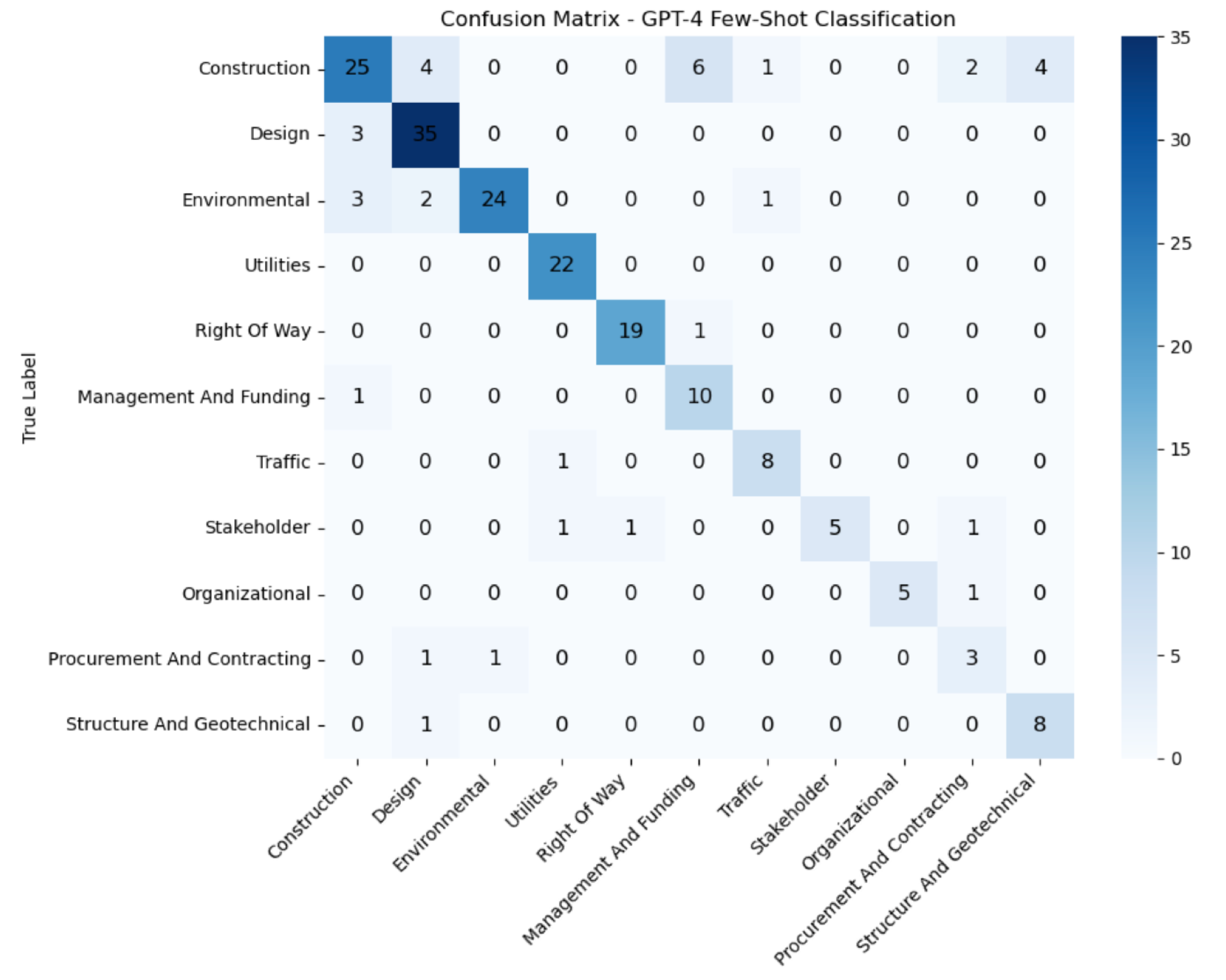

| Approach | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| TF-IDF (LR) | 0.77 | 0.82 | 0.77 | 0.73 |
| TF-IDF (SVM) | 0.80 | 0.86 | 0.80 | 0.78 |
| TF-IDF (RF) | 0.83 | 0.87 | 0.83 | 0.83 |
| Word2Vec (LR) | 0.83 | 0.85 | 0.83 | 0.83 |
| Word2Vec (SVM) | 0.78 | 0.84 | 0.78 | 0.76 |
| Word2Vec (RF) | 0.73 | 0.73 | 0.73 | 0.70 |
| BERT (LR) | 0.82 | 0.82 | 0.82 | 0.81 |
| BERT (SVM) | 0.86 | 0.88 | 0.86 | 0.86 |
| BERT (RF) | 0.80 | 0.83 | 0.80 | 0.77 |
| GPT (Zero-shot) | 0.79 | 0.82 | 0.79 | 0.79 |
| GPT (Instruction) | 0.82 | 0.84 | 0.82 | 0.82 |
| GPT (Few-shot) | 0.81 | 0.86 | 0.81 | 0.81 |
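
In the table above, Recall equals Accuracy in every row. That pattern is what support-weighted averaging across classes produces, although the averaging scheme is not stated in this section, so treat it as an assumption. The sketch below computes the four reported metrics under that assumption in plain Python. The function name `weighted_metrics` and the toy labels are illustrative and are not drawn from the study's data.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1."""
    total = len(y_true)
    support = Counter(y_true)      # true instances per class
    predicted = Counter(y_pred)    # predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    precision = recall = f1 = 0.0
    for label, n in support.items():
        p = correct[label] / predicted[label] if predicted[label] else 0.0
        r = correct[label] / n
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = n / total         # class share of the true labels
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    accuracy = sum(correct.values()) / total
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels (illustrative only).
y_true = ["Design", "Design", "Traffic", "Utilities", "Utilities", "Utilities"]
y_pred = ["Design", "Traffic", "Traffic", "Utilities", "Utilities", "Design"]
metrics = weighted_metrics(y_true, y_pred)
print(metrics)
```

Weighted recall reduces to accuracy because each class's recall is multiplied by its share of the true labels, which leaves the total number of correct predictions divided by the total number of samples. Weighted precision and F1 do not simplify this way, so they can differ from accuracy, as they do in the table.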

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Erfani, A.; Khanjar, H. Large Language Models for Construction Risk Classification: A Comparative Study. Buildings 2025, 15, 3379. https://doi.org/10.3390/buildings15183379
