1. Introduction

Eurostat incidence-rate data for civil-engineering operations from 2013 to 2022 reveal that construction remains a high-risk business in Europe (see Figure 1, [1]). Safety performance has undergone significant changes despite COVID-19 shutdowns.

Figure 1 compares the EU-27 average with Austria, Germany, and Italy, chosen for their significant economic and cultural links to South Tyrol’s German-speaking community and for their large construction projects. Denser lines represent Non-Lethal (NL) accidents, while spaced ones indicate Lethal (L) accidents. The graph shows the EU-27 in blue, Italy in green, Austria in red, and Germany in black. In the EU-27, deaths declined from 121 in 2018 to 102 in 2020 (approximately −16%), and the fatal-injury rate dropped from 40.9 to 39.9 per 100,000 workers. After sites reopened in 2021, the figures rebounded to 150 accidents and 42.6 per 100,000 workers. German construction was classified as an “essential sector” and ran at 80% capacity; fatalities fell from 19 to 16, yet the rate rose from 19.0 to 19.9 per 100,000. Italian fatal accidents declined from 10 to 8 (rate 2.06 to 1.61 per 100,000), while Austria’s rate fell from 1.69 to 1.48 per 100,000 even though underreporting kept the absolute figure at 1. Once restrictions are loosened, exposure and risk rise swiftly, as shown by the post-2020 rebound. Germany’s fatal-injury rate grew from 12 to 20 per 100,000 over the decade, whereas Italy’s and Austria’s remained stable. These disparities indicate that national safety culture, enforcement stringency, workforce composition, and reporting resilience outlast pandemics.

These data underscore the need for robust safety management strategies, including innovative technologies such as conversational agents (CAs) enhanced by Large Language Models (LLMs) and computer vision (CV). These research areas have expanded significantly across sectors such as healthcare, education, fire safety, and logistics. However, their straightforward application to Occupational Health and Safety (OHS) on construction sites, specifically by professionals with little coding knowledge, remains unexplored.

LLMs are significant instruments capable of generating, interpreting, and utilizing human natural language [2]. Indeed, LLMs have held a niche within the extensive academic landscape for an extended period and have seen remarkable evolution in recent years, emerging as a significant area of scholarly interest [3,4]. Shortly after the emergence of LLMs, Multimodal Large Language Models (MLLMs), a subset of the former, garnered attention [5,6,7,8]. MLLMs are capable of processing multiple types of data, such as text, images, audio, and video. CAs, systems designed to interact naturally with humans through dialogue, have shown promise in domains such as manufacturing [9,10,11,12,13] and healthcare [14,15,16]. However, their potential in construction-site safety remains insufficiently explored [17,18,19]. More specifically, construction sites are dynamic, high-risk environments in which many simultaneous hazards can occur; hence, OHS tries to address these issues [20,21,22,23,24].

This research seeks to examine the applicability of MLLMs in construction sites, building upon prior studies in the field, such as [20,25,26]. It specifically examines the role of professionals with limited or no programming skills and evaluates how effectively the system supports their use. To achieve this objective, it is crucial to understand the reliability of the outcomes in relation to the ground truth. In parallel with insights from related disciplines, we investigate the degree to which model efficacy is influenced by prompt design, the quality of the dataset, and the environment of deployment.

This study contributes a novel risk-management framework that investigates how MLLM agents can assist health-and-safety professionals on construction sites. What sets this work apart is its emphasis on commercially available, user-friendly tools that require little to no programming skill. These are systems that professionals can use immediately, thereby democratizing access to powerful safety assessment tools. Part of this investigation asks whether multimodal agents with image-interpretation capabilities can automatically detect on-site risks and how closely their outputs align with regulatory requirements, focusing on Italy’s Legislative Decree 81/08 as the national transposition of the EU regulations stemming from Directive 89/391/EEC.

Adopting an applied and empirical approach, we analyzed a set of 100 real-world construction-site images. Each image was processed by a commercially available, low-code multimodal conversational agent to (i) flag hazards, (ii) rate risk severity, and (iii) assess compliance with relevant safety provisions. The MLLM assessments were then benchmarked against the ground truth to quantify accuracy and identify failure modes.

This article is structured as follows. First, this study includes a comprehensive literature review on methods and approaches applied for OHS in construction sites and other relevant fields. It discusses relevant prior studies, highlighting the gaps in existing knowledge. Second, the authors clarify the objectives and hypothesis, laying out the specific goals of the research and the assumptions being evaluated. Third, the authors detail the systematic approach adopted within the methodology section. The methodology includes dataset preparation, model interaction, and ground truth comparison. Fourth, the experimental results are reported, followed by a detailed discussion of the implications and limitations of the findings. Finally, the conclusion summarizes the study’s outcome and suggests directions for future research.

2. Literature Review

This section offers a comparative analysis of existing CA applications in adjacent domains and draws parallels and distinctions with the proposed research.

2.1. Computer Vision for Safety Monitoring

Numerous studies have focused on the use of CV for safety monitoring on construction sites. Research is fragmented across specific topics, including but not limited to personal protective equipment detection [21,22], ergonomics [23,24], and scaffolding safety [27,28].

These domain-specific solutions are inherently risk-focused, addressing specific subsets of hazards rather than providing a holistic safety approach. For instance, Fan et al. investigated the potential of Generative Pretrained Transformers (GPTs) with visual–text capabilities to extract ergonomic risks [29]. Their fine-tuned model achieved an accuracy of 81%, while the model before fine-tuning achieved only 28%. Their results are highly specific to ergonomic risks.

Similarly, Jung et al. developed VisualSiteDiary, a Vision Transformer-based image captioning model that generates human-readable captions for daily progress and work activity logs, enhancing both documentation and image retrieval tasks [30]. While these studies demonstrate the potential of customized solutions to improve safety measures, they often produce fragmented knowledge, limited to specific risks or tasks. Chou et al., for example, applied amodal instance segmentation optimized by metaheuristics to detect unsafe behaviors associated with fall risk [31]. Despite their technical sophistication, such approaches demand advanced programming skills, which are frequently unavailable in small- and medium-sized enterprises (SMEs), limiting large-scale deployment.

In the context of personal protective equipment, one common unsafe behavior is the failure to wear hard hats. Fang et al. addressed this by applying a Faster R-CNN model to detect workers exhibiting this risk behavior [32]. However, broader challenges remain in applying CV to occupational health and safety (OHS), particularly in action recognition and tracking accuracy [33]. These challenges are consistent with our findings, which highlight the difficulties of CV systems in managing complex, ambiguous construction site scenarios. The literature underscores the limitations of CV in interpreting behavior-based safety, an issue critical to addressing environmental threats and ergonomic hazards.

Recent reviews further consolidate these insights. Zhang et al. conducted a comprehensive review of vision-based OHS monitoring, identifying key technical limitations, including reliability, accuracy, and applicability in dynamic construction environments [34]. These findings align closely with our study, which also emphasizes the challenges of achieving robust hazard detection under varying site conditions. Notably, Zhang et al. highlight the lack of comprehensive scene understanding as a significant barrier, a limitation echoed in our results. In the same year, Fang et al. reviewed deep learning and CV applications in construction safety but did not consider multimodal CAs [35]. This distinction is critical: unlike prior studies, our work integrates both CV and multimodal CAs capable of processing textual and visual information for real-time hazard detection and regulatory compliance, addressing a key gap in the current literature.

2.2. CAs for Risk Management and Safety in High-Risk Industries

Another relevant area of interest concerning the integration of CAs for OHS is represented by risk management. It is a growing area of interest, particularly in high-risk sectors, including construction sites, as well as mining and oil and gas.

Two interesting studies, published in 2024, addressed the potential of MLLMs in automating hazard detection and emphasized real-time monitoring for predictive intelligence. Tang delved into the former topic, moving from identifying, assessing, and controlling risks to prevent the occurrence of injuries. Initially, in the design phase, Tang envisions that CAs and building information modeling (BIM) can be integrated. The study then analyzes how MLLMs can be deployed in an operational context through CV, sensor networks, and ML to capture real-time conditions [36]. Its application is to create personalized training for workers, which differs from our use case. The latter topic, addressed by Shah et al. [37], aligns better with our application, which uses MLLM-driven technologies to improve construction site safety; whereas their focus is on predictive insights, the current research specifically addresses real-time hazard identification and regulatory compliance via a visual dataset.

2.3. Multimodal CAs in Safety

Kandoi et al. explored multimodal conversational agents (MCAs), which integrate both speech and visual analysis to understand human intent and context. Their study demonstrated how MCAs can enhance user experience and context awareness [38]. While this research emphasizes the integration of speech and image processing, the current study goes further by applying conversational MLLM agents in construction settings to detect hazards and ensure compliance with legal frameworks such as Legislative Decree 81/08. This is a crucial distinction, as the primary application of Kandoi et al.’s work targets virtual assistants, whereas the present research applies CAs specifically to safety-critical construction sites. Similarly, Hussain et al. developed a VR-based conversational system to improve construction safety training, showing positive outcomes in worker knowledge and safety practices [39]. Unlike the VR approach, which is focused on training, the present study employs CAs for real-time hazard detection and regulatory compliance monitoring on actual construction sites, aiming to prevent accidents rather than solely improving post-event knowledge. Another application for training purposes is Popeye, outlined by Colabianchi et al. [40]. Popeye is a multimodal chatbot for training operators in hazardous container inspection tasks. It integrates voice input, natural language understanding, and CV to simulate critical safety procedures. Popeye is the closest analogue to our work. Both projects use CV and natural language processing to support occupational safety in high-risk environments. While Popeye is primarily used for training purposes in a simulated setting, our project aims to deploy vision-enhanced CAs in live construction site environments.

Two further studies that, like this one, concern chatbots should be mentioned. Amiri et al. [41] analyzed chatbots used during the COVID-19 pandemic, providing a taxonomy for structuring chatbot functionalities such as risk assessment and information dissemination. Although their study provides useful insights into chatbot functionalities, the current research goes beyond rule-based, decision-tree chatbots by integrating multimodal capabilities, i.e., vision and text, for monitoring on construction sites. Caccavale et al. described the deployment of ChatGMP, a chatbot used for compliance auditing in a chemical engineering course. Their study, while more aligned with our use case of conducting audits, represents an educational simulation allowing students to interactively explore technical documentation and procedures [42]. Unlike Caccavale et al.’s approach, the current research deals with real-world, unstructured visual data, where the system must reason through dynamic and complex site conditions for real-time safety assessments. Lastly, Hostetter et al. compared ChatGPT-4, released in March 2023, and Google Bard in their ability to address fire engineering queries. They conclude that while ChatGPT outperforms Bard in accuracy, prompt engineering and human oversight are critical for reliability. Both their project and ours assess technical compliance and safety risks in engineered systems using LLMs [43].

From the medical field, May et al. [44] provide a review of CAs, emphasizing the ethical, legal, and technical challenges related to privacy and data protection. This study is particularly relevant as it underscores the necessity of “privacy-by-design” approaches in any system dealing with personal or sensitive data. Visual inspections of construction sites can easily capture personal information (e.g., faces and license plates), requiring rigorous privacy management. The topic addressed by May et al. [44] is therefore also relevant to our study and was taken into consideration when analyzing our dataset.

2.4. Integration with Other Technologies

In the context of the construction industry, it is almost impossible not to mention BIM as a general methodological framework that encompasses many research areas. Like Tang’s study on the integration of artificial intelligence with BIM for training purposes [36], Kulinan et al. discussed BIM integration with CV for real-time workforce safety monitoring. Their main goal was to extend the capabilities of the BIM 3D model by merging it with real-world worker locations captured through closed-circuit television (CCTV) to visualize color-coded maps based on risk assessment [45]. The findings, while still relevant, rest on the assumption that the system would be deployed by highly skilled programmers, a competence that is seldom available in the operational context of construction sites.

Arshad et al. provided a systematic literature review of different technologies and concluded that CV and IoT are the most prominent for OHS, with CV dominant because of its low-intrusion approach to data collection. The authors also concluded that CV has proved beneficial for prediction accuracy, real-time data monitoring, and model development for OHS [46]. This provides a solid foundation for our research direction.

2.5. Contribution to Knowledge Gaps

This work aims to provide quantitative validation and reliability thresholds of an MLLM commercial agent. This study will provide clear operational reliability metrics and measured precision and recall of an available MLLM as a comparison with the prototype developed by Saka et al. [17].

This work also aims to expand hazard coverage and account for diverse real-world conditions by testing the system’s robustness in field environments. Chen et al. limited their study to 32 hazard categories [47]. While we acknowledge the value of a tailored taxonomy, this study identifies the weakest categories, directly addressing the limitations of narrow scope and class imbalance. Furthermore, Nath et al. tested hard hats and vests in controlled settings [48], while our contribution lies in addressing, among others, scenes with varied lighting and multi-story scaffolding.

More importantly, our contribution lies in providing an evaluation of commercially available MLLM agents that require minimal to no programming knowledge. This work evaluated whether today’s plug-and-play assistant solutions can truly deliver operational hazard detection actionable for on-site practitioners.

3. Objective and Hypotheses

This study investigates the feasibility of deploying commercially available multimodal CAs in the field of OHS, focusing on construction sites. The investigation is situated at the intersection of intuitive virtual assistant tools and high-risk, dynamic operational contexts. The core focus is to understand whether these accessible agents, which require limited programming skills, can meaningfully contribute to hazard identification by analyzing image datasets of real-world construction scenarios.

A primary objective is to quantify the extent to which the MLLM agent can detect safety hazards with operationally acceptable reliability. This involves evaluating how consistently the system can recognize different types of risks, such as falls, trip hazards, mechanical issues, or environmental threats, across varied visual inputs. It is essential to assess not only whether the system can detect a hazard, but also how trustworthy that detection is (i.e., its precision, the ratio of true positives to total detections). This evaluation differs from existing benchmarks in that it covers MLLMs that were not fine-tuned.

This leads to a further objective: to define the threshold of reliability above which a system may be considered dependable for operational use. Another key objective is to map the relationship between the system’s detection capability and the intrinsic features of the hazards themselves. This includes investigating whether more visually explicit hazards (e.g., a worker at height without a harness) are systematically easier for the system to identify than more context-dependent ones (e.g., unstable terrain or obstructed evacuation paths). By analyzing this relationship, this study aims to highlight both the strengths and the blind spots of a vision-based approach to safety.

Furthermore, this study seeks to formalize an understanding of the variability in performance across hazard categories. This research aims to identify whether the system’s reliability is consistent across repeated assessments of the same visual input, or if the output is sensitive to test conditions, ambiguity in image context, or overlapping hazard types. These insights are crucial for evaluating the feasibility of integrating such tools into real-world construction safety workflows.

The analysis is guided by a series of “a priori” research questions rather than formal null-hypothesis significance testing. First, we ask whether the CA can detect at least a subset of high-risk hazards, specifically fall-related hazards, physical hazards, and those related to personal protective equipment (PPE). Second, we ask which hazards the professional might overlook and which the CA may fail to detect or interpret correctly, thereby indicating limitations in its current recognition capabilities. Third, we examine whether hazard categories with greater visual distinctiveness are associated with higher detection frequency and reliability, reflecting the vision-centric nature of the system. Lastly, we assess whether the distribution of reliability scores is non-uniform and clusters around specific hazard types, suggesting potential systematic biases or modeling constraints in the system’s interpretive logic based on existing applications. Findings are reported descriptively (point estimates and effect sizes) without accept/reject decisions.

Together, these objectives and hypotheses define the analytic framework of the study and ensure a critical, structured examination of the role that accessible conversational technologies might play in advancing occupational safety in complex construction settings.

4. Research Methodology

This study reports a systematic and empirical evaluation of the performance capabilities of a commercially available multimodal conversational agent, specifically applied within the context of OHS management at construction sites. The primary aim is to assess the model’s proficiency in identifying safety violations and evaluating occupational risks in alignment with Italian occupational safety legislation (Legislative Decree 81/08).

In this study, low-code denotes a workflow that (i) uses an off-the-shelf, hosted multimodal model via API with no custom training or fine-tuning, (ii) expresses task logic through prompting rather than model development code, and (iii) adds only a thin “glue” layer (a simple WebUI plus a lightweight JSON parser) to batch images and export structured outputs. This design intentionally avoids the usual ML engineering stack (training scripts, hyperparameter tuning, GPU provisioning, and model serving), thereby reducing implementation effort and broadening accessibility for OHS professionals without machine learning expertise.

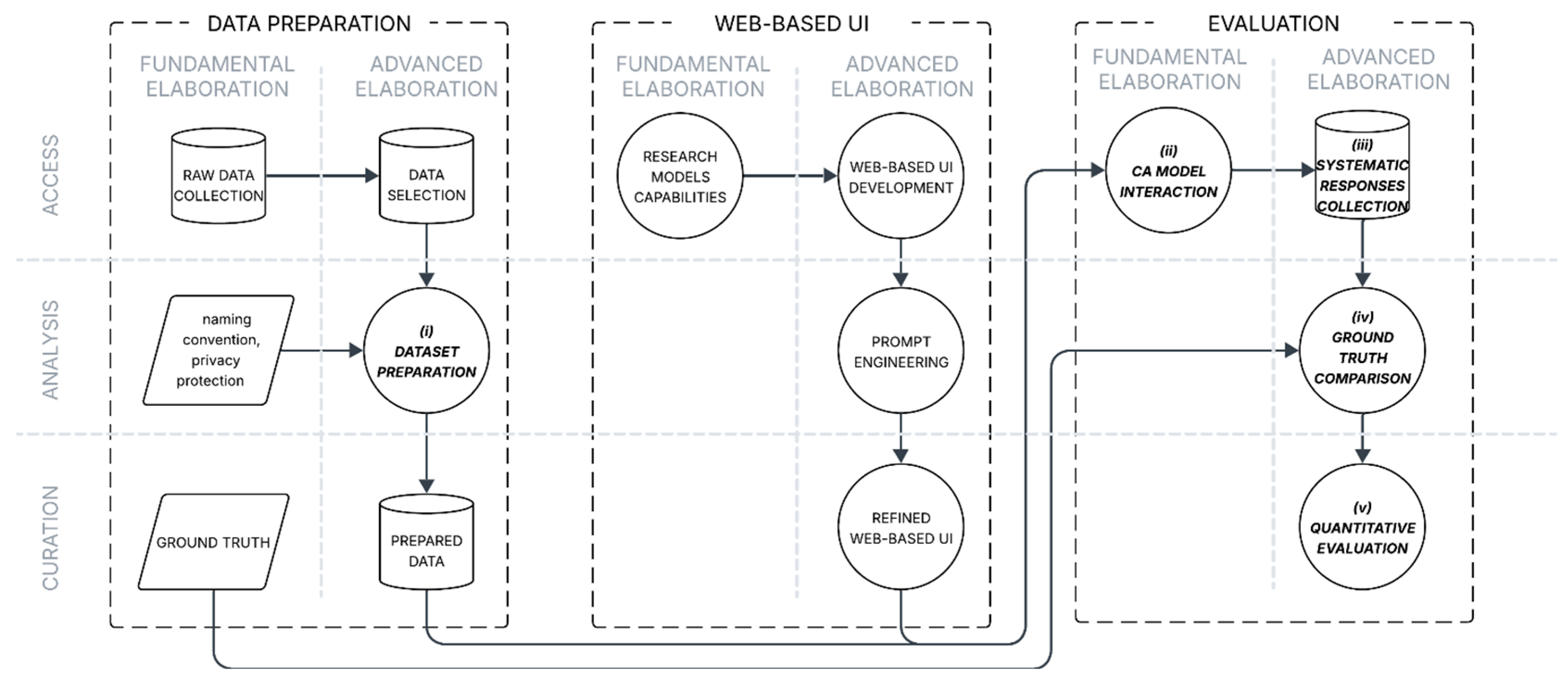

The methodology involves the development of a representative image dataset and a rigorous analytical protocol structured in three tiers (see Figure 2). Each activity in a tier is clustered according to the level of data elaboration and to the use of the data. The three tiers represent the workflow’s main activities, which are (A) data preparation, (B) web-based UI, and (C) evaluation. In each tier, there are two levels of elaboration: (a) fundamental and (b) advanced. The former consists of base activities upon which the latter builds. Fundamental elaborations encompass activities such as data collection, assignment of a naming convention, construction of the ground truth, research into model capabilities (including the choice of the most suitable model), and CA model interaction. The advanced level includes data selection, web-based UI development, systematic collection of responses, and more. Each tier is also divided into three stacks, which group how the data are treated: (I) access, (II) analysis, and (III) curation. Within this structure, five major activities are explained: (i) dataset preparation, (ii) CA model interaction, (iii) systematic collection of model responses, (iv) ground truth comparison, and (v) quantitative metric-based evaluation of performance.

During raw data collection, a large number of pictures were collected from real sites and stored. From this initial collection, a carefully curated dataset of 100 geotag-stripped JPEG images (mean resolution = 879 × 667 px, standard deviation = 316 × 237 px) capturing heterogeneous construction-site scenarios was assembled. The collection was drawn from private sources that cannot be fully shared; to provide readers with useful examples, some pictures have been edited to remove any indications of places, companies, and people involved (see the Experimental Results section). These images were deliberately selected to represent diverse operational contexts typically observed in construction environments, with a particular focus on infrastructure sites. The scenarios include diverse visual circumstances and occupational dangers designed to rigorously test the interpretation abilities of the system. The dataset encompasses instances of the presence or absence of mandated personal protective equipment (PPE) among workers, arrangements of scaffolding and other temporary structures that signify compliance or non-compliance with regulatory standards, and machinery that is either properly safeguarded or operated unsafely. Additionally, scenarios incorporating inadequate safety signage, fall risks including open edges and unprotected platforms, exposure to electrical hazards, and adverse meteorological conditions (e.g., rain, fog, and insufficient lighting) were systematically represented.

All images in the dataset were evaluated to establish the ground truth, which was defined by a panel of three Italian professionals. Each panel member had to be a certified Safety Manager according to Italian Legislative Decree 81/2008 with at least five years of construction-site experience. Disagreements were resolved through a consensus process in which the three safety managers jointly reviewed and discussed the divergent cases until a common classification was reached. At this stage, no formal inter-rater reliability index (e.g., Cohen’s Kappa) was calculated, as priority was given to achieving consensus. We acknowledge that including such measures would further strengthen transparency and reproducibility, and we highlight this as a limitation. Future work will incorporate inter-rater reliability reporting, particularly as the dataset and the pool of experts are expanded.
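As an illustration of the inter-rater reporting deferred to future work, the following minimal sketch computes Cohen’s Kappa for every pair of raters. It was not part of the study; the function names, the rater labels, and the example ratings are all hypothetical, and each rater is assumed to assign one risk level (1 to 4) per image before the consensus discussion.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(a, b):
    """Cohen's kappa for two equally long sequences of categorical labels."""
    if len(a) != len(b) or not a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    labels = count_a.keys() | count_b.keys()
    expected = sum(count_a[k] * count_b[k] for k in labels) / (n * n)
    if expected == 1.0:  # both raters used a single identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


def pairwise_kappa(ratings):
    """Kappa for every pair of raters; `ratings` maps rater name -> label list."""
    return {
        (r1, r2): cohen_kappa(ratings[r1], ratings[r2])
        for r1, r2 in combinations(sorted(ratings), 2)
    }


# Hypothetical pre-consensus risk levels for four images (not study data).
example = {"A": [1, 2, 3, 4], "B": [1, 2, 3, 4], "C": [1, 2, 3, 3]}
print(pairwise_kappa(example))
```

With three raters, a single multi-rater index such as Fleiss’ Kappa would be an alternative to the pairwise values sketched here.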

The observed safety infractions in each photograph are classified according to a predetermined four-tier scale: Low (1), Medium (2), High (3), and Critical (4) risk categories. Ground truth comprises a textual account of observed conditions, a systematic catalog of identified infractions, and direct citations to relevant regulatory laws from Legislative Decree 81/2008.

Subsequently, all inferences were run with gpt-4o-2024-11-20, the 20 November 2024 snapshot of OpenAI’s “omni” multimodal GPT-4o; pinning the snapshot supports reproducibility. Decoding was set to be deterministic (temperature = 0.0, top-p = 1.0) and allowed up to 6384 completion tokens within the 128k-token context window. Each request bundled the textual prompt with a Base64-encoded JPEG of the image.
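A minimal sketch of how such a request could be assembled is given below. It only builds the keyword arguments that the `chat.completions.create` call of the OpenAI Python SDK accepts and sends nothing; the function name `build_request`, the placement of the prompt in a system message, and the image-only user message are assumptions made for illustration rather than the authors’ implementation.

```python
import base64

MODEL = "gpt-4o-2024-11-20"  # model snapshot named in the text


def build_request(system_prompt: str, jpeg_bytes: bytes, max_tokens: int) -> dict:
    """Return keyword arguments for one image-analysis request (hypothetical helper)."""
    # The image travels inline as a Base64 data URL.
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    return {
        "model": MODEL,
        "temperature": 0.0,  # decoding settings reported in the text
        "top_p": 1.0,
        "max_tokens": max_tokens,
        "messages": [
            # Assumption: the task prompt is sent as the system message.
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    }
                ],
            },
        ],
    }


# Placeholder bytes stand in for a real JPEG file read from disk.
request = build_request("<system prompt>", b"\xff\xd8\xff", max_tokens=6384)
print(request["model"], request["temperature"], request["top_p"])
```

With an API key configured, the dictionary could be passed as `client.chat.completions.create(**request)`; keeping the payload construction separate from the network call makes the fixed decoding settings easy to inspect for every image.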

Interactions with the model were rigorously standardized by means of a single, task-oriented system prompt (Appendix A).

The prompt (see Listing 1) follows a zero-shot, instruction-only paradigm: no exemplar Q&A pairs are supplied; instead, the model receives a precise task definition (hazard detection under Italian Decree 81/08), a five-step analytical workflow (scene description, hazard categorization, likelihood/severity scoring, preventive measures, and legal compliance check), and a fixed output schema. Reusing this prompt verbatim for every image removes prompt-induced variance, encourages structured, step-by-step reasoning, and supports reproducibility across the entire test set.

Listing 1. Prompt used in the WebUI application, also available in the GitHub repository (Appendix A).

IMAGE_ANALYSIS_PROMPT = """
##Task Definition##
Analyze a dataset of heterogeneous workplace images from construction sites
in accordance with Italian Decree 81/08 on workplace health and safety.
Your objective is to identify hazards, assess risks, and suggest corrective
actions in a structured manner.

##Image Analysis Guidelines##
The images may depict:
Active workplace activities (workers operating machinery, using equipment, performing tasks).
Static workplace elements (equipment, tools, protective barriers).
Environmental and contextual factors (signage, lighting, workspace organization).
Empty or unclear scenes (where no direct activity is visible but risks may still be present).

For each image, follow these structured steps:

*1. Describe the Image*
Identify any visible activities (e.g., a worker using a jackhammer, a crane lifting materials, etc…).
Mention the presence of equipment and machinery.
If no clear activity is visible, describe the context (e.g., an empty scaffold, an unorganized work area, etc…).

*2. Identify and Categorize Hazards*
Classify hazards.
If no immediate hazard is visible, mention it.
Use structured reasoning to explain why each hazard is a safety concern.

*3. Assess Risk Probability and Impact*
Assign a Likelihood Score (1–4) based on the probability of the hazard occurring.
Assign a Severity Score (1–4) based on the potential damage an incident could cause.
Justify the scores using environmental context, worker behavior, and regulatory factors.

*4. Recommend Preventive Measures*
Provide practical safety solutions for each identified hazard.
Explain why these measures would be effective in mitigating risks.
If no visible hazard is found, suggest best practices for overall safety improvements.

*5. Check for Legal Compliance*
Compare the situation with Italian Decree 81/08 safety standards.
If applicable, cite the specific article or regulation being violated.
If no violation is evident, indicate compliance with workplace safety best practices.
"""

The system’s reply followed the five steps requested in the prompt. Firstly, the CA provided a detailed descriptive analysis of each image, noting the observable occupational activities, the presence and condition of machinery, and other pertinent contextual factors, even in scenarios devoid of direct human presence. Secondly, the model identified and categorized potential hazards, explicitly articulating the logical reasons supporting each hazard classification. Thirdly, each identified hazard was quantitatively evaluated by assigning two distinct numerical scores: a likelihood rating ranging from 1 to 4, denoting the estimated probability of hazard occurrence, and a severity rating also spanning from 1 to 4, indicative of the potential gravity of associated consequences. Both scores were restricted to integer values; fractional scores were disallowed by schema validation. Fourthly, the system suggested relevant preventive or corrective actions for mitigating each identified hazard, providing a justification of their anticipated effectiveness. Finally, the model evaluated legal compliance by systematically referencing applicable provisions from Legislative Decree 81/08, explicitly citing the relevant regulatory articles or sections to substantiate its evaluations.
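The integer-only constraint on the two scores can be illustrated with a small validation routine. This is a sketch under assumptions: the field names `likelihood` and `severity` and the function `validate_hazard` are hypothetical and do not reproduce the schema used in the study.

```python
def validate_hazard(record: dict) -> list[str]:
    """Return a list of validation errors for one hazard record (empty if valid)."""
    errors = []
    for field in ("likelihood", "severity"):
        value = record.get(field)
        # bool is a subclass of int in Python, so it is rejected explicitly;
        # floats such as 2.5 and missing values (None) are rejected as well.
        if isinstance(value, bool) or not isinstance(value, int):
            errors.append(f"{field} must be an integer")
        elif not 1 <= value <= 4:
            errors.append(f"{field} must be between 1 and 4")
    return errors


print(validate_hazard({"likelihood": 2, "severity": 4}))    # valid record
print(validate_hazard({"likelihood": 2.5, "severity": 5}))  # two violations
```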

To enable subsequent comparative analyses, all generated outputs were systematically archived in JavaScript Object Notation (JSON), a widely adopted, human-readable open-standard format. This format helped to analyze the results and draw conclusions.

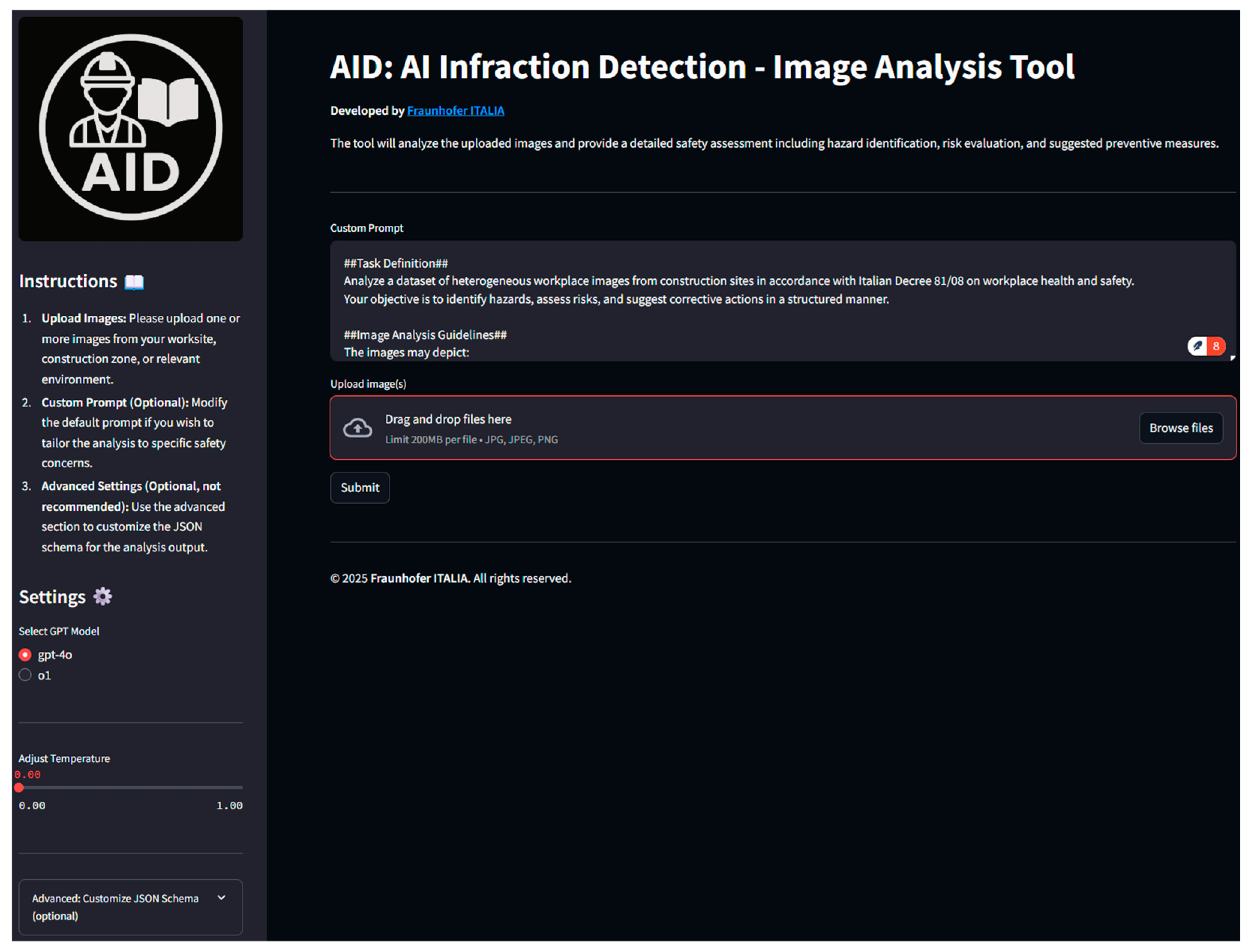

To streamline interaction with the model and ensure reproducibility across the entire dataset, a dedicated web-based user interface (WebUI) was developed (see Figure 3). This solution was one of the few coded parts; it was implemented to facilitate uploading the pictures (overcoming the limit of 10 photos per prompt) and downloading the resulting JSON files for the subsequent analyses. The interface was developed in Streamlit (v 1.43.2) and orchestrated calls through the official OpenAI Python SDK (v 1.66.3). This WebUI integrates with the OpenAI API, enabling systematic uploading and automated processing of the entire image dataset. A custom-designed parser was embedded within the WebUI to automate the extraction, structuring, and conversion of model-generated responses into the standardized JSON format, improving workflow consistency, methodological rigor, and reproducibility. In line with the low-code objective, this WebUI and the embedded parser were the only custom components implemented; no data-augmentation pipelines, fine-tuning procedures, training loops, or deployment/serving code were required.
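The kind of lightweight parsing described here might look like the sketch below, which extracts the likelihood and severity scores from a free-text reply and serializes them as JSON. The reply wording, the regular expressions, and the output field names are assumptions for illustration; the parser actually used is the one in the authors’ repository.

```python
import json
import re

# Assumed reply wording: "Likelihood Score: 3" / "Severity Score: 4".
LIKELIHOOD_RE = re.compile(r"likelihood score:\s*([1-4])\b", re.IGNORECASE)
SEVERITY_RE = re.compile(r"severity score:\s*([1-4])\b", re.IGNORECASE)


def parse_reply(image_name: str, reply: str) -> dict:
    """Convert one free-text model reply into a JSON-serializable record."""
    likelihoods = [int(v) for v in LIKELIHOOD_RE.findall(reply)]
    severities = [int(v) for v in SEVERITY_RE.findall(reply)]
    # Scores are paired in order of appearance, one pair per reported hazard.
    hazards = [
        {"likelihood": lik, "severity": sev}
        for lik, sev in zip(likelihoods, severities)
    ]
    return {"image": image_name, "hazards": hazards, "raw_reply": reply}


# Hypothetical reply text, not an actual model output from the study.
reply_text = (
    "Hazard: open edge\nLikelihood Score: 3\nSeverity Score: 4\n"
    "Hazard: missing helmet\nLikelihood Score: 2\nSeverity Score: 3"
)
print(json.dumps(parse_reply("site_001.jpg", reply_text), indent=2))
```

Keeping the raw reply alongside the extracted fields allows any parsing error to be traced back to the original text.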

A conventional computer vision approach for hazard detection typically requires dataset-specific labeling schemas; model selection (detection/segmentation), training scripts, and hyperparameter tuning; GPU/cluster provisioning and run management; export/conversion for inference; and deployment/monitoring infrastructure. Our pipeline eliminates those stages by (a) calling a hosted multimodal model via API, (b) encoding task requirements in a reusable prompt, and (c) using only a thin Streamlit UI and JSON parser for batching and export. This substantially lowers the engineering burden and helps democratize access for non-ML practitioners in OHS.

The subsequent phase entailed rigorous comparative analyses between automatically generated outputs and ground truth, the latter serving as the reference standard. The central performance metric was the AI Infraction Detection (AID) score (see Equation (1)), also known as the Critical Success Index (CSI) and defined in [49], where TP (true positive) denotes a violation correctly flagged by the model, FP (false positive) denotes an alarm raised where no violation existed, and FN (false negative) denotes a violation the model failed to detect. Because AID omits the large number of true-negative frames, it is robust to the class imbalance typical of occupational safety images while penalizing both missed hazards and false alarms. For a diagnostic breakdown, we also report precision, recall, and their harmonic mean, the F1 score (see Equations (2)–(4)).
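Equations (1)–(4) are not reproduced in this text. Assuming the standard definitions of the Critical Success Index, precision, recall, and F1, which match the TP, FP, and FN descriptions above, they would read as follows; the assignment of equation numbers is inferred from the order in which the metrics are cited.

```latex
\begin{align}
\mathrm{AID} &= \frac{TP}{TP + FP + FN} \tag{1}\\
\mathrm{Precision} &= \frac{TP}{TP + FP} \tag{2}\\
\mathrm{Recall} &= \frac{TP}{TP + FN} \tag{3}\\
F_1 &= \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{4}
\end{align}
```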

Together, these three metrics indicate whether limitations arise chiefly from false alarms (low precision), missed hazards (low recall), or a combination of both (low F1), thereby complementing the single-number AID and yielding a complete, actionable picture of model behavior. For operational use, high AID scores are sought, whereas lower scores signal serious deficiencies that preclude immediate deployment.

Finally, to ensure methodological transparency and replicability and to facilitate future research, the prompts, the WebUI application code, and the associated parser tools used in this study will be made openly available in a GitHub repository (see Appendix A).

5. Experimental Results

Based on the data contained in the JSON files, it was possible to visualize and analyze the results.

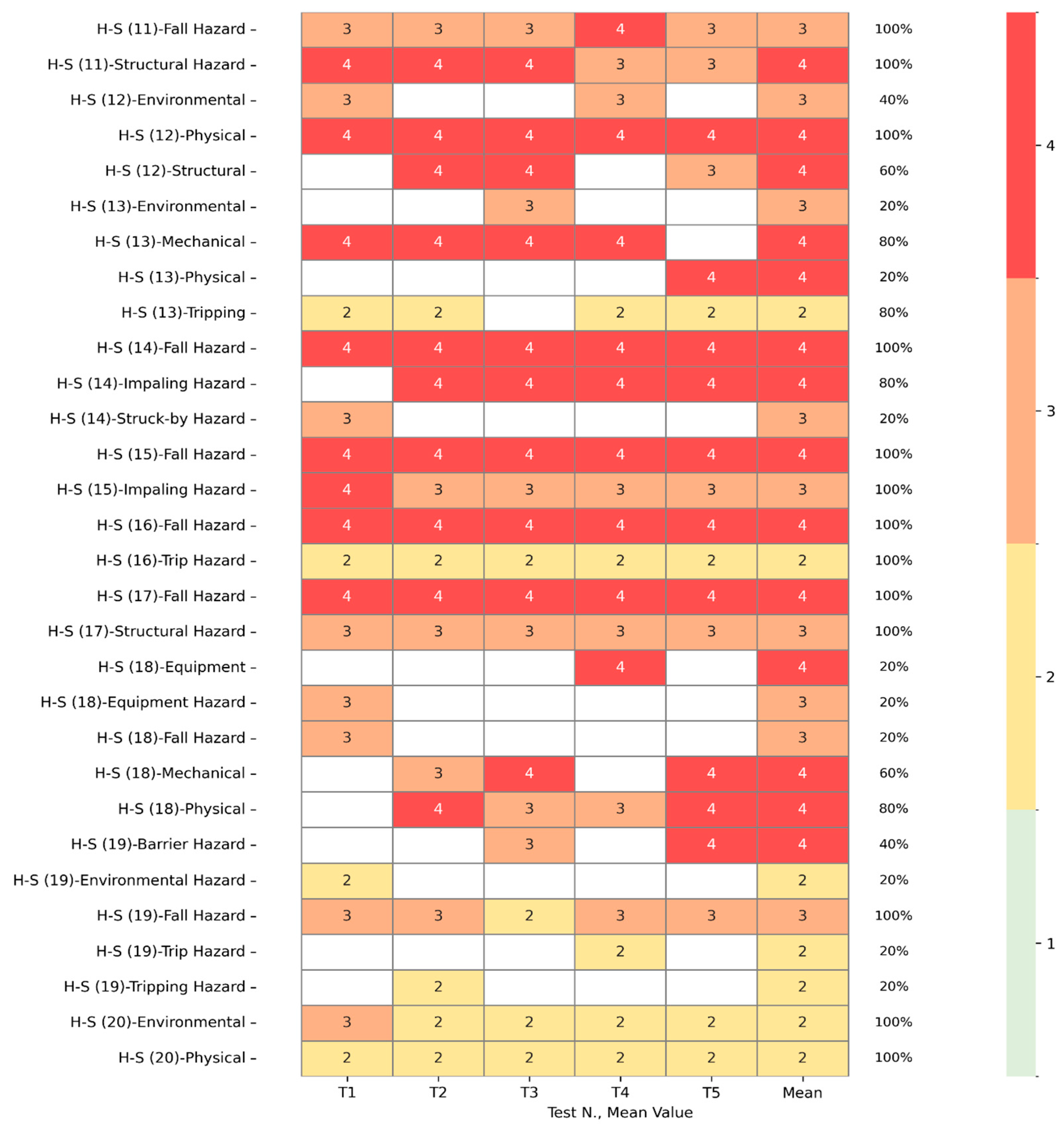

The heatmap in Figure 4 shows the likelihood scores assigned to the hazards detected in batch group n.02 across the five test runs (T1 to T5). Each row corresponds to a specific hazard scenario, labeled “H-S (picture number)” followed by the hazard type. To improve readability, the results were grouped into ten batches of ten dataset images each. The first five columns from the left represent T1 to T5, and the last column reports the mean likelihood. In both heatmaps (Figures 4 and 5), empty cells indicate that the system failed to detect the specified hazard during that run. The mean was computed without the undetected runs to highlight how consistently the system evaluates the scene when it does detect the hazard. The percentage in each row shows in how many of the five test runs the CA identified the same hazard, and hence how reliable that hazard can be considered based solely on the CA evaluation. Similarly, Figure 5 shows the damage (severity) score that the system assigned to each hazard; both figures share the same layout. The results of the five test runs (T1 to T5), in JSON format, are available in Appendix A.
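The two per-row summaries of the heatmaps, the mean over the runs in which a hazard was detected and the percentage of runs in which it was detected, can be sketched as follows. The function name and the use of `None` for an empty cell are assumptions made for illustration.

```python
from statistics import mean
from typing import Optional, Sequence, Tuple


def summarize_runs(scores: Sequence[Optional[int]]) -> Tuple[Optional[float], float]:
    """Summarize one heatmap row.

    `scores` holds one entry per test run; None marks a run in which the
    hazard was not detected (an empty cell). Returns the mean of the
    detected scores (None if never detected) and the detection rate in percent.
    """
    if not scores:
        raise ValueError("at least one run is required")
    detected = [s for s in scores if s is not None]
    rate = 100.0 * len(detected) / len(scores)
    avg = float(mean(detected)) if detected else None
    return avg, rate


# Illustrative row: detected in three of five runs with scores 3, 3, and 4.
row_mean, row_reliability = summarize_runs([3, None, 3, 4, None])
```

Excluding undetected runs from the mean keeps the two quantities separate: the mean reflects scoring consistency, while the percentage reflects detection consistency.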

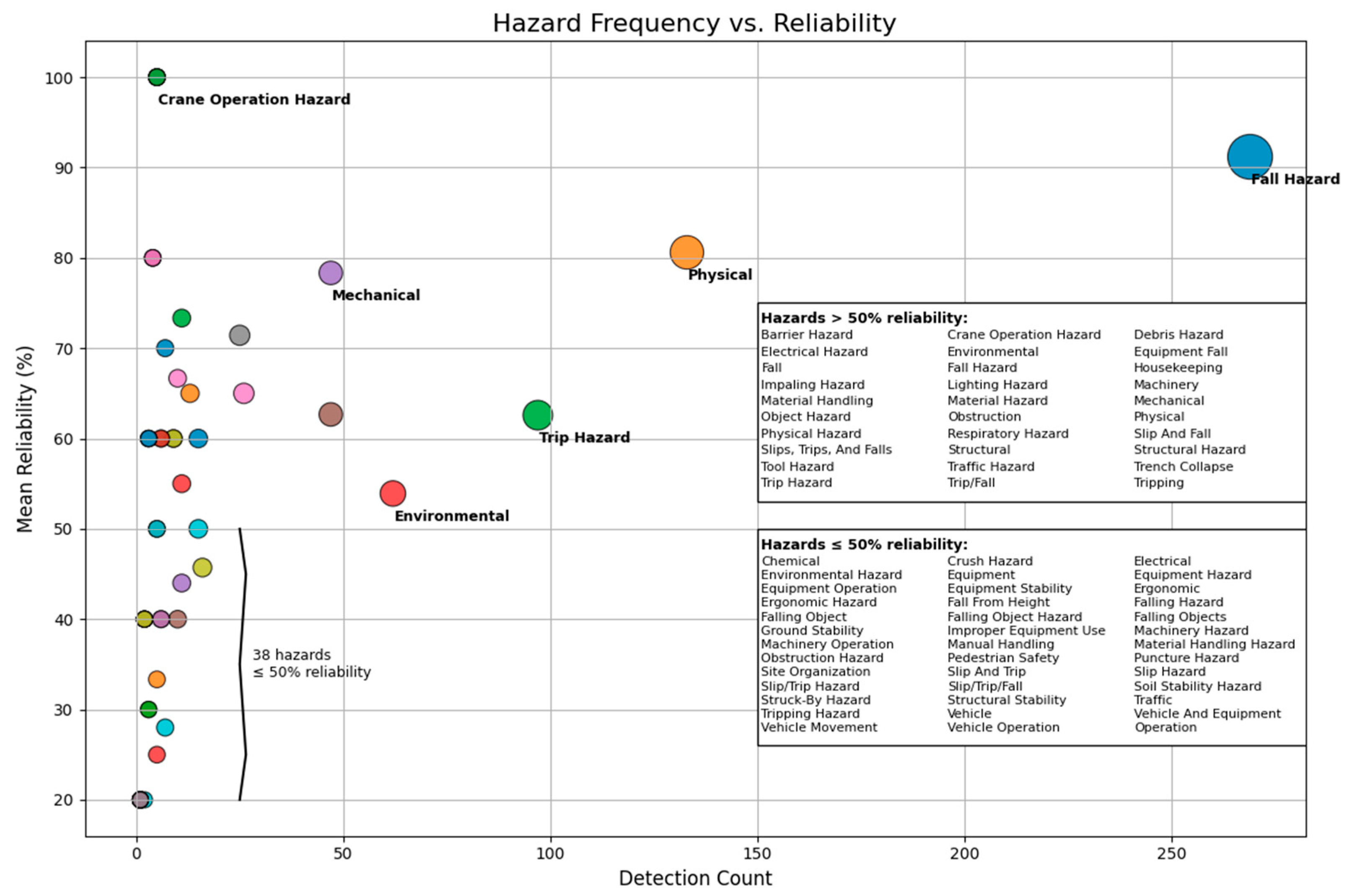

The chart in Figure 6 illustrates the relationship between how often a specific hazard was detected by the system and how reliable that detection was. Each bubble represents a specific hazard, positioned according to its detection count (horizontal axis) and its mean reliability (vertical axis) over the five test runs. Some hazards stand out from this chart:

High-frequency, high-reliability hazards, like fall hazards and physical hazards.

Low-frequency, high-reliability hazards, such as crane operation.

Moderate-frequency, moderate-reliability hazards, like trip hazards.

Additionally, the chart helped to identify two main categories: the first above 50% of reliability, and the second below this threshold.

The study revealed that the selected multimodal conversational agent was able to detect and identify hazards. Although no acceptance threshold was established, the figures obtained during the evaluation show that the system’s results must be validated by a professional. Further refinement and validation are necessary before standalone use can be envisioned.

The empirical evaluation of the commercially available, vision-enabled CA reveals that fewer than half of all scored outcomes (true positives, false positives, and false negatives combined) are correct detections: the system misses a substantial share of the ground-truth hazards while generating nearly as many false alarms as correct detections. These results highlight several qualitative shortcomings of the system. Over five separate test runs (T1–T5), the system processed 100 annotated images per run.

Table 1 presents the performance metrics across the five different runs. TP ranged from 110 to 113, FP remained consistently high between 85 and 87, and FN ranged from 41 to 45.

Measured against the ground truth, the AID score ranged between 46.1% and 47.2% across the five runs, with a mean of only 46.6% (see Table 1).

Overall performance is sub-optimal: mean precision is 0.56, recall is 0.72, and F1-score is 0.63 (see Table 1 for per-run values). Thus, only 56% of the alarms correspond to real hazards (44% are false positives), and 28% of true hazards remain undetected. These results indicate that the agent both misses slightly more than a quarter of the hazards and generates almost as many false alerts as correct ones.
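How the four metrics follow from the confusion counts can be checked with a short sketch. It assumes the standard CSI, precision, recall, and F1 definitions; the counts in the example are illustrative values chosen inside the ranges reported above and are not a specific run from Table 1.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Compute AID (Critical Success Index), precision, recall, and F1.

    Each ratio is reported as 0.0 when its denominator is zero.
    """
    if min(tp, fp, fn) < 0:
        raise ValueError("counts must be non-negative")

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "aid": ratio(tp, tp + fp + fn),  # true negatives are deliberately excluded
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Illustrative counts only (within the reported TP, FP, and FN ranges).
example = detection_metrics(tp=111, fp=86, fn=43)
```

With these illustrative counts, the four values come out at roughly 0.46, 0.56, 0.72, and 0.63, close to the reported means, which shows that the AID score is lower than either precision or recall because it penalizes false alarms and misses in a single denominator.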

Beyond these headline numbers, our in-depth analysis reveals four interlinked failure modes that jointly drive down the AID score: (1) an oversimplified hazard taxonomy, (2) shallow causal reasoning, (3) context-agnostic object detection, and (4) acute sensitivity to image quality.

Table 2 maps each mode to its predominant metric impact (FP, FN, or both). A prominent pattern in the error data is the model’s tendency to over-segment semantically similar hazards into numerous subtly different categories, which complicates clustering (e.g., slip/trip, trip/fall, slip/trip/falls, and trip hazards; see Figure 6). Conversely, the model also tends to under-specify distinct hazards by grouping them under overly broad labels, obscuring important safety-relevant distinctions. For example, in Figure 7, specifically in Test 2, the ground truth is a collapse hazard, namely, the instability of an excavation slope. The model, however, tags this same scenario under the generic heading of “Physical” risk. This coarse-grained labeling leads to two interrelated problems: (a) a slope-instability image labeled in this way never counts as a true positive for “Collapse”, and (b) the model’s mislabeling is treated as a false negative against the ground truth. Across the dataset, such systematic category mismatches contribute heavily to both FP and FN totals, reducing the overall proportion of correct detections.

Even when the agent correctly identifies a hazard’s general class, its reasoning often misses the mark. In Test 1 of H-S (19) in Figure 5 (see Figure 8), the actual hazard is the absence of a guardrail or safety net in the background of the picture, close to the existing building, which creates a clear fall risk. The model flags a “Fall Hazard,” but its accompanying description attributes the danger to the operator slipping on an uneven surface. Here, the system demonstrates object-level competence, as it recognizes “fall” conditions, but it fails to trace the correct causal chain.

As a result, the model’s justification does not align with the hazard’s true origin, making it harder for safety professionals to trust or act on the model’s advice and contributing further to spurious alerts.

Our observations indicate that the considered LLM is adept at spotting equipment and structural elements often associated with elevated-work risks, such as scaffolding planks, folding ladders, and similar tools. Yet, this strength becomes a liability when the model applies a one-size-fits-all checklist rather than evaluating how those objects are used in situ. In H-S (29) (see

Figure 9), a worker stands securely close to a correctly deployed folding ladder, positioned well within the ladder’s safe-use parameters. Despite this, the agent warns of a fall risk on the grounds that there is no collective fall protection barrier detected around the ladder. A human inspector would recognize that, under proper ladder usage guidelines, guardrails are not required in that context. The model’s failure to appreciate operational context inflates its false positive count, again reducing its net reliability.

Finally, our dataset includes photographs with varying resolution, lighting, and framing—mirroring real-world constraints on field imagery. We find that when the visual quality dips below a critical threshold, the model frequently issues unwarranted hazard alerts. In

Figure 10, the scaffold is correctly equipped with guardrails, yet the language model flags a “Fall-From-Height” risk because it cannot visually confirm the presence of protective devices. Such cases drive up false positives and simultaneously contribute to false negatives when the system fails to register the compliance that a human reviewer can discern. This phenomenon highlights a practical limitation: unless input images meet minimum clarity standards, any vision-based model, even one with a high theoretical capacity, will struggle to produce dependable safety assessments.

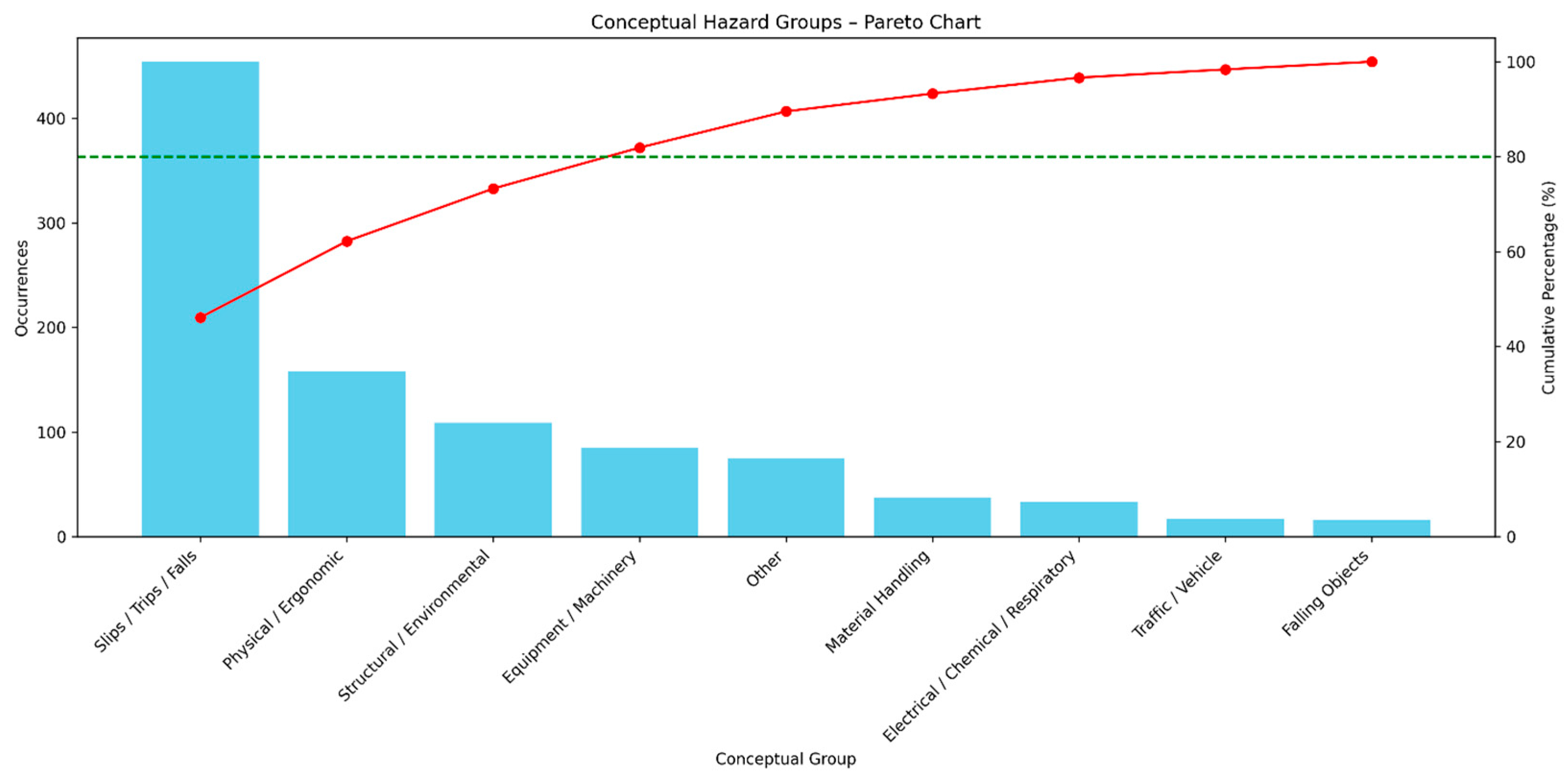

Figure 11 presents a Pareto analysis of all hazard occurrences derived from the annotated dataset. The depicted occurrences are meant to show how excessive granularity affects the result; it is noted that TP, TN, and FP are all included in the charts. This serves as proof of concept to demonstrate the importance of proper taxonomy. Future work will involve a comparison between detection frequency and ground truth distribution.

The blue bars represent the absolute frequency of each hazard category, sorted in descending order from the most to the least frequent. The red line denotes the cumulative percentage of all hazard occurrences, with the dashed green line indicating the 80% threshold conventionally used to identify the “vital few” categories that account for most events. The chart reveals a pronounced skew: a small subset of hazard categories, most prominently fall hazards, physical hazards, trip hazards, environmental hazards, and mechanical hazards, collectively contribute the largest proportion of detected hazards. The cumulative curve surpasses the 80% threshold within the first six to eight categories, suggesting that targeted mitigation efforts focused on these dominant hazards would address many recorded occurrences. However, the same figure also illustrates the model’s propensity for over-segmenting semantically similar hazards into multiple sub-categories (e.g., slip/trip, trip/fall, slip/trip/fall, and trip hazards), which inflates the apparent diversity of hazards while complicating frequency analysis. Conversely, under-specification, where distinct hazards are grouped under overly broad headings such as “Physical”, masks relevant distinctions and can shift hazards away from their appropriate high-frequency categories. Both effects distort the distribution in

Figure 11, inflating counts in certain labels while diluting others, thereby limiting the interpretive accuracy of the Pareto distribution as a guide for prioritization.
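The Pareto construction described above (descending frequencies, cumulative share, and an 80% cut-off) can be sketched as follows. The labels in the example are placeholders rather than the study's data, and the rule used for the cut-off is an assumption: a category belongs to the “vital few” if the cumulative share before it is still below the threshold.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def pareto_table(labels: Iterable[str], threshold: float = 0.80) -> Tuple[List[tuple], List[str]]:
    """Return Pareto rows and the 'vital few' categories.

    Each row is (label, count, cumulative share), sorted by descending count.
    """
    counts = Counter(labels).most_common()  # sorted from most to least frequent
    total = sum(n for _, n in counts)
    if total == 0:
        return [], []
    rows, vital_few, running = [], [], 0
    for label, n in counts:
        # A category is kept while the threshold has not yet been reached.
        if running / total < threshold:
            vital_few.append(label)
        running += n
        rows.append((label, n, running / total))
    return rows, vital_few


# Placeholder labels for illustration only.
sample = ["fall"] * 5 + ["physical"] * 3 + ["trip"] + ["noise"]
rows, vital_few = pareto_table(sample)
```

Because every distinct label string becomes its own bar, near-synonymous labels split one hazard across several bars, which is the distortion discussed above.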

To address the distortions introduced by over-segmentation and under-specification observed in the category-level distribution of Figure 6, the hazard data were subsequently consolidated into nine broader conceptual groups, as illustrated in Figure 12, which presents the updated Pareto analysis of hazards. The “vital few” are now represented by four hazard categories: slips, trips, and falls; physical/ergonomic; structural/environmental; and equipment/machinery. Together, these four categories account for 806 occurrences, or 80% of all hazards. This updated Pareto analysis confirms that the largest proportion of hazards is still related to slips, trips, and falls.
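The consolidation step can be sketched as a lookup from raw model labels to broader groups, followed by a recount. The mapping below is hypothetical: the group names are taken from the text, but which raw label belongs to which group is an assumption made for illustration, and the study's actual mapping of all nine groups is not reproduced here.

```python
from collections import Counter
from typing import Iterable, Mapping

# Hypothetical assignment of raw labels to consolidated groups.
GROUP_OF = {
    "slip/trip": "slips, trips, falls",
    "trip/fall": "slips, trips, falls",
    "slip/trip/fall": "slips, trips, falls",
    "trip hazard": "slips, trips, falls",
    "fall hazard": "slips, trips, falls",
    "crane operation": "equipment/machinery",
    "mechanical hazard": "equipment/machinery",
}


def normalize(label: str) -> str:
    """Lower-case a label and collapse repeated whitespace."""
    return " ".join(label.lower().split())


def consolidate(labels: Iterable[str],
                mapping: Mapping[str, str] = GROUP_OF,
                fallback: str = "other") -> Counter:
    """Count occurrences per consolidated group; unmapped labels go to `fallback`."""
    return Counter(mapping.get(normalize(label), fallback) for label in labels)
```

Routing unmapped labels to an explicit fallback group, instead of dropping them, keeps the total count unchanged, so the consolidated Pareto chart remains comparable with the original one.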

The AID performance quantitatively validates our qualitative observations: while the agent reliably detects simple, visually conspicuous cues, such as unprotected edges or obvious PPE violations, it flounders on nuanced judgments requiring taxonomic precision, cause-and-effect reasoning, or contextual discernment. Each of these shortcomings directly maps to inflated FP and FN counts.

6. Discussion and Implications

The present results show a clear dichotomy in the current generation of GPT-4o-based agents. When hazards manifest as salient, visually unambiguous cues (for example, a worker standing un-tethered on a scaffold edge or a cracked sling on a lifting device), the model achieves high precision, confirming its value for first-pass triage. It serves as an augmentation technology that accelerates the detection of clear hazards without replacing expert judgment. Conversely, performance deteriorates sharply for context-dependent or latent hazards such as poor ergonomics, cumulative noise exposure, and inadequate ventilation, whose recognition requires causal inference, temporal continuity, or tacit domain knowledge not encoded in the zero-shot prompt. This pattern is consistent with the overall metrics (AID = 0.47, precision = 0.56, recall = 0.72, and F1 = 0.63): the agent flags many genuine threats but also misses roughly one in four and produces almost as many false alarms as true ones.

Two methodological factors explain this pattern. The first is reasoning depth: LLMs excel at pattern completion but still struggle with “connect-the-dots” reasoning, which reinforces their role as augmentation rather than automation, with humans supplying depth and nuance. The second is the absence of explicit context: a single still image rarely conveys workload duration, ambient conditions, or the sequence of preceding actions; without such information, the model cannot reliably estimate severity or likelihood for ergonomic and environmental risks. The absence of inter-rater reliability metrics is noted as a limitation and will be addressed in future work.

From an academic standpoint, this study deepens the empirical understanding of how commercially available MLLMs perform in a highly demanding, real-world application domain. It provides quantifiable reliability metrics (AID, precision, recall, and F1) against a legally grounded gold standard, exposing where current models excel (salient, visually distinct hazards) and where they fail (context-dependent, taxonomically nuanced, or low-quality imagery). This evidence base can inform future AI-safety research by highlighting concrete limitations (e.g., taxonomy granularity, causal reasoning, and contextual awareness). Additionally, the methodological design, with deterministic prompting, rigorous ground truthing, and open-source tooling, contributes a reproducible evaluation framework that other scholars can adapt to benchmark emerging MLLM architectures or multimodal safety agents in adjacent high-risk domains.

The findings offer a sober roadmap for industry stakeholders considering MLLMs as part of site safety protocols. Rather than endorsing full automation, the results support a “human-in-the-loop” model in which CAs act as force multipliers for safety officers, efficient at triaging obvious hazards but dependent on expert oversight for subtle, situationally complex risks. This study’s detailed failure analysis can directly guide procurement and deployment decisions: for example, pairing these agents with refined hazard taxonomies, enforcing minimum image quality thresholds, or integrating supplementary temporal/sensor data before trusting compliance outputs. By identifying the “vital few” hazard categories that dominate incident likelihood, this work also points to high-impact areas for targeted AI augmentation, potentially reducing inspection burdens while improving safety outcomes. In short, it grounds the promise of MLLM-based safety tools in realistic operational constraints, helping both researchers and practitioners avoid the trap of overestimating present capabilities.

Equally critical are the ethical and privacy dimensions of deploying such systems in real-world environments. In this study, we addressed these concerns by systematically removing geotags from all shared datasets and by blurring faces and license plates in illustrative examples. These measures ensured compliance with privacy safeguards and highlight that any practical deployment of this technology must treat ethical considerations as foundational, not peripheral, requirements.

In summary, the proposed method is best viewed as an augmentation technology that amplifies inspectors’ reach, improves triage efficiency, and systematically reduces inspection burdens, while still depending on human expertise for contextual interpretation.

7. Conclusions and Outlooks

Commercially available vision-enabled CAs show promise as first-pass screeners for construction-site safety, yet the present study confirms that they remain support tools in the hands of construction site professionals rather than standalone inspectors. Across five test runs (100 images each), the system achieved a mean AI-Infraction-Detection (AID) score of 46.6%. On average, the model correctly detected about 110 hazards per run but simultaneously generated ≈85 false alarms and missed about 43 genuine hazards, exposing three root causes: an oversimplified hazard taxonomy, shallow causal reasoning, and the absence of explicit scene context in single-frame images.

These findings suggest three immediate research priorities: (i) Systematic benchmarking—a controlled comparison of both proprietary models and open-source models is needed to distinguish intrinsically strong architectures from prompt- or data-limited ones and to guide model selection for specific safety domains. (ii) Taxonomy refinement—refining the label set in collaboration with safety experts, from coarse classes such as “equipment hazard” to granular sub-categories, should reduce false positives by providing the model with clearer decision boundaries. (iii) Causal and contextual augmentation—newer multimodal transformers that fuse vision with temporal cues, graph-based object relations, or lightweight on-device small language models can be fine-tuned to reason about how objects interact and how sequence or duration modulates risk.

In parallel, a multi-agent framework in which specialized sub-agents focus on distinct hazard clusters can be trained with reinforcement-learning signals derived from task-level metrics (e.g., precision, recall, and AID score). By allowing agents to cooperate or compete under a shared reward function, the ensemble can learn complementary decision policies that collectively raise overall reliability while containing false-alarm rates. Finally, vision alone under-represents the dynamic complexity of construction sites. Fusing real-time sensor telemetry, lighting, microclimate, particulate load, and equipment telematics with image data would give the agent the environmental context it presently lacks and should improve both precision and recall for latent or evolving hazards. Prototyping such sensor-enriched pipelines, followed by open-source deployment, constitutes a coherent roadmap toward an LLM assistant that meaningfully augments, rather than attempts to replace, human safety expertise.