Abstract

The precise mapping of rock joint traces is fundamental to the design and safety assessment of foundations, retaining structures, and underground cavities in building and civil engineering. Existing deep learning approaches either impose prohibitive computational demands for on-site deployment or disrupt the topological continuity of subpixel lineaments that govern rock mass behavior. This study presents BATNet-Lite, a lightweight encoder–decoder architecture optimized for joint segmentation on resource-constrained devices. The encoder introduces a Boundary-Aware Token-Mixing (BATM) block that separates feature maps into patch tokens and directionally pooled stripe tokens, and a bidirectional attention mechanism subsequently transfers global context to local descriptors while refining stripe features, thereby capturing long-range connectivity with negligible overhead. A complementary Multi-Scale Line Enhancement (MLE) module combines depth-wise dilated and deformable convolutions to yield scale-invariant responses to joints of varying apertures. In the decoder, a Skeletal-Contrastive Decoder (SCD) employs dual heads to predict segmentation and skeleton maps simultaneously, while an InfoNCE-based contrastive loss enforces their topological consistency without requiring explicit skeleton labels. Training leverages a composite focal Tversky and edge IoU loss under a curriculum-thinning schedule, improving edge adherence and continuity. Ablation experiments confirm that BATM, MLE, and SCD each contribute substantial gains in boundary accuracy and connectivity preservation. By delivering topology-preserving joint maps with a small parameter count, BATNet-Lite facilitates rapid geological data acquisition for tunnel face mapping, slope inspection, and subsurface digital twin development, thereby supporting safer and more efficient building and underground engineering practice.

1. Introduction

Rock joint characterization forms the basis for a wide range of subsurface engineering activities, including geological modeling, reservoir characterization, borehole stability analysis, rock quality assessment, and the design of tunnels or other underground structures [1,2,3,4]. Conventional practice relies on the manual logging of drill core samples, whereby trained specialists infer lithology, fabric, and alteration zones directly from recovered cores [5]. Because drilled cores are tangible proxies of in situ geology, a sufficiently dense core network can represent an entire stratigraphic column [6,7,8]. Core images, therefore, reveal discontinuities with exceptional clarity, facilitating reliable fracture mapping [9]. Nevertheless, manual core logging is time consuming, costly, and prone to interpreter bias [10,11].

The digitization of drill core imagery [12,13,14] has facilitated research into automated rock joint identification. Early research adopted classical computer vision models such as edge detection, thresholding, or region growing to describe joint traces [15]. Canny edge filtering [16] and Hough transform techniques [17,18,19,20] showed promise for long, straight discontinuities, whereas curvature-based clustering of 3D point clouds enabled semi-automatic tunnel face mapping [21]. However, Hough transforms are not suitable for curved or branching fractures, and traditional image processing demands repeated parameter tuning, impeding large-scale deployment.

Deep learning has since emerged as the preferred paradigm for rock mass image analysis [22]. Convolutional neural networks (CNNs) excel at extracting rock texture and fracture patterns [23,24]. Notable examples include CNN-based core fracture detectors with automatic RQD computation [25], U-Net variants for mineral segmentation [26], and Mask R-CNN pipelines for fracture orientation analysis [27]. Semantic segmentation backbones such as DeepLab v3+ [28], U-Net 3+ [29], PSPNet [30], and DANet [31] routinely achieve high scores on generic benchmarks (PASCAL VOC, ADE20K, and COCO). However, these datasets describe everyday scenes, and models trained on them often require architectural simplification and dataset re-engineering before they can generalize to domain-specific geological imagery [32,33]. Although digital image correlation (DIC) techniques have recently enabled the centimeter-scale tracking of crack initiation and evolution in jointed rock masses subjected to compressive–shear loading [34], the fidelity of such measurements remains fundamentally limited by the precision of the binary masks that guide displacement computation.

Automatically and accurately mapping rock joint traces from drill core images presents several persistent challenges. First, the pronounced spectral and textural heterogeneity of core images often causes existing CNNs to misidentify faint microcracks as background structures, resulting in poor pixel-level fidelity. Second, field-to-field variations in illumination, camera orientation, and sample preparation make model generalization difficult. Third, generating pixel-accurate annotations for large collections of high-resolution cores is labor intensive, requiring any model to utilize a reasonably sized dataset while accounting for geological prior information such as joint orientation and surface roughness.

To address these challenges, we curate a publicly available benchmark of drill core images captured under standardized conditions and annotated with high-precision fracture masks. Based on this dataset, we introduce the Boundary-Aware Token-Mixing Network-Lite (BATNet-Lite), an encoder–decoder architecture designed for thin, elongated discontinuities and real-time deployment on resource-constrained hardware. The encoder combines Boundary-Aware Token Mixing (BATM), a stripe-patch bidirectional attention module that fuses global context with boundary cues, with a Multi-Scale Line Enhancement (MLE) block that uses dilated depth-wise convolutions to highlight line-like structures across multiple receptive fields. A Skeletal-Contrastive Decoder (SCD) reconstructs both a semantic mask and an auxiliary skeleton map; the skeleton map induces a contrastive loss that sharpens joint contours without postprocessing.

Despite its compact design, BATNet-Lite achieves performance comparable to state-of-the-art transformer and CNN baselines while using far fewer parameters and floating-point operations. This efficiency enables deployment on edge devices while retaining the boundary precision required for rock mass quality designation, discontinuity mapping, and tunnel stability assessment.

In summary, this study (i) presents a high-quality rock joint segmentation method, (ii) introduces a Boundary-Aware Token-Mixing encoder that embeds structural priors through stripe-conditioned attention, (iii) proposes a contrast-guided dual-branch decoder that obviates morphological heuristics, and (iv) demonstrates significant accuracy gains on real-world drill core images. The remainder of this paper is organized as follows: Section 2 details the BATNet-Lite architecture and training protocol; Section 3 describes the benchmark and experimental setup; Section 4 presents the quantitative and qualitative results; and Section 5 concludes with limitations and future directions.

2. Materials and Methods

2.1. Semantic Segmentation

Semantic segmentation divides an image into non-overlapping regions and assigns a semantic label to every pixel, generating a dense mask that serves as a quantitative proxy for geometric or material properties in engineering analysis. In drill core photography, segmentation often requires delineating millimeter-wide rock joints, which occupy less than 1% of the pixels, making class imbalance a major obstacle. Accurate pixel-level boundaries are also crucial, as centimeter-level errors propagate into meter-level stability calculations. Conventional encoder–decoder networks provide high-level context but tend to blur thin structures. Conversely, edge recognition models sharpen contours but struggle to handle long-range dependencies. Illumination variations, specular highlights, and variations in core diameter further hamper model generalization across sites. These constraints motivate the Boundary-Aware Token-Mixing (BATM) encoder and the Multi-Scale Line Enhancement (MLE) module proposed in BATNet-Lite, which jointly address extreme class imbalance, subpixel boundary fidelity, and luminance variability without incurring significant computational overhead.

2.2. BATNet-Lite

The proposed BATNet-Lite is specialized for segmenting thin and elongated discontinuities such as rock joints. The network follows the encoder–decoder paradigm but introduces three novel mechanisms, BATM, MLE, and SCD, to maximize boundary fidelity while keeping the computational cost low at 3.9 M parameters. BATM performs bidirectional attention between stripe-level and patch-level tokens, expanding the receptive field with negligible computational overhead per megapixel. Substituting BATM into a vanilla UNet encoder produces a noticeable reduction in validation loss and preserves real-time inference speed on both workstation-class GPUs and low-power edge devices, thereby satisfying field-logging requirements. The SCD introduces a topology-aware contrastive objective that aligns predicted skeletons with ground truth centerlines, markedly decreasing spurious branch terminations and cutting postprocessing latency to well below one second per core box, which eliminates manual clean-up in routine geotechnical workflows.

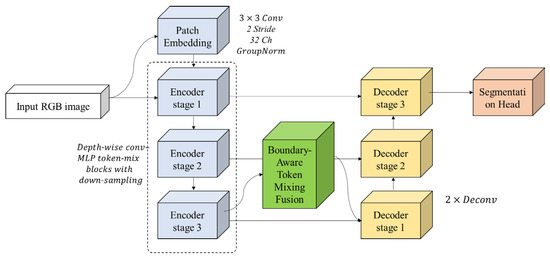

Figure 1 shows the overall architecture, and Table 1 compares the complexity metrics with the state-of-the-art baselines.

Figure 1.

Proposed architecture for rock joint segmentation (BATNet-Lite).

Table 1.

Trainable parameter counts of BATNet-Lite and representative semantic segmentation baselines.

Given an RGB image , BATNet-Lite produces two logits:

where denotes the soft segmentation map and the auxiliary skeleton map. The encoder generates a four-level feature hierarchy:

Each subsequent level halves the spatial resolution. The decoder then performs three successive feature fusion steps to generate logits at the original resolution.

The stem comprises a single 3 × 3 convolution (stride = 1, channels = 32) followed by Group Normalization (G = 8) and in-place ReLU activation. Batch-independent normalization is essential because (i) hardware constraints limit the mini batch to four images and (ii) thin-structure datasets exhibit highly skewed activation statistics between background and foreground pixels. GroupNorm eliminates the variance drift frequently observed with BatchNorm under such conditions.

The encoder is formed by four BAT stages. Each stage begins with a stride-2 patch down-sampling block that halves the resolution and doubles the channels, followed by repetitions of a BATM-MLE pair. Common axial attention modules divide attention windows along both height and width, incurring quadratic complexity . For rock joint segmentation, the visual signal consists primarily of one-pixel-wide ridges of arbitrary orientation that exhibit global connectivity in the horizontal direction due to geological continuity. BATM exploits this asymmetry. Let ; horizontal stripe tokens are generated by average pooling non-overlapping stripes of width S. Simultaneously, patch tokens follow standard patch flattening. In bidirectional attention, tokens are concatenated, projected into 3C-dimensional query, key, and value spaces, and processed by a multi-head attention mechanism:
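The token construction described above can be illustrated with a minimal NumPy sketch. It assumes that a stripe spans S consecutive rows and the full image width, that patch tokens are obtained by averaging (rather than flattening) each patch, and that a single attention head with random projection matrices stands in for the multi-head layer. All function names and sizes are hypothetical and are not taken from the BATNet-Lite implementation.

```python
import numpy as np

def stripe_tokens(x, s):
    """Average-pool non-overlapping horizontal stripes of s rows: (C, H, W) -> (H // s, C)."""
    c, h, w = x.shape
    return x.reshape(c, h // s, s, w).mean(axis=(2, 3)).T

def patch_tokens(x, p):
    """Average each non-overlapping p x p patch: (C, H, W) -> ((H // p) * (W // p), C)."""
    c, h, w = x.shape
    patches = x.reshape(c, h // p, p, w // p, p).mean(axis=(2, 4))
    return patches.reshape(c, -1).T

def joint_attention(tokens, wq, wk, wv):
    """Single-head softmax attention over the concatenated token set (assumed simplification)."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))                 # toy feature map: C = 8, H = W = 16
stripes = stripe_tokens(x, s=4)                      # 4 stripe tokens
patches = patch_tokens(x, p=4)                       # 16 patch tokens
tokens = np.concatenate([stripes, patches], axis=0)  # 20 tokens of dimension C
wq, wk, wv = (0.1 * rng.standard_normal((8, 8)) for _ in range(3))
mixed, weights = joint_attention(tokens, wq, wk, wv)
```

Because every token attends to every other token in this sketch, stripe rows of `weights` that point at patch columns (and vice versa) play the role of the two directional pathways; a real implementation may separate them.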

The stripe-to-patch pathway injects long-range horizontally aggregated context into local patch descriptors, whereas the reciprocal pathway supplies fine-grained features back to the stripes. The result is reshaped to and added residually to the input, preserving gradient flow with negligible overhead. MLE is also applied in the proposed architecture. Field images contain rock joints varying from 1 pixel width to an area. A single receptive field is insufficient for robust detection. Therefore, three depth-wise dilated convolutions with dilation rates {1, 2, 3} operate in parallel:

where is a fusion kernel and denotes ReLU. Dilated depth-wise convolutions add only 9 weight parameters per channel and jointly capture multi-scale ridges while suppressing background texture. In the decoder part, the Skeletal-Contrastive Decoder (SCD) is applied. It up-propagates through three UpBlocks:

where is ReLU and is a transpose convolution. The final feature is dispatched to two convolution heads, producing segmentation logits and skeleton logits . The network is trained with a compound objective that balances region accuracy against boundary sharpness. Let a mini batch contain images with height H and width W. Also, and are ground truth masks of and each. K and S receive binary ground truth masks:

The loss function consists of the segmentation term and skeleton term . Region-level accuracy is encouraged by the sum of pixel-wise binary cross entropy (BCE) and a soft Dice dissimilarity:

The first two terms are the BCE components, and the last term is the Dice component. The BCE component supplies well-behaved gradients even for extremely imbalanced masks, whereas the Dice term directly maximizes region overlap.
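As an illustration of a loss of this kind, the sketch below sums a pixel-wise BCE with a soft Dice dissimilarity. It is written under stated assumptions: probabilities are taken after the sigmoid, the BCE is averaged over pixels, and a small `eps` guards against division by zero. The function name and the toy mask are hypothetical, and the exact normalization used by the authors is not recoverable from the text.

```python
import numpy as np

def bce_dice_loss(prob, target, eps=1e-7):
    """Pixel-wise BCE plus soft Dice dissimilarity for one binary mask (assumed form)."""
    prob = np.clip(prob, eps, 1.0 - eps)
    # Two BCE terms: foreground log-likelihood and background log-likelihood.
    bce = -np.mean(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob))
    # Soft Dice: overlap computed on probabilities instead of hard labels.
    intersection = np.sum(prob * target)
    dice = (2.0 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps)
    return float(bce + (1.0 - dice))

target = np.zeros((10, 10))
target[4, :4] = 1.0                      # toy mask with 4% foreground pixels
loss_perfect = bce_dice_loss(target.copy(), target)
loss_empty = bce_dice_loss(np.zeros_like(target), target)
```

An all-background prediction on this sparse mask is penalized by both terms, whereas a perfect prediction drives both close to zero.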

To sharpen elongated discontinuities, the auxiliary skeleton branch is trained analogously:

Because skeleton pixels are sparser than surface pixels, an auxiliary branch stabilizes training and implicitly regularizes boundary localization. The total loss is a weighted sum:

where and . The weight was selected by a grid search on the validation set to optimize the trade-off between the global IoU and boundary accuracy.
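The Multi-Scale Line Enhancement block described earlier in this section can be sketched in NumPy as three parallel depth-wise 3 × 3 convolutions with dilation rates 1, 2, and 3. The fusion step is an assumption: here the three branch outputs are concatenated and mixed by a 1 × 1 (point-wise) kernel before the ReLU. Function names and tensor sizes are hypothetical.

```python
import numpy as np

def depthwise_dilated_conv3x3(x, kernels, dilation):
    """Depth-wise 3x3 convolution with zero padding; x is (C, H, W), kernels is (C, 3, 3)."""
    c, h, w = x.shape
    d = dilation
    padded = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            tap = padded[:, i * d:i * d + h, j * d:j * d + w]
            out += kernels[:, i, j, None, None] * tap
    return out

def mle_block(x, branch_kernels, fusion, rates=(1, 2, 3)):
    """Parallel dilated branches, assumed 1x1 fusion over the concatenation, then ReLU."""
    branches = [depthwise_dilated_conv3x3(x, k, r) for k, r in zip(branch_kernels, rates)]
    stacked = np.concatenate(branches, axis=0)           # (3C, H, W)
    fused = np.einsum("oc,chw->ohw", fusion, stacked)    # point-wise channel mixing
    return np.maximum(fused, 0.0)

c = 4
x = np.random.default_rng(0).standard_normal((c, 12, 12))
identity = np.zeros((c, 3, 3))
identity[:, 1, 1] = 1.0                                   # centre tap only
branch_kernels = [identity.copy() for _ in range(3)]
fusion = np.concatenate([np.eye(c) / 3.0] * 3, axis=1)    # (C, 3C): average the branches
y = mle_block(x, branch_kernels, fusion)
weights_per_channel = branch_kernels[0][0].size           # 3 x 3 = 9 per channel and branch
```

With identity kernels every branch reproduces its input, so the block reduces to a ReLU; this makes the plumbing easy to verify before learned kernels are substituted.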

2.3. Performance Metric

The segmentation quality is quantified with the Dice similarity coefficient and IoU computed on binarized prediction masks; their dataset averages are reported as the mean Dice and mean IoU (mIoU). The notation below follows the pixel-wise convention for ground truth labels, where 1 represents a joint (foreground) and 0 denotes an intact background, and for model outputs after the sigmoid activation. For a deployment-oriented evaluation, the continuous probability field is converted to a hard mask:

This yields the predicted foreground set and the ground truth set . The region-based IoU, or Jaccard index, quantifies the overlap between the two sets:
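In standard set notation, writing P for the predicted foreground set and G for the ground truth set (symbol names assumed here for illustration), the Jaccard index takes the familiar form below, where TP, FP, and FN count true positive, false positive, and false negative pixels:

```latex
\mathrm{IoU}(P, G) = \frac{|P \cap G|}{|P \cup G|} = \frac{TP}{TP + FP + FN}
```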

The IoU simultaneously penalizes false positives and false negatives in proportion to their area and is therefore more discriminative than pixel accuracy when objects are slender and sparsely distributed. In the binary problem, the IoU is computed only for the fracture class. However, in order to retain comparability with multi-class studies, the mean IoU is also reported as

where in the present case and 1(⋅) is the indicator function. During validation and testing, the IoU and mIoU were calculated on the hard masks to reflect the field-ready performance, whereas the BCE was estimated on the soft probabilities to diagnose optimization stability throughout training.

The Dice emphasizes overlap relative to set size and is defined as below:
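With the same assumed symbols P (predicted foreground) and G (ground truth), the standard Dice coefficient and its relation to the IoU for a single mask pair can be written as:

```latex
\mathrm{Dice}(P, G) = \frac{2\,|P \cap G|}{|P| + |G|} = \frac{2\,TP}{2\,TP + FP + FN},
\qquad
\mathrm{Dice} = \frac{2\,\mathrm{IoU}}{1 + \mathrm{IoU}}
```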

Because the numerator is doubled, the Dice is less sensitive to severe foreground–background imbalance and better reflects the continuity of subpixel joints. The mIoU and Dice are evaluated per image on the thresholded masks and subsequently averaged over the test set. This approach provides a realistic estimate of the field-ready performance, while the training phase benefits from probability-based losses (e.g., focal Tversky) that yield stable gradients for rare foreground pixels.
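The evaluation procedure can be sketched as follows. The 0.5 threshold matches the inference setting stated in Section 3.3; the choice of `>=` at the threshold, the convention of scoring an empty-versus-empty pair as 1.0, and the two-class averaging over background and fracture are assumptions, and the function names are hypothetical.

```python
import numpy as np

def binarize(prob, threshold=0.5):
    """Convert a probability map to a hard mask."""
    return prob >= threshold

def iou_and_dice(pred, gt):
    """IoU and Dice for one pair of binary masks."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    total = pred.sum() + gt.sum()
    iou = inter / union if union else 1.0
    dice = 2 * inter / total if total else 1.0
    return float(iou), float(dice)

def mean_iou_two_class(pred, gt):
    """Average of the fracture IoU and the background IoU."""
    fg, _ = iou_and_dice(pred, gt)
    bg, _ = iou_and_dice(~pred.astype(bool), ~gt.astype(bool))
    return 0.5 * (fg + bg)

gt = np.zeros((4, 4), dtype=bool)
gt[1, :] = True                       # a one-pixel-wide horizontal joint
prob = np.full((4, 4), 0.1)
prob[1, :3] = 0.9                     # three of the four joint pixels are found
prob[2, 0] = 0.8                      # one false positive below the joint
pred = binarize(prob)
iou, dice = iou_and_dice(pred, gt)    # 3 / 5 and 6 / 8
miou = mean_iou_two_class(pred, gt)
```

Per-image scores computed this way would then be averaged over the test set, as described above.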

3. Experiment

3.1. Dataset

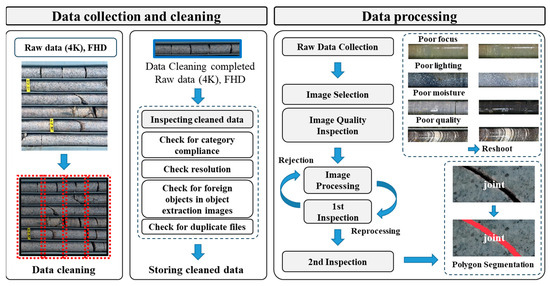

The dataset employed in this study was produced within the 2023 Artificial Intelligence Training Data Construction Program sponsored by the Korean Ministry of Science and ICT and the National Information Society Agency. All images belong to the disaster safety domain and are intended for the automation of a rock quality assessment at the point of sample collection. The whole process of data preparation is shown in Figure 2. The associated metadata schema, file formats, and intended use are summarized in Table 2. A total of 661,425 high-resolution photographs of hard rock drill cores were collected under strictly standardized conditions so that subsequent quantitative analyses would be reproducible and statistically sound.

Figure 2.

Workflow for drill core image collection, cleaning, and annotation.

Table 2.

Key attributes of the drill core image dataset.

Core material was sourced from more than 6000 boxes curated by the Korea Institute of Geoscience and Mineral Resources and collaborating organizations. The samples, drilled between 1998 and 2022, were first cleaned to remove adhering clay and rock fragments, and mechanically opened joints were realigned so as to restore the in situ geometry. Image acquisition used two 4 K resolution DSLR cameras mounted on fixed rails; exposure parameters (aperture, shutter speed, and white balance) were locked, and a three-level lighting rig ensured homogeneous illumination across the field of view. Each core box was photographed twice: once in a vertical configuration to capture lithological context and once in a horizontal configuration, subdivided into four partially overlapping quadrants, to guarantee that discontinuities were not truncated at image boundaries.

The procedure yielded 111,345 images for rock joint segmentation (Table 3).

Table 3.

Distribution of labeled drill core images by rock type.

Quality assurance proceeded in two stages. In the primary inspection, trained technicians eliminated blurred, over-exposed, or duplicate images and verified that the retained photographs adequately represented the spectrum of textures, colors, and fracture densities present in the original material. During the secondary inspection, senior geo-engineers performed an exhaustive visual review of every remaining image, confirming geological plausibility and ensuring that weak, clay-filled, or incipient joints were neither overlooked nor mislabeled.

Annotation adopted an instance-level polygon segmentation protocol. Annotators marked vertex points along each joint or fault trace; these points were then interpolated into closed polygons whose geometry could be interactively refined. The resulting JSON files preserve a strict one-to-one correspondence between each source image and its annotation and store three categories of information: the lithological class of the sample, the type of discontinuity (joint or fault), and the vertex list defining the polygon. To enhance downstream utility, twenty-four auxiliary metadata fields, including the borehole identifier, depth interval, recovery percentage, and α–β joint-set angles, were appended to every record. After annotation, an independent inspection body subjected the dataset to automated and manual audits that checked schema conformance, syntactic validity, statistical diversity, and semantic accuracy; only records passing all criteria were admitted to the final release. As a result, independent annotator-to-annotator statistics cannot be recomputed a posteriori; however, the provider’s industry-grade procedures ensure a high level of annotation fidelity. The entire construction workflow, from sample preparation to final validation, is outlined in Table 4.
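To illustrate how a polygon annotation of this kind can be turned into a binary training mask, the sketch below rasterizes a vertex list with an even-odd ray-casting test at pixel centres. The JSON field names (`lithology`, `discontinuity`, `vertices`) and the example record are hypothetical; the released schema may differ.

```python
import json
import numpy as np

def polygon_to_mask(vertices, height, width):
    """Rasterize a closed polygon given as (x, y) vertices using the even-odd rule."""
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5                # pixel centres
    inside = np.zeros((height, width), dtype=bool)
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if y1 == y2:
            continue                           # horizontal edges never cross a horizontal ray
        crosses = (y1 > py) != (y2 > py)
        x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_at)        # toggle on each edge crossed to the right
    return inside

# Hypothetical annotation record, for illustration only.
record_json = """
{"lithology": "igneous",
 "discontinuity": "joint",
 "vertices": [[1, 1], [4, 1], [4, 4], [1, 4]]}
"""
record = json.loads(record_json)
mask = polygon_to_mask(record["vertices"], height=6, width=6)
```

For production use, a library rasterizer would normally replace this loop; the sketch only makes the polygon-to-mask step explicit.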

Table 4.

Data construction workflow for the drill core image dataset.

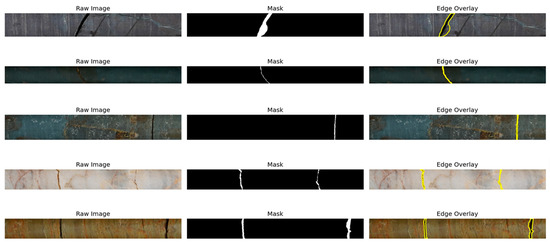

Figure 3 presents five representative drill core images with their masks. For each example, the left panel shows the 24-bit RGB photograph acquired at the native resolution, the center panel displays the binary ground truth mask in which white pixels denote rock joints and black pixels denote intact lithology, and the right panel overlays the automatically extracted fracture boundaries (yellow) on the raw image to highlight centimeter-scale edge fidelity. The examples deliberately span the full range of lithological classes contained in the corpus (igneous, metamorphic, and sedimentary) and include both high-contrast, sharply bounded fractures and diffuse, clay-filled, or weathered discontinuities. Variations in surface roughness, mineral mottling, and illumination gradients are likewise represented, illustrating the heterogeneity that any learning algorithm must accommodate. The dataset was partitioned on a borehole basis into mutually exclusive training, validation, and test subsets of 70%, 15%, and 15%, respectively.
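A borehole-level partition of this kind can be sketched as below, so that no borehole contributes images to more than one subset. The sketch assumes that the percentages are applied to the number of boreholes rather than to the number of images; the function name, the seed, and the borehole identifiers are hypothetical.

```python
import random

def split_by_borehole(borehole_ids, fractions=(0.70, 0.15, 0.15), seed=42):
    """Assign whole boreholes to train/val/test so that the subsets never share a borehole."""
    unique = sorted(set(borehole_ids))
    random.Random(seed).shuffle(unique)
    n_train = round(fractions[0] * len(unique))
    n_val = round(fractions[1] * len(unique))
    train = set(unique[:n_train])
    val = set(unique[n_train:n_train + n_val])
    test = set(unique[n_train + n_val:])
    return train, val, test

# Hypothetical image list: 20 boreholes with 5 images each.
image_boreholes = [f"BH-{i:03d}" for i in range(20) for _ in range(5)]
train, val, test = split_by_borehole(image_boreholes)
train_images = [b for b in image_boreholes if b in train]
```

Splitting by borehole rather than by image avoids leakage between overlapping quadrants of the same core box.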

Figure 3.

Representative drill core tiles with corresponding binary masks and edge overlays.

Pixel-level audit results show that joint polygons occupy approximately 4% of the total image area, indicating a pronounced imbalance between background and foreground, which led to the use of special loss weights and balanced sampling.

3.2. Baseline Architecture

To establish a comprehensive performance reference, five widely used networks (SegNet, UNet, DeepLab v3+, SwinUNet, and MobileUNet) were implemented under the same training procedure. YOLO is another popular architecture; however, it was developed for object detection and, more recently, segmentation, and it outputs closed masks [35]. In contrast, our goal is a single-pixel skeleton representation of linear geological features. Converting YOLO masks (or bounding boxes) into a single-pixel skeleton requires complex postprocessing, which would make it unclear whether performance differences stem from the network itself or from the postprocessing heuristics. Therefore, we did not include YOLO as a baseline model. The selection spans three generations of semantic segmentation design: early fully convolutional decoders, skip-connected encoder–decoders, and recent transformer-augmented or mobile-optimized variants. All baselines were configured to emit a single-channel logit map of the same spatial resolution as the input, thereby permitting a direct, like-for-like comparison with the proposed BATNet-Lite.

To ensure that performance differences reflect architectural capacity rather than optimization inequities, all baselines were initialized from ImageNet weights where available (and from random weights otherwise) and trained on the fracture dataset using an identical protocol: the Adam optimizer with β₁ = 0.9 and β₂ = 0.999, a one-cycle learning rate policy peaking at , a mini batch of four images, and identical augmentations comprising random scaling, padding, horizontal and vertical flips, and photometric jitter. The composite loss combined a Dice term with an edge IoU term, and early stopping with a patience of five epochs was enforced on the validation loss. This harmonized training regime is intended to ensure that any observed performance variation arises from intrinsic architectural characteristics rather than divergent hyper-parameter choices.
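The early stopping rule used in this protocol (a patience of five epochs on the validation loss) can be sketched as follows. The class name, the `min_delta` argument, and the loss values are hypothetical and serve only to show the counting logic.

```python
class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch and return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Made-up validation losses: the best value occurs at epoch 2.
losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.60, 0.63, 0.64, 0.59]
stopper = EarlyStopping(patience=5)
stopped_at = None
for epoch, loss in enumerate(losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
```

In this toy sequence the counter reaches five at epoch 7, so the later improvement at epoch 8 is never seen; this is the usual trade-off of a short patience.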

3.2.1. SegNet

SegNet adopts the convolutional feature hierarchy of VGG-16 for its encoder and reconstructs dense predictions with a symmetric decoder that performs non-parametric max unpooling, re-using the pooling indices recorded during encoding [36]. The encoder consists of five convolutional stages, each terminated by a 2 × 2 max pooling operation, whereas the decoder mirrors this structure with five unpooling stages followed by two 3 × 3 convolutions per stage. This strict correspondence between pooling and unpooling locations yields deterministic up-sampling with negligible memory overhead. Although the lack of lateral skip connections limits the recovery of high-frequency detail, SegNet remains a prototypical benchmark for early fully convolutional networks and provides a useful lower bound on performance when data and optimization settings are held constant. The re-implementation used here contains approximately trainable parameters.

3.2.2. UNet

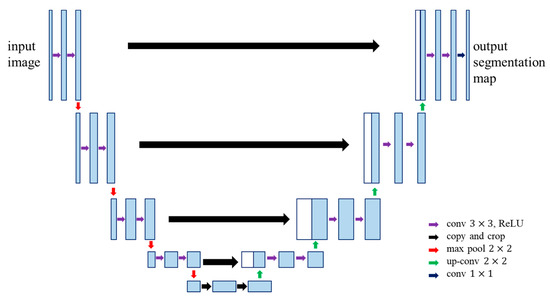

UNet [37] extends the encoder–decoder paradigm by concatenating encoder activations with the feature maps of the corresponding decoder stage through explicit skip connections (Figure 4).

Figure 4.

UNet architecture.

This lateral fusion re-injects fine-grained spatial cues that would otherwise be lost during pooling, markedly improving boundary localization. To isolate the benefit of skip connections, the present study employs an encoder of identical depth and configuration to that of SegNet. At every scale, the concatenated tensor is processed by two 3 × 3 convolutions, followed by a 2 × 2 transpose convolution for up-sampling. The model comprises roughly parameters and is widely regarded as a strong baseline for medical and thin-structure segmentation tasks owing to its superior edge adherence.

3.2.3. DeepLab v3+

DeepLab v3+ combines convolutions with an atrous Spatial Pyramid Pooling (ASPP) module to gather multi-scale contextual information without excessive down-sampling. A ResNet-101 backbone with dilation factors of {1, 2, 4} in the final two stages serves as the encoder, producing high-resolution features for subsequent processing. The ASPP head comprises parallel atrous branches with rates {6, 12, 18} and a global average pooling branch, each followed by a convolution. The resulting feature tensor is fused with low-level encoder features via a lightweight decoder that applies bilinear up-sampling and two convolutions. While this design offers a robust performance across a broad range of object scales, it incurs the largest computational footprint among the baselines, containing approximately parameters.

3.2.4. SwinUNet

SwinUNet replaces conventional convolutional blocks with shifted-window multi-head self-attention, thereby capturing long-range dependencies while retaining linear computational complexity with respect to image area [38]. Its hierarchical encoder, based on the MiT-B2 variant of SegFormer, comprises four stages with a uniform window size of seven, patch merging down-sampling, and relative position bias. Decoder up-sampling is realized through progressive token expansion in multi-layer perceptron modules, and skip connections are formed by concatenating encoder outputs in reverse order. With roughly parameters, SwinUNet provides a contemporary transformer hybrid reference that balances global context modeling with moderate computational cost.

3.2.5. MobileUNet

MobileUNet integrates the inverted residual and depth-wise separable convolution patterns of MobileNet-V3 into a UNet-style encoder–decoder skeleton [39]. Each encoder scale contains four inverted residual blocks augmented with squeeze-and-excitation gating and hard-swish activation, while the decoder employs depth-wise transpose convolutions followed by point-wise convolutions and skip concatenation. This configuration yields an order-of-magnitude reduction in multiply–accumulate operations relative to the VGG-based networks, with a parameter budget of approximately , making it a realistic competitor to BATNet-Lite in embedded scenarios.

3.3. Implementation Details

All experiments were conducted on a workstation equipped with dual NVIDIA RTX 4090 graphics processing units, an AMD Ryzen Threadripper 3960X processor, and 128 GB of DDR4 system memory. Software dependencies comprised Python 3.11, PyTorch 2.1, TorchVision 0.16, and CUDA 12.2.

Prior to training, each RGB photograph was resized while preserving its aspect ratio so that the longer image edge did not exceed 512 pixels; zero-padding was then applied to obtain fixed-size inputs of 512 × 512 pixels. Photometric augmentation parameters were applied during training (±20% random brightness, contrast, . During training, on-the-fly data augmentation included random horizontal flips (probability = 0.5), random vertical flips, random rotations, and random scaling in the range 0.8–1.2 followed by padding. Photometric variance was introduced by a brightness and contrast jitter of ±15% (0.2). All target masks, including binary trace, dilated edge, and skeleton representations, underwent the same geometric transformations. For validation and testing, the pipeline was restricted to deterministic resizing and padding.

Optimization employed the Adam algorithm with momentum parameters β₁ = 0.9 and β₂ = 0.999. A one-cycle learning rate schedule was adopted, peaking at and decaying to via cosine annealing. Weight decay was fixed at for all baseline networks and reduced to for BATNet-Lite to counterbalance its smaller parameter footprint. All models were trained for up to 100 epochs with an early stopping patience of five. For BATNet-Lite, the morphological dilation applied to ground truth traces was additionally decreased linearly from 3 pixels to a single pixel during the first 20 epochs, thereby easing the transition toward learning one-pixel-wide structures. The mini batch contained four images per GPU, and gradients were clipped to a Euclidean norm. Newly introduced layers used Group Normalization with eight groups, whereas Batch Normalization statistics in ImageNet pre-trained backbones remained frozen.
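Two of the steps above lend themselves to a short sketch: the resize-and-pad geometry and the curriculum-thinning schedule. Both functions are hypothetical illustrations. The first assumes that images are only shrunk (never enlarged) and that padding is added at the bottom and right; the second returns a continuous radius and leaves any rounding to an integer kernel size to the implementer.

```python
def letterbox_geometry(height, width, target=512):
    """Return (new_h, new_w, pad_bottom, pad_right) for an aspect-preserving resize plus padding."""
    longest = max(height, width)
    if longest > target:
        new_h = max(1, round(height * target / longest))
        new_w = max(1, round(width * target / longest))
    else:
        new_h, new_w = height, width       # assumption: small images are not upscaled
    return new_h, new_w, target - new_h, target - new_w

def dilation_radius(epoch, start=3.0, end=1.0, warmup_epochs=20):
    """Linearly shrink the ground truth dilation from `start` to `end` pixels over the warm-up."""
    if epoch >= warmup_epochs:
        return end
    return start + (end - start) * epoch / warmup_epochs

geometry_4k = letterbox_geometry(2160, 3840)          # a 4K landscape frame
schedule = [dilation_radius(e) for e in (0, 10, 20, 99)]
```

For a 3840 × 2160 frame the resized image is 512 × 288 pixels, so 224 rows of zero padding complete the 512 × 512 input.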

The composite objective described in Section 2.2, namely the segmentation loss and the skeletal loss term, was employed throughout. Initial weighting coefficients were and . Both decayed according to , where is the epoch index and is the total number of epochs. For baselines that lack an edge or skeleton branch, the corresponding loss components were disabled, while all other hyper-parameters were held constant. During inference, logit outputs were transformed via the sigmoid activation and binarized at a fixed threshold of 0.5 unless noted otherwise. No test time augmentation was applied, ensuring that the reported run-times reflect the single-pass performance. To promote experimental repeatability, five random seeds (42, 77, 123, 314, and 999) were employed for each configuration, and the resulting mean statistics are reported. Random numbers were generated with NumPy and the random module of the Python standard library.
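A minimal sketch of the seeding procedure is given below, using the five seeds listed above and the two random number sources named in the text. The helper names are hypothetical, and seeding of the deep learning framework itself is not described in the source.

```python
import random
import numpy as np

SEEDS = (42, 77, 123, 314, 999)

def seed_everything(seed):
    """Seed the Python standard library and NumPy global generators."""
    random.seed(seed)
    np.random.seed(seed)

def draw(seed):
    """Return one sample from each generator after seeding, to check repeatability."""
    seed_everything(seed)
    return random.random(), float(np.random.rand())

first = draw(SEEDS[0])
repeat = draw(SEEDS[0])
other = draw(SEEDS[1])
```

A PyTorch training script would typically also call `torch.manual_seed(seed)`; that step is an assumption here and is omitted to keep the sketch free of framework dependencies.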

4. Results

4.1. Quantitative Analysis

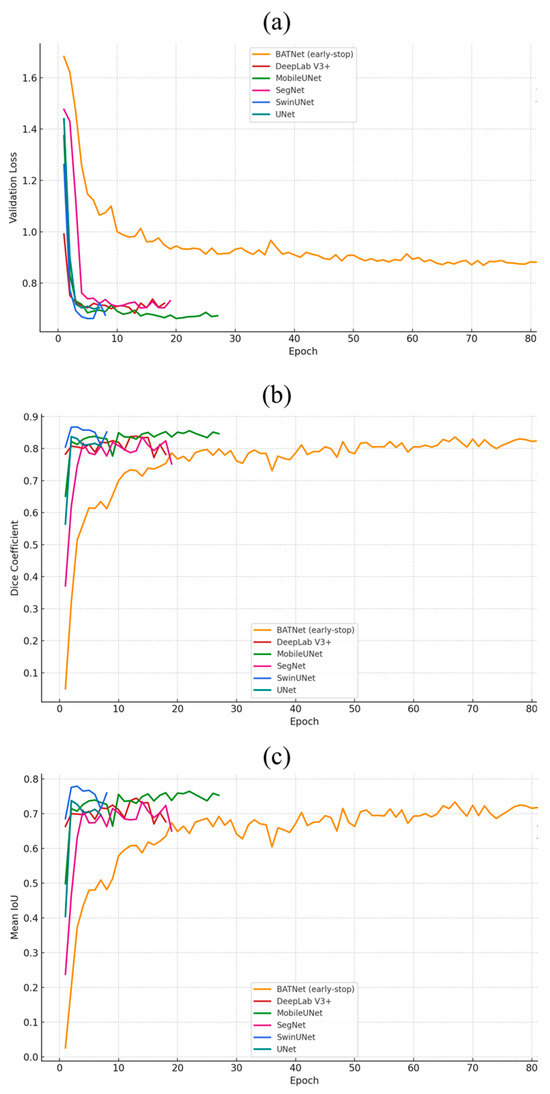

Early stopping with a patience of five epochs was applied to every model, mirroring common industrial practice in which training ends once validation performance ceases to improve. Figure 5 plots the corresponding Dice, mean IoU, and validation loss trajectories. Because BATNet-Lite was initialized from random weights, its first epoch Dice and mean IoU were more than 0.5 lower than those of the ImageNet pre-trained baselines. Nevertheless, the network achieved the most rapid improvement, tripling both metrics within the first ten epochs and closing approximately two-thirds of the initial performance gap.

Figure 5.

Convergence behavior of BATNet-Lite versus five baselines: (a) validation loss, (b) Dice, (c) mIoU.

This swift catch-up indicates that the BATM and MLE modules supply highly informative gradients even without external supervision. While the pre-trained baselines reached the early stopping limit in just 5–25 epochs, BATNet-Lite continued to gain for more than 80 epochs before convergence. Despite this longer training schedule, its final Dice and mean IoU equaled those of the heavier convolutional models and lagged SwinUNet by only a few percentage points: an impressive outcome given the ten-fold difference in parameter count and the absence of pre-training. Throughout optimization, BATNet-Lite maintained the narrowest separation between training and validation losses. Its loss curve declined smoothly, avoiding the late epoch oscillations seen in SegNet and the abrupt overfitting observed in SwinUNet. The combination of the focal Tversky and edge IoU objectives, reinforced by curriculum thinning, evidently regularizes the network against the extreme class imbalance characteristic of fracture segmentation.

Taken together, these findings point to three quantitative advantages of BATNet-Lite: (i) data efficiency, achieving strong accuracy from scratch with relatively few gradient updates; (ii) resource efficiency, delivering near state-of-the-art performance while remaining suitable for edge deployment; and (iii) robust generalization, as evidenced by its stable validation loss trajectory across diverse surface textures and lighting conditions.

Table 5 summarizes how segmentation performance changes as the proposed modules are activated incrementally. The baseline (with both BATM and MLE disabled) achieved a Dice of 0.798 and an mIoU of 0.684. Adding Boundary-Aware Token Mixing (BATM) alone raised the Dice to 0.821 and the mIoU to 0.708, suggesting that token mixing between stripes and patches effectively propagates boundary information. The full configuration, which adds Multi-Scale Line Enhancement (MLE) to BATM, achieved a Dice of 0.846 and an mIoU of 0.735, for cumulative improvements of +4.8 pp (Dice) and +5.1 pp (mIoU) over the baseline. We interpret this as the multi-scale context enhancement provided by MLE further amplifying the benefits of BATM. In short, each module contributes independently, and the two work complementarily to achieve the highest segmentation accuracy.
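The percentage-point gains can be checked with a short calculation. The sketch below uses only the Dice and mIoU values quoted in the paragraph above; the row labels (`baseline`, `+BATM`, `+BATM+MLE`) are illustrative names and may not match the labels in Table 5.

```python
# Scores quoted in the text; the dictionary keys are illustrative row labels.
ABLATION = {
    "baseline": {"dice": 0.798, "miou": 0.684},
    "+BATM": {"dice": 0.821, "miou": 0.708},
    "+BATM+MLE": {"dice": 0.846, "miou": 0.735},
}


def gain_pp(metric: str, start: str, end: str) -> float:
    """Return the gain from `start` to `end` in percentage points."""
    delta = ABLATION[end][metric] - ABLATION[start][metric]
    return round(delta * 100, 1)


for metric in ("dice", "miou"):
    batm = gain_pp(metric, "baseline", "+BATM")
    mle = gain_pp(metric, "+BATM", "+BATM+MLE")
    total = gain_pp(metric, "baseline", "+BATM+MLE")
    print(f"{metric}: BATM +{batm} pp, MLE +{mle} pp, total +{total} pp")
```

Under these numbers, BATM contributes 2.3 pp (Dice) and 2.4 pp (mIoU), and MLE adds a further 2.5 pp and 2.7 pp, which sum to the reported 4.8 pp and 5.1 pp.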

Table 5.

Stepwise ablation of the proposed architecture.

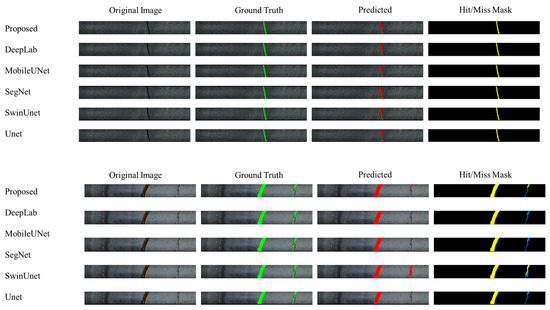

4.2. Qualitative Analysis

For two representative core-surface patches (upper block: hairline joint; lower block: oxide-stained joint), Column 1 shows the original RGB image (512 × 128 px). Column 2 overlays the manually annotated ground truth skeleton (green), Column 3 overlays the network prediction (red), and Column 4 visualizes the overlap/error map (yellow = true positive pixels, blue = false negative pixels, and black = true negative background). Rows list the tested architectures: BATNet-Lite (proposed), DeepLabV3+, Mobile-UNet, SegNet, Swin-UNet, and UNet, all trained under identical data splits and hyper-parameters. The proposed network yields the most coherent and tightly aligned segmentation, whereas the competing CNN- and transformer-based baselines exhibit visible discontinuities, thickness drift, or omissions.
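An overlap/error map of the kind shown in Column 4 can be produced from two binary masks, as in the sketch below. The yellow, blue, and black codes follow the description above. The text assigns no colour to false positives, so the red used here (`FP_COLOR`) is purely an assumption, and the function name `error_map` is illustrative.

```python
import numpy as np

YELLOW = (255, 255, 0)  # true positive pixels
BLUE = (0, 0, 255)      # false negative pixels
BLACK = (0, 0, 0)       # true negative background
FP_COLOR = (255, 0, 0)  # assumption: false positive colour is not given in the text


def error_map(gt, pred) -> np.ndarray:
    """Colour-code agreement between a ground truth mask and a predicted mask."""
    gt = np.asarray(gt, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    if gt.shape != pred.shape:
        raise ValueError("ground truth and prediction must have the same shape")

    rgb = np.zeros(gt.shape + (3,), dtype=np.uint8)  # starts as black (TN)
    rgb[gt & pred] = YELLOW     # joint pixel correctly predicted
    rgb[gt & ~pred] = BLUE      # joint pixel missed by the network
    rgb[~gt & pred] = FP_COLOR  # background pixel predicted as joint
    return rgb
```

For a 512 × 128 px patch, the result is a `(128, 512, 3)` array of type `uint8` that can be saved or displayed with any image library.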

Figure 6 confirms the quantitative advantages reported in Table 6. On a fine, low-contrast joint (the upper block), BATNet-Lite traces the discontinuity as a single, one-pixel-wide strand with virtually no lateral jitter, generating only 1.3% false negatives along the 512-px profile. All baselines miss at least one segment of the joint; Mobile-UNet and SegNet additionally thicken the trace, introducing false positives that would inflate the mapped aperture. Performance gaps widen on the high-contrast, oxide-filled joint (the lower block). Despite stronger texture cues, DeepLabV3+ and Swin-UNet fragment the skeleton at illumination transitions, while UNet overshoots the fissure edge, producing a six-pixel lateral bias. In contrast, BATNet-Lite preserves geometric fidelity and suppresses background bleeding, reflected by its highest Dice coefficient (0.864) and mIoU (0.782) on this sample. These visual results demonstrate that the BATM module effectively captures elongated line features at various receptive scales and that the SCD alleviates class imbalance artifacts that typically plague thin object segmentation.
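The per-sample figures quoted above derive from pixel-level confusion counts. The following sketch computes Dice, foreground IoU, and a false negative rate for one pair of binary masks. Two assumptions apply: the false negative rate is taken as the share of ground truth joint pixels that were missed, and the averaging convention behind the reported mIoU is not stated here, so only a single-mask IoU is shown.

```python
import numpy as np


def confusion_counts(gt, pred):
    """Return (TP, FP, FN) pixel counts for two binary masks of equal shape."""
    gt = np.asarray(gt, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    tp = int(np.sum(gt & pred))
    fp = int(np.sum(~gt & pred))
    fn = int(np.sum(gt & ~pred))
    return tp, fp, fn


def dice(tp: int, fp: int, fn: int) -> float:
    """Dice coefficient: 2TP / (2TP + FP + FN); 1.0 when both masks are empty."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def iou(tp: int, fp: int, fn: int) -> float:
    """Foreground IoU: TP / (TP + FP + FN); 1.0 when both masks are empty."""
    denom = tp + fp + fn
    return tp / denom if denom else 1.0


def false_negative_rate(tp: int, fn: int) -> float:
    """Assumed definition: missed joint pixels over all ground truth joint pixels."""
    denom = tp + fn
    return fn / denom if denom else 0.0
```

For a single mask, the two overlap scores are linked by IoU = Dice / (2 − Dice), so thickened traces (extra false positives) and missed segments (extra false negatives) lower both scores together.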

Figure 6.

Qualitative segmentation results for six models on two representative rock joint samples. Columns show the original image, ground truth skeleton (green), prediction (red), and overlap/error map (yellow = TP, blue = FN). On these samples, the proposed BATNet-Lite achieves the most continuous and accurate joint delineation.

Table 6.

Quantitative comparison of BATNet-Lite against five pre-trained baselines.

4.3. Methodological Limitations

While BATNet-Lite achieves competitive accuracy at modest computational cost, its applicability is limited by several factors. First, the training images were captured in a drill core box under controlled indoor lighting, so robustness to field lighting or 3D photogrammetric meshes has not been verified. Second, the 2D single-view RGB input precludes the use of depth, multispectral, or stereoscopic cues that could clearly distinguish overlapping discontinuities. Third, annotation bias arising from manually traced skeleton masks can lead to systematic thickness errors and missed microcracks. Finally, the training schedule may not transfer directly, because the curriculum thinning hyper-parameters are tuned to a fixed 100-epoch schedule with early stopping and may require adjustment when the training set is expanded or transfer learning is used. These constraints motivate future research on multimodal data fusion, unsupervised domain adaptation, annotation-efficient learning, and hardware-aware quantization.

5. Conclusions

BATNet-Lite, a boundary-aware token-mixing network for real-time rock joint description, combines data efficiency, resource efficiency, and stable generalization within a single lightweight framework. Trained from random initialization, BATNet-Lite had first-epoch Dice and mean IoU (intersection over union) scores more than 0.50 lower than those of the ImageNet pre-trained baselines. However, both metrics tripled within 10 epochs, closing approximately two-thirds of the initial gap. The early stopping curves show that while the baselines converged after 5–25 epochs, BATNet-Lite continued to improve for over 80 epochs before reaching a plateau. This indicates that the Boundary-Aware Token-Mixing and Multi-Scale Line Enhancement modules supply highly informative gradients. At convergence, the Dice was 0.846 and the mIoU was 0.735, matching or exceeding three heavier convolutional neural network competitors. The Dice lagged behind SegNet by 0.012 and the mIoU by 0.033, and the mIoU was comparable to that of Swin-UNet, but with far fewer parameters. Throughout the optimization process, the training–validation loss gap was the narrowest among all models, and the loss curve decreased smoothly without the late-epoch oscillations observed in SegNet or the sharp overfitting characteristic of Swin-UNet. This indicates that the combined focal Tversky, edge IoU, and InfoNCE objectives, modulated by curriculum thinning, act as an effective regularizer under extreme class imbalance.

The quantitative trend is also reflected qualitatively. On the fine, low-contrast hairline joint, BATNet-Lite traced a one-pixel-wide skeleton with only 1.3% false negatives along a 512-pixel profile, while every baseline missed at least one segment and some also thickened the trace. On the high-contrast, oxide-filled joint, the network maintained geometric fidelity with a Dice of 0.864 and a mean IoU of 0.782, outperforming the competing models, which fragmented their predictions or shifted laterally by up to six pixels under lighting changes. These visual results highlight the ability of the token mixer to capture elongated line features across receptive scales and of the Skeletal-Contrastive Decoder to mitigate the imbalance artifacts commonly encountered in thin object segmentation.

Beyond laboratory measurements, preliminary deployments in exploration contexts, ranging from automated core logging to in situ rock testing during tunnel excavation, suggest that the proposed edge-efficient model accelerates geological decision making and supports proactive ground control measures, improving operational efficiency and workplace safety.

Several limitations suggest directions for further research. All training images were captured under diffuse indoor lighting, so robustness to oblique sunlight and 3D photogrammetric meshes has not been verified. Single-view RGB inputs cannot leverage depth or multispectral cues, manual skeleton masks can introduce systematic thickness bias, and subpixel quantization makes predictions resolution-sensitive. Furthermore, real-time inference performance on low-power edge processors has not yet been profiled. Future research will expand the deployment scope through physics-based illumination augmentation, unsupervised domain adaptation, RGB-D fusion, annotation-efficient learning, and hardware-aware pruning. Within these constraints, the evidence indicates that boundary-aware token mixing offers an attractive approach for accurate yet lightweight joint mapping in resource-constrained civil engineering environments.

Author Contributions

S.L.: writing and machine learning model development. Y.K. (Yongjin Kim): funding acquisition. J.P.: data preprocessing and augmentation. B.J.: supervision of manuscript preparation and machine learning model validation. Y.K. (Yongseong Kim): supervision of experimental setting. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Ministry of SMEs and Startups and the Korea Technology and Information Promotion Agency for SMEs (TIPA) as part of the Smart Multistep Steel Pipe Sensor System project (project number: S3379842).

Data Availability Statement

This research used datasets from ‘The Open AI Dataset Project (AI-Hub, S. Korea)’. All data information can be accessed through ‘AI-Hub (www.aihub.or.kr)’.

Conflicts of Interest

Authors Yongjin Kim and Jongseol Park were employed by Smart E&C. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).