Dynamic Object Mapping Generation Method of Digital Twin Construction Scene

Abstract

1. Introduction

2. Literature Review

2.1. Composition of Digital Twin Construction Scene

2.2. Calculation Method of Scene Object Conversion

2.3. The Shortcomings of Existing Research

3. Research Objectives and Problem Definition

3.1. Research Questions and Objectives

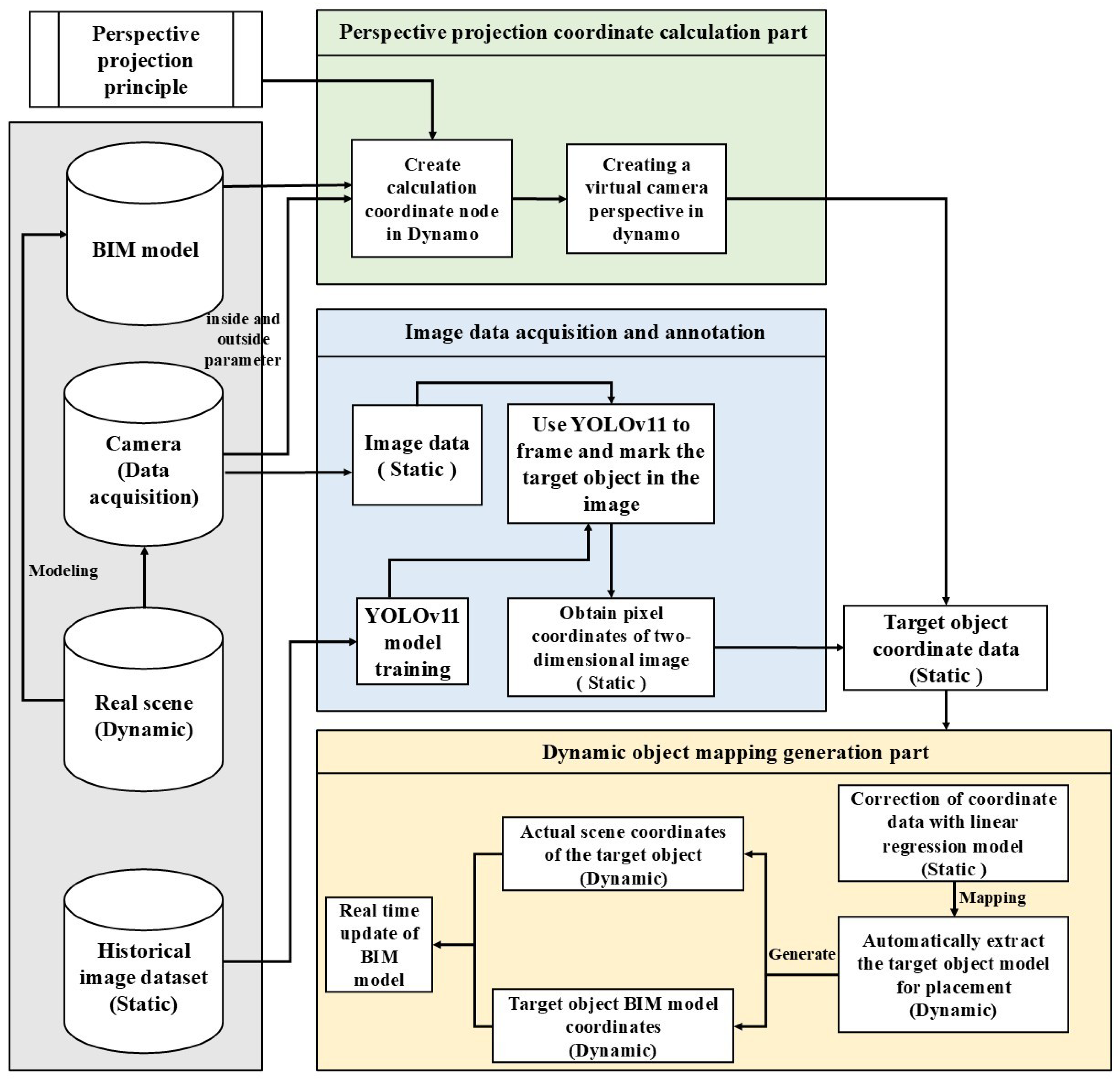

3.2. Digital Twin Construction Scene Integration Framework

4. Materials and Methods

4.1. Image Data Acquisition and Annotation

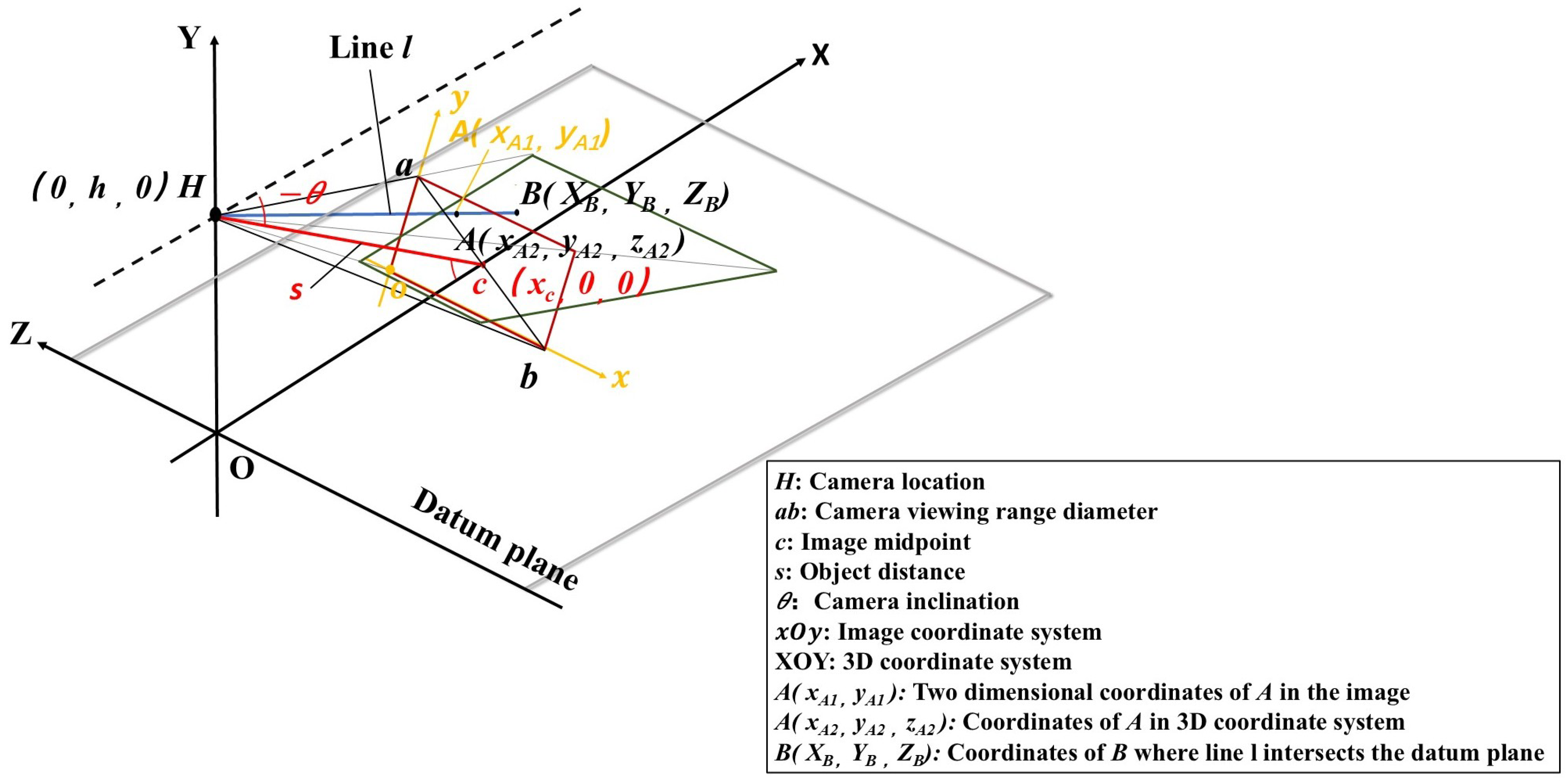

4.2. Calculation of Perspective Projection Coordinates

4.2.1. Definition of Camera Parameters

4.2.2. Shift of Perspectives

4.2.3. Correction Through Linear Regression

4.3. Dynamic Object Mapping Generation

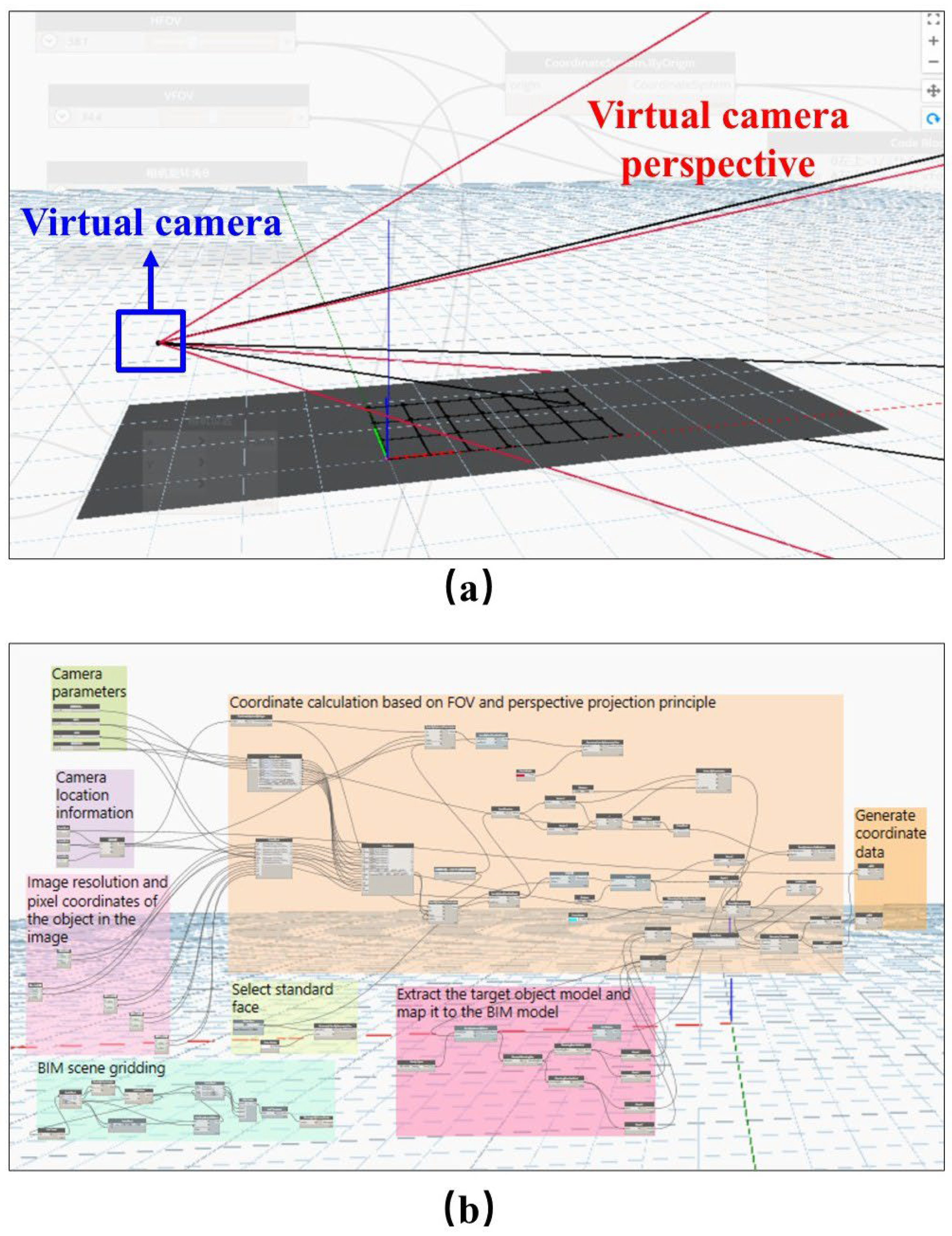

4.3.1. Virtual Camera Angle Setting

4.3.2. Mapping Generation

5. System Test and Results Analysis

5.1. System Test

5.1.1. Scene Construction and Image Data Acquisition

5.1.2. Frame Selection and Classification of Image Data

5.1.3. Distortion Error of Target Object and Real-Time Mapping Generation

5.2. Analysis of Test Results

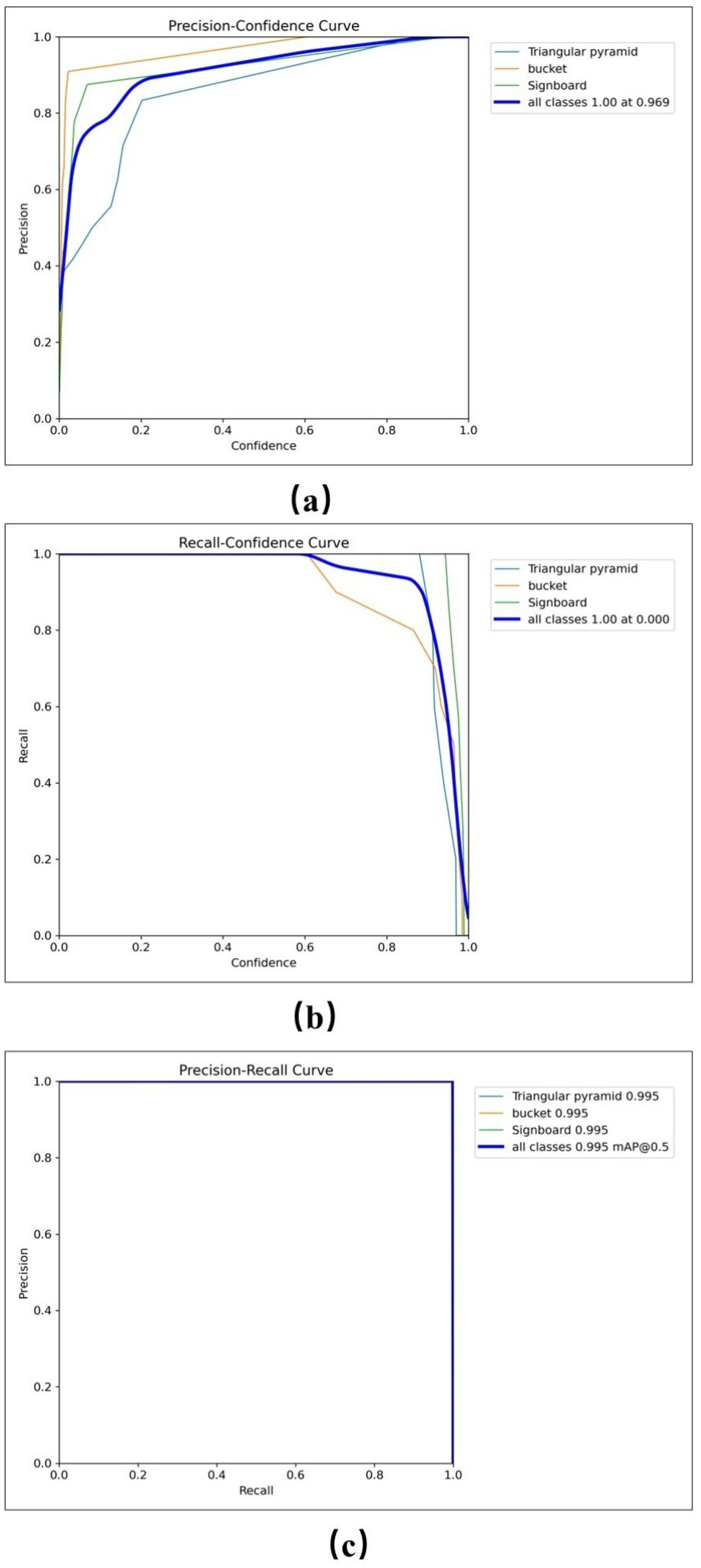

5.2.1. Target Object Detection and Classification Performance

5.2.2. Coordinate Accuracy of Target Object

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Abuimara, T.; Hobson, B.W.; Gunay, B.; O’Brien, W.; Kane, M. Current state and future challenges in building management: Practitioner interviews and a literature review. J. Build. Eng. 2021, 41, 102803.
2. Long, W.Y.; Bao, Z.K.; Chen, K.; Ng, S.T.T.; Wuni, I.Y. Developing an integrative framework for digital twin applications in the building construction industry: A systematic literature review. Adv. Eng. Inform. 2024, 59, 102346.
3. Ushasukhanya, S.; Jothilakshmi, S. Real-time human detection for electricity conservation using pruned-SSD and Arduino. Int. J. Electr. Comput. Eng. 2021, 11, 1510–1520.
4. Sun, X.; Bao, J.; Li, J.; Zhang, Y.; Liu, S.; Zhou, B. A digital twin-driven approach for the assembly-commissioning of high precision products. Rob. Comput. Integr. Manuf. 2020, 61, 101839.
5. Laaki, H.; Miche, Y.; Tammi, K. Prototyping a Digital Twin for Real Time Remote Control Over Mobile Networks: Application of Remote Surgery. IEEE Access 2019, 7, 20235–20336.
6. Madubuike, O.; Anumba, C.J.; Khallaf, R. A review of digital twin applications in construction. ITcon 2022, 27, 145–172.
7. Dagimawi, D.E.; Miriam, A.M.C.; Girma, T.B. Toward Smart-Building Digital Twins: BIM and IoT Data Integration. IEEE Access 2022, 10, 130487–130506.
8. Opoku, D.G.J.; Perera, S.; Osei-Kyei, R.; Rashidi, M. Digital twin application in the construction industry: A literature review. J. Build. Eng. 2021, 40, 102726.
9. Cruz, R.J.M.D.; Tonin, L.A. Systematic review of the literature on digital twin: A discussion of contributions and a framework proposal. Gest. Prod. 2022, 29, e9621.
10. Teizer, J.; Johansen, K.W.; Schultz, C. The concept of digital twin for construction safety. Constr. Res. Congr. 2022, 2022, 1156–1165.
11. Johansen, K.W.; Schultz, C.; Teizer, J. Hazard ontology and 4D benchmark model for facilitation of automated construction safety requirement analysis. Comput. Aided Civ. Infrastruct. Eng. 2023, 38, 2128–2144.
12. Ahn, Y.; Choi, H.; Kim, B.S. Development of early fire detection model for buildings using computer vision-based CCTV. J. Build. Eng. 2023, 65, 105647.
13. Cheng, J.C.; Chen, K.; Wong, P.K.-Y.; Chen, W.; Li, C.T. Graph-based network generation and CCTV processing techniques for fire evacuation. Build. Res. Inf. 2021, 49, 179–196.
14. Dhou, S.; Motai, Y. Dynamic 3D surface reconstruction and motion modeling from a pan–tilt–zoom camera. Comput. Ind. 2015, 70, 183–193.
15. Dahmane, W.M.; Dollinger, J.-F.; Ouchani, S. A BIM-based framework for an optimal WSN deployment in smart building. In Proceedings of the 11th International Conference on Network of the Future (NoF), Dublin, Ireland, 12–14 October 2020; pp. 110–114.
16. Speiser, K.; Teizer, J. An efficient approach for generating training environments in virtual reality using a digital twin for construction safety. In Proceedings of the CIB W099 & W123 Annual International Conference: Digital Transformation of Health and Safety in Construction, Porto, Portugal, 21–22 June 2023; University of Porto: Porto, Portugal, 2023; pp. 481–490.
17. Speiser, K.; Teizer, J. An ontology-based data model to create virtual training environments for construction safety using BIM and digital twins. In Proceedings of the International Conference on Intelligent Computing in Engineering, London, UK, 4–7 June 2023.
18. Bjørnskov, J.; Jradi, M. An ontology-based innovative energy modeling framework for scalable and adaptable building digital twins. Energy Build. 2023, 292, 113146.
19. Zaimen, K.; Dollinger, J.F.; Moalic, L.; Abouaissa, A.; Idoumghar, L. An overview on WSN deployment and a novel conceptual BIM-based approach in smart buildings. In Proceedings of the 7th International Conference on Internet of Things: Systems, Management and Security (IOTSMS), Paris, France, 14–16 December 2020; pp. 1–6.
20. Piras, G.; Muzi, F.; Tiburcio, V.A. Digital Management Methodology for Building Production Optimization through Digital Twin and Artificial Intelligence Integration. Buildings 2024, 14, 2110.
21. Lee, K.; Hasanzadeh, S. Understanding cognitive anticipatory process in dynamic hazard anticipation using multimodal psychophysiological responses. J. Constr. Eng. Manag. 2024, 150, 04024008.
22. Liu, Z.; Meng, X.; Xing, Z.; Jiang, A. Digital twin-based safety risk coupling of prefabricated building hoisting. Sensors 2021, 21, 3583.
23. Shariatfar, M.; Deria, A.; Lee, Y.C. Digital twin in construction safety and its implications for automated monitoring and management. Constr. Res. Congr. 2022, 2022, 591–600.
24. Wang, W.; Guo, H.; Li, X.; Tang, S.; Li, Y.; Xie, L.; Lv, Z. BIM information integration-based VR modeling in digital twins in Industry 5.0. J. Ind. Inf. Integr. 2022, 28, 100351.
25. Ogunseiju, O.R.; Olayiwola, J.; Akanmu, A.A.; Nnaji, C. Digital twin-driven framework for improving self-management of ergonomic risks. Smart Sustain. Built Environ. 2021, 10, 403–419.
26. Wang, H.; Wang, G.; Li, X. Image-based occupancy positioning system using pose-estimation model for demand-oriented ventilation. J. Build. Eng. 2021, 39, 102220.
27. Guo, Z. Research on 4D-BIM Non-Modeled Workspace Conflict Analysis Model and Method. Master’s Thesis, Wuhan University of Technology, Wuhan, China, 2018. (In Chinese).
28. Elfarri, E.M.; Rasheed, A.; San, O. Artificial intelligence-driven digital twin of a modern house demonstrated in virtual reality. IEEE Access 2023, 11, 35035–35058.
29. Khajavi, S.H.; Motlagh, N.H.; Jaribion, A.; Werner, L.C.; Holmstrom, J. Digital twin: Vision, benefits, boundaries, and creation for buildings. IEEE Access 2019, 7, 147406–147419.
30. Lu, Q.; Chen, L.; Li, S.; Pitt, M. Semi-automatic geometric digital twinning for existing buildings based on images and CAD drawings. Autom. Constr. 2020, 115, 103183.
31. Lu, Q.; Parlikad, A.K.; Woodall, P.; Don Ranasinghe, G.; Xie, X.; Liang, Z.; Konstantinou, E.; Heaton, J.; Schooling, J. Developing a digital twin at building and city levels: Case study of West Cambridge campus. J. Manag. Eng. 2020, 36, 5020004.
32. Mokhtari, K.E.; Panushev, I.; McArthur, J.J. Development of a cognitive digital twin for building management and operations. Front. Built Environ. 2022, 8, 856873.
33. Liu, M.; Fang, S.; Dong, H.; Xu, C. Review of digital twin about concepts, technologies, and industrial applications. J. Manuf. Syst. 2021, 58, 346–361.
34. Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital twin: Enabling technologies, challenges and open research. IEEE Access 2020, 8, 108952–108971.
35. Lv, Z.; Shang, W.-L.; Guizani, M. Impact of digital twins and metaverse on cities: History, current situation, and application perspectives. Appl. Sci. 2022, 12, 12820.
36. Angjeliu, G.; Coronelli, D.; Cardani, G. Development of the simulation model for digital twin applications in historical masonry buildings: The integration between numerical and experimental reality. Comput. Struct. 2020, 238, 106282.
37. Züst, S.; Züst, R.; Züst, V.; West, S.; Stoll, O.; Minonne, C. A graph-based Monte Carlo simulation supporting a digital twin for the curatorial management of excavation and demolition material flows. J. Clean. Prod. 2021, 310, 127453.
38. Zhang, Y.; Luo, H.; Skitmore, M.; Li, Q.; Zhong, B. Optimal camera placement for monitoring safety in metro station construction work. J. Constr. Eng. Manag. 2019, 145, 04018118.
39. Soh, R.S.C.; Ahmad, N. The Implementation of Video Surveillance System at School Compound in Batu Pahat, Johor; Foresight Studies in Malaysia; Universiti Tun Hussein Onn Malaysia: Johor, Malaysia, 2019; pp. 119–134.
40. Kim, J.; Ham, Y.; Chung, Y.; Chi, S. Systematic camera placement framework for operation-level visual monitoring on construction jobsites. J. Constr. Eng. Manag. 2019, 145, 04019019.
41. An, J.; Yao, H.T. Research on the design of an AI intelligent camera module applied to self-service terminals. J. Qilu Univ. Technol. 2024, 38, 25–29. (In Chinese)
42. Albahri, A.H.; Hammad, A. Simulation-based optimization of surveillance camera types, number, and placement in buildings using BIM. J. Comput. Civ. Eng. 2017, 31, 04017055.
43. Hu, M.C.; Liu, D.L.; Sang, X.J.; Zhang, S.J.; Chen, Q. Intelligent identification method of debris flow scene for camera video surveillance. Comput. Moderniz. 2024, 3, 41–46. (In Chinese)
44. Tran, S.V.-T.; Nguyen, T.L.; Chi, H.-L.; Lee, D.; Park, C. Generative planning for construction safety surveillance camera installation in 4D BIM environment. Autom. Constr. 2022, 134, 104103.
45. Conci, N.; Lizzi, L. Camera placement using particle swarm optimization in visual surveillance applications. In Proceedings of the 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 3485–3488.
46. Chen, Z.; Lai, Z.; Song, C.; Zhang, X.; Cheng, J.C.P. Smart camera placement for building surveillance using OpenBIM and an efficient bi-level optimization approach. J. Build. Eng. 2023, 77, 107257.
47. Chen, X.; Zhu, Y.; Chen, H.; Ouyang, Y.; Luo, X.; Wu, X. BIM-based optimization of camera placement for indoor construction monitoring considering the construction schedule. Autom. Constr. 2021, 130, 103825.
48. Zhou, X.; Sun, K.; Wang, J.; Zhao, J.; Feng, C.; Yang, Y.; Zhou, W. Computer vision enabled building digital twin using building information model. IEEE Trans. Ind. Inf. 2023, 19, 2684–2692.
49. Wu, S.; Hou, L.; Zhang, G.K.; Chen, H. Real-time mixed reality-based visual warning for construction workforce safety. Autom. Constr. 2022, 139, 104252.
50. Kim, J.; Ham, Y.; Chung, Y.; Chi, S. Artificial intelligence quality inspection of steel bars installation by integrating mask R-CNN and stereo vision. Autom. Constr. 2021, 130, 103850.
51. Sitnik, R.; Kujawińska, M.; Błaszczyk, P.M. New structured light measurement and calibration method for 3D documenting of engineering structures. Opt. Metrol. 2011, 8082, 383–393.
52. Niskanen, I.; Immonen, M.; Makkonen, T.; Keränen, P.; Tyni, P.; Hallman, L.; Hiltunen, M.; Kolli, T.; Louhisalmi, Y.; Kostamovaara, J.; et al. 4D modeling of soil surface during excavation using a solid-state 2D profilometer mounted on the arm of an excavator. Autom. Constr. 2020, 112, 103112.
53. Soltani, M.M.; Zhu, Z.; Hammad, A. Framework for location data fusion and pose estimation of excavators using stereo vision. J. Comput. Civ. Eng. 2018, 32, 04018045.
54. Chi, S.; Caldas, C.H. Image-based safety assessment: Automated spatial safety risk identification of earthmoving and surface mining activities. J. Constr. Eng. Manag. 2012, 138, 341–353.
55. Pan, Y.; Braun, A.; Brilakis, I.; Borrmann, A. Enriching geometric digital twins of buildings with small objects by fusing laser scanning and AI-based image recognition. Autom. Constr. 2022, 140, 104375.
56. Son, H.; Seong, H.; Choi, H.; Kim, C. Real-time vision-based warning system for prevention of collisions between workers and heavy equipment. J. Comput. Civ. Eng. 2019, 33, 04019029.
57. Houng, S.C.; Pal, A.; Aff, M.; Lin, J.J. 4D BIM and reality model–driven camera placement optimization for construction monitoring. J. Constr. Eng. Manag. 2024, 150, 04024045.
58. Quinn, C.; Shabestari, A.Z.; Misic, T.; Gilani, S.; Litoiu, M.; McArthur, J.J. Building automation system–BIM integration using a linked data structure. Autom. Constr. 2020, 118, 103257.
59. Liu, G.H. Research on Safe Distance Measurement Technology Based on Binocular Stereo Vision. Master’s Thesis, Wuhan Institute of Technology, Wuhan, China, 2008. (In Chinese).
60. Kulinan, A.A.S.; Park, M.; Aung, P.P.W.; Cha, G.; Park, S. Advancing construction site workforce safety monitoring through BIM and computer vision integration. Autom. Constr. 2024, 158, 105227.
61. Borgstein, E.H.; Lamberts, R.; Hensen, J.L.M. Evaluating energy performance in non-domestic buildings: A review. Energy Build. 2016, 128, 734–755.
62. Huang, X.; Liu, Y.; Huang, L.; Onstein, E.; Merschbrock, C. BIM and IoT data fusion: The data process model perspective. Autom. Constr. 2023, 149, 104792.
63. Katić, D.; Krstić, H.; Otković, I.I.; Juričić, H.B. Comparing multiple linear regression and neural network models for predicting heating energy consumption in school buildings in the Federation of Bosnia and Herzegovina. J. Build. Eng. 2024, 97, 110728.
64. Petruseva, S.; Zileska-Pancovska, V.; Žujo, V.; Brkan-Vejzović, A. Construction costs forecasting: Comparison of the accuracy of linear regression and support vector machine models. Tech. Gaz. 2017, 24, 1431–1438.
65. Ding, Y.; Zhang, Y.; Huang, X. Intelligent emergency digital twin system for monitoring building fire evacuation. J. Build. Eng. 2024, 77, 107416.

| Parameter | Value |
|---|---|
| HFOV (β) | 47° |
| VFOV (α) | 27° |
| DFOV (ω) | 61° |
| Effective pixel | 200 PPI |
| Resolution | 1920 × 1080 px |
| Focal length | 6 mm |
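As an illustration of how the field-of-view and resolution values in this table relate to each other, the sketch below converts a field of view into a focal length expressed in pixels using the standard pinhole relation f = (size / 2) / tan(FOV / 2). It assumes an ideal pinhole camera with no lens distortion and a principal point at the image centre; the function name `focal_length_px` is hypothetical and the code is not taken from the paper.

```python
import math


def focal_length_px(image_size_px: float, fov_deg: float) -> float:
    """Pinhole focal length in pixels for one image axis.

    Assumes an ideal pinhole camera (no distortion) whose optical axis
    passes through the image centre.
    """
    half_fov_rad = math.radians(fov_deg) / 2.0
    return (image_size_px / 2.0) / math.tan(half_fov_rad)


# Values taken from the camera parameter table.
WIDTH_PX, HEIGHT_PX = 1920, 1080
HFOV_DEG, VFOV_DEG = 47.0, 27.0

fx = focal_length_px(WIDTH_PX, HFOV_DEG)   # horizontal focal length in pixels
fy = focal_length_px(HEIGHT_PX, VFOV_DEG)  # vertical focal length in pixels

print(f"fx = {fx:.1f} px, fy = {fy:.1f} px")
```

The two axes give slightly different focal lengths for the tabulated angles, which is expected when the published field-of-view values are rounded to whole degrees.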

| Region | Initial test data: X-axis direction | Initial test data: standard deviation (X) | Initial test data: Z-axis direction | Initial test data: standard deviation (Z) | Corrected test data: X-axis direction | Corrected test data: standard deviation (X) | Corrected test data: Z-axis direction | Corrected test data: standard deviation (Z) | Improvement range: X-axis direction | Improvement range: Z-axis direction |
|---|---|---|---|---|---|---|---|---|---|---|
| Far | 0.39 | 0.13 | 0.07 | 0.03 | 0.06 | 0.09 | 0.02 | 0.04 | 84.6% | 71.4% |
| Medium | 0.23 | 0.08 | 0.07 | 0.05 | 0.04 | 0.07 | 0.02 | 0.02 | 82.6% | 71.4% |
| Near | 0.11 | 0.07 | 0.09 | 0.09 | 0.02 | 0.03 | 0.04 | 0.05 | 81.8% | 55.6% |
| Overall | 0.24 | 0.16 | 0.08 | 0.06 | 0.04 | 0.07 | 0.03 | 0.04 | 83.3% | 62.5% |
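The improvement percentages in this table are consistent with a relative reduction, (initial − corrected) / initial, applied to the X-axis and Z-axis values of each region. The short sketch below reproduces them from the tabulated numbers; the helper name `improvement_pct` and the dictionary layout are illustrative choices, not part of the paper.

```python
def improvement_pct(initial: float, corrected: float) -> float:
    """Relative reduction from the initial to the corrected value, in percent."""
    return (initial - corrected) / initial * 100.0


# (initial X, corrected X, initial Z, corrected Z), copied from the table.
rows = {
    "Far": (0.39, 0.06, 0.07, 0.02),
    "Medium": (0.23, 0.04, 0.07, 0.02),
    "Near": (0.11, 0.02, 0.09, 0.04),
    "Overall": (0.24, 0.04, 0.08, 0.03),
}

for region, (x0, x1, z0, z1) in rows.items():
    print(
        f"{region}: X {improvement_pct(x0, x1):.1f}%, "
        f"Z {improvement_pct(z0, z1):.1f}%"
    )
```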

| No. | Ref. | Application Method | Target Object | Reference Point Selection Position | Coordinate Information | Mapping Mode | Dynamic, Static and Real-Time Degree | Characteristics and Service Conditions |
|---|---|---|---|---|---|---|---|---|
| 1 | Wu et al. [49] | YOLOv4-tiny and DeepSORT are used to track workers’ trajectories in real time. A digital twin is generated from BIM and mapping methods for hazard identification, and MR glasses are used to visualize the information. | Workers, hazardous areas. | Target center point. | Physical coordinates. | Virtual reality combined with MR equipment. | Dynamic; real time, but manual alignment is required. | |
| 2 | Kulinan et al. [60] | YOLOv8m is used to detect and classify workers, the SORT algorithm is used for tracking, perspective projection is used to obtain worker positions, and the two technologies are connected through a database. | Workers. | A certain distance from the bottom center of the target’s bounding box. | Physical coordinates. | Model visualization through Dynamo code. | Dynamic; real time. | |
| 3 | Ding et al. [65] | YOLOv4 is used to detect evacuees and is combined with the DeepSORT algorithm to track their trajectories. Inverse perspective mapping is used to transform the perspective, and a speed estimation algorithm is developed. | Workers. | None. | None. | Data collected at the scene are transferred to a local personal computer and fed into the intelligent object detector and tracker to map the actual scene onto the virtual scene. | Dynamic; real time, but accuracy is weak with multiple targets. | |
| 4 | This study | YOLOv11 is used to detect and classify dynamic objects. In Dynamo, the FOV component is used to set the virtual camera angle, pixel coordinates are converted into physical coordinates using the perspective projection principle, and object mapping and BIM model updating are implemented. | Workers, objects. | Bottom center point of the target bounding box. | Pixel coordinates and physical coordinates. | Mapping is realized through the virtual camera and perspective projection code in Dynamo. | Dynamic; real time. | |
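To make the "bottom center point of the bounding box" and "pixel coordinates to physical coordinates" entries for this study more concrete, the following is a minimal sketch of a ground-plane perspective projection. It is not the authors' Dynamo implementation. It assumes an ideal pinhole camera with no distortion or roll, mounted at a known height above flat ground and pitched downward by a known angle; all function names, parameter names, and numeric values are hypothetical.

```python
import math
from typing import Tuple


def bbox_bottom_center(x1: float, y1: float, x2: float, y2: float) -> Tuple[float, float]:
    """Bottom-center pixel of a box given as (left, top, right, bottom)."""
    return ((x1 + x2) / 2.0, y2)


def pixel_to_ground(
    u: float, v: float, *,
    fx: float, fy: float, cx: float, cy: float,
    cam_height_m: float, pitch_deg: float,
) -> Tuple[float, float]:
    """Project pixel (u, v) onto a flat ground plane.

    Returns (X, Z) in metres: X to the camera's right, Z forward along the
    ground, both measured from the point directly below the camera.
    Assumed: pinhole model, image origin at top-left, no lens distortion,
    no camera roll, optical axis pitched down by pitch_deg from horizontal.
    """
    theta = math.radians(pitch_deg)
    xc = (u - cx) / fx  # normalised ray component to the right
    yc = (v - cy) / fy  # normalised ray component downward in the image
    denom = yc * math.cos(theta) + math.sin(theta)
    if denom <= 0.0:
        raise ValueError("pixel lies at or above the horizon; no ground intersection")
    t = cam_height_m / denom  # scale at which the ray reaches the ground
    return (t * xc, t * (math.cos(theta) - yc * math.sin(theta)))


# Hypothetical detection box and camera set-up, for illustration only.
u, v = bbox_bottom_center(900.0, 400.0, 1020.0, 700.0)
X, Z = pixel_to_ground(
    u, v, fx=2200.0, fy=2200.0, cx=960.0, cy=540.0,
    cam_height_m=3.0, pitch_deg=45.0,
)
print(f"ground position: X = {X:.2f} m, Z = {Z:.2f} m")
```

Using the bottom center of the box, rather than its center, places the reference point where the object touches the ground, which is what the flat-ground assumption requires.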

| Comparison Dimension | This Study (YOLOv11) | SLAM Methods | Multi-Sensor Fusion Methods |
|---|---|---|---|
| Core Objective | Object detection and classification in static images or video frames. | Real-time mapping of the environment and camera trajectory estimation. | Integrating multiple sensor data to enhance perception robustness. |
| Data Source | Image data from a monocular camera. | Image and motion data from monocular or stereo cameras. | Image, IMU, LiDAR, and other heterogeneous sources. |
| Output Information | Object categories and bounding box positions. | Environment map, camera position and orientation. | High-precision spatiotemporal localization and object detection/tracking. |
| Advantages | Fast detection speed, adaptable, suitable for real-time video analysis. | Able to obtain relative motion trajectory; supports localization and mapping. | Strong perception stability; suitable for complex dynamic environments. |
| Limitations | Cannot obtain structural or ego-motion information. | Sensitive to lighting, texture, and occlusion; complex initialization. | High cost, system complexity, requires data synchronization and registration. |
| Application Scenarios | Construction site object recognition, intelligent surveillance, hazard detection. | Indoor/outdoor localization and navigation, robot path planning. | Autonomous driving, intelligent inspection, perception in complex environments. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fang, J.; Wu, Z.; Yang, R.; Lian, Y.; Li, X.; Chu, T.J.; Jin, J. Dynamic Object Mapping Generation Method of Digital Twin Construction Scene. Buildings 2025, 15, 2942. https://doi.org/10.3390/buildings15162942
