Deflection Prediction of Highway Bridges Using Wireless Sensor Networks and Enhanced iTransformer Model

Abstract

1. Introduction

- (1)

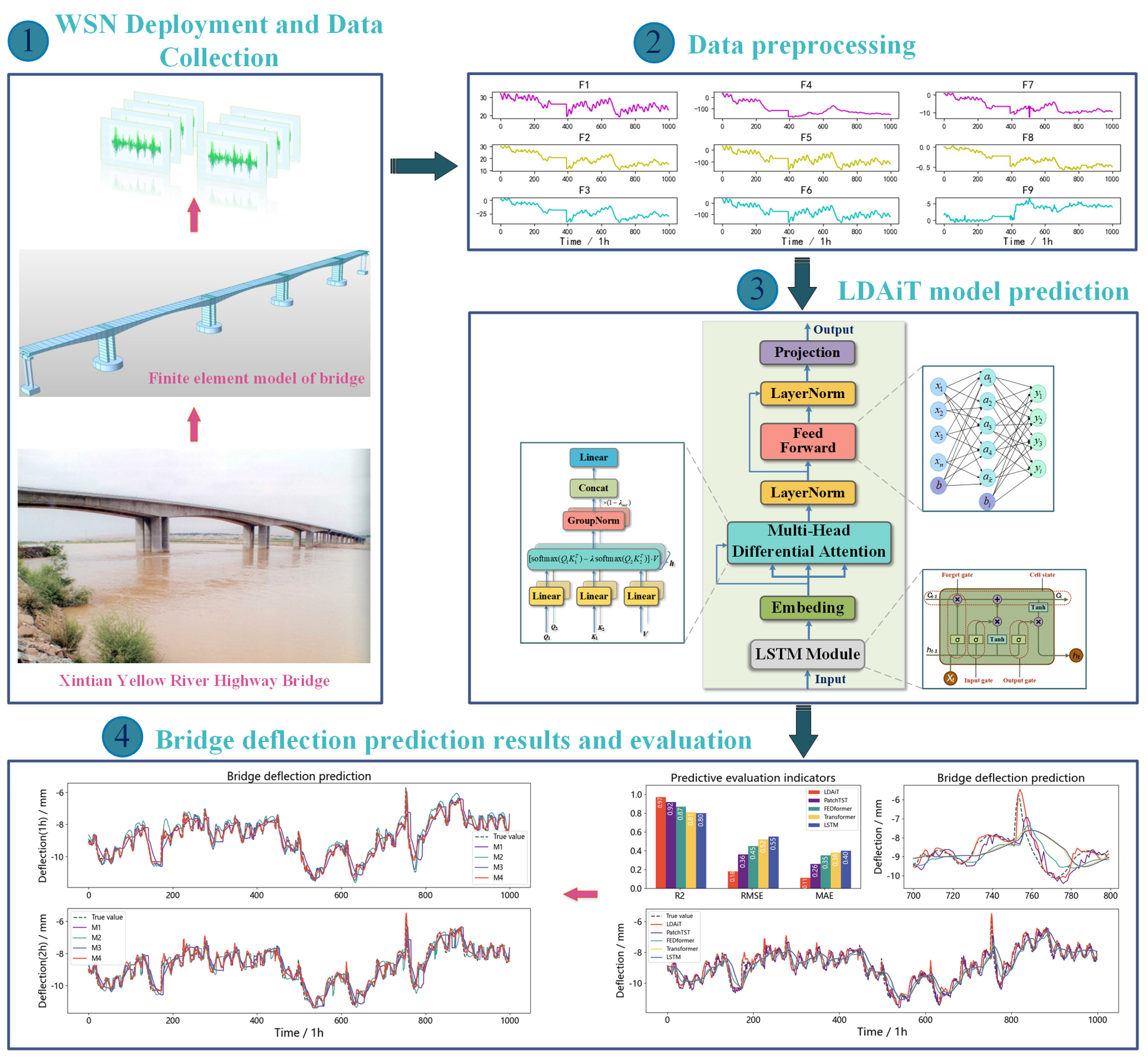

- Fusing WSN technology to achieve the real-time collection of bridge structural response data, constructing a high-quality time series correlation feature dataset oriented to deflection prediction, and providing accurate and dynamic input support for model training.

- (2)

- The LDAiT bridge deflection prediction model is proposed, which firstly enhances the modeling capability of the nonlinear dynamic evolution process of the bridge structure by introducing LSTM for time series feature extraction of the initial input data and then embeds the differential attention mechanism into the iTransformer architecture to enhance the model’s sensitivity to the changes in the local response so as to effectively improve the accuracy and stability of the prediction.

- (3)

- Comparative experiments are carried out on the measured dataset of Xintian Yellow River Bridge to verify the effectiveness and performance advantages of the LDAiT model, which provides powerful support for bridge health monitoring and intelligent operation and maintenance.
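The differential attention idea named in contribution (2) can be illustrated with a minimal sketch. It follows the general form used in Differential Transformer style attention, where two softmax attention maps are computed and the second is subtracted from the first with a weight λ. This is not the LDAiT implementation: the function names, the fixed scalar `lam`, the toy matrices, and the omission of learnable λ, multiple heads, and per-head normalization are all simplifying assumptions made for illustration. In an iTransformer setting each row (token) would stand for one variate, such as one sensor channel.

```python
import math

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def softmax_rows(m):
    # Numerically stable row-wise softmax.
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention_map(q, k):
    # Scaled dot-product attention weights: softmax(Q K^T / sqrt(d)).
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    return softmax_rows([[s * scale for s in row] for row in scores])

def differential_attention(q1, k1, q2, k2, v, lam):
    # Difference of two attention maps; lam is a fixed scalar here (assumption).
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    weights = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    return matmul(weights, v), weights

# Toy example: 3 variate tokens with 2-dimensional projections (hypothetical values).
q1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k1 = [[0.5, 0.2], [0.1, 0.9], [0.7, 0.3]]
q2 = [[0.2, 0.4], [0.6, 0.1], [0.3, 0.3]]
k2 = [[0.9, 0.1], [0.2, 0.8], [0.4, 0.4]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

output, weights = differential_attention(q1, k1, q2, k2, v, lam=0.5)
```

Because each softmax row sums to 1, every row of `weights` sums to `1 - lam`. Setting `lam = 0` recovers ordinary scaled dot-product attention, which makes the subtraction term easy to isolate when experimenting.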

2. Related Work

3. The Proposed Method

3.1. LSTM Module

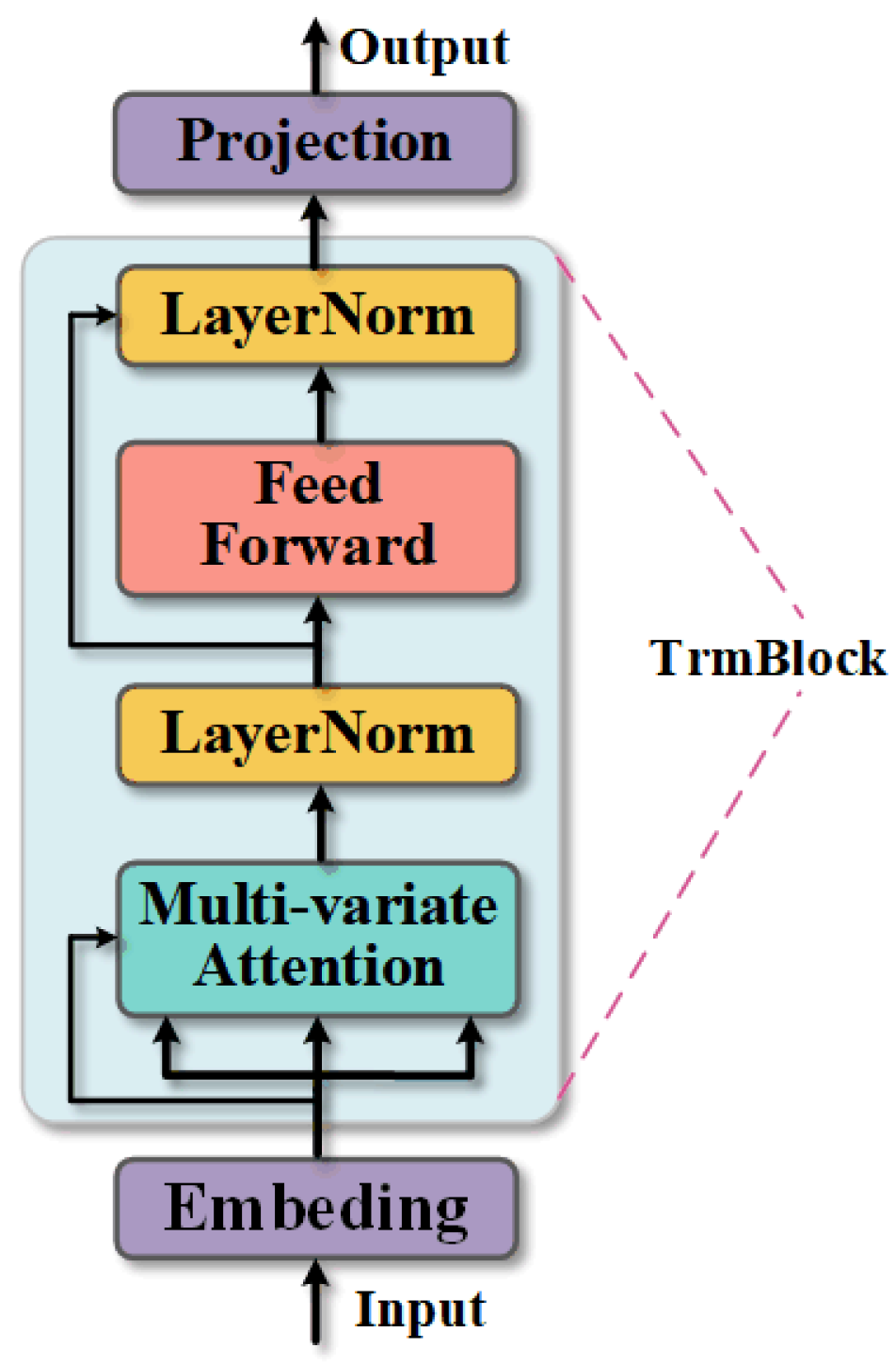

3.2. iTransformer Module

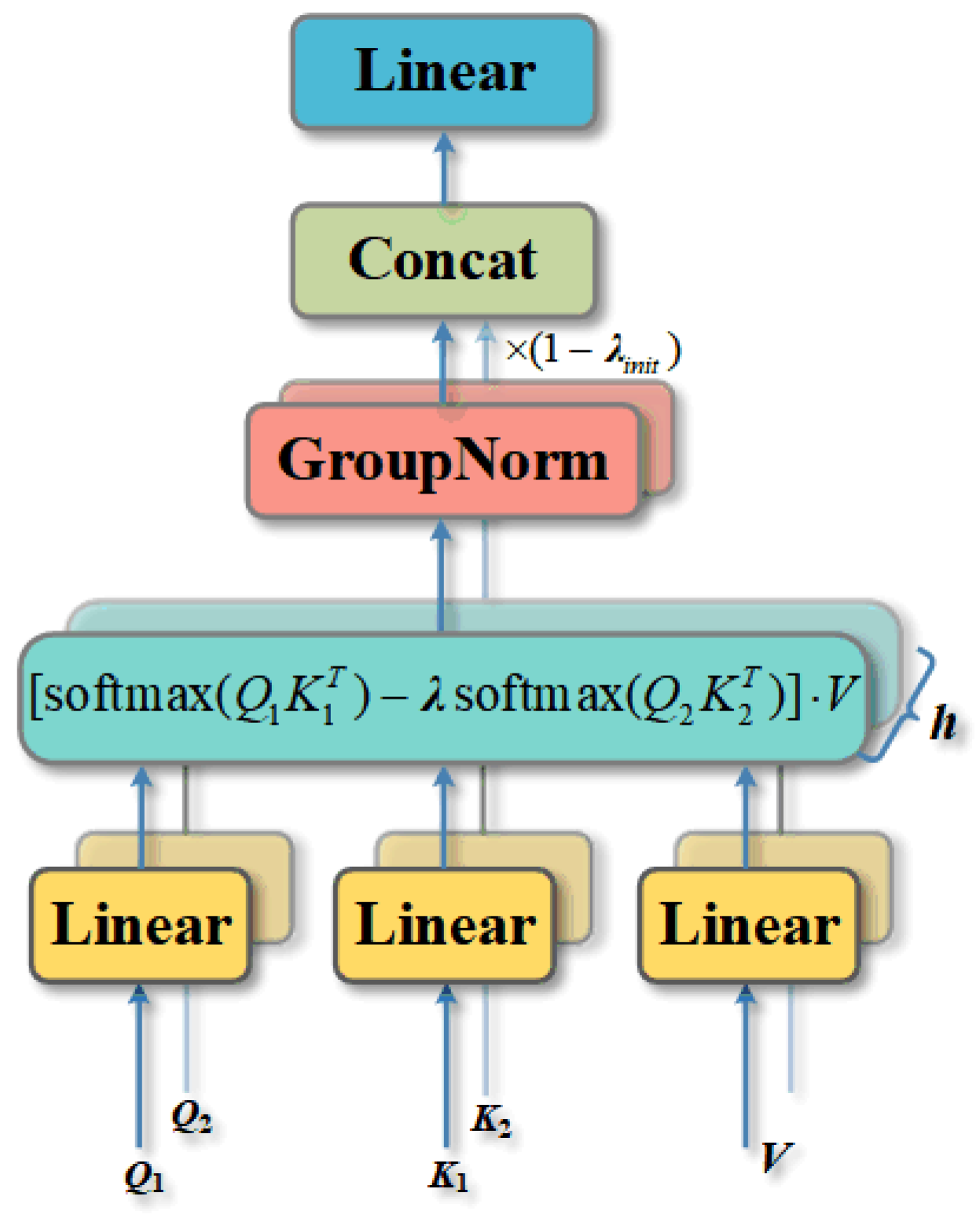

3.3. Multi-Head Differential Attention

3.4. LDAiT Prediction Model

3.5. Overall Framework for Bridge Deflection Prediction

4. Experiment Validation

4.1. Data Acquisition and Processing

4.2. Performance Evaluation Indicators

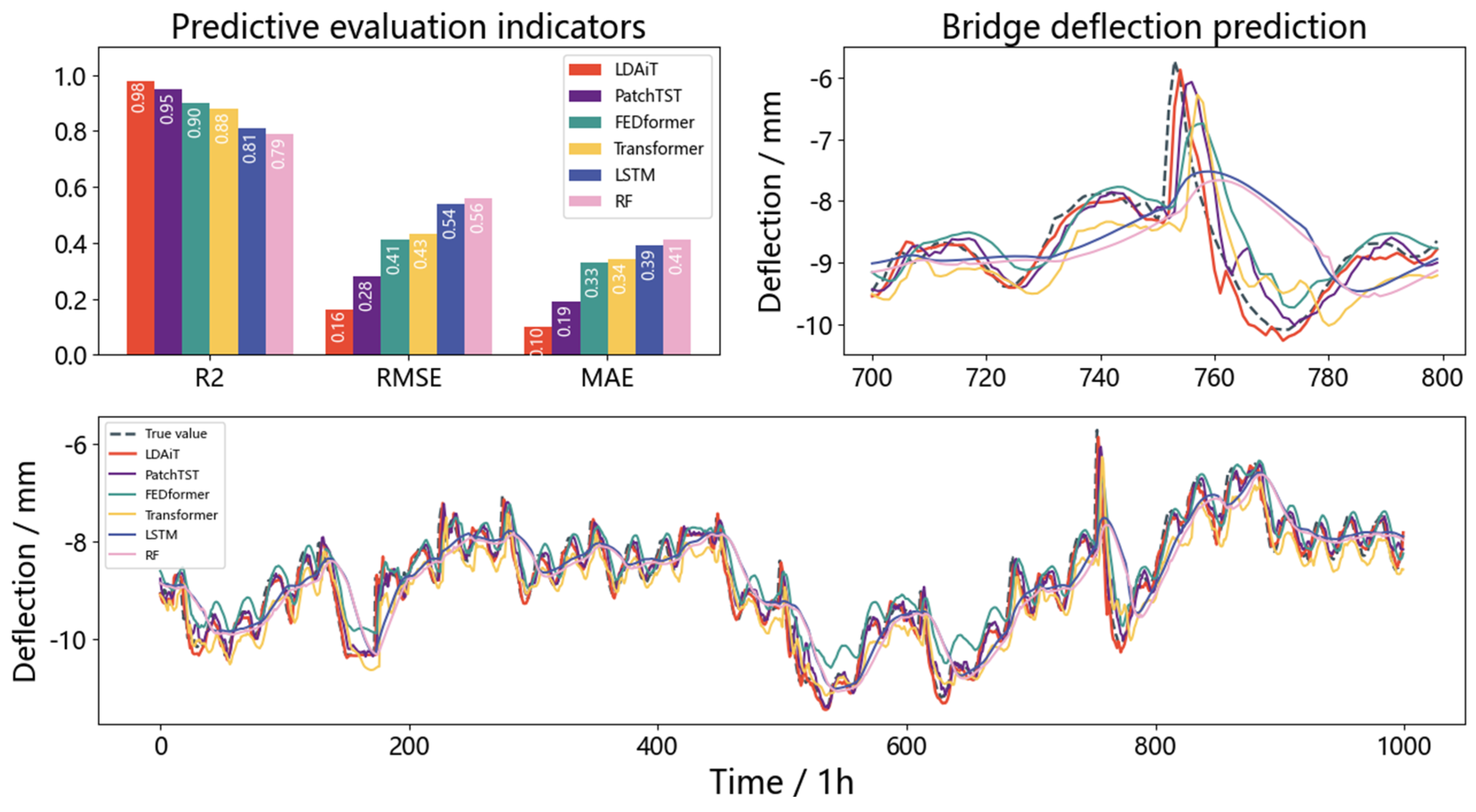

4.3. Comparative Experimental Results and Analysis

4.4. Results and Analysis of Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Feature | Count | Mean | Std | Min | Median | Max |
|---|---|---|---|---|---|---|---|
| F1 | Mid-span box girder top surface right temperature/°C | 17,497 | 15.82 | 13.04 | −23.79 | 18.53 | 40.04 |
| F2 | Mid-span box girder bottom surface left temperature/°C | 17,497 | 10.83 | 13.34 | −21.69 | 15 | 30.43 |
| F3 | Mid-span box girder bottom surface left strain/mm | 17,497 | 27.07 | 80.75 | −165.69 | 46.63 | 140.81 |
| F4 | Mid-span box girder bottom surface right strain/mm | 17,497 | 10.83 | 165.96 | −314.64 | 33.5 | 347.75 |
| F5 | Mid-span box girder top surface left strain/mm | 17,497 | 73.57 | 264.77 | −568.80 | 138.67 | 463.95 |
| F6 | Mid-span box girder top surface right strain/mm | 17,497 | 70.24 | 246.93 | −521.77 | 125.56 | 460.63 |
| F7 | Mid-span vibration/Hz | 17,497 | 0.61 | 2.04 | −12.57 | 1.052 | 3.18 |
| F8 | Mid-span crack/mm | 17,497 | 0.10 | 0.83 | −1.86 | 0.308 | 1.23 |
| F9 | Bridge deflection/mm | 17,497 | 1.44 | 7.92 | −11.64 | −6.35 | 0.31 |
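As an illustration of how the summary columns in the table above (count, mean, std, min, median, max) can be produced from a raw sensor channel, here is a minimal sketch using only the Python standard library. The function names and the sample readings are hypothetical, and the use of the sample standard deviation is an assumption, since the source does not state which estimator was used. The second helper is a simple plausibility check for transcribed rows; the two rows passed to it are copied from the table.

```python
import statistics

def describe(values):
    # Summary statistics for one feature column.
    return {
        "count": len(values),
        "mean": statistics.fmean(values),
        "std": statistics.stdev(values),  # sample std (assumption)
        "min": min(values),
        "median": statistics.median(values),
        "max": max(values),
    }

def is_consistent(row):
    # Mean and median must both lie between min and max.
    return (row["min"] <= row["mean"] <= row["max"]
            and row["min"] <= row["median"] <= row["max"])

# Hypothetical temperature readings in °C, not taken from the dataset.
sample = [12.4, 15.1, 18.9, 21.3, 17.6, 9.8]
summary = describe(sample)

# Rows F1 and F9 as printed in the table.
f1 = {"mean": 15.82, "min": -23.79, "median": 18.53, "max": 40.04}
f9 = {"mean": 1.44, "min": -11.64, "median": -6.35, "max": 0.31}
checks = {"F1": is_consistent(f1), "F9": is_consistent(f9)}
```

A check of this kind is useful when a statistics table has been transcribed or extracted. Row F9 as printed does not satisfy min ≤ mean ≤ max, which suggests its columns may have been shifted during extraction, so those values should be confirmed against the published table before reuse.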

| MAPE/% | LDAiT | PatchTST | FEDformer | Transformer | LSTM | RF |
|---|---|---|---|---|---|---|
| 1 h | 1.29 | 2.12 | 3.04 | 4.14 | 4.57 | 4.79 |
| 2 h | 1.68 | 2.62 | 3.43 | 4.56 | 4.71 | 4.88 |

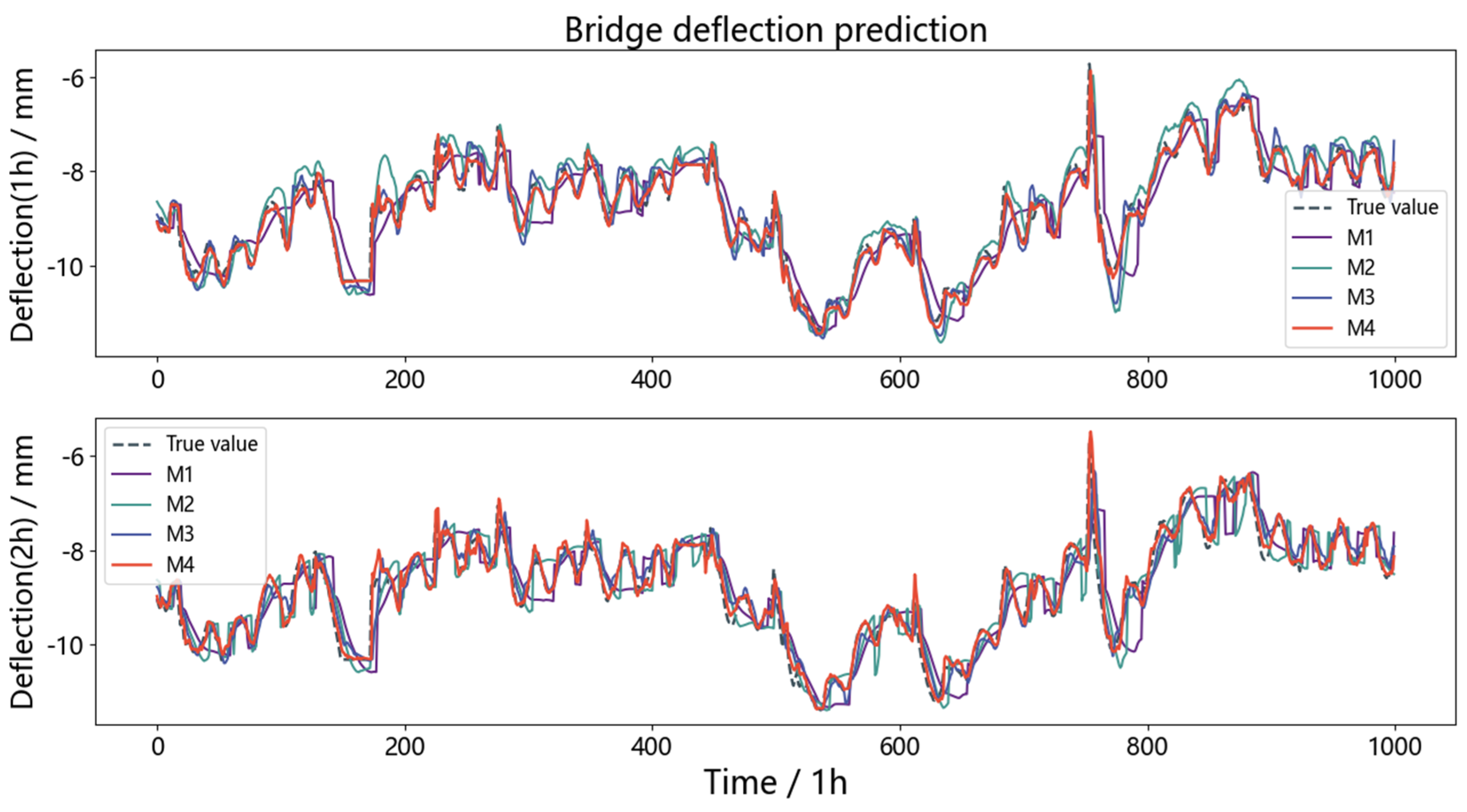

| Method | iTransformer | LSTM | MHDA |
|---|---|---|---|
| M1 | √ | | |
| M2 | √ | √ | |
| M3 | √ | √ | |
| M4 | √ | √ | √ |

| Horizon | Evaluation Indicator | M1 | M2 | M3 | M4 |
|---|---|---|---|---|---|
| 1 h | R² | 0.87 | 0.91 | 0.96 | 0.98 |
| 1 h | RMSE | 0.43 | 0.37 | 0.24 | 0.17 |
| 1 h | MAE | 0.32 | 0.29 | 0.17 | 0.10 |
| 1 h | MAPE/% | 3.87 | 3.56 | 2.02 | 1.29 |
| 2 h | R² | 0.85 | 0.88 | 0.94 | 0.97 |
| 2 h | RMSE | 0.46 | 0.41 | 0.29 | 0.18 |
| 2 h | MAE | 0.35 | 0.32 | 0.20 | 0.11 |
| 2 h | MAPE/% | 4.17 | 3.89 | 2.24 | 1.68 |
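The four indicators reported in the table above (coefficient of determination R², root-mean-square error, MAE, and MAPE) have standard definitions, sketched below in plain Python. This is a minimal illustration under the usual textbook formulas; the function names and the toy series are hypothetical, and the source does not show the authors' own evaluation code.

```python
import math

def r2_score(y_true, y_pred):
    # 1 - (residual sum of squares) / (total sum of squares).
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mape(y_true, y_pred):
    # Returned in percent; undefined if any measured value is exactly zero.
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

# Toy measured and predicted values (hypothetical, not bridge data).
y_true = [2.0, 4.0, 6.0, 8.0]
y_pred = [2.5, 3.5, 6.5, 7.5]

scores = {
    "R2": r2_score(y_true, y_pred),
    "RMSE": rmse(y_true, y_pred),
    "MAE": mae(y_true, y_pred),
    "MAPE/%": mape(y_true, y_pred),
}
```

R² is higher-is-better, while RMSE, MAE, and MAPE are lower-is-better, which is how the M1 to M4 columns should be read. MAPE divides by the measured value, so it becomes unstable for deflection readings close to zero.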


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mu, C.; Chang, C.; Huo, J.; Yang, J. Deflection Prediction of Highway Bridges Using Wireless Sensor Networks and Enhanced iTransformer Model. Buildings 2025, 15, 2176. https://doi.org/10.3390/buildings15132176
