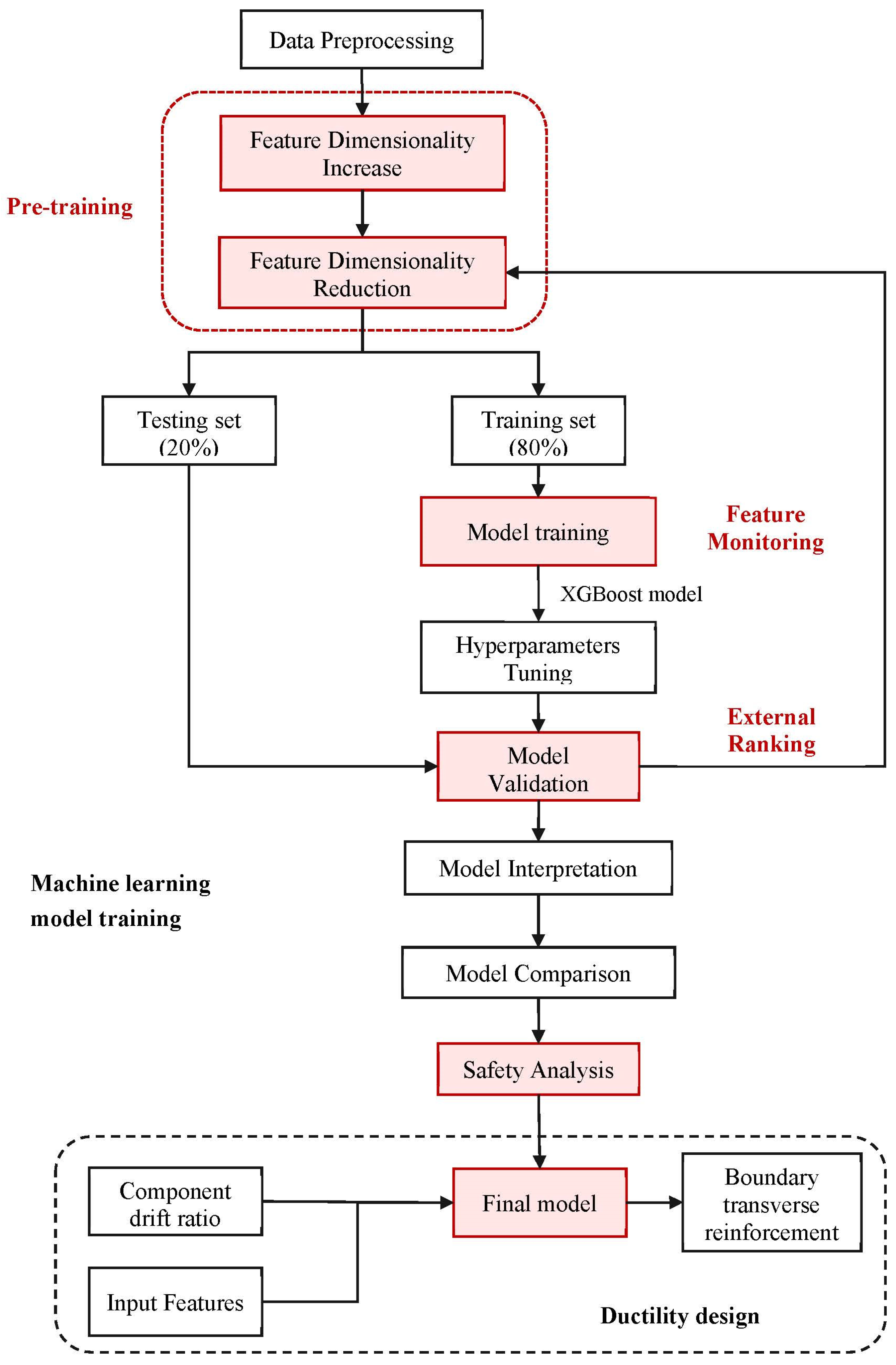

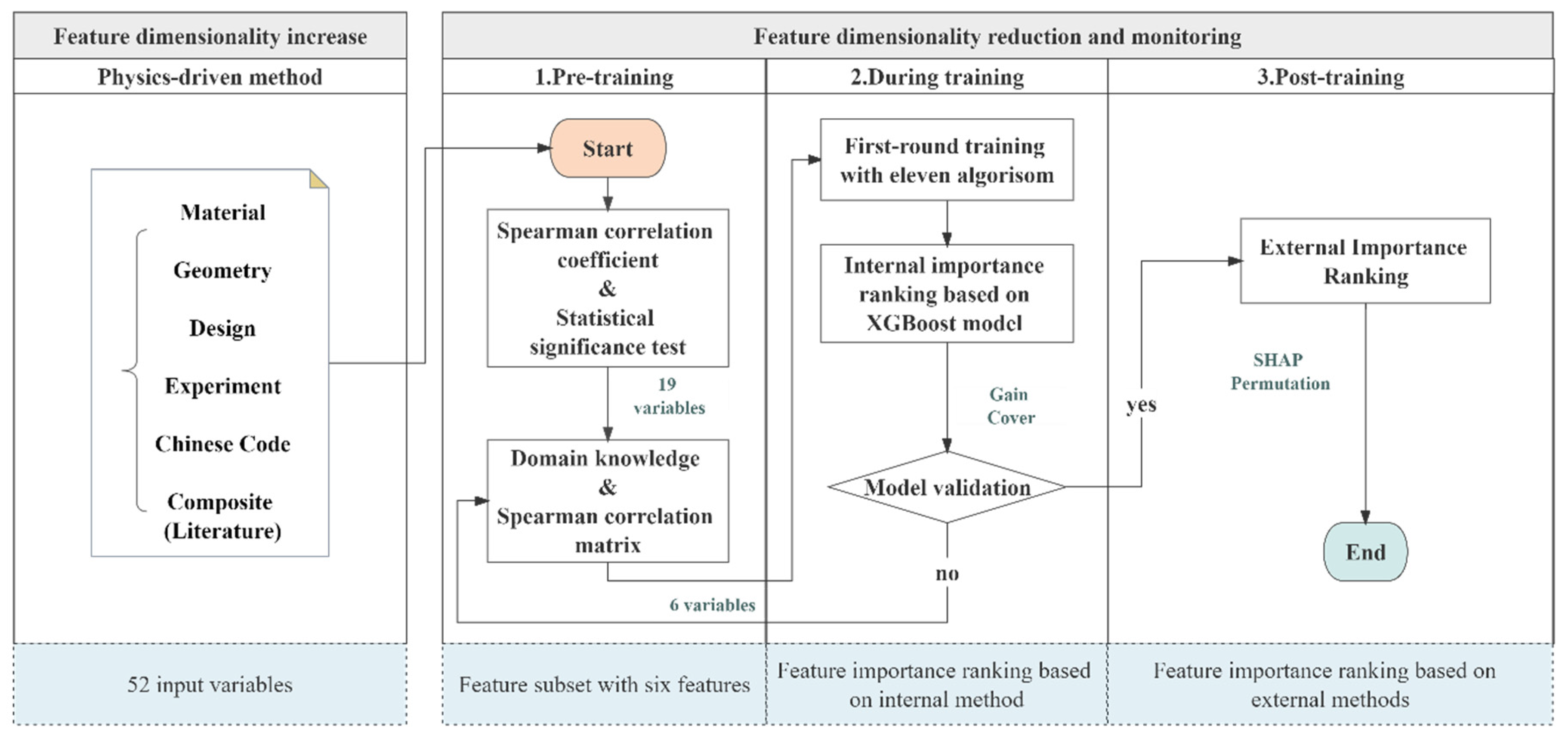

4.1. Feature Dimensionality Increase

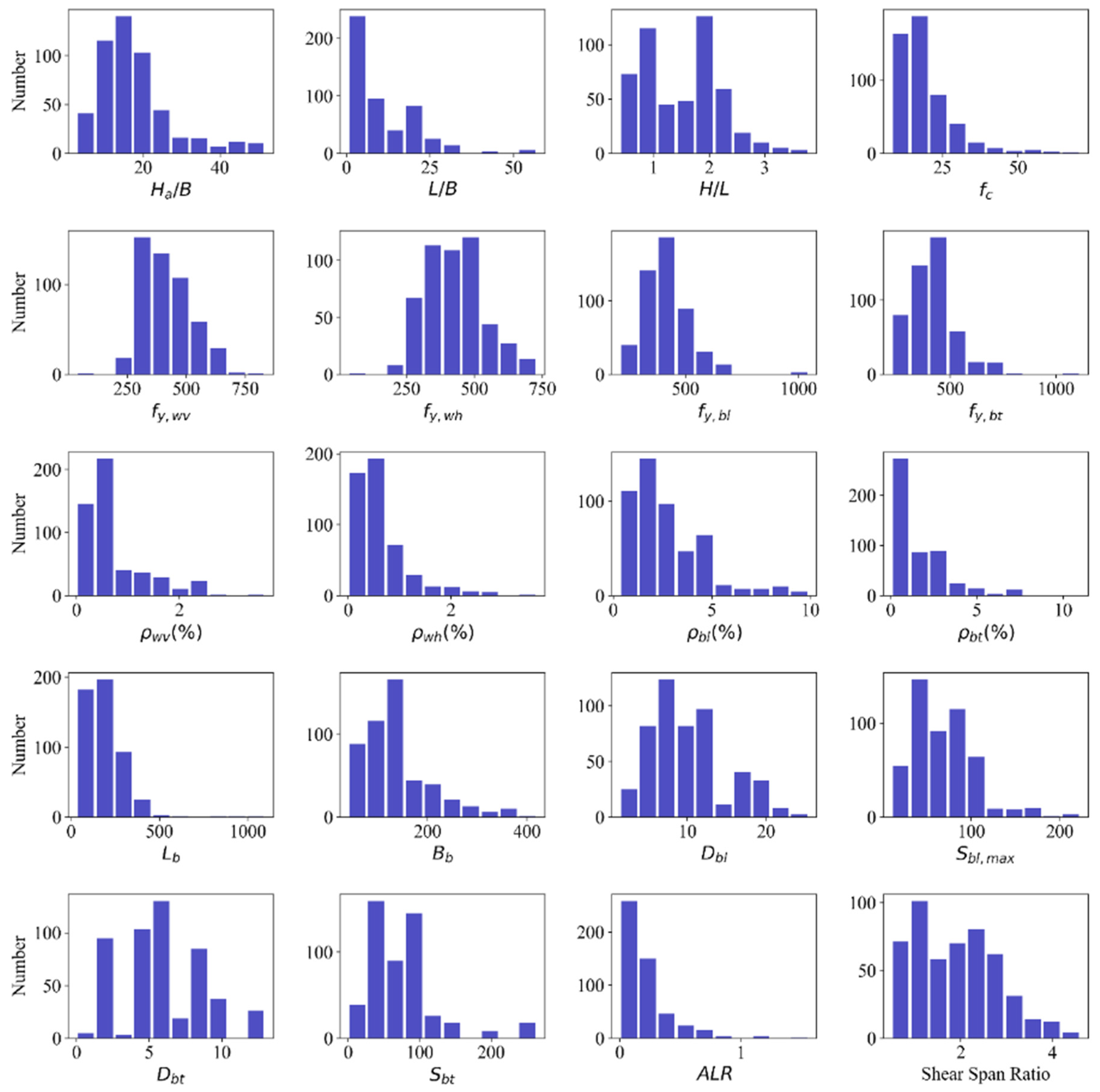

In this study, the feature dimensionality is increased using a physics-driven method, i.e., by collecting physical quantities that conform to fundamental laws. These quantities are drawn from three sources: (1) simple variables from the database, such as height or thickness in geometry; (2) calculated variables used in design, such as the axial compression ratio; and (3) composite variables used in previous research or codes. The input variables considered include material, geometric, design, test- and code-related, literature-based, and composite parameters, 52 in total, as listed in Table 1. Note that the configuration of the boundary transverse reinforcement, a categorical variable, is not used as an input variable, because most samples share the same single arrangement, with no evident variation among them.

Before the next step, the data are preprocessed and transformed, because some categorical variables cannot be used directly in correlation analysis or by certain algorithms. An encoding technique is therefore needed to convert the categorical variables into numeric ones. In this study, target encoding (mean encoding) is adopted: each category of the loading mode and of the cross-sectional shape is replaced with the mean of the target variable over the samples sharing that category.
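Target (mean) encoding can be sketched in a few lines of Python. This is a minimal illustration rather than the study's preprocessing code: the category labels and reinforcement ratios below are hypothetical, and no smoothing or out-of-fold fitting is applied.

```python
from collections import defaultdict
from statistics import mean


def target_encode(categories, targets):
    """Replace each category label with the mean target value of its category.

    categories: category labels (e.g., loading mode or cross-sectional shape)
    targets: target values aligned with `categories`
    Returns the encoded column and the category -> mean mapping.
    """
    groups = defaultdict(list)
    for category, y in zip(categories, targets):
        groups[category].append(y)
    category_means = {category: mean(ys) for category, ys in groups.items()}
    return [category_means[c] for c in categories], category_means


# Hypothetical cross-sectional shapes and transverse reinforcement ratios (%)
shapes = ["rectangular", "barbell", "rectangular", "T-shaped", "barbell"]
ratios = [1.0, 2.0, 1.5, 0.5, 2.5]
encoded, mapping = target_encode(shapes, ratios)
print(mapping)  # {'rectangular': 1.25, 'barbell': 2.25, 'T-shaped': 0.5}
print(encoded)  # [1.25, 2.25, 1.25, 0.5, 2.25]
```

In practice, the category means are usually computed on the training split only and then applied to the testing split, so that the encoded feature does not leak information from the testing targets.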

4.2. Feature Dimensionality Reduction

Feature dimensionality reduction (feature selection) is then performed to identify the most suitable feature subset for subsequent training by analyzing the relationships between the input variables and the target variable. Statistical methods are used to obtain a minimal feature subset before model training.

Firstly, input variables that are weakly correlated with the target variable are identified quantitatively using Spearman's correlation coefficient, a non-parametric measure of rank correlation that assesses the strength and direction of the monotonic association between two ranked variables. Unlike the Pearson correlation coefficient, it does not assume that the data follow a specific distribution; hence, it can be used with ordinal, interval, and ratio data. Additionally, it captures both linear and nonlinear monotonic relationships, which makes it applicable to the data in this study. The coefficient $r_s$ is computed as:

$$r_s = 1 - \frac{6\sum_{i=1}^{n} d_i^2}{n\left(n^2 - 1\right)}$$

where $d_i$ is the difference between the ranks of corresponding variables, and $n$ is the number of observations.
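The rank-based formula can be checked with a short Python sketch. It assumes average ranks for tied values; because the closed-form expression is exact only without ties, the sketch also computes Spearman's coefficient in its general form, the Pearson correlation of the ranks. The function names are illustrative.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across the run of values tied with the one at `start`
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman_closed_form(x, y):
    """r_s = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1)); exact only when there are no ties."""
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(average_ranks(x), average_ranks(y)))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


def spearman_general(x, y):
    """Pearson correlation of the ranks; valid with or without ties."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


x = [1, 2, 3, 4, 5]
print(spearman_closed_form(x, [v ** 2 for v in x]))  # monotonic but nonlinear -> 1.0
print(spearman_closed_form(x, [5, 3, 4, 1, 2]))      # about -0.8
print(spearman_general(x, [5, 3, 4, 1, 2]))          # about -0.8
```

The first example shows why a rank measure suits this study: the relationship $y = x^2$ is nonlinear, yet it is perfectly monotonic and receives a coefficient of 1.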

Because these relationships are computed from a sample, their validity must be further extended to the population. Statistical significance testing determines whether a result is likely due to chance or reflects a genuine effect or difference, i.e., whether the sample observation also applies to the population.

Table 2 reports the Spearman's correlation coefficients and the $p$-values from the statistical significance test; more details of the parameters are listed in Table A1 in Appendix A. The null hypothesis ($H_0$) tested is that there is no monotonic correlation in the population, i.e., $H_0$: $\rho_s = 0$ versus $H_1$: $\rho_s \neq 0$, where $\rho_s$ is the population Spearman coefficient. The significance level ($\alpha$) is set at 0.05, meaning that a $p$-value less than 0.05 leads to rejection of the null hypothesis, that is, the association between the two variables is statistically significant. Overall, 33 input variables that are weakly correlated with the target or whose correlation is not statistically significant are eliminated.
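A minimal sketch of this screening step follows. It assumes the large-sample normal approximation $z = r_s\sqrt{n-1}$ for the null distribution of Spearman's coefficient $r_s$ (the text does not state which test variant was used), and the cutoff `min_abs_rho = 0.2` and the feature names are hypothetical placeholders rather than the values behind Table 2.

```python
import math


def spearman_p_value(rho, n):
    """Two-sided p-value for H0: rho_s = 0 via the normal approximation
    z = rho * sqrt(n - 1). Assumption: adequate for large n; small samples
    call for exact or t-based tests."""
    z = abs(rho) * math.sqrt(n - 1)
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def screen_features(correlations, n, alpha=0.05, min_abs_rho=0.2):
    """Keep features whose correlation with the target is significant and not weak.

    correlations: {feature name: Spearman coefficient with the target}
    min_abs_rho: hypothetical cutoff for a 'weak' correlation (not the study's value)
    """
    kept = {}
    for name, rho in correlations.items():
        p = spearman_p_value(rho, n)
        if p < alpha and abs(rho) >= min_abs_rho:
            kept[name] = (rho, p)
    return kept


# Hypothetical coefficients for three candidate inputs and n = 500 samples
candidates = {"feature_a": 0.45, "feature_b": -0.30, "feature_c": 0.05}
for name, (rho, p) in screen_features(candidates, n=500).items():
    print(f"{name}: rho = {rho:+.2f}, p = {p:.1e}")
```

Here `feature_c` is dropped: with $n = 500$, a coefficient of 0.05 gives $p \approx 0.26$, so the null hypothesis cannot be rejected.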

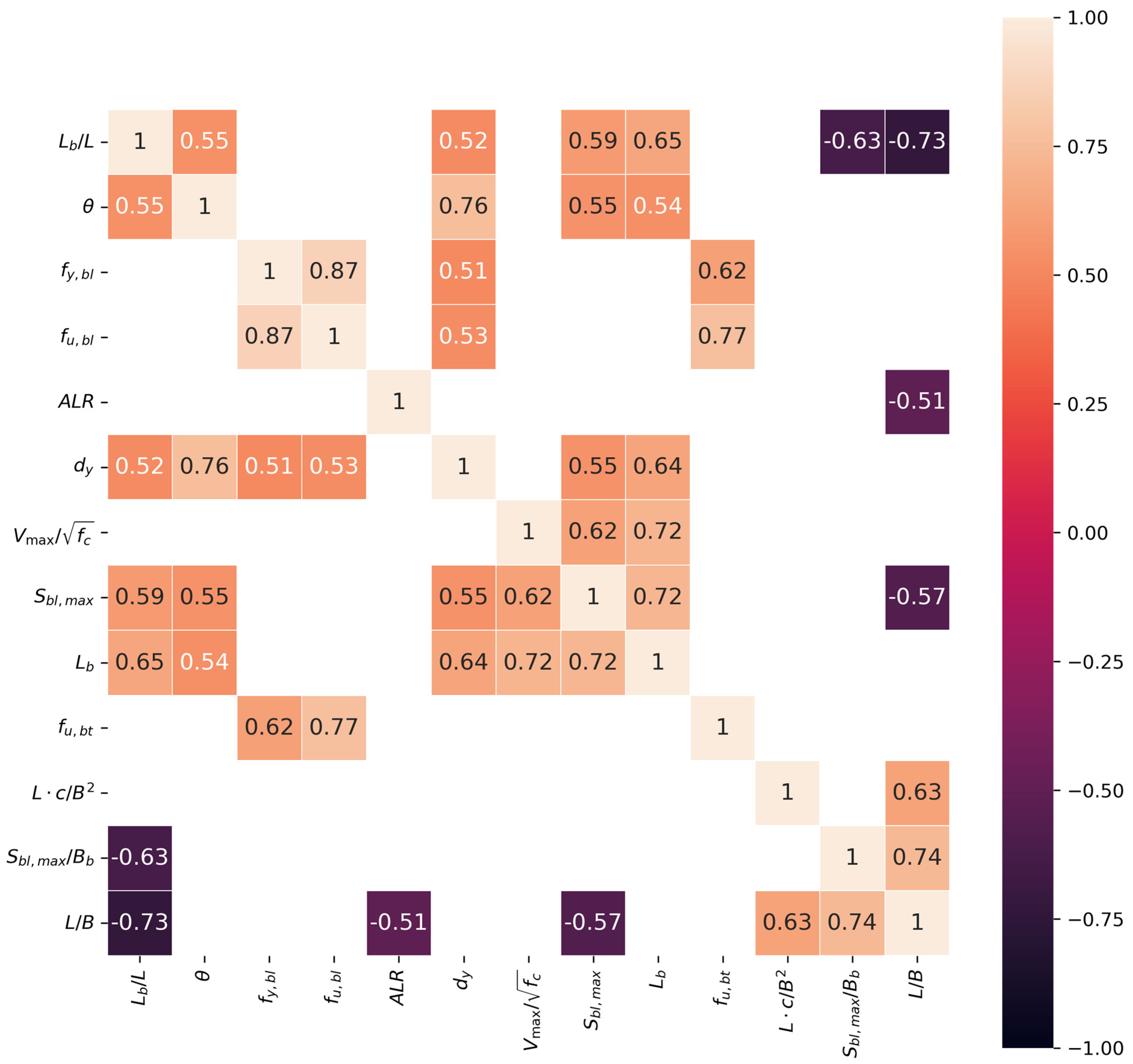

Secondly, to establish a minimal subset of features that are as mutually independent (orthogonal) as possible, the number of variables for model training is reduced by removing highly correlated variables from the preselected subset. Using domain knowledge and the Spearman correlation matrix, groups of input variables with high mutual correlation are identified, and only one feature of each such pair is retained to mitigate their potential influence on the model outcomes. Clearly,

,

, and

display direct computed relationships with the target variable (

) and ductility demand (

).

is included in the parameter

with higher

value. Similarly,

and

are also parts of parameters with higher

value, namely

and

. Thus,

,

,

,

,

, and

are removed before proceeding to the next phase. It can also be seen in

Table 2 that the ductility demand

, used as a predictor in this study, is well suited because of its relatively high ranking and low

-value compared with the ductility factor (

).

In general, it is good practice to remove highly correlated features before training, regardless of the algorithm adopted. Thus, only a subset of the features in each group of highly correlated features is retained.

Figure 4 presents the Spearman correlation matrix, which reveals three groups of highly correlated features (

): (1)

—

,

,

,

; (2)

—

,

; (3)

,

,

—

. To fulfill the aim of this study, four input variables relevant to the factor (

) are removed. In the second group,

displays stronger correlation with transverse reinforcement and a lower

-value than its correlated counterparts, and it is therefore retained in the second group.

is correlated with the other input variables in the same group, which indirectly indicates that it can largely be characterized by them. To preserve both the completeness and the diversity of the information conveyed, it is excluded.
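The pairwise pruning logic can be approximated by a greedy rule, sketched below under stated assumptions: within every pair whose inter-feature |ρ| reaches a hypothetical threshold of 0.8, the feature less correlated with the target is dropped. The study also relied on domain knowledge and $p$-values, which this simplified rule does not capture; all feature names and correlation values are invented for illustration.

```python
def prune_collinear(target_corr, pair_corr, threshold=0.8):
    """Greedily drop one feature from each highly correlated pair.

    target_corr: {feature: Spearman coefficient with the target}
    pair_corr: {(feature_1, feature_2): Spearman coefficient between two inputs}
    threshold: hypothetical |rho| at or above which two inputs count as collinear
    The feature with the weaker correlation to the target is removed.
    """
    removed = set()
    # Handle the strongest inter-feature correlations first
    for (f1, f2), rho in sorted(pair_corr.items(), key=lambda item: -abs(item[1])):
        if abs(rho) < threshold or f1 in removed or f2 in removed:
            continue
        weaker = f1 if abs(target_corr[f1]) < abs(target_corr[f2]) else f2
        removed.add(weaker)
    return [f for f in target_corr if f not in removed]


# Hypothetical correlations for four inputs
target_corr = {"x1": 0.60, "x2": 0.50, "x3": 0.40, "x4": -0.30}
pair_corr = {("x1", "x2"): 0.90, ("x3", "x4"): -0.85, ("x1", "x3"): 0.20}
print(prune_collinear(target_corr, pair_corr))  # ['x1', 'x3']
```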

In summary, a robust feature subset can be defined as one that contains the maximum amount of effective information with the smallest subset size while minimizing mutual interference among features. This subset is selected from the input variables via the following process:

- (1) Elimination of variables weakly correlated with the target: Spearman's rank correlation coefficient is used to quantify monotonic (including nonlinear) relationships between the input variables and the target variable, and significance testing validates whether these patterns extend from the sample to the general population. This step identifies nineteen input variables with strong correlation to the target variable;

- (2) Physical dimensionality reduction: leveraging domain knowledge in civil engineering, the nineteen input variables are subjected to substitutability analysis, and replaceable variables are eliminated;

- (3) Elimination of collinear input variables: the Spearman correlation matrix is used to identify groups of strongly correlated variables, and highly correlated input variables within each group are selectively eliminated. This ensures that the feature subset conveys more effective information within a simplified structure and reduces the mutual influence of the input variables, which enhances the precision of model training and reduces subsequent computational demands.

Finally, six input variables, namely,

,

,

,

,

, and

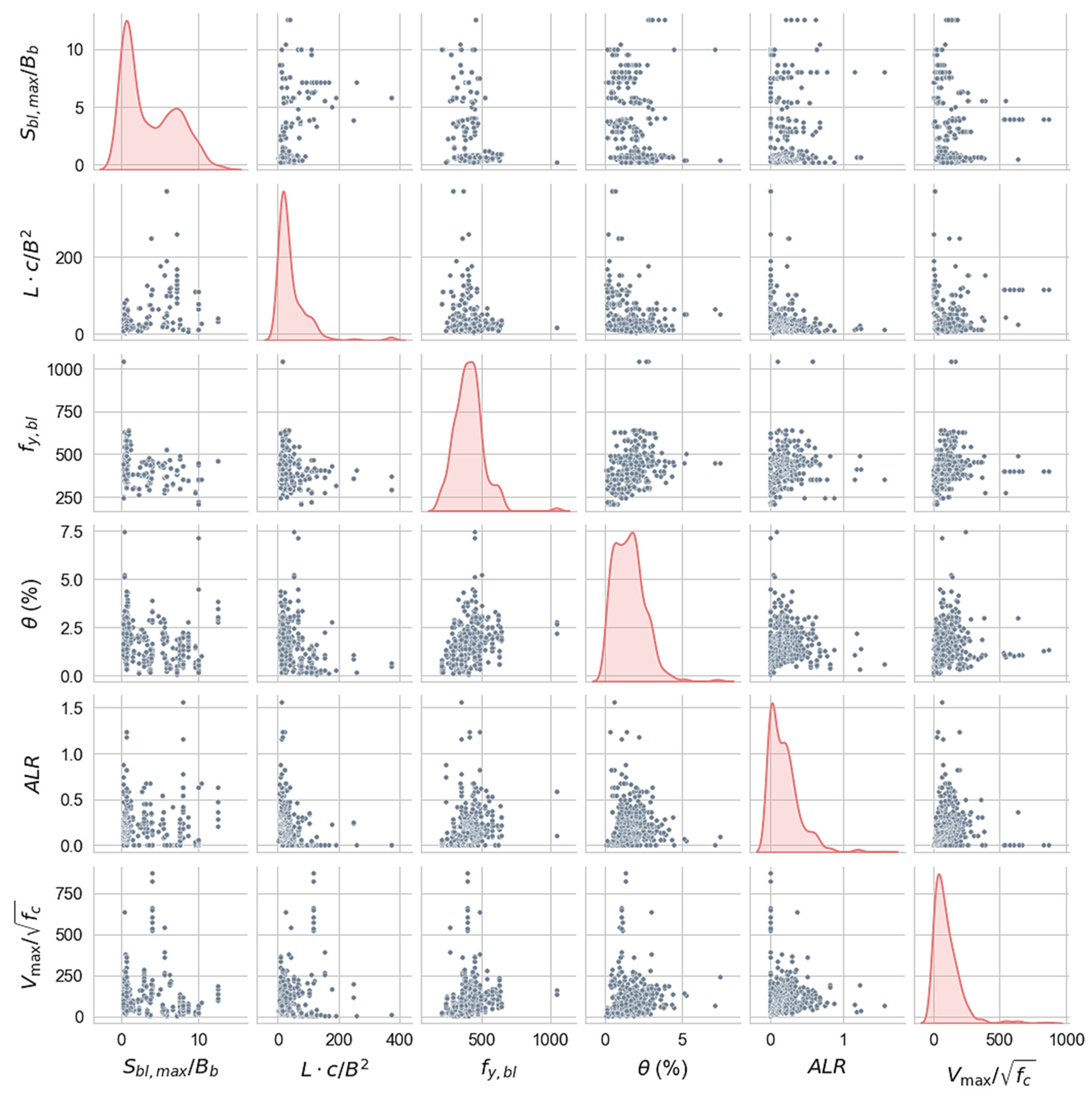

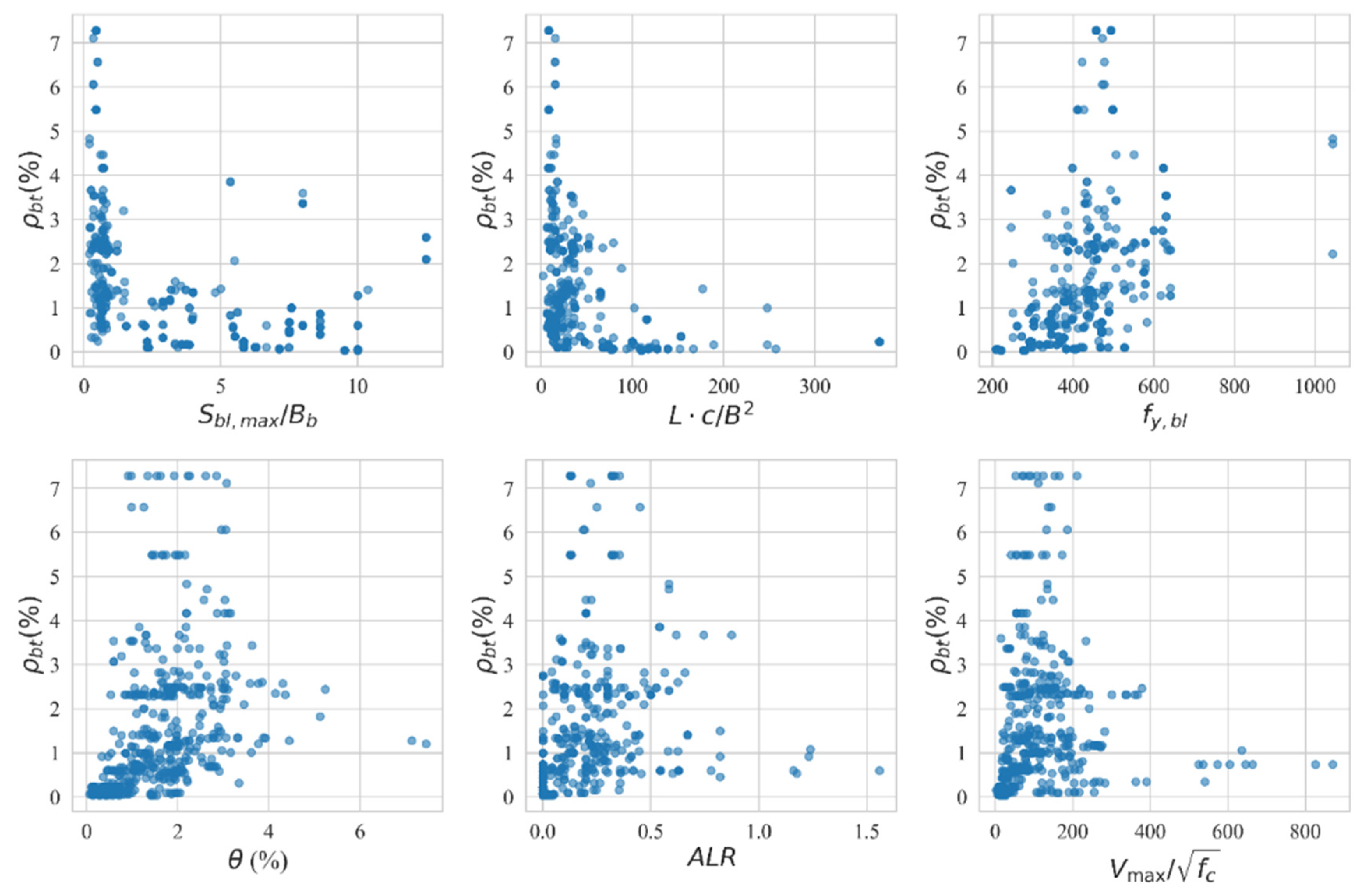

, are selected through the steps above and constitute the feature subset for model training. The scatter matrix is shown in Figure 5, and the variation in transverse reinforcement with respect to each feature is given in Figure 6. These features represent, respectively, the yield strength of the longitudinal reinforcement in the BEs, the ductility demand, the shear stress level related to the failure mode, the ratio of the spacing of longitudinal reinforcement along the wall length to the thickness of the BEs, the relative slenderness of the BEs, and the design axial load ratio. Because each of these factors is physically related to wall deformation, all of them are potentially associated with the configuration of the boundary transverse reinforcement.

4.4. Machine Learning Algorithm

A subset of six features is used to pre-train the models. Eleven algorithms are considered and compared to select a suitable one. The first group comprises (1) linear and other non-tree-based algorithms: ordinary least squares (OLS), lasso regression (lasso), ridge regression (ridge), K-nearest neighbors (KNN), and support vector regression (SVR). The second group comprises (2) tree-based algorithms: decision trees (DT), random forests (RF), AdaBoost, XGBoost, LightGBM, and CatBoost, as shown in Table 3. The tree-based ensemble algorithms follow two strategies: parallel ensemble techniques (bagging methods) and sequential ensemble techniques (boosting methods). The linear regression models serve as baselines for comparison; compared with them, tree-based models can improve accuracy and capture potential nonlinear relationships.

In the pre-training process, the training set (80% of the data) is used to train the models based on the 11 algorithms. The performance is then evaluated on the testing set by comparing the predicted values with the experimental data, as shown in Table 3. Tree-based models perform better than linear models. Statistically, a model with a high $R^2$ and correspondingly low error values (RMSE, WAPE) is considered to have better accuracy and robustness (the three metrics are summarized in Section 4.3). The XGBoost algorithm [48,49] is selected as the final prediction model of boundary transverse reinforcement because it shows the best performance ($R^2$ = 0.819, RMSE = 0.239, and WAPE = 0.294) on the testing set among all 11 ML models.
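For reference, the three metrics, together with the adjusted R-squared discussed in Section 4.5, can be computed as follows. The sketch assumes the standard definitions ($R^2 = 1 - SS_{res}/SS_{tot}$, RMSE as the root of the mean squared error, and WAPE as the sum of absolute errors divided by the sum of absolute experimental values); Section 4.3 gives the exact forms used in the study, and the data below are toy values.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def wape(y_true, y_pred):
    """Weighted absolute percentage error: sum |error| / sum |actual|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / sum(abs(t) for t in y_true)


def adjusted_r2(r2, n_samples, n_features):
    """Adjusted R^2, penalizing R^2 for the number of features in the model."""
    return 1 - (1 - r2) * (n_samples - 1) / (n_samples - n_features - 1)


# Toy experimental and predicted ratios
y_exp = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.0, 2.0, 3.0, 5.0]
r2 = r2_score(y_exp, y_hat)
print(r2, rmse(y_exp, y_hat), wape(y_exp, y_hat), adjusted_r2(r2, n_samples=4, n_features=1))
```

Because WAPE normalizes by the total experimental value, it stays comparable across datasets with different reinforcement levels, whereas RMSE is expressed in the units of the target.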

Additionally, two samples identified as outliers are removed after pre-training because they degrade accuracy. An example of a tree from the final XGBoost model is shown in Figure 7.

4.5. Model Training with Hyperparameter Tuning and Cross-Validation

After the training algorithm is selected, reasonable methods for training and prediction must be adopted and the hyperparameters optimized, which enhances the generalization ability of the model and reduces the likelihood of overfitting during training. In this study, k-fold cross-validation (CV), ratio sampling, and grid search are combined to train the final model with the optimal combination of hyperparameters.

The model is optimized as follows. A single round of training and prediction uses a random split with 20% of the data held out for testing, together with 10-fold cross-validation; this round is repeated 100 times with random ratio sampling. To obtain the best output from the final model, grid search is applied to tune the hyperparameters. Eight hyperparameters are considered for tuning, as listed in Table 4. Every combination of their candidate values is built and evaluated, and the combination with a relatively higher $R^2$ and lower RMSE and WAPE is selected. To alleviate the inherent randomness in selecting the training and testing samples, k-fold cross-validation is employed [42]. As a result, the overall performance improves, with very small variability in the error. The $R^2$ of the original model increases from 0.819 to 0.884, an improvement of 8%. The statistical performance of the final XGBoost model is listed in Table 5. Additionally, the adjusted R-squared, a variant of the coefficient of determination that penalizes $R^2$ for the number of features in the model, is used to perform feature selection or reduction during training.
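The training protocol, with repeated random 80/20 splits, 10-fold cross-validation within each split, and an exhaustive grid search, can be outlined as a Python skeleton. This is a sketch under assumptions: `score_fn` is a hypothetical callback standing in for training XGBoost on the training folds and returning a validation score such as $R^2$, cross-validation is assumed to run on the 80% training portion, and the two-parameter grid is illustrative only (the eight tuned hyperparameters are those in Table 4).

```python
import itertools
import random


def make_splits(n_samples, k, test_frac, rng):
    """One round: a random hold-out test set plus k CV folds on the remaining samples."""
    idx = list(range(n_samples))
    rng.shuffle(idx)
    n_test = round(test_frac * n_samples)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    folds = [train_idx[i::k] for i in range(k)]  # round-robin assignment to k folds
    return test_idx, folds


def grid_search_repeated_cv(n_samples, param_grid, score_fn, k=10, test_frac=0.2,
                            repeats=100, seed=0):
    """Average each hyperparameter combination's CV score over `repeats` random splits.

    score_fn(params, train_idx, val_idx) is a hypothetical callback that fits a model
    on train_idx and returns a validation score where higher is better.
    """
    rng = random.Random(seed)
    names = list(param_grid)
    combos = [dict(zip(names, values)) for values in itertools.product(*param_grid.values())]
    totals = [0.0] * len(combos)
    for _ in range(repeats):
        _test_idx, folds = make_splits(n_samples, k, test_frac, rng)  # test set kept aside
        for c, params in enumerate(combos):
            for f in range(k):
                train = [j for g, fold in enumerate(folds) if g != f for j in fold]
                totals[c] += score_fn(params, train, folds[f])
    averages = [total / (repeats * k) for total in totals]
    best = max(range(len(combos)), key=lambda c: averages[c])
    return combos[best], averages[best]


def toy_score(params, train_idx, val_idx):
    # Hypothetical stand-in for "train XGBoost, return validation R^2"
    return -(params["max_depth"] - 4) ** 2 - 100 * (params["learning_rate"] - 0.1) ** 2


grid = {"max_depth": [3, 4, 6], "learning_rate": [0.05, 0.1, 0.3]}
best_params, best_score = grid_search_repeated_cv(500, grid, toy_score, repeats=5)
print(best_params)  # {'max_depth': 4, 'learning_rate': 0.1}
```

The toy scoring function peaks at `max_depth = 4` and `learning_rate = 0.1` and ignores the data; with a real model, the reserved test indices would be used only for the final evaluation after tuning.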

During training, a model-based (internal) method can be used to perform feature selection: it directly assesses the importance of features according to the current model and guides feature adjustments. Together, the gain and cover of the XGBoost model offer a more holistic view of each feature's contribution. Gain (analogous to the Gini importance of random forests) is the average improvement in the objective function, i.e., the reduction in prediction error, brought by the splits made on the feature. The higher this value, the greater the feature's contribution to model performance. Cover is the number of samples covered by the nodes split on a feature divided by the number of times the feature is used for splitting in the tree model. The closer a split is to the root, the larger its cover, indicating that the feature affects a larger share of the node splits in the model. Cover can therefore be used to complement and verify the gain ranking. Because the two kinds of analysis serve distinct objectives, external importance analysis is also employed to discern, with greater confidence, how individual features influence the final outcomes. In this study, two external importance measures with different emphases are presented in later sections: the SHAP method and the permutation importance method.
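The averaging behind gain and cover can be illustrated by aggregating split records. The records below are hypothetical; for a trained XGBoost model, the same per-feature averages are reported by `Booster.get_score(importance_type="gain")` and `importance_type="cover"`, which this sketch does not call.

```python
from collections import defaultdict


def split_importance(splits):
    """Aggregate per-feature importance from (feature, gain, cover) split records.

    weight: number of splits that use the feature
    gain:   average loss reduction over those splits
    cover:  average number of samples reaching those splits (for squared-error loss
            with unit sample weights, XGBoost's hessian-based cover equals this count)
    """
    totals = defaultdict(lambda: [0, 0.0, 0.0])  # feature -> [splits, sum gain, sum cover]
    for feature, gain, cover in splits:
        record = totals[feature]
        record[0] += 1
        record[1] += gain
        record[2] += cover
    return {
        feature: {"weight": n, "gain": g / n, "cover": c / n}
        for feature, (n, g, c) in totals.items()
    }


# Hypothetical splits: (feature, loss reduction, samples reaching the node)
splits = [("feature_a", 12.0, 400), ("feature_a", 4.0, 100), ("feature_b", 6.0, 250)]
for feature, stats in split_importance(splits).items():
    print(feature, stats)
```

Here the two features have the same average cover but different average gain, which is why the two rankings can disagree and are read together.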

The internal importance rankings of the features are shown in Figure 8. A larger gain means that the feature contributes more to improving the model's performance, where gain refers to the reduction in prediction error achieved by splits on this feature. Additionally, external methods are applied to provide further perspectives on the relationship between the features and the model, as shown in Figure 9. Permutation importance reflects the degradation in model performance after the values of the corresponding feature are randomly shuffled, while the SHAP value reflects the marginal contribution of the feature to the prediction.
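Permutation importance can be sketched without any ML library: shuffle one feature column, re-predict, and record the increase in error. The `predict` function below is a hypothetical stand-in for the trained XGBoost model, and mean squared error is used as the performance measure for simplicity.

```python
import random


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when one feature column is randomly shuffled.

    predict: callable mapping a list of rows to a list of predictions
    X: list of rows (lists of feature values); y: list of target values
    """
    rng = random.Random(seed)
    baseline = mse(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, predict(X_perm)) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical model that uses only the first feature
def predict(rows):
    return [2.0 * r[0] for r in rows]


X = [[i, (7 * i) % 5] for i in range(20)]
y = predict(X)
print(permutation_importance(predict, X, y))
```

Because the toy model ignores the second feature, shuffling that column leaves the error unchanged and its importance is exactly zero.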

,

, and

display the highest feature importance values on most occasions. In both internal and external methods,

and

emerge as the predominant features. Their significance suggests that they play a crucial role in enhancing model performance, including in the presence of disturbances.

and

score higher in the internal ranking (cover) but lower in the external rankings, indicating that changes in either of these two features disturb the model less. The performance of the model is more sensitive to changes in

and

as they have higher scores in external ranking.

4.6. Interpretation of XGBoost Transverse Reinforcement Model

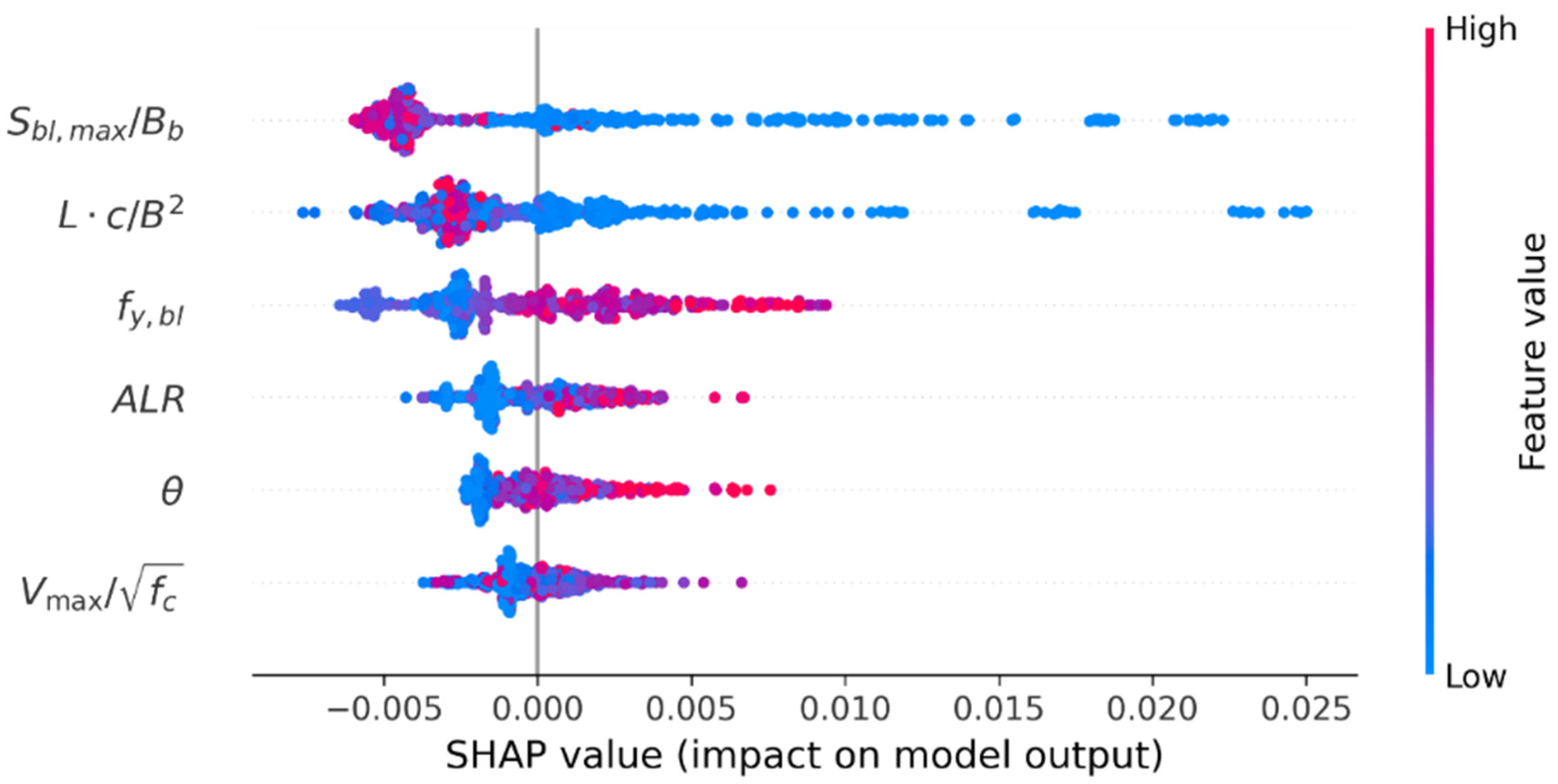

To understand the behavior of the proposed XGBoost model, tools for global explanation, local explanation, and error analysis are used. Given the model's robust statistical indicators and small errors, the trend of each feature's impact is explained using SHAP values and partial dependence plots (PDPs).

SHAP values, proposed by Lundberg and Lee [50], are computed to obtain a global explanation of how the features affect the prediction trend.
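To make the notion of a SHAP value concrete, the sketch below computes exact Shapley values for a single prediction by enumerating all coalitions of features. It is a simplified illustration, assuming a single baseline point for "absent" features and a hypothetical three-feature function in place of the XGBoost model; the SHAP library's tree explainers compute these values far more efficiently for tree ensembles.

```python
from itertools import combinations
from math import factorial


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x, enumerating every coalition of features.

    Features outside a coalition take their `baseline` value (a single-reference
    simplification). Cost grows as 2^M, so this only suits a handful of features.
    """
    m = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(m)]
        return f(z)

    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model with an interaction between features 0 and 2
def f(z):
    return 3 * z[0] - 2 * z[1] + z[0] * z[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = exact_shapley(f, x, baseline)
print(phi)                           # approximately [4.5, -4.0, 1.5]
print(sum(phi), f(x) - f(baseline))  # both approximately 2.0
```

The interaction term is split equally between features 0 and 2, and the contributions sum to the difference between the prediction and the baseline prediction; this additivity is what allows a SHAP summary plot to decompose each prediction across features.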

Figure 10 presents the SHAP summary plot for an XGBoost model trained on 501 samples. It illustrates the impact of each feature on the output, as conveyed by the SHAP values derived from the XGBoost model. The horizontal axis represents the SHAP values, which reveal each feature's influence on the predicted variable, and the features are ordered on the vertical axis by their SHAP importance. SHAP values closer to zero indicate a less significant impact on the prediction, while values further from zero imply a more pronounced influence. Each dot represents a sample from the database, and its color, transitioning from blue to red, indicates the feature value from low to high; the dot density shows where the samples of a particular feature are concentrated. When interpreting the impact trend of individual features: (1) if the dot color in a row changes distinctly from blue to red from left to right, an increase in the feature value tends to increase the predicted transverse reinforcement, and the trend is the opposite when the color changes from red to blue; (2) the broader the range of SHAP values and the sparser the dots, the more sensitive the predicted variable is to changes in the feature value; and (3) where dots are predominantly blue and clustered to the left of the zero axis, it can be inferred that, for most samples with small feature values, a small value of this feature reduces the predicted transverse reinforcement. Other scenarios are interpreted analogously.

In this model, exhibits the greatest predictive power, followed by and then . It should also be observed that high and values are associated with lower boundary transverse reinforcement. For and , the opposite conclusions are drawn. This aligns with a multitude of experimental findings suggesting that boundary longitudinal reinforcement with a higher yield strength, greater ductility demand, and larger design axial load ratio increases the transverse reinforcement in the boundary element. The influence trend for is not evident. Simultaneously, both and exhibit a broader SHAP value range, and smaller values display a more pronounced impact. This suggests that the prediction of transverse reinforcement is sensitive to the abovementioned features, rendering them unsuitable for referencing in boundary transverse reinforcement design, even if they possess greater importance. In contrast, the ductility demand () used in this study displays a narrow SHAP value range. As the value increases, the SHAP value changes uniformly, and the negative impact is minimal, causing less perturbation to the model. The concentrated values for both and are for larger feature values, whereas the other four features are for smaller values.

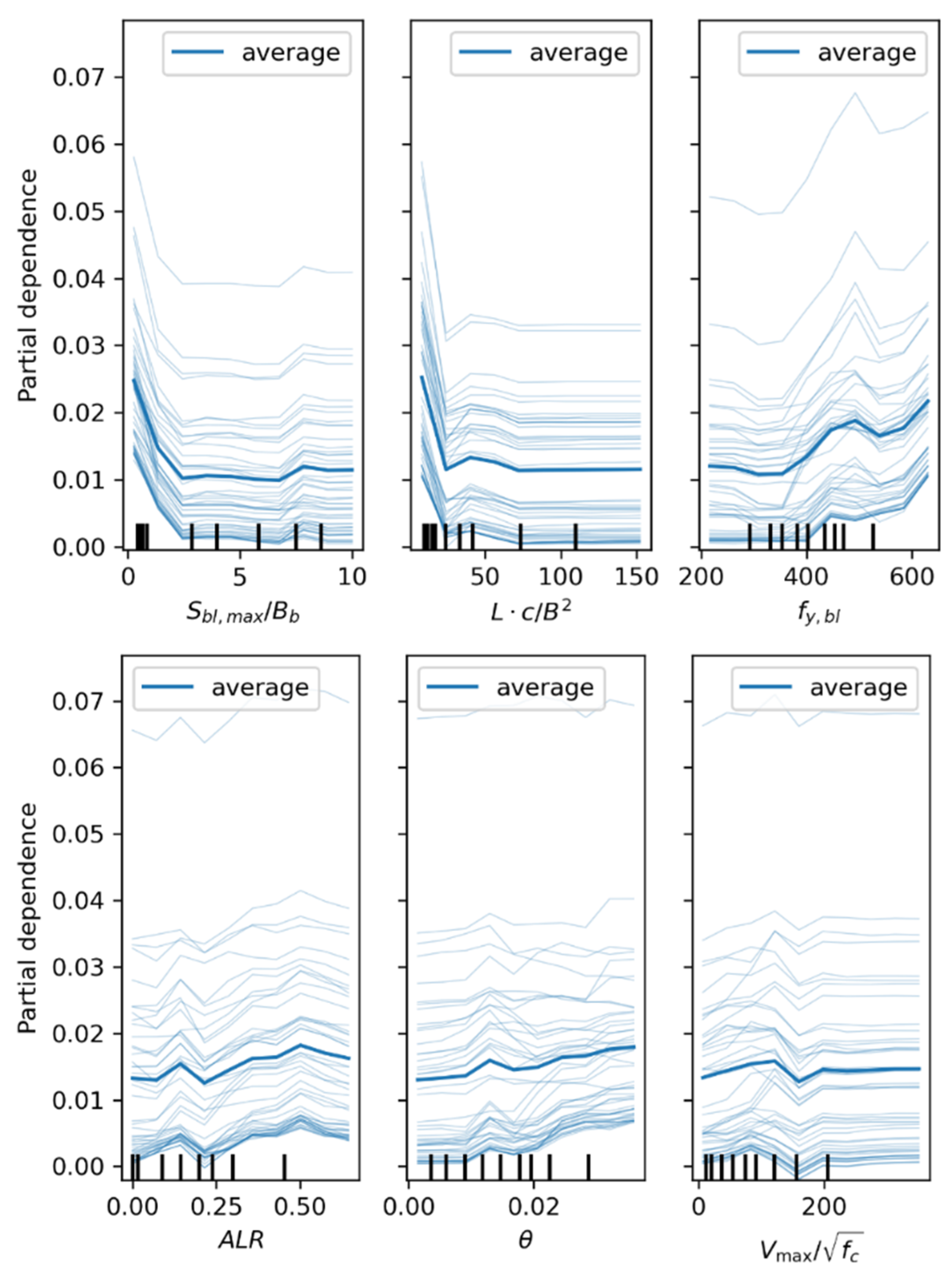

Additionally, a partial dependence plot (PDP) [49,51,52] is a visualization tool that displays the marginal effect of one or two features on the average prediction of a machine learning model. In a PDP, the x-axis represents the values of the feature and the y-axis represents the predicted value of the model. Each light-colored line represents the result for one sample (an individual conditional expectation curve); for easier viewing, only 100 individual sample lines are displayed, while the dark-colored line is the average over all samples. The plot shows how the model predictions change when the feature values increase or decrease.
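The construction of the light-colored lines (individual conditional expectation, or ICE, curves) and the dark average line (the one-way PDP) can be sketched as follows. The `predict` function and the two samples are hypothetical stand-ins for the XGBoost model and the database.

```python
def ice_and_pdp(predict, X, feature, grid):
    """ICE curves (one per sample) and their average, the one-way PDP.

    For each grid value, the chosen feature is overwritten while the sample's other
    features keep their observed values.
    """
    ice = []
    for row in X:
        varied = [row[:feature] + [v] + row[feature + 1:] for v in grid]
        ice.append(predict(varied))
    pdp = [sum(curve[g] for curve in ice) / len(ice) for g in range(len(grid))]
    return ice, pdp


# Hypothetical model and two samples with two features each
def predict(rows):
    return [0.5 * r[0] + r[1] ** 2 for r in rows]


X = [[1.0, 1.0], [2.0, 3.0]]
ice, pdp = ice_and_pdp(predict, X, feature=0, grid=[0.0, 1.0, 2.0])
print(ice)  # [[1.0, 1.5, 2.0], [9.0, 9.5, 10.0]]
print(pdp)  # [5.0, 5.5, 6.0]
```

Because the PDP averages the ICE curves, heterogeneous individual responses can cancel out in the dark line, which is why plotting a sample of light lines alongside it is useful.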

Figure 11 displays the one-way PDPs of the predicted boundary transverse reinforcement for the different features in the XGBoost model. The trends for the different features are essentially consistent with the patterns in the SHAP plot, which corroborates the identified patterns and visually demonstrates the degree of change in the prediction across different value ranges. Among these trends, both

and

exhibit a distinct negative relationship with the prediction, especially within the intervals

, and

.

exhibits a clear positive relationship with the prediction, although a negative relationship is evident in the range

. The relationship between the change in

and the predicted transverse reinforcement is complex. Overall, it is a positive relationship, especially in the intervals

and

. However, when the axial load ratio increases (i.e.,

), a negative relationship is instead revealed. The impact of

on the prediction is not pronounced (it is positively correlated when

, and essentially unchanged when

).

displays a clear positive relationship with the prediction (it is positively correlated when

and

, and negatively correlated when

). It is worth noting that, with the changes in

,

, and

, the average prediction ranges between 1% and 2.5%. With changes in

,

, and

, the predicted value primarily hovers between 1% and 2%.

In addition, residual analysis is used to examine the specific errors of the model trained with the selected algorithm. A histogram of errors for the XGBoost model is shown in Figure 12. As illustrated in Figure 12, more samples have a positive error (predicted value greater than the experimental value), accounting for approximately 3/5 of the total. The absolute errors of approximately 90% of the samples are within 1%, and the most populated interval is between 0 and 0.5%.

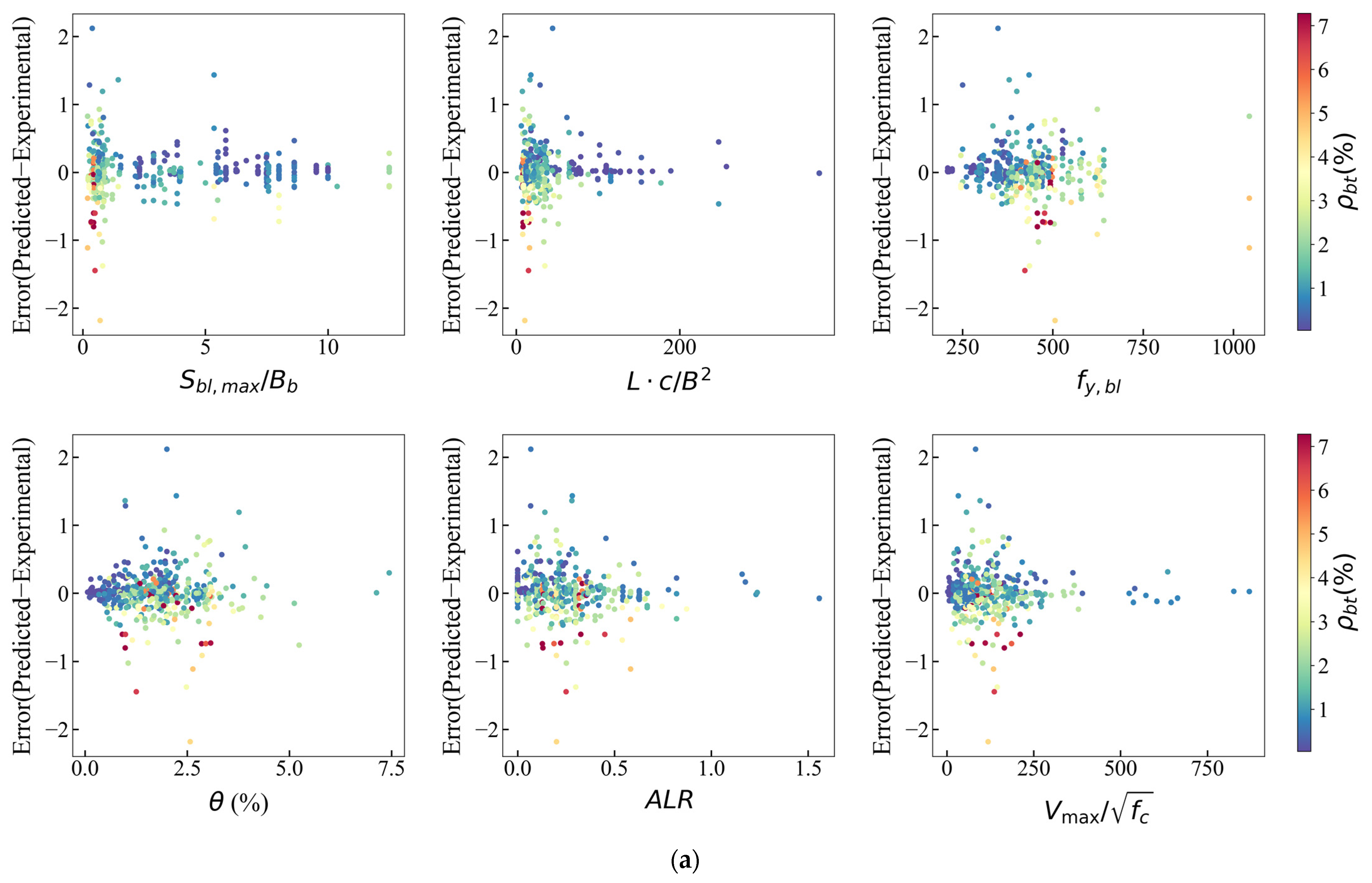

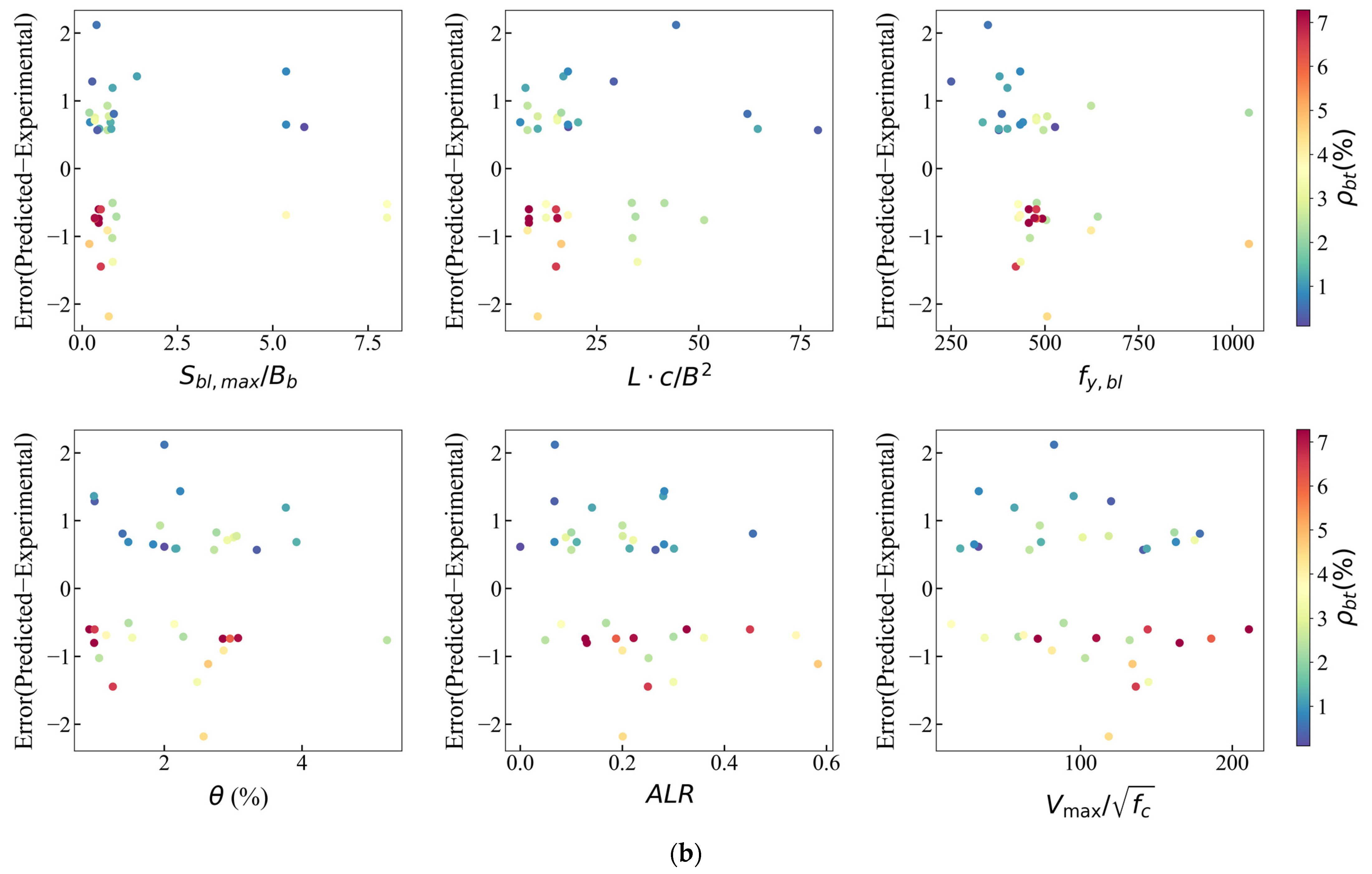

To further investigate the relationship between the error distribution and the features, and to quantify the range of each feature within which the model has relatively low error, error scatter plots are drawn, as shown in Figure 13. Figure 13a displays the errors for samples with smaller and larger experimental boundary transverse reinforcement ratios, which tend to be symmetrical. Figure 13b focuses on the data points with the highest absolute errors (>0.5%). It is observed that most of the higher errors are concentrated in the lower values of

,

,

(

),

and

. The error distribution has no obvious trend with the change in

.

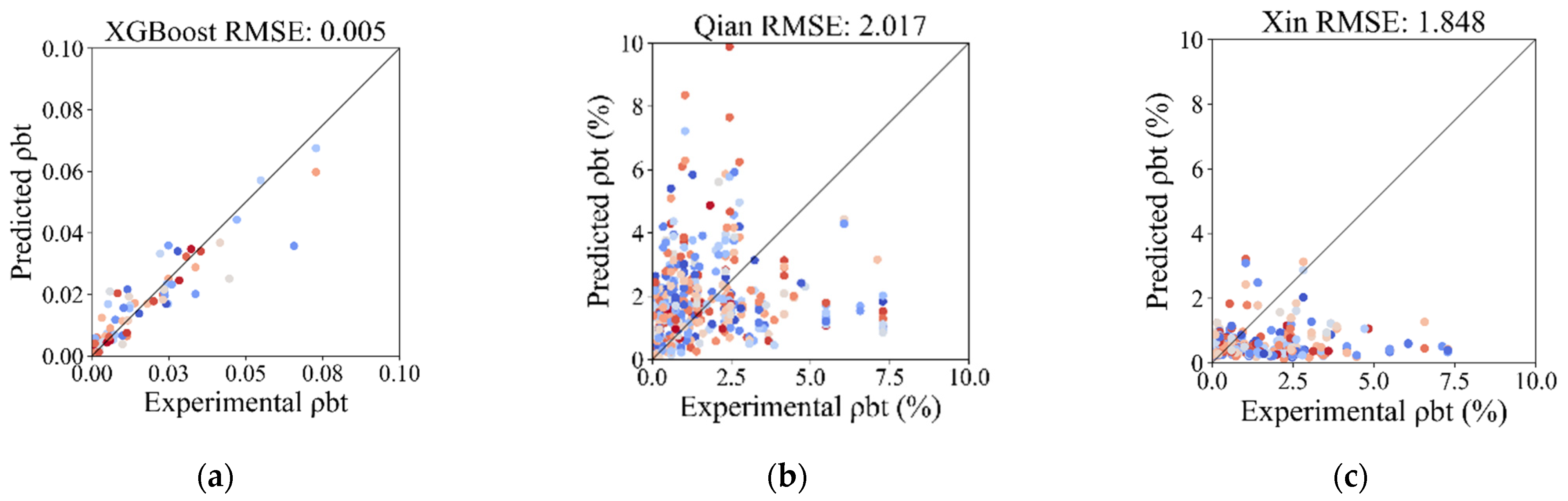

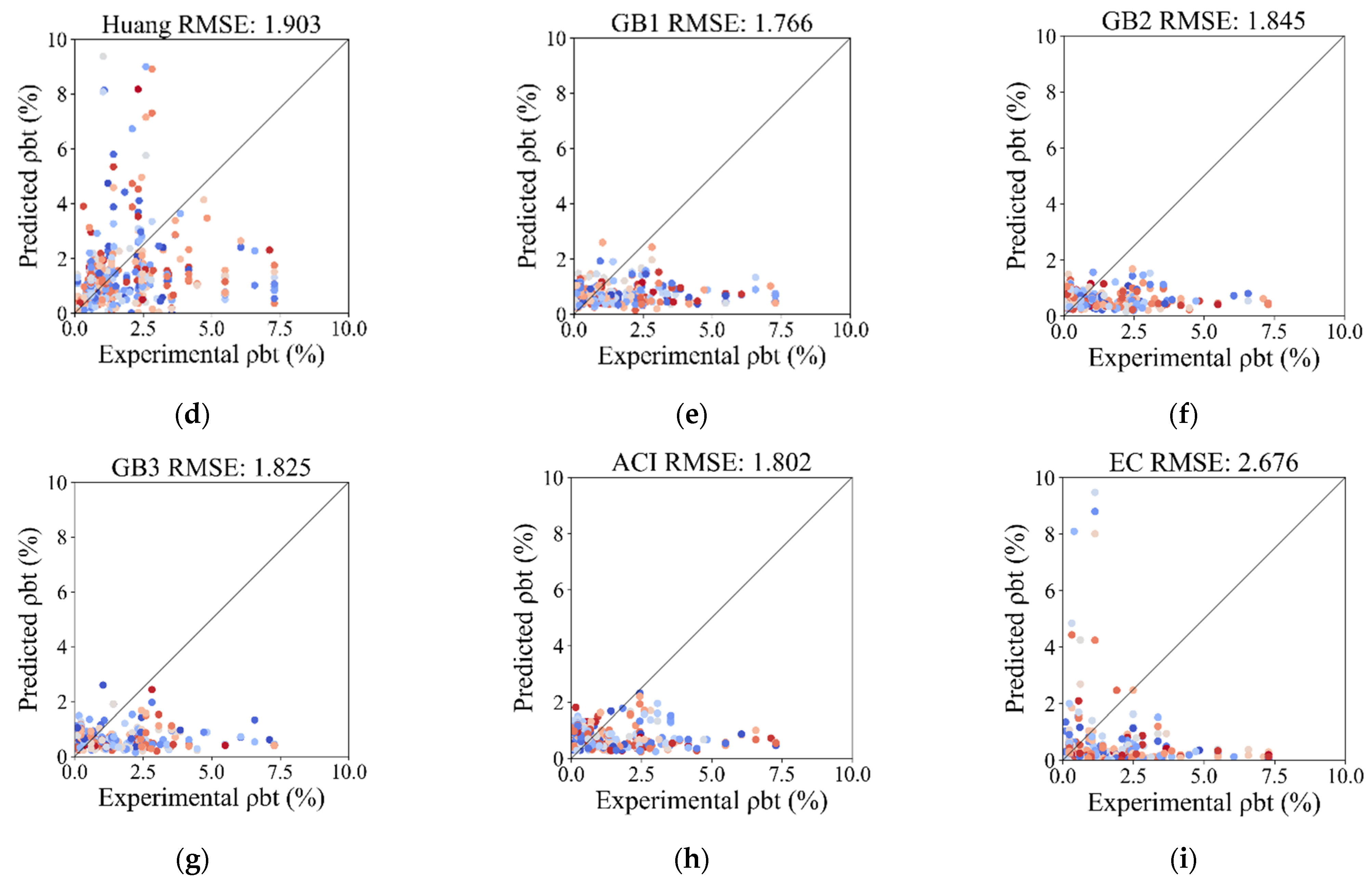

4.7. Comparison of Empirical Equations and Codes

To validate the applicability and potential superiority of the proposed XGBoost model, traditional empirical models proposed by researchers and design codes are used to predict the transverse reinforcement ratio, and their performance is tested on the same data used in this study. GB1–3 correspond to three different combinations of seismic grade and seismic precautionary intensity in the Chinese code [10], in decreasing order of severity. Methods in the US code (ACI 318-19) [11] and the European code (Eurocode 8) [12] are also included. The results are displayed in Figure 14 and listed in Table 6.

Figure 14 and Table 6 show that the predictions of the proposed ML model are more accurate, robust, and stable than those of its competitors. In Figure 14, the root mean square errors (RMSEs) of the empirical formulas of Qian et al. [7], Ma et al. [8], and Huang et al. [9] are 2.017, 1.848, and 1.903, respectively, while the RMSE of the XGBoost model on the testing set is 0.005. The standard deviations of the error between the experimental and predicted transverse reinforcement ratios for the empirical formulas of Qian et al. [7], Ma et al. [8], and Huang et al. [9] are 1.997, 1.620, and 1.881, respectively, while that of the XGBoost model on the testing set is 0.005. These values indicate that the empirical formulas are less accurate and more sensitive than the XGBoost model. Because RMSE is sensitive to outliers and penalizes large errors more severely, the much lower RMSE also reflects the robustness and stability of the ML model compared with the empirical methods. In Table 6, the lowest weighted absolute percentage error (WAPE) among the code methods, i.e., the three cases of the Chinese code (GB 50010-2015) [10], ACI 318-19 [11], and Eurocode 8 [12], is 0.772, which is markedly higher than that of the XGBoost model (0.246). Because WAPE is a summary statistic that provides a holistic, global assessment of the overall degree of prediction error, this shows that the overall predictive performance of the XGBoost model is better.

The superiority of the XGBoost model can be attributed mainly to the following: (1) Empirical formulas are derived from a limited number of shear wall specimens, so their accuracy and applicability may be constrained by the scope (e.g., cross-sectional shape and level of confinement) and diversity (e.g., axial load ratio) of the original experimental data. They may not be fully applicable to new situations or to shear wall types that differ significantly from the original data, a limitation that can be alleviated in the XGBoost model owing to the richness of the newly built database. (2) ML methods, especially tree-based algorithms, are better able to capture complex and nonlinear relationships than traditional linear regression formulas. (3) Empirical formulas may neglect, overestimate, or underestimate parameters that contribute to the transverse reinforcement ratio, owing to limited computational and analytical capabilities.

4.8. Safety Analysis

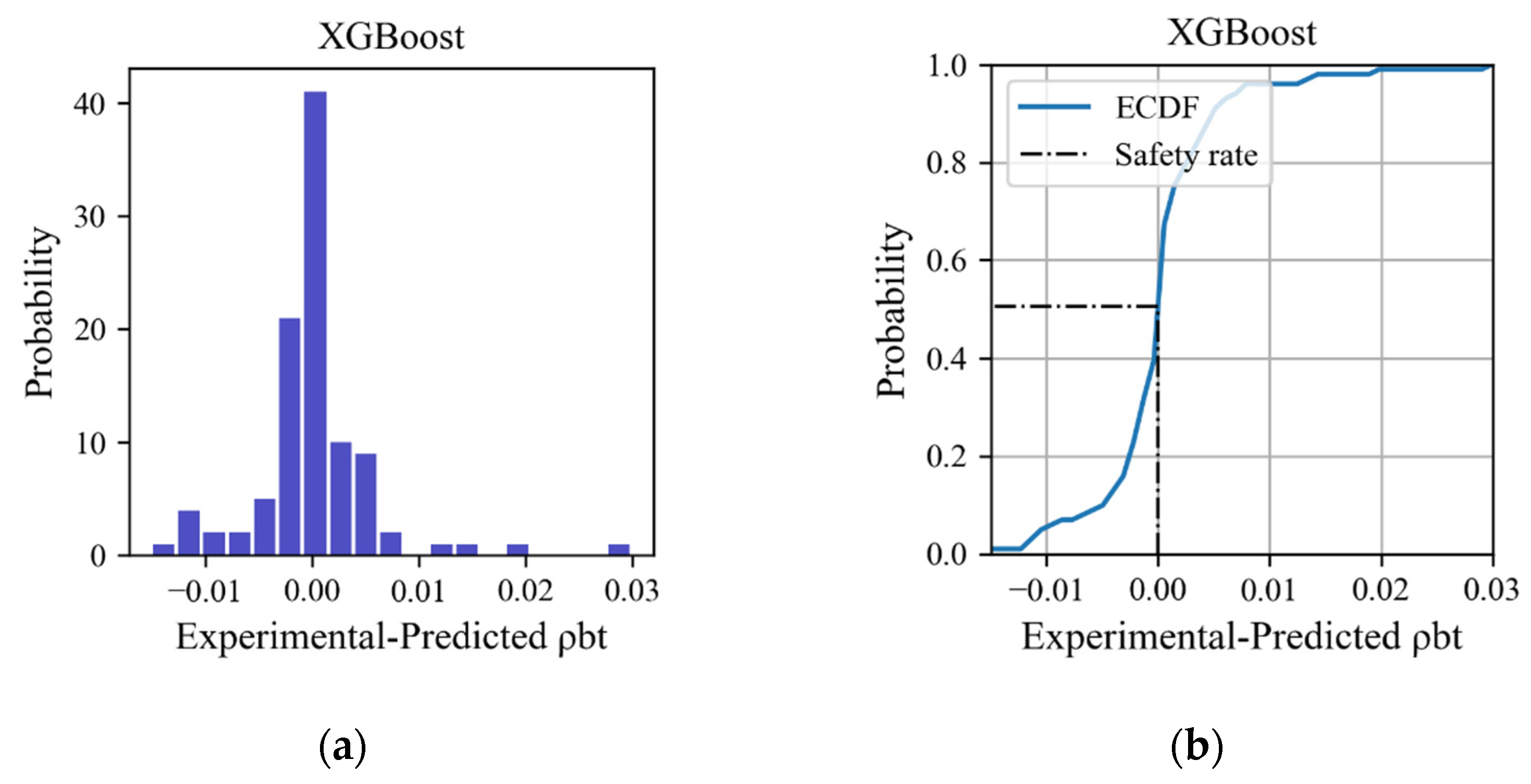

Figure 15 displays the histogram and empirical cumulative distribution of the error between the experimental and predicted transverse reinforcement ratios of the XGBoost model on the testing sets. The vertical line at error = 0 indicates that the predicted value equals the experimental value. Errors less than 0 indicate a safe (conservative) prediction, whereas errors greater than 0 indicate an unsafe one. Most of the transverse reinforcement ratios predicted by the XGBoost model are very close to the experimental values, with a safety probability of 57%.

When applied in engineering practice, the prediction model should be sufficiently conservative to ensure the safe design of RC structures, with due regard to safety, structural stability, and reliability. Acceptance criteria (AC) [50] provide limiting values of properties such as drift, strength demand, and inelastic deformation, which are used to determine the acceptability of a component at a given performance level. ASCE 41-17 [44] provides a procedure for defining AC based on experimental data. However, for more severe performance objectives related to safety and structural stability, the selection of AC should reflect the probability of exceeding a threshold behavioral state (i.e., life safety (LS) and collapse prevention (CP)) [41,53], corresponding to the onset of lateral-strength degradation or axial failure. This approach is more appropriate and is consistent with the performance objectives for columns in ACI 369-17 [54] described by Ghannoum and Matamoros [53].

As primary components, shear walls must resist seismic forces and accommodate deformations to achieve the selected performance level. Additionally, Wallace and Abdullah [41] recommend acceptance criteria with a fixed probability. When a member critical to the stability of a structure is assessed, its satisfying the AC for LS indicates an 80% level of confidence that the member has not initiated lateral-strength degradation [41]. On this basis, an additional value of 0.24% should be added to the value predicted by the XGBoost model established in this study. Furthermore, to meet different demands, additional values for several guarantee rates are provided in Table 7.
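One way to obtain such an additional value is to take an empirical quantile of the errors, as sketched below. This is an assumption about the procedure, not the study's documented method: with error = experimental - predicted (the sign convention of Figure 15), the margin for an 80% guarantee rate is taken as the smallest value that, added to every prediction, makes at least 80% of the predictions no lower than the experimental values. The data are hypothetical and do not reproduce the 0.24% reported above.

```python
import math


def safety_probability(y_exp, y_pred):
    """Share of samples with error = experimental - predicted < 0 (conservative)."""
    errors = [e - p for e, p in zip(y_exp, y_pred)]
    return sum(err < 0 for err in errors) / len(errors)


def required_margin(y_exp, y_pred, guarantee_rate=0.80):
    """Smallest additive margin m such that experimental <= predicted + m holds for at
    least `guarantee_rate` of the samples (empirical quantile of the errors)."""
    errors = sorted(e - p for e, p in zip(y_exp, y_pred))
    # Number of samples that must be covered; the tiny offset guards against
    # floating-point products such as 0.8 * 10 landing just above an integer
    k = math.ceil(guarantee_rate * len(errors) - 1e-9)
    return max(errors[k - 1], 0.0)


# Hypothetical experimental and predicted transverse reinforcement ratios (%)
y_exp = [1.0] * 10
y_pred = [1.5, 1.25, 1.0, 0.75, 0.5, 1.5, 1.25, 0.875, 1.5, 1.5]
print(safety_probability(y_exp, y_pred))  # 0.6
print(required_margin(y_exp, y_pred))     # 0.125
```

Raising `guarantee_rate` moves the quantile further into the right tail of the error distribution, which is why higher guarantee rates require larger additional values.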