1. Introduction

With the advancement of the Fourth Industrial Revolution, information and communication technology (ICT), and digital technology, information has emerged as a source of core value across modern industries, going beyond its role as a mere statistical and analytical tool [1,2,3,4]. As the importance of and interest in information have grown, the use of information technology in fields such as industry, society, and healthcare has surged, creating a need for new methods to effectively select, classify, extract, and utilize the rapidly growing volume of valuable information [5,6]. In response to these demands, digital technology serves as a crucial intermediary in the advancement of information technology: it has expanded and refined information collection methods, including big data, data mining, and machine learning, thereby enhancing the qualitative value of the collected information [7,8]. In modern architecture, digital technology has been used not only to facilitate the design process but also to expand design possibilities. Recently, it has served as an intermediary connecting information technology with architecture in areas such as urban planning, energy efficiency, facility planning, and simulation, offering new design methods based on information technology [9,10,11,12].

Digital technology also makes it possible to extract various types of information from images (image information), which are closely related to architecture. Images, with elements such as color and outline, resemble architecture, with its interrelationships between form and materials, in that both create sensory atmospheres. In addition, images such as sketches, drawings, and perspectives have long served as communication tools for conveying architects’ intentions and are recognized as elements that directly influence architectural design [13,14]. In particular, image feature information can be used to derive information unique to a specific image [15]. This suggests that different types of valuable information can be extracted depending on the image, and that this information can serve as a parameter for expanding the potential of form generation from various perspectives.

Thus, the combination of architectural and image information technologies mediated by digital technology can suggest the potential for expanding the architectural design domain based on the inherent possibilities of image information. Specifically, approaches that use image feature information to derive unique information from specific images or to extract various types of valuable information can offer new possibilities for form generation in architectural design and indicate the need for related research.

However, the use of information technology in architecture has predominantly been regarded as an ancillary element in the design process: either as a tool that supports the functional optimization of a design by extracting and analyzing information on quantifiable conditions and phenomena, or as a means of creating intuitive, aesthetic patterns through information visualization [16]. This suggests that the architectural use of information technology has remained at a basic stage, mainly serving analytical purposes, and that existing research has been limited to functional optimization without exploiting the potential of information as a primary element in design generation. Moreover, research on form generation methodologies that use the information itself is lacking.

This study aims to systematize the form generation process using image feature information. Specifically, it extracts and analyzes feature information from images using digital technology and examines how different types of feature information can complement one another. Based on this analysis, it identifies the types and characteristics of the valuable information derived from individual features or from their combinations. Furthermore, by analyzing the morphological characteristics of designs generated from this information, the study seeks to establish a systematic form generation process based on image feature information.

When applying this study to actual architectural design, various images related to the project (surrounding photographs, past historical records, landscape photographs, etc.) can be used, and the information extracted from these images can be used as important data for implementing design goals, such as indicating the context and characteristics of the project site and defining the nature of the design. It also suggests that not only image information but also various types of information such as sound, physical movement, and topography can be used as variables for form generation, and indicates the potential to serve as foundational data for architectural design methodologies using information technology. Automated information extraction and shape generation can reduce the time and mental effort required in the design decision stage, support designers’ decisions, and enable the generation of various alternatives, thereby expanding design possibilities. Future research is expected to analyze the characteristics and sensory atmospheres presented by information according to the types of valuable information and systematize the relationships between this information and the generated forms. This can lead to an automated process for generating alternatives based on causal relationships rather than random forms generated by arbitrary values.

2. Research Methodology

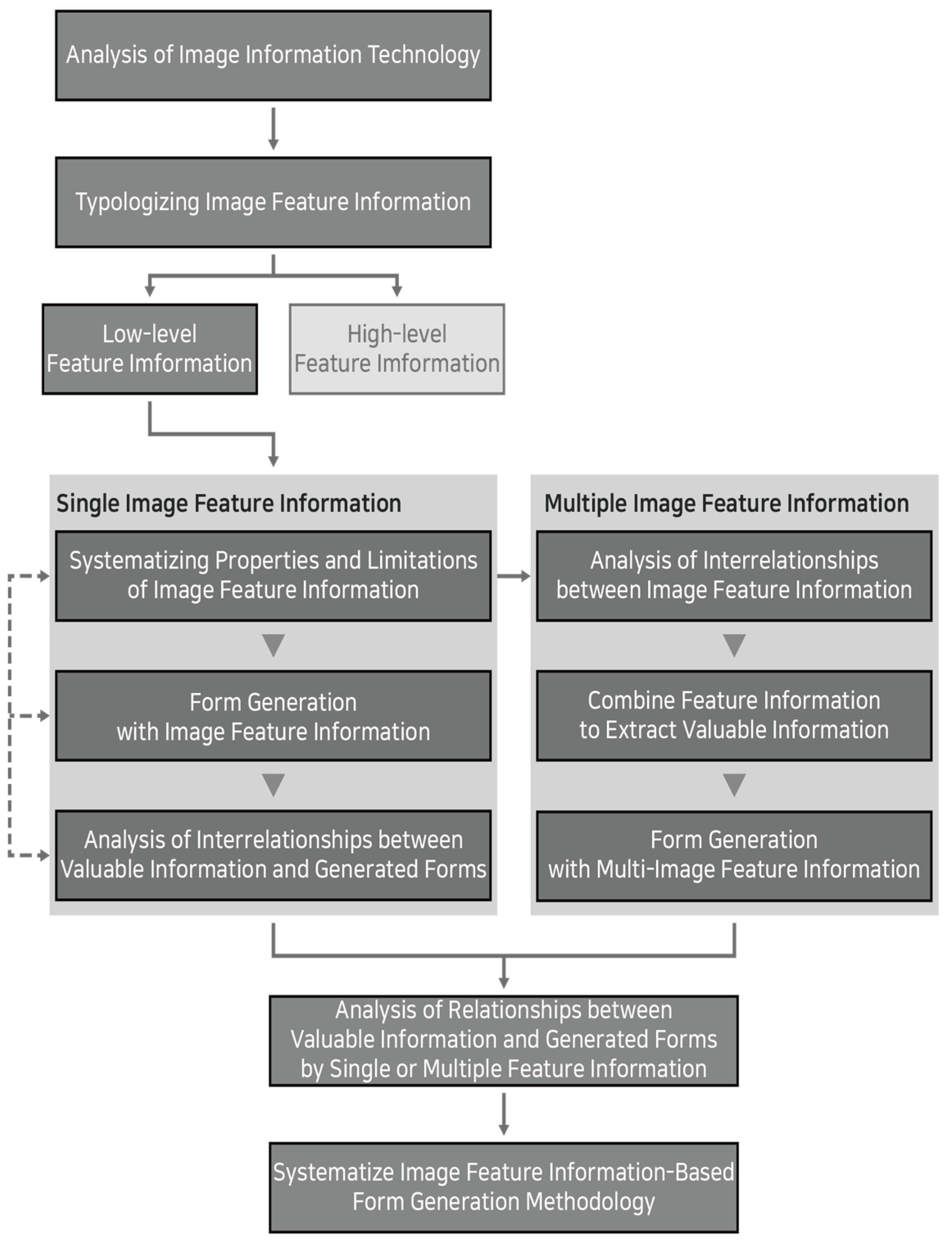

This paper is structured as follows: 1. Theoretical consideration of image information technology; 2. Analysis of the characteristics of image feature information by type; 3. Establishment of the form generation methodology; 4. Analysis of the design process; and 5. (

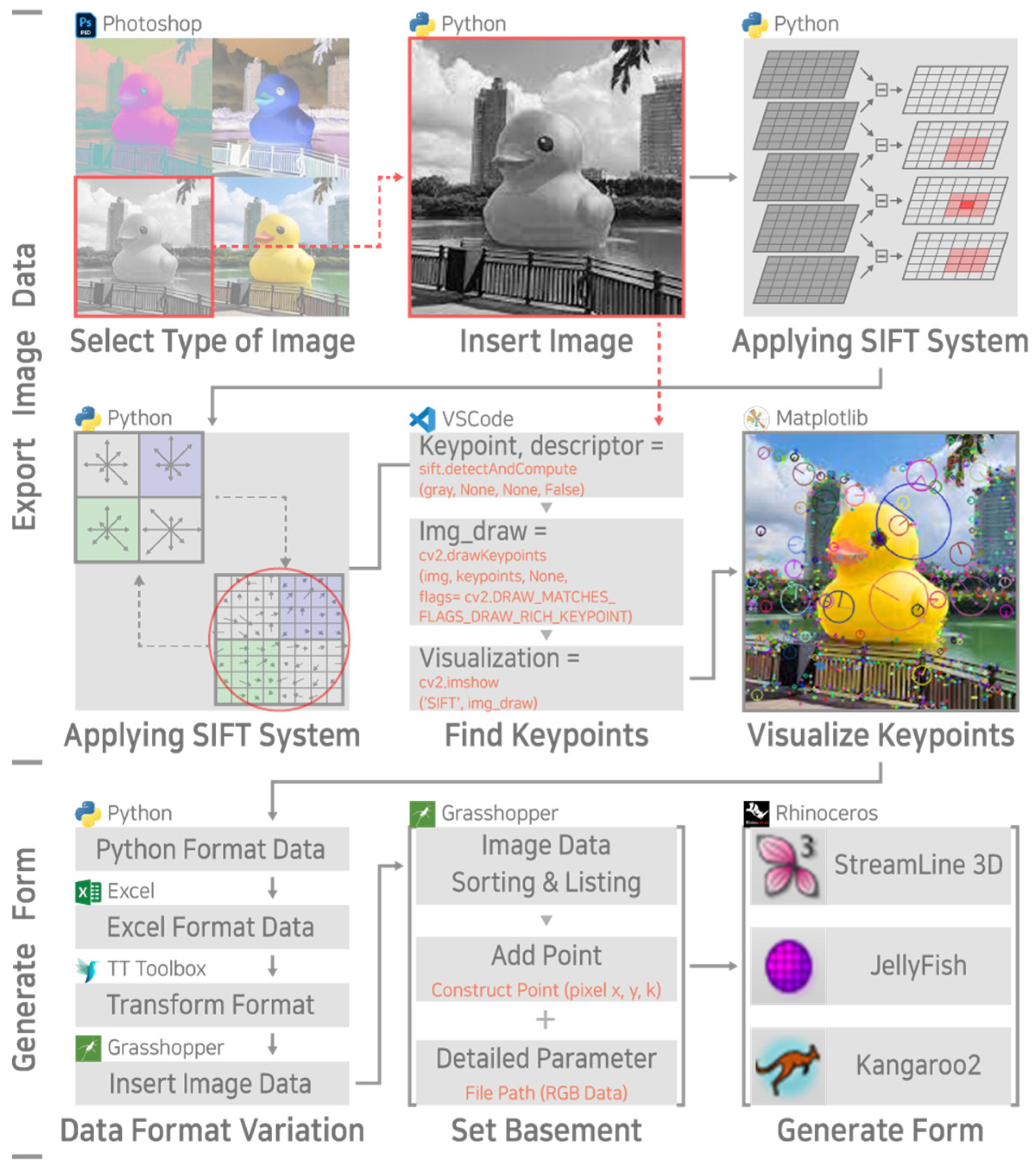

Figure 1). Analysis of the characteristics of forms generated based on single and multiple feature information. First, a theoretical consideration of image information technology, the foundational stage for systematizing the form generation process based on image feature information, was conducted. The characteristics of valuable information derived from the interaction between digital technology and image feature information were explored, and the principles utilized for information extraction were analyzed. Additionally, the distinctiveness between vector and raster-graphics-based images was analyzed, and a theoretical consideration of image feature information using raster graphics was performed. Then, in the analysis of the characteristics of image feature information by type, practically used image feature information was categorized by analyzing previous studies. Most of all, the characteristics of low-level features that could be derived from image data were analyzed as foundational data for image information through an intuitive information extraction method, excluding image transformations, effects, and filters. A form generation methodology suitable for the characteristics and utilization of low-level feature information by type was established. Through this, three-dimensional form results were derived. In the design process analysis, the characteristics of image feature information that emerged in the form generation process and the correlation with the generated forms were analyzed. Additionally, the morphological characteristics and design aspects of the generated designs were analyzed. In the analysis of the characteristics of forms generated based on single and multiple feature information, the interrelationships and characteristics of forms generated using single valuable information and those generated by combining multiple valuable information were analyzed. 
Through this series of processes, the architectural design and form generation methodology process based on the types of valuable information of image feature information was systematized and schematized.

This research used Visual Studio Code as the environment for applying libraries in various programming languages, and the OpenCV library to automate the extraction of meaningful information from images. Matplotlib was used to visualize the information, and TT Toolbox, a Grasshopper plug-in for exchanging data between Microsoft Excel and Rhinoceros, was used to translate the information into geometric form. Grasshopper was used to generate simplified sculptural forms based on the extracted information.

3. Image Information Technologies

3.1. Theoretical Consideration of Image Information

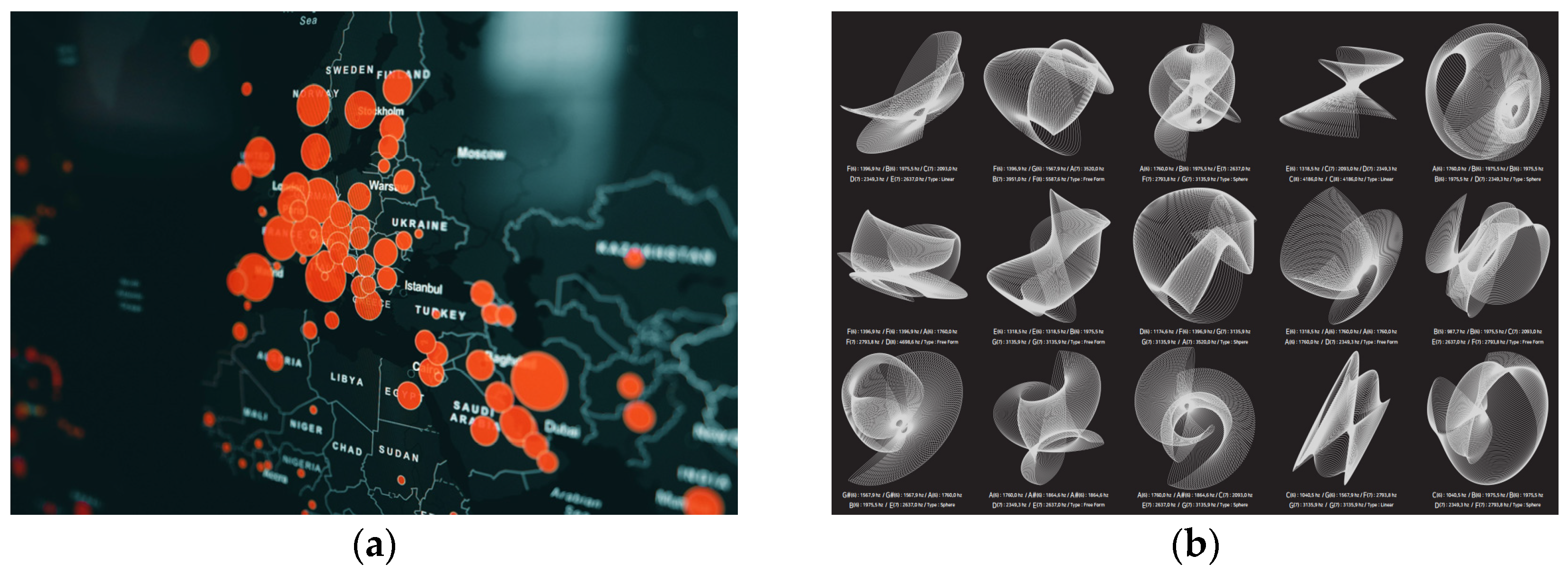

With the recent popularization of multimedia authoring tools and the widespread availability of digital tools such as image production and scanning technologies, image information can now be obtained easily. Among multimedia, images convey visual information much as text does; however, because diagrams, photographs, graphs, and similar images can often deliver information more effectively than text, images are increasingly used as a medium for efficient communication and the creation of new value (Figure 2) [17].

In architecture, images have been used as effective communication tools for visualizing data obtained from the analysis of specific phenomena or from functional optimization [18]. However, with the advancement of digital technologies such as AI and machine learning, images in modern architecture are now recognized not merely as ancillary elements for visualizing specific information but as carriers of information with inherent design potential [7,8].

Digital technologies such as AI and machine learning, combined with large image datasets, have enabled the classification of design types and styles of specific eras or architects. This has made it possible to automate the generation of architectural designs that reflect the styles of specific eras and architects [19]. It has also become possible to recognize sketches that abstractly express design concepts or rough forms and to transform them into three-dimensional shapes and spaces [20]. In this way, images in architecture have demonstrated their potential, based on their inherent information, to serve as intermediaries and as primary elements in design generation.

3.2. Characteristics of Extracted Information Based on Image Formats

The digitization of 2D images can be categorized into raster graphics and vector graphics [21]. Raster graphics, also called bitmaps, consist of clusters of pixels and the bit information contained within their sub-pixels [18]. Vector graphics are visualizations based on mathematical descriptions (e.g., Bézier curves) that define geometric shapes on the Cartesian plane through points, the relationships between points, start and end points, and lines [22]. Because vector-based images are not degraded or distorted when scaled, they are useful design tools; however, the information derivable from them is limited to mathematical equations, gradients, and point coordinates that describe the overall geometric form. By contrast, pixel-based images, composed of clusters of small grid cells, allow detailed image representation. Changes in pixel information under resolution changes, together with the relationships between pixels, can generate a virtually unlimited range of information, allowing various types of information to be derived (Table 1). This study therefore focuses on systematizing the characteristics and uses of the different types of information that can be extracted from pixel-based raster (bitmap) images for three-dimensional form generation, rather than from vector-based images, which provide complete information through geometric and mathematical principles.
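To make the distinction above concrete, the following Python sketch (an illustration, not part of the study's pipeline) evaluates a cubic Bézier curve from its four control points and averages a small raster into coarser pixels. The function names `cubic_bezier` and `downsample` are hypothetical. The curve can be sampled at any density without losing its exact endpoints, whereas block-averaging a checkerboard raster erases its pixel-level pattern, showing how raster information changes with resolution.

```python
import numpy as np


def cubic_bezier(p0, p1, p2, p3, n_samples):
    """Evaluate a cubic Bezier curve at n_samples evenly spaced t values in [0, 1]."""
    t = np.linspace(0.0, 1.0, n_samples)[:, None]  # column vector for broadcasting
    pts = np.array([p0, p1, p2, p3], dtype=float)
    # Bernstein basis polynomials of degree 3 weight the four control points
    return ((1 - t) ** 3 * pts[0]
            + 3 * (1 - t) ** 2 * t * pts[1]
            + 3 * (1 - t) * t ** 2 * pts[2]
            + t ** 3 * pts[3])


def downsample(raster, factor):
    """Average non-overlapping factor x factor blocks (H and W must be divisible by factor)."""
    h, w = raster.shape[:2]
    blocks = raster.reshape(h // factor, factor, w // factor, factor, *raster.shape[2:])
    return blocks.mean(axis=(1, 3))


curve = cubic_bezier((0, 0), (0, 1), (1, 1), (1, 0), 5)
board = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)  # 4 x 4 checkerboard
coarse = downsample(board, 2)  # every 2 x 2 block averages to 0.5
```

The vector description stays exact at any sampling density, while the coarse raster no longer records which pixels were black or white.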

4. Typification and Organization of Pixel-Based Image Feature Information

4.1. Classification of Feature Information Extractable from Pixel Images

The term “pixel” is a portmanteau of “picture” and “element” [23], and it refers to the smallest unit of information in an image [24]. Each pixel, defined by a grid position, contains only its own bit information, but a digital image formed by a cluster of pixels can generate various kinds of information from the interrelationships between its constituent pixels [25]. The features inherent in pixel clusters therefore allow images to be analyzed numerically and unique mathematical information to be extracted from each image.

Feature information of pixel images is used primarily in computer vision, including CBIR (content-based image retrieval), in both digital technology research and industry [15]. It is classified into low-level and high-level features. High-level image features rely on analysis methods that use machine learning algorithms such as CNNs, DNNs, and K-means clustering [26]. Low-level image features convert intuitive characteristics such as pixel color and clusters into quantified information using mathematical functions. Low-level features are further divided into global features, which describe the overall characteristics of an image, and local features, which describe key points at specific locations in the image [27].
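As a minimal illustration of the global/local distinction, the sketch below computes a global feature (a normalized per-channel histogram of the whole image) and a local feature (the mean and spread of intensities in a small window around one point). Both descriptors and the helper names are assumptions chosen for clarity, not the specific functions summarized in Table 3. Mirroring the image leaves the global histogram unchanged, while the local descriptor depends on where the key point lies.

```python
import numpy as np


def global_color_feature(image, bins=4):
    """Global feature: per-channel histogram of the whole image, normalized to sum to 1 per channel."""
    feats = []
    for c in range(image.shape[2]):
        hist, _ = np.histogram(image[:, :, c], bins=bins, range=(0, 256))
        feats.append(hist / hist.sum())
    return np.concatenate(feats)


def local_patch_feature(gray, x, y, radius=1):
    """Local feature: mean and standard deviation of intensities in a window centred on (x, y)."""
    patch = gray[max(y - radius, 0): y + radius + 1,
                 max(x - radius, 0): x + radius + 1].astype(float)
    return np.array([patch.mean(), patch.std()])


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(8, 8, 3)).astype(np.uint8)
same_global = np.allclose(global_color_feature(img), global_color_feature(img[:, ::-1]))

gray = np.zeros((5, 5))
gray[2, 2] = 90  # a single bright pixel
near = local_patch_feature(gray, 2, 2)  # window contains the bright pixel
far = local_patch_feature(gray, 0, 0)   # window does not
```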

Recently, image feature information has been used in various fields beyond computer vision, including art and healthcare. Analyzing previous research makes it possible to classify the detailed elements of image feature information and to use them as parameters in form generation. This previous research is summarized in Table 2.

By analyzing the trends and utilization patterns of image feature information in previous studies, including those in Table 2, two key points can be drawn. First, the types of functions primarily used to extract image feature information can be defined as presented in Table 3. High-level image feature information, derived through algorithms including machine learning, is categorized into supervised and unsupervised learning; with the advent of reinforcement learning and deep learning for information extraction, research using deep learning-based image information has been increasing rapidly. Low-level image feature information is categorized into global and local features. Global features are classified into color, shape, texture, statistical, and spatial features. Local features are subdivided according to the functions used for key point detection, with SIFT, SURF, FAST, and ORB being commonly used.

Second, while research on form generation methodologies or architectural applications using image feature information is lacking, architectural research using machine learning is diverse. In the construction sector in particular, high-level image features are actively used to detect site conditions and risks. Conversely, architectural research based on the functions used to extract low-level image feature information, or valuable information from images, remains at a basic stage, with no methodologies for form generation that use this information (Table 4). Against this backdrop, this study aims to systematize the potential applications of a form generation process based on information derived from low-level image features.

4.2. Theoretical Consideration of Low-Level Image Feature Information

This study focuses on the low-level image features most commonly used across fields, namely color, shape, texture, and locational features, and excludes spatial and statistical features because of their relatively low applicability in information and digital technology. These excluded features could nevertheless serve as ancillary elements that complement the limitations of the other image feature information, rather than as primary elements of form generation, and are left for future research.

Color features are essential for recognizing objects in images and simplify the extraction of inherent information [28]. Color, which vision perceives through reflected light, immediately affects human perception and offers various synesthetic experiences [35]. Because colors are digitized as numerical codes, their interrelationships and relative values are easier to distinguish than those of other information, which simplifies analysis and classification. Color features are therefore widely used in image information applications, as pixel colors are easy to specify and colors are easy to distinguish and to compare in terms of similarity and relative value (Figure 3).
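Color similarity is often measured as a distance between color vectors. The sketch below assumes plain Euclidean distance in RGB space, which is simple but not perceptually uniform (perceptual spaces such as CIELAB are often preferred for this purpose), and assigns each pixel to its nearest color in a small, hypothetical palette. The helper names are illustrative.

```python
import numpy as np


def color_distance(c1, c2):
    """Euclidean distance between two RGB colors (0-255 per channel)."""
    return float(np.linalg.norm(np.asarray(c1, float) - np.asarray(c2, float)))


def nearest_palette_index(pixels, palette):
    """Return, for each pixel (N x 3), the index of the closest palette color (K x 3)."""
    # Broadcast to an (N, K, 3) array of differences, then take the norm over the color axis
    diff = pixels[:, None, :].astype(float) - palette[None, :, :].astype(float)
    return np.argmin(np.linalg.norm(diff, axis=2), axis=1)


palette = np.array([[255, 0, 0], [0, 255, 0], [0, 0, 255]])  # red, green, blue
pixels = np.array([[250, 10, 10], [5, 240, 20], [30, 30, 200]])
labels = nearest_palette_index(pixels, palette)
```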

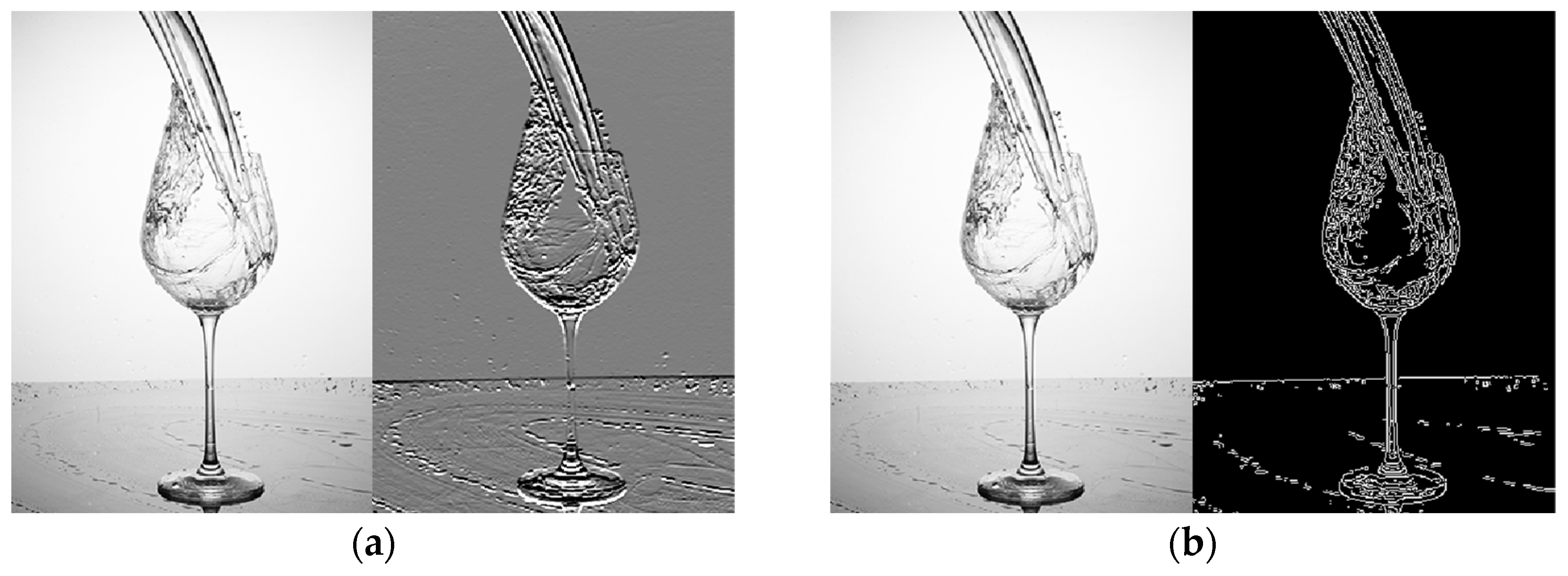

Shape features include intuitive characteristics of visually recognizable forms within an image, such as perimeter, outline, boundary, and diameter, and are obtained through techniques including edge detection [36]. Edge detection identifies points where the relationship between pixels changes sharply, that is, points where discontinuities appear in the numerical field of pixel values [37]. Previously, such irregular changes were analyzed using image scale changes, blurring effects, or filters. With the advancement of digital technology, various algorithmic methods for extracting and recognizing image information, including machine learning, have emerged (Figure 4).
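The idea of locating points of sharp change can be sketched with the Sobel operator, a classic gradient-based edge detector. The NumPy code below is an illustrative stand-in rather than the study's procedure (which used OpenCV functions such as Canny); it computes horizontal and vertical derivatives with 3 × 3 Sobel kernels and returns their magnitude, which peaks where pixel values change abruptly. The function name is hypothetical.

```python
import numpy as np


def sobel_magnitude(gray):
    """Gradient magnitude from 3x3 Sobel kernels; borders are handled by edge replication."""
    g = np.pad(gray.astype(float), 1, mode="edge")
    # Horizontal derivative: right column minus left column, weighted 1-2-1 vertically
    gx = ((g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2]))
    # Vertical derivative: bottom row minus top row, weighted 1-2-1 horizontally
    gy = ((g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:]))
    return np.hypot(gx, gy)


step = np.zeros((6, 6))
step[:, 3:] = 255  # dark left half, bright right half
mag = sobel_magnitude(step)
edges = mag > 0.5 * mag.max()  # a simple fixed threshold marks the edge columns
```

On this test image the magnitude is non-zero only in the two columns that straddle the boundary, which is exactly where the discontinuity lies.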

Texture features refer to the physical feel of a surface perceived through touch, as well as the visual recognition of observed forms, components, and inherent elements [38]. Historically, texture in images has been described as the brightness changes between pixels [39] or as the properties and information represented by the arrangement of grayscale levels in specific pixel areas [40]. Modern techniques can quantify macroscopic roughness and pixel contrast and include histogram methods that visualize the distribution of color elements. Texture features thus analyze and derive information from the fine structure of pixel clusters perceived in an image, based on the arrangement of color and pixel intensity values (Figure 5).
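Two simple texture measures make the role of pixel arrangement visible. In the sketch below, which uses illustrative measures assumed for this example rather than those used in the study, RMS contrast depends only on the distribution of gray levels, while the mean absolute difference between neighboring pixels also depends on how those levels are arranged. An image split into black and white halves and a black-and-white checkerboard therefore share the same contrast but differ sharply in roughness.

```python
import numpy as np


def rms_contrast(gray):
    """RMS contrast: standard deviation of intensities rescaled to [0, 1]."""
    return float((gray.astype(float) / 255.0).std())


def neighbor_roughness(gray):
    """Mean absolute intensity difference between horizontally and vertically adjacent pixels."""
    g = gray.astype(float)
    dx = np.abs(np.diff(g, axis=1))
    dy = np.abs(np.diff(g, axis=0))
    return float((dx.sum() + dy.sum()) / (dx.size + dy.size))


checker = (np.indices((4, 4)).sum(axis=0) % 2) * 255  # alternating black and white pixels
split = np.zeros((4, 4))
split[:, 2:] = 255                                     # one black half, one white half
```

Both images have identical gray-level histograms, so any histogram-only measure treats them as the same; a neighbor-based measure separates them.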

Locational or local features involve detecting features within an image using digital filters based on mathematical algorithms that derive a large amount of inherent information from the positions of key points and pixels [41]. They are primarily used to analyze similarities and differences between images based on key points. As algorithms have advanced, filters for various purposes have been developed; scale-invariant feature transform (SIFT) and speeded-up robust features (SURF) are commonly used and detect as key points the extreme values that persist across changes in scale or degrees of image blur (Figure 6). Various methods have also been developed to speed up these computations, with FAST being a representative example.
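The extreme-value idea behind SIFT can be sketched as a difference of Gaussians (DoG): the image is blurred at two nearby scales, the results are subtracted, and points whose DoG value is a local maximum or minimum are kept. The NumPy sketch below is deliberately simplified and assumes a single DoG layer compared against its 8 spatial neighbors, whereas SIFT proper builds several octaves and compares each point with 26 neighbors across adjacent scales before refining and filtering the candidates. The function names and the default values of `sigma`, `scale_ratio`, and `threshold` are assumptions.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(gray, sigma):
    """Separable Gaussian blur with edge padding; output has the same shape as the input."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    g = np.pad(gray.astype(float), r, mode="edge")
    # Convolve every row, then every column; 'valid' mode trims the padding again
    g = np.apply_along_axis(lambda row: np.convolve(row, k, mode="valid"), 1, g)
    g = np.apply_along_axis(lambda col: np.convolve(col, k, mode="valid"), 0, g)
    return g


def dog_keypoints(gray, sigma=1.0, scale_ratio=1.6, threshold=1.0):
    """Simplified single-layer DoG detector returning (x, y) of local extrema above |threshold|."""
    dog = gaussian_blur(gray, sigma) - gaussian_blur(gray, scale_ratio * sigma)
    h, w = dog.shape
    points = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = dog[y, x]
            window = dog[y - 1:y + 2, x - 1:x + 2]
            if abs(v) > threshold and (v == window.max() or v == window.min()):
                points.append((x, y))
    return points


image = np.zeros((21, 21))
image[10, 10] = 255  # a single bright spot
keypoints = dog_keypoints(image)
```

A bright spot survives the lighter blur better than the heavier one, so the DoG response is strongest at the spot itself, which is then reported as a key point.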

5. Form Generation Process with Pixel-Based Image Feature Information

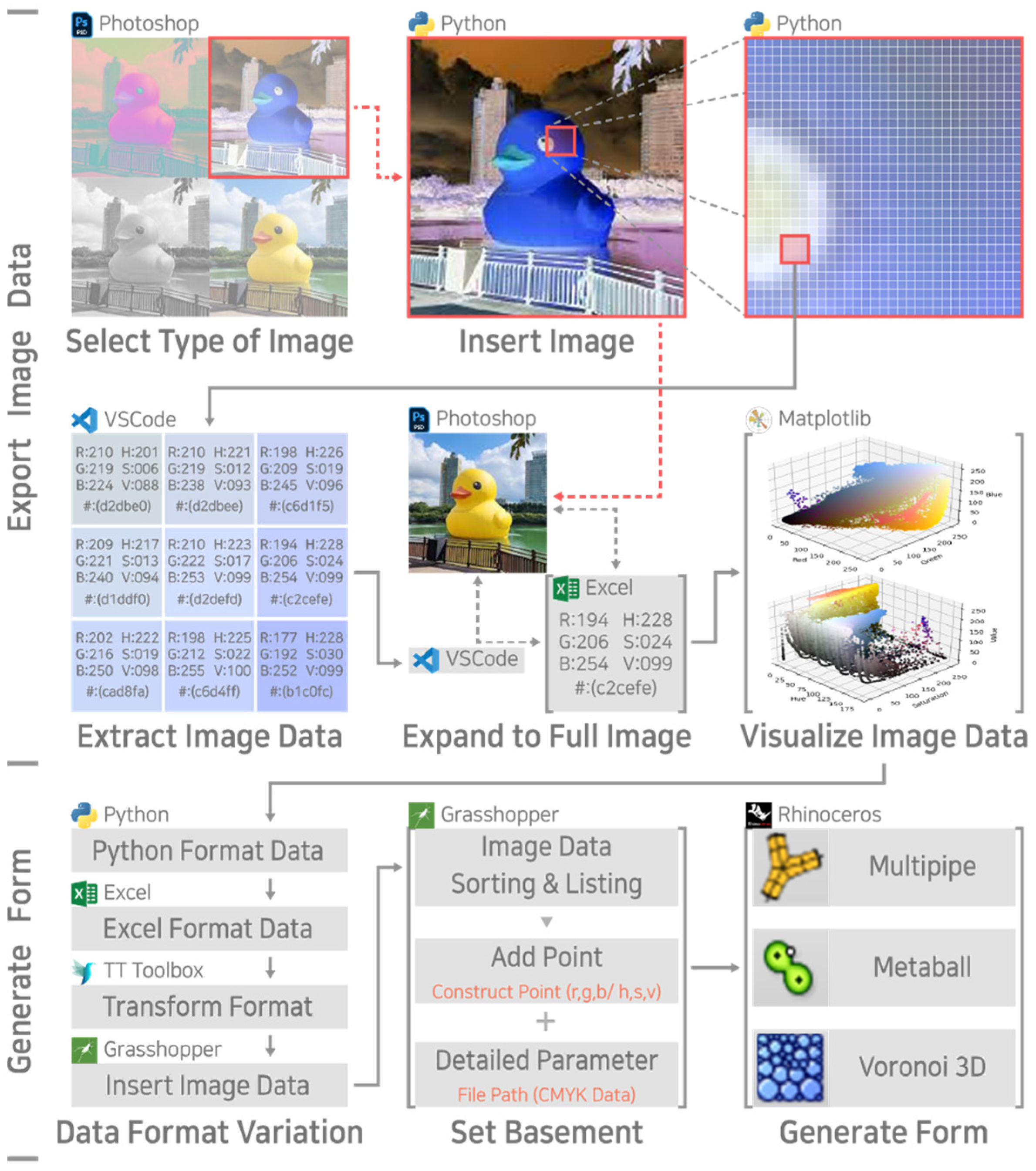

In this study, image data were resized and simplified to 300 × 300 pixel bitmap images, and a digital language system based on Python (Python 3.7.9) was used as a tool for generating image information. Image data were applied to Python using the ‘imread’ function built into OpenCV. OpenCV was adopted because it can accommodate not only image information but also 3D images and motion-capture information and is faster to adapt to the new technologies and more efficient in utilizing information than similar libraries. Rhinoceros and Grasshopper were used as digital tools for form generation based on the generated image information. Additional Python functions and Grasshopper plugins were used as necessary, based on reference materials, to meet the requirements of the form generation process. The form generation process, which utilizes image features, comprises image transformation, data extraction, and form generation (

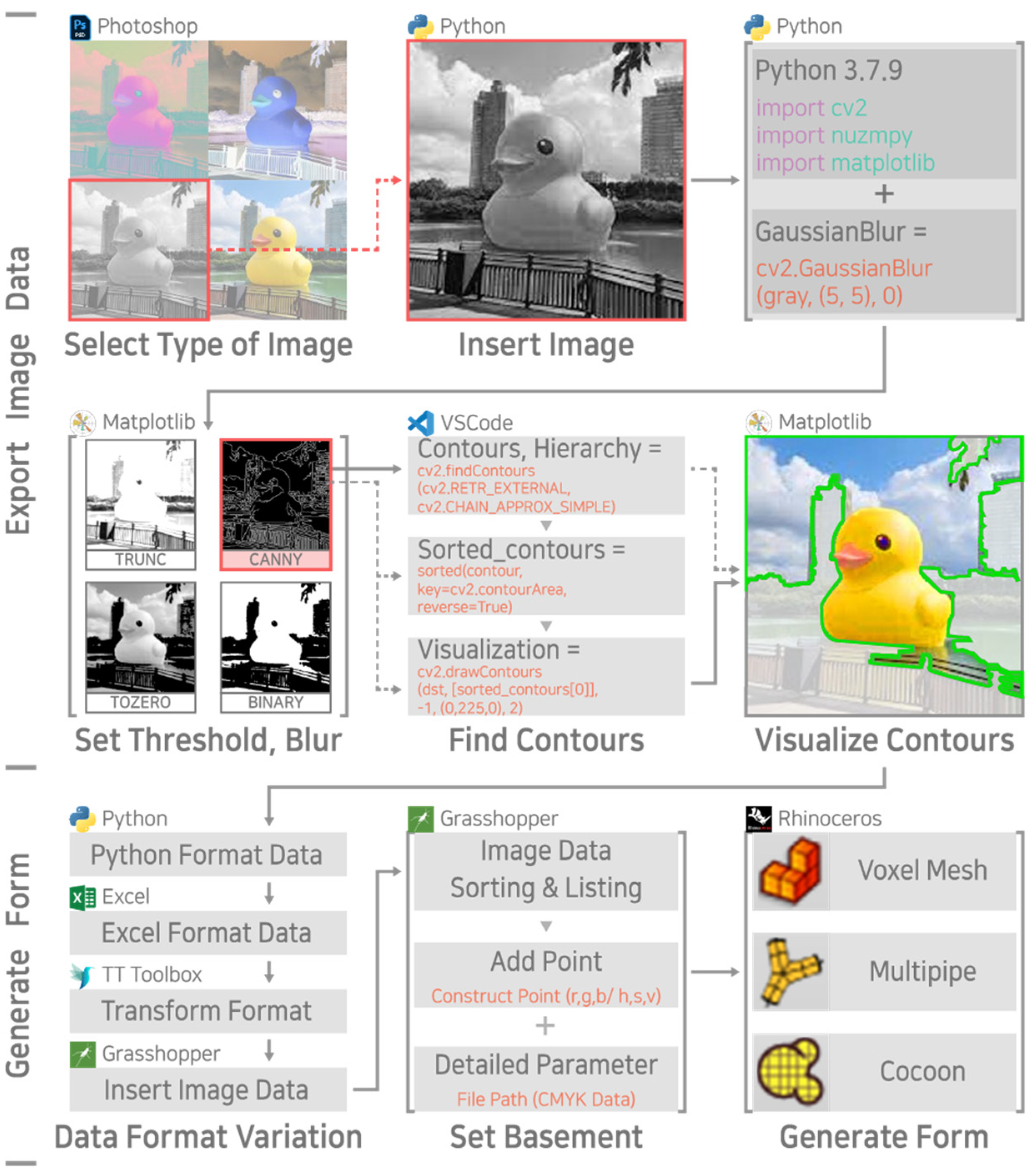

Figure 7). Through form generation experiments, the characteristics of each image feature are analyzed, and the relationships, limitations, and interrelationships between the generated forms and the image features are examined.
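The resizing step can be sketched as follows. In OpenCV this would typically be `cv2.resize(image, (300, 300))`, whose size argument is given as (width, height) and whose default interpolation is bilinear; the NumPy function below is a hypothetical stand-in that uses nearest-neighbor sampling so that it runs without OpenCV.

```python
import numpy as np


def resize_nearest(image, size=(300, 300)):
    """Nearest-neighbor resize to size=(width, height), the same order OpenCV uses for dsize."""
    out_w, out_h = size
    in_h, in_w = image.shape[:2]
    # Map each output pixel centre back to the nearest source row/column index
    rows = np.minimum(((np.arange(out_h) + 0.5) * in_h / out_h).astype(int), in_h - 1)
    cols = np.minimum(((np.arange(out_w) + 0.5) * in_w / out_w).astype(int), in_w - 1)
    return image[rows[:, None], cols[None, :]]


photo = np.zeros((600, 450, 3), dtype=np.uint8)  # a non-square 3-channel image
resized = resize_nearest(photo)                   # now 300 x 300 x 3
small = np.array([[1, 2], [3, 4]])
enlarged = resize_nearest(small, (4, 4))          # each source pixel becomes a 2 x 2 block
```

Forcing a non-square photograph into 300 × 300 pixels, as in this usage, changes its aspect ratio, which is worth keeping in mind when pixel positions are later read as coordinates.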

5.1. Characteristics and Form Generation Using Color Feature Information

Color features are fundamental elements in extracting and generating image information. Quantifying the detailed color combination inherent in each pixel produces a cluster of information, which means that color features serve as a medium for converting visible properties into a mathematical language. Each pixel yields ten types of color information: the additive primaries of light (RGB), hue, saturation, and value (HSV), and the subtractive color components (CMYK). Color features simplify information extraction and provide the database required for form generation, allowing visual information to be quantified and used as parameters for form generation.

Various digital tools can be used to derive color feature information; this study used OpenCV (4.9.0.8) functions in Python. OpenCV loads images in BGR channel order, so they are converted to the RGB system using the ‘cvtColor’ function. The color feature information, now expressed numerically, can then be separated into channels using split, and NumPy and Matplotlib functions are used to visualize it. The primary information derivable from color features includes RGB, HSV, and CMYK. RGB and HSV, unlike the four-component CMYK, can be visualized in three-dimensional space with one component per axis. In OpenCV’s 8-bit representation, each RGB component ranges from 0 to 255, as do S and V, while H ranges from 0 to 179 with the standard conversion (0 to 255 with the _FULL variant). Color feature information can serve as the basic data (database) for other feature information (Figure 8).
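The count of ten color values per pixel can be checked with the Python standard library. The sketch below (a hypothetical helper, not the study's code) takes one pixel in OpenCV's BGR order, computes HSV with `colorsys.rgb_to_hsv` and CMYK with the common conversion K = 1 - max(R, G, B), C = (1 - R - K)/(1 - K), and likewise for M and Y, all on a 0 to 1 scale. OpenCV's own 8-bit HSV uses different ranges, so these values would need rescaling to match it.

```python
import colorsys


def pixel_color_features(b, g, r):
    """Return the ten color values of one 8-bit BGR pixel, each scaled to [0, 1]."""
    rf, gf, bf = r / 255.0, g / 255.0, b / 255.0
    h, s, v = colorsys.rgb_to_hsv(rf, gf, bf)
    k = 1.0 - max(rf, gf, bf)
    if k == 1.0:  # pure black: C, M, Y are undefined, so set them to 0 by convention
        c = m = y = 0.0
    else:
        c = (1.0 - rf - k) / (1.0 - k)
        m = (1.0 - gf - k) / (1.0 - k)
        y = (1.0 - bf - k) / (1.0 - k)
    return {"R": rf, "G": gf, "B": bf, "H": h, "S": s, "V": v,
            "C": c, "M": m, "Y": y, "K": k}


red = pixel_color_features(0, 0, 255)  # pure red, given in BGR order
values_per_image = 300 * 300 * len(red)
```

For a 300 × 300 image this gives 90,000 pixels × 10 values = 900,000 values, the figure used in the following discussion.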

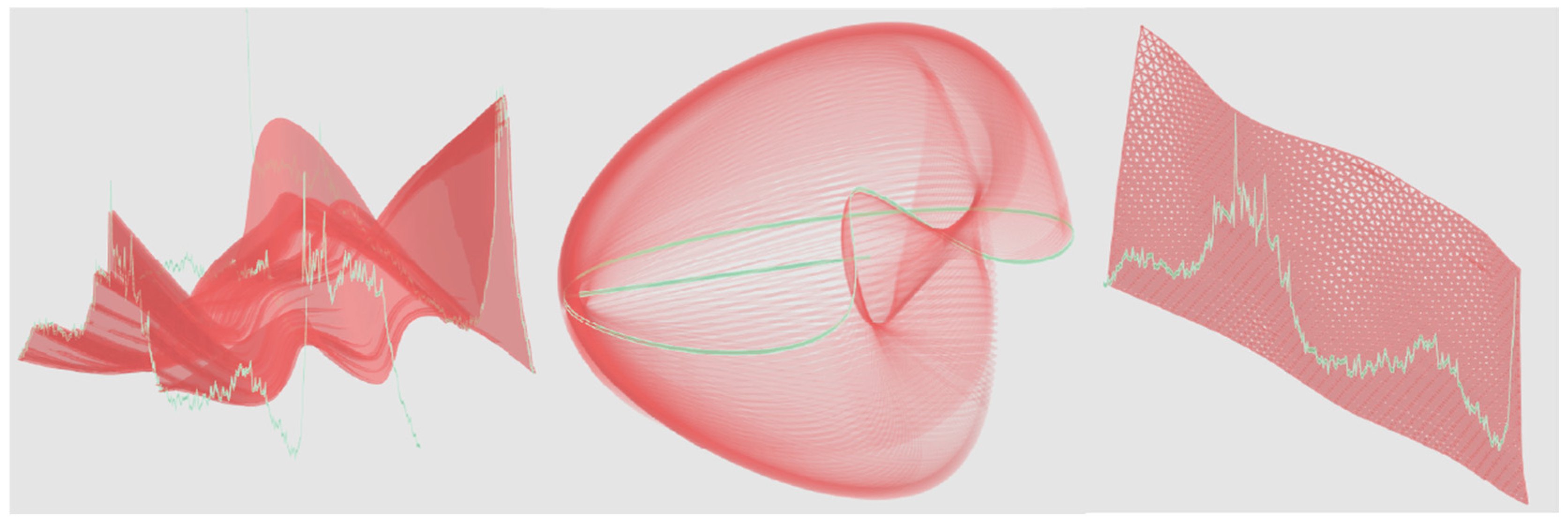

To apply information derived from color features to the form generation process, the information generated in Python is exported as an Excel file and imported into Grasshopper in Rhinoceros. The color feature information of an image shows a specific directionality; because it is derived from a single image, the values of neighboring pixels are interrelated rather than independent. Transforming this information into spatial coordinates allows the creation of organic, directional forms through these interrelationships. However, color features yield ten values per pixel, so an image of 300 × 300 pixels (90,000 pixels) yields up to 900,000 values. While using color feature information as parameters in form generation can expand the potential for form generation, this large amount of information increases the complexity and time of digital computation. An efficient classification system for the extracted information is therefore required (Figure 9).
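One possible way to turn color information into coordinates while keeping the data volume manageable is sketched below. It assumes that each pixel's (R, G, B) triple is used directly as an (x, y, z) point, that pixels are sampled on a regular stride, and that duplicate colors are merged; it writes CSV text as a stand-in for the Excel file used in the study. The stride, the deduplication, and the helper names are illustrative choices for the classification step called for above, not the authors' method.

```python
import csv
import io

import numpy as np


def color_points(rgb_image, step=1):
    """Use each sampled pixel's (R, G, B) as an (x, y, z) point; identical colors are merged."""
    sampled = rgb_image[::step, ::step].reshape(-1, 3)
    return np.unique(sampled, axis=0)  # rows come back sorted lexicographically


def to_csv(points):
    """Serialize points as 'x,y,z' rows, a plain-text stand-in for a spreadsheet export."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["x", "y", "z"])
    writer.writerows(points.tolist())
    return buf.getvalue()


img = np.zeros((300, 300, 3), dtype=np.uint8)
img[:150] = [255, 128, 0]           # top half orange, bottom half black
pts = color_points(img, step=10)    # 900 sampled pixels collapse to 2 distinct colors
text = to_csv(pts)
```

The stride trades spatial detail for speed, while merging duplicates removes redundant points that would otherwise overlap in the model.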

5.2. Characteristics and Form Generation Using Shape Feature Information

Shape features are used to derive the outlines of objects in an image, allowing the objects to be classified. Deriving outlines requires identifying points where pixel characteristics, including the contrast between pixels, change clearly. This amounts to classifying areas within an image based on pixel information, an essential step for reducing computation time when numerous pixels and their inherent information are processed digitally. In form generation, a large amount of information makes forms difficult to generate and define, and shape features serve as support tools for classifying and extracting specific information in a reasoned way.

To obtain shape feature information, the Python-based OpenCV (4.9.0.8) functions used in the color feature information generation process were applied. The image is inserted through imread, and a preprocessing step is required to extract objects within the image. Since shape feature information is extracted using pixel values based on grayscale images, a color system conversion process through cvtColor is required, and adjustments of contrast values between objects through Gaussian Blur or bitwise_not are needed. Based on the transformed image, functions such as digital language-based Canny Edge, Binary, Binary_inv, Trunc, Tozero, and Tozero_inv thresholds are applied, and finally, contours with a clear separation between objects within the image are extracted through findContours. The areas distinguished by contours form clusters of pixels. This study used the Sorted function to arrange information clusters by size to classify and select information belonging to desired objects. The generated information can be visualized using NumPy and Matplotlib functions (

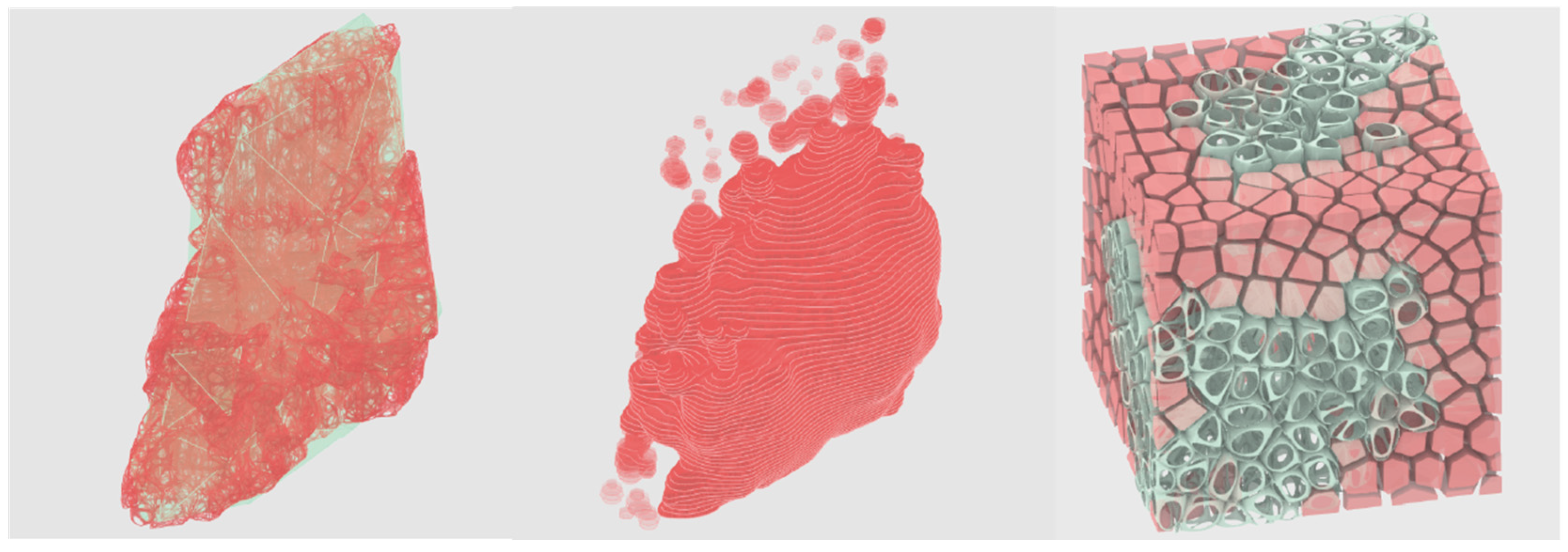

Figure 10).
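For reference, the five fixed-level threshold types named above can be restated in NumPy, following OpenCV's documented definitions (for example, Trunc keeps values at or below the threshold and clips the rest to it). The sketch also sorts contours from largest to smallest by polygon area using the shoelace formula, analogous to `sorted(contours, key=cv2.contourArea, reverse=True)` in OpenCV. The function names are hypothetical, and the sketch operates on plain arrays rather than OpenCV objects.

```python
import numpy as np


def apply_threshold(gray, thresh, maxval=255, mode="binary"):
    """NumPy restatement of OpenCV's five fixed-level threshold types (test: src > thresh)."""
    g = gray.astype(np.int32)
    above = g > thresh
    if mode == "binary":
        out = np.where(above, maxval, 0)
    elif mode == "binary_inv":
        out = np.where(above, 0, maxval)
    elif mode == "trunc":
        out = np.where(above, thresh, g)
    elif mode == "tozero":
        out = np.where(above, g, 0)
    elif mode == "tozero_inv":
        out = np.where(above, 0, g)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return out.astype(np.uint8)


def polygon_area(points):
    """Shoelace formula for the area of a closed polygon given as (N, 2) (x, y) vertices."""
    x, y = np.asarray(points, float).T
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def sort_by_area(contours):
    """Order contours from largest to smallest enclosed area."""
    return sorted(contours, key=polygon_area, reverse=True)


gray = np.array([[10, 100, 200]])
square = [[0, 0], [4, 0], [4, 4], [0, 4]]   # area 16
triangle = [[0, 0], [2, 0], [0, 2]]         # area 2
ordered = sort_by_area([triangle, square])
```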

To apply the Python-based information derived from shape features to the form generation process, it is exported as a Microsoft Excel file and imported into Rhinoceros and Grasshopper. The information clusters derived from shape features are relatively dense or biased toward specific points, because the information is derived from specific objects within the image. The values of the pixels that make up parts of an object, rather than the entire image, are therefore highly similar to one another. Forms generated from shape feature-based information are dense around specific parts or several areas. With the advancement of digital technology, new functions for generating shape feature information have been developed and refined, enabling efficient information management by removing unnecessary information and delimiting specific areas more finely among the numerous pieces of information in an image. However, clearly delimiting areas requires applying various functions and finding the optimal functions for each image. The preprocessing and the repetitive tasks needed to find optimal areas for specific purposes impose manual labor on what is otherwise a digital process. This limitation is being addressed through machine learning and similar technologies (Figure 11).

5.3. Characteristics and Form Generation Using Texture Feature Information

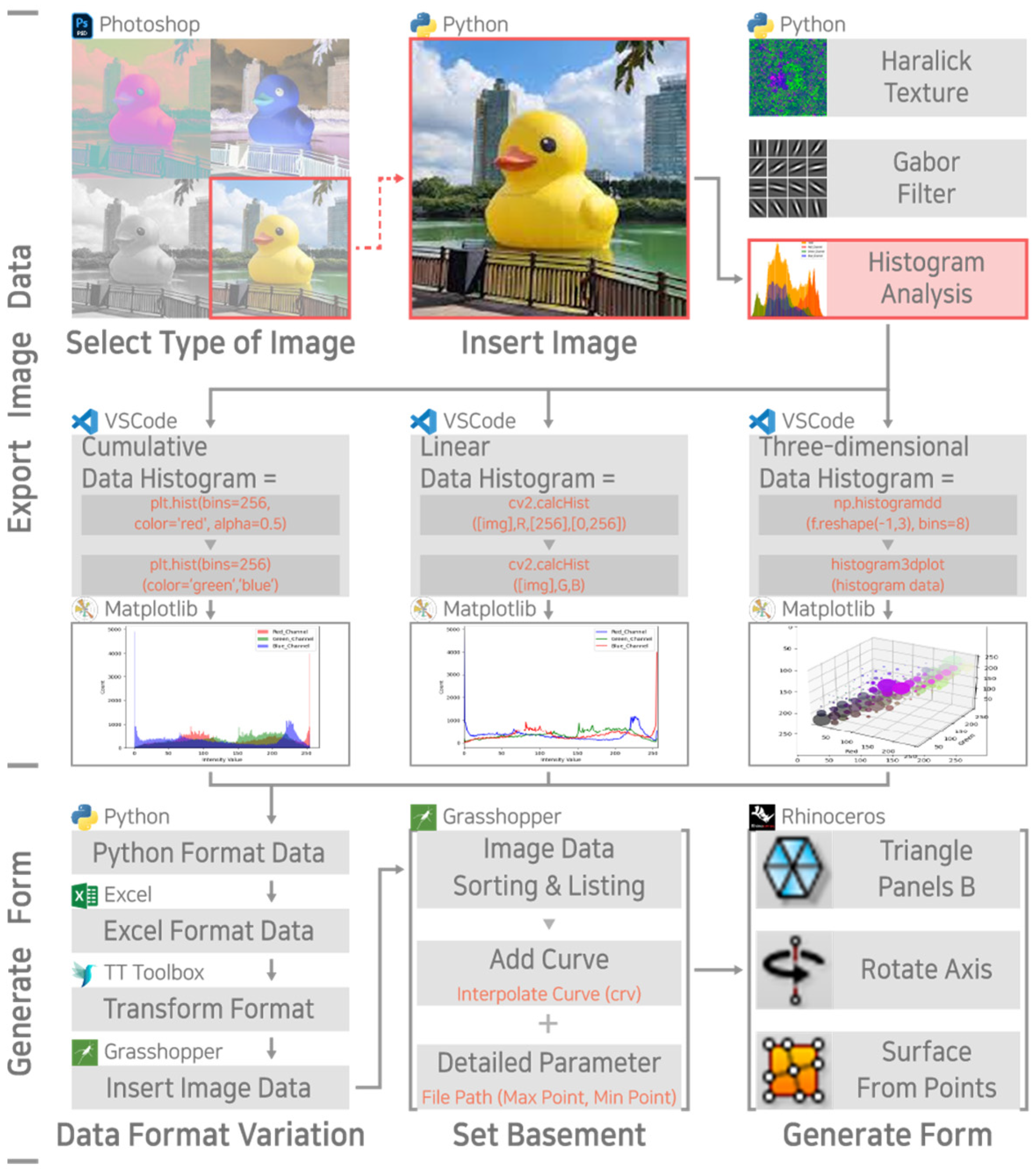

Texture features provide quantifiable information about the distribution of colors (through histogram methods) or about the roughness of an image, including the contrast between pixel clusters, based on the complete pixel data of a bitmap image. Histograms in particular analyze and visualize the distribution of colors, thereby deriving the color composition of the image. Because color combinations can create specific atmospheres, they allow the overall sensory atmosphere or tone of the target image to be perceived. Functions such as Sort, which rearrange the color distribution, enable new variations in image composition and derive new information clusters from the same image. Texture features therefore have the potential to be used not only as variables in the form generation process but also as tools for defining form types.

Haralick and Gabor texture features are typically used together with machine learning classifiers, so this study used histograms, which suit low-level feature information. OpenCV functions were applied to derive histogram information from texture features, and a function (histogramplot) was defined using NumPy’s arange, meshgrid, array, and vstack to generate histograms of the image. With this function, 2D and 3D histograms can be created, the distribution and contrast of colors derived, and the results visualized with Matplotlib (Figure 12).
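The study's histogramplot function is not reproduced in the text, so the sketch below is a hypothetical reconstruction that uses the NumPy functions named above: `arange` for bin edges, `meshgrid` for the grid of bar positions, and `vstack` to assemble (x, y, height) rows for a 3D bar chart of two channels. It additionally uses `bincount` and `histogram2d` for the counting, and it assumes 8-bit channels and a bin count that divides 256.

```python
import numpy as np


def histogram_plot_data(channel_a, channel_b, bins=8):
    """Hypothetical histogramplot-style helper.

    Returns the 256-bin histogram of channel_a and an (bins*bins, 3) array of
    (a_bin_start, b_bin_start, count) rows describing a 2D histogram as 3D bars.
    """
    step = 256 // bins
    edges = np.arange(0, 256 + step, step)                    # e.g. 0, 32, ..., 256 for bins=8
    hist_1d = np.bincount(channel_a.ravel(), minlength=256)   # frequency of each 8-bit value
    hist_2d, _, _ = np.histogram2d(channel_a.ravel(), channel_b.ravel(), bins=[edges, edges])
    # indexing="ij" keeps xpos[i, j] and ypos[i, j] aligned with hist_2d[i, j]
    xpos, ypos = np.meshgrid(edges[:-1], edges[:-1], indexing="ij")
    bars = np.vstack([xpos.ravel(), ypos.ravel(), hist_2d.ravel()]).T
    return hist_1d, bars


a = np.full((10, 10), 10, dtype=np.uint8)
b = np.full((10, 10), 200, dtype=np.uint8)
hist_1d, bars = histogram_plot_data(a, b)
```

The resulting rows could be passed to a 3D bar plot or exported as text for Grasshopper; both uses are assumptions about how the helper would be consumed.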

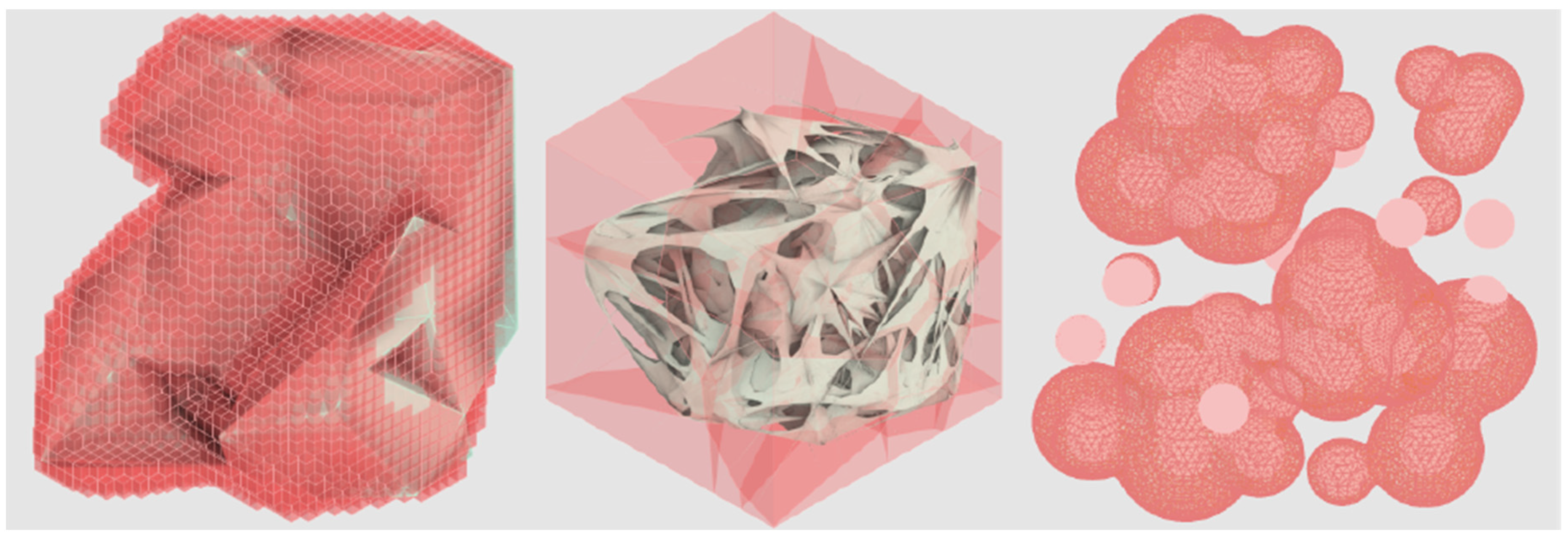

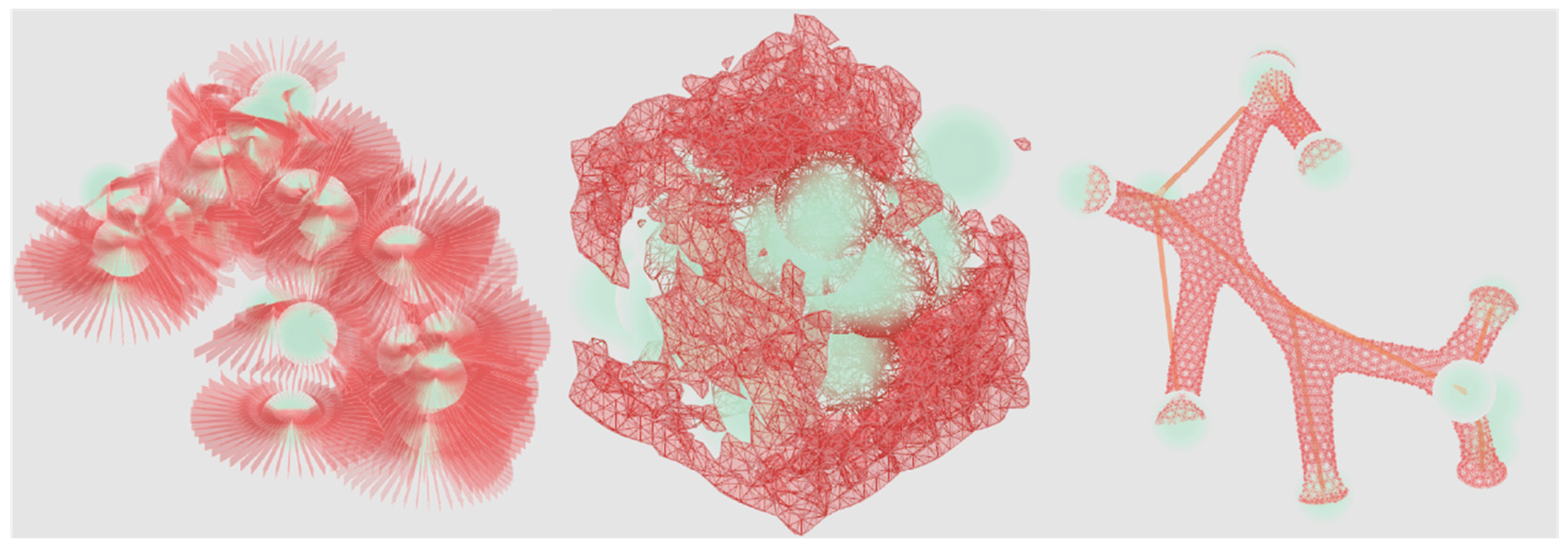

Information derived from texture features takes the form of graphs but is processed as text so that it can be applied in Rhinoceros and Grasshopper. Whereas Haralick and Gabor textures produce clusters of information classified by pixel value according to grayscale (K) and individual colors (R, G, B, etc.), the histograms used in this study group information by the frequency of each pixel value, so linear information can be derived. Forms generated from texture feature information convert pixel values into coordinates, creating forms from clusters of points with curved and linear elements. Interest in texture features is growing owing to their application in image similarity evaluation, medical data analysis, and image analysis, and advancements in digital technology continue to refine these applications, enabling the clear derivation of contrast and color information between pixels. Texture, as an element that evokes the atmosphere or sense of an image, offers various potential applications, but the lack of tools for quantitative analysis has made it a subjective concept. Advancements in modern technology, however, have enabled the quantification of such abstract areas using tools such as EEG, expanding the potential applications of texture feature information (Figure 13).

5.4. Characteristics and Form Generation Using Locational and Local Feature Information

Locational features are used to analyze similarities and differences between images, and in doing so they extract clusters of pixels made up of the unique key points of the target image. The algorithms and functions differ depending on the extraction method, but all of them search for the positions and inherent information of characteristic pixels that are little affected by overall image changes. Locational features are therefore the pixel information of points that represent the characteristics of the target image, and they can be used in form generation to produce unique clusters of key points that change with the target image.

Various functions can be used to derive locational feature information; this study used SIFT, which detects key points in a scale space built with Gaussian filters of increasing scale (sigma) and which preceded faster detectors and descriptors such as SURF, FAST, and ORB. Like other traditional feature extraction methods, SIFT is applied through Python-based OpenCV (4.9.0.8). Because SIFT operates on single-channel pixel values, the BGR image is first converted to grayscale using cvtColor. SIFT is built into OpenCV, and an extractor can be created directly with cv2.SIFT_create(). The number of key points to retain can be passed as its first argument (nfeatures); when it is left empty, as here, all detected key points are kept. sift.detectAndCompute then uses the created extractor to perform key point detection and descriptor computation in a single call. The detected key points are drawn with cv2.drawKeypoints and rendered as images with Matplotlib (Figure 14).

Applying locational feature information to the form generation process requires the exact coordinates of the key points. To obtain precise pixel coordinates, cv2.KeyPoint_convert is additionally used; the resulting key point coordinates are converted into numerical data and applied in Rhinoceros and Grasshopper. Because locational features capture the unique properties of an image, the information they yield varies unpredictably from one image to another. Consequently, image information based on locational features lacks the clear regularity or system found in other types of feature information. When key points are used as coordinates for form generation, the resulting forms are therefore unpredictable and random, and an efficient method is needed to handle the relatively large amount of information, which includes both the location and the color of pixels. Although the random forms generated from locational feature information leave considerable room for the designer's subjective judgment, they can only be produced from the given input image, which establishes a clear causal relationship between the initial information and the resulting forms (Figure 15).
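The hand-off from pixel coordinates to the modeling environment described above could look roughly like the sketch below. It assumes the input is the (N, 2) float array returned by `cv2.KeyPoint_convert(keypoints)`. The helper names `keypoints_to_model_points` and `to_csv`, the Y-axis flip, the scale factor, and the CSV format are all illustrative assumptions, not details given in the paper.

```python
import csv
import io

import numpy as np


def keypoints_to_model_points(points_xy, image_height, scale=1.0, z=0.0):
    """Map (N, 2) pixel coordinates to (N, 3) model-space points.

    points_xy is assumed to be the array returned by
    cv2.KeyPoint_convert(keypoints). Image rows grow downwards while the
    model's Y axis is assumed to point up, so Y is mirrored about the height.
    """
    pts = np.asarray(points_xy, dtype=float).reshape(-1, 2)
    x = pts[:, 0] * scale
    y = (image_height - pts[:, 1]) * scale   # flip the vertical axis
    zs = np.full(len(pts), z)
    return np.column_stack([x, y, zs])


def to_csv(points_xyz):
    """Serialise points as 'x,y,z' lines (a hypothetical exchange format)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for x, y, z in points_xyz:
        writer.writerow([f"{x:.3f}", f"{y:.3f}", f"{z:.3f}"])
    return buf.getvalue()


pixels = np.array([[10.0, 20.0], [100.5, 0.0]])
model = keypoints_to_model_points(pixels, image_height=256, scale=0.1)
print(to_csv(model))
```

Keeping the conversion in a plain function makes the mapping explicit: whatever scale or axis convention the Grasshopper definition expects can be changed in one place.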

6. Results

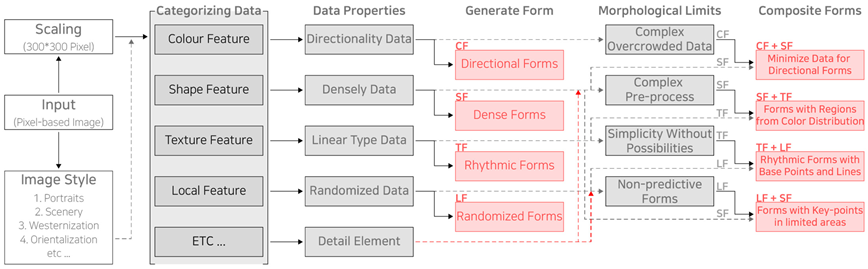

6.1. Systematization of Form Generation Characteristics by the Type of Image Feature Information

Table 5 analyzes the characteristics of each type of image feature information and their potential as parameters for form generation, aiming to identify the interrelationships between the inherent properties of each feature and the generated forms.

Color feature-based image information converts visible characteristics into numerical data and serves as the basic element from which other image feature information is extracted and generated. Because it quantifies visual information, the resulting color feature information exhibits a specific directionality arising from the relationships between neighboring pixels. Forms generated from this information display organic shapes through the interrelationships and directionality among points (coordinates). This large and diverse body of information can serve as parameters that expand the potential for form generation, but it also increases the complexity and computation time of the digital calculations.
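One way to picture color feature information as points with directionality is the sketch below. It is an assumed mapping, not the study's procedure: each pixel becomes a point whose height follows its luma, and the local intensity gradient angle stands in for directionality. The helper name `pixels_to_points` and the `step` and `height` parameters are hypothetical; `step` shows how subsampling trades detail for the calculation cost noted above.

```python
import numpy as np

# Luma weights (ITU-R BT.601) for an RGB image; the input is assumed RGB.
LUMA = np.array([0.299, 0.587, 0.114])


def pixels_to_points(rgb, step=1, height=10.0):
    """Turn an (H, W, 3) RGB image into points plus a per-point direction.

    Each sampled pixel becomes a point (column, row, z) whose z is its luma
    scaled to [0, height]. The direction is the angle of the local intensity
    gradient. step > 1 subsamples the image to cut the point count.
    """
    img = np.asarray(rgb, dtype=float)[::step, ::step]
    luma = img @ LUMA
    gy, gx = np.gradient(luma)          # derivatives along rows, then columns
    rows, cols = np.indices(luma.shape)
    points = np.column_stack([cols.ravel() * step,
                              rows.ravel() * step,
                              luma.ravel() / 255.0 * height])
    return points, np.arctan2(gy, gx).ravel()


rgb = np.zeros((4, 6, 3), dtype=np.uint8)
rgb[:, 3:] = 255                         # right half white
full, angles = pixels_to_points(rgb)
coarse, _ = pixels_to_points(rgb, step=2)
print(full.shape, coarse.shape)
```

Even this tiny image yields one point per pixel; at full photographic resolution the point count grows into the millions, which is the source of the complexity the paragraph describes.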

Shape feature-based image information uses pixel value changes and contrasts to classify object areas inherent in the image. By removing unnecessary information, it enables rational classification and efficient management of information, with the generated information appearing densely packed or biased toward specific points. Forms generated using this information are densely packed around specific parts or multiple areas, requiring a complex preprocessing step and the designer’s intuitive judgment to identify the optimal areas.

Texture feature-based image information quantifies the contrast and distribution across clusters of pixels together with the information inherent in each pixel. Visualized data such as roughness, which indicates the contrast of overall pixel values, and histograms, which quantify the pixel values of specific colors, are therefore provided as linear information in the form of graphs. Forms generated from this information follow the variation of pixel values, producing curves and rhythmic, linear elements. Although texture is used in design to create sensory and atmospheric effects, it is difficult to quantify; texture information is therefore limited to serving as basic data that merely describes the distribution of information.
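The graph-like texture data described above can be approximated with a histogram and a single contrast value, plus an intensity profile that can be read as a curve. In this sketch "roughness" is assumed to be RMS contrast (the standard deviation of normalized pixel values); the paper does not give a formula, and the helper names `texture_profile` and `row_profile` are hypothetical.

```python
import numpy as np


def texture_profile(gray, bins=8):
    """Coarse intensity histogram plus an RMS-contrast 'roughness' value.

    Roughness is assumed here to be the standard deviation of pixel values
    normalised to [0, 1] (RMS contrast).
    """
    values = np.asarray(gray, dtype=float).ravel() / 255.0
    hist, _ = np.histogram(values, bins=bins, range=(0.0, 1.0))
    return hist, float(values.std())


def row_profile(gray, row, amplitude=1.0):
    """One image row as an (x, y) polyline whose height follows intensity."""
    y = np.asarray(gray, dtype=float)[row] / 255.0 * amplitude
    return np.column_stack([np.arange(y.size), y])


flat = np.full((32, 32), 128, dtype=np.uint8)
stripes = np.zeros((32, 32), dtype=np.uint8)
stripes[:, ::2] = 255                     # alternating black/white columns
flat_hist, flat_rough = texture_profile(flat)
stripe_hist, stripe_rough = texture_profile(stripes)
curve = row_profile(stripes, row=0, amplitude=2.0)
print(flat_rough, stripe_rough, curve[:4, 1])
```

The flat patch has zero roughness, while the striped patch has maximal contrast and produces an alternating, rhythmic profile: the kind of linear information the paragraph describes.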

Locational feature-based image information extracts clusters of pixels that carry characteristics unique to the target image. The key points representing these unique properties differ from one image to another and follow no clear regularity or system. Forms generated from this information are therefore unpredictable and random across image types, yet they retain a clear causal relationship with the input image. However, such random forms may not align with the designer's intent or may depend on subjective aesthetics.

6.2. Systematization of Interrelationships of Image Feature Information

Based on the systematized relationships between each type of image feature information and its use as a parameter in the form generation process, Table 6 presents the interrelationships among the types of image feature information and the forms that can be generated from them. Each type has positive characteristics as well as clear limitations, and these limitations can be overcome by combining feature information in a complementary way. Such combinations allow the creation of new forms that differ from existing ones, expanding the potential for form generation.

The limitation of image information derived from color features is the significant time consumption and complexity of digital calculations in the form generation process due to the enormous amount of information. This limitation can be addressed by using and combining shape feature information to remove unnecessary information and to enable rational classification and efficient management of information. Consequently, the organically formed shapes previously generated based on interrelationships and specific directionality between points (coordinates) may change into shapes that are densely packed or somewhat biased at specific points, effectively reducing the complexity and time required for the form generation process.
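Combining color information with a shape mask, as described above, amounts to keeping color-derived points only inside the object regions. The sketch below assumes an RGB image and a boolean mask such as one produced by a thresholding and contour step; the helper name `masked_color_points` and the luma-to-height mapping are illustrative assumptions.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114])  # BT.601 weights for an RGB image


def masked_color_points(rgb, mask, height=10.0):
    """Colour-derived points kept only where a shape mask is set."""
    luma = np.asarray(rgb, dtype=float) @ LUMA
    rows, cols = np.nonzero(mask)            # pixel indices inside the mask
    z = luma[rows, cols] / 255.0 * height
    return np.column_stack([cols, rows, z])


rgb = np.full((100, 100, 3), 255, dtype=np.uint8)
mask = np.zeros((100, 100), dtype=bool)
mask[20:40, 30:60] = True                    # stand-in for an object region
points = masked_color_points(rgb, mask)
print(len(points), rgb.shape[0] * rgb.shape[1])
```

Here the mask cuts the point set from 10,000 pixels to 600, and the surviving points are concentrated in one area, matching the "densely packed" character the paragraph attributes to the combined result.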

The limitation of image information derived from shape features is the complex preprocessing step required to classify objects within the image and the intuitive judgment needed by designers to identify optimal areas. This limitation can be mitigated by using visualized graph information, such as histograms that quantitatively represent specific color pixel values, derived from texture feature information. This helps identify the distribution and color types of each area, aiding designers in selecting areas that align with their intentions. Through this approach, irregular shapes densely packed in multiple areas can be transformed into homogeneous shapes densely packed in fewer areas.
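The histogram-guided selection of areas described above could be sketched as follows: compute a histogram of one color channel inside each labeled region, then rank the regions by how much of their area falls in a target value range. The label image, the target bins, and the helper names `region_histograms` and `rank_regions` are hypothetical; the paper does not specify how areas were scored.

```python
import numpy as np


def region_histograms(channel, labels, bins=16):
    """Histogram of one colour channel inside each labelled region (0 = background)."""
    hists = {}
    for lab in np.unique(labels):
        if lab == 0:
            continue
        values = channel[labels == lab]
        hists[int(lab)], _ = np.histogram(values, bins=bins, range=(0, 256))
    return hists


def rank_regions(hists, target_bins):
    """Order regions by the share of their pixels that fall in target_bins."""
    share = {lab: h[target_bins].sum() / h.sum() for lab, h in hists.items()}
    return sorted(share, key=share.get, reverse=True), share


channel = np.zeros((8, 8), dtype=np.uint8)
channel[:, :4] = 200                         # bright region
channel[:, 4:] = 40                          # dark region
labels = np.zeros((8, 8), dtype=int)
labels[1:, :4] = 1                           # row 0 left as background
labels[1:, 4:] = 2
hists = region_histograms(channel, labels)
order, share = rank_regions(hists, slice(10, 16))   # bins covering 160..255
print(order, share)
```

Ranking regions by a numeric share gives the designer a quantitative basis for choosing areas, rather than relying only on visual inspection.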

The limitation of image information derived from texture features is that it serves only as basic data that analyzes the distribution of specific color and pixel information. This limitation can be addressed by using locational feature information, which produces new types of information through key points that represent the unique properties of the image. Forms that previously followed pixel value variations, with curve- and line-based flows and rhythmic patterns, can then use key points as reference points or axes, expanding the potential for generating organic shapes through a wider range of variation.
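Using key points as axes for texture-driven curves can be illustrated by sampling intensities along the segment between two key points and offsetting the segment sideways in proportion to those intensities. This is a hypothetical construction offered only as a sketch; the helper name `profile_along_axis`, the nearest-pixel sampling, and the `amplitude` parameter are assumptions.

```python
import numpy as np


def profile_along_axis(gray, p0, p1, samples=50, amplitude=5.0):
    """Sample intensities on the segment p0->p1 and offset it sideways.

    p0 and p1 are (x, y) pixel coordinates, e.g. two SIFT key points. The
    returned (samples, 2) polyline follows the axis and is displaced by up to
    `amplitude` pixels along the segment's normal, in proportion to intensity.
    """
    p0, p1 = np.asarray(p0, float), np.asarray(p1, float)
    t = np.linspace(0.0, 1.0, samples)[:, None]
    axis_pts = p0 + t * (p1 - p0)
    # Nearest-pixel lookup (rows are y, columns are x), clipped to the image.
    cols = np.clip(np.rint(axis_pts[:, 0]).astype(int), 0, gray.shape[1] - 1)
    rows = np.clip(np.rint(axis_pts[:, 1]).astype(int), 0, gray.shape[0] - 1)
    values = gray[rows, cols].astype(float) / 255.0
    direction = (p1 - p0) / np.linalg.norm(p1 - p0)
    normal = np.array([-direction[1], direction[0]])
    return axis_pts + values[:, None] * amplitude * normal


gray = np.zeros((10, 10), dtype=np.uint8)
gray[:, ::2] = 255                           # striped texture
curve = profile_along_axis(gray, (0, 5), (9, 5), samples=10, amplitude=2.0)
print(curve)
```

Because the axis is defined by the key points, the same texture produces a different curve for every pair of key points, which is how the combination widens the range of variation.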

The limitation of image information derived from locational features is that it generates unpredictable forms, leading to outcomes that differ from the designer’s intent or are based on subjective aesthetics. This limitation can be mitigated by using shape feature-based image information to reduce the area of analysis within the image, thereby narrowing the range in which random key points are generated. This approach allows for the generation of forms densely packed in specific areas, addressing the issue of key points being scattered due to a lack of any clear regularity or system.

7. Conclusions

In today’s society, information has emerged as a core value creation tool across modern industries, driven by the Fourth Industrial Revolution and advancements in ICT. Digital technologies, including big data and data mining, have diversified and advanced information collection methods, leading to research on effective selection, classification, and utilization of rapidly increasing data as a paradigm of the times. The architectural use of information technology has predominantly been viewed as a tool for functional optimization by effectively extracting and analyzing quantifiable conditions and phenomena or as an ancillary element for generating aesthetic patterns in architectural design.

This study analyzed and categorized the image feature information that can be extracted from pixel-based bitmap images. Using a form generation methodology based on image feature information and established in conjunction with digital technology, it examined the forms generated from each type of information, systematized the characteristics of the image information used as parameters, and analyzed the relationships among the types of generated forms. The interrelationships between information types, and between information and forms, in this methodology can be summarized as follows.

First is the interrelationship between the characteristics of each type of image feature information and the generated forms. Depending on the type of image feature used to extract valuable information, information with directionality, linearity, density, etc., is derived. Even the same feature information produces different characteristics depending on the input image. When the inherent characteristics of each type of image feature information are used as parameters for form generation, various form outcomes can be derived, confirming that three-dimensional design generation is possible through the interrelationship between image information and digital technology.

Second is the complementarity between valuable information types to address the limitations of each type of image feature information. While image feature information contains positive characteristics depending on its type, the form generation process has some limitations. The limitations present in image feature information can be partially addressed through a complementary relationship between valuable information types, creating new image information through their combination. Forms generated through the complementary relationship between valuable information types differ from those generated through single valuable information, expanding the potential for form generation.

Third is the potential for architectural application of three-dimensional form generation methodologies using image feature information. The advancement of digital technology has led to an explosive increase in various types of information, including the image feature information used in this research. Therefore, information-based form generation methodologies can derive various forms proportional to the amount and type of information, confirming that generated forms can be used to propose alternatives in the early form analysis (mass-study stage) and concept-building stages of the architectural design process.

The form generation process that uses numerical information is grounded in the characteristics of each type of information. It extends the interrelationships between complementary information types, and between information and forms, to create new forms and realize them in practical environments. In this respect it resembles generative design technology, which instantly generates numerous alternatives from random variables. However, unlike digital generative techniques whose results rest on abstract and subjective aesthetics, this methodology produces results from the interrelationship between the inherent characteristics of information and forms, offering a logical form generation method with clear causality. This study can also support design composition and concept development in the early stages of architectural design: securing a logical basis for decisions makes the design process more convenient and can, in turn, enhance architectural aesthetics. This study addressed techniques for extracting the data contained in images and applying that data to form generation. We are currently improving the methods for extracting and classifying information by incorporating 3D information, image information, and motion capture data. We are also examining, from several perspectives, the use of image generation technologies such as Midjourney and ControlNet, and of pre-trained convolutional neural networks (CNNs) such as VGG, ResNet, and EfficientNet, based on the relationship between the characteristics of the extracted information and the generated forms.

If these technologies are applied to this process, the quality evaluation of the generated shapes and the generation process itself could be handled by machine learning (e.g., concordance coefficients and other machine learning metrics) rather than by the designer's judgment. Establishing such classification and evaluation metrics is expected to advance the current research and systematize it into an objective process. Furthermore, if the information-based shape generation process is systematized through future research, it is expected to be useful throughout the design process.

The logical methods for extracting and classifying information and generating forms presented in this study can serve as a preliminary study and reference for similar research, and as basic data for future studies.